Repository: https://github.com/facebookresearch/StyleNeRF

Readme

- Train a new model

python run_train.py outdir=${OUTDIR} data=${DATASET} spec=paper512 model=stylenerf_ffhq

- Render with a pretrained model

python generate.py --outdir=${OUTDIR} --trunc=0.7 --seeds=${SEEDS} --network=${CHECKPOINT_PATH} --render-program="rotation_camera"

- Run a demo page by

python web_demo.py 21111

This fails because the checkpoint ./pretrained/ffhq_512.pkl is missing:

Loading networks from "./pretrained/ffhq_512.pkl"...

Traceback (most recent call last):

File "web_demo.py", line 82, in <module>

global_states = list(get_model(check_name()))

File "web_demo.py", line 64, in get_model

with dnnlib.util.open_url(network_pkl) as f:

File "/home/joselyn/workspace/0419-course/StyleNeRF-main/dnnlib/util.py", line 392, in open_url

return url if return_filename else open(url, "rb")

FileNotFoundError: [Errno 2] No such file or directory: './pretrained/ffhq_512.pkl'
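A small pre-flight check can turn this traceback into a clearer message. The sketch below is not part of StyleNeRF; `require_checkpoint` is a hypothetical helper, and the path is the one from the error above.

```python
from pathlib import Path


def require_checkpoint(path: str) -> Path:
    """Return the checkpoint path, or raise a readable error if the file is missing."""
    ckpt = Path(path)
    if not ckpt.is_file():
        raise FileNotFoundError(
            f"Checkpoint not found: {ckpt}. Put the pretrained .pkl there before starting the demo."
        )
    return ckpt


if __name__ == "__main__":
    try:
        require_checkpoint("./pretrained/ffhq_512.pkl")
    except FileNotFoundError as err:
        print(err)
```

Calling it before `python web_demo.py 21111` makes it obvious that the pretrained file has to be placed under ./pretrained first.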

- Run a GUI visualizer by

python visualizer.py

Project code

training_loop.py

run_dir = '.', # Output directory.

training_set_kwargs = {}, # Options for training set.

data_loader_kwargs = {}, # Options for torch.utils.data.DataLoader.

G_kwargs = {}, # Options for generator network.

D_kwargs = {}, # Options for discriminator network.

G_opt_kwargs = {}, # Options for generator optimizer.

D_opt_kwargs = {}, # Options for discriminator optimizer.

augment_kwargs = None, # Options for augmentation pipeline. None = disable.

loss_kwargs = {}, # Options for loss function.

metrics = [], # Metrics to evaluate during training.

random_seed = 0, # Global random seed.

world_size = 1, # Number of GPUs participating in the training.

rank = 0, # Rank of the current process.

gpu = 0, # Index of GPU used in training

batch_gpu = 4, # Batch size for one GPU

batch_size = 4, # Total batch size for one training iteration. Can be larger than batch_gpu * world_size.

ema_kimg = 10, # Half-life of the exponential moving average (EMA) of generator weights.

ema_rampup = None, # EMA ramp-up coefficient.

G_reg_interval = 4, # How often to perform regularization for G? None = disable lazy regularization.

D_reg_interval = 16, # How often to perform regularization for D? None = disable lazy regularization.

augment_p = 0, # Initial value of augmentation probability.

ada_target = None, # ADA target value. None = fixed p.

ada_interval = 4, # How often to perform ADA adjustment?

ada_kimg = 500, # ADA adjustment speed, measured in how many kimg it takes for p to increase/decrease by one unit.

total_kimg = 25000, # Total length of the training, measured in thousands of real images.

kimg_per_tick = 4, # Progress snapshot interval.

image_snapshot_ticks = 50, # How often to save image snapshots? None = disable.

network_snapshot_ticks = 50, # How often to save network snapshots? None = disable.

resume_pkl = None, # Network pickle to resume training from.

resume_start = 0, # Resume from steps

cudnn_benchmark = True, # Enable torch.backends.cudnn.benchmark?

allow_tf32 = False, # Enable torch.backends.cuda.matmul.allow_tf32 and torch.backends.cudnn.allow_tf32?

abort_fn = None, # Callback function for determining whether to abort training. Must return consistent results across ranks.

progress_fn = None, # Callback function for updating training progress. Called for all ranks.

update_cam_prior_ticks = None, # (optional) Non-parametric updating of the dataset's camera poses

generation_with_image = False, # (optional) For each random z, you also sample an image associated with it.
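Two of these options are easier to read with numbers plugged in. The sketch below assumes that StyleNeRF keeps the StyleGAN2-ADA conventions it is built on: `ema_kimg` is turned into a per-iteration decay with a half-life measured in images, and `batch_size` is split into accumulation rounds of `batch_gpu` samples per GPU. The helper names are hypothetical.

```python
def ema_beta(batch_size: int, ema_kimg: float) -> float:
    """Per-iteration EMA decay whose half-life is ema_kimg * 1000 images."""
    ema_nimg = ema_kimg * 1000
    return 0.5 ** (batch_size / max(ema_nimg, 1e-8))


def accumulation_rounds(batch_size: int, batch_gpu: int, world_size: int) -> int:
    """Gradient-accumulation rounds per iteration on each GPU."""
    return batch_size // (batch_gpu * world_size)


if __name__ == "__main__":
    beta = ema_beta(batch_size=4, ema_kimg=10)
    iterations = 10 * 1000 // 4  # iterations needed to see 10k images
    print(f"beta per iteration: {beta:.6f}")
    print(f"weight left after {iterations} iterations: {beta ** iterations:.3f}")
    print("accumulation rounds:", accumulation_rounds(4, 4, 1))
```

With the defaults listed above (batch_size = 4, batch_gpu = 4, world_size = 1) there is a single round per iteration; a larger batch_size with the same batch_gpu would add accumulation rounds instead of using more memory.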

Generate.py

Test run

python generate.py --outdir=./mylogs/test_generate --trunc=0.7 --seeds=[2,3,7,1,9,4] --network=./ffhq-res256-mirror-paper256-noaug.pkl --render-program="rotation_camera"

Error:

Loading networks from "./ffhq-res256-mirror-paper256-noaug.pkl"...

Error loading: synthesis.b64.conv0.weight torch.Size([256, 512, 3, 3]) torch.Size([512, 512, 3, 3])

Traceback (most recent call last):

File "generate.py", line 200, in <module>

generate_images() # pylint: disable=no-value-for-parameter

File "/home/joselyn/.conda/envs/stylenerf/lib/python3.7/site-packages/click/core.py", line 1128, in __call__

return self.main(*args, **kwargs)

File "/home/joselyn/.conda/envs/stylenerf/lib/python3.7/site-packages/click/core.py", line 1053, in main

rv = self.invoke(ctx)

File "/home/joselyn/.conda/envs/stylenerf/lib/python3.7/site-packages/click/core.py", line 1395, in invoke

return ctx.invoke(self.callback, **ctx.params)

File "/home/joselyn/.conda/envs/stylenerf/lib/python3.7/site-packages/click/core.py", line 754, in invoke

return __callback(*args, **kwargs)

File "/home/joselyn/.conda/envs/stylenerf/lib/python3.7/site-packages/click/decorators.py", line 26, in new_func

return f(get_current_context(), *args, **kwargs)

File "generate.py", line 102, in generate_images

misc.copy_params_and_buffers(G, G2, require_all=False) # Copies the parameters of G.

File "/home/joselyn/workspace/0419-course/StyleNeRF-main/torch_utils/misc.py", line 168, in copy_params_and_buffers

raise e

File "/home/joselyn/workspace/0419-course/StyleNeRF-main/torch_utils/misc.py", line 165, in copy_params_and_buffers

tensor.copy_(src_tensors[name].detach()).requires_grad_(tensor.requires_grad)

RuntimeError: The size of tensor a (512) must match the size of tensor b (256) at non-singleton dimension 0

Switch to a different model and try again:

python generate.py --outdir=./mylogs/test_generate --trunc=0.7 --seeds=0 --network=./ffhq-res512-mirror-stylegan2-noaug.pkl --render-program="rotation_camera"

Error:

Loading networks from "./ffhq-res512-mirror-stylegan2-noaug.pkl"...

Error loading: synthesis.b128.conv0.weight torch.Size([256, 512, 3, 3]) torch.Size([512, 512, 3, 3])

Traceback (most recent call last):

File "generate.py", line 200, in <module>

generate_images() # pylint: disable=no-value-for-parameter

File "/home/joselyn/.conda/envs/stylenerf/lib/python3.7/site-packages/click/core.py", line 1128, in __call__

return self.main(*args, **kwargs)

File "/home/joselyn/.conda/envs/stylenerf/lib/python3.7/site-packages/click/core.py", line 1053, in main

rv = self.invoke(ctx)

File "/home/joselyn/.conda/envs/stylenerf/lib/python3.7/site-packages/click/core.py", line 1395, in invoke

return ctx.invoke(self.callback, **ctx.params)

File "/home/joselyn/.conda/envs/stylenerf/lib/python3.7/site-packages/click/core.py", line 754, in invoke

return __callback(*args, **kwargs)

File "/home/joselyn/.conda/envs/stylenerf/lib/python3.7/site-packages/click/decorators.py", line 26, in new_func

return f(get_current_context(), *args, **kwargs)

File "generate.py", line 102, in generate_images

misc.copy_params_and_buffers(G, G2, require_all=False) # Copies the parameters of G.

File "/home/joselyn/workspace/0419-course/StyleNeRF-main/torch_utils/misc.py", line 168, in copy_params_and_buffers

raise e

File "/home/joselyn/workspace/0419-course/StyleNeRF-main/torch_utils/misc.py", line 165, in copy_params_and_buffers

tensor.copy_(src_tensors[name].detach()).requires_grad_(tensor.requires_grad)

RuntimeError: The size of tensor a (512) must match the size of tensor b (256) at non-singleton dimension 0

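This is the same failure as in the previous attempt. In the "Error loading" line, the first shape is the tensor stored in the checkpoint ([256, 512, 3, 3]) and the second is the tensor of the newly constructed generator ([512, 512, 3, 3]), so the two do not match.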
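To see every mismatch at once instead of stopping at the first one, the shapes on both sides can be compared by name. This is a dependency-free sketch: `find_shape_mismatches` is a hypothetical helper, the first entry uses the shapes from the error above, and the second entry is made up for contrast.

```python
from typing import Dict, List, Tuple

Shape = Tuple[int, ...]


def find_shape_mismatches(
    src: Dict[str, Shape], dst: Dict[str, Shape]
) -> List[Tuple[str, Shape, Shape]]:
    """Return (name, src_shape, dst_shape) for tensors present in both but shaped differently."""
    return [
        (name, src[name], shape)
        for name, shape in dst.items()
        if name in src and src[name] != shape
    ]


# Shapes of the checkpoint generator (src) and the newly built generator (dst).
src_shapes = {
    "synthesis.b64.conv0.weight": (256, 512, 3, 3),
    "mapping.fc0.weight": (512, 512),  # illustrative entry, not from the log
}
dst_shapes = {
    "synthesis.b64.conv0.weight": (512, 512, 3, 3),
    "mapping.fc0.weight": (512, 512),  # illustrative entry, not from the log
}

for name, s, d in find_shape_mismatches(src_shapes, dst_shapes):
    print(f"{name}: checkpoint {s} vs new generator {d}")
```

With real modules, the two dictionaries could be built from `{k: tuple(v.shape) for k, v in module.state_dict().items()}`.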

- After switching the pkl to ffhq_512.pkl, a new error appears:

verify_ninja_availability()

File "/home/joselyn/.conda/envs/stylenerf/lib/python3.7/site-packages/torch/utils/cpp_extension.py", line 1323, in verify_ninja_availability

raise RuntimeError("Ninja is required to load C++ extensions")

RuntimeError: Ninja is required to load C++ extensions

Fix:

wget https://github.com/ninja-build/ninja/releases/download/v1.8.2/ninja-linux.zip

sudo unzip ninja-linux.zip -d /usr/local/bin/

sudo update-alternatives --install /usr/bin/ninja ninja /usr/local/bin/ninja 1 --force
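Before rerunning, it can help to confirm that the ninja binary is actually visible to the Python process. The following is a minimal sketch using only the standard library; `ninja_version` is a hypothetical helper, not something StyleNeRF provides.

```python
import shutil
import subprocess
from typing import Optional


def ninja_version() -> Optional[str]:
    """Return the version string reported by ninja, or None if it is not on PATH."""
    exe = shutil.which("ninja")
    if exe is None:
        return None
    result = subprocess.run([exe, "--version"], capture_output=True, text=True, check=False)
    return result.stdout.strip() or None


if __name__ == "__main__":
    print("ninja:", ninja_version() or "not found on PATH")
```

If this prints "not found on PATH" inside the stylenerf conda environment, PyTorch will raise the same "Ninja is required to load C++ extensions" error again.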

Run again. [Success]

The output is:

ffhq_512_20220421.213901_0

Debugging walkthrough

Command:

python generate.py --outdir=./mylogs/test_generate_seed5 --trunc=0.7 --seeds=5 --network=./pkl/ffhq_512.pkl --render-program="rotation_camera"

Processing flow:

-

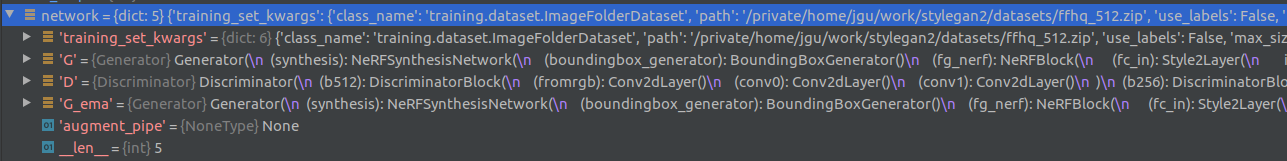

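Load the trained model:

network_pkl = sorted(glob.glob(network_pkl + '/*.pkl'))[-1], with the resulting value './pkl/ffhq_512.pkl'

network = legacy.load_network_pkl(f)

-

Extract the generator from the pretrained network:

G = network['G_ema'].to(device)

-

Extract the discriminator from the pretrained network:

D = network['D'].to(device)

-

Label: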

label = torch.zeros([1, G.c_dim], device=device)

-

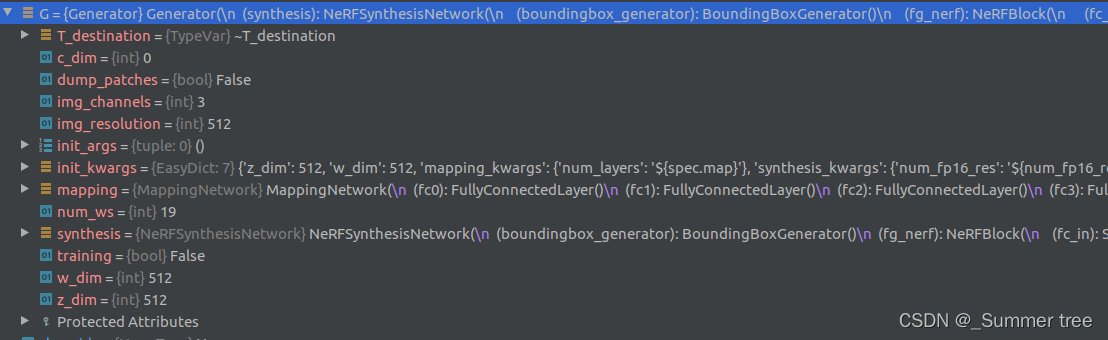

Initialize a new generator:

G2 = Generator(*G.init_args, **G.init_kwargs).to(device)

Here, init_args and init_kwargs are the ones stored on G, so G2 is built with the same configuration.

-

Copy the parameters and buffers from G to G2:

misc.copy_params_and_buffers(G, G2, require_all=False)

misc.copy_params_and_buffers() refers to the copy_params_and_buffers() function in misc.py.

misc.py is located in the torch_utils folder.

The function is as follows:

    def copy_params_and_buffers(src_module, dst_module, require_all=False):
        assert isinstance(src_module, torch.nn.Module)
        assert isinstance(dst_module, torch.nn.Module)
        src_tensors = dict(named_params_and_buffers(src_module))
        for name, tensor in named_params_and_buffers(dst_module):
            assert (name in src_tensors) or (not require_all)
            if name in src_tensors:
                try:
                    tensor.copy_(src_tensors[name].detach()).requires_grad_(tensor.requires_grad)
                except Exception as e:
                    print(f'Error loading: {name} {src_tensors[name].shape} {tensor.shape}')
                    raise e

It iterates over the parameters and buffers of the destination module and copies each one, by name, from the source module.

After it runs, comparing G2 with G shows that G has no encoder and has training = False.

-

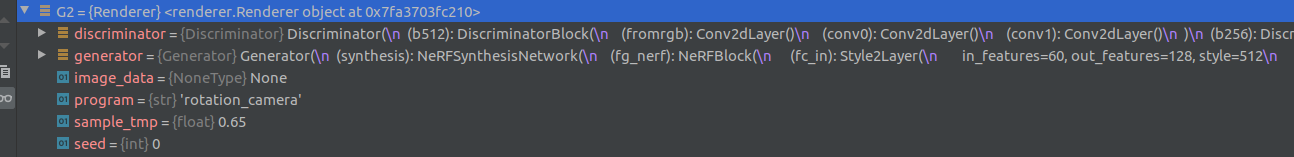

根据G2 和D初始化一个

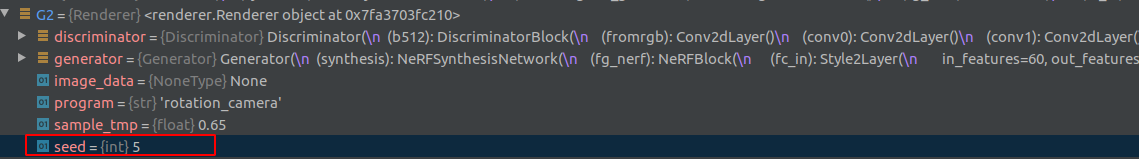

Render类型:G2 = Renderer(G2, D, program=render_program)

Renderer的构造函数如下:def __init__(self, generator, discriminator=None, program=None): self.generator = generator self.discriminator = discriminator self.sample_tmp = 0.65 self.program = program self.seed = 0 if (program is not None) and (len(program.split(':')) == 2): # 给定了program的情况。 from training.dataset import ImageFolderDataset # training文件夹下的dataset.py文件中 ImageFolderDataset 类。 self.image_data = ImageFolderDataset(program.split(':')[1]) self.program = program.split(':')[0] else: # 执行流程。 self.image_data = None不晓得

program还要哪些可以取的值? (program的值是在运行命令的时候指定的)

program = 'rotation_camera'

image_data = None
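The branching in the constructor can be isolated into a few lines. The sketch below mirrors only the `split(':')` logic; `parse_program` is a hypothetical helper, and the second program name and path are placeholders, not values known to exist in StyleNeRF.

```python
from typing import Optional, Tuple


def parse_program(program: Optional[str]) -> Tuple[Optional[str], Optional[str]]:
    """Split "name:path" into (name, path); a bare name or None yields (program, None)."""
    if program is not None and len(program.split(':')) == 2:
        name, path = program.split(':')
        return name, path
    return program, None


if __name__ == "__main__":
    print(parse_program('rotation_camera'))
    print(parse_program('some_program:/path/to/images'))  # placeholder name and path
```

For this run the first case applies, which is why image_data stays None.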

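After the constructor returns, G2 looks as follows:

What does sample_tmp mean? (It is later passed as the tmp argument of gen.get_latent_codes.)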

-

Iterate over the seeds:

for seed_idx, seed in enumerate(seeds), where seeds = [5], seed_idx = 0, seed = 5

-

Set the random seed:

G2.set_random_seed(seed), which sets the seed of G2 to 5

-

Compute z:

z = torch.from_numpy(np.random.RandomState(seed).randn(2, G.z_dim)).to(device)

I do not yet know what z is used for, nor what z_dim means.

The shape of z is (2, 512).
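In StyleGAN-family generators, z is normally the Gaussian noise vector fed into the mapping network, and z_dim is its length; that reading is assumed here rather than confirmed from the StyleNeRF code. The sketch below reproduces only the NumPy part of the line above and shows that a fixed seed always yields the same z. `sample_z` is a hypothetical helper, and z_dim = 512 is taken from the observed shape.

```python
import numpy as np


def sample_z(seed: int, batch: int = 2, z_dim: int = 512) -> np.ndarray:
    """Draw a (batch, z_dim) array of standard-normal latents, reproducible per seed."""
    return np.random.RandomState(seed).randn(batch, z_dim)


if __name__ == "__main__":
    z_a = sample_z(5)
    z_b = sample_z(5)
    print(z_a.shape, np.array_equal(z_a, z_b))
```

This is why passing the same value to --seeds produces the same result across runs.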

-

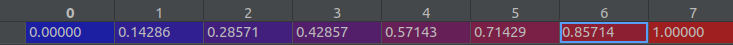

Determine the relative range:

relative_range_u = [0.5 - 0.5 * relative_range_u_scale, 0.5 + 0.5 * relative_range_u_scale], which evaluates to [0.0, 1.0]

-

Compute the output with G2:

outputs = G2(z=z, c=label, truncation_psi=truncation_psi, noise_mode=noise_mode, render_option=render_option, n_steps=n_steps, relative_range_u=relative_range_u, return_cameras=True)

This statement invokes the __call__() method of the Renderer class, which does the following:

    self.generator.eval()
    outputs = getattr(self, f"render_{self.program}")(*args, **kwargs)
    return outputs

outputs contains the rendered images together with the camera information.

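As for how outputs is actually computed, the docstring of getattr() says:

Get a named attribute from an object; getattr(x, 'y') is equivalent to x.y. (This is a handy way to call a function chosen by a variable.)

In other words, getattr(self, f"render_{self.program}")(*args, **kwargs) actually calls self.render_rotation_camera(), which is also defined in the Renderer class.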
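The dispatch pattern is easy to try in isolation. The toy class below is a hypothetical stand-in, not the real Renderer: it shows the same getattr lookup, plus one way to list which program names a class supports by scanning for methods with the render_ prefix. The render_still method is invented for the example.

```python
class MiniRenderer:
    """Toy stand-in for a renderer that dispatches on a program name."""

    def __init__(self, program: str):
        self.program = program

    def render_rotation_camera(self, n_steps: int):
        return [f"rotation frame {i}" for i in range(n_steps)]

    def render_still(self, n_steps: int):  # invented method, for illustration only
        return ["still frame"] * n_steps

    def __call__(self, *args, **kwargs):
        # Look up the bound method named "render_<program>" and call it.
        method = getattr(self, f"render_{self.program}", None)
        if method is None:
            raise ValueError(f"Unknown render program: {self.program!r}")
        return method(*args, **kwargs)

    @classmethod
    def available_programs(cls):
        """Names that can be passed as program, derived from the render_* methods."""
        prefix = "render_"
        return sorted(name[len(prefix):] for name in dir(cls) if name.startswith(prefix))


if __name__ == "__main__":
    print(MiniRenderer("rotation_camera")(n_steps=2))
    print(MiniRenderer.available_programs())
```

Applying the same dir() scan to the real Renderer class would presumably answer the question of which values --render-program accepts.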

Inside render_rotation_camera():

-

Determine batch_size and n_steps:

batch_size, n_steps = 2, kwargs["n_steps"]

How exactly these are used is not yet clear to me.

-

Extract the generator's synthesis network into gen:

gen = self.generator.synthesis

-

Determine the value of ws:

ws = self.generator.mapping(*args, **kwargs)

This uses the mapping network, so ws holds the latent codes in the mapped latent space.

-

If hasattr(gen, 'get_latent_codes'), then kwargs["latent_codes"] = gen.get_latent_codes(batch_size, tmp=self.sample_tmp, device=ws.device) and kwargs.pop('img', None) are executed. I could not find a get_latent_codes attribute in gen, but the run shows that it exists.

-

Initialize some values:

    out = []
    cameras = []
    relatve_range_u = kwargs['relative_range_u']
    u_samples = np.linspace(relatve_range_u[0], relatve_range_u[1], n_steps)  # Controls how many frames are sampled; with enough frames the transition is smoother.
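The sampling of u can be reproduced without NumPy to see the actual values. In this sketch `linspace` is a pure-Python stand-in for np.linspace, `relative_range` repeats the relative_range_u formula from generate.py, and n_steps = 8 is an assumption taken from the length-8 lists observed in this run.

```python
from typing import List


def relative_range(scale: float) -> List[float]:
    """Interval centered at 0.5 whose width is given by scale."""
    return [0.5 - 0.5 * scale, 0.5 + 0.5 * scale]


def linspace(start: float, stop: float, num: int) -> List[float]:
    """Pure-Python stand-in for np.linspace(start, stop, num), endpoint included."""
    if num == 1:
        return [float(start)]
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


if __name__ == "__main__":
    u_range = relative_range(1.0)                    # [0.0, 1.0], as in this run
    u_samples = linspace(u_range[0], u_range[1], 8)  # assumed n_steps = 8
    print([round(u, 3) for u in u_samples])
```

A larger n_steps gives smaller increments of u between consecutive frames, which matches the comment that more samples make the camera motion smoother.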

-

Iterate over the steps:

for step in tqdm.tqdm(range(n_steps))

- Set the camera:

kwargs["camera_matrices"] = gen.get_camera(batch_size=batch_size, mode=[u, 0.5, 0.5], device=ws.device)

get_camera is not analyzed here for now.

- Append the camera information to cameras:

cameras.append(gen.get_camera(batch_size=batch_size, mode=[u, 0.5, 0.5], device=ws.device))

- Compute the output of the synthesis network:

out_i = gen(ws, **kwargs)

I have not yet found where exactly this line ends up executing.

In the result, style has shape (2, 10, 512), img_nerf has shape (2, 3, 32, 32), and img has shape (2, 3, 512, 512).

- Next, append the image to out:

out.append(out_i)

- Return the results:

return out, cameras


-

Return to generate.py.

-

Assign the return value to img and cameras:

img, cameras = outputs

img is a list of length 8, and each element is a tensor of shape (2, 3, 512, 512). cameras is also a list of length 8, and each element is a 4-tuple.

-

Post-process img:

imgs = [proc_img(i) for i in img]

where proc_img is:

    def proc_img(img):  # I am not sure what this operation is for.
        return (img.permute(0, 2, 3, 1) * 127.5 + 128).clamp(0, 255).to(torch.uint8).cpu()

-

If not no_video, then all_imgs += [imgs]. The length of all_imgs is 1.

-

The current output directory:

curr_out_dir = os.path.join(outdir, 'seed_{:0>6d}'.format(seed)), which is './mylogs/test_generate_seed5/seed_000005'

-

Write the raw images to the target location:

    for step, img in enumerate(imgs):
        PIL.Image.fromarray(img[0].detach().cpu().numpy(), 'RGB').save(f'{img_dir}/{step:03d}.png')

-

The final video-output part is as follows:

    if len(all_imgs) > 0 and (not no_video):  # Non-empty, and video rendering is enabled.
        # write to video
        timestamp = time.strftime('%Y%m%d.%H%M%S', time.localtime(time.time()))  # Get the current time.
        seeds = ','.join([str(s) for s in seeds]) if seeds is not None else 'projected'
        network_pkl = network_pkl.split('/')[-1].split('.')[0]  # Used to name the output files.
        all_imgs = [stack_imgs([a[k] for a in all_imgs]).numpy() for k in range(len(all_imgs[0]))]  # Stacks the two images of each frame together.
        imageio.mimwrite(f'{outdir}/{network_pkl}_{timestamp}_{seeds}.mp4', all_imgs, fps=30, quality=8)
        outdir = f'{outdir}/{network_pkl}_{timestamp}_{seeds}'
        os.makedirs(outdir, exist_ok=True)
        for step, img in enumerate(all_imgs):
            PIL.Image.fromarray(img, 'RGB').save(f'{outdir}/{step:04d}.png')
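The arithmetic in proc_img and the output naming can be checked on plain values. The sketch below assumes the generator output lies roughly in [-1, 1], the usual StyleGAN convention, so that `* 127.5 + 128` followed by clamping maps it to 8-bit pixel values; permute(0, 2, 3, 1) then only reorders the axes from (N, C, H, W) to (N, H, W, C) for PIL. All three helpers are hypothetical scalar and string versions of the original lines.

```python
import os


def to_uint8(value: float) -> int:
    """Scalar version of proc_img: map a value in [-1, 1] to an integer in [0, 255]."""
    scaled = value * 127.5 + 128
    clamped = min(max(scaled, 0), 255)
    return int(clamped)  # truncates, like casting a non-negative float to uint8


def seed_dir(outdir: str, seed: int) -> str:
    """Per-seed output directory, zero-padded to six digits."""
    return os.path.join(outdir, 'seed_{:0>6d}'.format(seed))


def video_stem(network_pkl: str, seeds, timestamp: str) -> str:
    """File stem used for the .mp4 file and the frame directory."""
    seeds_str = ','.join(str(s) for s in seeds) if seeds is not None else 'projected'
    name = network_pkl.split('/')[-1].split('.')[0]
    return f'{name}_{timestamp}_{seeds_str}'


if __name__ == "__main__":
    print([to_uint8(v) for v in (-1.0, 0.0, 1.0)])
    print(seed_dir('./mylogs/test_generate_seed5', 5))
    print(video_stem('./ffhq_512.pkl', [0], '20220421.213901'))
```

The last call reproduces the name ffhq_512_20220421.213901_0 seen in the successful run earlier.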

Demo tests

- linear mixing ratio (geometry) 1 vs 0.5 vs 0

mode

- linear mixing ratio (appearance) 0 vs 0.5 vs 1

- Yaw: -1 vs 0 vs 1

- Pitch: -1 vs 1

- Combination: (yaw, pitch) = (1, 1)

- Combination: (yaw, pitch) = (1, -1)

- Combination: (yaw, pitch) = (-1, -1)

- Combination: (yaw, pitch) = (-1, 1)

- FOV (field of view): the normal value is 10 (12-14). 12 vs 14