1. Installing cuDNN and TensorRT

Files to download:

CUDA 11.3 download page:

CUDA Toolkit 11.3 Downloads | NVIDIA Developer

(The rest of this post assumes CUDA is already installed.)

cuDNN download page:

cuDNN Archive | NVIDIA Developer

TensorRT download page:

https://developer.nvidia.com/nvidia-tensorrt-8x-download

First, make sure CUDA is on the PATH environment variable:

Running nvcc -V in cmd should print the version number:

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2021 NVIDIA Corporation

Built on Sun_Mar_21_19:24:09_Pacific_Daylight_Time_2021

Cuda compilation tools, release 11.3, V11.3.58

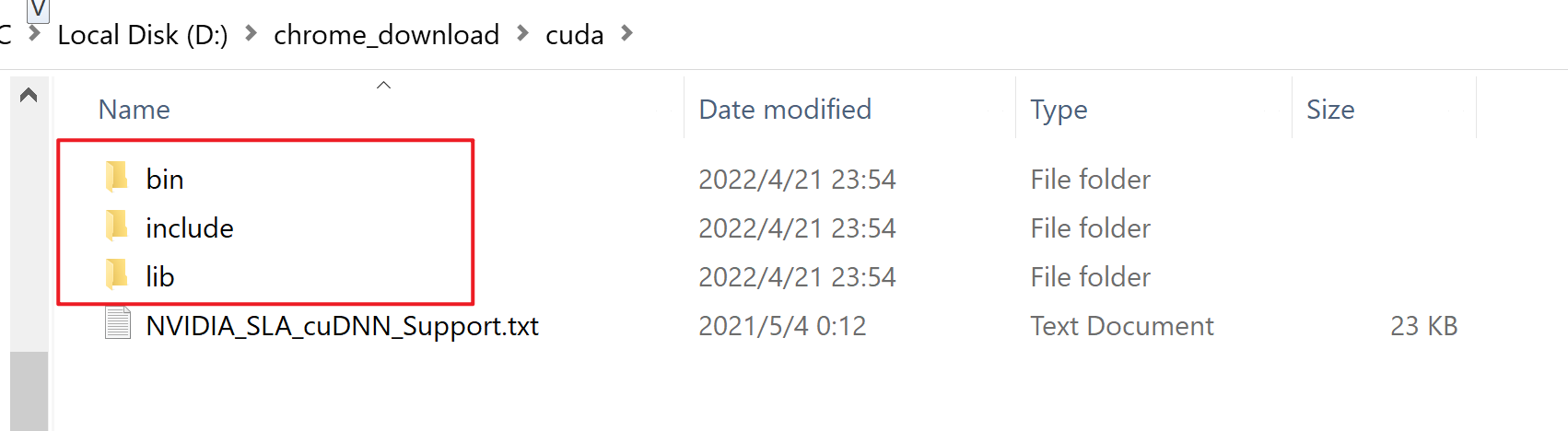

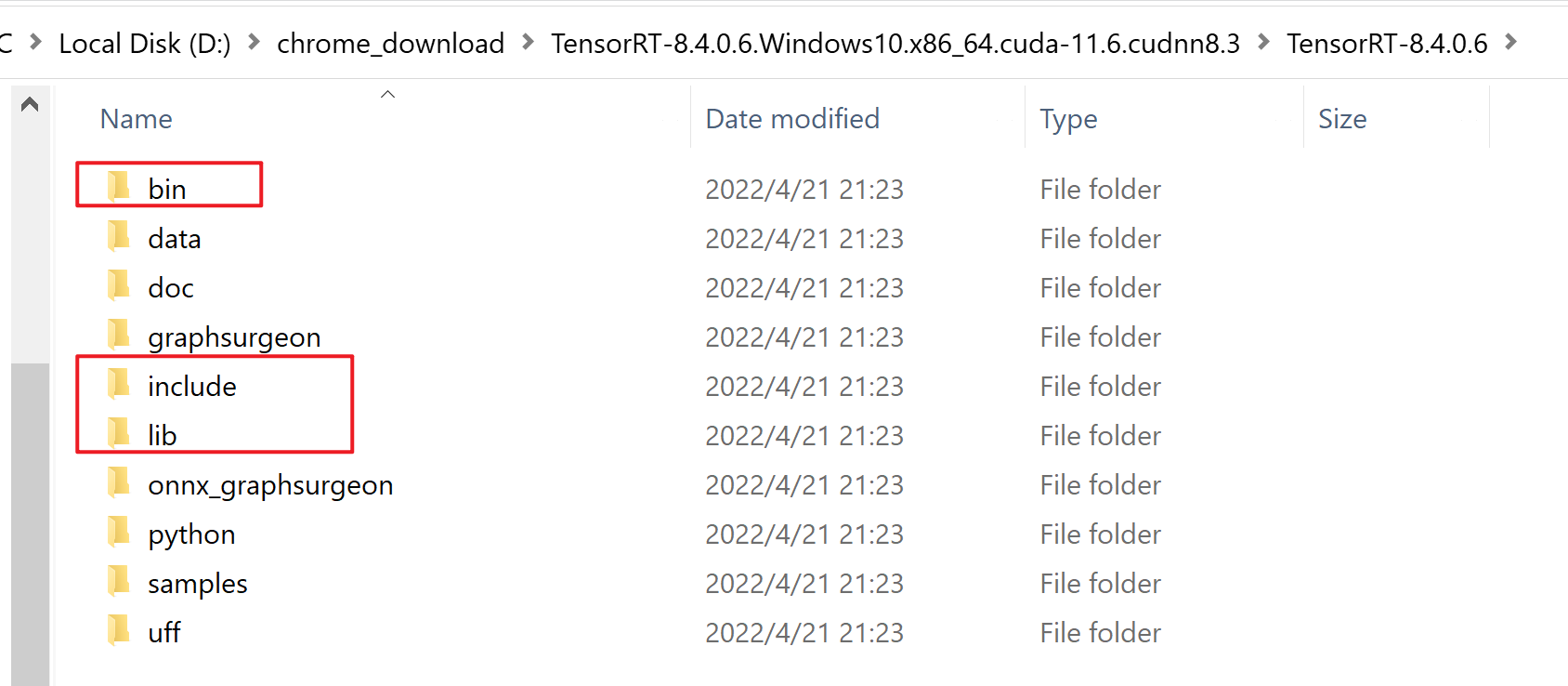

Build cuda_11.3.r11.3/compiler.29745058_0将下载好的TensorRT和cudnn分别解压,分别将:两个解压后的bin\, include, lib\ 目录复制到cuda安装路径下:

将上边两个复制到cuda安装路径:

cuda默认安装路径为:C:\Program Files\NVIDIA GPU Computing Toolkit

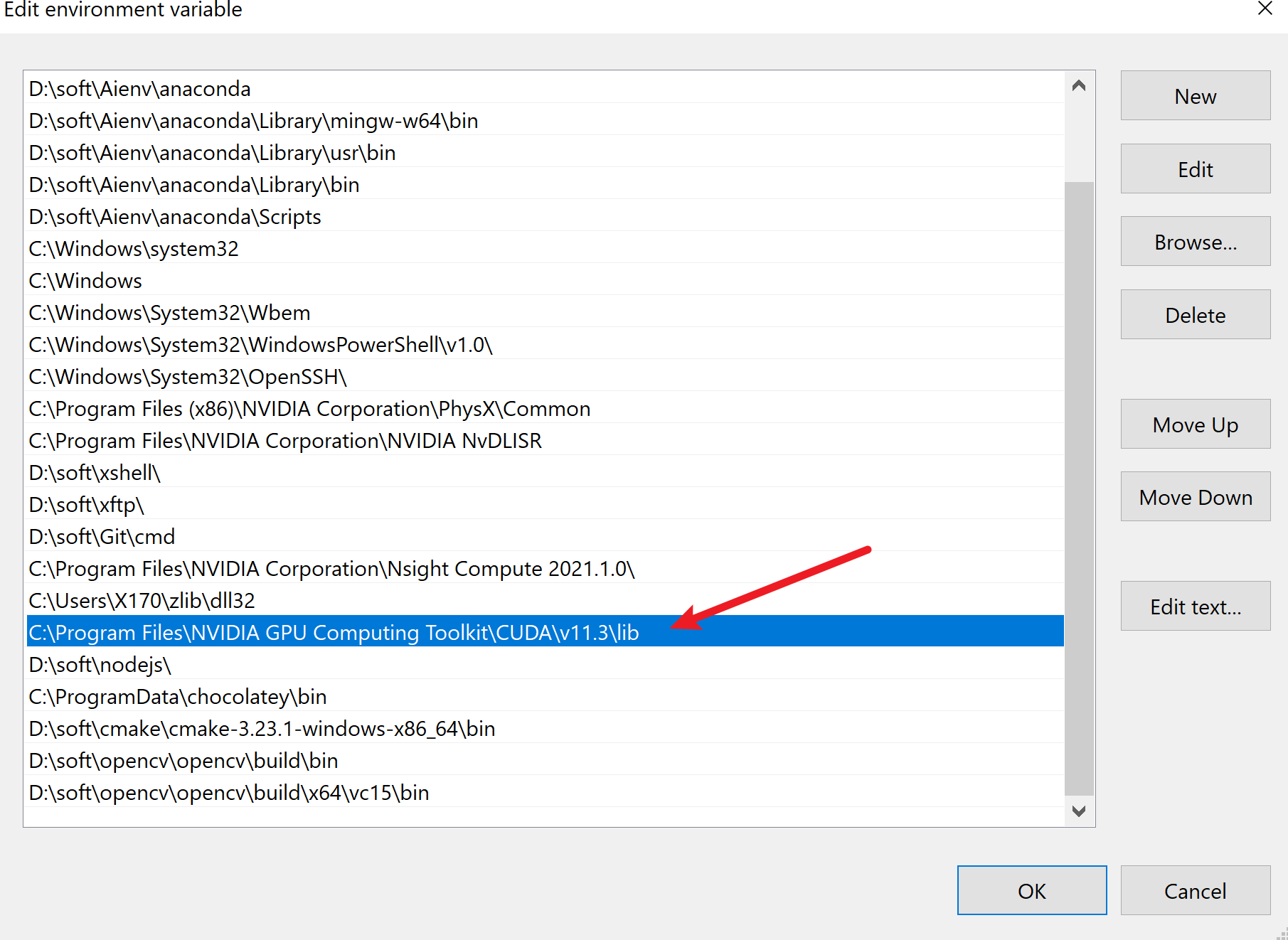
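The copy step can also be scripted. The following is a minimal Python sketch (Python 3.8+ for dirs_exist_ok). The function name merge_into_cuda and the example paths are hypothetical. Writing under C:\Program Files normally requires an elevated (administrator) prompt.

```python
import shutil
from pathlib import Path

# Subdirectories this post copies from each extracted package (assumption:
# a package may lack some of them, in which case they are skipped).
SUBDIRS = ("bin", "include", "lib")


def merge_into_cuda(package_root, cuda_root, subdirs=SUBDIRS):
    """Copy package_root/<sub> into cuda_root/<sub>, merging with existing folders.

    Files with the same name are overwritten; files already in cuda_root are kept.
    Returns the list of subdirectories that were actually copied.
    """
    package_root = Path(package_root)
    cuda_root = Path(cuda_root)
    copied = []
    for sub in subdirs:
        src = package_root / sub
        if not src.is_dir():
            continue  # nothing to copy for this subdirectory
        # dirs_exist_ok=True merges into an existing tree instead of raising
        shutil.copytree(src, cuda_root / sub, dirs_exist_ok=True)
        copied.append(sub)
    return copied
```

For example, merge_into_cuda(r"E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6", r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.3") would merge the TensorRT package. You would then repeat the call with the cuDNN extraction folder.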

Finally, add the CUDA library paths to the PATH environment variable.
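Both checks in this section can be scripted: nvcc -V reporting the expected release, and the CUDA directories being on PATH. The following is a minimal Python sketch. The function names are hypothetical, and the set of directories you require is your own choice. The bin and lib\x64 folders used in the example are an assumption.

```python
import ntpath
import re
import shutil
import subprocess

# Hypothetical helpers; which directories to require is up to you.


def parse_cuda_release(nvcc_output):
    """Extract the release number (e.g. '11.3') from nvcc -V output, or None."""
    m = re.search(r"release (\d+\.\d+)", nvcc_output)
    return m.group(1) if m else None


def nvcc_release():
    """Run the nvcc found on PATH and return its release, or None if not found."""
    nvcc = shutil.which("nvcc")  # the same PATH lookup cmd does for "nvcc -V"
    if nvcc is None:
        return None
    out = subprocess.run([nvcc, "-V"], capture_output=True, text=True).stdout
    return parse_cuda_release(out)


def dirs_missing_from_path(required_dirs, path_value):
    """Return the entries of required_dirs that are not listed in a Windows PATH.

    Comparison is case-insensitive and ignores surrounding quotes and trailing
    backslashes. ntpath is used so Windows rules apply even on other OSes.
    """
    def norm(p):
        return ntpath.normcase(ntpath.normpath(p.strip().strip('"')))

    on_path = {norm(p) for p in path_value.split(";") if p.strip()}
    return [d for d in required_dirs if norm(d) not in on_path]
```

For example, call dirs_missing_from_path([cuda + r"\bin", cuda + r"\lib\x64"], os.environ["PATH"]) with cuda set to the CUDA 11.3 folder. PATH changes only reach newly started processes, so run the check from a freshly opened cmd or PowerShell window.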

2. Building the demo executable

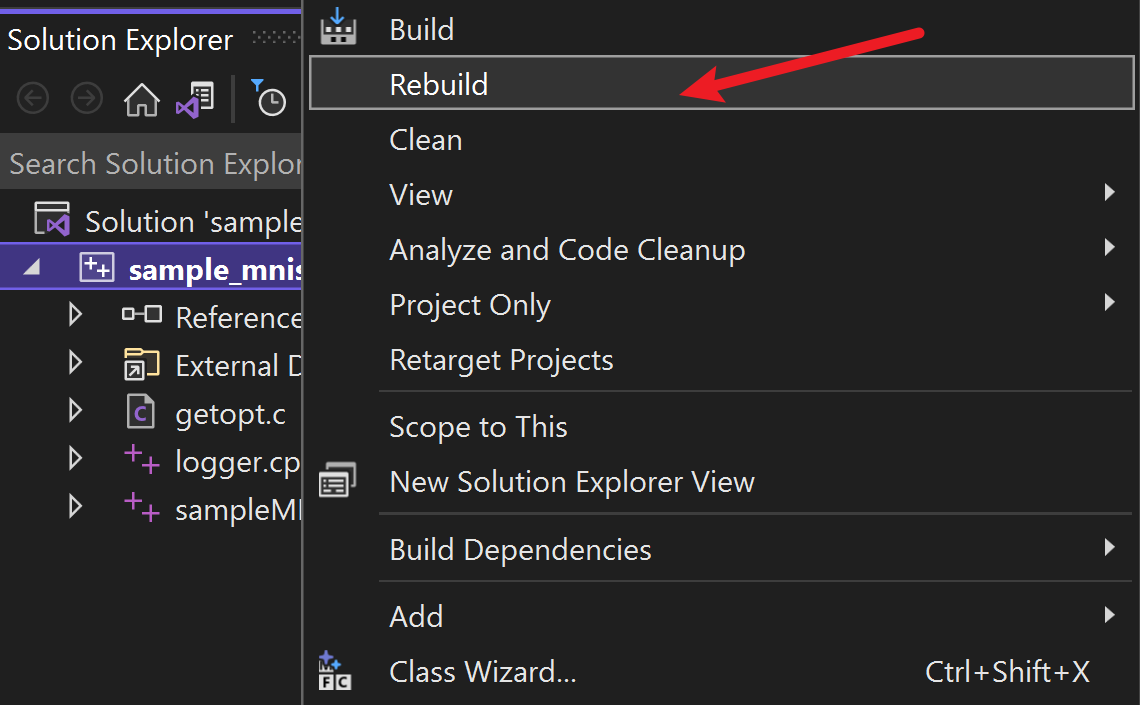

Right-click sample_mnist.sln and open it with Visual Studio.

In Visual Studio, right-click the project name and choose Rebuild.
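The same rebuild can also be started from the command line with MSBuild, which is on PATH in a Developer Command Prompt for Visual Studio. The following is a minimal Python sketch. The helper names are hypothetical, the solution path you pass in is your own, and Release|x64 matches the configuration shown in the build log.

```python
import subprocess

# Hypothetical helpers wrapping MSBuild; msbuild must be on PATH
# (e.g. inside a Developer Command Prompt for Visual Studio).


def rebuild_command(solution, configuration="Release", platform="x64"):
    """Return the MSBuild argument list equivalent to a Visual Studio Rebuild."""
    return [
        "msbuild",
        str(solution),
        "/t:Rebuild",
        f"/p:Configuration={configuration}",
        f"/p:Platform={platform}",
    ]


def rebuild(solution):
    """Run the rebuild; check=True raises CalledProcessError if the build fails."""
    return subprocess.run(rebuild_command(solution), check=True)
```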

The build fails with the following errors:

Rebuild started...

1>------ Rebuild All started: Project: sample_mnist, Configuration: Release x64 ------

1>sampleMNIST.cpp

1>E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6.Windows10.x86_64.cuda-11.6.cudnn8.3\TensorRT-8.4.0.6\include\NvInferRuntimeCommon.h(56,10): fatal error C1083: Cannot open include file: 'cuda_runtime_api.h': No such file or directory

1>logger.cpp

1>E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6.Windows10.x86_64.cuda-11.6.cudnn8.3\TensorRT-8.4.0.6\include\NvInferRuntimeCommon.h(56,10): fatal error C1083: Cannot open include file: 'cuda_runtime_api.h': No such file or directory

1>Generating Code...

1>Done building project "sample_mnist.vcxproj" -- FAILED.

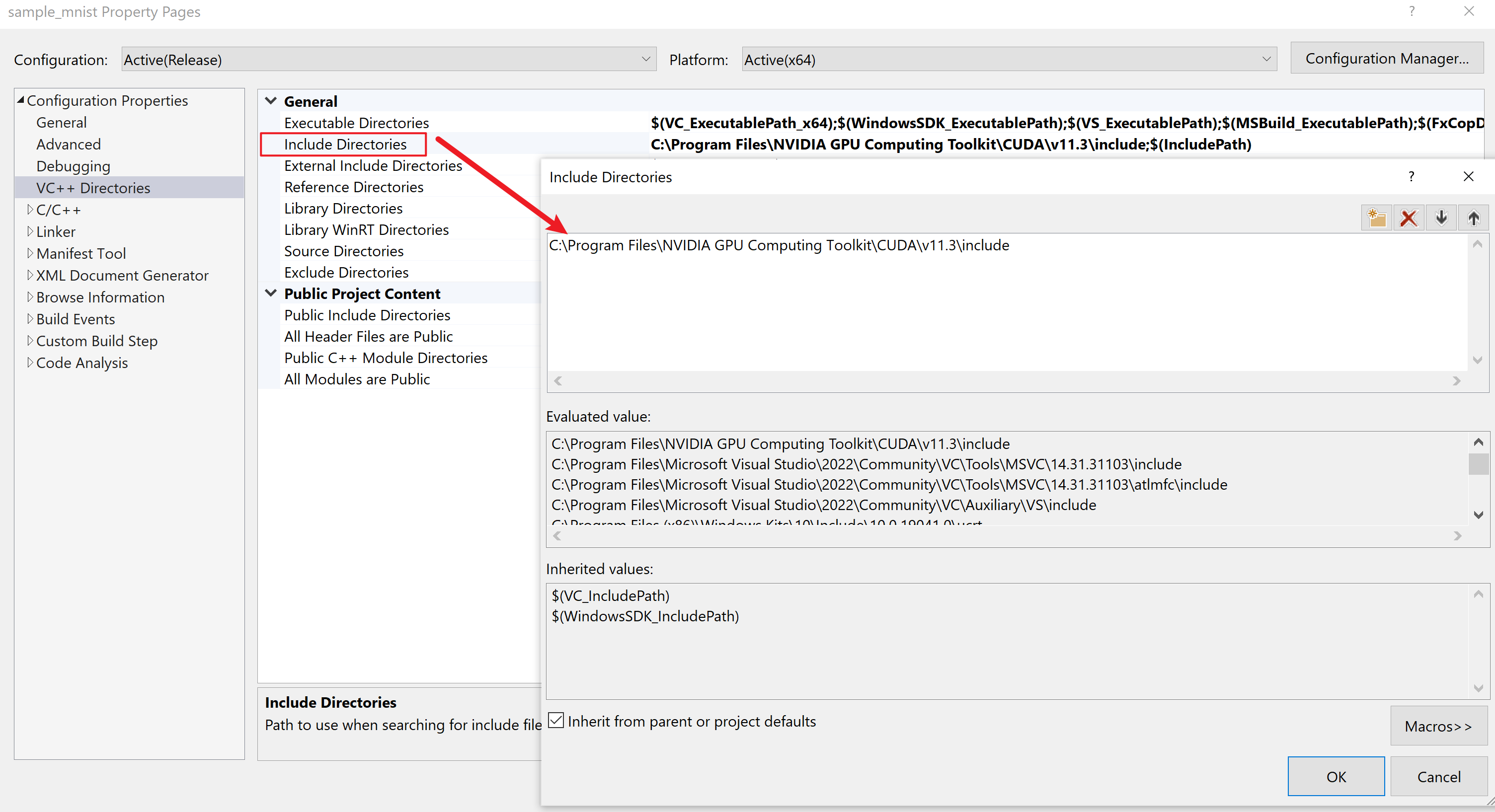

========== Rebuild All: 0 succeeded, 1 failed, 0 skipped ==========将cuda的包含目录添加到VC++包含目录:
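MSVC reports a missing header as C1083 and a missing linker input as LNK1181. The first needs an Include Directories entry; the second needs a Library Directories entry. The following is a minimal Python sketch that extracts those file names from a saved build log. The function name is hypothetical, and the patterns only cover the two message formats that appear in this post.

```python
import re

# Message formats as they appear in the MSVC build logs in this post.
_PATTERNS = {
    "header": re.compile(r"fatal error C1083: Cannot open include file: '([^']+)'"),
    "library": re.compile(r"fatal error LNK1181: cannot open input file '([^']+)'"),
}


def missing_files(build_log):
    """Return {'header': [...], 'library': [...]}: unique names, in log order."""
    found = {kind: [] for kind in _PATTERNS}
    for line in build_log.splitlines():
        for kind, pattern in _PATTERNS.items():
            m = pattern.search(line)
            if m and m.group(1) not in found[kind]:
                found[kind].append(m.group(1))
    return found
```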

Rebuild the project. It now fails with:

Rebuild started...

1>------ Rebuild All started: Project: sample_mnist, Configuration: Release x64 ------

1>sampleMNIST.cpp

1>E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\include\NvInfer.h(5337,1): warning C4819: The file contains a character that cannot be represented in the current code page (936). Save the file in Unicode format to prevent data loss

1>logger.cpp

1>E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\include\NvInfer.h(5337,1): warning C4819: The file contains a character that cannot be represented in the current code page (936). Save the file in Unicode format to prevent data loss

1>Generating Code...

1>getopt.c

1>LINK : fatal error LNK1181: cannot open input file 'cudnn.lib'

1>Done building project "sample_mnist.vcxproj" -- FAILED.

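========== Rebuild All: 0 succeeded, 1 failed, 0 skipped ==========

The linker cannot find cudnn.lib, so one more setting is needed. Add the CUDA library directory (typically lib\x64 under the CUDA installation directory) to VC++ Directories > Library Directories.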
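Before rebuilding, you can check that the libraries the linker needs actually exist in the directories you listed. The following is a minimal Python sketch that models only the VC++ Library Directories lookup. The real linker also searches other locations, such as the LIB environment variable. The function name is hypothetical, and the library list in the usage note is an example: cudnn.lib comes from the LNK1181 error, and cudart.lib is an illustrative addition.

```python
from pathlib import Path

# Simplified model of the VC++ Library Directories lookup: each library on the
# link line must be a file in one of the listed directories. The real linker
# also consults other locations (e.g. the LIB environment variable).


def unresolved_libs(lib_names, library_dirs):
    """Return the names in lib_names that are not a file in any of library_dirs."""
    dirs = [Path(d) for d in library_dirs]
    return [name for name in lib_names if not any((d / name).is_file() for d in dirs)]
```

For example, unresolved_libs(["cudnn.lib", "cudart.lib"], [r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.3\lib\x64"]) returns an empty list once both files are in place.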

Rebuild the project again. This time the build succeeds:

Rebuild started...

1>------ Rebuild All started: Project: sample_mnist, Configuration: Release x64 ------

1>sampleMNIST.cpp

1>E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\include\NvInfer.h(5337,1): warning C4819: The file contains a character that cannot be represented in the current code page (936). Save the file in Unicode format to prevent data loss

1>logger.cpp

1>E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\include\NvInfer.h(5337,1): warning C4819: The file contains a character that cannot be represented in the current code page (936). Save the file in Unicode format to prevent data loss

1>Generating Code...

1>getopt.c

1>sample_mnist.vcxproj -> E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\bin\sample_mnist.exe

1>Done building project "sample_mnist.vcxproj".

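========== Rebuild All: 1 succeeded, 0 failed, 0 skipped ==========

3. Running the demo

Go to the bin directory of the extracted TensorRT package and double-click sample_mnist.exe. A console window opens and closes again after a moment, so the output cannot be read.

Instead, open PowerShell in this directory and run:

.\sample_mnist.exe --datadir=<TensorRT extraction path>\data\mnist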
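The same run can be scripted so that the output is captured instead of flashing past. The following is a minimal Python sketch. The helper names are hypothetical. It relies on the "&&&& PASSED" line that the sample prints at the end of a successful run, as seen in the output of this post.

```python
import subprocess
from pathlib import Path

# Hypothetical helpers; success is detected via the "&&&& PASSED" line
# that sample_mnist prints at the end of a successful run.


def sample_passed(output):
    """Return True if the log contains a line starting with '&&&& PASSED'."""
    return any(line.startswith("&&&& PASSED") for line in output.splitlines())


def run_sample_mnist(exe, datadir):
    """Run sample_mnist.exe with --datadir and return (passed, captured output)."""
    exe = Path(exe)
    result = subprocess.run(
        [str(exe), f"--datadir={datadir}"],
        capture_output=True,
        text=True,
        cwd=exe.parent,  # run from the bin directory, as in the PowerShell example
    )
    output = result.stdout + result.stderr  # log lines may go to either stream
    return sample_passed(output), output
```

For example: passed, log = run_sample_mnist(r"E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\bin\sample_mnist.exe", r"E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\data\mnist").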

The full output is:

PS E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\bin> .\sample_mnist.exe --datadir=E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\data\mnist

&&&& RUNNING TensorRT.sample_mnist [TensorRT v8400] # E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\bin\sample_mnist.exe --datadir=E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\data\mnist

[04/23/2022-14:37:46] [I] Building and running a GPU inference engine for MNIST

[04/23/2022-14:37:46] [I] [TRT] [MemUsageChange] Init CUDA: CPU +470, GPU +0, now: CPU 20429, GPU 1270 (MiB)

[04/23/2022-14:37:46] [I] [TRT] [MemUsageSnapshot] Begin constructing builder kernel library: CPU 20638 MiB, GPU 1270 MiB

[04/23/2022-14:37:47] [I] [TRT] [MemUsageSnapshot] End constructing builder kernel library: CPU 21017 MiB, GPU 1392 MiB

[04/23/2022-14:37:47] [W] [TRT] TensorRT was linked against cuBLAS/cuBLAS LT 11.8.0 but loaded cuBLAS/cuBLAS LT 11.4.2

[04/23/2022-14:37:47] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +727, GPU +272, now: CPU 21590, GPU 1664 (MiB)

[04/23/2022-14:37:48] [I] [TRT] [MemUsageChange] Init cuDNN: CPU +264, GPU +264, now: CPU 21854, GPU 1928 (MiB)

[04/23/2022-14:37:48] [W] [TRT] TensorRT was linked against cuDNN 8.3.2 but loaded cuDNN 8.2.1

[04/23/2022-14:37:48] [I] [TRT] Local timing cache in use. Profiling results in this builder pass will not be stored.

[04/23/2022-14:37:59] [I] [TRT] Detected 1 inputs and 1 output network tensors.

[04/23/2022-14:37:59] [I] [TRT] Total Host Persistent Memory: 7136

[04/23/2022-14:37:59] [I] [TRT] Total Device Persistent Memory: 0

[04/23/2022-14:37:59] [I] [TRT] Total Scratch Memory: 8192

[04/23/2022-14:37:59] [I] [TRT] [MemUsageStats] Peak memory usage of TRT CPU/GPU memory allocators: CPU 1 MiB, GPU 884 MiB

[04/23/2022-14:37:59] [I] [TRT] [BlockAssignment] Algorithm ShiftNTopDown took 0.0425ms to assign 4 blocks to 9 nodes requiring 59908 bytes.

[04/23/2022-14:37:59] [I] [TRT] Total Activation Memory: 59908

[04/23/2022-14:37:59] [W] [TRT] TensorRT was linked against cuBLAS/cuBLAS LT 11.8.0 but loaded cuBLAS/cuBLAS LT 11.4.2

[04/23/2022-14:37:59] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +8, now: CPU 22954, GPU 2342 (MiB)

[04/23/2022-14:37:59] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in building engine: CPU +0, GPU +4, now: CPU 0, GPU 4 (MiB)

[04/23/2022-14:37:59] [I] [TRT] [MemUsageChange] Init CUDA: CPU +0, GPU +0, now: CPU 22741, GPU 2052 (MiB)

[04/23/2022-14:37:59] [I] [TRT] Loaded engine size: 1 MiB

[04/23/2022-14:37:59] [W] [TRT] TensorRT was linked against cuBLAS/cuBLAS LT 11.8.0 but loaded cuBLAS/cuBLAS LT 11.4.2

[04/23/2022-14:37:59] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +200, GPU +268, now: CPU 22941, GPU 2322 (MiB)

[04/23/2022-14:37:59] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in engine deserialization: CPU +0, GPU +1, now: CPU 0, GPU 1 (MiB)

[04/23/2022-14:38:00] [W] [TRT] TensorRT was linked against cuBLAS/cuBLAS LT 11.8.0 but loaded cuBLAS/cuBLAS LT 11.4.2

[04/23/2022-14:38:00] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +219, GPU +268, now: CPU 22599, GPU 2202 (MiB)

[04/23/2022-14:38:00] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in IExecutionContext creation: CPU +0, GPU +0, now: CPU 0, GPU 1 (MiB)

[04/23/2022-14:38:00] [I] Input:

@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@@@@@@@@@@@@@%.:@@@@@@@@@@@@

@@@@@@@@@@@@@: *@@@@@@@@@@@@

@@@@@@@@@@@@* =@@@@@@@@@@@@@

@@@@@@@@@@@% :@@@@@@@@@@@@@@

@@@@@@@@@@@- *@@@@@@@@@@@@@@

@@@@@@@@@@# .@@@@@@@@@@@@@@@

@@@@@@@@@@: #@@@@@@@@@@@@@@@

@@@@@@@@@+ -@@@@@@@@@@@@@@@@

@@@@@@@@@: %@@@@@@@@@@@@@@@@

@@@@@@@@+ +@@@@@@@@@@@@@@@@@

@@@@@@@@:.%@@@@@@@@@@@@@@@@@

@@@@@@@% -@@@@@@@@@@@@@@@@@@

@@@@@@@% -@@@@@@#..:@@@@@@@@

@@@@@@@% +@@@@@- :@@@@@@@

@@@@@@@% =@@@@%.#@@- +@@@@@@

@@@@@@@@..%@@@*+@@@@ :@@@@@@

@@@@@@@@= -%@@@@@@@@ :@@@@@@

@@@@@@@@@- .*@@@@@@+ +@@@@@@

@@@@@@@@@@+ .:-+-: .@@@@@@@

@@@@@@@@@@@@+: :*@@@@@@@@

@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@@@@@@@@@@@@@@@@@@@@@@@@@@@@

[04/23/2022-14:38:00] [I] Output:

0:

1:

2:

3:

4:

5:

6: **********

7:

8:

9:

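&&&& PASSED TensorRT.sample_mnist [TensorRT v8400] # E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\bin\sample_mnist.exe --datadir=E:\CODE\CPP\TensorRT\TensorRT-8.4.0.6\data\mnist

The PASSED line shows that the installation works: the engine was built and classified the input digit as 6. The warnings "TensorRT was linked against cuDNN 8.3.2 but loaded cuDNN 8.2.1" (and the similar cuBLAS 11.8.0 vs 11.4.2 warnings) appear because this TensorRT package was built for CUDA 11.6 and cuDNN 8.3 (see the package name TensorRT-8.4.0.6.Windows10.x86_64.cuda-11.6.cudnn8.3), while this machine has CUDA 11.3 and cuDNN 8.2.1. cuDNN 8.2 supports CUDA 11.x, and in this setup the mismatch produced only warnings; the sample ran correctly.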
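To see at a glance which libraries were loaded at a different version than TensorRT was linked against, the warnings can be collected from a saved log. The following is a minimal Python sketch. The function name is hypothetical, and the pattern matches only the warning wording that appears in this output.

```python
import re

# Matches TensorRT warnings such as
#   "TensorRT was linked against cuDNN 8.3.2 but loaded cuDNN 8.2.1"
# The backreference \1 requires the same library name on both sides.
_MISMATCH = re.compile(
    r"TensorRT was linked against (.+?) (\d+(?:\.\d+)*) but loaded \1 (\d+(?:\.\d+)*)"
)


def version_mismatches(log):
    """Map each library name to (linked_version, loaded_version).

    Repeated warnings for the same library collapse into one entry.
    """
    return {m.group(1): (m.group(2), m.group(3)) for m in _MISMATCH.finditer(log)}
```

Applied to the output above, it reports cuDNN as ("8.3.2", "8.2.1") and cuBLAS/cuBLAS LT as ("11.8.0", "11.4.2").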

4. Other notes

Another error I ran into:

Could not load library cudnn_cnn_infer64_8.dll.

In my case this was caused by using the wrong cuDNN version at first. Switching cuDNN from 8.4.0 to 8.2.1 fixed it.
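Because the fix here was switching cuDNN versions, it helps to know which copies of the DLL are visible. The following is a minimal Python sketch that lists every PATH directory containing a given DLL, in PATH order. Windows checks the application directory and system directories before PATH, so this shows only part of the search. The function name is hypothetical.

```python
from pathlib import Path

# Hypothetical helper: lists PATH directories (in PATH order) that contain a DLL.
# Windows checks the application directory and system directories before PATH,
# so this covers only the PATH part of the DLL search.


def dll_locations(dll_name, path_value):
    """Return the PATH entries (split on ';') whose directory contains dll_name."""
    hits = []
    for entry in path_value.split(";"):
        entry = entry.strip().strip('"')
        if entry and (Path(entry) / dll_name).is_file():
            hits.append(entry)
    return hits
```

For example, call dll_locations("cudnn_cnn_infer64_8.dll", os.environ["PATH"]). An empty result means the DLL is not on PATH. Several hits can mean that more than one cuDNN version is installed.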

References:

python - Could not load library cudnn_cnn_infer64_8.dll. Error code 126 - Stack Overflow

win10+CUDA11.1+cudnn11.1+TensorRT8.0 development environment setup - SongpingWang's blog - CSDN