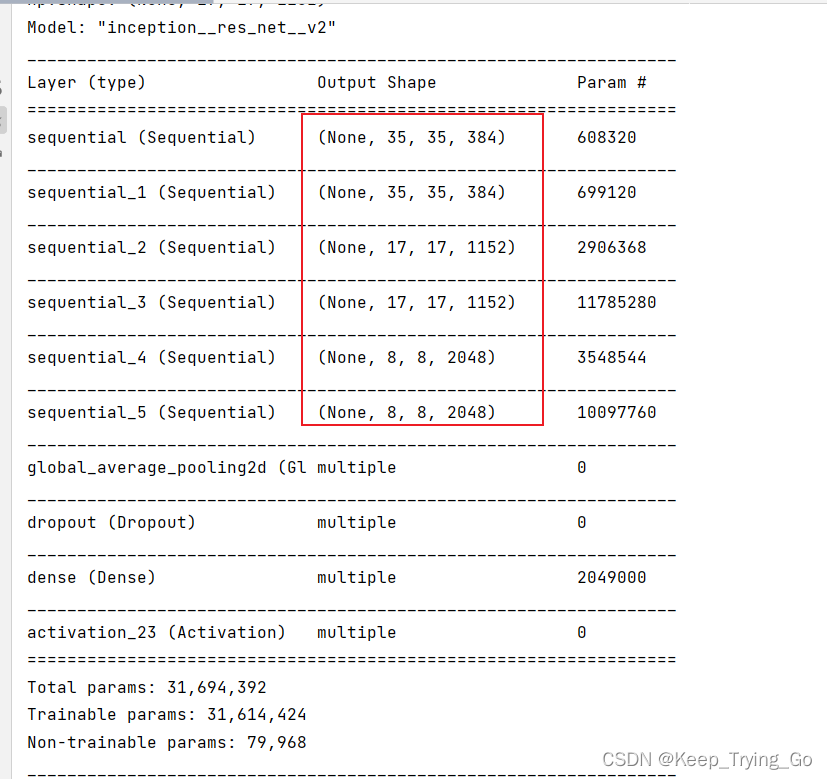

1. Output

2. InceptionV4 network architecture details

https://mydreamambitious.blog.csdn.net/article/details/124414800

3. Code reproduction

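import os
import numpy as np
import tensorflow as tf
#use the Keras bundled with TensorFlow so Sequential and the tf.keras layers come from the same package
from tensorflow import keras
from tensorflow.keras import models
from tensorflow.keras import layers
from tensorflow.keras.models import Model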

#Stem (backbone) network: a 299x299x3 input becomes 35x35x384
class Inception_ResNet_V2_Stem(tf.keras.Model):
    def __init__(self):
        super(Inception_ResNet_V2_Stem,self).__init__()
        self.conv1=layers.Conv2D(32,kernel_size=[3,3],strides=[2,2],padding='valid')
        self.conv1batch=layers.BatchNormalization()
        self.conv2=layers.Conv2D(32,kernel_size=[3,3],strides=[1,1],padding='valid')
        self.conv2batch=layers.BatchNormalization()
        self.conv3=layers.Conv2D(64,kernel_size=[3,3],strides=[1,1],padding='same')
        self.conv3batch=layers.BatchNormalization()

        #first split: max pooling in parallel with a strided convolution
        self.maxpool1=layers.MaxPool2D(pool_size=[3,3],strides=[2,2],padding='valid')
        self.maxpool1conv4=layers.Conv2D(96,kernel_size=[3,3],strides=[2,2],padding='valid')
        self.maxbatch1=layers.BatchNormalization()

        #left branch: 1x1 -> 3x3
        self.conv5left=layers.Conv2D(64,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv5leftbatch=layers.BatchNormalization()
        self.conv6left=layers.Conv2D(96,kernel_size=[3,3],strides=[1,1],padding='valid')
        self.conv6leftbatch=layers.BatchNormalization()

        #right branch: 1x1 -> 7x1 -> 1x7 -> 3x3
        self.conv5right=layers.Conv2D(64,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv5rightbatch=layers.BatchNormalization()
        self.conv6right=layers.Conv2D(64,kernel_size=[7,1],strides=[1,1],padding='same')
        self.conv6rightbatch=layers.BatchNormalization()
        self.conv7right=layers.Conv2D(64,kernel_size=[1,7],strides=[1,1],padding='same')
        self.conv7rightbatch=layers.BatchNormalization()
        self.conv8right=layers.Conv2D(96,kernel_size=[3,3],strides=[1,1],padding='valid')
        self.conv8rightbatch=layers.BatchNormalization()

        #second split: max pooling in parallel with a strided convolution
        self.maxpool2=layers.MaxPool2D(pool_size=[3,3],strides=[2,2],padding='valid')
        self.conv9=layers.Conv2D(192,kernel_size=[3,3],strides=[2,2],padding='valid')
        self.conv9batch=layers.BatchNormalization()
        self.relu=layers.Activation('relu')

    def call(self,inputs,training=None):
        x_conv1=self.conv1(inputs)
        x_conv1=self.conv1batch(x_conv1)
        x_conv1=self.relu(x_conv1)
        x_conv2=self.conv2(x_conv1)
        x_conv2=self.conv2batch(x_conv2)
        x_conv2=self.relu(x_conv2)
        x_conv3=self.conv3(x_conv2)
        x_conv3=self.conv3batch(x_conv3)
        x_conv3=self.relu(x_conv3)

        max_x=self.maxpool1(x_conv3)
        max_conv_x=self.maxpool1conv4(x_conv3)
        max_conv_x=self.maxbatch1(max_conv_x)
        max_conv_x=self.relu(max_conv_x)
        #concatenate along the channel axis: 64+96=160 channels
        max_conv_out=tf.concat([max_x,max_conv_x],axis=3)

        xleft=self.conv5left(max_conv_out)
        xleft=self.conv5leftbatch(xleft)
        xleft=self.relu(xleft)
        xleft=self.conv6left(xleft)
        xleft=self.conv6leftbatch(xleft)
        xleft=self.relu(xleft)

        xright=self.conv5right(max_conv_out)
        xright=self.conv5rightbatch(xright)
        xright=self.relu(xright)
        xright=self.conv6right(xright)
        xright=self.conv6rightbatch(xright)
        xright=self.relu(xright)
        xright=self.conv7right(xright)
        xright=self.conv7rightbatch(xright)
        xright=self.relu(xright)
        xright=self.conv8right(xright)
        xright=self.conv8rightbatch(xright)
        xright=self.relu(xright)
        #96+96=192 channels
        x_left_right=tf.concat([xleft,xright],axis=3)

        x_max_2=self.maxpool2(x_left_right)
        x_conv9=self.conv9(x_left_right)
        x_conv9=self.conv9batch(x_conv9)
        x_conv9=self.relu(x_conv9)
        #192+192=384 channels
        x_max2_conv9=tf.concat([x_max_2,x_conv9],axis=3)
        return x_max2_conv9
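The stem mixes `'valid'` and `'same'` padding, so it helps to check the output shape by hand. The following is a minimal sketch in plain Python (no TensorFlow needed) that follows the spatial size and channel count through the stem layers, assuming a 299x299 input. The helper names `conv_out` and `trace_stem` are illustrative only and are not part of the original code.

```python
def conv_out(size, kernel, stride=1, padding="valid"):
    """Output length of one spatial dimension for a conv or pooling layer."""
    if padding == "same":
        return -(-size // stride)  # ceil(size / stride)
    return (size - kernel) // stride + 1


def trace_stem(size=299):
    """Follow (height, width, channels) through the stem's layers."""
    size = conv_out(size, 3, stride=2)        # conv1: 3x3, stride 2, valid
    size = conv_out(size, 3)                  # conv2: 3x3, valid
    size = conv_out(size, 3, padding="same")  # conv3: 3x3, same
    size = conv_out(size, 3, stride=2)        # maxpool1 and maxpool1conv4 in parallel
    channels = 64 + 96                        # concat: pooled 64 + conv 96
    # The 1x1, 7x1 and 1x7 convs use 'same' padding and keep the size;
    # each branch then ends in a 3x3 valid conv with 96 filters.
    size = conv_out(size, 3)
    channels = 96 + 96                        # concat of left and right branches
    size = conv_out(size, 3, stride=2)        # maxpool2 and conv9 in parallel
    channels = channels + 192                 # concat: pooled 192 + conv 192
    return size, size, channels


stem_shape = trace_stem(299)
print(stem_shape)  # (35, 35, 384)
```

The 384 output channels are what the residual A blocks expect as input.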

#Block A of InceptionV4_ResNet_V2 (input and output: 384 channels)
class InceptionV4_ResNet_V2_A(tf.keras.Model):
    def __init__(self):
        super(InceptionV4_ResNet_V2_A, self).__init__()
        #branch 1: 1x1
        self.conv1=layers.Conv2D(32,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv1batch=layers.BatchNormalization()
        #branch 2: 1x1 -> 3x3
        self.conv2=layers.Conv2D(32,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv2batch=layers.BatchNormalization()
        self.conv3=layers.Conv2D(32,kernel_size=[3,3],strides=[1,1],padding='same')
        self.conv3batch=layers.BatchNormalization()
        #branch 3: 1x1 -> 3x3 -> 3x3
        self.conv4=layers.Conv2D(32,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv4batch=layers.BatchNormalization()
        self.conv5=layers.Conv2D(48,kernel_size=[3,3],strides=[1,1],padding='same')
        self.conv5batch=layers.BatchNormalization()
        self.conv6=layers.Conv2D(64,kernel_size=[3,3],strides=[1,1],padding='same')
        self.conv6batch=layers.BatchNormalization()
        #1x1 projection back to 384 channels so the result can be added to the input
        self.conv1_3_6=layers.Conv2D(384,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv1_3_6batch=layers.BatchNormalization()
        self.relu=layers.Activation('relu')

    def call(self,inputs,training=None):
        x1=self.conv1(inputs)
        x1=self.conv1batch(x1)
        x1=self.relu(x1)

        x2=self.conv2(inputs)
        x2=self.conv2batch(x2)
        x2=self.relu(x2)
        x2=self.conv3(x2)
        x2=self.conv3batch(x2)
        x2=self.relu(x2)

        x3=self.conv4(inputs)
        x3=self.conv4batch(x3)
        x3=self.relu(x3)
        x3=self.conv5(x3)
        x3=self.conv5batch(x3)
        x3=self.relu(x3)
        x3=self.conv6(x3)
        x3=self.conv6batch(x3)
        x3=self.relu(x3)

        #32+32+64=128 channels
        x4=tf.concat([x1,x2,x3],axis=3)
        x5=self.conv1_3_6(x4)
        x5=self.conv1_3_6batch(x5)
        #residual connection
        x_out=tf.add(x5,inputs)
        x_out = self.relu(x_out)
        return x_out

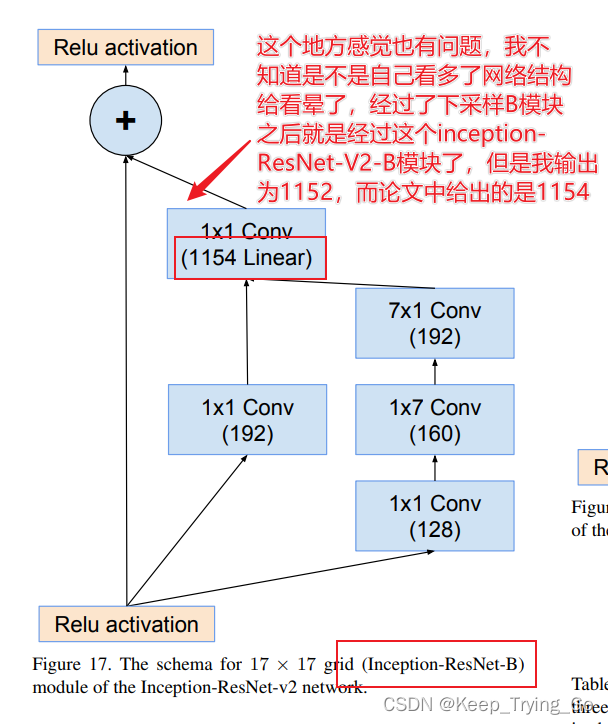

#Block B of InceptionV4_ResNet_V2 (input and output: 1152 channels)
class InceptionV4_ResNet_V2_B(tf.keras.Model):
    def __init__(self):
        super(InceptionV4_ResNet_V2_B, self).__init__()
        #branch 1: 1x1
        self.conv1=layers.Conv2D(192,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv1batch=layers.BatchNormalization()
        #branch 2: 1x1 -> 1x7 -> 7x1
        self.conv2=layers.Conv2D(128,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv2batch=layers.BatchNormalization()
        self.conv3=layers.Conv2D(160,kernel_size=[1,7],strides=[1,1],padding='same')
        self.conv3batch=layers.BatchNormalization()
        self.conv4=layers.Conv2D(192,kernel_size=[7,1],strides=[1,1],padding='same')
        self.conv4batch=layers.BatchNormalization()
        self.relu=layers.Activation('relu')
        #1x1 projection back to 1152 channels so the result can be added to the input
        self.conv5=layers.Conv2D(1152,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv5batch=layers.BatchNormalization()

    def call(self,inputs,training=None):
        print('np.shape: {}'.format(np.shape(inputs)))
        x=self.conv1(inputs)
        x=self.conv1batch(x)
        x=self.relu(x)

        x2=self.conv2(inputs)
        x2=self.conv2batch(x2)
        x2=self.relu(x2)
        x2=self.conv3(x2)
        x2=self.conv3batch(x2)
        x2=self.relu(x2)
        x2=self.conv4(x2)
        x2=self.conv4batch(x2)
        x2=self.relu(x2)

        #192+192=384 channels
        x3=tf.concat([x,x2],axis=3)
        x4=self.conv5(x3)
        x4=self.conv5batch(x4)
        #residual connection
        xx_out=tf.add(x4,inputs)
        xx_out=self.relu(xx_out)
        return xx_out

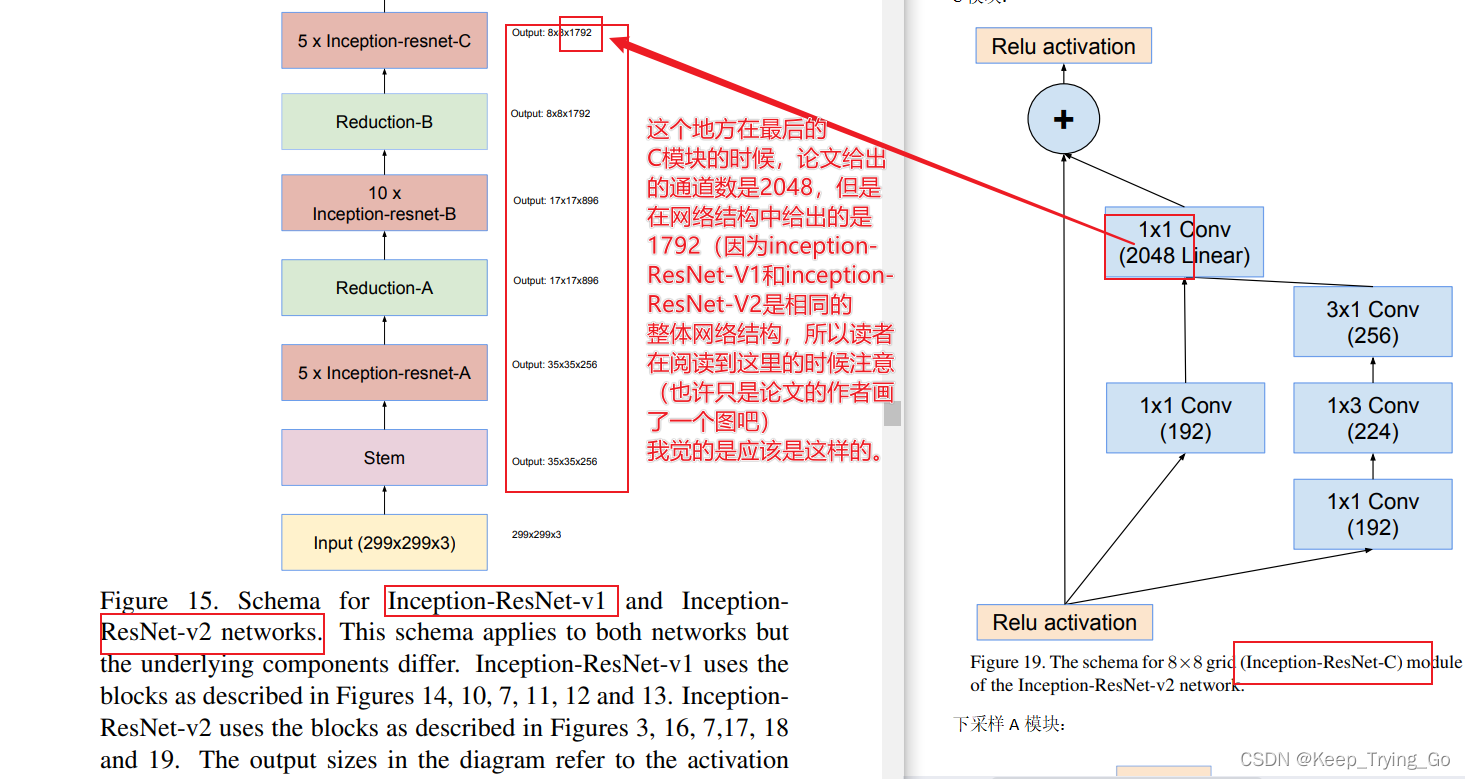

#Block C of InceptionV4_ResNet_V2 (input and output: 2048 channels)
class InceptionV4_ResNet_V2_C(tf.keras.Model):
    def __init__(self):
        super(InceptionV4_ResNet_V2_C, self).__init__()
        #branch 1: 1x1
        self.conv1=layers.Conv2D(192,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv1batch=layers.BatchNormalization()
        #branch 2: 1x1 -> 1x3 -> 3x1
        self.conv2=layers.Conv2D(192,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv2batch=layers.BatchNormalization()
        self.conv3=layers.Conv2D(224,kernel_size=[1,3],strides=[1,1],padding='same')
        self.conv3batch=layers.BatchNormalization()
        self.conv4=layers.Conv2D(256,kernel_size=[3,1],strides=[1,1],padding='same')
        self.conv4batch=layers.BatchNormalization()
        self.relu=layers.Activation('relu')
        #1x1 projection back to 2048 channels so the result can be added to the input
        self.conv5=layers.Conv2D(2048,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv5batch=layers.BatchNormalization()

    def call(self,inputs,training=None):
        x=self.conv1(inputs)
        x=self.conv1batch(x)
        x=self.relu(x)

        x2=self.conv2(inputs)
        x2=self.conv2batch(x2)
        x2=self.relu(x2)
        x2=self.conv3(x2)
        x2=self.conv3batch(x2)
        x2=self.relu(x2)
        x2=self.conv4(x2)
        x2=self.conv4batch(x2)
        x2=self.relu(x2)

        #192+256=448 channels
        x3=tf.concat([x,x2],axis=3)
        x4=self.conv5(x3)
        x4=self.conv5batch(x4)
        #residual connection
        xx_out=tf.add(x4,inputs)
        xx_out=self.relu(xx_out)
        return xx_out
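All three residual blocks follow the same pattern: concatenate the branch outputs, project them with a linear 1x1 convolution, then add the block input. `tf.add` only works here if the projection has as many filters as the input has channels. The sketch below tabulates that bookkeeping in plain Python. The `ResidualBlockSpec` class is hypothetical, and the input channel counts (384, 1152, 2048) are assumptions about what the surrounding modules deliver.

```python
from dataclasses import dataclass


@dataclass
class ResidualBlockSpec:
    name: str
    in_channels: int        # channels of the block input (the shortcut path)
    branch_channels: tuple  # filters of the last conv in each branch
    projection: int         # filters of the final 1x1 conv

    def concat_channels(self):
        """Channels after concatenating all branches."""
        return sum(self.branch_channels)

    def shortcut_matches(self):
        """True when the projected tensor can be added to the input."""
        return self.projection == self.in_channels


specs = [
    ResidualBlockSpec("A", 384, (32, 32, 64), 384),
    ResidualBlockSpec("B", 1152, (192, 192), 1152),
    ResidualBlockSpec("C", 2048, (192, 256), 2048),
]

for s in specs:
    print(s.name, s.concat_channels(), "->", s.projection, s.shortcut_matches())
```

If a block were fed a tensor with a different channel count, the addition would fail with a shape mismatch, which is why the reduction modules must output exactly 1152 and 2048 channels.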

#Reduction (downsampling) module A of Inception-ResNet-V2: 35x35x384 -> 17x17x1152
class InceptionV4_ResNet_ReducetionModuleA(tf.keras.Model):
    def __init__(self):
        super(InceptionV4_ResNet_ReducetionModuleA, self).__init__()
        #branch 1: max pooling
        self.maxpool=layers.MaxPool2D(pool_size=[3,3],strides=[2,2],padding='valid')
        #branch 2: strided 3x3
        self.conv1=layers.Conv2D(384,kernel_size=[3,3],strides=[2,2],padding='valid')
        self.conv1batch=layers.BatchNormalization()
        #branch 3: 1x1 -> 3x3 -> strided 3x3
        self.conv2=layers.Conv2D(256,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv2batch=layers.BatchNormalization()
        self.conv3=layers.Conv2D(256,kernel_size=[3,3],strides=[1,1],padding='same')
        self.conv3batch=layers.BatchNormalization()
        self.conv4=layers.Conv2D(384,kernel_size=[3,3],strides=[2,2],padding='valid')
        self.conv4batch=layers.BatchNormalization()
        self.relu=layers.Activation('relu')

    def call(self,inputs,training=None):
        maxpool=self.maxpool(inputs)

        conv1=self.conv1(inputs)
        conv1=self.conv1batch(conv1)
        conv1=self.relu(conv1)

        conv2=self.conv2(inputs)
        conv2=self.conv2batch(conv2)
        conv2=self.relu(conv2)
        conv3=self.conv3(conv2)
        conv3=self.conv3batch(conv3)
        conv3=self.relu(conv3)
        conv4=self.conv4(conv3)
        conv4=self.conv4batch(conv4)
        conv4=self.relu(conv4)

        #384+384+384=1152 channels
        x_out=tf.concat([conv1,maxpool,conv4],axis=3)
        return x_out

#Reduction (downsampling) module B of Inception-ResNet-V1: 17x17x1152 -> 8x8x2048
class InceptionV4_ResNet_ReducetionModuleB(tf.keras.Model):
    def __init__(self):
        super(InceptionV4_ResNet_ReducetionModuleB, self).__init__()
        #branch 1: max pooling
        self.maxpool=layers.MaxPool2D(pool_size=[3,3],strides=[2,2],padding='valid')
        #branch 2: 1x1 -> strided 3x3
        self.conv1=layers.Conv2D(256,kernel_size=[1,1],strides=[1,1],padding='same')
        self.conv1batch=layers.BatchNormalization()
        self.conv2 = layers.Conv2D(384, kernel_size=[3,3], strides=[2,2], padding='valid')
        self.conv2batch = layers.BatchNormalization()
        #branch 3: 1x1 -> strided 3x3
        self.conv3 = layers.Conv2D(256, kernel_size=[1, 1], strides=[1, 1], padding='same')
        self.conv3batch = layers.BatchNormalization()
        self.conv4 = layers.Conv2D(256, kernel_size=[3,3], strides=[2,2], padding='valid')
        self.conv4batch = layers.BatchNormalization()
        #branch 4: 1x1 -> 3x3 -> strided 3x3
        self.conv5 = layers.Conv2D(256, kernel_size=[1, 1], strides=[1, 1], padding='same')
        self.conv5batch = layers.BatchNormalization()
        self.conv6 = layers.Conv2D(256, kernel_size=[3,3], strides=[1, 1], padding='same')
        self.conv6batch = layers.BatchNormalization()
        self.conv7 = layers.Conv2D(256, kernel_size=[3, 3], strides=[2, 2], padding='valid')
        self.conv7batch = layers.BatchNormalization()
        self.relu=layers.Activation('relu')

    def call(self,inputs,training=None):
        max_x=self.maxpool(inputs)

        x=self.conv1(inputs)
        x=self.conv1batch(x)
        x=self.relu(x)
        x=self.conv2(x)
        x=self.conv2batch(x)
        x=self.relu(x)

        x2=self.conv3(inputs)
        x2=self.conv3batch(x2)
        x2=self.relu(x2)
        x2=self.conv4(x2)
        x2=self.conv4batch(x2)
        x2=self.relu(x2)

        x3=self.conv5(inputs)
        x3=self.conv5batch(x3)
        x3=self.relu(x3)
        x3=self.conv6(x3)
        x3=self.conv6batch(x3)
        x3=self.relu(x3)
        x3=self.conv7(x3)
        x3=self.conv7batch(x3)
        x3=self.relu(x3)

        #1152+384+256+256=2048 channels
        x_out=tf.concat([max_x,x,x2,x3],axis=3)
        return x_out
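Both reduction modules halve the spatial resolution with stride-2 `'valid'` layers and grow the channel count by concatenating a max-pool branch with the convolution branches. As a rough check, the sketch below reproduces that arithmetic in plain Python, assuming the modules receive a 35x35x384 tensor from the A blocks. The function names are illustrative and do not appear in the original code.

```python
def valid_out(size, kernel=3, stride=2):
    """Output size of a 'valid' conv/pool along one spatial dimension."""
    return (size - kernel) // stride + 1


def reduce_shape(size, in_channels, conv_branch_filters):
    """Shape after a reduction module.

    The max-pool branch keeps the input channels; each conv branch
    contributes the filter count of its last (stride-2) convolution.
    """
    return valid_out(size), in_channels + sum(conv_branch_filters)


size_a, ch_a = reduce_shape(35, 384, (384, 384))            # module A
size_b, ch_b = reduce_shape(size_a, ch_a, (384, 256, 256))  # module B
print((size_a, ch_a), (size_b, ch_b))  # (17, 1152) (8, 2048)
```

These two results line up with the 1152-filter projection in block B and the 2048-filter projection in block C.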

#Overall network structure
class Inception_ResNet_V2(tf.keras.Model):
    def __init__(self,blocks,num_classes):
        #call the parent constructor before setting any attributes
        super(Inception_ResNet_V2, self).__init__()
        #number of times each residual block is repeated
        self.inception_resnet_V2_A = blocks[0]
        self.inception_resnet_V2_B = blocks[1]
        self.inception_resnet_V2_C = blocks[2]

        self.stem=self.Stem()
        self.inceptionResNetA=self.inception_ResNet_V2_A(self.inception_resnet_V2_A)
        self.inceptionReduceA=self.inception_Reduce_A()
        self.inceptionResNetB = self.inception_ResNet_V2_B(self.inception_resnet_V2_B)
        self.inceptionReduceB=self.inception_Reduce_B()
        self.inceptionResNetC = self.inception_ResNet_V2_C(self.inception_resnet_V2_C)

        self.avgpool=layers.GlobalAveragePooling2D()
        #Keras `rate` is the fraction dropped; the paper specifies a keep probability of 0.8, i.e. rate=0.2
        self.dropout=layers.Dropout(rate=0.2)
        self.dense=layers.Dense(num_classes)
        self.softmax=layers.Activation('softmax')

    def Stem(self):
        stem=keras.Sequential([])
        stem.add(Inception_ResNet_V2_Stem())
        return stem

    def inception_ResNet_V2_A(self,blocks):
        inception_resnetA=keras.Sequential([])
        for i in range(blocks):
            inception_resnetA.add(InceptionV4_ResNet_V2_A())
        return inception_resnetA

    def inception_ResNet_V2_B(self,blocks):
        inception_resnetB=keras.Sequential([])
        for i in range(blocks):
            inception_resnetB.add(InceptionV4_ResNet_V2_B())
        return inception_resnetB

    def inception_ResNet_V2_C(self,blocks):
        inception_resnetC=keras.Sequential([])
        for i in range(blocks):
            inception_resnetC.add(InceptionV4_ResNet_V2_C())
        return inception_resnetC

    def inception_Reduce_A(self):
        redA=keras.Sequential([])
        redA.add(InceptionV4_ResNet_ReducetionModuleA())
        return redA

    def inception_Reduce_B(self):
        redB=keras.Sequential([])
        redB.add(InceptionV4_ResNet_ReducetionModuleB())
        return redB

    def call(self,inputs,training=None):
        x=self.stem(inputs)
        x=self.inceptionResNetA(x)
        x=self.inceptionReduceA(x)
        x = self.inceptionResNetB(x)
        x = self.inceptionReduceB(x)
        x = self.inceptionResNetC(x)
        x=self.avgpool(x)
        x=self.dropout(x)
        x=self.dense(x)
        x=self.softmax(x)
        return x
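One detail worth double-checking in the classifier head is the dropout setting. The Inception-v4 paper's network diagram quotes a keep probability of 0.8, while the `rate` argument of Keras's `Dropout` layer is the fraction of units to drop, so the two are complements of each other. The sketch below is a simplified plain-Python illustration of inverted dropout under that convention. The function `inverted_dropout` is a stand-in written for this example, not the Keras implementation.

```python
import random


def inverted_dropout(values, rate, rng):
    """Zero each value with probability `rate`; scale survivors by 1/(1-rate)."""
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


keep_prob = 0.8          # keep probability quoted in the paper
rate = 1.0 - keep_prob   # the value a Keras-style `rate` argument expects

rng = random.Random(0)   # fixed seed so the run is repeatable
out = inverted_dropout([1.0] * 10000, rate, rng)
dropped = sum(1 for v in out if v == 0.0) / len(out)
print(round(rate, 2), round(dropped, 2))
```

With `rate=0.8` instead, roughly four out of five pooled features would be zeroed on every training step, which is much stronger regularization than the paper describes.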

model_inception_resnet_v2=Inception_ResNet_V2(blocks=[5,10,5],num_classes=1000)

model_inception_resnet_v2.build(input_shape=(None,299,299,3))

model_inception_resnet_v2.summary()