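Background: I had scraped several novels but never found the time to read them (and I can't read at work either), so I figured I'd convert them to audio and listen instead (ˉ﹃ˉ)

Python version: 3.7.4

I started out on 3.8.x, but pyttsx3 has plenty of pitfalls: some of the modules it relies on only supported Python up to 3.7.4 and nothing newer, so it is best to use 3.7.4 or an earlier version.

What, you're on a newer version just like me? The easiest fix is as follows: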

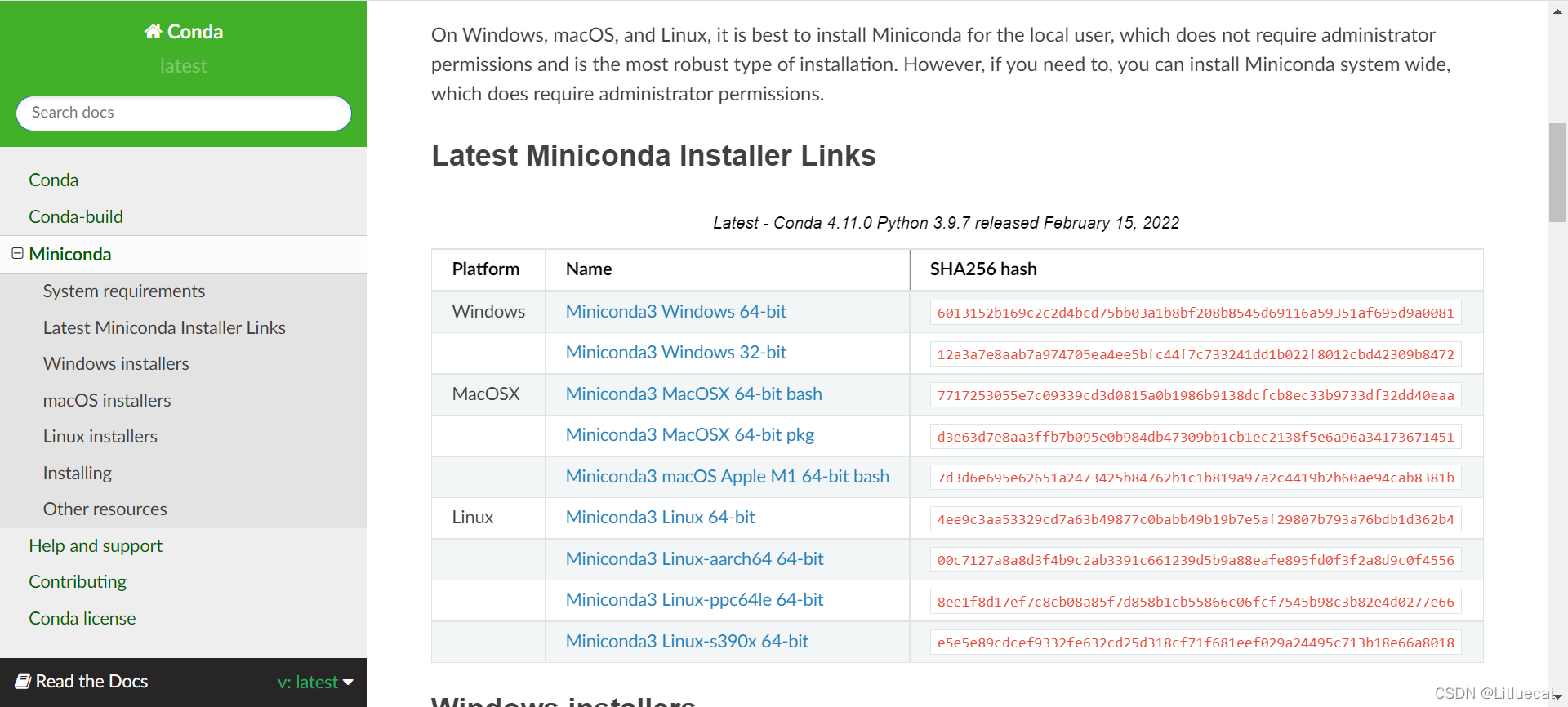

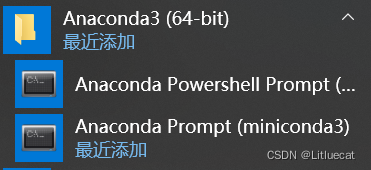

- Install Miniconda, open PowerShell, and run

conda install python==3.7.4

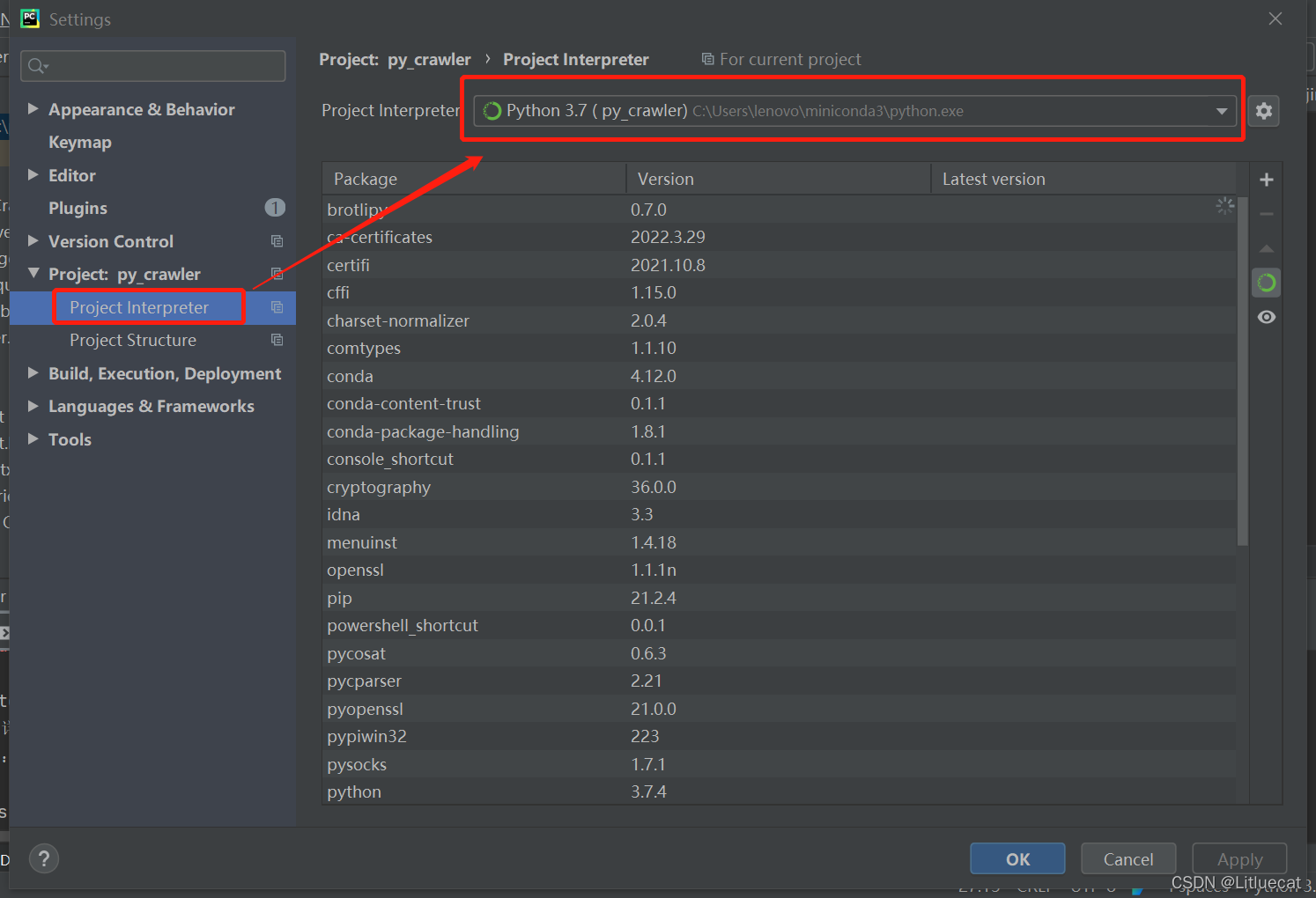

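- If your previous Python was not installed through Miniconda, you now have two installations: your original one, and a second one inside the Miniconda folder. So the project needs to be pointed at the new interpreter (alternatively, change the system environment variables).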

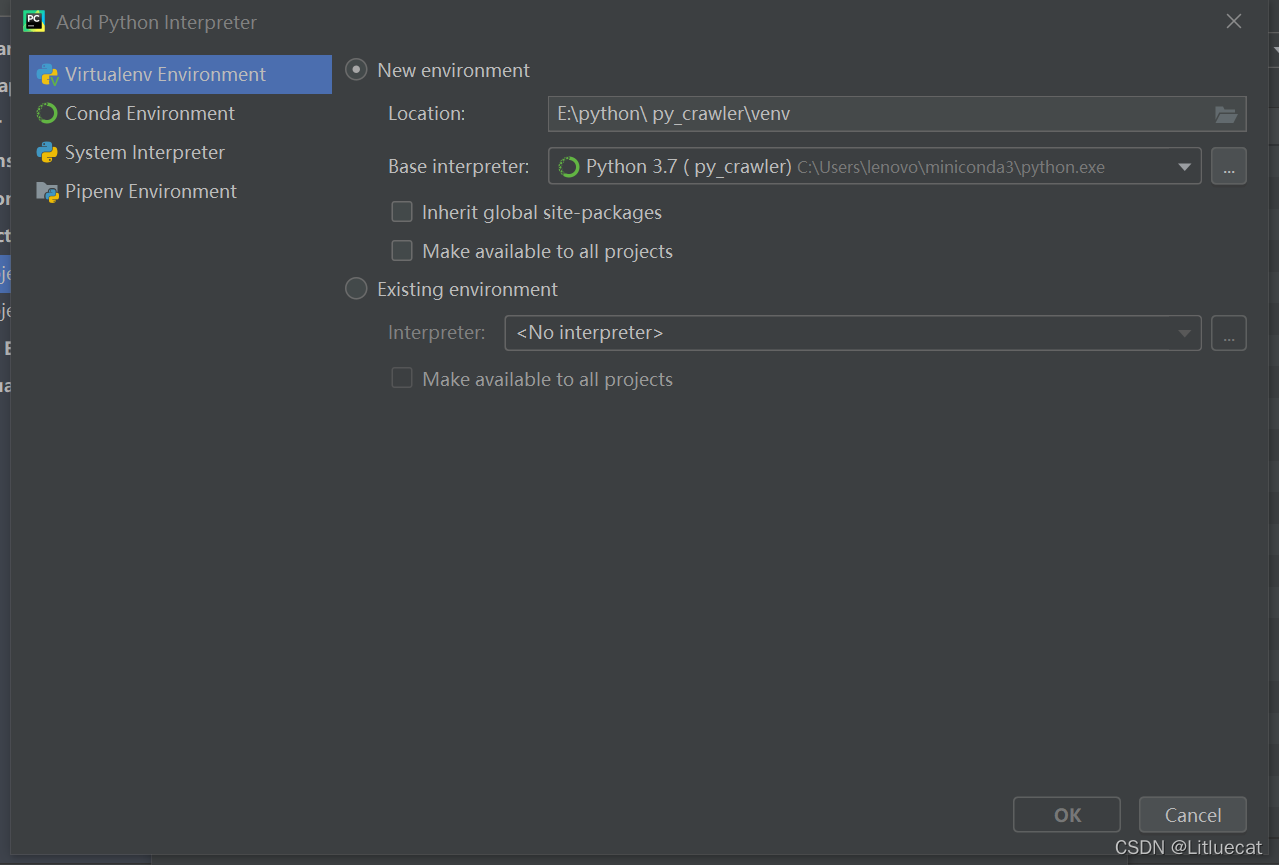

File --> Settings --> Project --> Show All --> +
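With two Python installations side by side, it is easy to end up running the wrong one. Here is a small sketch for checking which interpreter the project actually uses. The helper names `interpreter_summary` and `is_supported` are made up for illustration, and the 3.7.4 ceiling simply mirrors the version recommended above.

```python
import sys


def interpreter_summary():
    """Return the path and version of the interpreter running this script."""
    version = ".".join(str(part) for part in sys.version_info[:3])
    return f"{sys.executable} (Python {version})"


def is_supported(version_info, newest=(3, 7, 4)):
    """Return True if version_info is not newer than `newest`."""
    return tuple(version_info[:3]) <= newest


if __name__ == "__main__":
    print(interpreter_summary())
    if not is_supported(sys.version_info):
        print("Warning: this interpreter is newer than 3.7.4")
```

If the printed path points into the Miniconda folder, the project has picked up the new interpreter.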

- Start converting text to speech

pip install pyttsx3

```python
import pyttsx3


def main():
    # Get the engine: init() reuses an existing instance if there is one, otherwise creates it
    engine = pyttsx3.init()
    # Set the speech rate (default 200)
    engine.setProperty('rate', 180)
    # Set the volume: minimum 0, maximum 1, default 1
    engine.setProperty('volume', 1.0)
    # Get the list of speech-synthesis voices installed on this machine
    voices = engine.getProperty('voices')
    # Select the voice; pick the index according to your own voice list
    engine.setProperty('voice', voices[0].id)
    # Input text file and output audio file
    out_file = 'text2audio.mp3'
    in_file = '完美世界.txt'
    with open(in_file, 'r', encoding='utf-8') as f:
        my_text = f.read()
    # Speak the text aloud
    # engine.say(my_text)
    # Convert the text to audio and write it to out_file
    engine.save_to_file(my_text, out_file)
    engine.runAndWait()
    engine.stop()


if __name__ == '__main__':
    main()
```
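A whole novel is a lot of text to push through a single `save_to_file` call, so it may be more practical to cut it into pieces and write one audio file per piece. The following is only a sketch under a few assumptions: `split_text` and `save_chunks` are hypothetical helpers, the 2000-character limit is an arbitrary choice, and the `.wav` extension is a guess, since the audio format that actually gets written may depend on the platform's speech driver.

```python
def split_text(text, max_chars=2000):
    """Group non-empty lines into chunks of roughly max_chars characters.

    A single line longer than max_chars is kept whole as its own chunk.
    """
    chunks, current, size = [], [], 0
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Close the current chunk before it would grow past the limit
        if current and size + len(line) > max_chars:
            chunks.append("\n".join(current))
            current, size = [], 0
        current.append(line)
        size += len(line)
    if current:
        chunks.append("\n".join(current))
    return chunks


def save_chunks(engine, chunks, prefix="text2audio"):
    """Write one audio file per chunk and return the file names used."""
    names = []
    for number, chunk in enumerate(chunks, start=1):
        name = f"{prefix}_{number:03d}.wav"  # extension is an assumption
        engine.save_to_file(chunk, name)
        engine.runAndWait()  # finish this file before starting the next
        names.append(name)
    return names
```

With a real engine this would be called as `save_chunks(pyttsx3.init(), split_text(my_text))`. Because `save_chunks` only uses `save_to_file` and `runAndWait`, it can also be tried out with a stand-in object before touching the speech engine.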

- Bonus: the novel scraping code, requests version

```python
import datetime
import random
import time

import requests
from bs4 import BeautifulSoup


def get_html(url, headers):
    r = requests.get(url, headers=headers)
    r.encoding = r.apparent_encoding
    return r.text


def get_wanMeiShiJie(html, f):
    soup = BeautifulSoup(html, "html.parser")
    # Chapter title
    title = soup.find("div", class_="bookname").find("h1").string
    # Chapter content
    all_content = soup.find("div", id="content").text
    f.write(title + "\n\n")
    f.write(all_content + "\n\n")
    print(title + " ---- scraped")


def main():
    # Start time
    start_time = datetime.datetime.now()
    url = "https://www.xbiquge.la"
    start_url = "/26/26874/"
    headers = {"user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) "
                             "Chrome/96.0.4664.45 Safari/537.36"}
    temp_url = start_url
    # Pause for sleep_time seconds after every max_size chapters
    max_size = random.randint(50, 200)
    chapter_size = 0
    html = get_html(url + temp_url, headers)
    soup = BeautifulSoup(html, "html.parser")
    list_name = set()
    list_href = []
    list_a = soup.find('div', id="list").find_all('a')
    again_ = 1
    for _a in list_a:
        # To resume from a given chapter, start with again_ = 0 and uncomment:
        # if -1 < str(_a.text).find('第四千八百七十六章', 0, len(_a.text)):
        #     again_ = 1
        #     continue
        if again_ == 0 or _a.text in list_name:
            continue
        list_name.add(_a.text)
        list_href.append(_a['href'])
    print("[Collect all chapters and remove duplicates] done")
    with open('沧元图.txt', 'w', encoding='utf-8') as f:
        for href in list_href:
            html = get_html(url + href, headers)
            get_wanMeiShiJie(html, f)
            chapter_size += 1
            if max_size <= chapter_size:
                chapter_size = 0
                sleep_time = random.uniform(0.5, 5)
                print("---------- scraped " + str(max_size) + " chapters, resting " + str(sleep_time) + " seconds ----------")
                max_size = random.randint(50, 200)
                time.sleep(sleep_time)
    print("Scraping finished, total time:")
    print(datetime.datetime.now() - start_time)


if __name__ == '__main__':
    main()
```
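The chapter de-duplication step in the script above can be pulled out into a small function that is easy to try without hitting the site. This is only a sketch: `unique_chapter_urls` is a hypothetical helper, the chapter file names in the usage note are invented, and `urljoin` is swapped in for the plain string concatenation used in the script.

```python
from urllib.parse import urljoin


def unique_chapter_urls(base_url, links):
    """Return absolute chapter URLs in page order, one per chapter title.

    `links` is an iterable of (title, href) pairs; when a title shows up
    more than once, only its first link is kept.
    """
    seen_titles = set()
    urls = []
    for title, href in links:
        if title in seen_titles:
            continue
        seen_titles.add(title)
        urls.append(urljoin(base_url, href))
    return urls
```

For example, feeding it `[("Chapter 1", "/26/26874/1.html"), ("Chapter 2", "/26/26874/2.html"), ("Chapter 1", "/26/26874/1b.html")]` with the base URL `https://www.xbiquge.la` keeps only the first two links.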

- Bonus: scraping code, webdriver version

```python
from selenium import webdriver
from bs4 import BeautifulSoup
from selenium.webdriver.support.wait import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC


def get_html(url, driver, title):
    driver.get(url)
    # Explicit wait: up to 10 seconds, until the page title contains `title`, then return the page source
    WebDriverWait(driver, 10).until(EC.title_contains(title))
    return driver.page_source


def main():
    urls = [
        "http://www.7pingtan.com/archives/3199",
        "http://www.7pingtan.com/archives/2987",
        "http://www.7pingtan.com/archives/2450"
    ]
    driver = None
    f = None
    try:
        driver = webdriver.Chrome()
        f = open('平潭蓝眼泪.txt', 'w', encoding='utf-8')
        for url in urls:
            html = get_html(url, driver, "平潭蓝眼泪")
            soup = BeautifulSoup(html, "html.parser")
            _main = soup.find("div", id="content").find("div", class_="main").find("div", class_="content")
            all_url = _main.find_all("a")
            for index, temp_url in enumerate(all_url):
                # Skip the first two links
                if index in (0, 1):
                    continue
                _url = temp_url["href"]
                _html = get_html(_url, driver, "平潭蓝眼泪")
                soup = BeautifulSoup(_html, "html.parser")
                # Article content
                _main = soup.find("div", id="content").find("div", class_="content")
                all_content = _main.find_all("p")
                for content in all_content:
                    # Named `text` so the built-in str is not shadowed
                    text = content.text
                    if ("蓝眼泪" not in text) or ("(无)" in text):
                        break
                    parts = text.split("蓝眼泪实况:")
                    # Skip paragraphs without the report marker instead of raising IndexError
                    if len(parts) < 2:
                        continue
                    begin_time = parts[0].replace("夜光藻", "")
                    temp_strs = parts[1].split("。")
                    f.write(temp_strs[0] + begin_time + "\n")
    except Exception as e:
        print(e)
    finally:
        if f is not None:
            f.close()
        if driver is not None:
            # quit() ends the whole browser session, not just the current window
            driver.quit()


if __name__ == '__main__':
    main()
```
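The paragraph parsing buried in the innermost loop can also be written as a standalone function, which makes it testable without launching a browser. A minimal sketch, assuming each paragraph looks like "date + 夜光藻 + 蓝眼泪实况: + report": `parse_report` and `MARKER` are hypothetical names, and the sample sentence in the usage note is invented, not taken from the site. Unlike the loop above, it returns `None` for paragraphs it cannot use and leaves it to the caller whether to stop or carry on.

```python
MARKER = "蓝眼泪实况:"


def parse_report(text):
    """Return 'first report sentence + date prefix', or None if unusable."""
    if "蓝眼泪" not in text or "(无)" in text:
        return None
    prefix, found, rest = text.partition(MARKER)
    if not found:
        # The paragraph mentions 蓝眼泪 but has no report marker
        return None
    begin_time = prefix.replace("夜光藻", "")
    first_sentence = rest.split("。")[0]
    return first_sentence + begin_time
```

For the made-up input `"4月1日夜光藻蓝眼泪实况:坛南湾有少量。其他略"` this returns `"坛南湾有少量4月1日"`, the same string the loop would write to the file.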

Summary: don't let life get the better of you; there are plenty of ways to slack off.