Download the dataset

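The dataset was found online. It does not contain the same number of images as the dataset used in the original project, but that does not matter for this exercise.

Extraction code: jzyc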

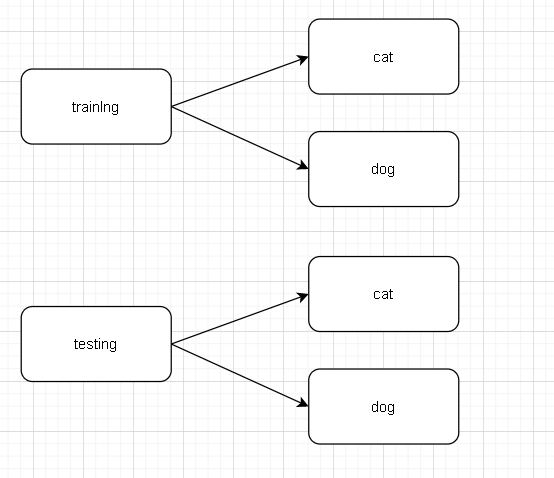

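Split the data

The full dataset needs to be split into a training set and a test set.

The downloaded zip file contains an img folder. Files named 0.xx.jpeg are cat images and files named 1.xx.jpeg are dog images. There are 12,000 images in total, 6,000 per class.

They need to be split as follows: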

import random

cat_dir = "E:/datasets/tmp/cat_dog/cat_dog/cat/"

dog_dir = "E:/datasets/tmp/cat_dog/cat_dog/dog/"

train_cat_dir = "E:/datasets/tmp/cat_dog/training/cat/"

train_dog_dir = "E:/datasets/tmp/cat_dog/training/dog/"

test_cat_dir = "E:/datasets/tmp/cat_dog/testing/cat/"

test_dog_dir = "E:/datasets/tmp/cat_dog/testing/dog/"

def split_data(source, train, test, split_size):

"""

根据传入的源文件夹,将总共的图片经过一定的比例放到训练和测试的文件夹中

param:

source: 要切分的所有图片的总文件夹

train: 要保存训练集图片的文件夹

test: 要保存测试集图片的文件夹

split_size: 切分的比例

return:

None

"""

files = []

for filename in os.listdir(source):

file = source + '/' + filename

files.append(filename)

train_len = int(len(files) * split_size)

test_len = len(files) - train_len

shuffled_set = random.sample(files, len(files))

train_set = shuffled_set[0 : train_len]

test_set = shuffled_set[-test_len: ]

for filename in train_set:

this_file = source + filename

destination = train + filename

copyfile(this_file, destination)

for filename in test_set:

this_file = source + filename

destination = test + filename

copyfile(this_file, destination)

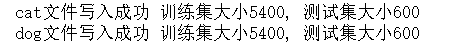

print("{}文件写入成功 训练集大小{}, 测试集大小{}".format(source.split('/')[-2] ,len(os.listdir(train)), len(os.listdir(test))))

split_data(cat_dir, train_cat_dir, test_cat_dir, 0.9)

split_data(dog_dir, train_dog_dir, test_dog_dir, 0.9)
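As a quick sanity check of the splitting logic, the following minimal sketch runs the same shuffle-and-slice approach on a temporary folder of empty placeholder files. The helper name `split_filenames`, its `seed` parameter, and the placeholder file names are assumptions made for illustration and are not part of the original project.

```python
import os
import random
import tempfile


def split_filenames(filenames, split_size, seed=None):
    """Shuffle filenames and return (train, test) lists.

    Hypothetical helper for illustration; `seed` makes the shuffle repeatable.
    """
    rng = random.Random(seed)
    shuffled = rng.sample(filenames, len(filenames))
    train_len = int(len(shuffled) * split_size)
    return shuffled[:train_len], shuffled[train_len:]


# Create 20 empty placeholder files named like the cat images (0.<n>.jpeg).
with tempfile.TemporaryDirectory() as source:
    for n in range(20):
        open(os.path.join(source, "0.{}.jpeg".format(n)), "w").close()
    all_files = sorted(os.listdir(source))
    train_set, test_set = split_filenames(all_files, 0.9, seed=0)

print(len(train_set), len(test_set))
```

Fixing the seed is optional. It only helps when the same split has to be reproduced later, for example to compare two training runs on identical data.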

Data preprocessing

Rescale the image pixel values, and set batch_size, the class mode, and the target image size.

from tensorflow.keras.preprocessing.image import ImageDataGenerator

train_dir = "E:/datasets/tmp/cat_dog/training/"
validation_dir = "E:/datasets/tmp/cat_dog/testing/"

# rescale=1/255 maps pixel values from [0, 255] into [0, 1].
train_datagen = ImageDataGenerator(rescale=1/255)
train_generator = train_datagen.flow_from_directory(
    train_dir, batch_size=54, class_mode='binary', target_size=(100, 100))

validation_datagen = ImageDataGenerator(rescale=1/255)
validation_generator = validation_datagen.flow_from_directory(
    validation_dir, batch_size=60, class_mode='binary', target_size=(100, 100))

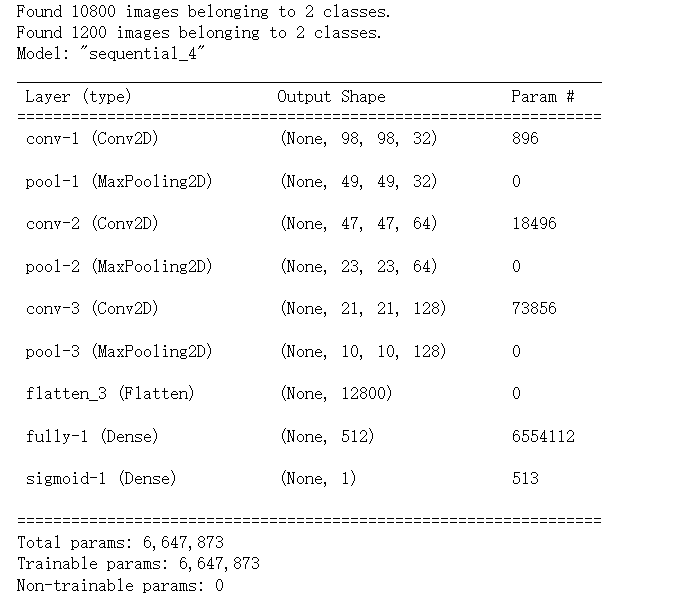
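To make the preprocessing settings concrete, here is a small sketch of the arithmetic behind them. It assumes the counts stated earlier (6,000 images per class and a 0.9 split). If your copy of the dataset has a different size, the numbers change accordingly. The variable names are illustrative only.

```python
import math

# Assumed counts: 6000 images per class and a 0.9 split, as described above.
images_per_class = 6000
split_size = 0.9
train_per_class = int(images_per_class * split_size)
test_per_class = images_per_class - train_per_class

train_total = 2 * train_per_class
test_total = 2 * test_per_class

# One epoch visits every image once, so the number of batches per epoch is
# the image count divided by batch_size, rounded up.
train_steps = math.ceil(train_total / 54)
test_steps = math.ceil(test_total / 60)

# flow_from_directory assigns class indices in alphanumeric order of the
# subfolder names, so "cat" becomes 0 and "dog" becomes 1.
class_indices = {name: i for i, name in enumerate(sorted(["dog", "cat"]))}

# rescale=1/255 maps 8-bit pixel values from [0, 255] into [0, 1].
rescaled = [value * (1 / 255) for value in (0, 128, 255)]

print(train_total, test_total, train_steps, test_steps, class_indices)
```

Under these assumptions both batch sizes divide their image counts evenly, so no epoch ends with a partial batch.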

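Build the neural network

The network follows the LeNet-5 pattern of alternating convolution and pooling layers followed by fully connected layers, although its layer sizes differ from the original LeNet-5.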

import tensorflow as tf
from tensorflow.keras.optimizers import RMSprop

def netWork():
    model = tf.keras.Sequential()
    # Three convolution + max-pooling stages extract image features.
    model.add(tf.keras.layers.Conv2D(32, (3, 3), input_shape=(100, 100, 3), activation='relu', name='conv-1'))
    model.add(tf.keras.layers.MaxPooling2D((2, 2), name='pool-1'))
    model.add(tf.keras.layers.Conv2D(64, (3, 3), activation='relu', name='conv-2'))
    model.add(tf.keras.layers.MaxPooling2D((2, 2), name='pool-2'))
    model.add(tf.keras.layers.Conv2D(128, (3, 3), activation='relu', name='conv-3'))
    model.add(tf.keras.layers.MaxPooling2D((2, 2), name='pool-3'))
    # Flatten the feature maps and classify with fully connected layers.
    model.add(tf.keras.layers.Flatten())
    model.add(tf.keras.layers.Dense(512, activation='relu', name='fully-1'))
    # A single sigmoid unit outputs the probability of class index 1.
    model.add(tf.keras.layers.Dense(1, activation='sigmoid', name='sigmoid-1'))
    model.compile(optimizer=RMSprop(learning_rate=0.01), loss=tf.losses.binary_crossentropy, metrics=['accuracy'])
    model.summary()
    return model

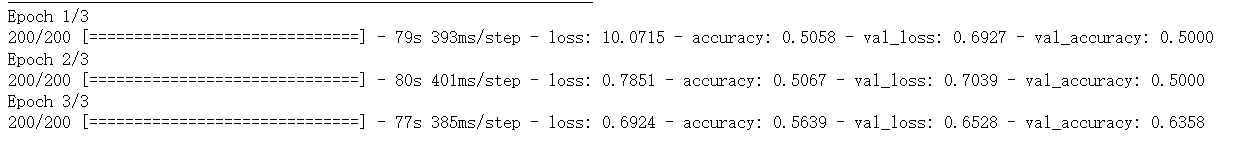
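The shapes and parameter counts reported by `model.summary()` can be checked by hand. The sketch below recomputes them for the layers defined above, assuming the Keras defaults of stride 1 and 'valid' padding for `Conv2D` and a stride equal to the pool size for `MaxPooling2D`. The helper names are illustrative.

```python
def conv_out(size, kernel=3):
    """Spatial size after a 'valid' convolution with stride 1."""
    return size - kernel + 1


def pool_out(size, pool=2):
    """Spatial size after 'valid' max pooling with stride equal to pool."""
    return size // pool


def conv_params(kernel, in_channels, out_channels):
    """Weights plus one bias per output channel."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(n_in, n_out):
    """Weights plus one bias per output unit."""
    return n_in * n_out + n_out


size, channels = 100, 3
total = 0
for filters in (32, 64, 128):
    total += conv_params(3, channels, filters)
    size = pool_out(conv_out(size))
    channels = filters

flat = size * size * channels
total += dense_params(flat, 512) + dense_params(512, 1)

print(size, flat, total)
```

Almost all of the parameters sit in the first dense layer, which connects the flattened feature maps to 512 units.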

Train the model with fit

model = netWork()

# Keep the returned History object; the plotting code reads it later.
history = model.fit(train_generator,
                    epochs=3,
                    verbose=1,
                    validation_data=validation_generator)

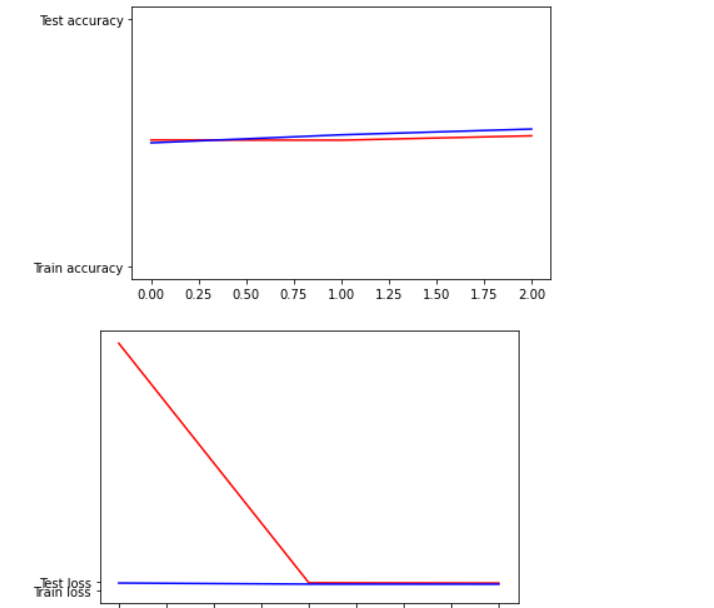
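The loss and accuracy values printed during training come from binary cross-entropy and thresholded accuracy. The following is a minimal pure-Python sketch of both. The `eps` clipping and the function names are assumptions for illustration, and Keras' exact numerical handling may differ.

```python
import math


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean of -(y*log(p) + (1-y)*log(1-p)) over all samples."""
    losses = []
    for y, p in zip(y_true, y_pred):
        # Clip predictions away from 0 and 1 to avoid log(0).
        p = min(max(p, eps), 1 - eps)
        losses.append(-(y * math.log(p) + (1 - y) * math.log(1 - p)))
    return sum(losses) / len(losses)


def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the label."""
    hits = sum(int(p > threshold) == y for y, p in zip(y_true, y_pred))
    return hits / len(y_true)


labels = [1, 0, 1, 0]            # 1 = dog, 0 = cat
scores = [0.9, 0.2, 0.4, 0.6]    # hypothetical sigmoid outputs
print(binary_crossentropy(labels, scores), binary_accuracy(labels, scores))
```

A confident wrong prediction is penalized much more heavily by the loss than by the accuracy, which is why the two curves can move differently from epoch to epoch.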

Plot the accuracy and loss curves

import matplotlib.pyplot as plt

# history is the History object returned by model.fit(...).
acc = history.history['accuracy']
val_acc = history.history['val_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']
epochs = range(len(acc))

# First figure: accuracy per epoch.
plt.figure()
plt.plot(epochs, acc, 'r', label="Train accuracy")
plt.plot(epochs, val_acc, 'b', label="Test accuracy")
plt.legend()

# Second figure: loss per epoch.
plt.figure()
plt.plot(epochs, loss, 'r', label="Train loss")
plt.plot(epochs, val_loss, 'b', label="Test loss")
plt.legend()

plt.show()

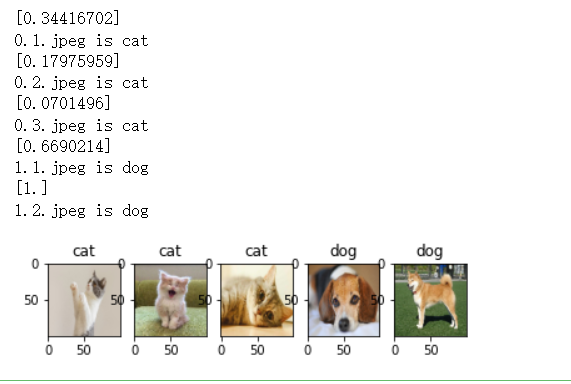

Test with your own data

Add your own images to the project to check how well the trained model performs.

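import os

import matplotlib.pyplot as plt
import numpy as np
from tensorflow.keras.preprocessing import image

path = './image/'
index = 1
for file in os.listdir(path):
    img = image.load_img(path + file, target_size=(100, 100))
    # Apply the same 1/255 rescaling that the training generator used.
    x = image.img_to_array(img) / 255.0
    # Add a batch dimension: (100, 100, 3) -> (1, 100, 100, 3).
    images = np.expand_dims(x, axis=0)
    classes = model.predict(images, batch_size=10)
    print(classes[0])

    # The grid has 1 row and 5 columns, so it holds at most 5 images.
    plt.subplot(1, 5, index)
    plt.imshow(img)
    # The title shows the true label from the file name prefix (0 = cat).
    num = file.split('.')[0]
    if num == '0':
        plt.title('cat')
    else:
        plt.title('dog')

    # A sigmoid output above 0.5 means class index 1, which is dog.
    if classes[0][0] > 0.5:
        print(file + " is dog")
    else:
        print(file + " is cat")
    index += 1
plt.show()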
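The decision rule used in this check can be isolated and tested without a trained model. The sketch below compares the label implied by the file name prefix with the label implied by a sigmoid score. The function names and the sample scores are hypothetical and serve only to illustrate the rule.

```python
def label_from_filename(filename):
    """Prefix 0 marks a cat image; any other prefix is treated as dog."""
    return "cat" if filename.split(".")[0] == "0" else "dog"


def label_from_score(score, threshold=0.5):
    """Scores above the threshold map to class index 1, which is dog."""
    return "dog" if score > threshold else "cat"


# Hypothetical (file name, sigmoid output) pairs, not real model results.
samples = [("0.1.jpeg", 0.12), ("1.7.jpeg", 0.93), ("0.3.jpeg", 0.64)]

correct = sum(
    label_from_filename(name) == label_from_score(score)
    for name, score in samples
)
print("{}/{} correct".format(correct, len(samples)))
```

Counting matches this way gives a simple accuracy figure for the hand-picked images, which is easier to compare across runs than reading the individual printed lines.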