TF-IDF

Reference: https://mofanpy.com/tutorials/machine-learning/nlp/intro-search/

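TF-IDF is used to find the best matches for a search. It represents each article by how important and how distinctive its words are, then compares the search query against these representations and returns a list of the most similar articles.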

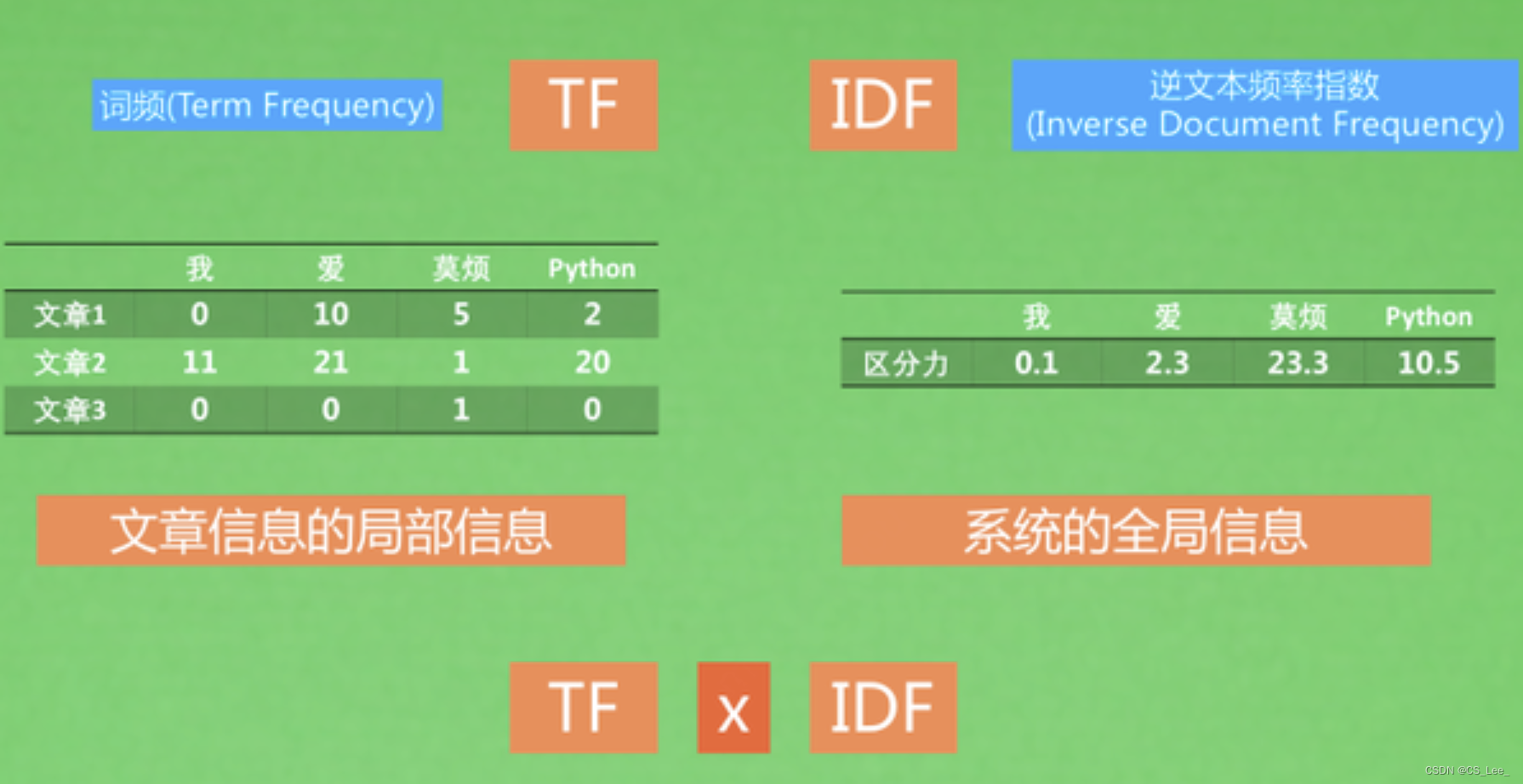

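The more important something is to an article, the more often it is emphasized, so the more frequently its words appear. Words with a high term frequency, TF (Term Frequency), characterize a given article. In essence, the TF of word w in document d is TF = the number of times w occurs in d.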

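However, some high-frequency words (such as "is" or "a") carry little meaning and do not help tell documents apart, so looking only at local information (the TF within a single document) introduces statistical bias. A global quantity, IDF (Inverse Document Frequency), is therefore introduced to measure how discriminative a word is across the whole collection. In essence, the IDF of word w is IDF = log(total number of documents / number of documents containing w). The TF-IDF weight of w in d is the product TF × IDF.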
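As a quick illustration of the two basic formulas above, here is a minimal pure-Python sketch that uses raw counts for TF and log(N / df) for IDF (natural log assumed), with no smoothing or normalization. The function names and toy documents are hypothetical, chosen only for the example.

```python
import math
from collections import Counter


def raw_tf(word, doc_words):
    # TF: how many times `word` occurs in this document
    return Counter(doc_words)[word]


def raw_idf(word, all_docs_words):
    # IDF: log(total number of documents / number of documents containing `word`)
    # (assumes the word occurs in at least one document, otherwise df would be 0)
    df = sum(1 for d in all_docs_words if word in d)
    return math.log(len(all_docs_words) / df)


toy_docs = [
    "it is a good day".split(),
    "today is a good day".split(),
    "it is a dog".split(),
]

# "is" occurs in every document, so its IDF (and therefore its TF-IDF) is 0
print(raw_tf("is", toy_docs[0]) * raw_idf("is", toy_docs))  # 0.0
# "dog" occurs in only one document, so it is distinctive for that document
print(raw_tf("dog", toy_docs[2]) * raw_idf("dog", toy_docs))  # log(3), about 1.0986
```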

Of course, there are many variant formulas for both TF and IDF.
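Written out in notation, these are the variants named in tf_methods and idf_methods in the code below (my own transcription, not the author's: N is the number of documents, n(w,d) the count of word w in document d, f(w,d) that count divided by the count of the most frequent word in d, which is how the code normalizes TF before weighting it, and df(w) the number of documents containing w; log is the natural log). The augmented and boolean variants are given in their usual form.

```latex
\begin{aligned}
f_{w,d} &= \frac{n_{w,d}}{\max_{w'} n_{w',d}} \\
\mathrm{tf}_{\mathrm{log}}(w,d) &= \ln\left(1 + f_{w,d}\right) \\
\mathrm{tf}_{\mathrm{augmented}}(w,d) &= 0.5 + 0.5\,\frac{n_{w,d}}{\max_{w'} n_{w',d}} \\
\mathrm{tf}_{\mathrm{boolean}}(w,d) &= \begin{cases} 1 & n_{w,d} > 0 \\ 0 & \text{otherwise} \end{cases} \\
\mathrm{idf}_{\mathrm{log}}(w) &= 1 + \ln\frac{N}{\mathrm{df}(w) + 1} \\
\mathrm{idf}_{\mathrm{prob}}(w) &= \max\left(0,\ \ln\frac{N - \mathrm{df}(w)}{\mathrm{df}(w) + 1}\right) \\
\text{tf-idf}(w,d) &= \mathrm{tf}(w,d) \cdot \mathrm{idf}(w)
\end{aligned}
```

The "+ 1" in the IDF denominators keeps the division defined for a word that appears in no document (for example, an unseen query word).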

![image](https://img-blog.csdnimg.cn/77f6669fe02d4dd397e51a762d06ed4c.png)

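The simplest search engine precomputes the vectors of all documents. Whenever a query arrives, it computes the query's TF-IDF values over the same vocabulary, turning the query into a vector of the same kind, and then measures the distance between the query vector and every document vector: the closer they are, the more similar the document. Let's implement this process: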

import numpy as np
from collections import Counter
import itertools
from visual import show_tfidf  # this refers to visual.py in my [repo](https://github.com/MorvanZhou/NLP-Tutorials/)

# Suppose these are all of our documents: 15 in total
docs = [
    "it is a good day, I like to stay here",
    "I am happy to be here",
    "I am bob",
    "it is sunny today",
    "I have a party today",
    "it is a dog and that is a cat",
    "there are dog and cat on the tree",
    "I study hard this morning",
    "today is a good day",
    "tomorrow will be a good day",
    "I like coffee, I like book and I like apple",
    "I do not like it",
    "I am kitty, I like bob",
    "I do not care who like bob, but I like kitty",
    "It is coffee time, bring your cup",
]

# Split each document into words and give every word an integer ID, which makes counting by ID easy later on
docs_words = [d.replace(",", "").split(" ") for d in docs]
# print(docs_words)
vocab = set(itertools.chain(*docs_words))
# print(vocab)
v2i = {v: i for i, v in enumerate(vocab)}
i2v = {i: v for v, i in v2i.items()}
# print(v2i, i2v)

# Build the TF-IDF vector representation of every document
idf_methods = {
    "log": lambda x: 1 + np.log(len(docs) / (x + 1)),
    "prob": lambda x: np.maximum(0, np.log((len(docs) - x) / (x + 1))),
    "len_norm": lambda x: x / (np.sum(np.square(x)) + 1),  # fixed typo: np,square -> np.square
}


def get_idf(method="log"):
    # inverse document frequency: a word that appears in more docs gets a lower idf,
    # meaning it is less important
    df = np.zeros((len(i2v), 1))
    for i in range(len(i2v)):
        d_count = 0
        for d in docs_words:
            d_count += 1 if i2v[i] in d else 0
        df[i] = d_count

    idf_fn = idf_methods.get(method, None)  # dict.get() takes no keyword args, so don't write default=None
    if idf_fn is None:
        raise ValueError(f"unknown idf method: {method}")
    return idf_fn(df)  # [n_vocab, 1]


# def safe_log(x):
#     mask = x != 0
#     x[mask] = np.log(x[mask])
#     return x

tf_methods = {
    "log": lambda x: np.log(1 + x),
    # augmented TF divides by the largest value in each document (column), i.e. axis=0
    "augmented": lambda x: 0.5 + 0.5 * x / np.max(x, axis=0, keepdims=True),
    # boolean TF: 1 if the word occurs in the doc, else 0 (x is already normalized to (0, 1] here)
    "boolean": lambda x: (x > 0).astype(np.float64),
    # "log_avg": lambda x: (1 + safe_log(x)) / (1 + safe_log(np.mean(x, axis=1, keepdims=True))),
}


def get_tf(method="log"):
    # term frequency: how frequently a word appears in a doc
    _tf = np.zeros((len(vocab), len(docs)), dtype=np.float64)  # [n_vocab, n_doc]
    for i, d in enumerate(docs_words):
        counter = Counter(d)
        for v in counter.keys():
            # normalize by the count of the most frequent word in this doc
            _tf[v2i[v], i] = counter[v] / counter.most_common(1)[0][1]

    weighted_tf = tf_methods.get(method, None)
    if weighted_tf is None:
        raise ValueError(f"unknown tf method: {method}")
    return weighted_tf(_tf)  # [n_vocab, n_doc]


tf = get_tf()  # [n_vocab, n_doc]
idf = get_idf()  # [n_vocab, 1]
tf_idf = tf * idf  # [n_vocab, n_doc]
# print("tf shape (vocab in each doc): ", tf.shape)
# print("\ntf samples:\n", tf[:2])
# print("\nidf shape (vocab in all docs): ", idf.shape)
# print("\nidf samples:\n", idf[:2])
# print("\ntf_idf shape: ", tf_idf.shape)
# print("\ntf_idf sample:\n", tf_idf[:2])

# Search for "I get a coffee cup" and return the 3 documents (out of 15) most similar to this query
def cosine_similarity(q, _tf_idf):
    unit_q = q / np.sqrt(np.sum(np.square(q), axis=0, keepdims=True))
    unit_ds = _tf_idf / np.sqrt(np.sum(np.square(_tf_idf), axis=0, keepdims=True))
    similarity = unit_ds.T.dot(unit_q).ravel()
    return similarity


def docs_score(q, len_norm=False):
    q_words = q.replace(",", "").split(" ")

    # add unknown words
    unknown_v = 0
    for v in set(q_words):
        if v not in v2i:
            v2i[v] = len(v2i)
            i2v[len(v2i) - 1] = v
            unknown_v += 1
    # np.float was removed in NumPy 1.24, so use np.float64
    if unknown_v > 0:
        _idf = np.concatenate((idf, np.zeros((unknown_v, 1), dtype=np.float64)), axis=0)
        _tf_idf = np.concatenate((tf_idf, np.zeros((unknown_v, tf_idf.shape[1]), dtype=np.float64)), axis=0)
    else:
        _idf, _tf_idf = idf, tf_idf
    counter = Counter(q_words)
    q_tf = np.zeros((len(_idf), 1), dtype=np.float64)  # [n_vocab, 1]
    for v in counter.keys():
        q_tf[v2i[v], 0] = counter[v]

    # ------------------------------------------------
    q_vec = q_tf * _idf  # [n_vocab, 1]
    q_scores = cosine_similarity(q_vec, _tf_idf)
    # ------------------------------------------------
    if len_norm:
        len_docs = [len(d) for d in docs_words]
        q_scores = q_scores / np.array(len_docs)
    return q_scores


q = "I get a coffee cup"
scores = docs_score(q)
d_ids = scores.argsort()[-3:][::-1]
print("\ntop 3 docs for '{}':\n{}".format(q, [docs[i] for i in d_ids]))

# The resulting TF-IDF is a word-by-document matrix: each document is represented as a vector over the vocabulary
show_tfidf(tf_idf.T, [i2v[i] for i in range(tf_idf.shape[0])], "tfidf_matrix")


# Pick the top 2 keywords for each of the first 3 documents
def get_keywords(n=2):
    for c in range(3):
        col = tf_idf[:, c]
        idx = np.argsort(col)[-n:]
        print("doc{}, top{} keywords {}".format(c, n, [i2v[i] for i in idx]))


get_keywords()

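In this huge two-dimensional TF-IDF table, indexed by document on one axis and by word on the other, no document mentions every word in the vocabulary, and the entries for the words it does not mention are zero and need not be stored. To reduce memory usage, a sparse matrix (Sparse Matrix) is used instead. sklearn returns its TF-IDF result as a sparse matrix, which makes computing and storing massive amounts of such mostly-empty data faster and more efficient.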
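To make the memory argument concrete, here is a minimal pure-Python sketch of the compressed sparse row (CSR) layout, the same data / indices / indptr scheme that scipy.sparse.csr_matrix uses, storing only the non-zero entries of a small document-by-word matrix. The helper names `to_csr` and `csr_row` are hypothetical; this illustrates the layout and is not SciPy's implementation.

```python
def to_csr(dense):
    # Keep only the non-zero values, row by row
    data, indices, indptr = [], [], [0]
    for row in dense:
        for col, value in enumerate(row):
            if value != 0:
                data.append(value)    # the non-zero value itself
                indices.append(col)   # its column (word) index
        indptr.append(len(data))      # where the next row starts in `data`
    return data, indices, indptr


def csr_row(data, indices, indptr, r, n_cols):
    # Rebuild one dense row from the CSR arrays
    row = [0.0] * n_cols
    for k in range(indptr[r], indptr[r + 1]):
        row[indices[k]] = data[k]
    return row


# 3 documents x 6 words, mostly zeros (toy TF-IDF values)
dense = [
    [0.0, 0.7, 0.0, 0.0, 0.0, 0.2],
    [0.0, 0.0, 0.0, 0.5, 0.0, 0.0],
    [0.9, 0.0, 0.0, 0.0, 0.0, 0.0],
]
data, indices, indptr = to_csr(dense)
print(data)     # [0.7, 0.2, 0.5, 0.9]
print(indices)  # [1, 5, 3, 0]
print(indptr)   # [0, 2, 3, 4]
```

Only the 4 non-zero values (plus their column indices and the row pointers) are kept instead of all 18 cells; with a real vocabulary of tens of thousands of words per row, the saving is far larger.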

Using the same made-up documents as above, calling sklearn's TF-IDF directly is much more convenient:

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity
from visual import show_tfidf  # this refers to visual.py in my [repo](https://github.com/MorvanZhou/NLP-Tutorials/)

docs = [
    "it is a good day, I like to stay here",
    "I am happy to be here",
    "I am bob",
    "it is sunny today",
    "I have a party today",
    "it is a dog and that is a cat",
    "there are dog and cat on the tree",
    "I study hard this morning",
    "today is a good day",
    "tomorrow will be a good day",
    "I like coffee, I like book and I like apple",
    "I do not like it",
    "I am kitty, I like bob",
    "I do not care who like bob, but I like kitty",
    "It is coffee time, bring your cup",
]

# Compute the TF-IDF of the documents
# vectorizer.idf_ holds the precomputed IDF of every term
vectorizer = TfidfVectorizer()
tf_idf = vectorizer.fit_transform(docs)  # sparse matrix, [n_doc, n_vocab]
# get_feature_names() was removed in scikit-learn 1.2; use get_feature_names_out()
print("idf: ", [(n, idf) for idf, n in zip(vectorizer.idf_, vectorizer.get_feature_names_out())])
print("v2i: ", vectorizer.vocabulary_)

q = "I get a coffee cup"
qtf_idf = vectorizer.transform([q])
res = cosine_similarity(tf_idf, qtf_idf)
res = res.ravel().argsort()[-3:]
print("\ntop 3 docs for '{}':\n{}".format(q, [docs[i] for i in res[::-1]]))

i2v = {i: v for v, i in vectorizer.vocabulary_.items()}
dense_tfidf = tf_idf.todense()
show_tfidf(dense_tfidf, [i2v[i] for i in range(dense_tfidf.shape[1])], "tfidf_sklearn_matrix")
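The numbers sklearn prints will not match the hand-written version above, because TfidfVectorizer() with default settings lowercases the text, keeps only tokens of two or more word characters (so "I" and "a" are dropped and the query effectively becomes "get coffee cup"), uses the smoothed IDF ln((1 + N) / (1 + df(w))) + 1, multiplies it by the raw count, and L2-normalizes each document vector. Below is a minimal pure-Python sketch of that pipeline, written from the documented defaults; the function names are hypothetical and this is not sklearn's code.

```python
import math
import re
from collections import Counter

TOKEN_RE = re.compile(r"(?u)\b\w\w+\b")  # sklearn's default token_pattern


def tokenize(text):
    # lowercase=True, then keep tokens of 2+ word characters
    return TOKEN_RE.findall(text.lower())


def smooth_idf(docs):
    tokenized = [set(tokenize(d)) for d in docs]
    vocab = sorted(set().union(*tokenized))  # features are ordered alphabetically
    n = len(docs)
    return {
        w: math.log((1 + n) / (1 + sum(w in t for t in tokenized))) + 1
        for w in vocab
    }


def tfidf_vector(text, idf):
    # raw counts times idf, then L2-normalize; out-of-vocabulary words are ignored
    counts = Counter(w for w in tokenize(text) if w in idf)
    vec = {w: c * idf[w] for w, c in counts.items()}
    norm = math.sqrt(sum(v * v for v in vec.values()))
    return {w: v / norm for w, v in vec.items()} if norm else vec


toy_docs = ["it is a good day", "I am bob", "I like bob"]
idf = smooth_idf(toy_docs)
print(sorted(idf))  # ['am', 'bob', 'day', 'good', 'is', 'it', 'like']
print(tokenize("I get a coffee cup"))  # ['get', 'coffee', 'cup']
print(tfidf_vector("I like bob", idf))
```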

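In addition, TF-IDF vectors can serve as training data: once documents have been turned into vectors, they can be fed into a neural network as input for downstream fitting tasks such as classification or regression.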
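As a small illustration of TF-IDF vectors used as features, here is a minimal pure-Python nearest-centroid classifier: each class is summarized by the mean of its documents' vectors, and a new document gets the label of the most cosine-similar centroid. The toy vectors and labels are made up, and the nearest-centroid rule is a deliberately simple stand-in for the neural network mentioned above.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def fit_centroids(X, y):
    # Average the TF-IDF vectors of each class
    centroids = {}
    for label in set(y):
        rows = [x for x, l in zip(X, y) if l == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
    return centroids


def predict(centroids, x):
    # Pick the class whose centroid is most cosine-similar to x
    return max(centroids, key=lambda label: cosine(centroids[label], x))


# Hypothetical TF-IDF vectors over a 4-word vocabulary: [dog, cat, coffee, cup]
X = [
    [0.8, 0.6, 0.0, 0.0],
    [0.6, 0.8, 0.0, 0.0],
    [0.0, 0.0, 0.7, 0.7],
    [0.0, 0.1, 0.9, 0.4],
]
y = ["animals", "animals", "drinks", "drinks"]
centroids = fit_centroids(X, y)
print(predict(centroids, [0.0, 0.0, 0.5, 0.9]))  # drinks
```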

Word2Vec

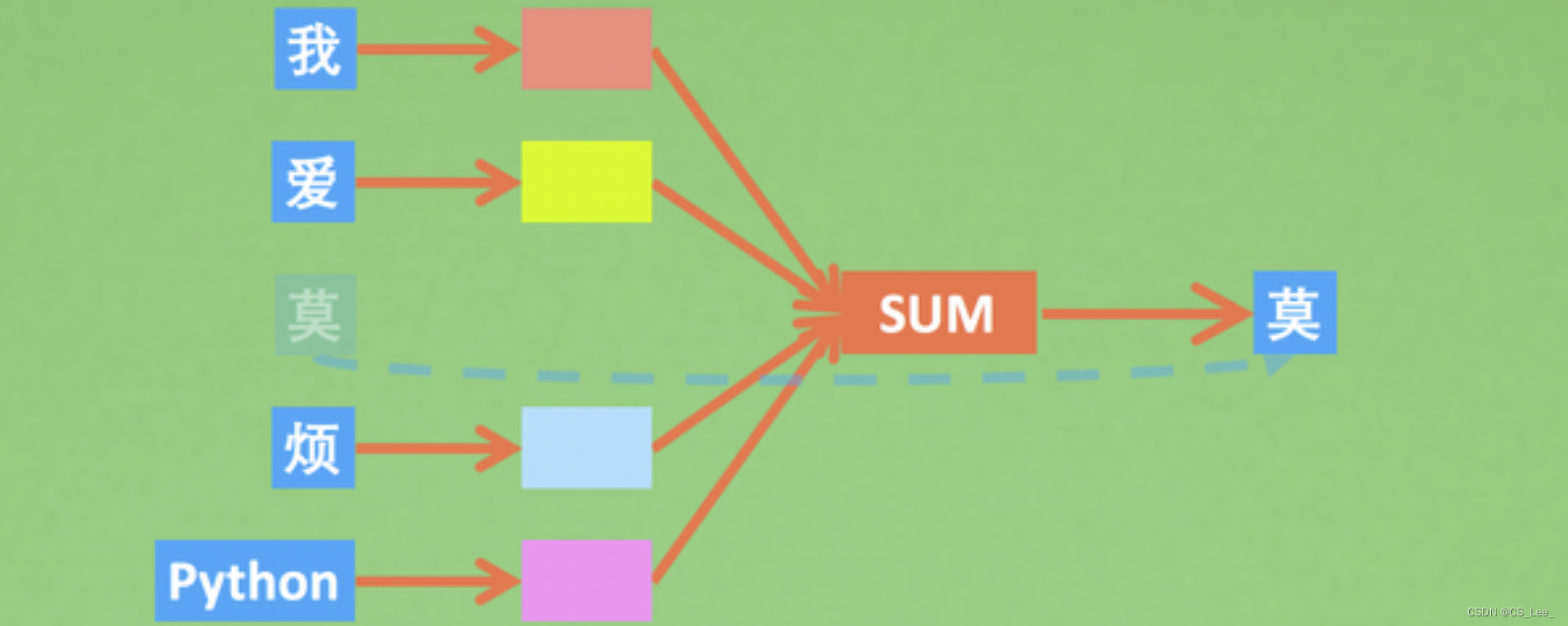

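A word vector is a vector representation of a word and is broadly useful for understanding words and even sentences. Word2Vec is a method for turning words into vectors. It comprises two algorithms, skip-gram and CBOW (Continuous Bag of Words). Their main difference is that skip-gram uses the center word to predict the words around it, whereas CBOW uses the surrounding words to predict the center word.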

CBOW

Reference: https://mofanpy.com/tutorials/machine-learning/nlp/cbow/

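CBOW picks a word to predict and learns the relationship between that word and the words before and after it.

Take, for example, this sentence:

我爱莫烦Python,莫烦Python通俗易懂。 ("I love MofanPython, MofanPython is easy to understand.")

The model splits the sentence into inputs and outputs, using the word vectors of the surrounding context to predict one word in the sentence.

Splitting the sentence into single characters (with "Python" kept as one token) and taking two words on each side of the target, the model's inputs and outputs could be: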

# 1
# input: [我, 爱] + [烦, Python]
# output: 莫

# 2
# input: [爱, 莫] + [Python, ',']
# output: 烦

# 3
# input: [莫, 烦] + [',', 莫]
# output: Python

# 4
# input: [烦, Python] + [莫, 烦]
# output: ','
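The pairs listed above can be generated mechanically. Here is a minimal sketch, assuming character-level tokens (with "Python" kept as one token) and a window of 2 words on each side; `cbow_pairs` is a hypothetical helper, not the process_w2v_data function from the author's repo.

```python
def cbow_pairs(tokens, window=2):
    # For every position with a full window on both sides,
    # the input is the surrounding words and the output is the center word
    pairs = []
    for i in range(window, len(tokens) - window):
        context = tokens[i - window:i] + tokens[i + 1:i + 1 + window]
        pairs.append((context, tokens[i]))
    return pairs


tokens = ["我", "爱", "莫", "烦", "Python", ",", "莫", "烦", "Python", "通", "俗", "易", "懂", "。"]
for context, center in cbow_pairs(tokens)[:4]:
    print(context, "->", center)
# ['我', '爱', '烦', 'Python'] -> 莫
# ['爱', '莫', 'Python', ','] -> 烦
# ['莫', '烦', ',', '莫'] -> Python
# ['烦', 'Python', '莫', '烦'] -> ,
```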

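By learning such word relationships from a large number of phrases and articles, the model learns how the word to be predicted relates to its context. The colored word vectors in the figure are a byproduct of this training process.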
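To see what one training example of this model computes, here is a minimal pure-Python sketch of the CBOW forward pass and loss: average the context word vectors, map the average to one score per vocabulary word with a linear layer, and take the softmax cross-entropy against the center word. This mirrors what the CBOW class below does with nn.Embedding, torch.mean, nn.Linear and cross_entropy; the tiny embedding table and weights are made up, and no gradient update is performed.

```python
import math


def cbow_loss(context_ids, target_id, emb, W, b):
    # 1) look up and average the context word vectors (CBOW's mean step)
    dim = len(emb[0])
    avg = [sum(emb[i][k] for i in context_ids) / len(context_ids) for k in range(dim)]
    # 2) linear layer: one logit per vocabulary word (like nn.Linear(emb_dim, v_dim))
    logits = [sum(W[v][k] * avg[k] for k in range(dim)) + b[v] for v in range(len(W))]
    # 3) softmax cross-entropy against the center word, computed stably via log-sum-exp
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target_id]


# Hypothetical 4-word vocabulary with 2-D embeddings
emb = [[0.1, 0.0], [0.0, 0.1], [0.2, 0.2], [-0.1, 0.3]]
W = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2], [0.0, 0.0]]
b = [0.0, 0.0, 0.0, 0.0]
print(cbow_loss([0, 1, 3, 3], target_id=2, emb=emb, W=W, b=b))
```

With all-zero weights every word gets the same score, so the loss equals ln V (here ln 4, about 1.386); training pushes it below that.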

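To get word vectors that separate clearly, we make up some fake data here and want the computer to learn the correct vector space for these fake words: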

from torch import nn
import torch
from torch.nn.functional import cross_entropy, softmax
from utils import process_w2v_data
# this refers to utils.py in my [repo](https://github.com/MorvanZhou/NLP-Tutorials/)
from visual import show_w2v_word_embedding
# this refers to visual.py in my [repo](https://github.com/MorvanZhou/NLP-Tutorials/)

# The training sentences are split by hand into two camps, numbers and letters. Although all of
# them are text, we expect the model to discover on its own that numbers and letters sit in
# different regions of the space, because numbers always appear with numbers and letters
# always appear with letters.
# A numeric "spy" is planted among the letters; its job is to pass intelligence from the letter
# camp back to the numbers. So we expect the digit 9 to be close not only to the numbers but
# also to the letters.
corpus = [
    # numbers
    "5 2 4 8 6 2 3 6 4",
    "4 8 5 6 9 5 5 6",
    "1 1 5 2 3 3 8",
    "3 6 9 6 8 7 4 6 3",
    "8 9 9 6 1 4 3 4",
    "1 0 2 0 2 1 3 3 3 3 3",
    "9 3 3 0 1 4 7 8",
    "9 9 8 5 6 7 1 2 3 0 1 0",

    # alphabets, expecting that 9 is close to letters
    "a t g q e h 9 u f",
    "e q y u o i p s",
    "q o 9 p l k j o k k o p",
    "h g y i u t t a e q",
    "i k d q r e 9 e a d",
    "o p d g 9 s a f g a",
    "i u y g h k l a s w",
    "o l u y a o g f s",
    "o p i u y g d a s j d l",
    "u k i l o 9 l j s",
    "y g i s h k j l f r f",
    "i o h n 9 9 d 9 f a 9",
]

class CBOW(nn.Module):
    def __init__(self, v_dim, emb_dim):
        super().__init__()
        self.v_dim = v_dim
        # the word vectors live in self.embeddings
        self.embeddings = nn.Embedding(num_embeddings=v_dim, embedding_dim=emb_dim)  # [n_vocab, emb_dim]
        self.embeddings.weight.data.normal_(0, 0.1)
        # self.opt = torch.optim.Adam(self.parameters(), lr=0.01)
        self.hidden_out = nn.Linear(emb_dim, v_dim)
        self.opt = torch.optim.SGD(self.parameters(), momentum=0.9, lr=0.01)

    # Look up the embedding vectors of the context words used for prediction, then average them
    def forward(self, x, training=None, mask=None):
        # x.shape = [n, skip_window*2]
        o = self.embeddings(x)  # [n, skip_window*2, emb_dim]
        o = torch.mean(o, dim=1)  # [n, emb_dim]
        return o

    def loss(self, x, y, training=None):
        embedded = self(x, training)
        pred = self.hidden_out(embedded)  # [n, v_dim] logits over the vocabulary
        return cross_entropy(pred, y)

    def step(self, x, y):
        self.opt.zero_grad()
        loss = self.loss(x, y, True)
        loss.backward()
        self.opt.step()
        return loss.item()  # plain Python float for logging

def train(model, data):
    if torch.cuda.is_available():
        print("GPU train available")
        device = torch.device("cuda")
        model = model.cuda()
    else:
        device = torch.device("cpu")
        model = model.cpu()
    for t in range(800000):
        bx, by = data.sample(100)
        bx, by = torch.from_numpy(bx).to(device), torch.from_numpy(by).to(device)
        loss = model.step(bx, by)
        if t % 200 == 0:
            print(f"step: {t} | loss: {loss}")


if __name__ == "__main__":
    # skip_window: how many words to take on each side of the word being predicted
    d = process_w2v_data(corpus, skip_window=2, method="cbow")
    m = CBOW(d.num_word, 2)
    train(m, d)
    show_w2v_word_embedding(m, d, "./visual/results/cbow.png")

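A common practice is to treat these trained word vectors as a pre-trained model and feed them as input into another neural network (for example an RNN), which processes them further to train sentence vectors.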
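A minimal sketch of that pipeline in plain Python: word IDs are looked up in a frozen table of pre-trained vectors, and a vanilla (Elman) RNN cell, h_t = tanh(Wx·x_t + Wh·h_(t-1) + b), reads them one by one; its final hidden state serves as the sentence vector. The embedding values and RNN weights are hypothetical placeholders. In PyTorch the same idea is usually written with nn.Embedding.from_pretrained(weights, freeze=True) followed by nn.RNN.

```python
import math


def rnn_sentence_vector(word_ids, pretrained, Wx, Wh, b):
    hidden = len(b)
    h = [0.0] * hidden  # initial hidden state
    for wid in word_ids:
        x = pretrained[wid]  # frozen pre-trained word vector, never updated here
        # new state from the current word vector and the previous state
        h = [
            math.tanh(
                sum(Wx[j][k] * x[k] for k in range(len(x)))
                + sum(Wh[j][k] * h[k] for k in range(hidden))
                + b[j]
            )
            for j in range(hidden)
        ]
    return h  # last hidden state used as the sentence vector


# Hypothetical 2-D pre-trained vectors (e.g. taken from a trained CBOW model)
pretrained = [[0.3, -0.1], [0.0, 0.5], [-0.4, 0.2]]
Wx = [[0.5, 0.1], [-0.2, 0.3], [0.1, 0.1]]  # hidden size 3, input size 2
Wh = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 0.0, 0.1]]
b = [0.0, 0.0, 0.0]
print(rnn_sentence_vector([0, 2, 1], pretrained, Wx, Wh, b))
```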

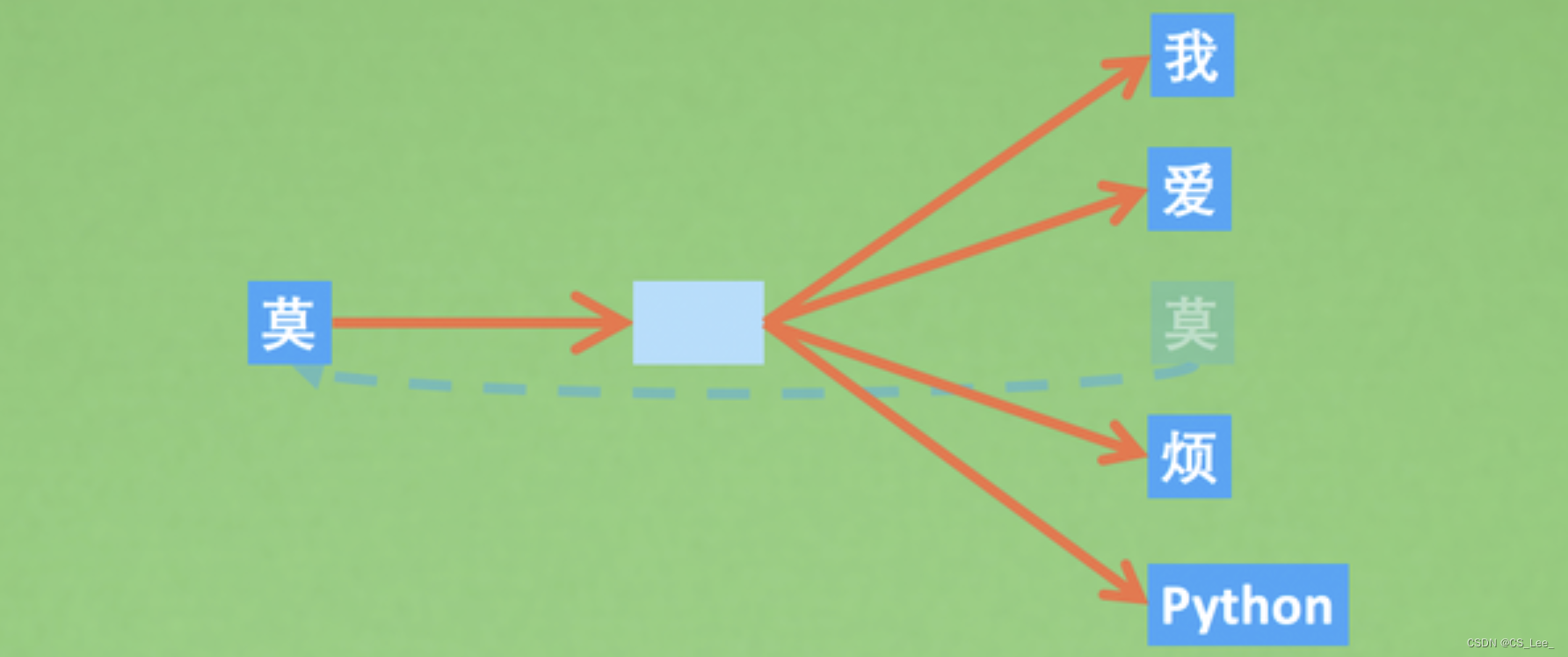

Skip-Gram

Reference: https://mofanpy.com/tutorials/machine-learning/nlp/skip-gram/

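Whereas CBOW uses the context to predict the word in the middle of it, Skip-Gram reverses the process: it takes one word in the text and predicts the words around it.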

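The biggest difference from CBOW is that Skip-Gram drops the aggregation step in the middle (the SUM, which the CBOW code above implements as a mean); it always works on the single word vector at the input.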
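The reversed data flow can be sketched the same way: one center word is the input, and each of its context words becomes a separate target, which is the (single input, single output) expansion described in the comments of the SkipGram code below. `skip_gram_pairs` is a hypothetical helper assuming a window of 2 on each side, not the repo's process_w2v_data.

```python
def skip_gram_pairs(tokens, window=2):
    # For every position with a full window on both sides,
    # emit one (center, context_word) pair per surrounding word
    pairs = []
    for i in range(window, len(tokens) - window):
        for j in range(i - window, i + window + 1):
            if j != i:
                pairs.append((tokens[i], tokens[j]))
    return pairs


tokens = ["我", "爱", "莫", "烦", "Python", ",", "莫", "烦", "Python"]
for center, target in skip_gram_pairs(tokens)[:4]:
    print(center, "->", target)
# 莫 -> 我
# 莫 -> 爱
# 莫 -> 烦
# 莫 -> Python
```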

Using the same fake data as above:

from torch import nn
import torch
from torch.nn.functional import cross_entropy
from utils import Dataset, process_w2v_data
from visual import show_w2v_word_embedding

corpus = [
    # numbers
    "5 2 4 8 6 2 3 6 4",
    "4 8 5 6 9 5 5 6",
    "1 1 5 2 3 3 8",
    "3 6 9 6 8 7 4 6 3",
    "8 9 9 6 1 4 3 4",
    "1 0 2 0 2 1 3 3 3 3 3",
    "9 3 3 0 1 4 7 8",
    "9 9 8 5 6 7 1 2 3 0 1 0",

    # alphabets, expecting that 9 is close to letters
    "a t g q e h 9 u f",
    "e q y u o i p s",
    "q o 9 p l k j o k k o p",
    "h g y i u t t a e q",
    "i k d q r e 9 e a d",
    "o p d g 9 s a f g a",
    "i u y g h k l a s w",
    "o l u y a o g f s",
    "o p i u y g d a s j d l",
    "u k i l o 9 l j s",
    "y g i s h k j l f r f",
    "i o h n 9 9 d 9 f a 9",
]

class SkipGram(nn.Module):
    def __init__(self, v_dim, emb_dim):
        super().__init__()
        self.v_dim = v_dim
        self.embeddings = nn.Embedding(v_dim, emb_dim)
        self.embeddings.weight.data.normal_(0, 0.1)
        self.hidden_out = nn.Linear(emb_dim, v_dim)
        self.opt = torch.optim.Adam(self.parameters(), lr=0.01)

    # Skip-Gram's forward pass is simpler than CBOW's: it only looks up the embedding
    def forward(self, x, training=None, mask=None):
        # x.shape = [n] (one center word per sample)
        o = self.embeddings(x)  # [n, emb_dim]
        return o

    # To simplify training, process_w2v_data() does a little preprocessing. In CBOW, each row of X
    # holds the N words before and after the target and Y holds just 1 word; in Skip-Gram, X holds
    # only 1 word and Y also holds only 1 word. Although Y has only 1 word, we use the same X to
    # predict each of the N surrounding words in turn. The logic looks like this:
    # Originally it would be:
    #   input: 莫
    #   output: [我, 爱] + [烦, Python]
    # For the same batch of training data, you can also do:
    #   input: 莫 -> output: 我
    #   input: 莫 -> output: 爱
    #   input: 莫 -> output: 烦
    #   input: 莫 -> output: Python
    def loss(self, x, y, training=None):
        embedded = self(x, training)
        pred = self.hidden_out(embedded)  # [n, v_dim] logits over the vocabulary
        return cross_entropy(pred, y)

    def step(self, x, y):
        self.opt.zero_grad()
        loss = self.loss(x, y, True)
        loss.backward()
        self.opt.step()
        return loss.item()  # plain Python float for logging

def train(model, data):
    if torch.cuda.is_available():
        print("GPU train available")
        device = torch.device("cuda")
        model = model.cuda()
    else:
        device = torch.device("cpu")
        model = model.cpu()
    for t in range(2500):
        bx, by = data.sample(8)
        bx, by = torch.from_numpy(bx).to(device), torch.from_numpy(by).to(device)
        loss = model.step(bx, by)
        if t % 200 == 0:
            print(f"step: {t} | loss: {loss}")


if __name__ == "__main__":
    # skip_window: how many words to take on each side of the word being predicted
    d = process_w2v_data(corpus, skip_window=2, method="skip_gram")
    m = SkipGram(d.num_word, 2)
    train(m, d)

    # plotting
    show_w2v_word_embedding(m, d, "./visual/results/skipgram.png")