循环神经网络(RNN)

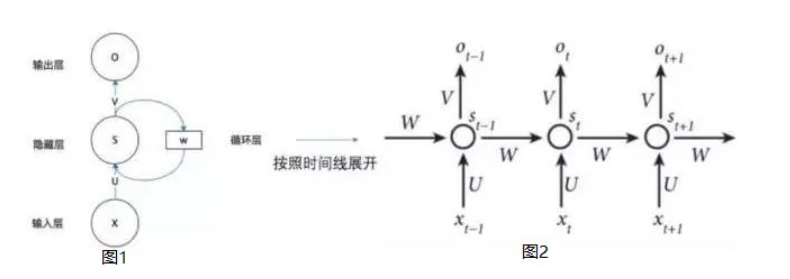

对于循环神经网络,网上的讲解都是按照时间线展开进行的:

图片来自网络

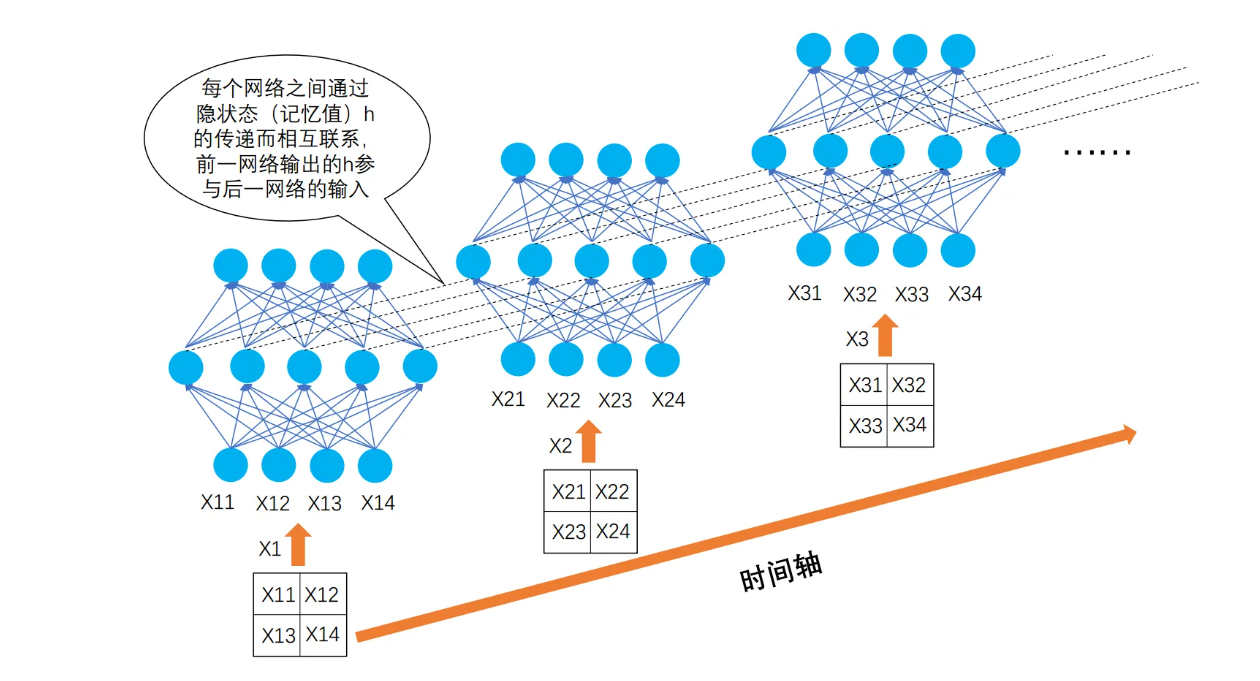

我个人觉得这样复杂化了。然后,又有人用以下图片来解释这种复杂化:

图片来自网络

看起来挺酷。

其实,循环神经网络没那么复杂。下面,本文将以“序列动,网络不动”的角度展示循环神经网络的前向传播,并有前馈神经网络作为对照。然后,同样以“序列动,网络不动”的角度清晰地展示双向循环神经网络的前向传播。

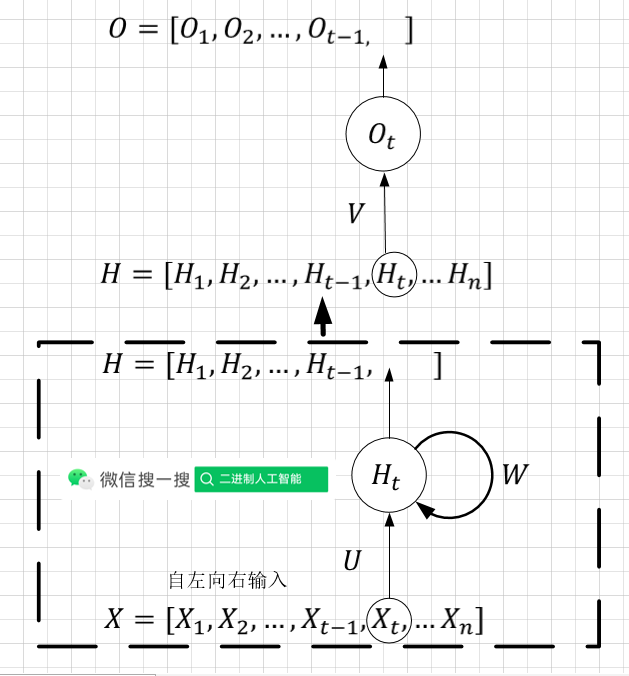

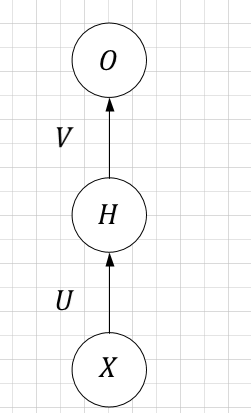

RNN与前馈神经网络对比

| 循环神经网络(RNN) | 前馈神经网络 | |

|---|---|---|

| 网络简图 |  |  |

| 输入 | 时间序列: X = [ X 1 . X 2 , X 3 , . . . , X t ? 1 , X t . . . , X n ] X=[X_1.X_2,X_3,...,X_{t-1},X_{t}...,X_n] X=[X1?.X2?,X3?,...,Xt?1?,Xt?...,Xn?] | 非时间序列: X X X |

前向传播(不考虑偏置

b

b

b,

f

f

f为激活函数)

(1)前馈神经网络:

O = f ( V H ) = f ( V f ( U X ) ) O=f(VH)=f(Vf(UX)) O=f(VH)=f(Vf(UX))

(2)循环神经网络:

X

X

X的元素依次输入,计算

H

1

=

f

(

U

X

1

+

W

H

0

)

,

H

0

常

设

为

0

H

2

=

f

(

U

X

2

+

W

H

1

)

=

f

(

U

X

2

+

W

U

X

1

)

H

3

=

f

(

U

X

3

+

W

H

2

)

=

f

(

U

X

3

+

W

U

(

U

X

2

+

W

H

1

)

=

f

(

U

X

3

+

W

U

(

U

X

2

+

W

U

X

1

)

)

.

.

.

H

t

=

f

(

U

X

t

+

W

H

t

?

1

)

.

.

.

\begin{aligned} H_1&=f(UX_1+WH_0),H_0常设为0\\ H_2&=f(UX_2+WH_1)=f(UX_2+WUX_1)\\ H_3&=f(UX_3+WH_2)=f(UX_3+WU(UX_2+WH_1)\\ &=f(UX_3+WU(UX_2+WUX_1))\\ ...\\ H_t&=f(UX_t+WH_{t-1})\\ ... \end{aligned}

H1?H2?H3?...Ht?...?=f(UX1?+WH0?),H0?常设为0=f(UX2?+WH1?)=f(UX2?+WUX1?)=f(UX3?+WH2?)=f(UX3?+WU(UX2?+WH1?)=f(UX3?+WU(UX2?+WUX1?))=f(UXt?+WHt?1?)?

得到:

H

=

[

H

1

.

H

2

,

H

3

,

.

.

.

,

H

t

?

1

,

H

t

.

.

.

,

H

n

]

H=[H_1.H_2,H_3,...,H_{t-1},H_{t}...,H_n]

H=[H1?.H2?,H3?,...,Ht?1?,Ht?...,Hn?]

后接一个前馈网络:

O

1

=

f

(

V

H

1

)

O

2

=

f

(

V

H

2

)

O

3

=

f

(

V

H

3

)

.

.

.

O

t

=

f

(

V

H

t

)

.

.

.

\begin{aligned} O_1&=f(VH_1)\\ O_2&=f(VH_2)\\ O_3&=f(VH_3)\\ ...\\ O_t&=f(VH_t)\\ ... \end{aligned}

O1?O2?O3?...Ot?...?=f(VH1?)=f(VH2?)=f(VH3?)=f(VHt?)?

输出:

O

=

[

O

1

.

O

2

,

O

3

,

.

.

.

,

O

t

?

1

,

O

t

.

.

.

,

O

n

]

O=[O_1.O_2,O_3,...,O_{t-1},O_{t}...,O_n]

O=[O1?.O2?,O3?,...,Ot?1?,Ot?...,On?]

就这么简单,下面举两个例子。

Pytorch实现

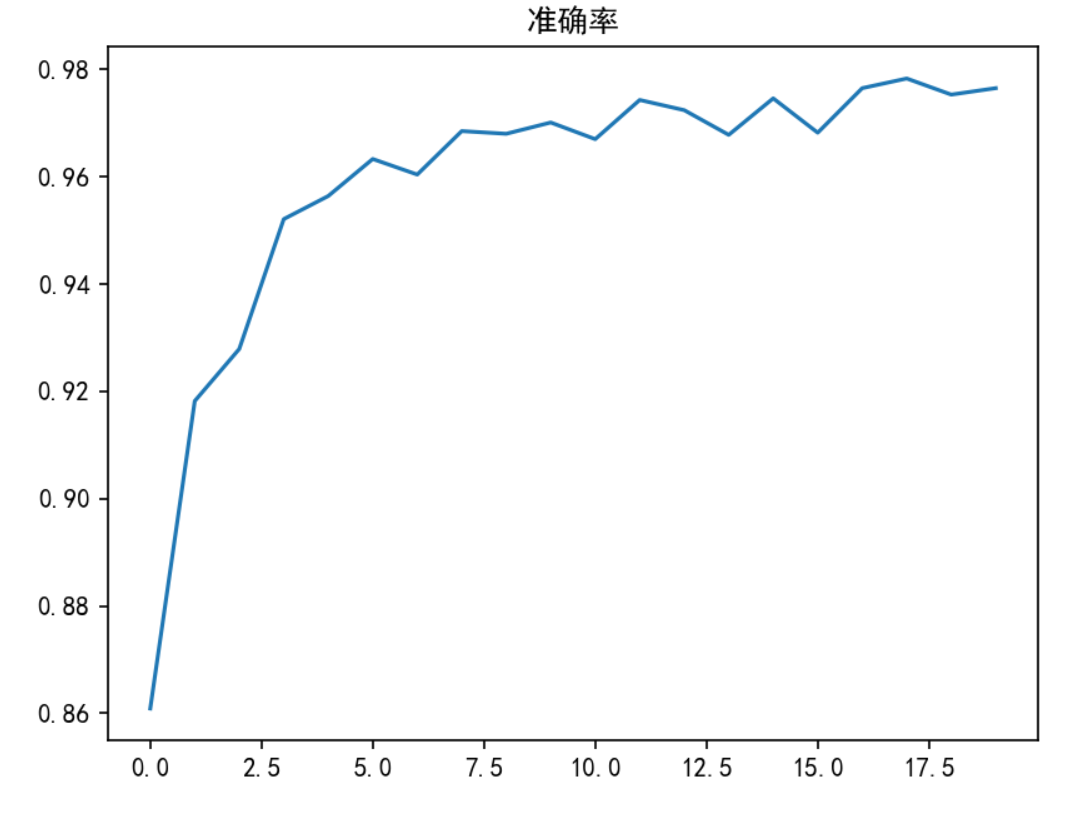

序列到类别

将图像(28x28)自上而下视为一个时间序列

X = [ X 1 . X 2 , X 3 , . . . , X t ? 1 , X t . . . , X 28 ] X=[X_1.X_2,X_3,...,X_{t-1},X_{t}...,X_{28}] X=[X1?.X2?,X3?,...,Xt?1?,Xt?...,X28?]

其中 X t X_t Xt?为图像第 t t t行的像素。

序列输入循环神经网络,输出

H

=

[

H

1

.

H

2

,

H

3

,

.

.

.

,

H

t

?

1

,

H

t

.

.

.

,

H

28

]

H=[H_1.H_2,H_3,...,H_{t-1},H_{t}...,H_{28}]

H=[H1?.H2?,H3?,...,Ht?1?,Ht?...,H28?]

取其中的 H 28 H_{28} H28?输入前馈神经网络,得到分类预测值。

import torch

import torch.nn as nn

from torchvision import datasets, transforms

from torch.utils.data import DataLoader

import matplotlib.pyplot as plt

import matplotlib

torch.manual_seed(1)

matplotlib.rcParams['font.family'] = 'SimHei'

matplotlib.rcParams['axes.unicode_minus'] = False

plt.rcParams['figure.dpi'] = 150

device = 'cuda' if torch.cuda.is_available() else 'cpu'

print(device)

class RNN(nn.Module):

def __init__(self, input_size, hidden_size, num_layers, n_class):

super(RNN, self).__init__()

self.num_layers = num_layers

self.hidden_size = hidden_size

self.rnn = nn.RNN(

input_size=input_size,

hidden_size=hidden_size,

num_layers=num_layers,

batch_first=True,

nonlinearity='relu'

)

self.linear = nn.Linear(hidden_size, n_class)

def forward(self, x):

h0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size).to(device)

out, hn = self.rnn(x, h0)

out = out[:, -1, :] # = hn

out = self.linear(out)

return out

def test(dataset_test, model):

correct = 0

total_num = len(dataset_test)

test_loader = DataLoader(dataset=dataset_test, batch_size=64, shuffle=False)

for (test_images, test_labels) in test_loader:

test_images = test_images.squeeze(1)

test_images = test_images.to(device)

test_labels = test_labels.to(device)

predicted = model(test_images)

_, predicted = torch.max(predicted, 1)

correct += torch.sum(predicted == test_labels)

accuracy = correct.item() / total_num

print('测试准确率:{:.5}'.format(accuracy))

return accuracy

def train():

accuracy_list = []

learning_rate = 0.001

batch_size = 128

model = RNN(input_size=28, hidden_size=128, num_layers=1, n_class=10) # 图片大小是28x28

model = model.to(device)

dataset_train = datasets.MNIST(root='./mnist', transform=transforms.ToTensor(), train=True, download=False)

dataset_test = datasets.MNIST(root='./mnist', transform=transforms.ToTensor(), train=False, download=False)

train_loader = DataLoader(dataset=dataset_train, batch_size=batch_size, shuffle=True)

optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

loss_f = nn.CrossEntropyLoss()

for epoch in range(20):

model.train()

for step, (train_images, train_labels) in enumerate(train_loader):

train_images = train_images.squeeze(1) # [64, 1, 28, 28]->[64, 28, 28]

train_images = train_images.to(device)

train_labels = train_labels.to(device)

predicted = model(train_images)

loss = loss_f(predicted, train_labels)

# 反向传播,更新参数

optimizer.zero_grad()

loss.backward()

optimizer.step()

if (step % 100 == 0):

print('epoch:{},step:{},loss:{}'.format(epoch + 1, step, loss.item()))

model.eval()

accuracy = test(dataset_test, model)

accuracy_list.append(accuracy)

plt.plot(accuracy_list)

plt.title('准确率')

plt.show()

if __name__ == '__main__':

train()

结果:

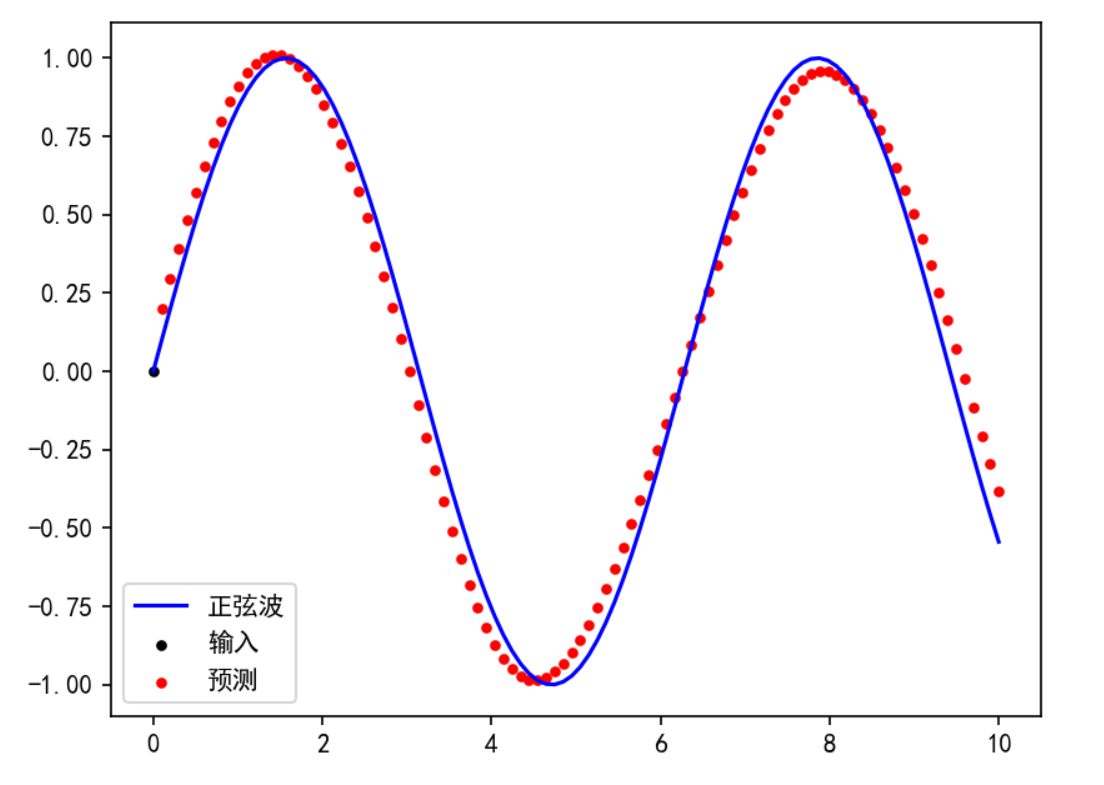

序列到序列

训练:

正弦波预测。有一正弦波序列

S

=

[

S

1

.

S

2

,

S

3

,

.

.

.

,

S

t

?

1

,

S

t

.

.

.

,

S

n

]

S=[S_1.S_2,S_3,...,S_{t-1},S_{t}...,S_n]

S=[S1?.S2?,S3?,...,St?1?,St?...,Sn?]。令

X

=

[

S

1

.

S

2

,

S

3

,

.

.

.

,

S

t

?

1

,

S

t

.

.

.

,

S

n

?

1

]

X=[S_1.S_2,S_3,...,S_{t-1},S_{t}...,S_{n-1}]

X=[S1?.S2?,S3?,...,St?1?,St?...,Sn?1?]

Y = [ S 2 , S 3 , . . . , S t ? 1 , S t . . . , S n ] Y=[S_2,S_3,...,S_{t-1},S_{t}...,S_{n}] Y=[S2?,S3?,...,St?1?,St?...,Sn?]

X

X

X输入网络,输出

H

=

[

H

1

.

H

2

,

H

3

,

.

.

.

,

H

t

?

1

,

H

t

.

.

.

,

H

n

?

1

]

H=[H_1.H_2,H_3,...,H_{t-1},H_{t}...,H_{n-1}]

H=[H1?.H2?,H3?,...,Ht?1?,Ht?...,Hn?1?]

输入前馈网络,得到输出:

O

=

[

O

1

.

O

2

,

O

3

,

.

.

.

,

O

t

?

1

,

O

t

.

.

.

,

O

n

?

1

]

O=[O_1.O_2,O_3,...,O_{t-1},O_{t}...,O_{n-1}]

O=[O1?.O2?,O3?,...,Ot?1?,Ot?...,On?1?]

最小化 O O O与 Y Y Y之间的误差。

测试:输入[0],输出正弦波序列

import torch

import torch.nn as nn

import numpy as np

import torch.optim as optim

from matplotlib import pyplot as plt

import matplotlib

torch.manual_seed(1)

np.random.seed(1)

matplotlib.rcParams['font.family'] = 'SimHei'

matplotlib.rcParams['axes.unicode_minus'] = False

device = 'cuda' if torch.cuda.is_available() else 'cpu'

print(device)

class RNN(nn.Module):

def __init__(self, input_size, hidden_size, num_layers, output_size):

super(RNN, self).__init__()

self.num_layers = num_layers

self.hidden_size = hidden_size

self.rnn = nn.RNN(

input_size=input_size,

hidden_size=hidden_size,

num_layers=num_layers,

batch_first=True,

bidirectional=False,

)

self.linear = nn.Linear(hidden_size, output_size)

def forward(self, inputs, h0):

# x: [batch_size, seq_len, input_size]

# h0: [num_layers, batch_size, hidden_size]

outputs, hn = self.rnn(inputs, h0)

# out: [batch_size, seq_len, hidden_size]

# hn: [num_layers, batch_size, hidden_size]

# [batch, seq_len, hidden_size] => [batch * seq_len, hidden_size]

outputs = outputs.view(-1, self.hidden_size)

# [batch_size * seq_len, hidden_size] => [batch_size * seq_len, output_size]

outputs = self.linear(outputs)

# [batch_size * seq_len, output_size] => [batch_size, seq_len, output_size]

outputs = outputs.view(inputs.size())

return outputs, hn

def test(model, seq_len, h0):

input = torch.tensor(0, dtype=torch.float)

predictions = []

for _ in range(seq_len):

input = input.view(1, 1, 1)

prediction, hn = model(input, h0)

input = prediction

h0 = hn

predictions.append(prediction.detach().numpy().ravel()[0])

# 画图

time_steps = np.linspace(0, 10, seq_len)

data = np.sin(time_steps)

a, = plt.plot(time_steps, data, color='b')

b = plt.scatter(time_steps[0], 0, color='black', s=10)

c = plt.scatter(time_steps[1:], predictions[1:], color='red', s=10)

plt.legend([a, b, c], ['正弦波', '输入', '预测'])

plt.show()

def train():

seq_len = 100

input_size = 1

hidden_size = 16

output_size = 1

num_layers = 1

lr = 0.01

model = RNN(input_size, hidden_size, num_layers, output_size)

loss_function = nn.MSELoss()

optimizer = optim.Adam(model.parameters(), lr)

h0 = torch.zeros(num_layers, input_size, hidden_size)

for i in range(3000):

# 训练的数据:batch_size=1

time_steps = np.linspace(0, 10, seq_len + 1)

data = np.sin(time_steps)

data = data.reshape(seq_len + 1, 1)

# 去掉最后一个元素,作为输入

inputs = torch.tensor(data[:-1]).float().view(1, seq_len, 1)

# 去掉第一个元素,作为目标值 [batch_size, seq_len, input_size]

target = torch.tensor(data[1:]).float().view(1, seq_len, 1)

output, hn = model(inputs, h0)

# 与上一个批次的计算图分离 https://www.cnblogs.com/catnofishing/p/13287322.html

hn.detach()

loss = loss_function(output, target)

model.zero_grad()

loss.backward()

optimizer.step()

if i % 100 == 0:

print("迭代次数: {} loss {}".format(i, loss.item()))

test(model, seq_len, h0)

if __name__ == '__main__':

train()

结果:

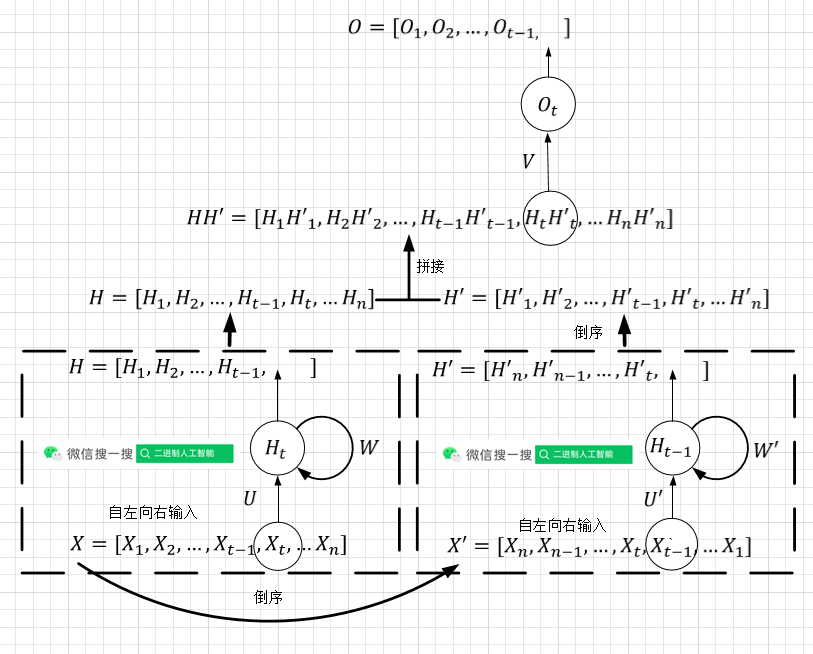

双向RNN

原序列:

H

1

=

f

(

U

X

1

+

W

H

0

)

,

H

0

常

设

为

0

H

2

=

f

(

U

X

2

+

W

H

1

)

)

=

f

(

U

X

2

+

W

U

X

1

)

H

3

=

f

(

U

X

3

+

W

H

2

)

)

=

f

(

U

X

3

+

W

U

(

U

X

2

+

W

H

1

)

)

=

f

(

U

X

3

+

W

U

(

U

X

2

+

W

U

X

1

)

)

.

.

.

H

t

=

f

(

U

X

t

+

W

H

t

?

1

)

.

.

.

\begin{aligned} H_1&=f(UX_1+WH_0),H_0常设为0\\ H_2&=f(UX_2+WH_1))=f(UX_2+WUX_1)\\ H_3&=f(UX_3+WH_2))=f(UX_3+WU(UX_2+WH_1))\\ &=f(UX_3+WU(UX_2+WUX_1))\\ ...\\ H_t&=f(UX_t+WH_{t-1})\\ ... \end{aligned}

H1?H2?H3?...Ht?...?=f(UX1?+WH0?),H0?常设为0=f(UX2?+WH1?))=f(UX2?+WUX1?)=f(UX3?+WH2?))=f(UX3?+WU(UX2?+WH1?))=f(UX3?+WU(UX2?+WUX1?))=f(UXt?+WHt?1?)?

得到:

H

=

[

H

1

.

H

2

,

H

3

,

.

.

.

,

H

t

?

1

,

H

t

.

.

.

,

H

T

]

H=[H_1.H_2,H_3,...,H_{t-1},H_{t}...,H_T]

H=[H1?.H2?,H3?,...,Ht?1?,Ht?...,HT?]

倒序序列:

H

n

′

=

f

(

U

′

X

n

+

W

′

H

n

+

1

′

)

,

H

n

+

1

′

常

设

为

0

H

n

?

1

′

=

f

(

U

′

X

n

?

1

+

W

H

n

′

)

)

=

f

(

U

′

X

n

?

1

+

W

′

U

′

X

n

)

H

n

?

2

=

f

(

U

′

X

n

?

2

+

W

′

H

n

?

1

)

)

=

f

(

U

′

X

n

?

2

+

W

′

U

′

(

U

′

X

n

?

1

+

W

′

H

n

)

)

=

f

(

U

′

X

n

?

2

+

W

′

U

′

(

U

′

X

n

?

1

+

W

′

U

′

X

n

)

)

.

.

.

H

t

′

=

f

(

U

′

X

t

+

W

′

H

t

+

1

)

.

.

.

\begin{aligned} H'_n&=f(U'X_n+W'H'_{n+1}),H'_{n+1}常设为0\\ H'_{n-1}&=f(U'X_{n-1}+WH'_{n}))=f(U'X_{n-1}+W'U'X_n)\\ H_{n-2}&=f(U'X_{n-2}+W'H_{n-1}))=f(U'X_{n-2}+W'U'(U'X_{n-1}+W'H_{_n}))\\ &=f(U'X_{n-2}+W'U'(U'X_{n-1}+W'U'X_n))\\ ...\\ H'_t&=f(U'X_t+W'H_{t+1})\\ ... \end{aligned}

Hn′?Hn?1′?Hn?2?...Ht′?...?=f(U′Xn?+W′Hn+1′?),Hn+1′?常设为0=f(U′Xn?1?+WHn′?))=f(U′Xn?1?+W′U′Xn?)=f(U′Xn?2?+W′Hn?1?))=f(U′Xn?2?+W′U′(U′Xn?1?+W′Hn??))=f(U′Xn?2?+W′U′(U′Xn?1?+W′U′Xn?))=f(U′Xt?+W′Ht+1?)?

得到:

H

′

=

[

H

1

′

.

H

2

′

,

H

3

′

,

.

.

.

,

H

t

?

1

′

,

H

t

′

.

.

.

,

H

T

′

]

H'=[H'_1.H'_2,H'_3,...,H'_{t-1},H'_{t}...,H'_T]

H′=[H1′?.H2′?,H3′?,...,Ht?1′?,Ht′?...,HT′?]

拼接:

H

H

′

=

[

H

1

H

1

′

,

H

2

H

2

′

,

…

,

H

t

?

1

H

t

?

1

′

,

H

t

H

′

t

,

…

H

n

H

n

′

]

H H^{\prime}=\left[H_{1} H_{1}^{\prime}, H_{2} H_{2}^{\prime}, \ldots, H_{t-1} H_{t-1}^{\prime}, H_{t} H^{\prime}{ }_{t}, \ldots H_{n} H_{n}^{\prime}\right]

HH′=[H1?H1′?,H2?H2′?,…,Ht?1?Ht?1′?,Ht?H′t?,…Hn?Hn′?]

后接一个前馈网络:

O

1

=

f

(

V

[

H

1

H

1

′

]

)

O

2

=

f

(

V

[

H

2

H

2

′

]

)

O

3

=

f

(

V

[

H

3

H

3

′

]

)

.

.

.

O

t

=

f

(

V

[

H

t

H

t

′

)

]

.

.

.

\begin{aligned} O_1&=f(V[H_1H'_1])\\ O_2&=f(V[H_2H'_2])\\ O_3&=f(V[H_3H'_3])\\ ...\\ O_t&=f(V[H_tH'_t)]\\ ... \end{aligned}

O1?O2?O3?...Ot?...?=f(V[H1?H1′?])=f(V[H2?H2′?])=f(V[H3?H3′?])=f(V[Ht?Ht′?)]?

输出:

O

=

[

O

1

.

O

2

,

O

3

,

.

.

.

,

O

t

?

1

,

O

t

.

.

.

,

O

T

]

O=[O_1.O_2,O_3,...,O_{t-1},O_{t}...,O_T]

O=[O1?.O2?,O3?,...,Ot?1?,Ot?...,OT?]

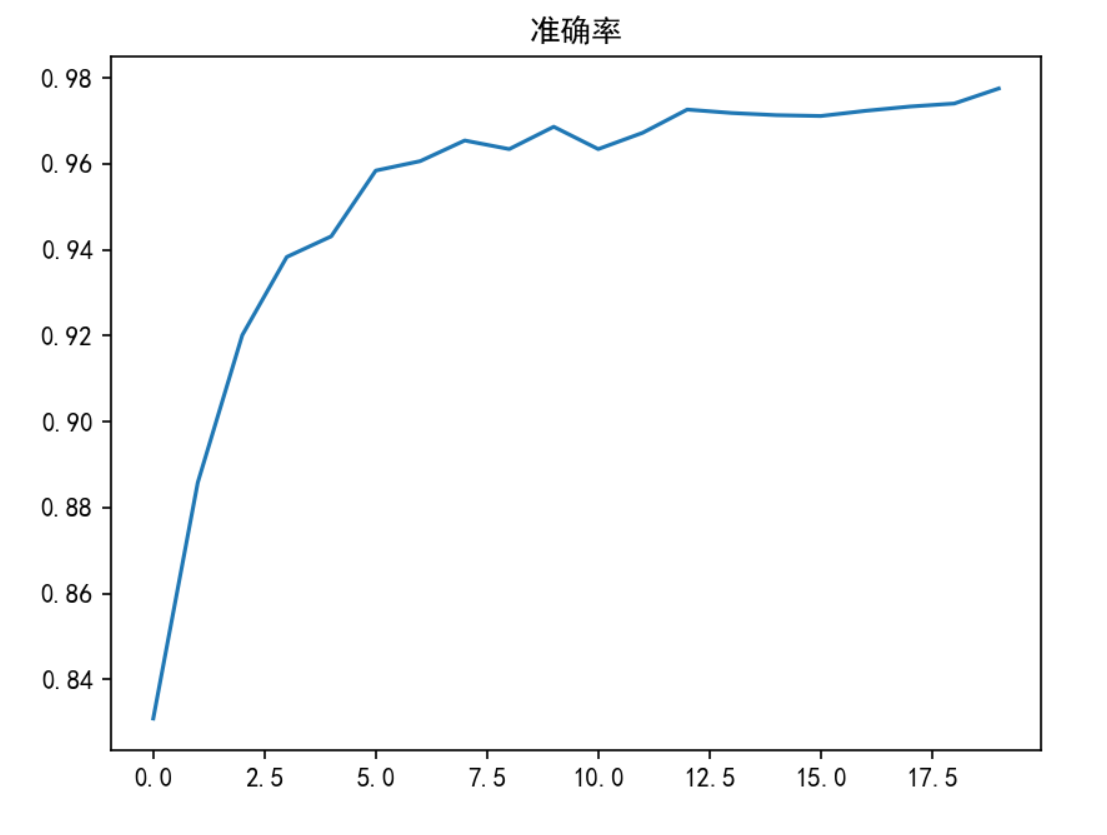

Pytorch实现:序列到类别

class RNN(nn.Module):

def __init__(self, input_size, hidden_size, num_layers, n_class):

super(RNN, self).__init__()

self.num_layers = num_layers

self.hidden_size = hidden_size

self.rnn = nn.RNN(

input_size=input_size,

hidden_size=hidden_size,

num_layers=num_layers,

batch_first=True,

bidirectional=True,

nonlinearity='relu'

)

self.linear = nn.Linear(2*hidden_size, n_class)

def forward(self, x):

h0 = torch.zeros(self.num_layers*2, x.size(0), self.hidden_size).to(device)

out, hn = self.rnn(x, h0)

out = out[:, -1, :] # = hn

out = self.linear(out)

return out

结果:

附:nn.RNN类

CLASS torch.nn.RNN(*args, **kwargs)

参数说明:

input_size:输入序列单元 x t x_t xt?的维度。hidden_size:隐藏层神经元个数,或者也叫输出的维度num_layers:网络的层数,默认为1nonlinearity:激活函数,默认为tanhbias:是否使用偏置batch_first:输入数据的形式,默认是False,形式为(seq, batch, feature),也就是将序列长度放在第一位,batch 放在第二位。如果为True,则为(batch, seq, feature)dropout:dropout率, 默认为0,即不使用。如若使用将其设置成一个0-1的数字即可。birdirectional:是否使用两层的双向的 rnn,默认是False

记:

N

=

batch?size

L

=

?sequence?length?

D

=

2

?if?bidirectional=True?otherwise?

1

H

in

=

?input_size?

H

o

u

t

=

?hidden_size?

\begin{aligned} N &=\text{batch size} \\ L &=\text { sequence length } \\ D &=2 \text { if bidirectional=True otherwise } 1 \\ H_{\text {in}}&=\text{ input\_size } \\ H_{o u t} &=\text { hidden\_size } \end{aligned}

NLDHin?Hout??=batch?size=?sequence?length?=2?if?bidirectional=True?otherwise?1=?input_size?=?hidden_size??

输入:input, h_0

(1)input(输入序列)

非批量: ( L , H i n ) (L,H_{in}) (L,Hin?)

批量:如果batch_first=False,

(

L

,

N

,

H

i

n

)

(L,N,H_{in})

(L,N,Hin?);如果batch_first=True,

(

N

,

L

,

H

i

n

)

(N,L,H_{in})

(N,L,Hin?)

(2) h_0(每一层网络的初始状态。如果未提供,则默认为零。)

非批量:

(

D

×

num_layers

,

H

o

u

t

)

(D\times \text{num\_layers},H_{out})

(D×num_layers,Hout?)

批量: ( D × num_layers , N , H o u t ) (D\times \text{num\_layers},N,H_{out}) (D×num_layers,N,Hout?)

输出,output,hn:

(1)output:

非批量:

(

L

,

D

×

H

o

u

t

)

(L,D\times H_{out})

(L,D×Hout?)

批量:如果batch_first=False,

(

L

,

N

,

H

o

u

t

)

(L,N,H_{out})

(L,N,Hout?);如果batch_first=True,

(

N

,

L

,

H

o

u

t

)

(N,L,H_{out})

(N,L,Hout?)

(2)h_n:状态

非批量:

(

D

×

num_layers

,

H

o

u

t

)

(D\times \text{num\_layers},H_{out})

(D×num_layers,Hout?)

批量: ( D × num_layers , N , H o u t ) (D\times \text{num\_layers},N,H_{out}) (D×num_layers,N,Hout?)

权重和偏置初始化:

从均匀分布 U ( ? k , k ) U(-\sqrt{k},\sqrt{k}) U(?k?,k?)采样,其中 k = 1 h i d d e n _ s i z e k=\frac{1}{hidden\_size} k=hidden_size1?