First, here is the code structure (a link to the data is at the end).

utils.py

# Functions for reading the datasets; two datasets are supported: MR and TREC

from sklearn.utils import shuffle
import pickle


def read_TREC():
    data = {}

    def read(mode):
        # mode is 'train' or 'test'
        x, y = [], []

        with open("data/TREC/TREC_" + mode + ".txt", "r", encoding="utf-8") as f:
            # 10903 sentences in the training file, 500 in the test file
            for line in f:
                # e.g. 'DESC:manner How did serfdom develop in and then leave Russia?\n'
                if line[-1] == "\n":
                    line = line[:-1]  # strip the trailing newline

                # Label: split on whitespace, take the first token, split it on ':'
                # and keep the first part (the coarse label), e.g. 'DESC', 'ENTY'
                y.append(line.split()[0].split(":")[0])

                # Content: the remaining tokens, e.g.
                # ['How', 'did', 'serfdom', 'develop', 'in', 'and', 'then', 'leave', 'Russia', '?']
                # ['What', 'films', 'featured', 'the', 'character', 'Popeye', 'Doyle', '?']
                x.append(line.split()[1:])

        # x and y each have 10903 elements for the training file.
        # Shuffle them together so that every (x, y) pair stays aligned.
        x, y = shuffle(x, y)

        if mode == "train":
            # Split into 10 parts: the first tenth is the validation (dev) set and
            # the remaining nine tenths are the training set. The dev set is used
            # during training to compare the models from different epochs.
            dev_idx = len(x) // 10
            data["dev_x"], data["dev_y"] = x[:dev_idx], y[:dev_idx]  # 1090 sentences
            data["train_x"], data["train_y"] = x[dev_idx:], y[dev_idx:]  # 9813 sentences
        else:
            data["test_x"], data["test_y"] = x, y  # 500 sentences

    read("train")
    read("test")

    # data.keys(): ['dev_x', 'dev_y', 'train_x', 'train_y', 'test_x', 'test_y'], so len(data) == 6
    return data
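To make the label parsing in `read_TREC` concrete, here is a minimal sketch of how one raw TREC line splits into a coarse label, a fine label and tokens. `parse_trec_line` is a hypothetical helper written only for illustration, and the sample line assumes the question mark is a separate token (as in the tokenized output shown in the comments). `read_TREC` keeps only the coarse label, which is why the classifier ends up with 6 classes.

```python
def parse_trec_line(line):
    """Split one TREC line into (coarse_label, fine_label, tokens).

    Hypothetical helper mirroring read_TREC: the first whitespace-separated
    token has the form 'COARSE:fine', the remaining tokens are the question.
    """
    line = line.rstrip("\n")  # like the line[:-1] check in read_TREC
    parts = line.split()
    coarse, fine = parts[0].split(":", 1)
    return coarse, fine, parts[1:]


coarse, fine, tokens = parse_trec_line(
    "DESC:manner How did serfdom develop in and then leave Russia ?\n"
)
print(coarse)  # DESC
print(fine)    # manner
print(tokens)
```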

def read_MR():
    data = {}
    x, y = [], []

    # positive reviews get label 1
    with open("data/MR/rt-polarity.pos", "r", encoding="utf-8") as f:
        for line in f:
            if line[-1] == "\n":
                line = line[:-1]
            x.append(line.split())
            y.append(1)

    # negative reviews get label 0
    with open("data/MR/rt-polarity.neg", "r", encoding="utf-8") as f:
        for line in f:
            if line[-1] == "\n":
                line = line[:-1]
            x.append(line.split())
            y.append(0)

    # Note: the shuffle is unseeded, so every call produces a different split
    x, y = shuffle(x, y)

    # Split the data 80% / 10% / 10% into train / dev / test
    dev_idx = len(x) // 10 * 8
    test_idx = len(x) // 10 * 9

    data["train_x"], data["train_y"] = x[:dev_idx], y[:dev_idx]
    data["dev_x"], data["dev_y"] = x[dev_idx:test_idx], y[dev_idx:test_idx]
    data["test_x"], data["test_y"] = x[test_idx:], y[test_idx:]

    return data
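`read_MR` splits the shuffled sentences 80/10/10 using integer arithmetic on tenths. The sketch below reproduces that arithmetic. It also seeds the shuffle, which is an assumption not present in the original code: `read_MR` is unseeded, so calling it again (for example via `--mode test`) produces a different split. The sketch uses Python's `random` module with a hypothetical `seed` parameter in place of `sklearn.utils.shuffle`; with sklearn, the equivalent is to pass `random_state` to `shuffle`.

```python
import random


def split_80_10_10(x, y, seed=0):
    """Shuffle (x, y) pairs together and split them 80/10/10 like read_MR.

    The fixed seed is an added assumption for reproducibility.
    """
    pairs = list(zip(x, y))
    random.Random(seed).shuffle(pairs)  # shuffle pairs so x and y stay aligned
    x = [p[0] for p in pairs]
    y = [p[1] for p in pairs]

    dev_idx = len(x) // 10 * 8   # same arithmetic as read_MR
    test_idx = len(x) // 10 * 9
    return ((x[:dev_idx], y[:dev_idx]),
            (x[dev_idx:test_idx], y[dev_idx:test_idx]),
            (x[test_idx:], y[test_idx:]))


# 10662 dummy sentences (MR has 5331 positive + 5331 negative reviews)
sents = [[f"w{i}"] for i in range(10662)]
labels = [1] * 5331 + [0] * 5331
train_split, dev_split, test_split = split_80_10_10(sents, labels)
print(len(train_split[0]), len(dev_split[0]), len(test_split[0]))  # 8528 1066 1068
```

Because `len(x) // 10` rounds down before multiplying, the test split absorbs the remainder (1068 sentences rather than 1066).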

def save_model(model, params):
    path = f"saved_models/{params['DATASET']}_{params['MODEL']}_{params['EPOCH']}.pkl"
    # the saved_models/ directory must already exist
    with open(path, "wb") as f:  # the context manager closes the file after writing
        pickle.dump(model, f)
    print(f"A model is saved successfully as {path}!")


def load_model(params):
    path = f"saved_models/{params['DATASET']}_{params['MODEL']}_{params['EPOCH']}.pkl"
    try:
        with open(path, "rb") as f:
            model = pickle.load(f)
        print(f"Model in {path} loaded successfully!")
        return model
    except FileNotFoundError:
        print(f"No available model such as {path}.")
        exit()
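`save_model` and `load_model` pickle the whole model object under a file name built from the dataset, the model type and the number of epochs. The sketch below shows that naming scheme and a pickle round trip using only the standard library. `model_path` is a hypothetical helper, a plain dict stands in for the CNN, and a temporary directory replaces `saved_models/`. For real PyTorch models, the PyTorch documentation recommends saving `model.state_dict()` with `torch.save`, because unpickling a whole model requires the original class definition to be importable.

```python
import os
import pickle
import tempfile


def model_path(params, root="saved_models"):
    """Build the same file name that save_model/load_model use (hypothetical helper)."""
    return os.path.join(root, f"{params['DATASET']}_{params['MODEL']}_{params['EPOCH']}.pkl")


params = {"DATASET": "TREC", "MODEL": "rand", "EPOCH": 100}
dummy_model = {"weights": [0.1, 0.2, 0.3]}  # stand-in for the trained CNN

with tempfile.TemporaryDirectory() as root:
    path = model_path(params, root)
    with open(path, "wb") as f:  # file is closed as soon as the block ends
        pickle.dump(dummy_model, f)
    with open(path, "rb") as f:
        restored = pickle.load(f)
    print(os.path.basename(path))  # TREC_rand_100.pkl

print(restored == dummy_model)  # True
```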

model.py

# Model implementation

import torch
import torch.nn as nn
import torch.nn.functional as F

class CNN(nn.Module):
    def __init__(self, **kwargs):
        # Example of **kwargs:
        # {'MODEL': 'rand', 'DATASET': 'TREC', 'SAVE_MODEL': True, 'EARLY_STOPPING': False, 'EPOCH': 10, 'LEARNING_RATE': 0.001,
        #  'MAX_SENT_LEN': 37, 'BATCH_SIZE': 50, 'WORD_DIM': 300, 'VOCAB_SIZE': 9776, 'CLASS_SIZE': 6, 'FILTERS': [3, 4, 5],
        #  'FILTER_NUM': [100, 100, 100], 'DROPOUT_PROB': 0.5, 'NORM_LIMIT': 3}
        super(CNN, self).__init__()

        # model hyperparameters
        self.MODEL = kwargs["MODEL"]
        self.BATCH_SIZE = kwargs["BATCH_SIZE"]
        self.MAX_SENT_LEN = kwargs["MAX_SENT_LEN"]
        self.WORD_DIM = kwargs["WORD_DIM"]
        self.VOCAB_SIZE = kwargs["VOCAB_SIZE"]
        self.CLASS_SIZE = kwargs["CLASS_SIZE"]
        self.FILTERS = kwargs["FILTERS"]
        self.FILTER_NUM = kwargs["FILTER_NUM"]
        self.DROPOUT_PROB = kwargs["DROPOUT_PROB"]
        self.IN_CHANNEL = 1  # a single input channel

        # Every window size must have a matching number of filters (3 and 3 here);
        # if not, stop the program right away
        assert len(self.FILTERS) == len(self.FILTER_NUM)

        # +2: one extra vector for unknown words (UNK) and one for zero padding
        self.embedding = nn.Embedding(self.VOCAB_SIZE + 2, self.WORD_DIM, padding_idx=self.VOCAB_SIZE + 1)
        # Embedding(9778, 300, padding_idx=9777)

        if self.MODEL == "static" or self.MODEL == "non-static" or self.MODEL == "multichannel":
            # initialize the embedding with the pretrained word2vec matrix built in train()
            self.WV_MATRIX = kwargs["WV_MATRIX"]
            self.embedding.weight.data.copy_(torch.from_numpy(self.WV_MATRIX))
            if self.MODEL == "static":
                # freeze the whole embedding, including the random vectors of words missing from word2vec
                self.embedding.weight.requires_grad = False
            elif self.MODEL == "multichannel":
                # a second, frozen copy of the pretrained embedding serves as the static channel
                self.embedding2 = nn.Embedding(self.VOCAB_SIZE + 2, self.WORD_DIM, padding_idx=self.VOCAB_SIZE + 1)
                self.embedding2.weight.data.copy_(torch.from_numpy(self.WV_MATRIX))
                self.embedding2.weight.requires_grad = False
                self.IN_CHANNEL = 2

        # Define conv_0, conv_1, conv_2. Each kernel spans FILTERS[i] whole words
        # (WORD_DIM * FILTERS[i] values); a stride of WORD_DIM moves it one word at a time.
        for i in range(len(self.FILTERS)):
            conv = nn.Conv1d(self.IN_CHANNEL, self.FILTER_NUM[i], self.WORD_DIM * self.FILTERS[i], stride=self.WORD_DIM)
            # setattr stores the layer as attribute conv_i, so nn.Module registers it as a submodule
            setattr(self, f'conv_{i}', conv)
        # conv_0: Conv1d(1, 100, kernel_size=(900,), stride=(300,))   900 = 300 * 3
        # conv_1: Conv1d(1, 100, kernel_size=(1200,), stride=(300,))  1200 = 300 * 4
        # conv_2: Conv1d(1, 100, kernel_size=(1500,), stride=(300,))  1500 = 300 * 5

        self.fc = nn.Linear(sum(self.FILTER_NUM), self.CLASS_SIZE)
        # Linear(in_features=300, out_features=6, bias=True)

    def get_conv(self, i):
        return getattr(self, f'conv_{i}')

    def forward(self, inp):
        # inp: 50 x 37, i.e. 50 sentences, each a list of 37 word indices
        x = self.embedding(inp).view(-1, 1, self.WORD_DIM * self.MAX_SENT_LEN)
        # x: 50 x 1 x 11100 (11100 = 300 * 37): each sentence's word vectors laid end to end
        if self.MODEL == "multichannel":
            x2 = self.embedding2(inp).view(-1, 1, self.WORD_DIM * self.MAX_SENT_LEN)
            x = torch.cat((x, x2), 1)  # 50 x 2 x 11100: non-static and static channels

        # For each window size: convolution -> ReLU -> max over all
        # MAX_SENT_LEN - FILTERS[i] + 1 positions -> reshape to 50 x 100
        conv_results = [
            F.max_pool1d(F.relu(self.get_conv(i)(x)), self.MAX_SENT_LEN - self.FILTERS[i] + 1)
                .view(-1, self.FILTER_NUM[i])
            for i in range(len(self.FILTERS))]
        # conv_results: a list of 3 tensors, each 50 x 100

        x = torch.cat(conv_results, 1)  # 50 x 300
        x = F.dropout(x, p=self.DROPOUT_PROB, training=self.training)
        x = self.fc(x)  # 50 x 6
        return x
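The `Conv1d` layers in `CNN` slide over the flattened sentence (`WORD_DIM * MAX_SENT_LEN` values) with a kernel of `WORD_DIM * h` values and a stride of `WORD_DIM`. Each step therefore covers exactly h whole words and moves forward by one word. With no padding, the standard output-length formula L_out = floor((L_in - K) / S) + 1 gives MAX_SENT_LEN - h + 1 positions. That is exactly the kernel size passed to `F.max_pool1d`, so pooling keeps one maximum per filter (max-over-time pooling). The pure-Python sketch below checks that arithmetic and replays the stride trick for a single toy filter with made-up numbers. `conv1d_out_len` and `toy_conv_max` are hypothetical helpers, and the toy filter has no bias and no ReLU.

```python
def conv1d_out_len(l_in, kernel, stride):
    """Output length of a 1-D convolution without padding or dilation."""
    return (l_in - kernel) // stride + 1


WORD_DIM, MAX_SENT_LEN = 300, 37
for h in [3, 4, 5]:
    n_pos = conv1d_out_len(WORD_DIM * MAX_SENT_LEN, WORD_DIM * h, WORD_DIM)
    print(h, n_pos)  # 35, 34, 33, i.e. MAX_SENT_LEN - h + 1


def toy_conv_max(sentence, weights, word_dim):
    """One filter over a flattened sentence with stride = word_dim, then max over time.

    sentence: flat list of len(words) * word_dim numbers
    weights:  flat list of h * word_dim numbers (a single filter)
    Returns (max score, number of window positions).
    """
    k = len(weights)
    scores = [sum(a * b for a, b in zip(sentence[s:s + k], weights))
              for s in range(0, len(sentence) - k + 1, word_dim)]
    return max(scores), len(scores)


# 4 words with 2-dim embeddings and a window of h = 2 words -> 3 positions
sent = [1, 0, 0, 1, 2, 2, -1, 0]
w = [1, 1, 1, 1]
print(toy_conv_max(sent, w, word_dim=2))  # (5, 3)
```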

run.py

# Entry point: train or test the text classifier

import datetime

# Method 1: datetime.datetime.now() gives the combined date and time, e.g. 2021-10-15 14:19:27.875779
#——————————————————————————————————————————————————————————————————————#
start_dt = datetime.datetime.now()
print("start_datetime:", start_dt)

from model import CNN
import utils

from torch.autograd import Variable
import torch
import torch.optim as optim
import torch.nn as nn
from sklearn.utils import shuffle
from gensim.models.keyedvectors import KeyedVectors
import numpy as np
import argparse
import copy

def train(data, params):
    if params["MODEL"] != "rand":  # every mode except rand needs pretrained vectors
        # load word2vec
        print("loading word2vec...")
        word_vectors = KeyedVectors.load_word2vec_format("GoogleNews-vectors-negative300.bin", binary=True)

        wv_matrix = []
        for i in range(len(data["vocab"])):
            word = data["idx_to_word"][i]
            # `word in word_vectors` works in gensim 3.x and 4.x (the .vocab attribute was removed in 4.0)
            if word in word_vectors:
                wv_matrix.append(word_vectors[word])
            else:
                # words missing from word2vec get small random vectors
                wv_matrix.append(np.random.uniform(-0.01, 0.01, 300).astype("float32"))

        # one for UNK and one for zero padding
        wv_matrix.append(np.random.uniform(-0.01, 0.01, 300).astype("float32"))
        wv_matrix.append(np.zeros(300).astype("float32"))
        wv_matrix = np.array(wv_matrix)
        params["WV_MATRIX"] = wv_matrix

    # model = CNN(**params).cuda(params["GPU"])
    model = CNN(**params)

    # Keep only the parameters that need to be updated. This must be a list:
    # a filter() object is a one-shot iterator that the optimizer would consume,
    # leaving clip_grad_norm_ below with nothing to clip.
    parameters = [p for p in model.parameters() if p.requires_grad]
    optimizer = optim.Adadelta(parameters, params["LEARNING_RATE"])
    # Adadelta (Parameter Group 0 eps: 1e-06 lr: 0.001 rho: 0.9 weight_decay: 0)
    # Note: PyTorch's default lr for Adadelta is 1.0, so lr=0.001 scales every update down by 1000x
    criterion = nn.CrossEntropyLoss()

    pre_dev_acc = 0
    max_dev_acc = 0
    max_test_acc = 0
    best_model = copy.deepcopy(model)  # fallback in case dev accuracy never improves

    for e in range(params["EPOCH"]):  # start training
        data["train_x"], data["train_y"] = shuffle(data["train_x"], data["train_y"])

        # for TREC this is range(0, 9813, 50)
        for i in range(0, len(data["train_x"]), params["BATCH_SIZE"]):
            # the last batch has fewer than 50 sentences, so take whatever is left
            batch_range = min(params["BATCH_SIZE"], len(data["train_x"]) - i)

            # turn every sentence into a list of 37 word indices [id0, id1, ..., id36],
            # padded with the padding index VOCAB_SIZE + 1
            batch_x = [[data["word_to_idx"][w] for w in sent] +
                       [params["VOCAB_SIZE"] + 1] * (params["MAX_SENT_LEN"] - len(sent))
                       for sent in data["train_x"][i:i + batch_range]]
            batch_y = [data["classes"].index(c) for c in data["train_y"][i:i + batch_range]]

            # convert the lists to tensors
            # batch_x = Variable(torch.LongTensor(batch_x)).cuda(params["GPU"])
            # batch_y = Variable(torch.LongTensor(batch_y)).cuda(params["GPU"])
            batch_x = Variable(torch.LongTensor(batch_x))
            batch_y = Variable(torch.LongTensor(batch_y))

            optimizer.zero_grad()
            model.train()
            pred = model(batch_x)
            loss = criterion(pred, batch_y)
            loss.backward()
            nn.utils.clip_grad_norm_(parameters, max_norm=params["NORM_LIMIT"])
            optimizer.step()

        dev_acc = test(data, model, params, mode="dev")
        test_acc = test(data, model, params)
        print("epoch:", e + 1, "/ dev_acc:", dev_acc, "/ test_acc:", test_acc)

        if params["EARLY_STOPPING"] and dev_acc <= pre_dev_acc:
            print("early stopping by dev_acc!")
            break
        else:
            pre_dev_acc = dev_acc

        if dev_acc > max_dev_acc:
            max_dev_acc = dev_acc
            max_test_acc = test_acc
            best_model = copy.deepcopy(model)

    print("max dev acc:", max_dev_acc, "test acc:", max_test_acc)
    return best_model
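Keeping the trainable parameters in a list rather than a `filter(...)` object matters in `train`. In Python 3, `filter` returns a one-shot iterator: the optimizer consumes it when it is constructed, so a later `clip_grad_norm_(parameters, ...)` call would iterate over nothing and never clip. The stdlib-only sketch below shows the effect. `FakeParam` is a hypothetical stand-in that mimics only the `requires_grad` attribute of a tensor.

```python
class FakeParam:
    """Hypothetical stand-in for a tensor; only carries a name and requires_grad."""

    def __init__(self, name, requires_grad):
        self.name = name
        self.requires_grad = requires_grad


all_params = [FakeParam("embedding", False), FakeParam("conv_0", True), FakeParam("fc", True)]

as_filter = filter(lambda p: p.requires_grad, all_params)
first_pass = [p.name for p in as_filter]   # what the optimizer would see
second_pass = [p.name for p in as_filter]  # what clip_grad_norm_ would see afterwards
print(first_pass, second_pass)  # ['conv_0', 'fc'] []

as_list = [p for p in all_params if p.requires_grad]
print([p.name for p in as_list], [p.name for p in as_list])  # ['conv_0', 'fc'] ['conv_0', 'fc']
```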

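def test(data, model, params, mode="test"):
    model.eval()

    if mode == "dev":
        x, y = data["dev_x"], data["dev_y"]
    elif mode == "test":
        x, y = data["test_x"], data["test_y"]

    # Map words to indices (words outside the vocabulary get the UNK index VOCAB_SIZE),
    # then pad every sentence to MAX_SENT_LEN with the padding index VOCAB_SIZE + 1.
    # Membership is checked in the word_to_idx dict, which is much faster than the vocab list.
    x = [[data["word_to_idx"][w] if w in data["word_to_idx"] else params["VOCAB_SIZE"] for w in sent] +
         [params["VOCAB_SIZE"] + 1] * (params["MAX_SENT_LEN"] - len(sent))
         for sent in x]

    # x = Variable(torch.LongTensor(x)).cuda(params["GPU"])
    x = Variable(torch.LongTensor(x))
    y = [data["classes"].index(c) for c in y]

    with torch.no_grad():  # no gradients are needed for evaluation
        pred = np.argmax(model(x).cpu().data.numpy(), axis=1)
    acc = sum(1 for p, t in zip(pred, y) if p == t) / len(pred)

    return acc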
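Both `train` and `test` turn each tokenized sentence into a fixed-length list of indices. Known words use `word_to_idx`, and words missing from the vocabulary use `VOCAB_SIZE` (the UNK row). The rest of the list is filled with `VOCAB_SIZE + 1`, the embedding layer's `padding_idx`. Only `test` handles the UNK case, because the vocabulary is built from all three splits. Here is a minimal sketch of that encoding and of the accuracy computation on a toy vocabulary. `encode` and `accuracy` are hypothetical helper names.

```python
def encode(sent, word_to_idx, vocab_size, max_len):
    """Map tokens to indices (unknown -> vocab_size), then pad with vocab_size + 1."""
    ids = [word_to_idx.get(w, vocab_size) for w in sent]
    return ids + [vocab_size + 1] * (max_len - len(ids))


def accuracy(pred, gold):
    """Fraction of positions where the predicted class index equals the gold one."""
    return sum(1 for p, t in zip(pred, gold) if p == t) / len(pred)


vocab = sorted({"how", "did", "serfdom", "develop", "?"})
word_to_idx = {w: i for i, w in enumerate(vocab)}  # ?:0 develop:1 did:2 how:3 serfdom:4
print(encode(["how", "did", "Russia", "?"], word_to_idx, len(vocab), 6))
# [3, 2, 5, 0, 6, 6]
print(accuracy([1, 0, 2, 2], [1, 0, 1, 2]))  # 0.75
```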

def main():
    # command-line parameters of the CNN classifier
    parser = argparse.ArgumentParser(description="-----[CNN-classifier]-----")

    # run mode, train by default
    parser.add_argument("--mode", default="train", help="train: train (with test) a model / test: test saved models")

    # Model variant. The four variants differ only in the embedding layer:
    # CNN-rand: the baseline; all word vectors are randomly initialized and updated during training.
    # CNN-static: uses word vectors pretrained with word2vec; words not covered by word2vec are
    #   randomly initialized. All word vectors, including the random ones, stay fixed during training.
    # CNN-non-static: same as static, but the word vectors are fine-tuned during training.
    # CNN-multichannel: uses both kinds of vectors above, one per channel, for two channels in total.
    parser.add_argument("--model", default="rand", help="available models: rand, static, non-static, multichannel")

    # dataset name
    parser.add_argument("--dataset", default="TREC", help="available datasets: MR, TREC")

    # whether to save the model (with default=True and store_true, this flag cannot turn saving off)
    parser.add_argument("--save_model", default=True, action='store_true', help="whether saving model or not")

    # Early stopping: in theory the number of possible local minima grows exponentially with the
    # number of parameters, and converging to one exact minimum is a source of poor generalization.
    parser.add_argument("--early_stopping", default=False, action='store_true', help="whether to apply early stopping")

    # maximum number of training epochs
    parser.add_argument("--epoch", default=100, type=int, help="number of max epoch")

    # learning rate
    parser.add_argument("--learning_rate", default=1e-3, type=float, help="learning rate")

    # the GPU is not used here
    # parser.add_argument("--gpu", default=-1, type=int, help="the number of gpu to be used")

    options = parser.parse_args()
    # options holds the parsed arguments; with the defaults:
    # (dataset='TREC', early_stopping=False, epoch=100, learning_rate=0.001, mode='train', model='rand', save_model=True)

    data = getattr(utils, f"read_{options.dataset}")()
    # data.keys(): ['dev_x', 'dev_y', 'train_x', 'train_y', 'test_x', 'test_y']

    # Collect the words of all three splits into a set so that every word is covered;
    # TREC has 9776 distinct words
    data["vocab"] = sorted(list(set([w for sent in data["train_x"] + data["dev_x"] + data["test_x"] for w in sent])))

    # all classes, 6 for TREC: ['ABBR', 'DESC', 'ENTY', 'HUM', 'LOC', 'NUM']
    # (abbreviation, description, entity, human, location, numeric)
    data["classes"] = sorted(list(set(data["train_y"])))

    data["word_to_idx"] = {w: i for i, w in enumerate(data["vocab"])}  # word -> index dict, 9776 entries
    data["idx_to_word"] = {i: w for i, w in enumerate(data["vocab"])}  # index -> word dict, 9776 entries
    # data now has 10 keys: ['dev_x', 'dev_y', 'train_x', 'train_y', 'test_x', 'test_y',
    #                        'vocab', 'classes', 'word_to_idx', 'idx_to_word']

    params = {  # 15 parameters
        "MODEL": options.model,  # 'rand'
        "DATASET": options.dataset,  # 'TREC'
        "SAVE_MODEL": options.save_model,  # True
        "EARLY_STOPPING": options.early_stopping,  # False
        "EPOCH": options.epoch,  # 100
        "LEARNING_RATE": options.learning_rate,  # 0.001
        # MAX_SENT_LEN: the longest sentence over all splits (37 for TREC). Shorter sentences
        # are padded to this length because the CNN needs inputs of the same size.
        "MAX_SENT_LEN": max([len(sent) for sent in data["train_x"] + data["dev_x"] + data["test_x"]]),
        "BATCH_SIZE": 50,  # 50 sentences per batch
        "WORD_DIM": 300,  # every word is a 300-dimensional vector
        "VOCAB_SIZE": len(data["vocab"]),  # 9776
        "CLASS_SIZE": len(data["classes"]),  # 6
        "FILTERS": [3, 4, 5],  # window sizes of the three kinds of filters
        "FILTER_NUM": [100, 100, 100],  # output channels (i.e. number of filters) per window size
        "DROPOUT_PROB": 0.5,
        # gradient clipping against exploding gradients: gradients are rescaled
        # whenever their total norm exceeds 3
        "NORM_LIMIT": 3,
        # "GPU": options.gpu
    }

    print("=" * 20 + "INFORMATION" + "=" * 20)
    print("MODEL:", params["MODEL"])
    print("DATASET:", params["DATASET"])
    print("VOCAB_SIZE:", params["VOCAB_SIZE"])
    print("EPOCH:", params["EPOCH"])
    print("LEARNING_RATE:", params["LEARNING_RATE"])
    print("EARLY_STOPPING:", params["EARLY_STOPPING"])
    print("SAVE_MODEL:", params["SAVE_MODEL"])
    print("=" * 20 + "INFORMATION" + "=" * 20)

    if options.mode == "train":
        print("=" * 20 + "TRAINING STARTED" + "=" * 20)
        model = train(data, params)
        if params["SAVE_MODEL"]:
            utils.save_model(model, params)
        print("=" * 20 + "TRAINING FINISHED" + "=" * 20)
    else:
        # model = utils.load_model(params).cuda(params["GPU"])
        model = utils.load_model(params)
        test_acc = test(data, model, params)
        print("test acc:", test_acc)
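You can try the preprocessing at the start of `main` (vocabulary, class list, index maps, `MAX_SENT_LEN`) on a toy dataset without loading TREC. Below is a minimal sketch with made-up sentences. `build_index` is a hypothetical helper that adds the same keys `main` adds to `data`. As in `main`, the classes come only from the training labels, so a dev or test label that never appears in training would make `data["classes"].index(c)` raise a `ValueError`.

```python
def build_index(data):
    """Add vocab, classes, word_to_idx and idx_to_word to data; return MAX_SENT_LEN."""
    all_sents = data["train_x"] + data["dev_x"] + data["test_x"]
    data["vocab"] = sorted({w for sent in all_sents for w in sent})
    data["classes"] = sorted(set(data["train_y"]))  # training labels only, as in main
    data["word_to_idx"] = {w: i for i, w in enumerate(data["vocab"])}
    data["idx_to_word"] = {i: w for i, w in enumerate(data["vocab"])}
    return max(len(sent) for sent in all_sents)


toy = {
    "train_x": [["What", "is", "CNN", "?"], ["Who", "wrote", "it", "?"]],
    "train_y": ["DESC", "HUM"],
    "dev_x": [["Where", "is", "it", "?"]], "dev_y": ["HUM"],
    "test_x": [["Why", "?"]], "test_y": ["DESC"],
}
max_len = build_index(toy)
print(max_len)         # 4
print(toy["classes"])  # ['DESC', 'HUM']
print(toy["vocab"])
```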

if __name__ == "__main__":
    main()

    #——————————————————————————————————————————————————————————————————————#
    end_dt = datetime.datetime.now()
    print("end_datetime:", end_dt)
    # total_seconds() also counts whole days, unlike timedelta.seconds
    print("time cost:", int((end_dt - start_dt).total_seconds()), "s")
    #——————————————————————————————————————————————————————————————————————#
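With `--early_stopping`, `train` stops the first time the dev accuracy fails to exceed the previous epoch's, which is effectively a patience of one epoch. Separately, it keeps a copy of the model with the highest dev accuracy seen so far. The sketch below replays that logic on a made-up list of dev accuracies. `replay_early_stopping` is a hypothetical helper, and its `patience` parameter is an added assumption that counts consecutive epochs without improvement over the previous epoch; `patience=1` reproduces the rule in `train`.

```python
def replay_early_stopping(dev_accs, patience=1):
    """Replay train()'s early-stopping and best-model logic on per-epoch dev accuracies.

    Returns (epochs_run, best_epoch, best_dev_acc), with 1-based epochs.
    patience (hypothetical): consecutive epochs whose dev accuracy is <= the
    previous epoch's before stopping; patience=1 matches train().
    """
    pre_dev_acc, max_dev_acc, best_epoch = 0, 0, 0
    bad_epochs, epochs_run = 0, 0
    for e, dev_acc in enumerate(dev_accs, start=1):
        epochs_run = e
        if dev_acc <= pre_dev_acc:
            bad_epochs += 1
            if bad_epochs >= patience:
                break  # like train(), stop before updating the best model
        else:
            bad_epochs = 0
        pre_dev_acc = dev_acc
        if dev_acc > max_dev_acc:  # remember the best epoch (best_model in train())
            max_dev_acc, best_epoch = dev_acc, e
    return epochs_run, best_epoch, max_dev_acc


dev_accs = [0.60, 0.70, 0.72, 0.71, 0.75]  # made-up dev accuracies
print(replay_early_stopping(dev_accs))              # (4, 3, 0.72)
print(replay_early_stopping(dev_accs, patience=2))  # (5, 5, 0.75)
```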

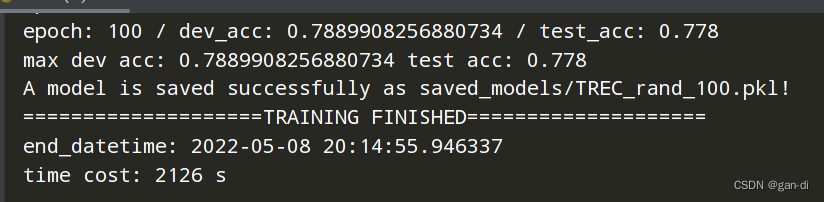

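Run results

As you can see, the run went through 100 epochs, took 35 minutes and reached an accuracy of 77.8%. That's not bad at all: there are 6 classes in total, so I think it's a pretty good result!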

Data

Link: https://pan.baidu.com/s/1sfoCh7qYsIHVn3ee3lrwlw

Extraction code: 2933