Preface

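1. This is an Android NDK project that detects whether people are wearing masks. For now it only detects whether there is a mask on the face. A model that checks whether the mask is worn correctly (for example, whether it covers both the mouth and the nose) and whether it is a standard medical mask is still being trained and optimized. Project source code: https://download.csdn.net/download/matt45m/85252239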

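2. The development environment is Windows 10 with Android Studio Arctic Fox. The project uses NCNN and OpenCV. For NCNN you can use the official prebuilt release packages or build it yourself by following the official documentation. For OpenCV I used opencv-mobile, a trimmed-down build by nihui that is only a little over 10 MB; the official OpenCV package also works if its size is not a concern. The test phone is a Huawei Mate 30 Pro, and both CPU and GPU inference performance were tested.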

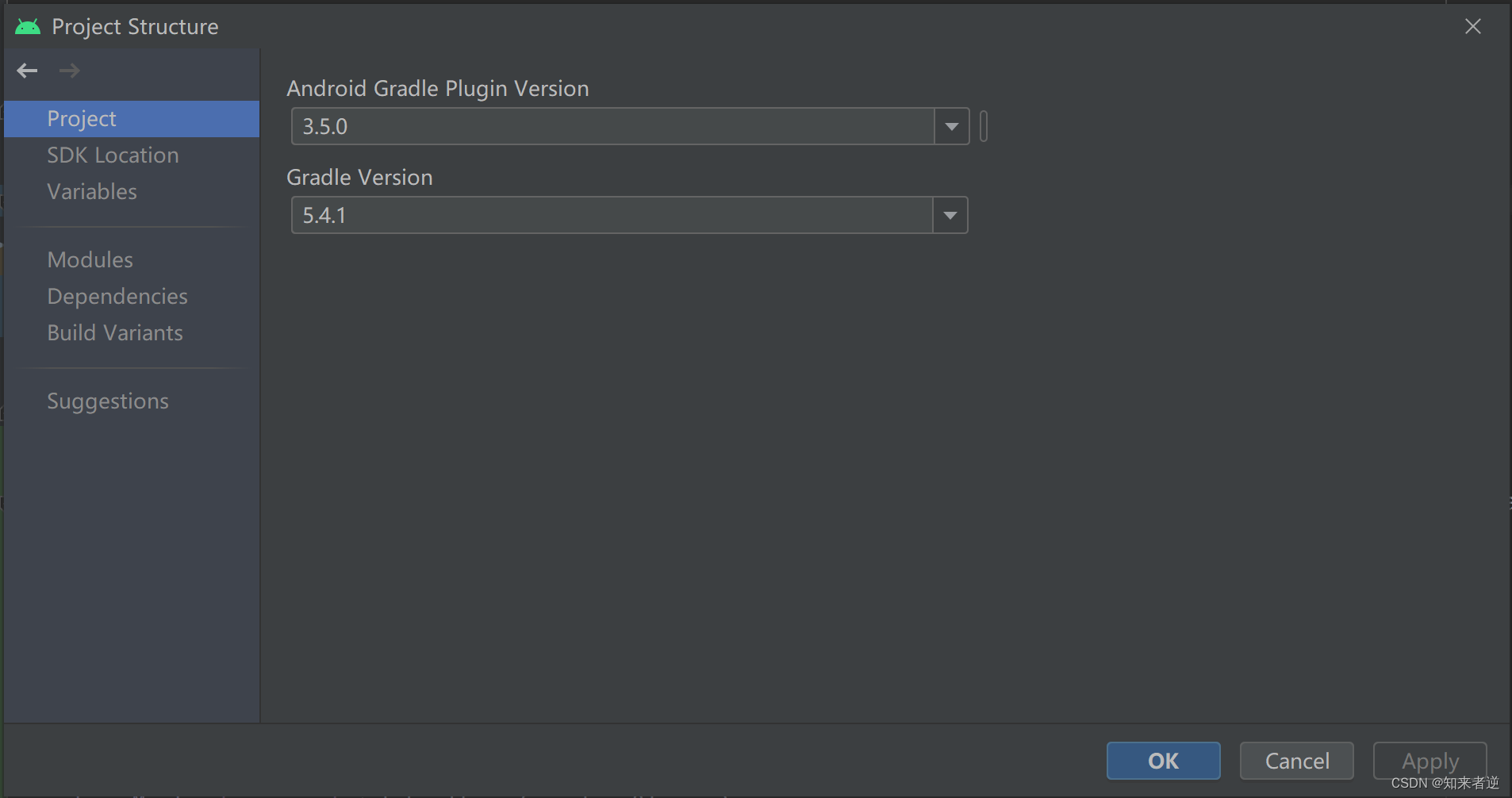

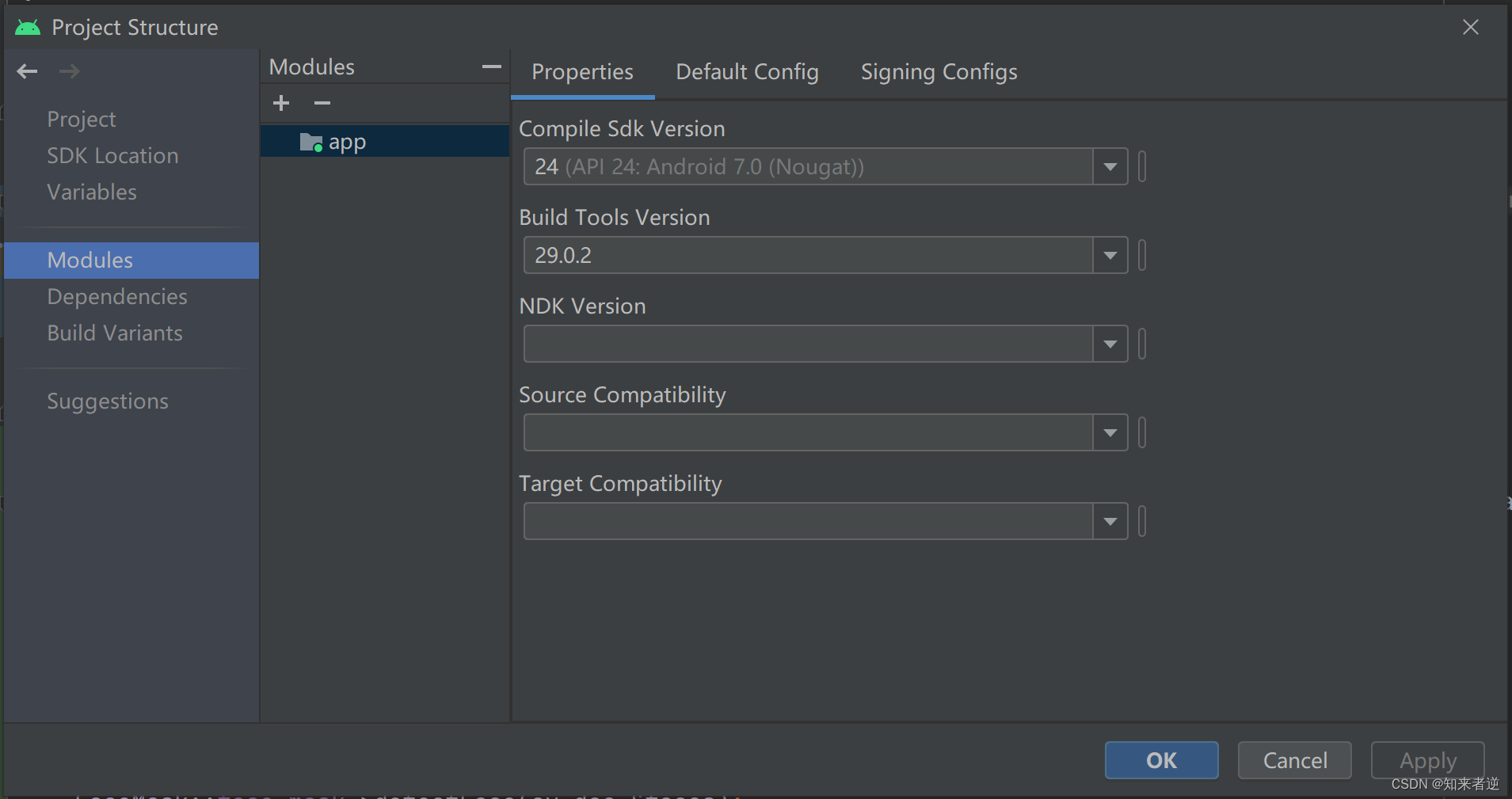

3. Dependency versions used by the project:

I. Model Training

1. The dataset is the open-source Real-World Masked Face Dataset: https://github.com/X-zhangyang/Real-World-Masked-Face-Dataset. Almost all of its samples show masks worn in the standard way.

2. The training framework is YOLOv5-Lite. On mobile devices it keeps good accuracy and, with GPU acceleration, can exceed 10 FPS.

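3. Export the trained model to ONNX, simplify it, and then convert it to an ncnn model; the ncnn documentation describes these steps. If that is too cumbersome, you can instead convert the model with PNNX, which writes the ncnn files directly.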
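After conversion, it is worth confirming that the blob names the inference code extracts (the `data` input and the `face_rpn_*_stride32/16/8` outputs used in `detectFace()`) really appear in the generated `.param` file, because the names depend on how the model was exported and converted. The text `.param` format starts with the magic number 7767517, then the layer and blob counts, then one line per layer with its type, name, input/output counts, input blob names and output blob names. A minimal standard-C++ sketch (the helper `producedBlobs` is hypothetical, not part of the project) that collects every output blob name:

```cpp
#include <cassert>
#include <set>
#include <sstream>
#include <string>

// Collects every blob name produced by some layer in the text of an ncnn .param
// file: "7767517", then "layer_count blob_count", then one line per layer:
// type name input_count output_count inputs... outputs... key=value params...
std::set<std::string> producedBlobs(const std::string& param_text) {
    std::istringstream in(param_text);
    int magic = 0, layer_count = 0, blob_count = 0;
    in >> magic >> layer_count >> blob_count;
    assert(magic == 7767517 && "not an ncnn .param file");

    std::set<std::string> blobs;
    std::string line;
    std::getline(in, line);  // consume the rest of the "layer_count blob_count" line
    for (int l = 0; l < layer_count && std::getline(in, line); ++l) {
        std::istringstream ls(line);
        std::string type, name, blob;
        int n_in = 0, n_out = 0;
        ls >> type >> name >> n_in >> n_out;
        for (int i = 0; i < n_in; ++i) ls >> blob;  // skip the input blob names
        for (int o = 0; o < n_out && (ls >> blob); ++o) blobs.insert(blob);
    }
    return blobs;
}
```

Reading `mask.param` into a string and checking, for example, `producedBlobs(text).count("face_rpn_bbox_pred_stride32")` catches a naming mismatch before it turns into an empty output at runtime.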

II. Project Deployment

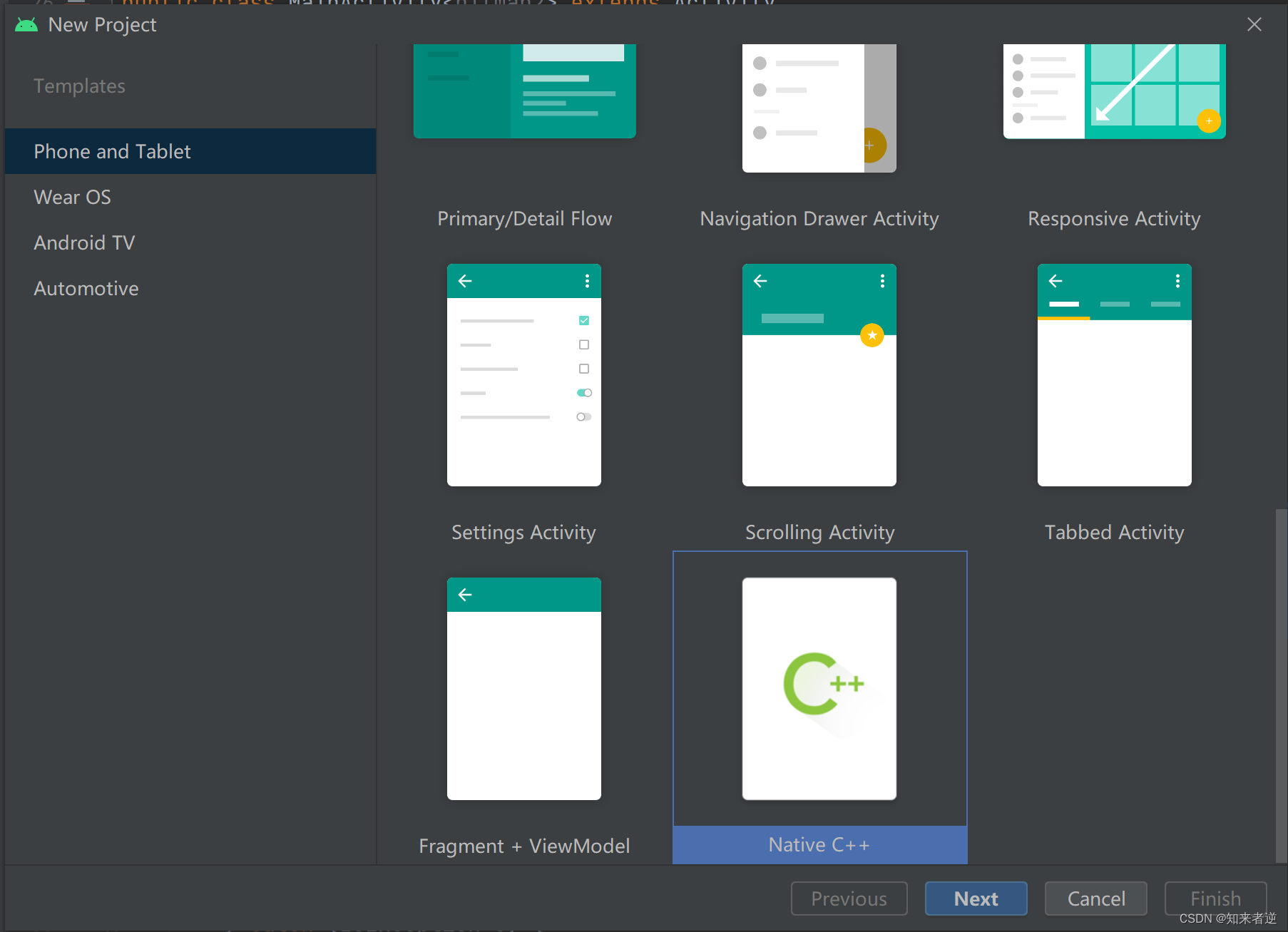

1. Create a Native C++ project

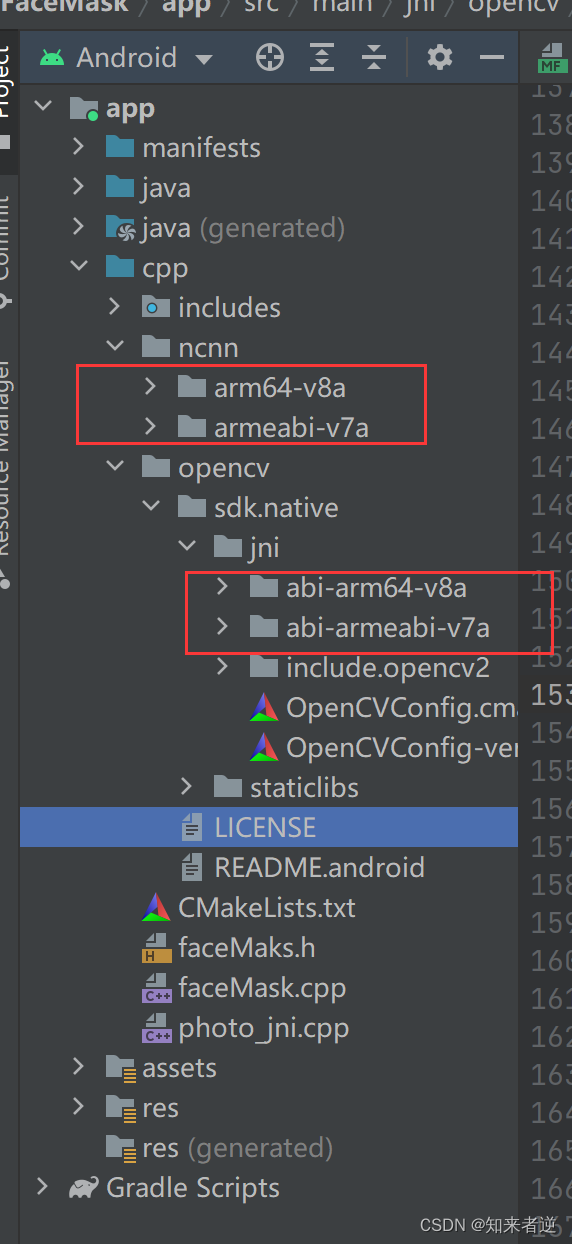

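2. Copy the downloaded NCNN and OpenCV libraries into the cpp directory. Only the arm64-v8a and armeabi-v7a ABIs are needed, which keeps the project much smaller. The trade-off is that the app cannot run on the usual x86/x86_64 Android emulator images, where ncnn would be too slow to be useful anyway.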
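Whether a device or emulator can load the app comes down to the ABI its native code runs as. The compiler's predefined architecture macros identify the ABI a source file was built for, so logging this at startup shows which library variant the device actually loaded. A sketch with hypothetical helpers `compiledAbi` and `abiIsShipped` (not part of the project):

```cpp
#include <cassert>
#include <string>

// ABI this translation unit was compiled for, from predefined architecture macros.
std::string compiledAbi() {
#if defined(__aarch64__)
    return "arm64-v8a";
#elif defined(__arm__)
    return "armeabi-v7a";
#elif defined(__x86_64__)
    return "x86_64";
#elif defined(__i386__)
    return "x86";
#else
    return "unknown";
#endif
}

// True if an APK that ships only the two ARM ABIs above has a native library for `abi`.
bool abiIsShipped(const std::string& abi) {
    assert(!abi.empty());
    return abi == "arm64-v8a" || abi == "armeabi-v7a";
}
```

On an x86_64 emulator image `abiIsShipped("x86_64")` is false, which is why `System.loadLibrary` fails there.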

3. Add the inference code

3.1 faceMask.h

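```cpp
#ifndef FACE_FACEMASK_H_
#define FACE_FACEMASK_H_

#include <vector>

#include <android/asset_manager.h>
#include <opencv2/opencv.hpp>

#include "net.h"
#include "cpu.h" // ncnn CPU helpers (big-core binding, thread count) for Android

struct FaceInfo
{
    cv::Rect location_;   // face box in source-image coordinates
    float score_;         // face confidence
    bool mask_;           // true if mask_prod > maskThreshold_
    float mask_prod;      // mask probability
};

class FaceMask
{
public:
    // If the device has a usable GPU, pass use_gpu = true to run inference on the GPU (Vulkan)
    FaceMask(AAssetManager *mgr, bool use_gpu);
    ~FaceMask();

    int loadModel(AAssetManager *mgr, bool use_gpu);
    int detectFace(const cv::Mat& img_src, std::vector<FaceInfo> *faces);

    static FaceMask* face_mask;

private:
    ncnn::Net mask_net;
    std::vector<std::vector<cv::Rect>> anchors_generated;
    bool initialized_ = false;

    const int RPNs_[3] = { 32, 16, 8 };         // feature-map strides
    const cv::Size inputSize_ = { 640, 640 };    // network input size
    const float iouThreshold_ = 0.4f;            // NMS IoU threshold
    const float scoreThreshold_ = 0.8f;          // minimum face score
    const float maskThreshold_ = 0.2f;           // mask probability threshold
};

#endif
```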

3.2 faceMask.cpp

```cpp
#include "faceMask.h"

#include <algorithm>
#include <cmath>
#include <string>
#include <vector>

FaceMask::FaceMask(AAssetManager *mgr, bool use_gpu)
{
    loadModel(mgr, use_gpu);
}

FaceMask::~FaceMask()
{
}

// One anchor per aspect ratio, each with the same area and centre as `anchor`.
// The anchor loops stay serial: they push_back into a shared vector, which is not thread-safe.
int RatioAnchors(const cv::Rect& anchor, const std::vector<float>& ratios, std::vector<cv::Rect>* anchors)
{
    anchors->clear();
    cv::Point center = cv::Point(anchor.x + (anchor.width - 1) * 0.5f,
                                 anchor.y + (anchor.height - 1) * 0.5f);
    float anchor_size = anchor.width * anchor.height;
    for (int i = 0; i < static_cast<int>(ratios.size()); ++i) {
        float ratio = ratios.at(i);
        float anchor_size_ratio = anchor_size / ratio;
        float curr_anchor_width = std::sqrt(anchor_size_ratio);
        float curr_anchor_height = curr_anchor_width * ratio;
        float curr_x = center.x - (curr_anchor_width - 1) * 0.5f;
        float curr_y = center.y - (curr_anchor_height - 1) * 0.5f;
        cv::Rect curr_anchor = cv::Rect(curr_x, curr_y,
                                        curr_anchor_width - 1, curr_anchor_height - 1);
        anchors->push_back(curr_anchor);
    }
    return 0;
}

// Scale every ratio anchor by each factor in `scales`, keeping its centre.
int ScaleAnchors(const std::vector<cv::Rect>& ratio_anchors, const std::vector<float>& scales, std::vector<cv::Rect>* anchors)
{
    anchors->clear();
    for (int i = 0; i < static_cast<int>(ratio_anchors.size()); ++i)
    {
        cv::Rect anchor = ratio_anchors.at(i);
        cv::Point2f center = cv::Point2f(anchor.x + anchor.width * 0.5f,
                                         anchor.y + anchor.height * 0.5f);
        for (int j = 0; j < static_cast<int>(scales.size()); ++j)
        {
            float scale = scales.at(j);
            float curr_width = scale * (anchor.width + 1);
            float curr_height = scale * (anchor.height + 1);
            float curr_x = center.x - curr_width * 0.5f;
            float curr_y = center.y - curr_height * 0.5f;
            cv::Rect curr_anchor = cv::Rect(curr_x, curr_y, curr_width, curr_height);
            anchors->push_back(curr_anchor);
        }
    }
    return 0;
}

int GenerateAnchors(const int& base_size, const std::vector<float>& ratios, const std::vector<float>& scales, std::vector<cv::Rect>* anchors)
{
    anchors->clear();
    cv::Rect anchor = cv::Rect(0, 0, base_size, base_size);
    std::vector<cv::Rect> ratio_anchors;
    RatioAnchors(anchor, ratios, &ratio_anchors);
    ScaleAnchors(ratio_anchors, scales, anchors);
    return 0;
}

int FaceMask::loadModel(AAssetManager *mgr, bool use_gpu)
{
    initialized_ = false;

    bool has_gpu = false;
#if NCNN_VULKAN
    ncnn::create_gpu_instance();
    has_gpu = ncnn::get_gpu_count() > 0;
#endif
    // Use Vulkan only if it was requested and a GPU is actually available
    mask_net.opt.use_vulkan_compute = has_gpu && use_gpu;

    ncnn::set_cpu_powersave(2);   // 2 = run on big cores only
    ncnn::set_omp_num_threads(ncnn::get_big_cpu_count());
    mask_net.opt.num_threads = ncnn::get_big_cpu_count();
    mask_net.opt.use_int8_inference = true;

    // mask.param / mask.bin are read from the APK's assets directory
    if (mask_net.load_param(mgr, "mask.param") != 0)
        return -1;
    if (mask_net.load_model(mgr, "mask.bin") != 0)
        return -1;

    // Anchors for strides 32, 16, 8: base size 16, aspect ratio 1, two scales per stride
    const std::vector<float> scales[3] = { { 32, 16 }, { 8, 4 }, { 2, 1 } };
    anchors_generated.clear();
    for (int i = 0; i < 3; ++i)
    {
        std::vector<cv::Rect> anchors;
        GenerateAnchors(16, { 1.0f }, scales[i], &anchors);
        anchors_generated.push_back(anchors);
    }

    initialized_ = true;
    return 0;
}

// Intersection area of two rectangles, using the inclusive-pixel (+1) convention
float InterRectArea(const cv::Rect& a, const cv::Rect& b)
{
    cv::Point left_top = cv::Point(MAX(a.x, b.x), MAX(a.y, b.y));
    cv::Point right_bottom = cv::Point(MIN(a.br().x, b.br().x), MIN(a.br().y, b.br().y));
    cv::Point diff = right_bottom - left_top;
    return (MAX(diff.x + 1, 0) * MAX(diff.y + 1, 0));
}

// IoU for type "UNION"; otherwise intersection over the smaller area
int ComputeIOU(const cv::Rect& rect1, const cv::Rect& rect2, float* iou, const std::string& type)
{
    float inter_area = InterRectArea(rect1, rect2);
    if (type == "UNION")
        *iou = inter_area / (rect1.area() + rect2.area() - inter_area);
    else
        *iou = inter_area / MIN(rect1.area(), rect2.area());
    return 0;
}

// Greedy non-maximum suppression: keep the highest-scoring box, drop the boxes
// that overlap it by more than `threshold`, and repeat on what is left
template <typename T>
int NMS(const std::vector<T>& inputs, std::vector<T>* result, const float& threshold, const std::string& type = "UNION")
{
    result->clear();
    if (inputs.empty())
        return -1;

    std::vector<T> inputs_tmp(inputs.begin(), inputs.end());
    std::sort(inputs_tmp.begin(), inputs_tmp.end(),
              [](const T& a, const T& b) { return a.score_ > b.score_; });

    std::vector<int> indexes(inputs_tmp.size());
    for (size_t i = 0; i < indexes.size(); i++)
        indexes[i] = static_cast<int>(i);

    while (!indexes.empty())
    {
        int good_idx = indexes[0];
        result->push_back(inputs_tmp[good_idx]);
        std::vector<int> tmp_indexes = indexes;
        indexes.clear();
        for (size_t i = 1; i < tmp_indexes.size(); i++)
        {
            int tmp_i = tmp_indexes[i];
            float iou = 0.0f;
            ComputeIOU(inputs_tmp[good_idx].location_, inputs_tmp[tmp_i].location_, &iou, type);
            if (iou <= threshold)
                indexes.push_back(tmp_i);
        }
    }
    return 0;
}

int FaceMask::detectFace(const cv::Mat& cv_src, std::vector<FaceInfo>* faces)
{
    faces->clear();
    if (!initialized_ || cv_src.empty())
        return -1;

    // clone() guarantees a continuous pixel buffer for from_pixels_resize()
    cv::Mat cv_cpy = cv_src.clone();
    int img_width = cv_cpy.cols;
    int img_height = cv_cpy.rows;
    // Factors mapping network-input (640x640) coordinates back to the source image
    float factor_x = static_cast<float>(img_width) / inputSize_.width;
    float factor_y = static_cast<float>(img_height) / inputSize_.height;

    ncnn::Extractor ex = mask_net.create_extractor();
    // PIXEL_BGR2RGB swaps the R and B channels while resizing to the input size
    ncnn::Mat in = ncnn::Mat::from_pixels_resize(cv_cpy.data,
        ncnn::Mat::PIXEL_BGR2RGB, img_width, img_height, inputSize_.width, inputSize_.height);
    ex.input("data", in);

    std::vector<FaceInfo> faces_tmp;
    for (int i = 0; i < 3; ++i)
    {
        std::string class_layer_name = "face_rpn_cls_prob_reshape_stride" + std::to_string(RPNs_[i]);
        std::string bbox_layer_name = "face_rpn_bbox_pred_stride" + std::to_string(RPNs_[i]);
        std::string landmark_layer_name = "face_rpn_landmark_pred_stride" + std::to_string(RPNs_[i]);
        std::string type_layer_name = "face_rpn_type_prob_reshape_stride" + std::to_string(RPNs_[i]);

        ncnn::Mat class_mat, bbox_mat, landmark_mat, type_mat;
        ex.extract(class_layer_name.c_str(), class_mat);
        ex.extract(bbox_layer_name.c_str(), bbox_mat);
        ex.extract(landmark_layer_name.c_str(), landmark_mat);   // landmarks are not used below
        ex.extract(type_layer_name.c_str(), type_mat);

        const std::vector<cv::Rect>& anchors = anchors_generated.at(i);
        int width = class_mat.w;
        int height = class_mat.h;
        int anchor_num = static_cast<int>(anchors.size());

        for (int h = 0; h < height; ++h)
        {
            for (int w = 0; w < width; ++w)
            {
                int index = h * width + w;
                for (int a = 0; a < anchor_num; ++a)
                {
                    // Face score for anchor a at this cell
                    float score = class_mat.channel(anchor_num + a)[index];
                    if (score < scoreThreshold_)
                        continue;
                    // Mask probability for anchor a (stored as mask_prod)
                    float prob = type_mat.channel(2 * anchor_num + a)[index];

                    // Anchor placed at this feature-map cell
                    cv::Rect box = cv::Rect(w * RPNs_[i] + anchors[a].x,
                                            h * RPNs_[i] + anchors[a].y,
                                            anchors[a].width, anchors[a].height);

                    // Regression deltas: centre offset and log-scale width/height
                    float delta_x = bbox_mat.channel(a * 4 + 0)[index];
                    float delta_y = bbox_mat.channel(a * 4 + 1)[index];
                    float delta_w = bbox_mat.channel(a * 4 + 2)[index];
                    float delta_h = bbox_mat.channel(a * 4 + 3)[index];

                    cv::Point2f center = cv::Point2f(box.x + box.width * 0.5f, box.y + box.height * 0.5f);
                    center.x = center.x + delta_x * box.width;
                    center.y = center.y + delta_y * box.height;
                    float curr_width = std::exp(delta_w) * (box.width + 1);
                    float curr_height = std::exp(delta_h) * (box.height + 1);
                    cv::Rect curr_box = cv::Rect(center.x - curr_width * 0.5f,
                                                 center.y - curr_height * 0.5f, curr_width, curr_height);

                    // Map back to the source image and clip to its borders
                    curr_box.x = MAX(curr_box.x * factor_x, 0);
                    curr_box.y = MAX(curr_box.y * factor_y, 0);
                    curr_box.width = MIN(img_width - curr_box.x, curr_box.width * factor_x);
                    curr_box.height = MIN(img_height - curr_box.y, curr_box.height * factor_y);

                    FaceInfo face_info{};   // value-initialized: zero scores, empty rect
                    face_info.score_ = score;
                    face_info.mask_prod = prob;
                    face_info.mask_ = (prob > maskThreshold_);
                    face_info.location_ = curr_box;
                    faces_tmp.push_back(face_info);
                }
            }
        }
    }

    NMS(faces_tmp, faces, iouThreshold_);
    return 0;
}
```
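The anchor and post-processing arithmetic above is easier to follow with concrete numbers. With a single aspect ratio of 1.0, `RatioAnchors()` returns a width of 15 for the 16x16 base and `ScaleAnchors()` computes `scale * (width + 1)`, so each anchor is a square of side `scale * 16`: 512 and 256 px for stride 32, 128 and 64 for stride 16, 32 and 16 for stride 8. The standalone sketch below reproduces that arithmetic, the box decoding in `detectFace()` and the greedy `NMS()` with plain floats. `Box`, `Det`, `anchorSides`, `decodeBox`, `iou` and `nms` are hypothetical names; unlike the original it skips the integer truncation of `cv::Rect`, the clipping to the image border and the +1 pixel convention of `InterRectArea()`, so results can differ slightly for small boxes.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Box { float x, y, w, h; };       // top-left corner plus size, in pixels
struct Det { Box box; float score; };   // decoded face box and its confidence

// With one aspect ratio of 1.0, anchors reduce to squares of side scale * base_size.
std::vector<float> anchorSides(int base_size, const std::vector<float>& scales) {
    assert(base_size > 0);
    std::vector<float> sides;
    for (float s : scales) sides.push_back(s * base_size);
    return sides;
}

// Shift the anchor centre by (dx, dy) times the anchor size, scale width/height
// by exp(dw) / exp(dh), then map from network input back to the source image.
Box decodeBox(const Box& anchor, float dx, float dy, float dw, float dh,
              float factor_x, float factor_y) {
    float cx = anchor.x + anchor.w * 0.5f + dx * anchor.w;
    float cy = anchor.y + anchor.h * 0.5f + dy * anchor.h;
    float w = std::exp(dw) * (anchor.w + 1);
    float h = std::exp(dh) * (anchor.h + 1);
    return { (cx - w * 0.5f) * factor_x, (cy - h * 0.5f) * factor_y,
             w * factor_x, h * factor_y };
}

// Intersection over union with continuous coordinates.
float iou(const Box& a, const Box& b) {
    float ix = std::max(0.0f, std::min(a.x + a.w, b.x + b.w) - std::max(a.x, b.x));
    float iy = std::max(0.0f, std::min(a.y + a.h, b.y + b.h) - std::max(a.y, b.y));
    float inter = ix * iy;
    float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy NMS: keep the best-scoring box, discard remaining boxes whose IoU with it
// exceeds `threshold`, and repeat with the next surviving box.
std::vector<Det> nms(std::vector<Det> dets, float threshold) {
    std::sort(dets.begin(), dets.end(),
              [](const Det& a, const Det& b) { return a.score > b.score; });
    std::vector<Det> kept;
    std::vector<bool> removed(dets.size(), false);
    for (std::size_t i = 0; i < dets.size(); ++i) {
        if (removed[i]) continue;
        kept.push_back(dets[i]);
        for (std::size_t j = i + 1; j < dets.size(); ++j)
            if (!removed[j] && iou(dets[i].box, dets[j].box) > threshold) removed[j] = true;
    }
    return kept;
}
```

With the project's `iouThreshold_` of 0.4, two 10x10 detections offset by one pixel (IoU 81/119, about 0.68) collapse to the higher-scoring one, while a distant box survives.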

3.3 Add the following code to the project's existing JNI source file (built as mask_jni.cpp in the CMake file below)

```cpp
#include <android/asset_manager_jni.h>
#include <android/bitmap.h>
#include <android/log.h>
#include <jni.h>

#include <string>
#include <vector>

// ncnn
#include "net.h"
#include "benchmark.h"

#include <opencv2/core/core.hpp>
#include <opencv2/imgproc/imgproc.hpp>

#include "faceMask.h"

// Copy an Android Bitmap (RGBA_8888 or RGB_565) into a 4-channel RGBA cv::Mat
void BitmapToMat2(JNIEnv *env, jobject& bitmap, cv::Mat& mat, jboolean needUnPremultiplyAlpha)
{
    AndroidBitmapInfo info;
    void *pixels = 0;
    cv::Mat &dst = mat;
    try {
        CV_Assert(AndroidBitmap_getInfo(env, bitmap, &info) >= 0);
        CV_Assert(info.format == ANDROID_BITMAP_FORMAT_RGBA_8888 ||
                  info.format == ANDROID_BITMAP_FORMAT_RGB_565);
        CV_Assert(AndroidBitmap_lockPixels(env, bitmap, &pixels) >= 0);
        CV_Assert(pixels);
        dst.create(info.height, info.width, CV_8UC4);
        if (info.format == ANDROID_BITMAP_FORMAT_RGBA_8888) {
            cv::Mat tmp(info.height, info.width, CV_8UC4, pixels);
            if (needUnPremultiplyAlpha) cvtColor(tmp, dst, cv::COLOR_mRGBA2RGBA);
            else tmp.copyTo(dst);
        } else {
            // info.format == ANDROID_BITMAP_FORMAT_RGB_565
            cv::Mat tmp(info.height, info.width, CV_8UC2, pixels);
            cvtColor(tmp, dst, cv::COLOR_BGR5652RGBA);
        }
        AndroidBitmap_unlockPixels(env, bitmap);
        return;
    } catch (const cv::Exception &e) {
        AndroidBitmap_unlockPixels(env, bitmap);
        jclass je = env->FindClass("org/opencv/core/CvException");
        if (!je) je = env->FindClass("java/lang/Exception");
        env->ThrowNew(je, e.what());
        return;
    } catch (...) {
        AndroidBitmap_unlockPixels(env, bitmap);
        jclass je = env->FindClass("java/lang/Exception");
        env->ThrowNew(je, "Unknown exception in JNI code {nBitmapToMat}");
        return;
    }
}

void BitmapToMat(JNIEnv *env, jobject& bitmap, cv::Mat& mat) {
    BitmapToMat2(env, bitmap, mat, false);
}

// Copy a 1-, 3- or 4-channel cv::Mat into an existing Android Bitmap of the same size
void MatToBitmap2(JNIEnv *env, cv::Mat& mat, jobject& bitmap, jboolean needPremultiplyAlpha)
{
    AndroidBitmapInfo info;
    void *pixels = 0;
    cv::Mat &src = mat;
    try {
        CV_Assert(AndroidBitmap_getInfo(env, bitmap, &info) >= 0);
        CV_Assert(info.format == ANDROID_BITMAP_FORMAT_RGBA_8888 ||
                  info.format == ANDROID_BITMAP_FORMAT_RGB_565);
        CV_Assert(src.dims == 2 && info.height == (uint32_t) src.rows &&
                  info.width == (uint32_t) src.cols);
        CV_Assert(src.type() == CV_8UC1 || src.type() == CV_8UC3 || src.type() == CV_8UC4);
        CV_Assert(AndroidBitmap_lockPixels(env, bitmap, &pixels) >= 0);
        CV_Assert(pixels);
        if (info.format == ANDROID_BITMAP_FORMAT_RGBA_8888)
        {
            cv::Mat tmp(info.height, info.width, CV_8UC4, pixels);
            if (src.type() == CV_8UC1)
                cvtColor(src, tmp, cv::COLOR_GRAY2RGBA);
            else if (src.type() == CV_8UC3)
                cvtColor(src, tmp, cv::COLOR_RGB2RGBA);
            else if (src.type() == CV_8UC4)
            {
                if (needPremultiplyAlpha)
                    cvtColor(src, tmp, cv::COLOR_RGBA2mRGBA);
                else
                    src.copyTo(tmp);
            }
        } else {
            // info.format == ANDROID_BITMAP_FORMAT_RGB_565
            cv::Mat tmp(info.height, info.width, CV_8UC2, pixels);
            if (src.type() == CV_8UC1)
                cvtColor(src, tmp, cv::COLOR_GRAY2BGR565);
            else if (src.type() == CV_8UC3)
                cvtColor(src, tmp, cv::COLOR_RGB2BGR565);
            else if (src.type() == CV_8UC4)
                cvtColor(src, tmp, cv::COLOR_RGBA2BGR565);
        }
        AndroidBitmap_unlockPixels(env, bitmap);
        return;
    } catch (const cv::Exception &e) {
        AndroidBitmap_unlockPixels(env, bitmap);
        jclass je = env->FindClass("org/opencv/core/CvException");
        if (!je) je = env->FindClass("java/lang/Exception");
        env->ThrowNew(je, e.what());
        return;
    } catch (...) {
        AndroidBitmap_unlockPixels(env, bitmap);
        jclass je = env->FindClass("java/lang/Exception");
        env->ThrowNew(je, "Unknown exception in JNI code {nMatToBitmap}");
        return;
    }
}

void MatToBitmap(JNIEnv *env, cv::Mat& mat, jobject& bitmap)
{
    MatToBitmap2(env, mat, bitmap, false);
}

FaceMask *FaceMask::face_mask = nullptr;

extern "C" {

JNIEXPORT jint JNI_OnLoad(JavaVM* vm, void* reserved)
{
    __android_log_print(ANDROID_LOG_DEBUG, "photo", "JNI_OnLoad");
#if NCNN_VULKAN
    ncnn::create_gpu_instance();
#endif
    return JNI_VERSION_1_4;
}

JNIEXPORT void JNI_OnUnload(JavaVM* vm, void* reserved)
{
    __android_log_print(ANDROID_LOG_DEBUG, "photo", "JNI_OnUnload");
    // Release the network before the GPU instance it may use
    delete FaceMask::face_mask;
    FaceMask::face_mask = nullptr;
#if NCNN_VULKAN
    ncnn::destroy_gpu_instance();
#endif
}

// Load the model
JNIEXPORT jboolean JNICALL Java_com_dashu_photo_Mask_Init(JNIEnv* env, jobject thiz, jobject assetManager)
{
    AAssetManager *mgr = AAssetManager_fromJava(env, assetManager);
    if (mgr == nullptr)
        return JNI_FALSE;
    bool use_gpu = false;   // CPU inference; set to true to try GPU (Vulkan) inference
    delete FaceMask::face_mask;   // release the model from any previous Init() call
    FaceMask::face_mask = new FaceMask(mgr, use_gpu);
    return JNI_TRUE;
}

}

// Run inference on a Bitmap and draw the results into it
extern "C" JNIEXPORT jobject JNICALL Java_com_dashu_photo_Mask_maskDetect(JNIEnv *env, jobject, jobject image)
{
    if (FaceMask::face_mask == nullptr)
        return image;   // Init() has not been called yet

    cv::Mat cv_src, cv_doc;
    // Bitmap -> RGBA cv::Mat
    BitmapToMat(env, image, cv_src);
    if (cv_src.empty())
        return image;   // conversion failed; a Java exception is already pending
    // Drop the alpha channel (same conversion code as COLOR_BGRA2BGR); order stays R, G, B
    cv::cvtColor(cv_src, cv_doc, cv::COLOR_RGBA2RGB);

    std::vector<FaceInfo> faces;
    double start = static_cast<double>(cv::getTickCount());
    FaceMask::face_mask->detectFace(cv_doc, &faces);
    double end = static_cast<double>(cv::getTickCount());
    double time_cost = (end - start) / cv::getTickFrequency() * 1000;   // milliseconds
    std::string time = std::to_string(time_cost) + " ms";
    cv::putText(cv_doc, time, cv::Point(10, 20), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 255, 0), 2);

    for (const FaceInfo& face : faces)
    {
        // cv_doc is RGB: green box with a mask, red box without one
        std::string label = (face.mask_ ? "mask = " : "No Mask = ") + std::to_string(face.mask_prod);
        cv::Scalar box_color = face.mask_ ? cv::Scalar(0, 255, 0) : cv::Scalar(255, 0, 0);
        cv::putText(cv_doc, label, face.location_.tl(), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 255), 1);
        cv::rectangle(cv_doc, face.location_, box_color, 2);
    }

    // Write the annotated image back into the input Bitmap
    MatToBitmap(env, cv_doc, image);
    return image;
}
```
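A note on channel order in this path: an `ARGB_8888` bitmap is stored in memory as R, G, B, A bytes (`ANDROID_BITMAP_FORMAT_RGBA_8888`), so `BitmapToMat()` yields an RGBA `cv::Mat`. `cv::COLOR_BGRA2BGR` and `cv::COLOR_RGBA2RGB` are the same conversion code and only drop the fourth channel, so `cv_doc` reaches `detectFace()` in R, G, B order, where `from_pixels_resize()` with `PIXEL_BGR2RGB` then swaps R and B. Whether that swap is right depends on the channel order the model was trained with, so it is worth verifying. A byte-level sketch of the alpha drop (hypothetical `dropAlpha`, standard C++ only):

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Drops every fourth byte of an interleaved 4-channel buffer. The first three
// channels keep their order, so RGBA input becomes RGB, never BGR.
std::vector<std::uint8_t> dropAlpha(const std::vector<std::uint8_t>& rgba) {
    assert(rgba.size() % 4 == 0);
    std::vector<std::uint8_t> rgb;
    rgb.reserve(rgba.size() / 4 * 3);
    for (std::size_t i = 0; i < rgba.size(); i += 4) {
        rgb.push_back(rgba[i]);      // R
        rgb.push_back(rgba[i + 1]);  // G
        rgb.push_back(rgba[i + 2]);  // B; the alpha byte at i + 3 is discarded
    }
    return rgb;
}
```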

That completes the C++ part.
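`maskDetect()` times a single `detectFace()` call with `cv::getTickCount()`, and a first call often includes one-off setup cost. To compare against the 10 FPS figure from the model-training section (10 FPS means an average of 100 ms per frame), timing several calls after a warm-up run gives a steadier number. A standard-C++ sketch with hypothetical helpers `averageLatencyMs` and `fpsFromLatencyMs`:

```cpp
#include <cassert>
#include <chrono>
#include <functional>

// Average latency in milliseconds over `iterations` calls of `frame`, after one
// untimed warm-up call. `frame` stands in for one inference call.
double averageLatencyMs(const std::function<void()>& frame, int iterations) {
    assert(iterations > 0);
    frame();  // warm-up, not timed
    auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < iterations; ++i) frame();
    auto end = std::chrono::steady_clock::now();
    std::chrono::duration<double, std::milli> total = end - start;
    return total.count() / iterations;
}

// Frames per second implied by an average latency: 100 ms per frame <=> 10 FPS.
double fpsFromLatencyMs(double latency_ms) {
    assert(latency_ms > 0.0);
    return 1000.0 / latency_ms;
}
```

Inside `maskDetect()` this could be called as `averageLatencyMs([&] { FaceMask::face_mask->detectFace(cv_doc, &faces); }, 10)`.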

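4. Write the CMakeLists.txt file

```cmake
cmake_minimum_required(VERSION 3.4.1)
project(photo)

# Import the ncnn library (prebuilt package copied into the cpp directory, one folder per ABI)
set(ncnn_DIR ${CMAKE_SOURCE_DIR}/ncnn/${ANDROID_ABI}/lib/cmake/ncnn)
find_package(ncnn REQUIRED)

# Import OpenCV (opencv-mobile Android package copied into the cpp directory)
set(OpenCV_DIR ${CMAKE_SOURCE_DIR}/opencv/sdk/native/jni)
find_package(OpenCV REQUIRED core imgproc)

# Build the JNI library from the C++ sources
add_library(${PROJECT_NAME} SHARED faceMask.cpp mask_jni.cpp)

# jnigraphics: AndroidBitmap_*; android: AAssetManager_fromJava; log: __android_log_print
target_link_libraries(${PROJECT_NAME} ncnn ${OpenCV_LIBS} jnigraphics android log)
```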

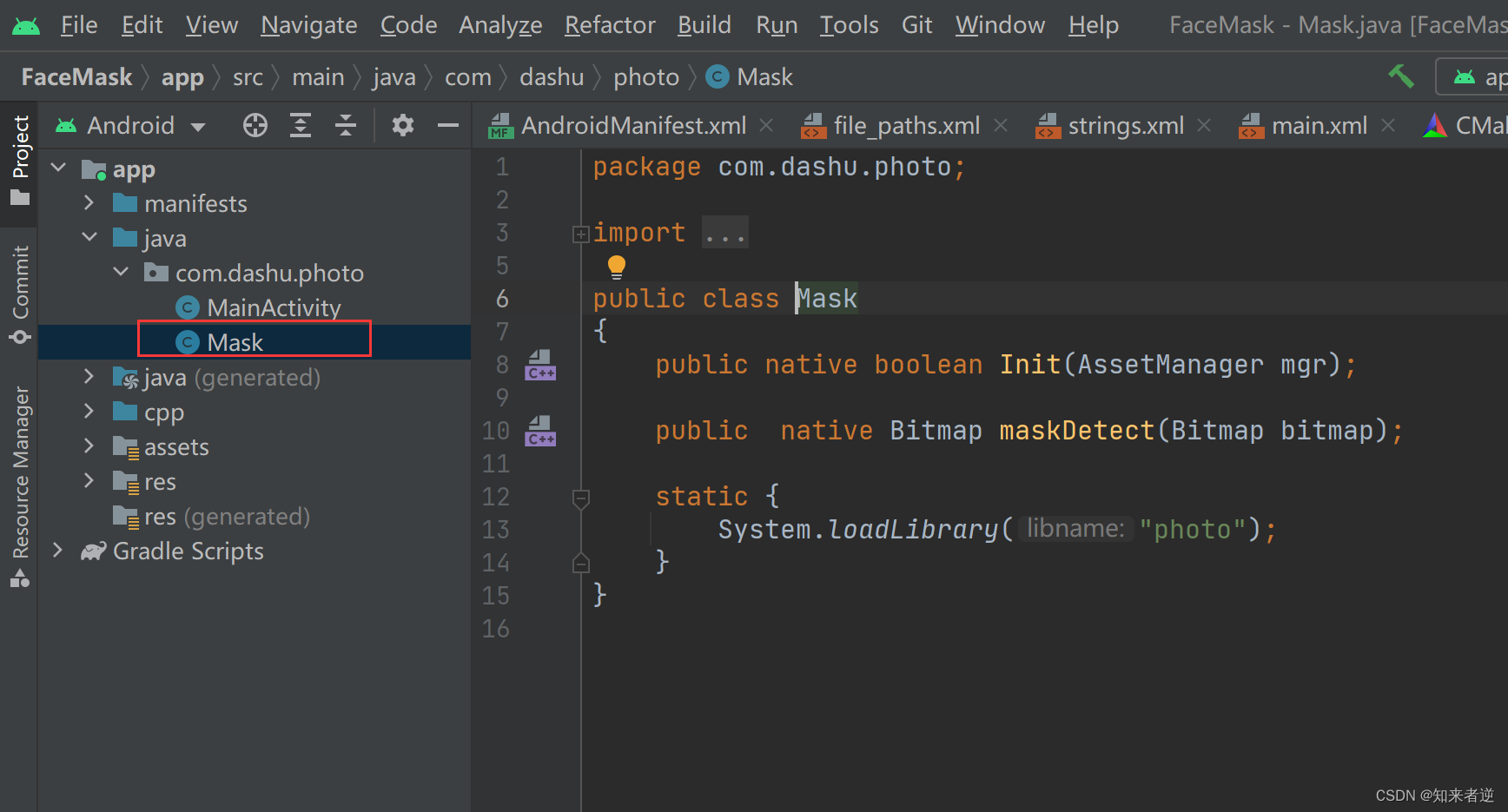

5. Add the Java interface class

```java
package com.dashu.photo;

import android.content.res.AssetManager;
import android.graphics.Bitmap;

public class Mask
{
    public native boolean Init(AssetManager mgr);

    public native Bitmap maskDetect(Bitmap bitmap);

    static {
        System.loadLibrary("photo");
    }
}
```
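The native function names in the JNI source are not arbitrary: the JVM resolves `Mask.Init` and `Mask.maskDetect` by looking up symbols built from the package, class and method names, so `Java_com_dashu_photo_Mask_maskDetect` has to change if the package `com.dashu.photo` or the class `Mask` is renamed. A sketch of the naming rule (hypothetical `jniSymbol`; it only handles the `_1` escape for underscores and leaves out the escapes for non-ASCII characters and overloaded signatures):

```cpp
#include <cassert>
#include <string>

// "Java_" + class name with '.' -> '_' + "_" + method name; a literal '_' becomes "_1".
std::string jniSymbol(const std::string& qualified_class, const std::string& method) {
    assert(!qualified_class.empty() && !method.empty());
    auto mangle = [](const std::string& s) {
        std::string out;
        for (char c : s) {
            if (c == '.') out += '_';
            else if (c == '_') out += "_1";
            else out += c;
        }
        return out;
    };
    return "Java_" + mangle(qualified_class) + "_" + mangle(method);
}
```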

6. Java implementation (MainActivity)

```java
package com.dashu.photo;

import android.app.Activity;
import android.content.Intent;
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.graphics.Matrix;
import android.media.ExifInterface;
import android.net.Uri;
import android.os.Bundle;
import android.util.Log;
import android.view.View;
import android.widget.ImageButton;
import android.widget.ImageView;

import java.io.IOException;
import java.io.InputStream;

public class MainActivity extends Activity
{
    private static final int SELECT_IMAGE = 1;

    private ImageView imageView;
    private Bitmap bitmap = null;
    private Bitmap temp = null;
    private Bitmap showImage = null;
    private Bitmap bitmapCopy = null;
    private Bitmap dst = null;
    boolean useGPU = true;
    private Mask mask = new Mask();

    @Override
    public void onCreate(Bundle savedInstanceState)
    {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.main);
        imageView = (ImageView) findViewById(R.id.imageView);

        boolean ret_init = mask.Init(getAssets());
        if (!ret_init)
        {
            Log.e("MainActivity", "Mask Init failed");
        }

        // Open an image from the gallery
        ImageButton openFile = (ImageButton) findViewById(R.id.btn_open_images);
        openFile.setOnClickListener(new View.OnClickListener()
        {
            @Override
            public void onClick(View arg0)
            {
                Intent i = new Intent(Intent.ACTION_PICK);
                i.setType("image/*");
                startActivityForResult(i, SELECT_IMAGE);
            }
        });

        // Run mask detection on the selected image
        ImageButton image_gray = (ImageButton) findViewById(R.id.btn_detection);
        image_gray.setOnClickListener(new View.OnClickListener()
        {
            @Override
            public void onClick(View arg0)
            {
                if (showImage == null)
                {
                    return;
                }
                // maskDetect() draws into the Bitmap it receives, so pass a fresh
                // mutable copy and keep showImage unannotated for repeated runs
                Bitmap result = mask.maskDetect(showImage.copy(Bitmap.Config.ARGB_8888, true));
                imageView.setImageBitmap(result);
            }
        });
    }

    @Override
    protected void onActivityResult(int requestCode, int resultCode, Intent data)
    {
        super.onActivityResult(requestCode, resultCode, data);
        if (resultCode == RESULT_OK && null != data && requestCode == SELECT_IMAGE)
        {
            Uri selectedImage = data.getData();
            try
            {
                bitmap = decodeUri(selectedImage);
                showImage = bitmap.copy(Bitmap.Config.ARGB_8888, true);
                bitmapCopy = bitmap.copy(Bitmap.Config.ARGB_8888, true);
                imageView.setImageBitmap(bitmap);
            }
            catch (IOException e)
            {
                Log.e("MainActivity", "Failed to load the selected image", e);
            }
        }
    }

    private Bitmap decodeUri(Uri selectedImage) throws IOException
    {
        // Decode the image size only
        BitmapFactory.Options o = new BitmapFactory.Options();
        o.inJustDecodeBounds = true;
        try (InputStream in = getContentResolver().openInputStream(selectedImage)) {
            BitmapFactory.decodeStream(in, null, o);
        }

        // The new size we want to scale to
        final int REQUIRED_SIZE = 640;

        // Find the correct scale value. It should be a power of 2.
        int width_tmp = o.outWidth, height_tmp = o.outHeight;
        int scale = 1;
        while (true) {
            if (width_tmp / 2 < REQUIRED_SIZE || height_tmp / 2 < REQUIRED_SIZE) {
                break;
            }
            width_tmp /= 2;
            height_tmp /= 2;
            scale *= 2;
        }

        // Decode with inSampleSize
        BitmapFactory.Options o2 = new BitmapFactory.Options();
        o2.inSampleSize = scale;
        Bitmap bitmap;
        try (InputStream in = getContentResolver().openInputStream(selectedImage)) {
            bitmap = BitmapFactory.decodeStream(in, null, o2);
        }
        if (bitmap == null) {
            throw new IOException("Unable to decode " + selectedImage);
        }

        // Rotate according to EXIF (ExifInterface(InputStream) requires API 24+)
        int rotate = 0;
        try (InputStream in = getContentResolver().openInputStream(selectedImage)) {
            ExifInterface exif = new ExifInterface(in);
            int orientation = exif.getAttributeInt(ExifInterface.TAG_ORIENTATION, ExifInterface.ORIENTATION_NORMAL);
            switch (orientation) {
                case ExifInterface.ORIENTATION_ROTATE_270:
                    rotate = 270;
                    break;
                case ExifInterface.ORIENTATION_ROTATE_180:
                    rotate = 180;
                    break;
                case ExifInterface.ORIENTATION_ROTATE_90:
                    rotate = 90;
                    break;
            }
        }
        catch (IOException e)
        {
            Log.e("MainActivity", "ExifInterface IOException");
        }

        Matrix matrix = new Matrix();
        matrix.postRotate(rotate);
        return Bitmap.createBitmap(bitmap, 0, 0, bitmap.getWidth(), bitmap.getHeight(), matrix, true);
    }
}
```
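The inSampleSize loop in `decodeUri()` keeps halving while both halved sides would still be at least 640, so a large photo is decoded at roughly 1/`scale` of its size with both sides staying at or above 640. The same arithmetic ported to C++ for a worked example (hypothetical `sampleSizeFor`):

```cpp
#include <cassert>

// Power-of-two sample size: halve while both halved sides stay >= required_size.
int sampleSizeFor(int width, int height, int required_size) {
    assert(width > 0 && height > 0 && required_size > 0);
    int scale = 1;
    while (width / 2 >= required_size && height / 2 >= required_size) {
        width /= 2;
        height /= 2;
        scale *= 2;
    }
    return scale;
}
```

A 4000x3000 photo gives a sample size of 4 (decoded at about 1000x750), while a 640x480 image is decoded at full size.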

7. Layout file (res/layout/main.xml)

```xml
<?xml version="1.0" encoding="utf-8"?>
<LinearLayout
    xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:orientation="vertical"
    tools:context=".MainActivity">

    <ImageView
        android:id="@+id/imageView"
        android:layout_width="match_parent"
        android:layout_height="0dp"
        android:layout_weight="1">
    </ImageView>

    <LinearLayout
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:orientation="horizontal">

        <ImageButton
            android:id="@+id/btn_open_images"
            android:layout_width="0dp"
            android:layout_height="wrap_content"
            android:layout_weight="1"
            android:src="@mipmap/open_images" />

        <ImageButton
            android:id="@+id/btn_detection"
            android:layout_width="0dp"
            android:layout_height="wrap_content"
            android:layout_weight="1"
            android:src="@mipmap/detection" />

    </LinearLayout>

</LinearLayout>
```

III. Results

1. Wearing a mask

2. Not wearing a mask