1. Paper link

https://arxiv.org/pdf/1707.01083.pdf

2. ShuffleNet: Megvii's lightweight convolutional neural network

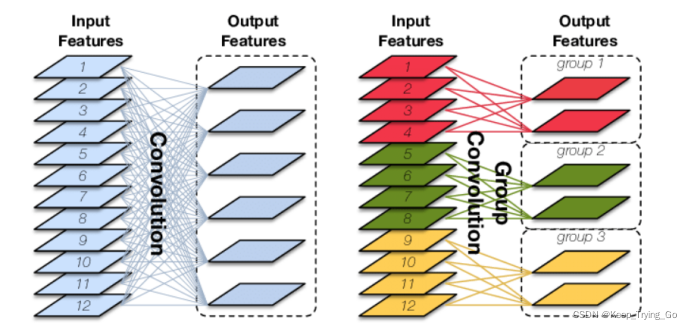

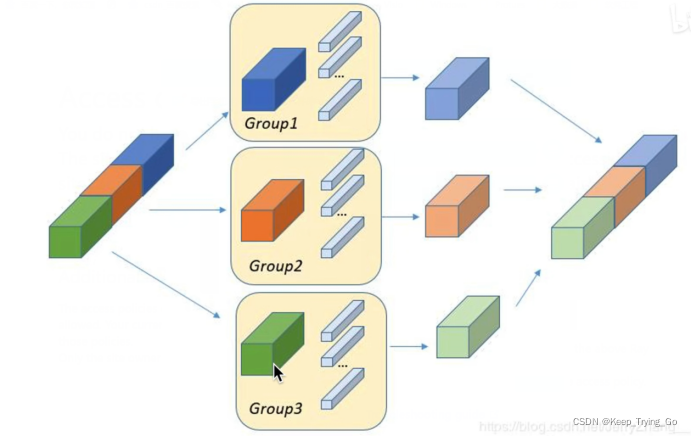

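Grouped convolution: each filter no longer processes all input channels, only a subset of them.

(1) Grouped convolution

Left, standard convolution: 3 x 3 x 12 x 6 = 648 parameters. Right, grouped convolution with 3 groups: 3 x 3 x (12/3) x (6/3) x 3 = 216 parameters.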
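The parameter counts above can be reproduced with a small helper. This is a minimal sketch in plain Python; the function name `conv_params` is made up for illustration, and bias terms are ignored, as in the figures quoted above.

```python
def conv_params(kernel_h, kernel_w, in_channels, out_channels, groups=1):
    """Weight count (no bias) of a 2D convolution split into `groups` groups."""
    if in_channels % groups or out_channels % groups:
        raise ValueError("in_channels and out_channels must be divisible by groups")
    # Each group maps in_channels/groups inputs to out_channels/groups outputs.
    per_group = kernel_h * kernel_w * (in_channels // groups) * (out_channels // groups)
    return per_group * groups


standard = conv_params(3, 3, 12, 6)            # standard convolution
grouped = conv_params(3, 3, 12, 6, groups=3)   # same layer split into 3 groups
print(standard, grouped, standard // grouped)  # 648 216 3
```

With g groups the weight count drops by a factor of g, which is why the grouped layer here needs a third of the parameters.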

https://programmersought.com/article/27351093852/

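Grouped 1x1 convolution: a 1x1 (pointwise) convolution computed as a grouped convolution. Because each group only sees its own input channels, a grouped convolution does not mix information across groups, so the groups lose contact with one another. The channel shuffle described below restores the exchange of information between groups.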

Note: early forms of grouped convolution already appear in LeNet5 and AlexNet:

LeNet5 paper:

http://yann.lecun.com/exdb/publis/pdf/lecun-01a.pdf

LeNet5 architecture walkthrough:

https://mydreamambitious.blog.csdn.net/article/details/123976153

AlexNet paper:

http://www.cs.toronto.edu/~fritz/absps/imagenet.pdf

AlexNet architecture walkthrough:

https://mydreamambitious.blog.csdn.net/article/details/124077928

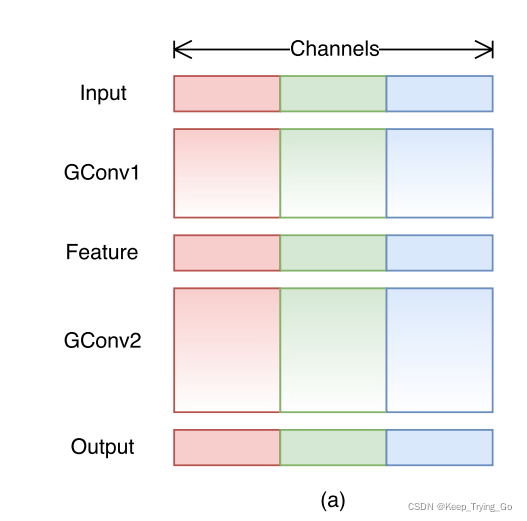

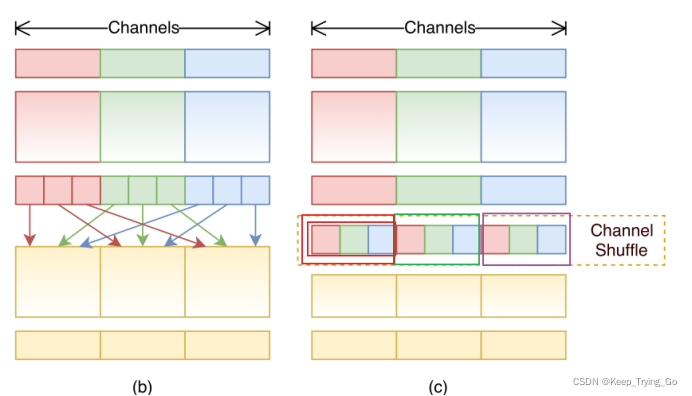

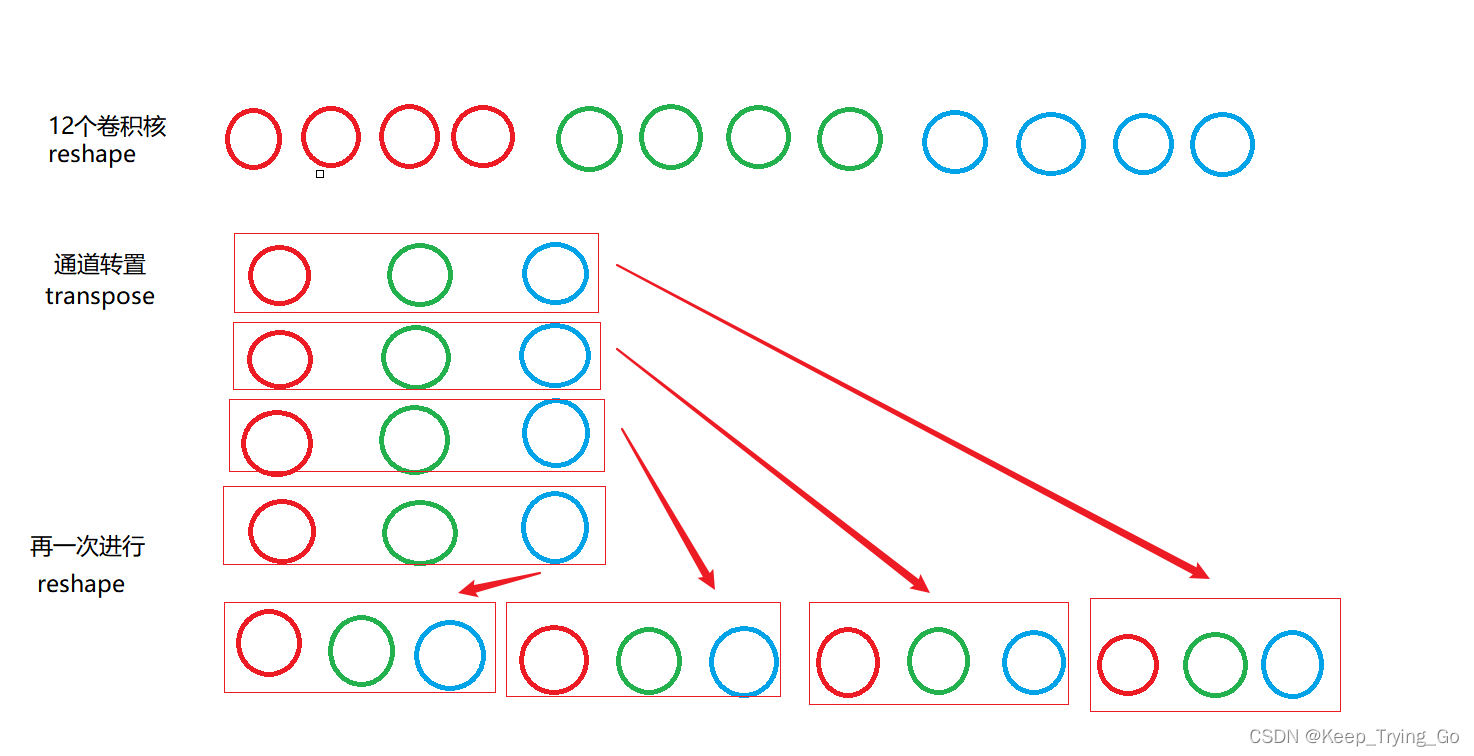

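(2) Channel Shuffle

How channel shuffle is implemented: the channel axis is reshaped into (groups, channels per group), the two axes are transposed, and the result is flattened back into a single channel axis.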

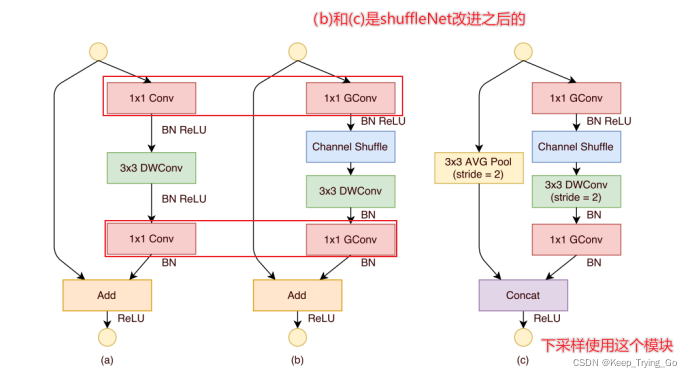

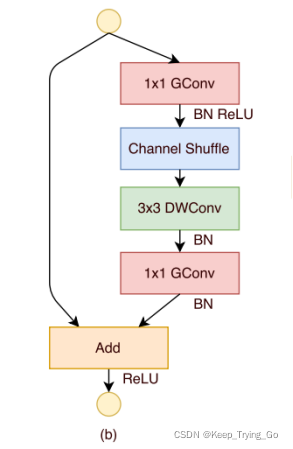
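To make the reordering concrete, here is a minimal sketch of channel shuffle on a flat list of channel labels, in plain Python. The helper `shuffle_channels` and the labels are illustrative only; on real feature maps the same reordering is applied along the channel axis.

```python
def shuffle_channels(channels, groups):
    """Reorder a flat list of channels the way reshape -> transpose -> flatten does."""
    n = len(channels)
    if n % groups:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    # Reshape to a (groups, per_group) grid: row g holds the channels of group g.
    grid = [channels[g * per_group:(g + 1) * per_group] for g in range(groups)]
    # Transpose to (per_group, groups) and flatten row by row.
    return [grid[g][i] for i in range(per_group) for g in range(groups)]


# Three groups (a, b, c) with two channels each.
print(shuffle_channels(["a0", "a1", "b0", "b1", "c0", "c1"], groups=3))
# ['a0', 'b0', 'c0', 'a1', 'b1', 'c1']
```

After the shuffle, neighbouring channels come from different groups, so the next grouped convolution receives inputs that originate from more than one group.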

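3. ShuffleNet unit

(a) The 3x3 convolution of the ResNet bottleneck is replaced with a 3x3 depthwise convolution.

(b) The 1x1 convolutions in (a) are replaced with 1x1 grouped convolutions, and a channel shuffle follows the first one.

(c) The 3x3 depthwise convolution in (b) uses stride 2. The shortcut has to match the reduced spatial size, so it is downsampled with a stride-2 3x3 average pooling (AveragePooling2D), and the element-wise addition is replaced by a channel concatenation.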
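The channel bookkeeping of units (b) and (c) can be sketched as follows. This is an illustrative helper in plain Python, not part of any library: the name `unit_channels` is hypothetical, and the bottleneck width is assumed to be a quarter of the output width, matching the `filter//4` used in the TensorFlow code of this post.

```python
def unit_channels(in_channels, out_channels, stride):
    """Channel widths along a ShuffleNet unit, assuming bottleneck = out_channels // 4."""
    bottleneck = out_channels // 4
    if stride == 1:
        # Unit (b): the shortcut is added element-wise, so widths must match.
        if in_channels != out_channels:
            raise ValueError("stride-1 unit needs in_channels == out_channels")
        branch_out = out_channels
    else:
        # Unit (c): the pooled shortcut is concatenated, so the branch only
        # produces the channels that the shortcut does not already supply.
        branch_out = out_channels - in_channels
    return {"bottleneck": bottleneck, "branch_out": branch_out, "unit_out": out_channels}


# First unit of stage 2 in the model of this post: 24 -> 240 channels, stride 2.
print(unit_channels(24, 240, stride=2))
# A following stride-1 unit keeps 240 channels.
print(unit_channels(240, 240, stride=1))
```

For the stride-2 unit the branch produces 216 channels, and concatenating the 24 pooled shortcut channels gives the 240 output channels.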

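4. Computation cost of ResNet, ResNeXt and ShuffleNet

Assume an input feature map of size h x w x c, and let m be the bottleneck width, that is, the number of channels handled by the 1x1 and 3x3 kernels inside the unit.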

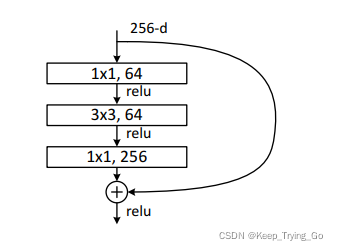

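(1) ResNet

FLOPs: h x w x m x (1 x 1 x c) + h x w x m x (3 x 3 x m) + h x w x c x (1 x 1 x m) = hw(2cm + 9m^2)

Note: m = 64. A 1x1 convolution first reduces the channels (c => m), a 3x3 convolution follows, and a final 1x1 convolution restores the channels (m => c).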

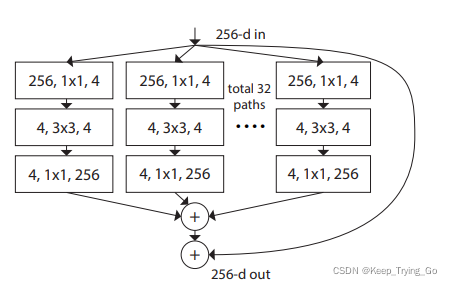

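(2) ResNeXt

FLOPs: g x h x w x m x (1 x 1 x c) + g x h x w x m x (3 x 3 x m) + g x h x w x c x (1 x 1 x m)

Note: g is the number of groups and m = 4 is the width of each group, so the total bottleneck width is M = g x m and the sum equals hw(2cM + 9M^2/g). A 1x1 convolution reduces the channels, a grouped 3x3 convolution follows, and a 1x1 convolution restores the channels.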

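(3) ShuffleNet

FLOPs: g x h x w x (m/g) x (1 x 1 x c/g) + h x w x m x (3 x 3 x 1) + g x h x w x (c/g) x (1 x 1 x m/g) = hw(2cm/g + 9m)

Note: g is the number of groups and m is the total number of bottleneck channels. A grouped 1x1 convolution first reduces the channels, a 3x3 depthwise convolution follows, and a grouped 1x1 convolution restores the channels.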
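As a worked example, the three costs can be evaluated side by side. The sketch below is plain Python with hypothetical function names. In all three functions m denotes the total number of bottleneck channels, so for a ResNeXt block with g groups of width 4 you would pass m = 4g. The sizes used (28 x 28 x 240 input, m = 60, g = 3) are illustrative values chosen to resemble stage 2 of the model in this post.

```python
def resnet_flops(h, w, c, m):
    # 1x1 reduce (c -> m), 3x3 convolution (m -> m), 1x1 expand (m -> c)
    return h * w * (c * m + 9 * m * m + m * c)


def resnext_flops(h, w, c, m, g):
    # Same layout, but the 3x3 convolution is split into g groups.
    return h * w * (c * m + 9 * m * m // g + m * c)


def shufflenet_flops(h, w, c, m, g):
    # Grouped 1x1 reduce, 3x3 depthwise convolution, grouped 1x1 expand.
    return h * w * (c * m // g + 9 * m + m * c // g)


h, w, c, m, g = 28, 28, 240, 60, 3
for name, flops in [
    ("ResNet", resnet_flops(h, w, c, m)),
    ("ResNeXt", resnext_flops(h, w, c, m, g)),
    ("ShuffleNet", shufflenet_flops(h, w, c, m, g)),
]:
    print(f"{name:10s} {flops / 1e6:.2f} M multiply-adds")
```

Under these assumptions the ShuffleNet unit costs roughly a sixth of the ResNet bottleneck, because both 1x1 convolutions shrink by a factor of g and the 3x3 term drops from 9m^2 to 9m.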

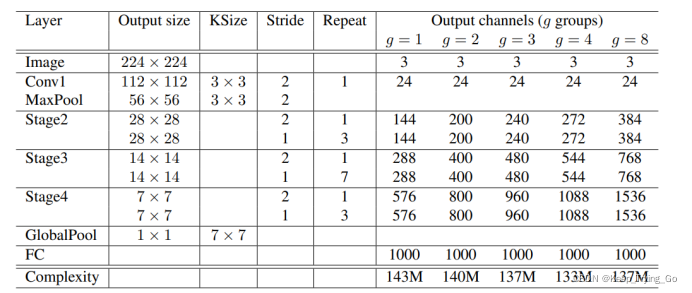

5. ShuffleNet architecture

6. Experimental results

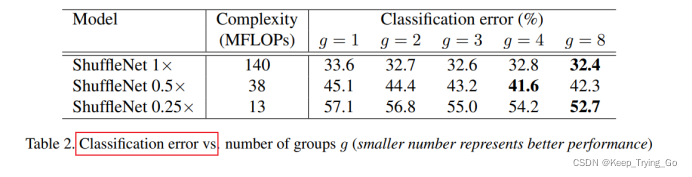

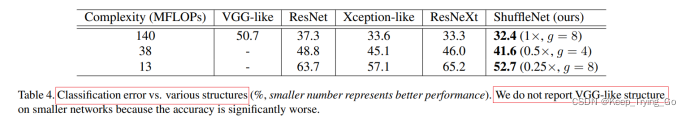

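(1) Comparison 1

The table shows that using more groups helps most for the narrower networks (those with fewer filters), where the improvement is clear.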

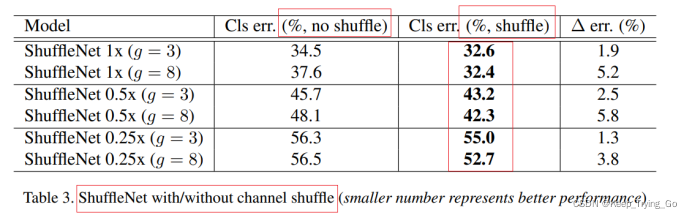

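(2) Comparison across network widths

The table shows that the models with channel shuffle perform clearly better than those without it, and that the narrower networks (fewer filters) also perform well.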

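(3) Comparison against adapted versions of other models

To compare against the paper's model, the other architectures were adapted to a comparable computation budget. ShuffleNet clearly outperforms them.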

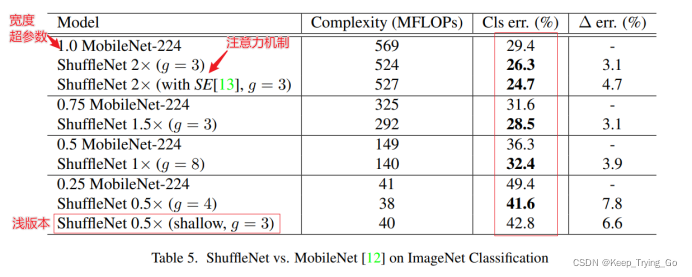

(4) ShuffleNet versus MobileNet

ShuffleNet outperforms MobileNet, with a lower classification error for both the deeper and the shallower variants.

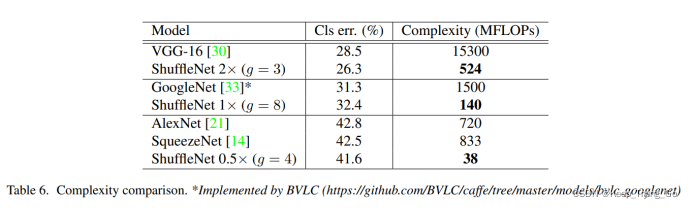

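(5) Comparison with classic models

Compared with VGG-16, ShuffleNet is more accurate and needs far less computation. Compared with GoogLeNet, its accuracy is slightly lower, but it needs far less computation. Compared with AlexNet and with SqueezeNet, it is slightly more accurate and again needs far less computation. Overall, ShuffleNet delivers good accuracy at a much lower computational cost, which is what makes it a lightweight network.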

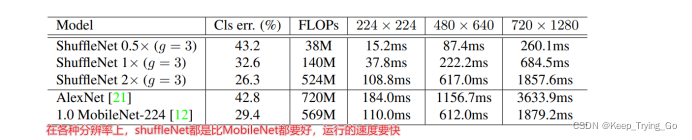

8. TensorFlow implementation

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers


# Channel shuffle
def Shuffle_Channels(inputs, groups):
    # Static shape of the NHWC feature map: batch, height, width, channels
    batch, h, w, c = inputs.shape
    # Split the channel axis into (groups, channels per group)
    input_reshape = tf.reshape(inputs, [-1, h, w, groups, c // groups])
    # Swap the two channel sub-axes
    input_transposed = tf.transpose(input_reshape, [0, 1, 2, 4, 3])
    # Flatten back into a single channel axis
    output_reshape = tf.reshape(input_transposed, [-1, h, w, c])
    return output_reshape
```

```python
class ShuffleNetUnitB(tf.keras.Model):
    """Stride-1 unit: the branch output is added to the identity shortcut."""

    def __init__(self, filters, groups):
        super(ShuffleNetUnitB, self).__init__()
        self.groups = groups
        # Grouped 1x1 convolution down to the bottleneck width (filters // 4)
        self.conv11 = layers.Conv2D(filters // 4, kernel_size=[1, 1], strides=[1, 1], groups=groups, padding='same')
        self.conv11batch = layers.BatchNormalization()
        self.conv11relu = layers.Activation('relu')
        # 3x3 depthwise convolution, followed by batch norm only
        self.depthwise = layers.DepthwiseConv2D(kernel_size=[3, 3], strides=[1, 1], padding='same')
        self.depthwisebatch = layers.BatchNormalization()
        # Grouped 1x1 convolution back up to `filters` channels
        self.conv22 = layers.Conv2D(filters, kernel_size=[1, 1], strides=[1, 1], groups=groups, padding='same')
        self.conv22batch = layers.BatchNormalization()

    def call(self, inputs, training=None):
        x = self.conv11(inputs)
        x = self.conv11batch(x, training=training)
        x = self.conv11relu(x)
        x = Shuffle_Channels(x, self.groups)
        x = self.depthwise(x)
        x = self.depthwisebatch(x, training=training)
        x = self.conv22(x)
        x = self.conv22batch(x, training=training)
        # Residual connection: inputs must already have `filters` channels
        x_out = tf.add(inputs, x)
        return tf.nn.relu(x_out)
```

```python
class ShuffleNetUnitC(tf.keras.Model):
    """Stride-2 unit: the branch output is concatenated with the pooled shortcut."""

    def __init__(self, filters, groups, inputFilter):
        super(ShuffleNetUnitC, self).__init__()
        self.groups = groups
        # The shortcut contributes inputFilter channels, so the branch supplies the rest
        self.outputChannel = filters - inputFilter
        self.conv11 = layers.Conv2D(filters // 4, kernel_size=[1, 1], strides=[1, 1], groups=groups, padding='same')
        self.conv11batch = layers.BatchNormalization()
        self.conv11relu = layers.Activation('relu')
        # Stride-2 depthwise convolution halves the spatial size
        self.depthwise = layers.DepthwiseConv2D(kernel_size=[3, 3], strides=[2, 2], padding='same')
        self.depthwisebatch = layers.BatchNormalization()
        self.conv22 = layers.Conv2D(self.outputChannel, kernel_size=[1, 1], strides=[1, 1], groups=groups, padding='same')
        self.conv22batch = layers.BatchNormalization()
        # Shortcut path: stride-2 3x3 average pooling to match the branch's spatial size
        self.avgpool = layers.AveragePooling2D(pool_size=[3, 3], strides=[2, 2], padding='same')

    def call(self, inputs, training=None):
        x = self.conv11(inputs)
        x = self.conv11batch(x, training=training)
        x = self.conv11relu(x)
        x = Shuffle_Channels(x, self.groups)
        x = self.depthwise(x)
        x = self.depthwisebatch(x, training=training)
        x = self.conv22(x)
        x = self.conv22batch(x, training=training)
        x_avgpool3x3 = self.avgpool(inputs)
        # Concatenate branch and shortcut along the channel axis
        x_out = tf.concat([x, x_avgpool3x3], axis=3)
        return tf.nn.relu(x_out)
```

```python
class ShuffleNetV1(tf.keras.Model):
    def __init__(self, stage2Filter, stage3Filter, stage4Filter, groups, numlayers):
        super(ShuffleNetV1, self).__init__()
        self.groups = groups
        # Stem: stride-2 3x3 convolution, then stride-2 3x3 max pooling
        self.conv11 = layers.Conv2D(24, kernel_size=[3, 3], strides=[2, 2], padding='same')
        self.conv11batch = layers.BatchNormalization()
        self.conv11relu = layers.Activation('relu')
        self.maxpool = layers.MaxPool2D(pool_size=[3, 3], strides=[2, 2], padding='same')
        # Each stage takes the previous stage's output width as its input width
        self.Stage2 = self.StageX(numlayers[0], stage2Filter, 24, 2)
        self.Stage3 = self.StageX(numlayers[1], stage3Filter, stage2Filter, 3)
        self.Stage4 = self.StageX(numlayers[2], stage4Filter, stage3Filter, 4)
        self.globalavgpool = layers.GlobalAveragePooling2D()
        self.dense = layers.Dense(1000)
        self.softmax = layers.Activation('softmax')

    def StageX(self, numlayers, filters, inputFilter, index):
        # One stride-2 unit (C) followed by `numlayers` stride-1 units (B)
        stage = tf.keras.Sequential([], name='stage' + str(index))
        stage.add(ShuffleNetUnitC(filters, self.groups, inputFilter))
        for _ in range(numlayers):
            stage.add(ShuffleNetUnitB(filters, self.groups))
        return stage

    def call(self, inputs, training=None):
        x = self.conv11(inputs)
        x = self.conv11batch(x, training=training)
        x = self.conv11relu(x)
        x = self.maxpool(x)
        x = self.Stage2(x, training=training)
        x = self.Stage3(x, training=training)
        x = self.Stage4(x, training=training)
        x = self.globalavgpool(x)
        x = self.dense(x)
        x_out = self.softmax(x)
        return x_out
```

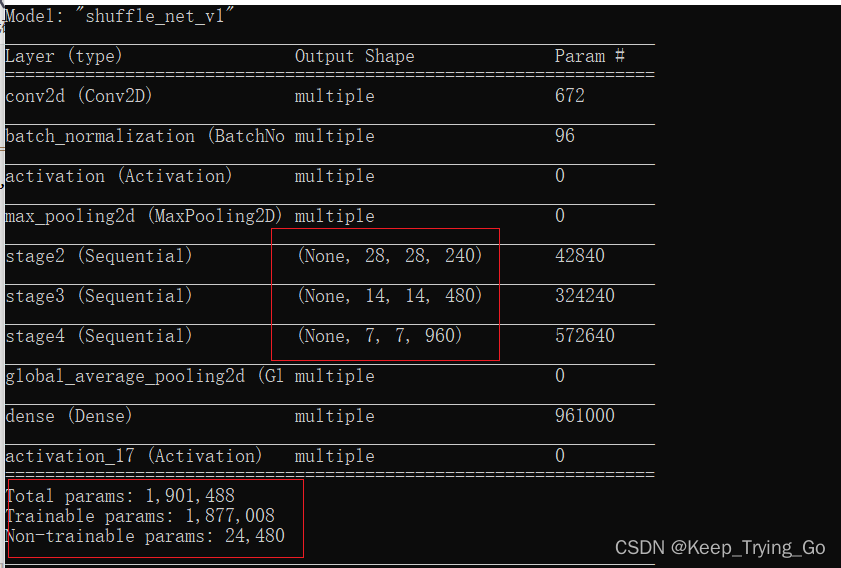

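```python
if __name__ == '__main__':
    # g = 3 configuration: stage widths 240/480/960, with 1 + 3, 1 + 7 and 1 + 3 units per stage
    model_shufflenet = ShuffleNetV1(240, 480, 960, groups=3, numlayers=[3, 7, 3])
    model_shufflenet.build(input_shape=(None, 224, 224, 3))
    model_shufflenet.summary()
```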
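The model above is built for g = 3. When trying other group counts, every grouped 1x1 convolution needs both its input channels and its filter count to be divisible by the number of groups, which Keras `Conv2D(groups=...)` enforces. The sketch below checks that condition in plain Python. The stage widths are the ones listed in the paper's architecture table; the helper `check_config`, the 24-channel stem and the `out // 4` bottleneck are assumptions taken from the code in this post.

```python
# Stage 2-4 output channels per group count, as listed in the paper's architecture table.
STAGE_WIDTHS = {
    1: (144, 288, 576),
    2: (200, 400, 800),
    3: (240, 480, 960),
    4: (272, 544, 1088),
    8: (384, 768, 1536),
}


def check_config(groups, stem_channels=24):
    """Return the widths that are not divisible by `groups` (empty list if all are fine)."""
    problems = []
    in_channels = stem_channels
    for out_channels in STAGE_WIDTHS[groups]:
        widths = {
            "input": in_channels,
            "bottleneck": out_channels // 4,
            "downsample branch": out_channels - in_channels,
            "output": out_channels,
        }
        for name, width in widths.items():
            if width % groups:
                problems.append((out_channels, name, width))
        in_channels = out_channels
    return problems


for g in STAGE_WIDTHS:
    print(g, check_config(g))
```

An empty list for a given g means that every grouped convolution in that configuration has channel counts compatible with the group count.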

Training and testing with the ShuffleNetV1 model is covered in the next article, which shows how ShuffleNetV1 performs in practice.

9. References

ShuffleNetV1 code:

https://github.com/megvii-model/ShuffleNet-Series

MobileNetV1:

https://mydreamambitious.blog.csdn.net/article/details/124560414

MobileNetV2:

https://mydreamambitious.blog.csdn.net/article/details/124617584

The role of 1x1 convolution:

https://mydreamambitious.blog.csdn.net/article/details/123027344

Grouped convolution:

https://www.cnblogs.com/shine-lee/p/10243114.html

Standard convolution and depthwise separable convolution:

https://mydreamambitious.blog.csdn.net/article/details/124503596

Formulas for grouped convolution:

https://www.cnblogs.com/hejunlin1992/p/12978988.html

ShuffleNetV1:

https://zhuanlan.zhihu.com/p/51566209