I. Extracting SIFT Feature Points from the Images

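import cv2
import numpy as np
import matplotlib.pyplot as plt

# `filename` is a placeholder: use the path of the first image here
# and the path of the second image on the next call.
img1 = cv2.imread(filename)

img2 = cv2.imread(filename)

# OpenCV loads images as BGR; convert to RGB so that matplotlib shows the right colors.
img1 = cv2.cvtColor(img1, cv2.COLOR_BGR2RGB)

img2 = cv2.cvtColor(img2, cv2.COLOR_BGR2RGB)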

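def extract_sift_features(image, num_features):
    '''Detect keypoints and compute their descriptors.'''
    # The very negative thresholds are used here to effectively disable SIFT's
    # contrast and edge filtering, so that low-contrast keypoints are kept as well.
    sift_detector = cv2.SIFT_create(num_features, contrastThreshold=-10000, edgeThreshold=-10000)
    gray = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)
    # Detect the keypoints and compute their descriptors in a single pass.
    kp, desc = sift_detector.detectAndCompute(gray, None)
    if desc is None:  # no keypoints were found, so there is nothing to slice
        return kp, desc
    return kp[:num_features], desc[:num_features]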

key_point_1, descriptor_1 = extract_sift_features(img1, 2000)

key_point_2, descriptor_2 = extract_sift_features(img2, 2000)

img_with_kp_1 = cv2.drawKeypoints(img1, key_point_1, outImage=None, flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

img_with_kp_2 = cv2.drawKeypoints(img2, key_point_2, outImage=None, flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

print(f'img1 find {len(key_point_1)} features')

print(f'img2 find {len(key_point_2)} features')

fig = plt.figure(figsize=(30, 15))

plt.subplot(121)

plt.imshow(img_with_kp_1)

plt.axis('off')

plt.subplot(122)

plt.imshow(img_with_kp_2)

plt.axis('off')

plt.show()

output:

img1 find 2000 features

img2 find 2000 features

II. Placing the Images Side by Side and Drawing the Match Lines

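bf = cv2.BFMatcher(cv2.NORM_L2, crossCheck=True)  # brute-force matcher with the L2 norm; crossCheck keeps only mutual best matches

cv_matches = bf.match(descriptor_1, descriptor_2)  # match the two descriptor sets; returns a list of cv2.DMatch objects

# Convert the keypoints and the matches to NumPy arrays.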

matches = np.array([[m.queryIdx, m.trainIdx] for m in cv_matches])

keyarr_1 = np.array([kp.pt for kp in key_point_1])

keyarr_2 = np.array([kp.pt for kp in key_point_2])
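To make the effect of `crossCheck=True` more concrete, here is a minimal NumPy sketch of mutual nearest-neighbour matching under the L2 norm. It is an illustration of the idea, not OpenCV's implementation; the function name `mutual_nearest_neighbors` and the toy descriptors are made up for this example, and the dense distance matrix makes it suitable for small inputs only.

```python
import numpy as np

def mutual_nearest_neighbors(desc_a, desc_b):
    """Return index pairs (i, j) where desc_a[i] and desc_b[j] are each other's nearest neighbour."""
    # Pairwise squared L2 distances, shape (len(desc_a), len(desc_b)).
    diff = desc_a[:, None, :].astype(np.float64) - desc_b[None, :, :].astype(np.float64)
    dist = np.sum(diff ** 2, axis=2)
    nn_ab = np.argmin(dist, axis=1)  # best match in b for every descriptor of a
    nn_ba = np.argmin(dist, axis=0)  # best match in a for every descriptor of b
    idx_a = np.arange(len(desc_a))
    keep = nn_ba[nn_ab] == idx_a     # keep only pairs that agree in both directions
    return np.stack([idx_a[keep], nn_ab[keep]], axis=1)

# Toy 2-D "descriptors" (hypothetical data, real SIFT descriptors have 128 dimensions).
toy_a = np.array([[0.0, 0.0], [10.0, 10.0], [5.0, 0.0]])
toy_b = np.array([[10.0, 9.0], [0.0, 1.0]])
pairs = mutual_nearest_neighbors(toy_a, toy_b)
print(pairs)
```

The third descriptor of `toy_a` is closest to `toy_b[1]`, but `toy_b[1]` prefers `toy_a[0]`, so that one-sided match is dropped. The returned array has the same `[queryIdx, trainIdx]` layout as `matches` above.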

def build_composite_image(im1, im2, axis=1, margin=0, background=1):
    '''Place two images of possibly different sizes on one canvas.

    Args:
        axis: 1 puts the images side by side, 0 stacks them vertically.
        margin: gap between the two images, in pixels.
        background: canvas color, 0 for black or 1 for white.

    Returns:
        composite: the combined image.
        (voff1, voff2): vertical (row) offsets of im1 and im2.
        (hoff1, hoff2): horizontal (column) offsets of im1 and im2.
    '''
    if background != 0 and background != 1:
        background = 1
    if axis != 0 and axis != 1:
        raise RuntimeError('Axis must be 0 (vertical) or 1 (horizontal)')

    h1, w1, _ = im1.shape
    h2, w2, _ = im2.shape

    if axis == 1:
        composite = np.full((max(h1, h2), w1 + w2 + margin, 3), 255 * background, dtype=np.uint8)
        # Center the shorter image vertically.
        if h1 > h2:
            voff1, voff2 = 0, (h1 - h2) // 2
        else:
            voff1, voff2 = (h2 - h1) // 2, 0
        hoff1, hoff2 = 0, w1 + margin
    else:
        composite = np.full((h1 + h2 + margin, max(w1, w2), 3), 255 * background, dtype=np.uint8)
        # Center the narrower image horizontally.
        if w1 > w2:
            hoff1, hoff2 = 0, (w1 - w2) // 2
        else:
            hoff1, hoff2 = (w2 - w1) // 2, 0
        voff1, voff2 = 0, h1 + margin

    composite[voff1:voff1 + h1, hoff1:hoff1 + w1, :] = im1
    composite[voff2:voff2 + h2, hoff2:hoff2 + w2, :] = im2

    return composite, (voff1, voff2), (hoff1, hoff2)

def draw_matches(im1, im2, kp1, kp2, matches, axis=1, margin=0, background=0, linewidth=2):
    '''Draw the keypoints of both images and a line for every match.'''
    composite, v_offset, h_offset = build_composite_image(im1, im2, axis, margin, background)

    def shifted(pt, i):
        # Mind the coordinate convention: OpenCV drawing takes (x, y) points,
        # whereas NumPy indexes an image as (row, col).
        return int(pt[0] + h_offset[i]), int(pt[1] + v_offset[i])

    # Draw all keypoints in red (the image is RGB). Each set is looped over
    # separately, so the two images may have different numbers of keypoints.
    for i, kps in enumerate((kp1, kp2)):
        for coord in kps:
            composite = cv2.drawMarker(composite, shifted(coord, i), color=(255, 0, 0),
                                       markerType=cv2.MARKER_CROSS, markerSize=5, thickness=1)

    # Draw matches, and highlight keypoints used in matches in blue.
    for idx_a, idx_b in matches:
        pt_a, pt_b = shifted(kp1[idx_a], 0), shifted(kp2[idx_b], 1)
        for pt in (pt_a, pt_b):
            composite = cv2.drawMarker(composite, pt, color=(0, 0, 255),
                                       markerType=cv2.MARKER_CROSS, markerSize=12, thickness=1)
        composite = cv2.line(composite, pt_a, pt_b, color=(0, 0, 255), thickness=1)

    return composite

pic = draw_matches(img1, img2, keyarr_1, keyarr_2, matches)

plt.imshow(pic)

plt.axis('off')

plt.show()

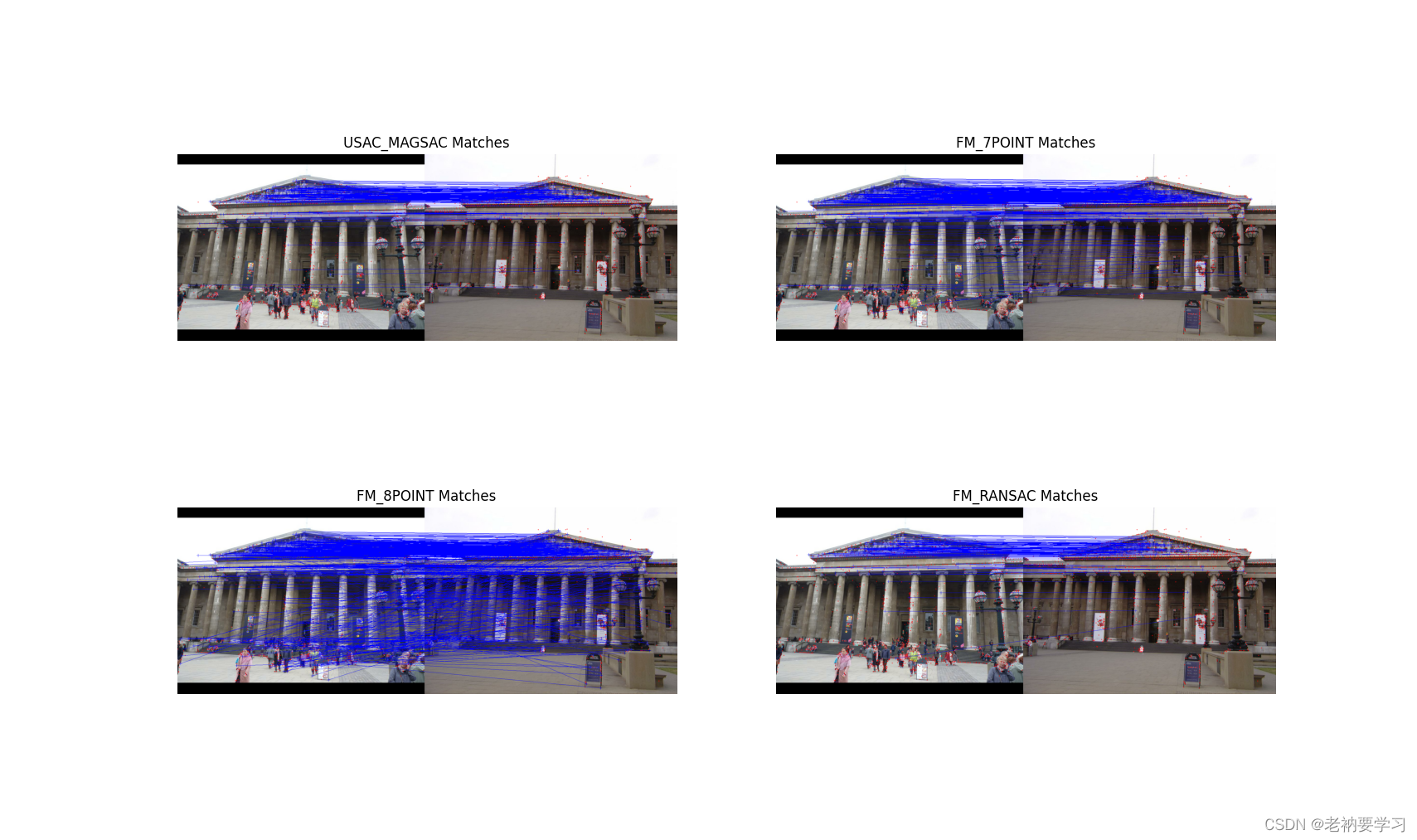

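As the figure above shows, many of the feature points are matched incorrectly. Filtering the matches with a robust estimator such as RANSAC gives a better result, although it cannot remove every outlier.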
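The idea behind RANSAC can be sketched on a much simpler model than the fundamental matrix. The toy example below fits a line to points contaminated by outliers: it repeatedly samples a minimal set (two points), counts how many points agree with the resulting hypothesis, and keeps the hypothesis with the most inliers. The function `ransac_line`, its parameters, and the data are assumptions made for illustration; this is not what `cv2.findFundamentalMat` runs internally.

```python
import numpy as np

def ransac_line(points, threshold=0.1, iterations=200, seed=0):
    """Fit y = a*x + b with a basic RANSAC loop and return (a, b, inlier_mask)."""
    rng = np.random.default_rng(seed)
    best_mask = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        # Minimal sample: two distinct points define a candidate line.
        i, j = rng.choice(len(points), size=2, replace=False)
        (x1, y1), (x2, y2) = points[i], points[j]
        if x1 == x2:  # vertical sample: cannot be written as y = a*x + b
            continue
        a = (y2 - y1) / (x2 - x1)
        b = y1 - a * x1
        # Points whose vertical distance to the line is below the threshold are inliers.
        residuals = np.abs(points[:, 1] - (a * points[:, 0] + b))
        mask = residuals < threshold
        if mask.sum() > best_mask.sum():
            best_mask = mask
    # Refit on all inliers of the best hypothesis with least squares.
    a, b = np.polyfit(points[best_mask, 0], points[best_mask, 1], deg=1)
    return a, b, best_mask

# Ten points exactly on y = 2x + 1 plus three gross outliers (hypothetical data).
x = np.arange(10, dtype=float)
inliers = np.stack([x, 2.0 * x + 1.0], axis=1)
outliers = np.array([[1.0, 9.0], [4.0, -3.0], [8.0, 2.0]])
a, b, mask = ransac_line(np.vstack([inliers, outliers]))
print(a, b, mask)
```

For image matching the same loop applies, with the line replaced by a fundamental matrix estimated from a minimal set of correspondences and the residual replaced by an epipolar error.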

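# Estimate the fundamental matrix from the matched keypoints of the two images with cv2.findFundamentalMat.

# keyarr_1[matches[:, 0]] picks from keyarr_1 the keypoints referenced by the first column of matches.

# The method parameter accepts several values: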

# FM_7POINT for a 7-point algorithm

# FM_8POINT for an 8-point algorithm.

# FM_RANSAC for the RANSAC algorithm.

# FM_LMEDS for the LMedS algorithm.

# USAC_MAGSAC

fig = plt.figure(figsize=(25, 25))

plt.subplot(221)

pred, inlier_mask = cv2.findFundamentalMat(keyarr_1[matches[:, 0]], keyarr_2[matches[:, 1]],

method=cv2.USAC_MAGSAC, ransacReprojThreshold=0.25,

confidence=0.99999, maxIters=10000)

matches_after_ransac = np.array([match for match, is_inlier in zip(matches, inlier_mask) if is_inlier])

im_inliers = draw_matches(img1, img2, keyarr_1, keyarr_2, matches_after_ransac)

plt.title('USAC_MAGSAC Matches')

plt.imshow(im_inliers)

plt.axis('off')

plt.subplot(222)

pred, inlier_mask2 = cv2.findFundamentalMat(keyarr_1[matches[:, 0]], keyarr_2[matches[:, 1]],

method=cv2.FM_7POINT, ransacReprojThreshold=0.25,

confidence=0.99999, maxIters=10000)

matches_after_ransac = np.array([match for match, is_inlier in zip(matches, inlier_mask2) if is_inlier])

im_inliers = draw_matches(img1, img2, keyarr_1, keyarr_2, matches_after_ransac)

plt.title('FM_7POINT Matches')

plt.imshow(im_inliers)

plt.axis('off')

plt.subplot(223)

pred, inlier_mask3 = cv2.findFundamentalMat(keyarr_1[matches[:, 0]], keyarr_2[matches[:, 1]],

method=cv2.FM_8POINT, ransacReprojThreshold=0.25,

confidence=0.99999, maxIters=10000)

matches_after_ransac = np.array([match for match, is_inlier in zip(matches, inlier_mask3) if is_inlier])

im_inliers = draw_matches(img1, img2, keyarr_1, keyarr_2, matches_after_ransac)

plt.title('FM_8POINT Matches')

plt.imshow(im_inliers)

plt.axis('off')

plt.subplot(224)

pred, inlier_mask4 = cv2.findFundamentalMat(keyarr_1[matches[:, 0]], keyarr_2[matches[:, 1]],

method=cv2.FM_RANSAC, ransacReprojThreshold=0.25,

confidence=0.99999, maxIters=10000)

matches_after_ransac = np.array([match for match, is_inlier in zip(matches, inlier_mask4) if is_inlier])

im_inliers = draw_matches(img1, img2, keyarr_1, keyarr_2, matches_after_ransac)

plt.title('FM_RANSAC Matches')

plt.imshow(im_inliers)

plt.axis('off')

plt.show()
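One way to see what the inlier masks above express is to evaluate the epipolar constraint directly: for a correct correspondence in homogeneous coordinates, x2ᵀ F x1 should be close to zero. The sketch below computes this raw algebraic residual with NumPy. The helper `epipolar_residuals` and the toy data are assumptions for illustration, and the residual is not necessarily the error measure OpenCV uses internally to decide inliers.

```python
import numpy as np

def epipolar_residuals(F, pts1, pts2):
    """Return |x2^T F x1| for each correspondence; pts1 and pts2 are (N, 2) pixel coordinates."""
    ones = np.ones((len(pts1), 1))
    x1 = np.hstack([pts1, ones])  # homogeneous coordinates, shape (N, 3)
    x2 = np.hstack([pts2, ones])
    # Row k of (x1 @ F.T) is (F x1_k)^T, so the row-wise dot product gives x2_k^T F x1_k.
    return np.abs(np.sum(x2 * (x1 @ F.T), axis=1))

# Toy setup: a camera translating along the x axis with identity intrinsics,
# for which F is the cross-product matrix of t = (1, 0, 0).
F_toy = np.array([[0.0, 0.0, 0.0],
                  [0.0, 0.0, -1.0],
                  [0.0, 1.0, 0.0]])
pts_a = np.array([[10.0, 20.0], [30.0, 40.0], [50.0, 60.0]])
pts_b = np.array([[15.0, 20.0], [33.0, 40.0], [50.0, 65.0]])  # the last pair also moves vertically
res = epipolar_residuals(F_toy, pts_a, pts_b)
print(res)
```

In the toy setup, correct matches may only shift horizontally, so the third pair stands out with a large residual. With the variables of this article, the call would look like `epipolar_residuals(pred, keyarr_1[matches[:, 0]], keyarr_2[matches[:, 1]])`, provided `pred` is a single 3×3 matrix.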