1. Location of the .xml files; downloading OpenCV and importing it into Visual Studio 2022

https://blog.csdn.net/Keep_Trying_Go/article/details/124902276

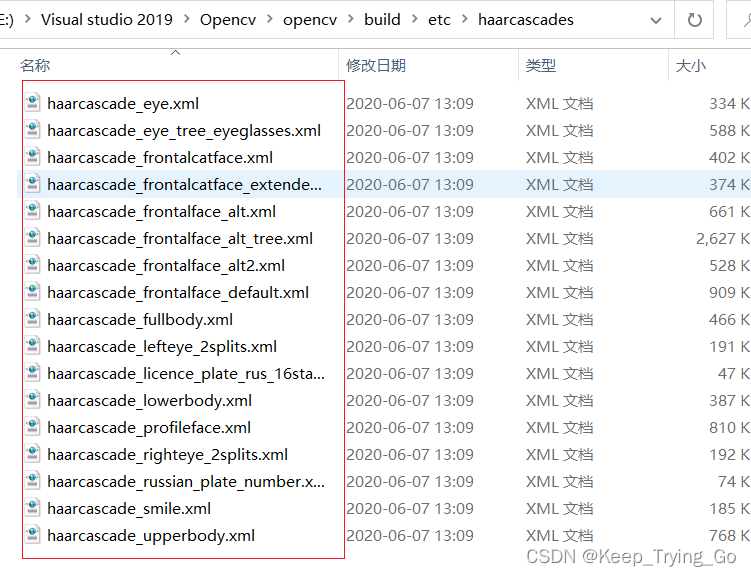

Location of the .xml files

For face detection with OpenCV in Python, see:

https://mydreamambitious.blog.csdn.net/article/details/124851743

2. Testing on a single image

Load whichever .xml cascade file suits your needs here.

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

//haarcascade_frontalcatface.xml

// Path to the cascade .xml file

String filename = "E:\\conda_3\\PyCharm\\OpenCV\\FaceDetect\\CascadeClassifer\\haarcascade_frontalface_alt.xml";

// Path to the image

String facefile = "E:\\conda_3\\PyCharm\\OpenCV\\FaceDetect\\images\\face1.jpg";

CascadeClassifier face_classifiler;

int main() {

// Load the face-detection cascade .xml file

if (!face_classifiler.load(filename)) {

printf("The CascadeClassifier load fail!");

return 0;

}

Mat img = imread(facefile);

if (img.empty()) {

printf("The picture read fail!");

return 0;

}

Mat gray;

cvtColor(img, gray, COLOR_BGR2GRAY);

// equalizeHist performs histogram equalization to improve the image contrast

equalizeHist(gray, gray);

vector<Rect> faces;

// Arguments: input image, output vector<Rect>& objects, scaleFactor, minNeighbors, flags, minSize

face_classifiler.detectMultiScale(gray, faces, 1.2, 3, 0, Size(24, 24));

for (size_t t = 0; t < faces.size(); t++) {

rectangle(img, faces[static_cast<int>(t)], Scalar(255, 255, 0), 2, 8, 0);

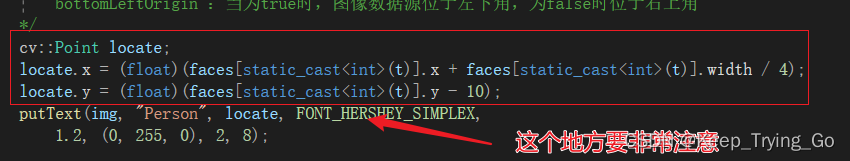

cv::Point locate;

locate.x = (float)(faces[static_cast<int>(t)].x + faces[static_cast<int>(t)].width / 4);

locate.y = (float)(faces[static_cast<int>(t)].y - 10);

putText(img, "Person", locate, FONT_HERSHEY_SIMPLEX,

1.2, (0, 255, 0), 2, 8);

}

imshow("face", img);

waitKey(0);

return 0;

}

这个地方的坐标表示方式要注意。

Explanation of the functions used above:

detectMultiScale(InputArray image,

CV_OUT std::vector<Rect>& objects,

double scaleFactor = 1.1,

int minNeighbors = 3, int flags = 0,

Size minSize = Size(),

Size maxSize = Size() );

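Parameters:

Parameter 1: image – the image to search, normally a grayscale image to speed up detection;

Parameter 2: objects – output vector of rectangles (vector<Rect>), one bounding box per detected object, e.g. per face;

Parameter 3: scaleFactor – the scale ratio of the search window between two successive scans. The default 1.1 enlarges the search window by 10% at each step; 1.1 is the usual setting (the code above uses 1.2);

Parameter 4: minNeighbors – the minimum number of neighboring rectangles that must make up a detection (default 3).

Any group made up of fewer than min_neighbors - 1 rectangles is rejected.

If min_neighbors is 0, the function does no grouping at all and returns every raw candidate rectangle,

which is mainly useful when you apply your own procedure for merging the detections;

Parameter 5: flags – either keep the default or pass CV_HAAR_DO_CANNY_PRUNING (CASCADE_DO_CANNY_PRUNING in the C++ API). With that flag the function uses Canny edge detection to skip regions with too many or too few edges, since such regions are unlikely to contain a face. The parameter only has this meaning for old-format cascades and is not used for new-format cascades;

Parameters 6 and 7: minSize and maxSize – limit the size of the returned objects: candidates smaller than minSize or larger than maxSize are ignored. Choose them to match the object sizes expected in your own project.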
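To get a feel for what scaleFactor controls, the sketch below lists the search-window sizes a scan would visit between a starting size and the image size. It is a simplified illustration of the idea, not OpenCV's actual implementation; the helper name windowSizes and its parameters are made up for this example.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper (not part of OpenCV): lists the side lengths of a square
// search window that starts at `baseSize` and grows by `scaleFactor` per step
// for as long as it still fits inside an image whose shorter side is `imageSide`.
std::vector<int> windowSizes(int baseSize, double scaleFactor, int imageSide) {
    std::vector<int> sizes;
    if (baseSize <= 0 || scaleFactor <= 1.0) {
        return sizes;  // invalid input: the window would never grow
    }
    for (double s = baseSize; s <= imageSide; s *= scaleFactor) {
        sizes.push_back(static_cast<int>(std::lround(s)));
    }
    return sizes;
}
```

With a 24-pixel start and a 50-pixel image side, a factor of 1.2 visits 5 sizes (24, 29, 35, 41, 50) while 1.1 visits 8. A smaller factor therefore costs more time but is less likely to step over a face of an in-between size.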

rectangle(InputOutputArray img, Rect rec,

const Scalar& color, int thickness = 1,

int lineType = LINE_8, int shift = 0)

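Parameters:

img: the image to draw on

rec: the rectangle to draw; another overload takes two points instead: pt1, one vertex of the rectangle, and pt2, the vertex diagonally opposite pt1

color: line color (in BGR order for images loaded with imread) or brightness (grayscale image)

thickness: thickness of the lines that make up the rectangle. A negative value (such as FILLED, formerly CV_FILLED) draws a filled rectangle.

lineType: type of the line; see the description of line

shift: number of fractional bits in the point coordinates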
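The shift parameter is the least obvious of these: with shift = n, the integer coordinates are read as fixed-point numbers with n fractional bits, so the drawn position is the integer divided by 2^n. A small sketch of that conversion follows; the two helper names are invented for this example and are not OpenCV functions.

```cpp
#include <cassert>

// Hypothetical helper (not an OpenCV function): converts a pixel coordinate
// into the fixed-point integer form used when `shift` fractional bits are in
// effect, i.e. the value multiplied by 2^shift and rounded to nearest.
int toFixedPoint(double pixel, int shift) {
    return static_cast<int>(pixel * (1 << shift) + (pixel >= 0 ? 0.5 : -0.5));
}

// Inverse direction: the pixel position that a fixed-point coordinate denotes.
double fromFixedPoint(int value, int shift) {
    return static_cast<double>(value) / (1 << shift);
}
```

For instance, the sub-pixel position 10.5 with shift = 4 is passed as the integer 168, because 10.5 * 16 = 168. With the default shift = 0 the coordinates are plain pixels.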

putText( InputOutputArray img, const String& text, Point org,

int fontFace, double fontScale, Scalar color,

int thickness = 1, int lineType = LINE_8,

bool bottomLeftOrigin = false );

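Parameters:

img: the image to draw the text on

text: the text string to draw

org: position of the text (bottom-left corner of the string in the image)

fontFace: font type

fontScale: font scale factor, multiplied by the font's base size

color: text color (a Scalar)

thickness: thickness of the lines used to draw the text

lineType: line type

bottomLeftOrigin: when true, the image data origin is at the bottom-left corner; when false, it is at the top-left corner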
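The color argument deserves a note. A common slip is to pass a bare parenthesized triple such as (0, 255, 0) instead of Scalar(0, 255, 0). That still compiles, because the parentheses form a comma expression whose value is its last operand, and a single number converts implicitly to a color. The sketch below reproduces the effect with a stand-in Color type (an assumption for this example, modeled on how cv::Scalar can be built from one value) so it compiles without OpenCV.

```cpp
#include <cassert>

// Hypothetical stand-in for a 4-channel color type such as cv::Scalar:
// it can be built from a single number, which fills only the first
// channel and leaves the others at 0.
struct Color {
    double v[4];
    Color(double b, double g, double r, double a = 0) : v{b, g, r, a} {}
    Color(double b) : v{b, 0, 0, 0} {}  // implicit on purpose, to mirror the pitfall
};

// Returns the green channel of the color it receives.
double greenOf(Color c) { return c.v[1]; }

// (0, 255, 0) is a comma expression: it evaluates to its last operand, 0,
// and that single number is then converted to Color(0), i.e. black.
double greenFromBareParens() { return greenOf((0, 255, 0)); }

// Spelling out the type passes all three channels.
double greenFromExplicitColor() { return greenOf(Color(0, 255, 0)); }
```

The first call ends up with a green channel of 0, the second with 255. Compilers may warn that the left operands of the comma have no effect, which is a useful hint that a type name is missing.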

3. Real-time detection

Load whichever .xml cascade file suits your needs here.

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

//haarcascade_frontalcatface.xml

//opencv_world455d.lib

//E:\Visual studio 2019\Opencv\opencv\build\include

//E:\Visual studio 2019\Opencv\opencv\build\x64\vc15\lib

String filename = "E:\\conda_3\\PyCharm\\OpenCV\\FaceDetect\\CascadeClassifer\\haarcascade_frontalface_alt.xml";

CascadeClassifier face_classifiler;

int main() {

if (!face_classifiler.load(filename)) {

printf("The CascadeClassifier load fail!");

return 0;

}

// Open the camera for real-time detection

namedWindow("face",WINDOW_AUTOSIZE);

VideoCapture capture(0);

Mat frame;

Mat gray;

while (capture.read(frame)) {

cvtColor(frame, gray, COLOR_BGR2GRAY);

// equalizeHist performs histogram equalization to improve the image contrast

equalizeHist(gray, gray);

vector<Rect> faces;

// Arguments: input image, output vector<Rect>& objects, scaleFactor, minNeighbors, flags, minSize

face_classifiler.detectMultiScale(gray, faces, 1.2, 3, 0, Size(30,30));

for (size_t t = 0; t < faces.size(); t++) {

rectangle(frame, faces[t], Scalar(255, 255, 0), 2, 8, 0);

// Label anchor: a quarter of the way across the box, 10 px above its top edge

cv::Point locate;

locate.x = faces[t].x + faces[t].width / 4;

locate.y = faces[t].y - 10;

// Pass the color as a Scalar: a bare (0, 0, 255) is a comma expression that evaluates to 0

putText(frame, "Person", locate, FONT_HERSHEY_SIMPLEX,

1.2, Scalar(0, 0, 255), 2, 8);

}

imshow("face", frame);

if (waitKey(10) == 27) {

break;

}

}

capture.release();

destroyAllWindows();

return 0;

}

4. Real-time eye detection

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

//haarcascade_frontalcatface.xml

//opencv_world455d.lib

//E:\Visual studio 2019\Opencv\opencv\build\include

//E:\Visual studio 2019\Opencv\opencv\build\x64\vc15\lib

String filename = "E:\\conda_3\\PyCharm\\OpenCV\\FaceDetect\\CascadeClassifer\\haarcascade_frontalface_alt.xml";

String filename_eye = "E:\\conda_3\\PyCharm\\OpenCV\\FaceDetect\\CascadeClassifer\\haarcascade_eye.xml";

CascadeClassifier face_classifiler;

CascadeClassifier eye_detect;

int main() {

if (!face_classifiler.load(filename)) {

printf("The CascadeClassifier load fail!");

return 0;

}

if (!eye_detect.load(filename_eye)) {

printf("The CascadeClassifier load fail!");

return 0;

}

// Open the camera for real-time detection

namedWindow("face", WINDOW_AUTOSIZE);

VideoCapture capture(0);

Mat frame;

Mat gray;

while (capture.read(frame)) {

cvtColor(frame, gray, COLOR_BGR2GRAY);

// equalizeHist performs histogram equalization to improve the image contrast

equalizeHist(gray, gray);

vector<Rect> faces;

vector<Rect> eyes;

// Arguments: input image, output vector<Rect>& objects, scaleFactor, minNeighbors, flags, minSize

face_classifiler.detectMultiScale(gray, faces, 1.2, 3, 0, Size(30, 30));

for (size_t t = 0; t < faces.size(); t++) {

rectangle(frame, faces[t], Scalar(255, 255, 0), 2, 8, 0);

// Label anchor: a quarter of the way across the box, 10 px above its top edge

cv::Point locate;

locate.x = faces[t].x + faces[t].width / 4;

locate.y = faces[t].y - 10;

// Pass the color as a Scalar: a bare (0, 0, 255) is a comma expression that evaluates to 0

putText(frame, "Person", locate, FONT_HERSHEY_SIMPLEX,

1.2, Scalar(0, 0, 255), 2, 8);

// Look for eyes only inside each detected face; the equalized gray ROI is used so that the shapes just drawn on frame are not part of the input

Mat eyeLocate = gray(faces[t]);

eye_detect.detectMultiScale(eyeLocate, eyes, 1.2, 10, 0, Size(20, 20));

for (size_t s = 0; s < eyes.size(); s++) {

Rect rect;

rect.x = faces[static_cast<int>(t)].x + eyes[s].x;

rect.y = faces[static_cast<int>(t)].y + eyes[s].y;

rect.width = eyes[s].width;

rect.height = eyes[s].height;

rectangle(frame, rect, Scalar(255, 255, 0), 2, 8, 0);

}

}

imshow("face", frame);

if (waitKey(10) == 27) {

break;

}

}

capture.release();

destroyAllWindows();

return 0;

}
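The eye rectangles returned by detectMultiScale are relative to the face region that was passed in, so the face's top-left corner has to be added before drawing on the full frame. A minimal sketch of that conversion, using a stand-in Box struct instead of cv::Rect so it compiles without OpenCV (Box and toFrame are names invented for this example):

```cpp
#include <cassert>

// Minimal stand-in for cv::Rect so the sketch compiles without OpenCV.
struct Box { int x, y, width, height; };

// Hypothetical helper: converts a box reported relative to a region of
// interest into full-frame coordinates by adding the ROI's top-left corner.
// Width and height are unchanged because the ROI is not rescaled.
Box toFrame(const Box& roi, const Box& local) {
    return Box{roi.x + local.x, roi.y + local.y, local.width, local.height};
}
```

For a face at (100, 50) and an eye found at (40, 60) inside it, the eye box in the frame starts at (140, 110).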

4. Real-time detection on a video file

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

//haarcascade_frontalcatface.xml

//opencv_world455d.lib

//E:\Visual studio 2019\Opencv\opencv\build\include

//E:\Visual studio 2019\Opencv\opencv\build\x64\vc15\lib

String filename = "E:\\conda_3\\PyCharm\\OpenCV\\FaceDetect\\CascadeClassifer\\haarcascade_frontalface_alt.xml";

CascadeClassifier face_classifiler;

int main() {

if (!face_classifiler.load(filename)) {

printf("The CascadeClassifier load fail!");

return 0;

}

// Detect faces frame by frame in a video file

namedWindow("face", WINDOW_AUTOSIZE);

VideoCapture capture;

Mat frame;

Mat gray;

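// Open the video file and stop if that fails

if (!capture.open("E:\\conda_3\\PyCharm\\OpenCV\\FaceDetect\\Video\\video.mp4")) {

printf("Could not open the video file!\n");

return -1;

}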

while (capture.read(frame)) {

cvtColor(frame, gray, COLOR_BGR2GRAY);

// equalizeHist performs histogram equalization to improve the image contrast

equalizeHist(gray, gray);

vector<Rect> faces;

// Arguments: input image, output vector<Rect>& objects, scaleFactor, minNeighbors, flags, minSize

face_classifiler.detectMultiScale(gray, faces, 1.2, 3, 0, Size(30, 30));

for (size_t t = 0; t < faces.size(); t++) {

rectangle(frame, faces[t], Scalar(255, 255, 0), 2, 8, 0);

// Label anchor: a quarter of the way across the box, 10 px above its top edge

cv::Point locate;

locate.x = faces[t].x + faces[t].width / 4;

locate.y = faces[t].y - 10;

// Pass the color as a Scalar: a bare (0, 0, 255) is a comma expression that evaluates to 0

putText(frame, "Person", locate, FONT_HERSHEY_SIMPLEX,

1.2, Scalar(0, 0, 255), 2, 8);

}

imshow("face", frame);

if (waitKey(10) == 27) {

break;

}

}

capture.release();

destroyAllWindows();

return 0;

}

5. Eye detection with HAAR and LBP cascades (template matching)

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

String facefile = "E:\\Visual studio 2019\\Opencv\\opencv\\build\\etc\\haarcascades\\haarcascade_frontalface_alt.xml";

String lefteyefile = "E:\\Visual studio 2019\\Opencv\\opencv\\build\\etc\\haarcascades\\haarcascade_eye.xml";

String righteyefile = "E:\\Visual studio 2019\\Opencv\\opencv\\build\\etc\\haarcascades\\haarcascade_eye.xml";

CascadeClassifier face_detector;

CascadeClassifier leftyeye_detector;

CascadeClassifier righteye_detector;

Rect leftEye, rightEye;

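// im: eye search region; tpl: eye template; rect: eye position, passed in as the region's top-left corner and updated in place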

void trackEye(Mat& im, Mat& tpl, Rect& rect) {

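Mat result;

// The template must fit inside the search region; otherwise the result size below is not positive

if (im.cols < tpl.cols || im.rows < tpl.rows) {

rect.x = rect.y = rect.width = rect.height = 0;

return;

}

// Width of the result map

int result_cols = im.cols - tpl.cols + 1;

// Height of the result map

int result_rows = im.rows - tpl.rows + 1;

// Mat::create(int rows, int cols, int type) allocates the matrix.

// In a type name such as CV_8UC3, 8 is the number of bits per channel, U means unsigned (S is signed, F is floating point), and C3 means 3 channels.

// https://blog.csdn.net/FightingCSH/article/details/124195152

// matchTemplate writes a single-channel 32-bit float map, hence CV_32FC1

result.create(result_rows, result_cols, CV_32FC1);

// matchTemplate(InputArray image, InputArray templ, OutputArray result, int method, InputArray mask = noArray());

// The template slides over the search image and is compared at every position with a statistical measure (squared difference, correlation, and so on) to find the best match.

// Arguments: search image, template, output result map, comparison method

matchTemplate(im, tpl, result, TM_CCORR_NORMED);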

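// Find the best-match location

double minval, maxval;

Point minloc, maxloc;

// minMaxLoc finds the global minimum and maximum of a matrix.

// Arguments: single-channel input matrix, then pointers to the min value, max value, min location and max location

// https://blog.csdn.net/jndingxin/article/details/108447110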

minMaxLoc(result, &minval, &maxval, &minloc, &maxloc);

if (maxval > 0.75) {

rect.x = rect.x + maxloc.x;

rect.y = rect.y + maxloc.y;

}

else {

rect.x = rect.y = rect.width = rect.height = 0;

}

}

int main(int argc, char** argv) {

if (!face_detector.load(facefile)) {

printf("could not load data file...\n");

return -1;

}

if (!leftyeye_detector.load(lefteyefile)) {

printf("could not load data file...\n");

return -1;

}

if (!righteye_detector.load(righteyefile)) {

printf("could not load data file...\n");

return -1;

}

Mat frame;

VideoCapture capture(0);

namedWindow("demo-win", WINDOW_AUTOSIZE);

Mat gray;

vector<Rect> faces;

vector<Rect> eyes;

Mat lefttpl, righttpl; // 模板

while (capture.read(frame)) {

// Mirror the frame horizontally

flip(frame, frame, 1);

cvtColor(frame, gray, COLOR_BGR2GRAY);

// Equalize the histogram to improve contrast

equalizeHist(gray, gray);

// Detect faces

face_detector.detectMultiScale(gray, faces, 1.1, 3, 0, Size(30, 30));

for (size_t t = 0; t < faces.size(); t++) {

rectangle(frame, faces[t], Scalar(255, 0, 0), 2, 8, 0);

// Compute the offsets and size of the eye ROIs

int offsety = faces[t].height / 4;

int offsetx = faces[t].width / 8;

int eyeheight = faces[t].height / 2 - offsety;

int eyewidth = faces[t].width / 2 - offsetx;

// Crop the left-eye region

Rect leftRect;

leftRect.x = faces[t].x + offsetx;

leftRect.y = faces[t].y + offsety;

leftRect.width = eyewidth;

leftRect.height = eyeheight;

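// Search region that should contain the left eye (not yet the exact eye position)

Mat leftRoi = gray(leftRect);

// Detect the left eye

leftyeye_detector.detectMultiScale(leftRoi, eyes, 1.1, 1, 0, Size(20, 20));

if (lefttpl.empty()) {

if (eyes.size()) {

// Exact left-eye box in frame coordinates

leftRect = eyes[0] + Point(leftRect.x, leftRect.y);

// clone() copies the pixels; a plain ROI view would share memory with gray, which is refilled for every frame

lefttpl = gray(leftRect).clone();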

rectangle(frame, leftRect, Scalar(0, 0, 255), 2, 8, 0);

}

}

else {

// Track by template matching

leftEye.x = leftRect.x;

leftEye.y = leftRect.y;

trackEye(leftRoi, lefttpl, leftEye);

if (leftEye.x > 0 && leftEye.y > 0) {

leftEye.width = lefttpl.cols;

leftEye.height = lefttpl.rows;

rectangle(frame, leftEye, Scalar(0, 0, 255), 2, 8, 0);

}

}

// Crop the right-eye region (note: left and right in the camera image are swapped relative to the person's own left and right)

Rect rightRect;

rightRect.x = faces[t].x + faces[t].width / 2;

rightRect.y = faces[t].y + offsety;

rightRect.width = eyewidth;

rightRect.height = eyeheight;

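// Search region that should contain the right eye (not yet the exact eye position)

Mat rightRoi = gray(rightRect);

// Detect the right eye

righteye_detector.detectMultiScale(rightRoi, eyes, 1.1, 1, 0, Size(20, 20));

if (righttpl.empty()) {

if (eyes.size()) {

// Exact right-eye box in frame coordinates

rightRect = eyes[0] + Point(rightRect.x, rightRect.y);

// clone() copies the pixels; a plain ROI view would share memory with gray, which is refilled for every frame

righttpl = gray(rightRect).clone();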

rectangle(frame, rightRect, Scalar(0, 255, 255), 2, 8, 0);

}

}

else {

// Track by template matching

rightEye.x = rightRect.x;

rightEye.y = rightRect.y;

trackEye(rightRoi, righttpl, rightEye);

if (rightEye.x > 0 && rightEye.y > 0) {

// The tracked box has the template's size: righttpl.cols wide and righttpl.rows high

rightEye.width = righttpl.cols;

rightEye.height = righttpl.rows;

rectangle(frame, rightEye, Scalar(0, 255, 255), 2, 8, 0);

}

}

}

imshow("demo-win", frame);

char c = waitKey(100);

if (c == 27) { // ESC

break;

}

}

// release resource

capture.release();

waitKey(0);

return 0;

}
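The tracking step relies on normalized cross-correlation: at each position the template is multiplied element-wise with the patch under it, and the sum is divided by the square root of the product of the two patches' energies, so a perfect match scores 1. The sketch below is a brute-force version of that formula on plain arrays, written to show the result-map size (image size minus template size plus one) and the best-match search. It is an illustration under stated assumptions, not OpenCV's implementation; Image, Match and bestMatch are names invented for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Image = std::vector<std::vector<double>>;  // row-major grayscale, illustration only

struct Match { int x; int y; double score; };

// Hypothetical helper: brute-force normalized cross-correlation.
// Returns the top-left position with the highest score.
// Assumes both images are non-empty and the template fits inside the image.
Match bestMatch(const Image& img, const Image& tpl) {
    // Number of valid positions per axis: image size - template size + 1
    const int rows = static_cast<int>(img.size()) - static_cast<int>(tpl.size()) + 1;
    const int cols = static_cast<int>(img[0].size()) - static_cast<int>(tpl[0].size()) + 1;
    Match best{0, 0, -1.0};
    for (int y = 0; y < rows; ++y) {
        for (int x = 0; x < cols; ++x) {
            double cross = 0, imgEnergy = 0, tplEnergy = 0;
            for (std::size_t j = 0; j < tpl.size(); ++j) {
                for (std::size_t i = 0; i < tpl[0].size(); ++i) {
                    const double a = img[y + j][x + i];
                    const double b = tpl[j][i];
                    cross += a * b;
                    imgEnergy += a * a;
                    tplEnergy += b * b;
                }
            }
            const double denom = std::sqrt(imgEnergy * tplEnergy);
            const double score = denom > 0 ? cross / denom : 0.0;
            if (score > best.score) best = Match{x, y, score};
        }
    }
    return best;
}
```

In the tracking code the returned offset plays the role of maxloc: it is added to the search region's top-left corner to get the eye position in the frame, and the match is accepted only when the score exceeds the 0.75 threshold.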

Reference video tutorial:

https://b23.tv/LnlcNDh