hdl_localization代码解析

简介

hdl_localization是基于UKF滤波框架,融合了ndt点云配准结果,在已经构建的点云地图上实习激光重定位的一种方法。在使用16线激光雷达进行机器人定位时,不用IMU也可以取得不错的效果。

安装依赖

依赖库

1.Ros-Melodic

2.Pcl-1.8

3.Open-MP

4.Eigen3.3(及以上)

依赖包

1.ndt_omp

2.fast-gicp

3.hdl_global_localization

运行

rosparam set use_sim_time true

roslaunch hdl_localization hdl_localization.launch

roscd hdl_localization/rviz

rviz -d hdl_localization.rviz

注意

1.如果进行纯定位时的初始位姿在地图坐标系附近,在launch文件中可以将 “specify_init_pose" 设为 ”true“,这样,其默认的三维位置(0,0,0)和默认的表示旋转的四元数(0,0,0,1)就可以很好的给予点云一个初始状态,有利于其后续匹配和重定位。

2.如果想在地图中任意位置进行重定位,需要在开启rviz -d hdl_localization.rviz后,选择rviz上方的2D pose estimator,并在地图中左键点击和鼠标拖动,选择一个与真实位置相近的位置与航向。

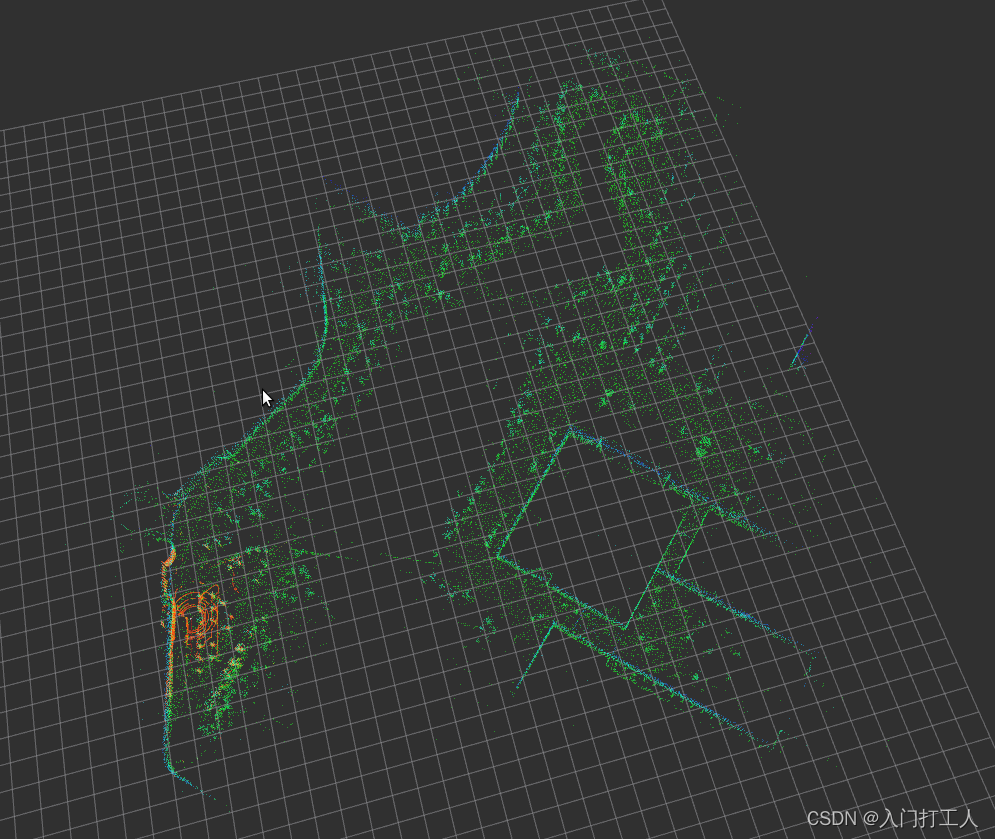

效果

UKF知识补充(无迹卡尔曼滤波)

网页链接

原始高斯分布经过非线性变换之后其实是一个不规则的非线性分布,在EKF中我们在高斯均值点附近用泰勒级数近似非线性分布,从而获得近似高斯分布。但是问题在于,这个近似高斯分布有时不是足够精确,单单一个均值点往往无法预测上图中这种很不规则的分布的。这个时候我们就需要无迹卡尔曼滤波UKF了,通过无迹转换将非线性函数变换的结果近似成高斯分布。

以下是无迹变换执行的步骤:

1.计算Sigma点集

2.为每个Sigma点分配权重

3.把所有单个Sigma点代入非线性函数f

4.对经过上述加权和转换的点近似新的高斯分布

5.计算新高斯分布的均值和方差。

代码阅读

总览

该项目是使用nodelet统一管理的,apps为定义的两个类,也就是程序实现。include内为状态估计器和无迹卡尔曼的实现。launch是启动文件。rviz内为rviz的配置文件。data为实例的定位用点云地图。

launch

定义了几个参数,使用nodelet运行了velodyne_nodelet_manager、globalmap_server_nodelet、hdl_localization_nodelet三个节点。如果只用于仿真,可以在 arguments 前面加上。

<param name="use_sim_time" value="true"/>

apps(程序实现)

本文件夹是只有两个cpp文件,直接继承了nodelet的类。

globalmap_server_nodelet

类GlobalmapServerNodelet 继承了 nodelet::Nodelet。

关于ros,声明了三个句柄,1个发布,1个计时器,1个globalmap的变量。

ros::NodeHandle nh;

ros::NodeHandle mt_nh;

ros::NodeHandle private_nh;

ros::Publisher globalmap_pub;

ros::WallTimer globalmap_pub_timer;

pcl::PointCloud<PointT>::Ptr globalmap;

globalmap_server_nodelet::onInit()

这里是在重写了初始化函数。同时利用计时器出发回调函数。

void onInit() override {

//定义三个节点,

nh = getNodeHandle();

mt_nh = getMTNodeHandle();

private_nh = getPrivateNodeHandle();

initialize_params();

// publish globalmap with "latched" publisher

globalmap_pub = nh.advertise<sensor_msgs::PointCloud2>("/globalmap", 5, true);

globalmap_pub_timer = nh.createWallTimer(ros::WallDuration(0.05), &GlobalmapServerNodelet::pub_once_cb, this, true, true); //20Hz

}

globalmap_server_nodelet::initialize_params()

在程序initialize_params()中,完成了读取地图pcd文件的功能,并对该地图进行下采样,最终的globalmap是下采样的地图。

void initialize_params() {

// read globalmap from a pcd file

std::string globalmap_pcd = private_nh.param<std::string>("globalmap_pcd", "");

globalmap.reset(new pcl::PointCloud<PointT>());

pcl::io::loadPCDFile(globalmap_pcd, *globalmap);

globalmap->header.frame_id = "map";

//TODO:这个实际上是没有到这里来的,初步想法是没有utm文件。类似于经纬度的坐标文件。

std::ifstream utm_file(globalmap_pcd + ".utm");

if (utm_file.is_open() && private_nh.param<bool>("convert_utm_to_local", true)) {

std::cout << "now utf_file is open" << std::endl;

double utm_easting;

double utm_northing;

double altitude;

utm_file >> utm_easting >> utm_northing >> altitude;

for(auto& pt : globalmap->points) {

pt.getVector3fMap() -= Eigen::Vector3f(utm_easting, utm_northing, altitude);

}

ROS_INFO_STREAM("Global map offset by UTM reference coordinates (x = "

<< utm_easting << ", y = " << utm_northing << ") and altitude (z = " << altitude << ")");

}

//endTODO

// downsample globalmap

double downsample_resolution = private_nh.param<double>("downsample_resolution", 0.1);

boost::shared_ptr<pcl::VoxelGrid<PointT>> voxelgrid(new pcl::VoxelGrid<PointT>());

voxelgrid->setLeafSize(downsample_resolution, downsample_resolution, downsample_resolution);

voxelgrid->setInputCloud(globalmap);

pcl::PointCloud<PointT>::Ptr filtered(new pcl::PointCloud<PointT>());

voxelgrid->filter(*filtered);

globalmap = filtered;

}

同时,每隔0.05s发布一次。(onInit定义的)

void pub_once_cb(const ros::WallTimerEvent& event) {

globalmap_pub.publish(globalmap);

}

hdl_localization_nodelet

类 HdlLocalizationNodelet 继承了 nodelet::Nodelet,先看初始化。

hdl_localization_nodelet::onInit()

void onInit() override {

//依然是三个句柄

nh = getNodeHandle();

mt_nh = getMTNodeHandle();

private_nh = getPrivateNodeHandle();

//这里的时间用了boost库里的 circular_buffer<double>。感兴趣的可以自己百度一下,毕竟……我也没用过。

processing_time.resize(16);

//这些参数,又来了。

initialize_params();

//这个默认的base_link, launch里覆盖了。实际上是velodyne。参数类的在launch里改写了一部分,这里就不一一赘述了。可以自己对比来看,比较容易。

odom_child_frame_id = private_nh.param<std::string>("odom_child_frame_id", "base_link");

//是否使用imu

use_imu = private_nh.param<bool>("use_imu", true);

//imu是否倒置

invert_imu = private_nh.param<bool>("invert_imu", false);

if(use_imu) {//如果使用imu,则定义订阅函数。

NODELET_INFO("enable imu-based prediction");

imu_sub = mt_nh.subscribe("/gpsimu_driver/imu_data", 256, &HdlLocalizationNodelet::imu_callback, this);

}

//点云数据、全局地图、初始位姿的订阅。initialpose_sub只是用于rviz划点用的。

points_sub = mt_nh.subscribe("/velodyne_points", 5, &HdlLocalizationNodelet::points_callback, this);

globalmap_sub = nh.subscribe("/globalmap", 1, &HdlLocalizationNodelet::globalmap_callback, this);

initialpose_sub = nh.subscribe("/initialpose", 8, &HdlLocalizationNodelet::initialpose_callback, this);

//发布里程计信息,以及对齐后的点云数据。

pose_pub = nh.advertise<nav_msgs::Odometry>("/odom", 5, false);

aligned_pub = nh.advertise<sensor_msgs::PointCloud2>("/aligned_points", 5, false);

}

hdl_localization_nodelet::initialize_params()

初始化参数

void initialize_params() {

// intialize scan matching method

double downsample_resolution = private_nh.param<double>("downsample_resolution", 0.1);

std::string ndt_neighbor_search_method = private_nh.param<std::string>("ndt_neighbor_search_method", "DIRECT7");

double ndt_resolution = private_nh.param<double>("ndt_resolution", 1.0);

boost::shared_ptr<pcl::VoxelGrid<PointT>> voxelgrid(new pcl::VoxelGrid<PointT>());

voxelgrid->setLeafSize(downsample_resolution, downsample_resolution, downsample_resolution);

downsample_filter = voxelgrid;

//定义了ndt和glcp

pclomp::NormalDistributionsTransform<PointT, PointT>::Ptr ndt(new pclomp::NormalDistributionsTransform<PointT, PointT>());

pclomp::GeneralizedIterativeClosestPoint<PointT, PointT>::Ptr gicp(new pclomp::GeneralizedIterativeClosestPoint<PointT, PointT>());

//ndt参数与搜索方法。默认DIRECT7,作者说效果不好可以尝试改为DIRECT1.

ndt->setTransformationEpsilon(0.01);

ndt->setResolution(ndt_resolution);

if(ndt_neighbor_search_method == "DIRECT1") {

NODELET_INFO("search_method DIRECT1 is selected");

ndt->setNeighborhoodSearchMethod(pclomp::DIRECT1);

registration = ndt;

} else if(ndt_neighbor_search_method == "DIRECT7") {

NODELET_INFO("search_method DIRECT7 is selected");

ndt->setNeighborhoodSearchMethod(pclomp::DIRECT7);

registration = ndt;

} else if(ndt_neighbor_search_method == "GICP_OMP"){

NODELET_INFO("search_method GICP_OMP is selected");

registration = gicp;

}

else {

if(ndt_neighbor_search_method == "KDTREE") {

NODELET_INFO("search_method KDTREE is selected");

} else {

NODELET_WARN("invalid search method was given");

NODELET_WARN("default method is selected (KDTREE)");

}

ndt->setNeighborhoodSearchMethod(pclomp::KDTREE);

registration = ndt;

}

// initialize pose estimator

//设置起点用。

if(private_nh.param<bool>("specify_init_pose", true)) {

NODELET_INFO("initialize pose estimator with specified parameters!!");

pose_estimator.reset(new hdl_localization::PoseEstimator(registration,

ros::Time::now(),

Eigen::Vector3f(private_nh.param<double>("init_pos_x", 0.0), private_nh.param<double>("init_pos_y", 0.0), private_nh.param<double>("init_pos_z", 0.0)),

Eigen::Quaternionf(private_nh.param<double>("init_ori_w", 1.0), private_nh.param<double>("init_ori_x", 0.0), private_nh.param<double>("init_ori_y", 0.0), private_nh.param<double>("init_ori_z", 0.0)),

private_nh.param<double>("cool_time_duration", 0.5)

));

}

}

-----------下面就是回调函数的处理---------

实际使用的回调函数就是HdlLocalizationNodelet::imu_callback、HdlLocalizationNodelet::points_callback 以及 HdlLocalizationNodelet::globalmap_callback三个。分别订阅了 “/gpsimu_driver/imu_data”、“/velodyne_points” 以及 "/globalmap"两个话题。

首先看HdlLocalizationNodelet::globalmap_callback。完成了对全局地图的订阅以及从ros消息到点云的转化。

HdlLocalizationNodelet::globalmap_callback

void globalmap_callback(const sensor_msgs::PointCloud2ConstPtr& points_msg) {

NODELET_INFO("globalmap received!");

pcl::PointCloud<PointT>::Ptr cloud(new pcl::PointCloud<PointT>());

pcl::fromROSMsg(*points_msg, *cloud);

globalmap = cloud;

//发布出来的全局地图用作配准用的目标点云。这里就是globalmap_server_nodelet发出来的。

registration->setInputTarget(globalmap);

}

HdlLocalizationNodelet::imu_callback

接收imu并扔到imu_data里去。会在HdlLocalizationNodelet::points_callback里用到。

void imu_callback(const sensor_msgs::ImuConstPtr& imu_msg) {

std::lock_guard<std::mutex> lock(imu_data_mutex);

imu_data.push_back(imu_msg);

}

HdlLocalizationNodelet::points_callback

输入点云输出位姿。

void points_callback(const sensor_msgs::PointCloud2ConstPtr& points_msg) {

//加锁

std::lock_guard<std::mutex> estimator_lock(pose_estimator_mutex);

if(!pose_estimator) {//等待位姿估计器初始化

NODELET_ERROR("waiting for initial pose input!!");

return;

}

if(!globalmap) {//等待全局地图

NODELET_ERROR("globalmap has not been received!!");

return;

}

//将ros消息转化为点云

const auto& stamp = points_msg->header.stamp;

pcl::PointCloud<PointT>::Ptr pcl_cloud(new pcl::PointCloud<PointT>());

pcl::fromROSMsg(*points_msg, *pcl_cloud);

//检查

if(pcl_cloud->empty()) {

NODELET_ERROR("cloud is empty!!");

return;

}

//将点云转换到odom_child_frame_id 坐标系。

//但是这个tf是自己发的,这一个还要再看一下。TODO。鸡生蛋蛋生鸡的问题一直搞不太懂。

// transform pointcloud into odom_child_frame_id

pcl::PointCloud<PointT>::Ptr cloud(new pcl::PointCloud<PointT>());

if(!pcl_ros::transformPointCloud(odom_child_frame_id, *pcl_cloud, *cloud, this->tf_listener)) {

NODELET_ERROR("point cloud cannot be transformed into target frame!!");

return;

}

//对点云下采样。这里用到的是同一文件下的函数。后面会放上。

auto filtered = downsample(cloud);

// predict

if(!use_imu) {//不用imu则用0。

pose_estimator->predict(stamp, Eigen::Vector3f::Zero(), Eigen::Vector3f::Zero());

} else {

std::lock_guard<std::mutex> lock(imu_data_mutex);

auto imu_iter = imu_data.begin();

//利用imu数据迭代。

for(imu_iter; imu_iter != imu_data.end(); imu_iter++) {

//若当前点云时间早于imu雷达,则跳出。imu做预测,点云观测。

if(stamp < (*imu_iter)->header.stamp) {

break;

}

//读取线加速度和角速度。判断是否倒置imu

const auto& acc = (*imu_iter)->linear_acceleration;

const auto& gyro = (*imu_iter)->angular_velocity;

double gyro_sign = invert_imu ? -1.0 : 1.0;

//利用imu数据做位姿的预测。这里用了pose_estimator→predict,进一步调用了ukf进行估计。还没具体看ukf。 TODO

pose_estimator->predict((*imu_iter)->header.stamp, Eigen::Vector3f(acc.x, acc.y, acc.z), gyro_sign * Eigen::Vector3f(gyro.x, gyro.y, gyro.z));

}

//删除用过的imu数据

imu_data.erase(imu_data.begin(), imu_iter);

}

// correct

auto t1 = ros::WallTime::now();

//用pose_estimator 来矫正点云。pcl库配准。获取到结果后利用ukf矫正位姿。

auto aligned = pose_estimator->correct(filtered);

auto t2 = ros::WallTime::now();

processing_time.push_back((t2 - t1).toSec());

double avg_processing_time = std::accumulate(processing_time.begin(), processing_time.end(), 0.0) / processing_time.size();

// NODELET_INFO_STREAM("processing_time: " << avg_processing_time * 1000.0 << "[msec]");

//如果有订阅才发布

if(aligned_pub.getNumSubscribers()) {

aligned->header.frame_id = "map";

aligned->header.stamp = cloud->header.stamp;

aligned_pub.publish(aligned);

}

//发布里程计。时间戳为当前帧雷达时间,里程计位姿为ukf校正后位姿。同时也会发布从map到odom_child_frame_id的tf

publish_odometry(points_msg->header.stamp, pose_estimator->matrix());

}

----------主要流程到此结束,下面是其他的一些功能函数-----------

downsample(const pcl::PointCloud::ConstPtr& cloud)

当前帧点云数据下采样

pcl::PointCloud<PointT>::ConstPtr downsample(const pcl::PointCloud<PointT>::ConstPtr& cloud) const {

//在函数initialize_params()里声明了。0.1,0.1,0.1网格

if(!downsample_filter) {

return cloud;

}

pcl::PointCloud<PointT>::Ptr filtered(new pcl::PointCloud<PointT>());

downsample_filter->setInputCloud(cloud);

downsample_filter->filter(*filtered);

filtered->header = cloud->header;

return filtered;

}

publish_odometry

发布里程计的tf和msg。输入为当前帧点云时间戳与pose_estimator的结果矩阵。这里还用到了matrix2transform这个函数,用于做eigen矩阵到tf的转化(取值)。

void publish_odometry(const ros::Time& stamp, const Eigen::Matrix4f& pose) {

// broadcast the transform over tf

geometry_msgs::TransformStamped odom_trans = matrix2transform(stamp, pose, "map", odom_child_frame_id);

pose_broadcaster.sendTransform(odom_trans);

// publish the transform

nav_msgs::Odometry odom;

odom.header.stamp = stamp;

odom.header.frame_id = "map";

odom.pose.pose.position.x = pose(0, 3);

odom.pose.pose.position.y = pose(1, 3);

odom.pose.pose.position.z = pose(2, 3);

odom.pose.pose.orientation = odom_trans.transform.rotation;

odom.child_frame_id = odom_child_frame_id;

odom.twist.twist.linear.x = 0.0;

odom.twist.twist.linear.y = 0.0;

odom.twist.twist.angular.z = 0.0;

pose_pub.publish(odom);

}

matrix2transform

matrix2transform(const ros::Time& stamp, const Eigen::Matrix4f& pose, const std::string& frame_id, const std::string& child_frame_id) 从matrix 到 geometry_msgs::TransformStamped。

geometry_msgs::TransformStamped matrix2transform(const ros::Time& stamp, const Eigen::Matrix4f& pose, const std::string& frame_id, const std::string& child_frame_id) {

Eigen::Quaternionf quat(pose.block<3, 3>(0, 0));

quat.normalize();

geometry_msgs::Quaternion odom_quat;

odom_quat.w = quat.w();

odom_quat.x = quat.x();

odom_quat.y = quat.y();

odom_quat.z = quat.z();

geometry_msgs::TransformStamped odom_trans;

odom_trans.header.stamp = stamp;

odom_trans.header.frame_id = frame_id;

odom_trans.child_frame_id = child_frame_id;

odom_trans.transform.translation.x = pose(0, 3);

odom_trans.transform.translation.y = pose(1, 3);

odom_trans.transform.translation.z = pose(2, 3);

odom_trans.transform.rotation = odom_quat;

return odom_trans;

}

include(状态估计器及ukf)

apps里的两个cpp大致内容均为以上。可以看到在points_callback里使用了pose_estimator作为位姿的估计。而该类又使用了ukf作为位姿的解算。二者的实现都在include文件夹内。

hdl_localization/pose_estimator.hpp

该文件定义了类PoseEstimator。

PoseEstimator(构造函数)

首先看构造函数。可以看到在初始化之后,最重要的是进入了ukf的处理。

PoseEstimator(pcl::Registration<PointT, PointT>::Ptr& registration, const ros::Time& stamp, const Eigen::Vector3f& pos, const Eigen::Quaternionf& quat, double cool_time_duration = 1.0)

: init_stamp(stamp),

registration(registration),

cool_time_duration(cool_time_duration)

{

//单位阵初始化,随后给过程噪声。

process_noise = Eigen::MatrixXf::Identity(16, 16);

process_noise.middleRows(0, 3) *= 1.0;

process_noise.middleRows(3, 3) *= 1.0;

process_noise.middleRows(6, 4) *= 0.5;

process_noise.middleRows(10, 3) *= 1e-6;

process_noise.middleRows(13, 3) *= 1e-6;

//测量噪声,单位阵

Eigen::MatrixXf measurement_noise = Eigen::MatrixXf::Identity(7, 7);

measurement_noise.middleRows(0, 3) *= 0.01;

measurement_noise.middleRows(3, 4) *= 0.001;

//加权平均的位姿。

Eigen::VectorXf mean(16);

mean.middleRows(0, 3) = pos;

mean.middleRows(3, 3).setZero();

mean.middleRows(6, 4) = Eigen::Vector4f(quat.w(), quat.x(), quat.y(), quat.z());

mean.middleRows(10, 3).setZero();

mean.middleRows(13, 3).setZero();

//初始化协方差

Eigen::MatrixXf cov = Eigen::MatrixXf::Identity(16, 16) * 0.01;

//声明posesystem。

PoseSystem system;

//初始化ukf

ukf.reset(new kkl::alg::UnscentedKalmanFilterX<float, PoseSystem>(system, 16, 6, 7, process_noise, measurement_noise, mean, cov));

}

pose_estimator->predict(预测)

另外在hdl_localization.cpp中用到的pose_estimator->predict等也在本文件进行了解释。

void predict(const ros::Time& stamp, const Eigen::Vector3f& acc, const Eigen::Vector3f& gyro) {

//当前与初始化的时间间隔小于设置的时间,或prev_stamp(上次更新时间)为0(未更新),或prev_stamp等于当前时间。则更新prev_stamp并跳出。

if((stamp - init_stamp).toSec() < cool_time_duration || prev_stamp.is_zero() || prev_stamp == stamp) {

prev_stamp = stamp;

return;

}

//正常处理,首先计算dt,更新prev_stamp。

double dt = (stamp - prev_stamp).toSec();

prev_stamp = stamp;

//对ukf设置噪声和处理间隔。

ukf->setProcessNoiseCov(process_noise * dt);

ukf->system.dt = dt;

//利用imu数据定义控制量

Eigen::VectorXf control(6);

control.head<3>() = acc;

control.tail<3>() = gyro;

//利用ukf预测。

ukf->predict(control);

}

pose_estimator->correct(观测)

pcl::PointCloud<PointT>::Ptr correct(const pcl::PointCloud<PointT>::ConstPtr& cloud) {

//单位阵来初始化

Eigen::Matrix4f init_guess = Eigen::Matrix4f::Identity();

init_guess.block<3, 3>(0, 0) = quat().toRotationMatrix();

init_guess.block<3, 1>(0, 3) = pos();

//点云的配准。ndt

pcl::PointCloud<PointT>::Ptr aligned(new pcl::PointCloud<PointT>());

registration->setInputSource(cloud);

registration->align(*aligned, init_guess);

//读取数据

Eigen::Matrix4f trans = registration->getFinalTransformation();

Eigen::Vector3f p = trans.block<3, 1>(0, 3);

Eigen::Quaternionf q(trans.block<3, 3>(0, 0));

if(quat().coeffs().dot(q.coeffs()) < 0.0f) {

q.coeffs() *= -1.0f;

}

//填充至观测矩阵observation

Eigen::VectorXf observation(7);

observation.middleRows(0, 3) = p;

observation.middleRows(3, 4) = Eigen::Vector4f(q.w(), q.x(), q.y(), q.z());

//ukf更新

ukf->correct(observation);

return aligned;

}

----------还有一些简单的函数不再说明了。直接怼上,很好理解。-----------

/* getters */

Eigen::Vector3f pos() const {

return Eigen::Vector3f(ukf->mean[0], ukf->mean[1], ukf->mean[2]);

}

Eigen::Vector3f vel() const {

return Eigen::Vector3f(ukf->mean[3], ukf->mean[4], ukf->mean[5]);

}

Eigen::Quaternionf quat() const {

return Eigen::Quaternionf(ukf->mean[6], ukf->mean[7], ukf->mean[8], ukf->mean[9]).normalized();

}

Eigen::Matrix4f matrix() const {

Eigen::Matrix4f m = Eigen::Matrix4f::Identity();

m.block<3, 3>(0, 0) = quat().toRotationMatrix();

m.block<3, 1>(0, 3) = pos();

return m;

}

hdl_localization/pose_system.hpp

本文件定义了完成了类PoseSystem的实现。主要是实现了ukf里 矩阵f(定义了系统)和h(观测)代码实现。这是要扔到ukf中去的。

系统状态量16位,分别是位姿(3)、速度(3)、四元数(4)、加速度偏差(3)、陀螺仪偏差(3)。另还有6位控制量,加速度(3)和陀螺仪(3)。

| 状态量 | 表示 |

|---|---|

| 位置 | p t = [ p x , p y , p z ] T pt = [p x , p y , p z ] T pt=[px,py,pz]T |

| 速度 | v t = [ v x , v y , v z ] T vt = [v x , v y , v z ] T vt=[vx,vy,vz]T |

| 四元数 | q t = [ q w , q x , q y , q z ] T qt = [q w , q x , q y , q z ] T qt=[qw,qx,qy,qz]T |

| 加速度偏差 | a c c ? b i a s = [ a c c ? b i a s x , a c c ? b i a s y , a c c ? b i a s z ] T acc_-bias = [a c c_-b i a s _x , a c c_-b i a s_y , a c c_-b i a s_z ] T acc??bias=[acc??biasx?,acc??biasy?,acc??biasz?]T |

| 陀螺仪偏差 | g y r o ? b i a s = [ g y r o ? b i a s x , g y r o ? b i a s y , g y r o ? b i a s z ] T gyro_-bias = [g y r o _- b i a s_x , g y r o _- b i a s_y , g y r o _- b i a s_z ] T gyro??bias=[gyro??biasx?,gyro??biasy?,gyro??biasz?]T |

| 控制量 | 表示 |

|---|---|

| 加速度 | r a w ? a c c = [ r a w ? a c c x , r a w ? a c c y , r a w ? a c c z ] T raw_-acc = [r a w _ -a c c_x , r a w _- a c c _y , r a w _ -a c c _z ] T raw??acc=[raw??accx?,raw??accy?,raw??accz?]T |

| 陀螺仪 | r a w ? g y r o = [ g y r o ? b i a s x , g y r o ? b i a s y , g y r o ? b i a s z ] T raw_-gyro = [g y r o _- b i a s_x , g y r o _ -b i a s_ y , g y r o _- b i a s_z ] T raw??gyro=[gyro??biasx?,gyro??biasy?,gyro??biasz?]T |

f(系统状态方程)

VectorXt f(const VectorXt& state, const VectorXt& control) const {

VectorXt next_state(16);

Vector3t pt = state.middleRows(0, 3); //位置

Vector3t vt = state.middleRows(3, 3); //速度

Quaterniont qt(state[6], state[7], state[8], state[9]);

qt.normalize(); // 归一化四元数

Vector3t acc_bias = state.middleRows(10, 3); //加速度偏差

Vector3t gyro_bias = state.middleRows(13, 3); //陀螺仪偏差

Vector3t raw_acc = control.middleRows(0, 3); //加速度控制

Vector3t raw_gyro = control.middleRows(3, 3); //陀螺仪控制

//下一时刻状态

// position 。 首先更新位置

next_state.middleRows(0, 3) = pt + vt * dt; //

// velocity。 更新速度,实际上并没有利用加速度矫正速度,原因是认为加速度噪声较大,对最终的精度并没有贡献。

Vector3t g(0.0f, 0.0f, -9.80665f);

Vector3t acc_ = raw_acc - acc_bias;

Vector3t acc = qt * acc_;

next_state.middleRows(3, 3) = vt; // + (acc - g) * dt; // acceleration didn't contribute to accuracy due to large noise

// orientation。首先完成了陀螺仪的增量计算并归一化(直接转化为四元数形式),将其转换为下一时刻的四元数。

Vector3t gyro = raw_gyro - gyro_bias;

Quaterniont dq(1, gyro[0] * dt / 2, gyro[1] * dt / 2, gyro[2] * dt / 2);

dq.normalize();

Quaterniont qt_ = (qt * dq).normalized();

next_state.middleRows(6, 4) << qt_.w(), qt_.x(), qt_.y(), qt_.z();

//将当前控制量传入下一时刻的状态向量。认为加速度和角速度上偏差不变

next_state.middleRows(10, 3) = state.middleRows(10, 3); // constant bias on acceleration

next_state.middleRows(13, 3) = state.middleRows(13, 3); // constant bias on angular velocity

return next_state;

}

h (观测方程)

观测方程直接将当前输入状态量作为观测量。这里的输入是在更新阶段(correct)生成的带误差方差的(error variances)的扩展状态空间下的(extended state space)状态量,也就是ext_sigma_points。

// observation equation

VectorXt h(const VectorXt& state) const {

VectorXt observation(7);

observation.middleRows(0, 3) = state.middleRows(0, 3);

observation.middleRows(3, 4) = state.middleRows(6, 4).normalized();

return observation;

}

kkl/unscented_kalman_filter.hpp

本文件中主要的函数也就构造函数、预测、矫正、计算sigma点、使协方差矩阵正有限(不太清楚)五个。

UnscentedKalmanFilterX2

首先,构造函数。可以看到输入了一系列包括待估计系统、状态向量维度、输入维度、观测维度、两个噪声、参数等等。完成了初始化操作。

UnscentedKalmanFilterX(const System& system, int state_dim, int input_dim, int measurement_dim, const MatrixXt& process_noise, const MatrixXt& measurement_noise, const VectorXt& mean, const MatrixXt& cov)

: state_dim(state_dim),

input_dim(input_dim),

measurement_dim(measurement_dim),

N(state_dim),

M(input_dim),

K(measurement_dim),

S(2 * state_dim + 1),

mean(mean),

cov(cov),

system(system),

process_noise(process_noise),

measurement_noise(measurement_noise),

lambda(1),

normal_dist(0.0, 1.0)

{

//设置长度。

weights.resize(S, 1);

sigma_points.resize(S, N);

ext_weights.resize(2 * (N + K) + 1, 1);

ext_sigma_points.resize(2 * (N + K) + 1, N + K);

expected_measurements.resize(2 * (N + K) + 1, K);

// initialize weights for unscented filter

weights[0] = lambda / (N + lambda);

for (int i = 1; i < 2 * N + 1; i++) {

weights[i] = 1 / (2 * (N + lambda));

}

// weights for extended state space which includes error variances

ext_weights[0] = lambda / (N + K + lambda);

for (int i = 1; i < 2 * (N + K) + 1; i++) {

ext_weights[i] = 1 / (2 * (N + K + lambda));

}

}

ukf->predict

通过pose_estimator->predict调用。

void predict(const VectorXt& control) {

// calculate sigma points. ukf的sigma点

ensurePositiveFinite(cov);

computeSigmaPoints(mean, cov, sigma_points);

//sigma_points更新。用在posesystem中定义的f函数来进行。

for (int i = 0; i < S; i++) {

sigma_points.row(i) = system.f(sigma_points.row(i), control);

}

/*----至此,sigma_points里存储的就是当前时刻的由ukf输出的系统状态。-----*/

//过程噪声,即ukf中的矩阵R

const auto& R = process_noise;

// unscented transform。定义当前的平均状态和协方差矩阵,并设置为0矩阵。

VectorXt mean_pred(mean.size());

MatrixXt cov_pred(cov.rows(), cov.cols());

mean_pred.setZero();

cov_pred.setZero();

//加权平均,预测状态

for (int i = 0; i < S; i++) {

mean_pred += weights[i] * sigma_points.row(i);

}

//根据状态预测协方差。

for (int i = 0; i < S; i++) {

VectorXt diff = sigma_points.row(i).transpose() - mean_pred;

cov_pred += weights[i] * diff * diff.transpose();

}

//附加过程噪声R,在pose_estimator中给出初值

cov_pred += R;

//更新mean和cov

mean = mean_pred;

cov = cov_pred;

}

ukf->correct

通过pose_estimator->correct调用。

void correct(const VectorXt& measurement) {

//N-状态方程维度。K-观测维度

// create extended state space which includes error variances

VectorXt ext_mean_pred = VectorXt::Zero(N + K, 1);

MatrixXt ext_cov_pred = MatrixXt::Zero(N + K, N + K);

//左上角N行1列

ext_mean_pred.topLeftCorner(N, 1) = VectorXt(mean);

//左上角N行N列

ext_cov_pred.topLeftCorner(N, N) = MatrixXt(cov);

//右下角K行K列。初始化为在pose_estimator输入的噪声。位置噪声0.01,四元数0.001

ext_cov_pred.bottomRightCorner(K, K) = measurement_noise;

/*---------------- 经过以上操作,现在扩展状态变量前N项为mean,扩展协方差左上角为N*N的cov,右下角为K*K的观测噪声--------------*/

//验证并计算

ensurePositiveFinite(ext_cov_pred);

//利用扩展状态空间的参数计算sigma点

computeSigmaPoints(ext_mean_pred, ext_cov_pred, ext_sigma_points);

// unscented transform

//这里使用了 ukf 的h 函数来计算观测。

//ext_sigma_points、expected_measurements是(2 * (N + K) + 1, K)的矩阵

//没太看明白 TODO

//取左上角前N个量,加上右下角K个量。

expected_measurements.setZero();

for (int i = 0; i < ext_sigma_points.rows(); i++) {

expected_measurements.row(i) = system.h(ext_sigma_points.row(i).transpose().topLeftCorner(N, 1));

expected_measurements.row(i) += VectorXt(ext_sigma_points.row(i).transpose().bottomRightCorner(K, 1));

}

//加权平均。同predict函数相似。

VectorXt expected_measurement_mean = VectorXt::Zero(K);

for (int i = 0; i < ext_sigma_points.rows(); i++) {

expected_measurement_mean += ext_weights[i] * expected_measurements.row(i);

}

MatrixXt expected_measurement_cov = MatrixXt::Zero(K, K);

for (int i = 0; i < ext_sigma_points.rows(); i++) {

VectorXt diff = expected_measurements.row(i).transpose() - expected_measurement_mean;

expected_measurement_cov += ext_weights[i] * diff * diff.transpose();

}

// calculated transformed covariance

//转换方差。用于计算sigama,进而计算卡尔曼增益

MatrixXt sigma = MatrixXt::Zero(N + K, K);

for (int i = 0; i < ext_sigma_points.rows(); i++) {

auto diffA = (ext_sigma_points.row(i).transpose() - ext_mean_pred);

auto diffB = (expected_measurements.row(i).transpose() - expected_measurement_mean);

sigma += ext_weights[i] * (diffA * diffB.transpose());

}

kalman_gain = sigma * expected_measurement_cov.inverse();

const auto& K = kalman_gain;

//更新最后的ukf。

VectorXt ext_mean = ext_mean_pred + K * (measurement - expected_measurement_mean);

MatrixXt ext_cov = ext_cov_pred - K * expected_measurement_cov * K.transpose();

mean = ext_mean.topLeftCorner(N, 1);

cov = ext_cov.topLeftCorner(N, N);

}

computeSigmaPoints

通过mean和cov计算sigma点。思路是将cov做Cholesky分解,用下三角矩阵L对mean做处理。得到一系列sigma_points.

void computeSigmaPoints(const VectorXt& mean, const MatrixXt& cov, MatrixXt& sigma_points) {

const int n = mean.size();

assert(cov.rows() == n && cov.cols() == n);

//llt分解。求Cholesky分解A=LL^*=U^*U。L是下三角矩阵

Eigen::LLT<MatrixXt> llt;

llt.compute((n + lambda) * cov);

MatrixXt l = llt.matrixL();

//mean是列向量。这里会自动转置处理。

sigma_points.row(0) = mean;

for (int i = 0; i < n; i++) {

sigma_points.row(1 + i * 2) = mean + l.col(i); //奇数1357

sigma_points.row(1 + i * 2 + 1) = mean - l.col(i); //偶数2468

}

}

ensurePositiveFinite

保证协方差的正有限。未实际应用。

void ensurePositiveFinite(MatrixXt& cov) {

return;

//就到这里了,在上面就return掉了。

const double eps = 1e-9;

Eigen::EigenSolver<MatrixXt> solver(cov);

MatrixXt D = solver.pseudoEigenvalueMatrix(); //特征值

MatrixXt V = solver.pseudoEigenvectors(); //特征向量

for (int i = 0; i < D.rows(); i++) {

if (D(i, i) < eps) {

D(i, i) = eps;

}

}

cov = V * D * V.inverse();

}