Paper: https://arxiv.org/abs/2201.00814

GitHub: https://github.com/Arnav0400/ViT-Slim

Methods

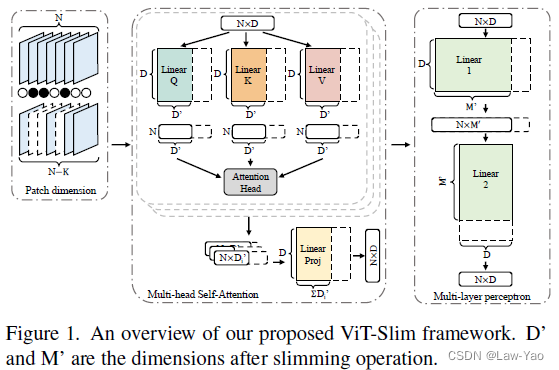

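ViT Slimming combines structure search with patch selection. On one hand it achieves multi-dimensional, multi-scale structural compression; on the other it reduces the length redundancy of patches (tokens), so both the parameter count and the compute are cut effectively. Concretely, a soft mask is defined for each tensor flowing through the ViT, the two are multiplied during the forward computation, and an L1 regularization term on the soft masks is added to the loss function. The masks form a set of mask vectors, each of which corresponds to one intermediate tensor.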
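A masked objective of this kind can be sketched as below. The notation is an assumption chosen for illustration and may differ from the paper's: $W$ for the network weights, $\mathcal{Z}$ for the set of mask vectors, $z_i$ for the mask paired with intermediate tensor $t_i$, and $\lambda$ for the regularization weight.

```latex
\min_{W,\,\mathcal{Z}} \;
  \mathcal{L}\bigl(f(x;\, W, \mathcal{Z}),\, y\bigr)
  + \lambda \sum_{z_i \in \mathcal{Z}} \lVert z_i \rVert_1,
\qquad
\hat{t}_i = z_i \odot t_i
```

The L1 term pushes mask entries toward zero, so entries that stay large after training mark the dimensions worth keeping.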

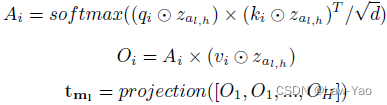

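- Structure search: first, a differentiable soft mask is introduced into MHSA, applying L1 sparsification to the feature size along the attention-head dimension (sparsification of the number of heads can be constructed in the same way). Each head h of layer l has its own soft mask. Second, a differentiable soft mask is introduced into the FFN, applying L1 sparsification to the intermediate size along the FFN dimension, again with a corresponding soft mask.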

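- Patch selection: a soft mask is defined on the input or output tensor of every Transformer layer to eliminate low-importance patches, and the mask values are first passed through Tanh to keep them from growing without bound. In addition, a patch eliminated in a shallow layer must also be eliminated in the deeper layers to avoid inconsistent computation.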
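The FFN-side mask from the structure-search bullet can be sketched as follows. This is a minimal illustration under assumptions, not the repository's implementation: the class name `MaskedMLP`, the method `l1_penalty`, the toy sizes, the stand-in task loss, and the weight `lam` are all hypothetical.

```python
import torch
import torch.nn as nn


class MaskedMLP(nn.Module):
    """Hypothetical FFN with a soft mask on the intermediate (hidden) dimension."""

    def __init__(self, dim, hidden_dim):
        super().__init__()
        self.fc1 = nn.Linear(dim, hidden_dim)
        self.act = nn.GELU()
        self.fc2 = nn.Linear(hidden_dim, dim)
        # One learnable gate per hidden unit, initialised to 1 (nothing masked).
        self.zeta = nn.Parameter(torch.ones(1, 1, hidden_dim))

    def forward(self, x):
        # x: (B, N, dim)
        h = self.act(self.fc1(x))
        h = h * self.zeta  # soft mask broadcast over batch and tokens
        return self.fc2(h)

    def l1_penalty(self):
        # L1 norm of the mask, to be added to the task loss.
        return self.zeta.abs().sum()


torch.manual_seed(0)
mlp = MaskedMLP(dim=8, hidden_dim=32)
x = torch.randn(2, 5, 8)
out = mlp(x)

task_loss = out.pow(2).mean()  # stand-in for the real task loss
lam = 1e-4                     # assumed regularisation weight
loss = task_loss + lam * mlp.l1_penalty()
loss.backward()                # gradients reach both the weights and the mask
```

Because the mask is an ordinary `nn.Parameter`, the same optimizer step updates it together with the layer weights.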

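The attention module below carries both the head/feature mask (`zeta`) and the patch mask (`patch_zeta`):

```python
import torch
import torch.nn as nn

# NOTE: `Attention` is the base attention class, which is not shown here. It is
# assumed to provide `qkv`, `proj`, `attn_drop`, `proj_drop`, `scale`,
# `num_heads` and `num_patches`.


class SparseAttention(Attention):
    def __init__(self, attn_module, head_search=False, uniform_search=False):
        super().__init__(attn_module.qkv.in_features, attn_module.num_heads, True,
                         attn_module.scale, attn_module.attn_drop.p, attn_module.proj_drop.p)
        self.is_searched = False
        # Per-head feature size d = C / H.
        self.num_gates = attn_module.qkv.in_features // self.num_heads
        if head_search:
            # One gate per head (sparsify the number of heads).
            self.zeta = nn.Parameter(torch.ones(1, 1, self.num_heads, 1, 1))
        elif uniform_search:
            # One gate per feature channel, shared by all heads.
            self.zeta = nn.Parameter(torch.ones(1, 1, 1, 1, self.num_gates))
        else:
            # One gate per feature channel of every head.
            self.zeta = nn.Parameter(torch.ones(1, 1, self.num_heads, 1, self.num_gates))
        self.searched_zeta = torch.ones_like(self.zeta)
        # Patch gates start at 3, so tanh(3) ≈ 0.995 keeps every patch at first.
        self.patch_zeta = nn.Parameter(torch.ones(1, self.num_patches, 1) * 3)
        self.searched_patch_zeta = torch.ones_like(self.patch_zeta)
        self.patch_activation = nn.Tanh()

    def forward(self, x):
        # Soft (tanh-bounded) patch mask while searching, hard mask afterwards.
        z_patch = self.searched_patch_zeta if self.is_searched else self.patch_activation(self.patch_zeta)
        x = x * z_patch  # out-of-place, so the caller's tensor is not modified
        B, N, C = x.shape
        z = self.searched_zeta if self.is_searched else self.zeta
        qkv = self.qkv(x).reshape(B, N, 3, self.num_heads, C // self.num_heads).permute(2, 0, 3, 1, 4)  # 3, B, H, N, d(C/H)
        qkv = qkv * z  # the same mask is applied to q, k and v
        q, k, v = qkv[0], qkv[1], qkv[2]  # make torchscript happy (cannot use tensor as tuple) # B, H, N, d

        attn = (q @ k.transpose(-2, -1)) * self.scale
        attn = attn.softmax(dim=-1)
        attn = self.attn_drop(attn)

        x = (attn @ v).transpose(1, 2).reshape(B, N, C)
        x = self.proj(x)
        x = self.proj_drop(x)
        return x

    def compress(self, threshold_attn):
        # Freeze the search result: gates below the threshold become 0, the rest 1.
        self.is_searched = True
        self.searched_zeta = (self.zeta >= threshold_attn).float()
        self.zeta.requires_grad = False

    def compress_patch(self, threshold_patch=None, zetas=None):
        # `zetas` is a NumPy array holding the final patch mask; `threshold_patch` is unused here.
        self.is_searched = True
        zetas = torch.from_numpy(zetas).reshape_as(self.patch_zeta)
        self.searched_patch_zeta = (zetas).float().to(self.zeta.device)
        self.patch_zeta.requires_grad = False
```

The differentiable soft masks are trained jointly with the network weights, and the weights are initialized from pre-trained parameters, so the overall time cost of search training is relatively low. Once search training finishes, the soft-mask values are ranked and the unimportant network weights or patches are removed, which completes the structure search and patch selection. After a particular slimmed structure has been extracted, additional re-training is needed to recover the model's accuracy.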
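The ranking step can be sketched in plain Python. This is a minimal illustration under assumptions, not the repository's code: `budget_threshold`, `binarize`, and `keep_ratio` are hypothetical names, and the toy mask values are made up. In practice the values could be read from a trained mask with `zeta.detach().flatten().tolist()`, and the resulting threshold is the kind of value that `compress(threshold_attn)` compares against.

```python
def budget_threshold(mask_values, keep_ratio):
    """Return the smallest mask value that is still kept under the given budget."""
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    ranked = sorted(mask_values, reverse=True)      # most important gates first
    keep = max(1, round(keep_ratio * len(ranked)))  # number of gates to keep
    return ranked[keep - 1]


def binarize(mask_values, threshold):
    """Hard 0/1 mask: keep entries whose soft-mask value reaches the threshold."""
    return [1.0 if v >= threshold else 0.0 for v in mask_values]


# Toy soft-mask values after search training (made-up numbers).
zeta = [0.9, 0.1, 0.5, 0.7, 0.05, 0.3]
thr = budget_threshold(zeta, keep_ratio=0.5)
hard = binarize(zeta, thr)
print(thr, hard)  # 0.5 [1.0, 0.0, 1.0, 1.0, 0.0, 0.0]
```

Ranking all gates of one kind together, rather than layer by layer, lets the budget decide how many dimensions each layer keeps. Tied values at the threshold are all kept, so the kept fraction can slightly exceed `keep_ratio`.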

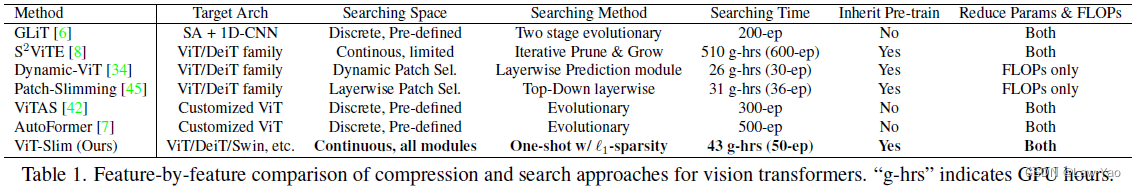

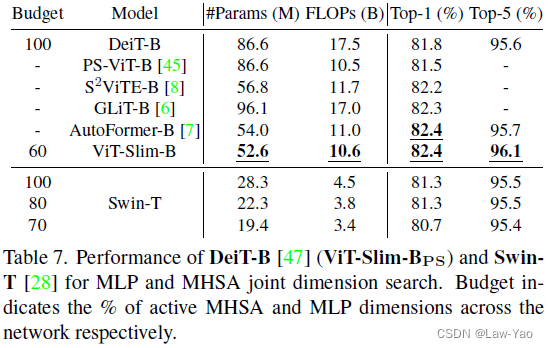

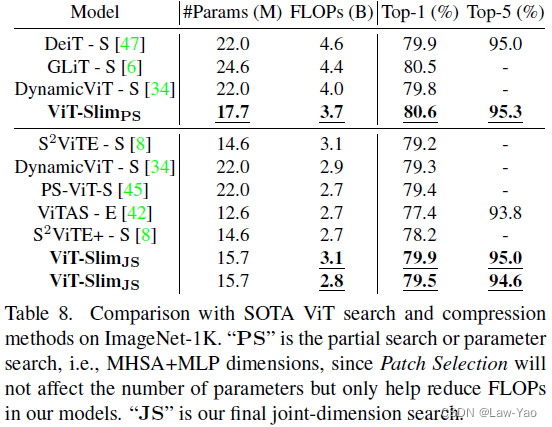

Experimental results

For further discussion of Transformer model compression and inference acceleration, see the following articles: