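# Tumor Prediction (AdaBoost)

【Experiment Content】

Use the AdaBoost algorithm to predict tumors on the Wisconsin Breast Cancer dataset.

【Experiment Requirements】

1. Load the dataset bundled with sklearn and explore it as a DataFrame.

2. Split the data into a training set and a test set, and check the average cancer rate of each.

3. Configure, train, predict with, and evaluate the models.

(1) Build a weak learner, a decision tree with a maximum depth of 2, then train, predict, and evaluate it.

(2) Next, build an AdaBoost ensemble classifier of 50 trees (step size 3); train, predict, and evaluate it.

Hint: increase the number of decision trees from 1 to 50 in steps of 3 and output the accuracy of each ensemble.

(3) Compare the performance of (2) with that of the weak learner.

4. Draw a line chart of accuracy, with the number of decision trees on the x-axis and accuracy on the y-axis.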

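AdaBoostClassifier parameters:

- base_estimator: the weak classifier. The default is a CART classification tree, DecisionTreeClassifier, initialized with max_depth=1 (a decision stump). scikit-learn 1.2 renamed this parameter to estimator, and base_estimator was removed in 1.4.

- algorithm: scikit-learn implements two AdaBoost classification algorithms, SAMME and SAMME.R. SAMME is discrete AdaBoost: each weak learner contributes its predicted class label. SAMME.R is Real AdaBoost: instead of discrete labels it uses real-valued class probability estimates, so the base_estimator must support probability prediction (predict_proba). SAMME.R typically converges faster than SAMME and was the default in the scikit-learn versions used here; newer releases have deprecated it in favor of SAMME.

- n_estimators: the maximum number of weak learners (boosting iterations), 50 by default; boosting stops early if the training data are fitted perfectly. In practice n_estimators is usually tuned together with learning_rate.

- learning_rate: the shrinkage factor $\nu$ applied to the weight of each weak classifier in the additive update $f_k(x) = f_{k-1}(x) + \nu \alpha_k G_k(x)$, where $G_k$ is the k-th weak classifier and $\alpha_k$ its weight. A smaller $\nu$ means more iterations are needed to reach the same fit. The default is 1, in which case $\nu$ has no effect. scikit-learn accepts any positive value, so a value above 1 (such as 3) is legal but enlarges rather than shrinks each weak classifier's contribution.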
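To make the roles of α_k and ν concrete, here is a minimal NumPy sketch of one round of classic discrete AdaBoost for labels in {-1, +1}: it computes the weighted error e_k, the weight α_k = ½·ln((1 - e_k)/e_k), the additive update f_k(x) = f_{k-1}(x) + ν·α_k·G_k(x), and the sample-weight update. The function `boosting_round` and the toy arrays are illustrative, not scikit-learn code; scikit-learn's SAMME uses α_k = ln((1 - e_k)/e_k) + ln(K - 1) instead (for K = 2 classes, twice the classic value) and differs in other details.

```python
import numpy as np

def boosting_round(w, y, g_pred, f_prev, nu=1.0):
    """One classic discrete AdaBoost round for labels y and predictions g_pred in {-1, +1}.

    w      : current sample weights (summing to 1)
    f_prev : current ensemble scores f_{k-1}(x_i)
    nu     : shrinkage factor (learning_rate)
    """
    miss = g_pred != y
    err = np.clip(np.sum(w[miss]), 1e-10, 1 - 1e-10)  # weighted error e_k, kept away from 0 and 1
    alpha = 0.5 * np.log((1.0 - err) / err)            # classic AdaBoost weight alpha_k
    f_new = f_prev + nu * alpha * g_pred                # f_k = f_{k-1} + nu * alpha_k * G_k
    w_new = w * np.exp(-nu * alpha * y * g_pred)        # raise weights of mistakes, lower hits
    w_new = w_new / w_new.sum()                         # normalize (divide by Z_k)
    return err, alpha, f_new, w_new

y = np.array([1, 1, -1, -1, 1])
g = np.array([1, -1, -1, -1, 1])   # the weak classifier misclassifies sample 1
w = np.full(5, 0.2)                # uniform initial weights
f0 = np.zeros(5)

err, alpha, f1, w1 = boosting_round(w, y, g, f0, nu=1.0)
print(round(err, 3), round(alpha, 3))   # weighted error 0.2, alpha = ln 2 ≈ 0.693
print(np.round(w1, 3))                  # the misclassified sample now holds half the total weight

_, _, f_half, _ = boosting_round(w, y, g, f0, nu=0.5)
print(np.round(f_half / f1, 3))         # nu = 0.5 halves this round's contribution to f
```

With ν = 1 the misclassified sample ends up carrying exactly half of the total weight, which is what forces the next weak learner to focus on it; a smaller ν softens both that reweighting and the step taken in f.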

import numpy as np

import pandas as pd  # needed for the DataFrame below

import matplotlib.pyplot as plt

from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import train_test_split

from sklearn import tree  # decision tree module

from sklearn.ensemble import AdaBoostClassifier  # AdaBoost ensemble

from sklearn.metrics import accuracy_score  # accuracy metric

Load the dataset bundled with sklearn and explore it as a DataFrame

cancers = load_breast_cancer()

df = pd.DataFrame(cancers.data, columns=cancers.feature_names)

df

|  | mean radius | mean texture | mean perimeter | mean area | mean smoothness | mean compactness | mean concavity | mean concave points | mean symmetry | mean fractal dimension | ... | worst radius | worst texture | worst perimeter | worst area | worst smoothness | worst compactness | worst concavity | worst concave points | worst symmetry | worst fractal dimension |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 17.99 | 10.38 | 122.80 | 1001.0 | 0.11840 | 0.27760 | 0.30010 | 0.14710 | 0.2419 | 0.07871 | ... | 25.380 | 17.33 | 184.60 | 2019.0 | 0.16220 | 0.66560 | 0.7119 | 0.2654 | 0.4601 | 0.11890 |
| 1 | 20.57 | 17.77 | 132.90 | 1326.0 | 0.08474 | 0.07864 | 0.08690 | 0.07017 | 0.1812 | 0.05667 | ... | 24.990 | 23.41 | 158.80 | 1956.0 | 0.12380 | 0.18660 | 0.2416 | 0.1860 | 0.2750 | 0.08902 |
| 2 | 19.69 | 21.25 | 130.00 | 1203.0 | 0.10960 | 0.15990 | 0.19740 | 0.12790 | 0.2069 | 0.05999 | ... | 23.570 | 25.53 | 152.50 | 1709.0 | 0.14440 | 0.42450 | 0.4504 | 0.2430 | 0.3613 | 0.08758 |
| 3 | 11.42 | 20.38 | 77.58 | 386.1 | 0.14250 | 0.28390 | 0.24140 | 0.10520 | 0.2597 | 0.09744 | ... | 14.910 | 26.50 | 98.87 | 567.7 | 0.20980 | 0.86630 | 0.6869 | 0.2575 | 0.6638 | 0.17300 |
| 4 | 20.29 | 14.34 | 135.10 | 1297.0 | 0.10030 | 0.13280 | 0.19800 | 0.10430 | 0.1809 | 0.05883 | ... | 22.540 | 16.67 | 152.20 | 1575.0 | 0.13740 | 0.20500 | 0.4000 | 0.1625 | 0.2364 | 0.07678 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 564 | 21.56 | 22.39 | 142.00 | 1479.0 | 0.11100 | 0.11590 | 0.24390 | 0.13890 | 0.1726 | 0.05623 | ... | 25.450 | 26.40 | 166.10 | 2027.0 | 0.14100 | 0.21130 | 0.4107 | 0.2216 | 0.2060 | 0.07115 |
| 565 | 20.13 | 28.25 | 131.20 | 1261.0 | 0.09780 | 0.10340 | 0.14400 | 0.09791 | 0.1752 | 0.05533 | ... | 23.690 | 38.25 | 155.00 | 1731.0 | 0.11660 | 0.19220 | 0.3215 | 0.1628 | 0.2572 | 0.06637 |
| 566 | 16.60 | 28.08 | 108.30 | 858.1 | 0.08455 | 0.10230 | 0.09251 | 0.05302 | 0.1590 | 0.05648 | ... | 18.980 | 34.12 | 126.70 | 1124.0 | 0.11390 | 0.30940 | 0.3403 | 0.1418 | 0.2218 | 0.07820 |
| 567 | 20.60 | 29.33 | 140.10 | 1265.0 | 0.11780 | 0.27700 | 0.35140 | 0.15200 | 0.2397 | 0.07016 | ... | 25.740 | 39.42 | 184.60 | 1821.0 | 0.16500 | 0.86810 | 0.9387 | 0.2650 | 0.4087 | 0.12400 |
| 568 | 7.76 | 24.54 | 47.92 | 181.0 | 0.05263 | 0.04362 | 0.00000 | 0.00000 | 0.1587 | 0.05884 | ... | 9.456 | 30.37 | 59.16 | 268.6 | 0.08996 | 0.06444 | 0.0000 | 0.0000 | 0.2871 | 0.07039 |

569 rows × 30 columns
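A few more DataFrame calls make the exploration more informative. The sketch below attaches the label as a readable column (the column name `diagnosis` is an illustrative choice) and checks shape, class balance and missing values; it relies on the dataset's encoding, in which target 0 is malignant and 1 is benign.

```python
import pandas as pd
from sklearn.datasets import load_breast_cancer

cancers = load_breast_cancer()
df = pd.DataFrame(cancers.data, columns=cancers.feature_names)
# Attach the label as a readable column: code 0 -> "malignant", code 1 -> "benign"
df["diagnosis"] = pd.Categorical.from_codes(cancers.target, cancers.target_names)

print(df.shape)                         # rows, columns (30 features + label)
print(df["diagnosis"].value_counts())   # class balance
print(df.isna().sum().sum())            # total number of missing values
print(df[["mean radius", "mean texture", "mean area"]].describe())
```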

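Split the data into training and test sets and check the average cancer rate of each. Note that in load_breast_cancer the label 1 means benign and 0 means malignant, so y.mean() below gives the share of benign samples; the malignant (cancer) rate is 100% minus the printed value (about 38% for train and 36% for test in this run).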

x_train, x_test, y_train, y_test = train_test_split(cancers.data, cancers.target, test_size=0.30)

print("train: {0:.2f}%".format(100 * y_train.mean()))

print("test: {0:.2f}%".format(100 * y_test.mean()))

train: 62.06%

test: 64.33%
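Because the split above is random (no `random_state`) and not stratified, the class rates drift between the two sets (62.06% vs 64.33%) and every later number changes on rerun. A sketch that fixes both and reports the malignant rate directly; `stratify=y` keeps class proportions equal, and `random_state=0` is an arbitrary seed chosen for illustration:

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split

X, y = load_breast_cancer(return_X_y=True)

# stratify=y preserves the benign/malignant ratio in both splits;
# random_state=0 (arbitrary) makes the split reproducible.
x_train, x_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=0)

# target 1 = benign, so the malignant (cancer) rate is 1 - mean
for name, labels in [("train", y_train), ("test", y_test)]:
    print("{}: malignant {:.2f}%, benign {:.2f}%".format(
        name, 100 * (1 - labels.mean()), 100 * labels.mean()))
```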

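Configure, train, predict with, and evaluate the models

Build a weak learner, a decision tree with a maximum depth of 2, then train, predict, and evaluate it.

tr = tree.DecisionTreeClassifier(max_depth=2)  # create the decision tree (weak learner)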

tr.fit(x_train, y_train)

DecisionTreeClassifier(max_depth=2)

predictions = tr.predict(x_test)

WeakLearnersAccuracy = accuracy_score(y_test, predictions)
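To see how "weak" the depth-2 learner is, it helps to print its rules and compare training and test accuracy. This sketch uses `sklearn.tree.export_text`; the stratified split and `random_state=0` are illustrative assumptions, so the numbers will differ from the run above.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, export_text
from sklearn.metrics import accuracy_score

data = load_breast_cancer()
x_train, x_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.30, stratify=data.target, random_state=0)

tr = DecisionTreeClassifier(max_depth=2, random_state=0)
tr.fit(x_train, y_train)

# A depth-2 tree asks at most two threshold questions per sample (at most 4 leaves)
rules = export_text(tr, feature_names=list(data.feature_names))
print(rules)
print("depth:", tr.get_depth(), "leaves:", tr.get_n_leaves())

train_acc = accuracy_score(y_train, tr.predict(x_train))
test_acc = accuracy_score(y_test, tr.predict(x_test))
print("weak learner train acc: {:.2%}, test acc: {:.2%}".format(train_acc, test_acc))
```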

Next, build an AdaBoost ensemble classifier of 50 trees (step size 3), then train, predict, and evaluate it.

clf = AdaBoostClassifier(base_estimator=tree.DecisionTreeClassifier(),n_estimators=50,learning_rate=3)

clf

AdaBoostClassifier(base_estimator=DecisionTreeClassifier(), learning_rate=3)

clf.fit(x_train, y_train)

AdaBoostClassifier(base_estimator=DecisionTreeClassifier(), learning_rate=3)

predictions = clf.predict(x_test)

StrongLearnersAccuracy = accuracy_score(y_test, predictions)

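Compare the performance of the strong learner with that of the weak learner

print("StrongLearnersAccuracy: {0:.2f}".format(StrongLearnersAccuracy))  # accuracy_score returns a fraction in [0, 1], so no % sign

print("WeakLearnersAccuracy: {0:.2f}".format(WeakLearnersAccuracy))

StrongLearnersAccuracy: 0.89

WeakLearnersAccuracy: 0.92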

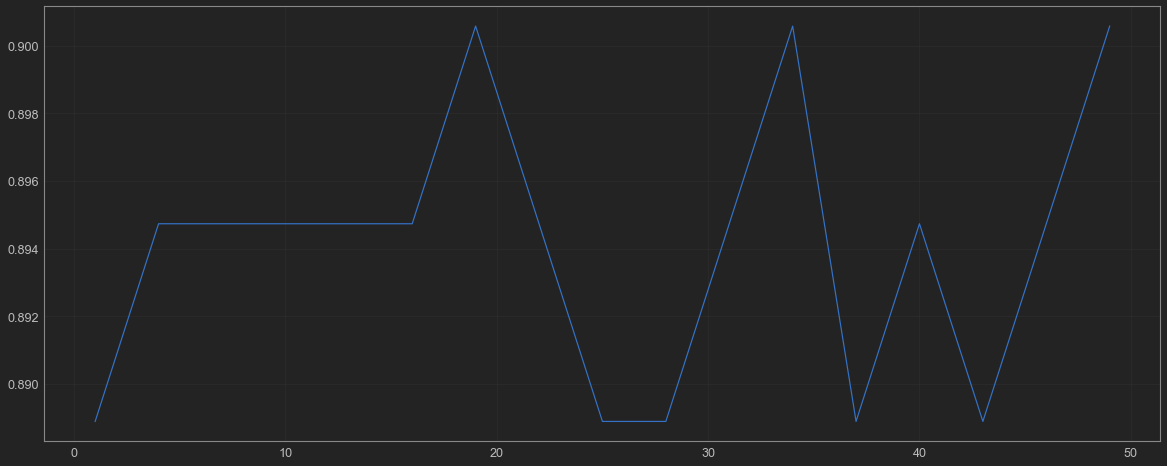
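The ensemble above passes an unrestricted `tree.DecisionTreeClassifier()` and `learning_rate=3`: it boosts fully grown trees rather than the depth-2 weak learner and reads the "step size 3" as a learning rate. Because an unpruned tree usually fits the training set perfectly, scikit-learn's AdaBoost will most likely stop after the first tree, which would explain why the "strong" learner does not beat the weak one here. A sketch that boosts the depth-2 weak learner instead, with the default learning rate; the stratified split and `random_state=0` are illustrative assumptions, so the numbers differ from the run above.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier
from sklearn.ensemble import AdaBoostClassifier
from sklearn.metrics import accuracy_score

X, y = load_breast_cancer(return_X_y=True)
x_train, x_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=0)

weak = DecisionTreeClassifier(max_depth=2, random_state=0).fit(x_train, y_train)

# Boost the same depth-2 tree up to 50 times. The weak learner is passed positionally
# because the keyword is `estimator` in scikit-learn >= 1.2 and `base_estimator` before.
strong = AdaBoostClassifier(DecisionTreeClassifier(max_depth=2),
                            n_estimators=50, random_state=0).fit(x_train, y_train)

weak_acc = accuracy_score(y_test, weak.predict(x_test))
strong_acc = accuracy_score(y_test, strong.predict(x_test))
print("weak learner:   {:.2%}".format(weak_acc))
print("AdaBoost (50):  {:.2%}".format(strong_acc))
print("trees actually fitted:", len(strong.estimators_))
```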

Plot the accuracy as a line chart, with the number of decision trees on the x-axis and accuracy on the y-axis

accuracys = []

for i in range(1, 50, 3):

    clf = AdaBoostClassifier(base_estimator=tree.DecisionTreeClassifier(), n_estimators=50, learning_rate=3)

    clf.fit(x_train, y_train)

    predictions = clf.predict(x_test)

    temp = accuracy_score(y_test, predictions)

    accuracys.append(temp)

accuracys= np.array(accuracys)

accuracys

array([0.88888889, 0.89473684, 0.89473684, 0.89473684, 0.89473684,
       0.89473684, 0.9005848 , 0.89473684, 0.88888889, 0.88888889,
       0.89473684, 0.9005848 , 0.88888889, 0.89473684, 0.88888889,
       0.89473684, 0.9005848 ])

plt.figure(figsize=(20, 8))

plt.grid(True, linestyle='-', alpha=0.5)

plt.plot(range(1, 50, 3), accuracys)

plt.xlabel('Number of decision trees')

plt.ylabel('Accuracy')

plt.show()
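Note that the loop above never uses `i`: every iteration refits the same `n_estimators=50` configuration, so the 17 accuracies reflect only run-to-run randomness in tree building, not the number of trees. A minimal sketch of the intended sweep, assuming the depth-2 weak learner as the base estimator, the default learning rate, and a fixed stratified split (`random_state=0` is arbitrary):

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier
from sklearn.ensemble import AdaBoostClassifier
from sklearn.metrics import accuracy_score

X, y = load_breast_cancer(return_X_y=True)
x_train, x_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=0)

n_trees = list(range(1, 50, 3))   # 1, 4, 7, ..., 49
accuracies = []
for n in n_trees:
    # the loop variable actually sets the ensemble size this time
    clf = AdaBoostClassifier(DecisionTreeClassifier(max_depth=2),
                             n_estimators=n, random_state=0)
    clf.fit(x_train, y_train)
    accuracies.append(accuracy_score(y_test, clf.predict(x_test)))
accuracies = np.array(accuracies)

for n, acc in zip(n_trees, accuracies):
    print("{:2d} trees: {:.4f}".format(n, acc))
```

The plotting cell above can be reused with `plt.plot(n_trees, accuracies)`. Alternatively, fitting one model with `n_estimators=49` and iterating over `clf.staged_score(x_test, y_test)` gives the test accuracy after each boosting stage from a single fit.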

【实验内容】

基于威斯康星乳腺癌数据集,使用AdaBoost算法实现肿瘤预测。

【实验要求】

1.加载sklearn自带的数据集,使用DataFrame形式探索数据。

2.划分训练集和测试集,检查训练集和测试集的平均癌症发生率。

3.配置模型,训练模型,模型预测,模型评估。

(1)构建一棵最大深度为2的决策树弱学习器,训练、预测、评估。

(2)再构建一个包含50棵树的AdaBoost集成分类器(步长为3),训练、预测、评估。

参考:将决策树的数量从1增加到50,步长为3。输出集成后的准确度。

(3)将(2)的性能与弱学习者进行比较。

4.绘制准确度的折线图,x轴为决策树的数量,y轴为准确度。

AdaBoostClassifier参数解释:

- base_estimator:弱分类器,默认是CART分类树:DecisionTressClassifier

- algorithm:在scikit-learn实现了两种AdaBoost分类算法,即SAMME和SAMME.R, SAMME就是AdaBoost算法,指Discrete。AdaBoost.SAMME.R指Real AdaBoost,返回值不再是离散的类型,而是一个表示概率的实数值。SAMME.R的迭代一般比SAMME快,默认算法是SAMME.R。因此,base_estimator必须使用支持概率预测的分类器。

- n_estimator:最大迭代次数,默认50。在实际调参过程中,常常将n_estimator和学习率learning_rate一起考虑。

- learning_rate:每个弱分类器的权重缩减系数v。fk(x)=fk?1?ak?Gk(x)f_k(x)=f_{k-1}a_kG_k(x)f k(x)=f k?1?a k?G k(x)。较小的v意味着更多的迭代次数,默认是1,也就是v不发挥作用。

from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

from sklearn import tree # 导入决策树包

from sklearn.ensemble import AdaBoostClassifier # 导入 AdaBoost 包

from sklearn.metrics import accuracy_score # 导入准确率评价指标

import numpy as np

from sklearn.metrics import accuracy_score # 导入准确率评价指标

加载sklearn自带的数据集,使用DataFrame形式探索数据

cancers = load_breast_cancer()

df = pd.DataFrame(cancers.data, columns=cancers.feature_names)

df

| mean radius | mean texture | mean perimeter | mean area | mean smoothness | mean compactness | mean concavity | mean concave points | mean symmetry | mean fractal dimension | ... | worst radius | worst texture | worst perimeter | worst area | worst smoothness | worst compactness | worst concavity | worst concave points | worst symmetry | worst fractal dimension | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 17.99 | 10.38 | 122.80 | 1001.0 | 0.11840 | 0.27760 | 0.30010 | 0.14710 | 0.2419 | 0.07871 | ... | 25.380 | 17.33 | 184.60 | 2019.0 | 0.16220 | 0.66560 | 0.7119 | 0.2654 | 0.4601 | 0.11890 |

| 1 | 20.57 | 17.77 | 132.90 | 1326.0 | 0.08474 | 0.07864 | 0.08690 | 0.07017 | 0.1812 | 0.05667 | ... | 24.990 | 23.41 | 158.80 | 1956.0 | 0.12380 | 0.18660 | 0.2416 | 0.1860 | 0.2750 | 0.08902 |

| 2 | 19.69 | 21.25 | 130.00 | 1203.0 | 0.10960 | 0.15990 | 0.19740 | 0.12790 | 0.2069 | 0.05999 | ... | 23.570 | 25.53 | 152.50 | 1709.0 | 0.14440 | 0.42450 | 0.4504 | 0.2430 | 0.3613 | 0.08758 |

| 3 | 11.42 | 20.38 | 77.58 | 386.1 | 0.14250 | 0.28390 | 0.24140 | 0.10520 | 0.2597 | 0.09744 | ... | 14.910 | 26.50 | 98.87 | 567.7 | 0.20980 | 0.86630 | 0.6869 | 0.2575 | 0.6638 | 0.17300 |

| 4 | 20.29 | 14.34 | 135.10 | 1297.0 | 0.10030 | 0.13280 | 0.19800 | 0.10430 | 0.1809 | 0.05883 | ... | 22.540 | 16.67 | 152.20 | 1575.0 | 0.13740 | 0.20500 | 0.4000 | 0.1625 | 0.2364 | 0.07678 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 564 | 21.56 | 22.39 | 142.00 | 1479.0 | 0.11100 | 0.11590 | 0.24390 | 0.13890 | 0.1726 | 0.05623 | ... | 25.450 | 26.40 | 166.10 | 2027.0 | 0.14100 | 0.21130 | 0.4107 | 0.2216 | 0.2060 | 0.07115 |

| 565 | 20.13 | 28.25 | 131.20 | 1261.0 | 0.09780 | 0.10340 | 0.14400 | 0.09791 | 0.1752 | 0.05533 | ... | 23.690 | 38.25 | 155.00 | 1731.0 | 0.11660 | 0.19220 | 0.3215 | 0.1628 | 0.2572 | 0.06637 |

| 566 | 16.60 | 28.08 | 108.30 | 858.1 | 0.08455 | 0.10230 | 0.09251 | 0.05302 | 0.1590 | 0.05648 | ... | 18.980 | 34.12 | 126.70 | 1124.0 | 0.11390 | 0.30940 | 0.3403 | 0.1418 | 0.2218 | 0.07820 |

| 567 | 20.60 | 29.33 | 140.10 | 1265.0 | 0.11780 | 0.27700 | 0.35140 | 0.15200 | 0.2397 | 0.07016 | ... | 25.740 | 39.42 | 184.60 | 1821.0 | 0.16500 | 0.86810 | 0.9387 | 0.2650 | 0.4087 | 0.12400 |

| 568 | 7.76 | 24.54 | 47.92 | 181.0 | 0.05263 | 0.04362 | 0.00000 | 0.00000 | 0.1587 | 0.05884 | ... | 9.456 | 30.37 | 59.16 | 268.6 | 0.08996 | 0.06444 | 0.0000 | 0.0000 | 0.2871 | 0.07039 |

569 rows × 30 columns

划分训练集和测试集,检查训练集和测试集的平均癌症发生率

x_train, x_test, y_train, y_test = train_test_split(cancers.data, cancers.target, test_size=0.30)

print("train: {0:.2f}%".format(100 * y_train.mean()))

print("test: {0:.2f}%".format(100 * y_test.mean()))

train: 62.06%

test: 64.33%

配置模型,训练模型,模型预测,模型评估

构建一棵最大深度为2的决策树弱学习器,训练、预测、评估。

tr = tree.DecisionTreeClassifier(max_depth=2) #加载决策树模型

tr.fit(x_train, y_train)

DecisionTreeClassifier(max_depth=2)

predictions = tr.predict(x_test)

WeakLearnersAccuracy = accuracy_score(y_test, predictions)

再构建一个包含50棵树的AdaBoost集成分类器(步长为3),训练、预测、评估。

clf = AdaBoostClassifier(base_estimator=tree.DecisionTreeClassifier(),n_estimators=50,learning_rate=3)

clf

AdaBoostClassifier(base_estimator=DecisionTreeClassifier(), learning_rate=3)

clf.fit(x_train, y_train)

AdaBoostClassifier(base_estimator=DecisionTreeClassifier(), learning_rate=3)

predictions = clf.predict(x_test)

StrongLearnersAccuracy = accuracy_score(y_test, predictions)

将强学习者的性能与弱学习者进行比较

print("StrongLearnersAccuracy: {0:.2f}%".format(StrongLearnersAccuracy))

print("WeakLearnersAccuracy: {0:.2f}%".format(WeakLearnersAccuracy))

StrongLearnersAccuracy: 0.89%

WeakLearnersAccuracy: 0.92%

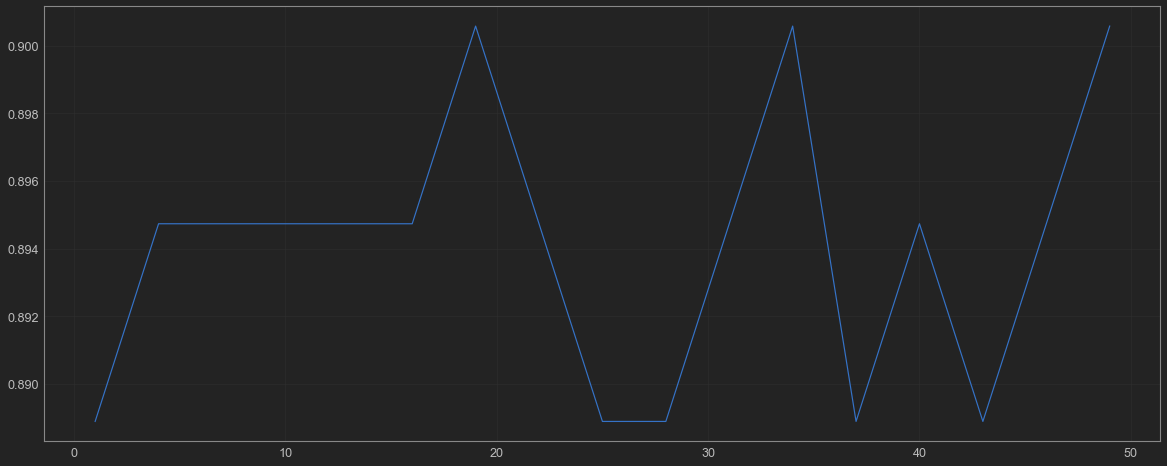

绘制准确度的折线图,x轴为决策树的数量,y轴为准确度

accuracys = []

for i in range(1,50,3):

clf = AdaBoostClassifier(base_estimator=tree.DecisionTreeClassifier(),n_estimators=50,learning_rate=3)

clf.fit(x_train, y_train)

predictions = clf.predict(x_test)

temp = accuracy_score(y_test, predictions)

accuracys.append(temp)

accuracys= np.array(accuracys)

accuracys

array([0.88888889, 0.89473684, 0.89473684, 0.89473684, 0.89473684,

0.89473684, 0.9005848 , 0.89473684, 0.88888889, 0.88888889,

0.89473684, 0.9005848 , 0.88888889, 0.89473684, 0.88888889,

0.89473684, 0.9005848 ])

plt.figure(figsize=(20,8))

plt.grid(True,linestyle='-',alpha=0.5)

plt.plot(range(1,50,3),accuracys)

plt.show()