Fast and accurate: pedestrian (human body) detection, face detection and face landmark detection (C++/Android)


1. Introduction

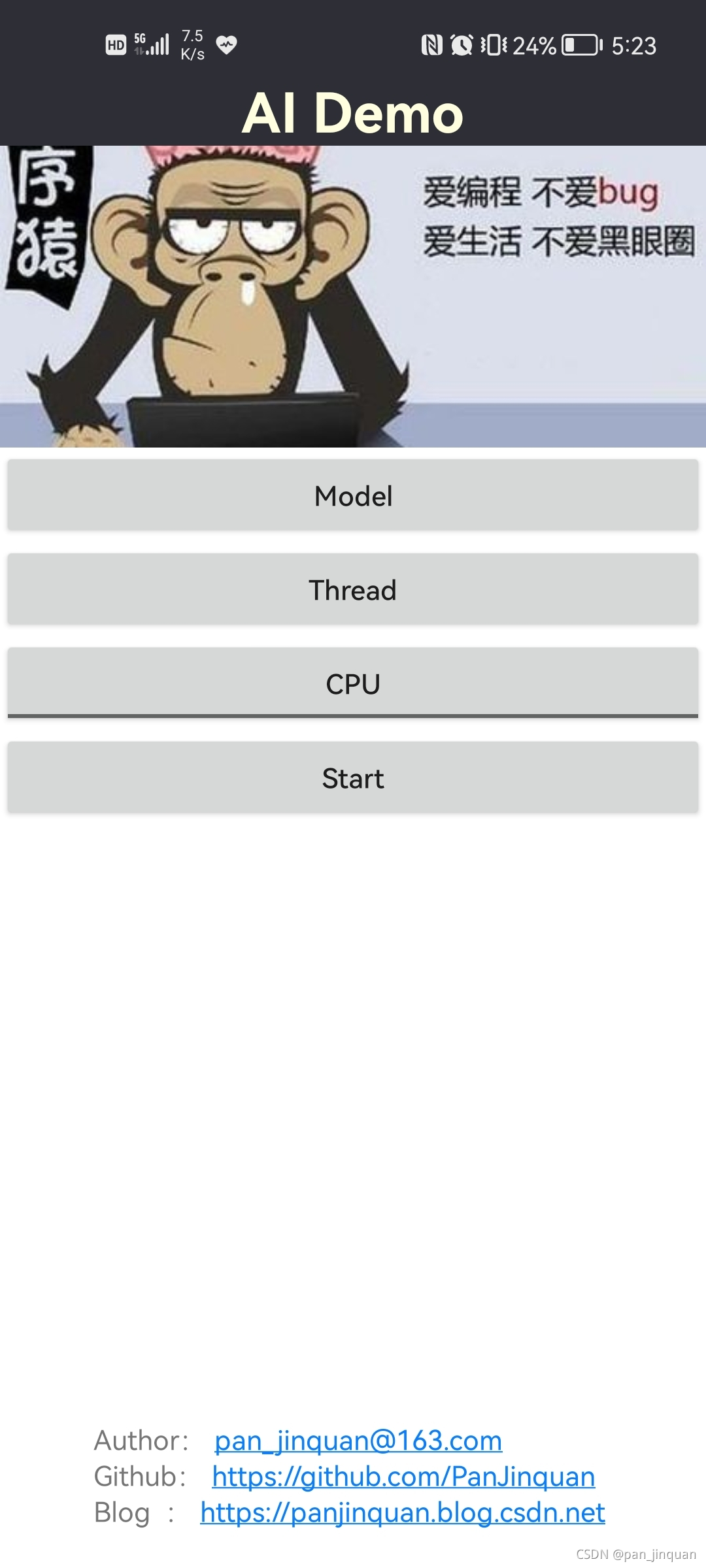

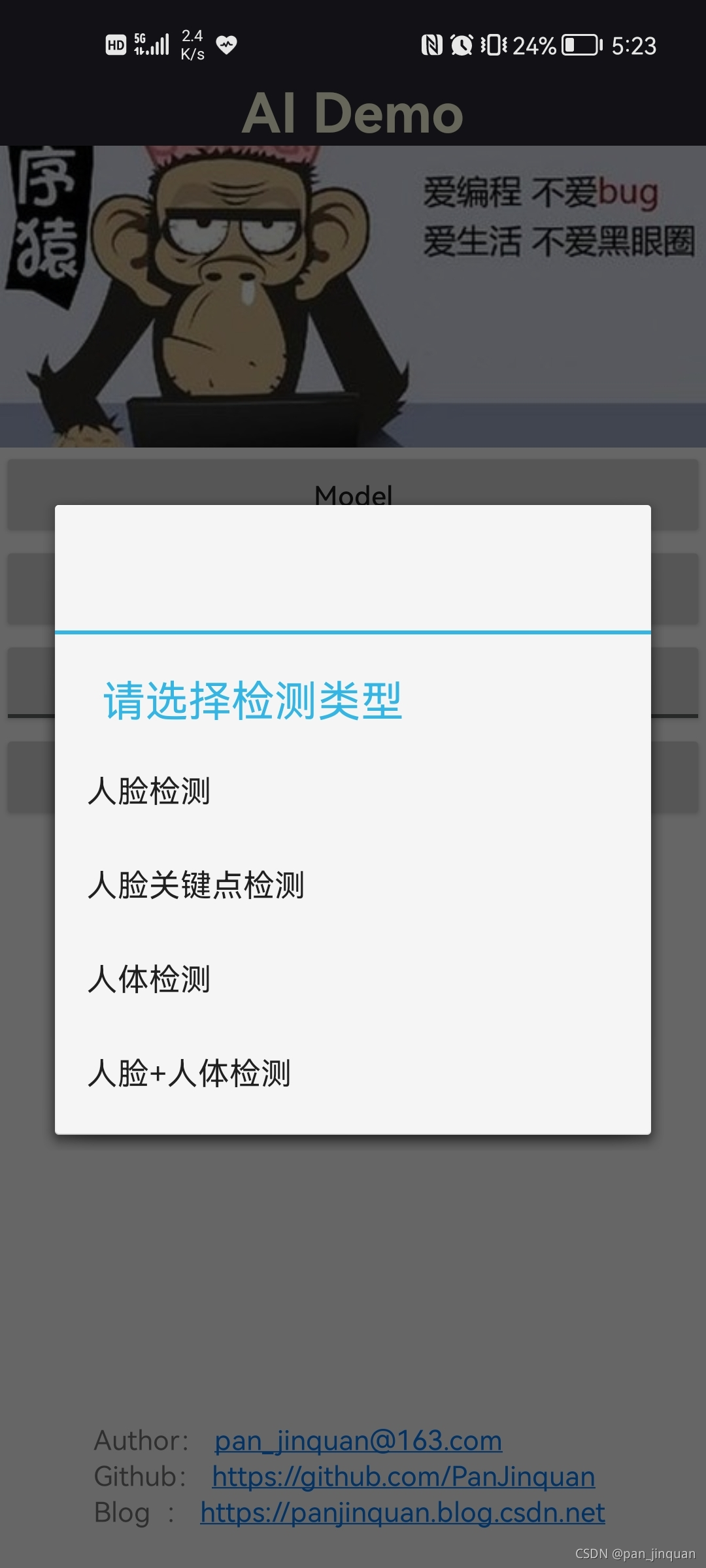

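To meet the needs of face and human body detection, I developed a lightweight, high-accuracy, real-time face/human body detection Android demo. Its main features are: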

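- Face detection model

- Face detection plus face landmark detection model (5 facial landmarks)

- Human body (pedestrian) detection model

- Simultaneous face and human body detection model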

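All models are implemented in C++ with TNN as the inference framework, and the Android app calls them through a JNI interface. Every model runs in real time on an ordinary Android phone, on both CPU and GPU (about 25 ms per frame on CPU and about 15 ms per frame on GPU).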
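The timings above are per-frame latencies: 25 ms corresponds to 40 FPS and 15 ms to roughly 67 FPS. The sketch below shows one way such numbers can be measured; `fps_from_ms` and `average_latency_ms` are hypothetical helpers, not part of the demo, and excluding a few warm-up calls is an assumption about good benchmarking practice rather than how the figures above were obtained.

```cpp
#include <cassert>
#include <chrono>
#include <functional>

// Convert a per-frame latency in milliseconds to frames per second.
double fps_from_ms(double ms_per_frame) {
    assert(ms_per_frame > 0.0);
    return 1000.0 / ms_per_frame;
}

// Average wall-clock latency (in ms) of `run_once` over `runs` timed calls.
// The first `warmup` calls are not timed, because early calls may include
// one-off setup cost (for example GPU kernel compilation or memory allocation).
double average_latency_ms(const std::function<void()> &run_once, int runs, int warmup = 3) {
    assert(runs > 0 && warmup >= 0);
    for (int i = 0; i < warmup; ++i) {
        run_once();
    }
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < runs; ++i) {
        run_once();
    }
    const auto end = std::chrono::steady_clock::now();
    const std::chrono::duration<double, std::milli> elapsed = end - start;
    return elapsed.count() / runs;
}
```

In the demo, the callable would wrap a call such as `detector->detect(bgr, &resultInfo, score_thresh, iou_thresh)` from the JNI code, run once on CPU and once on GPU.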

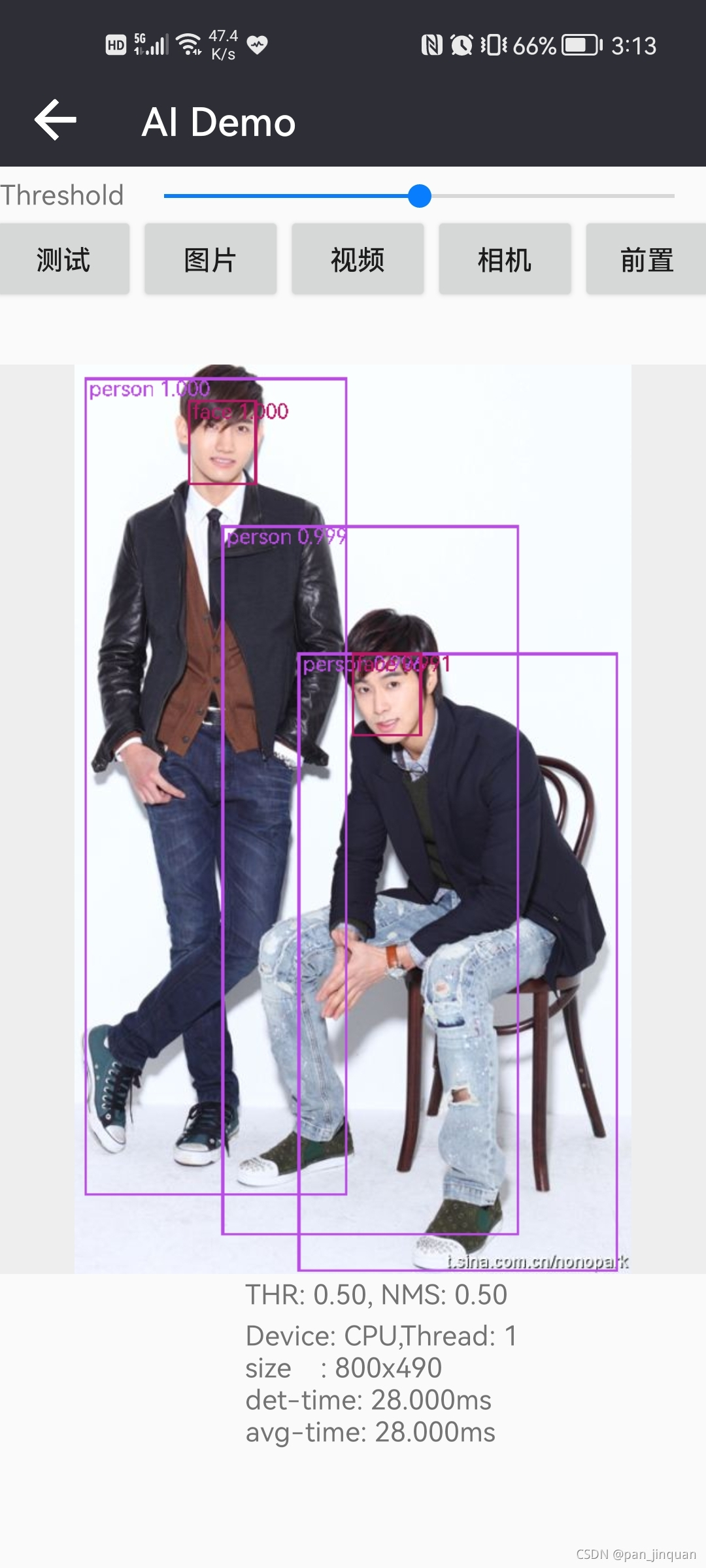

First, some results:

| Face landmark detection | Human body detection | Face + body detection |
| --- | --- | --- |
| (image) | (image) | (image) |

Try the Android Demo APP for free:

Link: https://download.csdn.net/download/guyuealian/25924076

Android Demo APP source code download:

[Please respect the original work and credit the source when reposting] https://panjinquan.blog.csdn.net/article/details/125348189

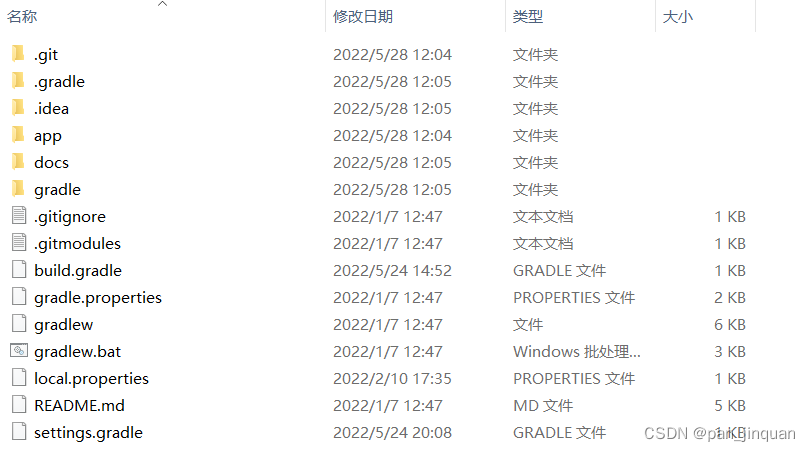

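2. Project description

(1) Datasets

All face and human body data come from open-source datasets available online: WiderFace is used to train face detection and face landmark detection, and the person label of COCO is used to train human body detection.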

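| Dataset | Description |
| --- | --- |
| WiderFace | WIDER FACE is a benchmark dataset for face detection. It contains 32,203 images and 393,703 labeled faces, of which 158,989 are in the training set and 39,496 in the validation set. Each subset has three difficulty levels: Easy, Medium and Hard. The faces vary widely in scale, pose, illumination, expression and occlusion. |
| COCO | COCO is a large-scale dataset for object detection, semantic segmentation and image captioning. It has more than 330K images (220K of them labeled), 1.5 million object instances, 80 object categories (person, car, elephant, etc.), 91 stuff categories (grass, wall, sky, etc.), five captions per image, and 250,000 people annotated with keypoints. |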
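Using only the COCO person label means filtering the instance annotations down to category id 1 ("person") before training a single-class body detector. A minimal sketch of that filter, assuming the annotations have already been parsed from the `instances_*.json` file into a simplified struct; `CocoAnnotation` and `filter_person` are hypothetical names, and dropping crowd regions is an optional choice made here, not something the post states.

```cpp
#include <cassert>
#include <vector>

// Hypothetical in-memory form of one COCO instance annotation (only the fields
// needed here; real annotations are JSON objects in instances_*.json).
struct CocoAnnotation {
    int image_id;
    int category_id;  // COCO category id; "person" has id 1
    float bbox[4];    // COCO box format: [x, y, width, height] in pixels
    int iscrowd;      // 1 marks a crowd region covering several people
};

constexpr int kCocoPersonId = 1;

// Keep only "person" annotations to build a single-class body-detection set.
// Dropping crowd regions is an assumption, not necessarily what the demo did.
std::vector<CocoAnnotation> filter_person(const std::vector<CocoAnnotation> &anns,
                                          bool drop_crowd = true) {
    std::vector<CocoAnnotation> persons;
    for (const auto &a : anns) {
        if (a.category_id != kCocoPersonId) continue;
        if (drop_crowd && a.iscrowd != 0) continue;
        assert(a.bbox[2] >= 0.0f && a.bbox[3] >= 0.0f);  // width/height must be non-negative
        persons.push_back(a);
    }
    return persons;
}
```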

(2) Model training

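For the training code, see https://github.com/Linzaer/Ultra-Light-Fast-Generic-Face-Detector-1MB, a lightweight face detection model simplified from SSD. The whole model is only about 1.7 MB and runs in real time on ordinary Android phones.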
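As an SSD-style detector, the model predicts offsets relative to a fixed grid of prior (anchor) boxes. The sketch below generates such priors, assuming the configuration the Ultra-Light-Fast-Generic-Face-Detector repository uses for a 320×240 input (strides 8/16/32/64 and minimum box sizes {10,16,24}, {32,48}, {64,96}, {128,192,256}); the optimized model in this demo may use different values, and `generate_priors` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// One prior (anchor) box, normalized to [0, 1], in center-size form.
struct Prior {
    float cx, cy, w, h;
};

static float clip01(float v) { return std::min(1.0f, std::max(0.0f, v)); }

// Generate SSD-style priors: for every cell of every feature map, place one
// prior per configured minimum box size, centered on the cell.
// Feature-map size per level = ceil(input_size / stride).
std::vector<Prior> generate_priors(int in_w, int in_h,
                                   const std::vector<int> &strides,
                                   const std::vector<std::vector<float>> &min_boxes) {
    assert(strides.size() == min_boxes.size());
    std::vector<Prior> priors;
    for (size_t level = 0; level < strides.size(); ++level) {
        const float stride = static_cast<float>(strides[level]);
        const int fm_w = static_cast<int>(std::ceil(in_w / stride));
        const int fm_h = static_cast<int>(std::ceil(in_h / stride));
        for (int y = 0; y < fm_h; ++y) {
            for (int x = 0; x < fm_w; ++x) {
                // Cell center, normalized by the input size.
                const float cx = (x + 0.5f) * stride / in_w;
                const float cy = (y + 0.5f) * stride / in_h;
                for (float box : min_boxes[level]) {
                    // Clip every component to the normalized image range.
                    priors.push_back({clip01(cx), clip01(cy),
                                      clip01(box / in_w), clip01(box / in_h)});
                }
            }
        }
    }
    return priors;
}
```

With these assumed settings the grid has 40×30×3 + 20×15×2 + 10×8×2 + 5×4×3 = 4420 priors, one score/box prediction each.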

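The original code is trained on the WiderFace face dataset and supports face detection only. I optimized it to improve face detection accuracy and added face landmark detection and human body detection, training on the WiderFace, VOC and COCO datasets.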
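The post does not describe how the landmark branch is encoded. One common way to extend an SSD head with 5 facial landmarks is RetinaFace-style decoding, where each point is an offset from the prior center. The sketch below assumes that scheme together with the center/size variances 0.1/0.2 used by the Ultra-Light repository; it is an illustration, not the author's method, and `decode_box`/`decode_landmarks` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Prior {
    float cx, cy, w, h;  // normalized center-size prior box
};

struct Box {
    float x1, y1, x2, y2;  // normalized corner coordinates
};

// Standard SSD box decoding with center/size variances.
Box decode_box(const Prior &p, const std::array<float, 4> &loc,
               float center_var = 0.1f, float size_var = 0.2f) {
    assert(p.w > 0.0f && p.h > 0.0f);
    const float cx = p.cx + loc[0] * center_var * p.w;
    const float cy = p.cy + loc[1] * center_var * p.h;
    const float w = p.w * std::exp(loc[2] * size_var);
    const float h = p.h * std::exp(loc[3] * size_var);
    return {cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2};
}

// Hypothetical RetinaFace-style landmark decoding: each of the 5 points is an
// offset from the prior center, scaled by the prior size and center variance.
// Output layout: x0, y0, x1, y1, ..., x4, y4 (normalized).
std::array<float, 10> decode_landmarks(const Prior &p, const std::array<float, 10> &ldm,
                                       float center_var = 0.1f) {
    std::array<float, 10> pts{};
    for (int k = 0; k < 5; ++k) {
        pts[2 * k] = p.cx + ldm[2 * k] * center_var * p.w;
        pts[2 * k + 1] = p.cy + ldm[2 * k + 1] * center_var * p.h;
    }
    return pts;
}
```

Multiplying the normalized results by the input width and height gives pixel coordinates.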

(3) Dependencies

- TNN: https://github.com/Tencent/TNN, a uniform deep learning inference framework for mobile, desktop and server developed by Tencent Youtu Lab and Guangying Lab, featuring cross-platform support, high performance, model compression and code pruning

- OpenCV: Releases - OpenCV (opencv-4.3.0 recommended)

- OpenCL: Choose & Download Intel® SDK for OpenCL™ Applications (for GPU support)

- base-utils: GitHub - PanJinquan/base-utils, common C/C++ OpenCV algorithms and tools (utilities for file and image processing)

- Pull the submodules (TNN, base-utils)

# pull 3rdparty(TNN,base-utils) submodule

git submodule init

git submodule update

- Configure OpenCV

opencv-4.3.0 is recommended. Build and install it from the OpenCV source directory:

mkdir build

cd build

cmake -D CMAKE_BUILD_TYPE=Release -D CMAKE_INSTALL_PREFIX=/usr/local ..

sudo make install

- Configure OpenCL (optional)

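Android devices generally support OpenCL; on Linux, you can use the following setup:

# OpenCL installation reference: https://blog.csdn.net/qq_28483731/article/details/68235383 (for testing, installing the Intel CPU version of OpenCL is enough)

# Install clinfo, a tool that lists OpenCL platforms and devices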

sudo apt-get install clinfo

# Install dependencies

sudo apt install dkms xz-utils openssl libnuma1 libpciaccess0 bc curl libssl-dev lsb-core libicu-dev

sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys 3FA7E0328081BFF6A14DA29AA6A19B38D3D831EF

echo "deb http://download.mono-project.com/repo/debian wheezy main" | sudo tee /etc/apt/sources.list.d/mono-xamarin.list

sudo apt-get update

sudo apt-get install mono-complete

# Download the Intel SDK .tgz file from the Intel website and extract it

sudo sh install.sh

- CMake configuration

For testing on Linux or Windows, configure CMakeLists.txt as follows:

# TNN set

set(TNN_OPENCL_ENABLE ON CACHE BOOL "" FORCE)

set(TNN_CPU_ENABLE ON CACHE BOOL "" FORCE)

set(TNN_X86_ENABLE ON CACHE BOOL "" FORCE)

set(TNN_QUANTIZATION_ENABLE OFF CACHE BOOL "" FORCE)

set(TNN_OPENMP_ENABLE ON CACHE BOOL "" FORCE) # Multi-Thread

add_definitions(-DTNN_OPENCL_ENABLE) # for OpenCL GPU

add_definitions(-DDEBUG_ON) # for WIN/Linux Log

add_definitions(-DDEBUG_LOG_ON) # for WIN/Linux Log

add_definitions(-DDEBUG_IMSHOW_OFF) # for OpenCV show

add_definitions(-DPLATFORM_LINUX)

# add_definitions(-DPLATFORM_WINDOWS)

3. C++/Java interfaces

All models are implemented in C++ with TNN as the inference framework, and the Android app calls them through JNI.
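On the C++ side, the `add_definitions()` lines in CMakeLists.txt (such as `-DTNN_OPENCL_ENABLE` and `-DDEBUG_LOG_ON`) become preprocessor macros that the sources can test with `#ifdef`. The demo's own headers (`debug.h` and friends) are not shown, so the hypothetical `Device` enum, `select_device` and `DEMO_LOG` below only sketch that mechanism under those macro names.

```cpp
#include <cassert>
#include <cstdio>

// Logging that compiles to nothing unless the build defines DEBUG_LOG_ON.
#ifdef DEBUG_LOG_ON
#define DEMO_LOG(...) std::fprintf(stderr, __VA_ARGS__)
#else
#define DEMO_LOG(...) ((void)0)
#endif

// Hypothetical device enum for illustration (not the demo's DeviceType).
enum class Device { CPU, GPU };

// Only builds configured with TNN_OPENCL_ENABLE can honour a GPU request;
// otherwise the request silently falls back to the CPU.
Device select_device(bool want_gpu) {
#ifdef TNN_OPENCL_ENABLE
    DEMO_LOG("OpenCL compiled in, GPU requested: %d\n", want_gpu ? 1 : 0);
    return want_gpu ? Device::GPU : Device::CPU;
#else
    (void)want_gpu;  // OpenCL not compiled in
    DEMO_LOG("OpenCL not compiled in, using CPU\n");
    return Device::CPU;
#endif
}
```

Because the choice happens at compile time, a Linux/Windows build without the OpenCL definition never references GPU code paths at all.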

Java interface:

package com.cv.tnn.model;

import android.graphics.Bitmap;

public class Detector {

static {

System.loadLibrary("tnn_wrapper");

}

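/**

* Initialize the detection model

* @param proto: file name (with extension) of the TNN *.tnnproto file

* @param model: file name (with extension) of the TNN *.tnnmodel file

* @param root: root directory of the model files (under the assets folder); it is joined directly to the file names, so it should end with '/'

* @param model_type: model type

* @param num_thread: number of threads to use

* @param useGPU: whether to run inference on the GPU

*/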

public static native void init(String proto, String model, String root, int model_type, int num_thread, boolean useGPU);

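/**

* Run detection

* @param bitmap input image (Bitmap) in ARGB_8888 format

* @param score_thresh: confidence threshold

* @param iou_thresh: IoU threshold

* @return detection results, one FrameInfo per detected object

*/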

public static native FrameInfo[] detect(Bitmap bitmap, float score_thresh, float iou_thresh);

}
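`detect()` takes `score_thresh` and `iou_thresh`. In SSD-style detectors these usually drive two post-processing steps: drop candidates scoring below the score threshold, then apply greedy non-maximum suppression (NMS), removing any box whose IoU with a higher-scoring kept box exceeds the IoU threshold. The demo's own post-processing lives inside the C++ `ObjectDetection` class and is not shown, so this is a sketch of the typical approach; `Det`, `iou` and `nms` are hypothetical names, and running NMS per class (so a face box never suppresses an overlapping body box) is a design choice made here.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Det {
    float x1, y1, x2, y2;  // box corners in pixels
    float score;
    int label;             // e.g. 0 = face, 1 = body (assumed)
};

// Intersection-over-union of two corner-format boxes.
float iou(const Det &a, const Det &b) {
    const float iw = std::max(0.0f, std::min(a.x2, b.x2) - std::max(a.x1, b.x1));
    const float ih = std::max(0.0f, std::min(a.y2, b.y2) - std::max(a.y1, b.y1));
    const float inter = iw * ih;
    const float area_a = (a.x2 - a.x1) * (a.y2 - a.y1);
    const float area_b = (b.x2 - b.x1) * (b.y2 - b.y1);
    const float uni = area_a + area_b - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Score filtering followed by greedy per-class NMS; results sorted by score.
std::vector<Det> nms(std::vector<Det> dets, float score_thresh, float iou_thresh) {
    assert(iou_thresh >= 0.0f && iou_thresh <= 1.0f);
    dets.erase(std::remove_if(dets.begin(), dets.end(),
                              [&](const Det &d) { return d.score < score_thresh; }),
               dets.end());
    std::sort(dets.begin(), dets.end(),
              [](const Det &a, const Det &b) { return a.score > b.score; });
    std::vector<Det> kept;
    for (const auto &d : dets) {
        bool suppressed = false;
        for (const auto &k : kept) {
            if (k.label == d.label && iou(k, d) > iou_thresh) {
                suppressed = true;
                break;
            }
        }
        if (!suppressed) kept.push_back(d);
    }
    return kept;
}
```

A higher `iou_thresh` keeps more overlapping boxes (useful in crowds); a lower one merges duplicates more aggressively.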

C++ JNI interface:

#include <jni.h>

#include <string>

#include <fstream>

#include "src/object_detection.h"

#include "src/Types.h"

#include "debug.h"

#include "android_utils.h"

#include "opencv2/opencv.hpp"

using namespace vision;

static ObjectDetection *detector = nullptr;

JNIEXPORT jint JNI_OnLoad(JavaVM *vm, void *reserved) {

return JNI_VERSION_1_6;

}

JNIEXPORT void JNI_OnUnload(JavaVM *vm, void *reserved) {

}

extern "C"

JNIEXPORT void JNICALL

Java_com_cv_tnn_model_Detector_init(JNIEnv *env,

jclass clazz,

jstring proto,

jstring model,

jstring root,

jint model_type,

jint num_thread,

jboolean use_gpu) {

if (detector != nullptr) {

delete detector;

detector = nullptr;

}

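const char *root_chars = env->GetStringUTFChars(root, nullptr);

const char *proto_chars = env->GetStringUTFChars(proto, nullptr);

const char *model_chars = env->GetStringUTFChars(model, nullptr);

std::string parent = root_chars;

std::string proto_file = parent + proto_chars;

std::string model_file = parent + model_chars;

// Release the JNI UTF-8 buffers once they have been copied into std::string

env->ReleaseStringUTFChars(root, root_chars);

env->ReleaseStringUTFChars(proto, proto_chars);

env->ReleaseStringUTFChars(model, model_chars);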

DeviceType device = use_gpu ? GPU : CPU;

LOGW("parent : %s", parent.c_str());

LOGW("useGPU : %d", use_gpu);

LOGW("device_type: %d", device);

LOGW("model_type : %d", model_type);

LOGW("num_thread : %d", num_thread);

ObjectDetectiobParam model_param = MODEL_TYPE[model_type]; // model parameters for the selected model type

detector = new ObjectDetection(model_file,

proto_file,

model_param,

num_thread,

device);

}

extern "C"

JNIEXPORT jobjectArray JNICALL

Java_com_cv_tnn_model_Detector_detect(JNIEnv *env, jclass clazz, jobject bitmap,

jfloat score_thresh, jfloat iou_thresh) {

cv::Mat bgr;

BitmapToMatrix(env, bitmap, bgr);

int src_h = bgr.rows;

int src_w = bgr.cols;

// The detection region is the whole image

FrameInfo resultInfo;

// Run detection

if (detector!= nullptr){

detector->detect(bgr, &resultInfo, score_thresh, iou_thresh);

}

else{

// Detector not initialized: return a single placeholder box (0, 0, 84, 84)

ObjectInfo objectInfo;

objectInfo.x1=0;

objectInfo.y1=0;

objectInfo.x2=84;

objectInfo.y2=84;

objectInfo.label=0;

resultInfo.info.push_back(objectInfo);

}

int nums = resultInfo.info.size();

LOGW("object nums: %d\n", nums);

auto BoxInfo = env->FindClass("com/cv/tnn/model/FrameInfo");

auto init_id = env->GetMethodID(BoxInfo, "<init>", "()V");

auto box_id = env->GetMethodID(BoxInfo, "addBox", "(FFFFIF)V");

auto ky_id = env->GetMethodID(BoxInfo, "addKeyPoint", "(FFF)V");

jobjectArray ret = env->NewObjectArray(resultInfo.info.size(), BoxInfo, nullptr);

for (int i = 0; i < nums; ++i) {

auto info = resultInfo.info[i];

env->PushLocalFrame(1);

//jobject obj = env->AllocObject(BoxInfo);

jobject obj = env->NewObject(BoxInfo, init_id);

// set bbox: (x1, y1, x2, y2) is passed to Java as (x, y, width, height)

//LOGW("rect:[%f,%f,%f,%f] label:%d,score:%f \n", info.rect.x,info.rect.y, info.rect.w, info.rect.h, 0, 1.0f);

env->CallVoidMethod(obj, box_id, info.x1, info.y1, info.x2 - info.x1, info.y2 - info.y1,

info.label, info.score);

// set keypoints (the keypoint confidence is fixed at 1.0)

for (const auto &kps : info.landmarks) {

//LOGW("point:[%f,%f] score:%f \n", lm.point.x, lm.point.y, lm.score);

env->CallVoidMethod(obj, ky_id, (float) kps.x, (float) kps.y, 1.0f);

}

obj = env->PopLocalFrame(obj);

env->SetObjectArrayElement(ret, i, obj);

}

return ret;

}
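`BitmapToMatrix` comes from the author's `android_utils.h` and is not shown. Conceptually it has to turn the ARGB_8888 Bitmap, whose pixels the NDK exposes as RGBA bytes (`ANDROID_BITMAP_FORMAT_RGBA_8888`), into a BGR `cv::Mat`. In practice this is usually done with `AndroidBitmap_getInfo`/`AndroidBitmap_lockPixels` and `cv::cvtColor(..., cv::COLOR_RGBA2BGR)`; the plain C++ sketch below (hypothetical `rgba_to_bgr`) only shows the channel reordering and row-stride handling. It drops alpha, which assumes opaque pixels; Android bitmaps are alpha-premultiplied by default, so translucent pixels would also need un-premultiplying.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert an RGBA8888 buffer whose rows are `stride_bytes` apart (the
// AndroidBitmapInfo::stride value) into a tightly packed BGR buffer,
// the channel order OpenCV conventionally uses. Alpha is discarded.
std::vector<std::uint8_t> rgba_to_bgr(const std::uint8_t *rgba, int width, int height,
                                      int stride_bytes) {
    assert(width > 0 && height > 0 && stride_bytes >= width * 4);
    std::vector<std::uint8_t> bgr(static_cast<std::size_t>(width) * height * 3);
    for (int y = 0; y < height; ++y) {
        const std::uint8_t *src = rgba + static_cast<std::size_t>(y) * stride_bytes;
        std::uint8_t *dst = bgr.data() + static_cast<std::size_t>(y) * width * 3;
        for (int x = 0; x < width; ++x) {
            dst[3 * x + 0] = src[4 * x + 2];  // B
            dst[3 * x + 1] = src[4 * x + 1];  // G
            dst[3 * x + 2] = src[4 * x + 0];  // R
        }
    }
    return bgr;
}
```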

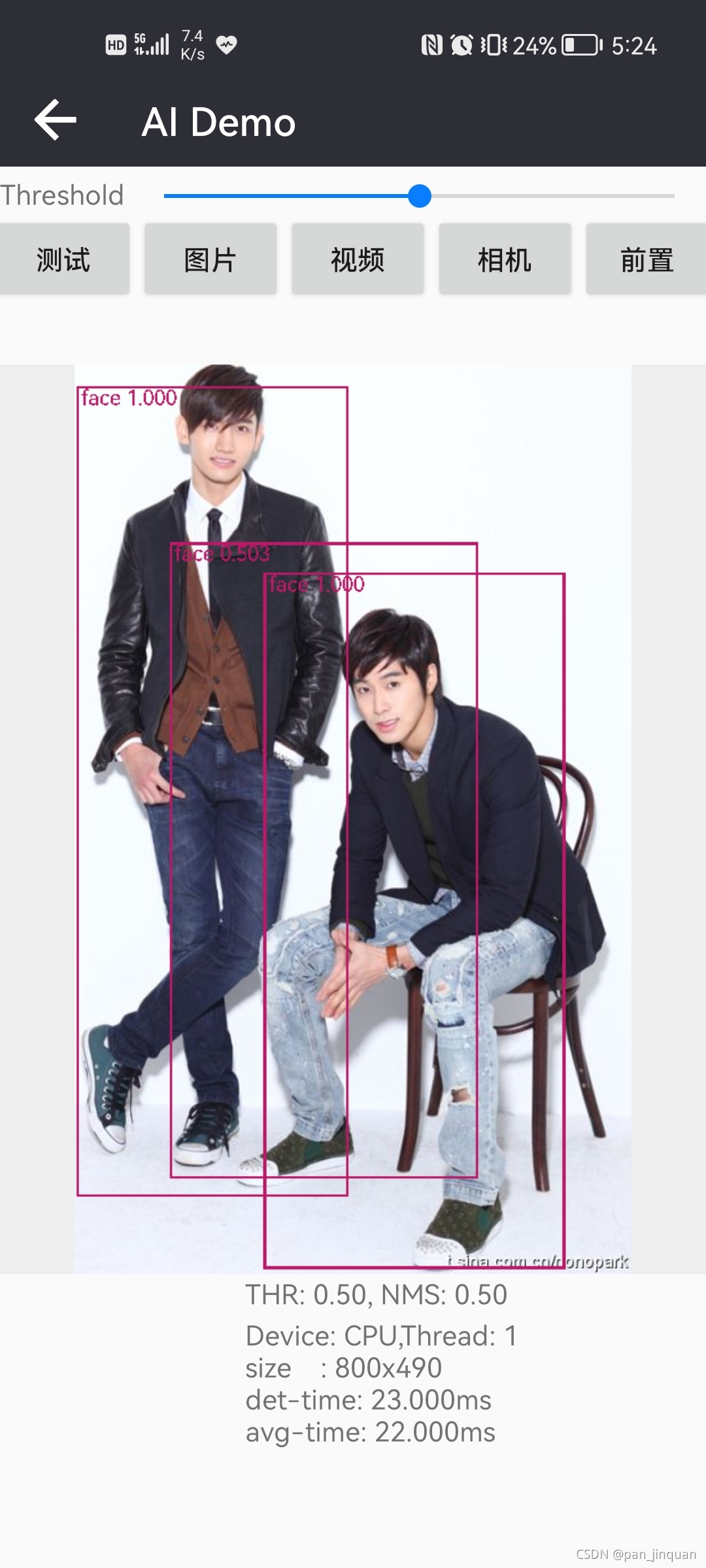

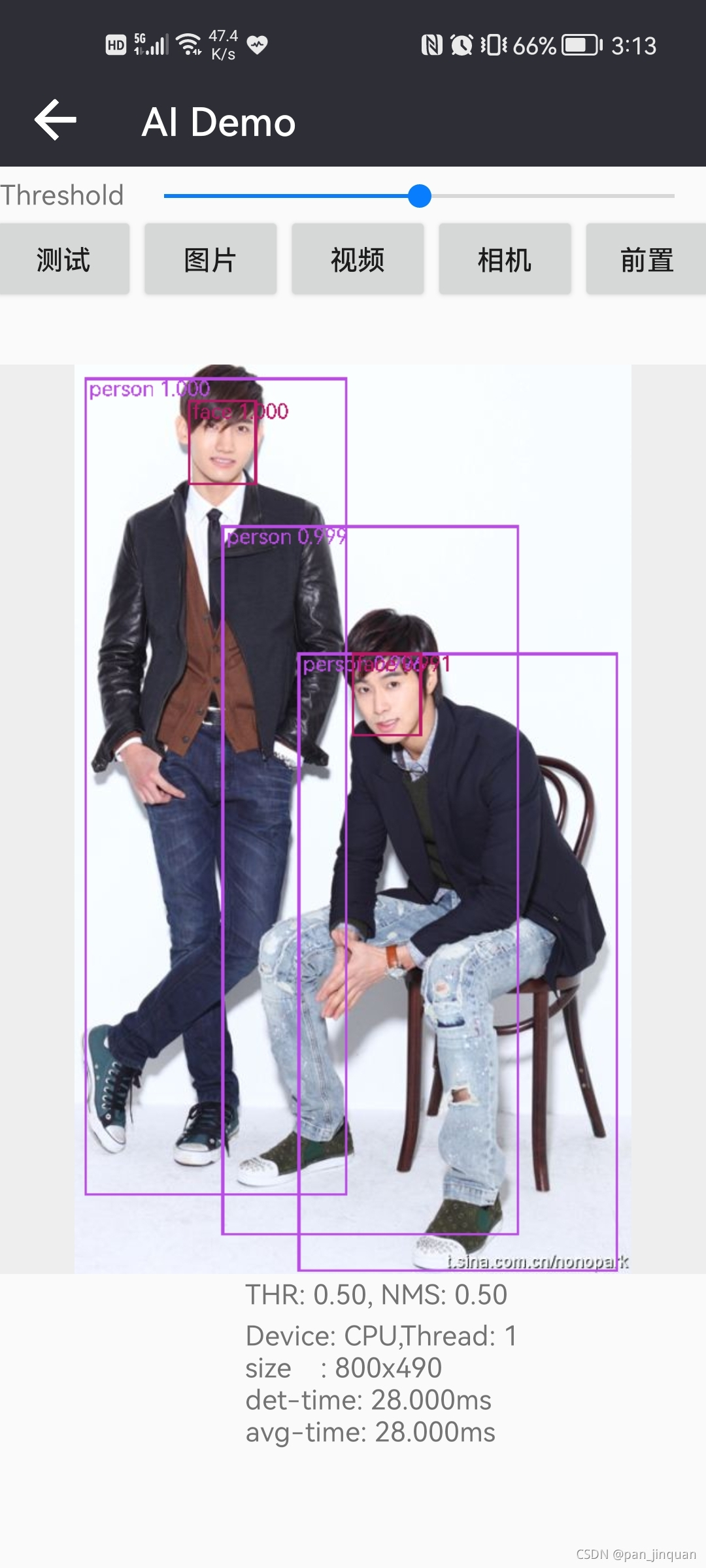

4. Face and human body detection results

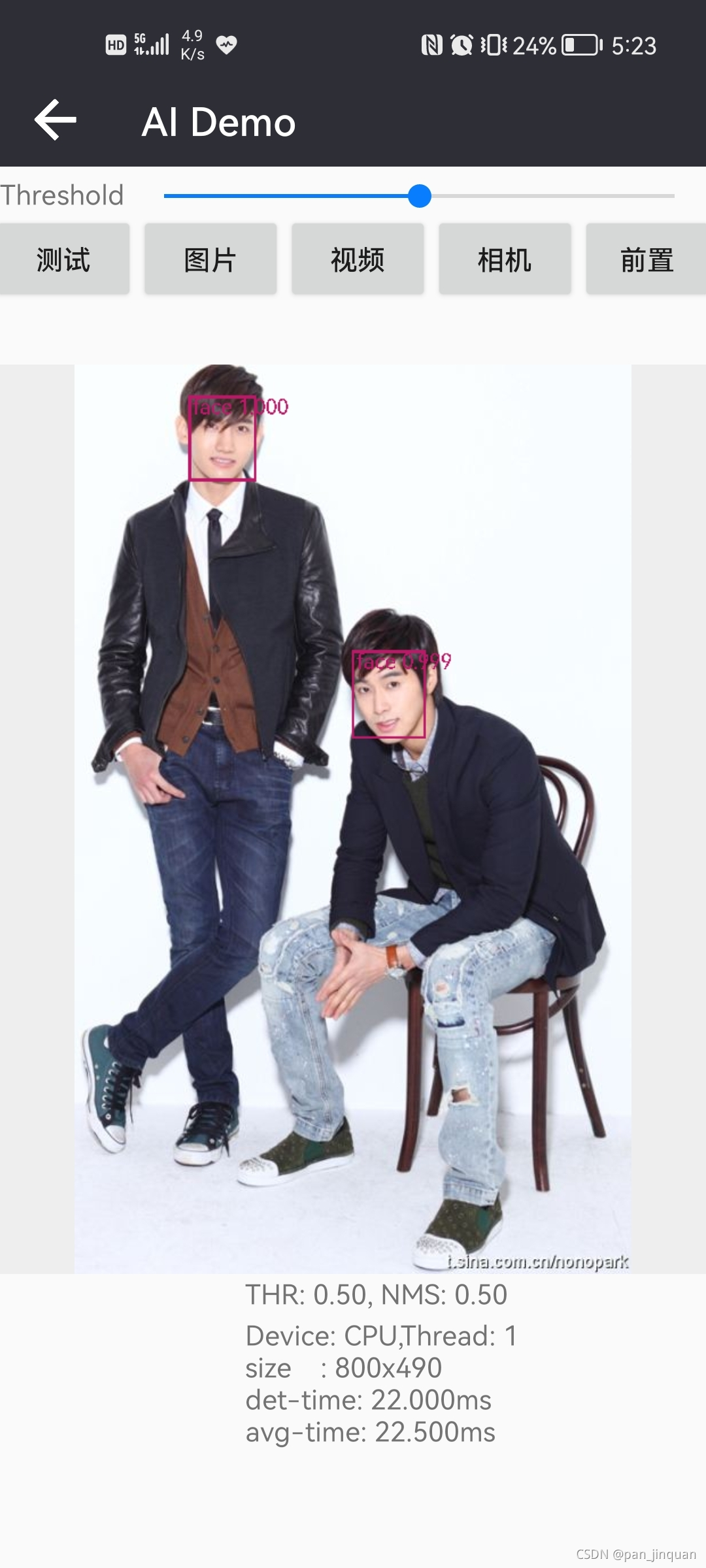

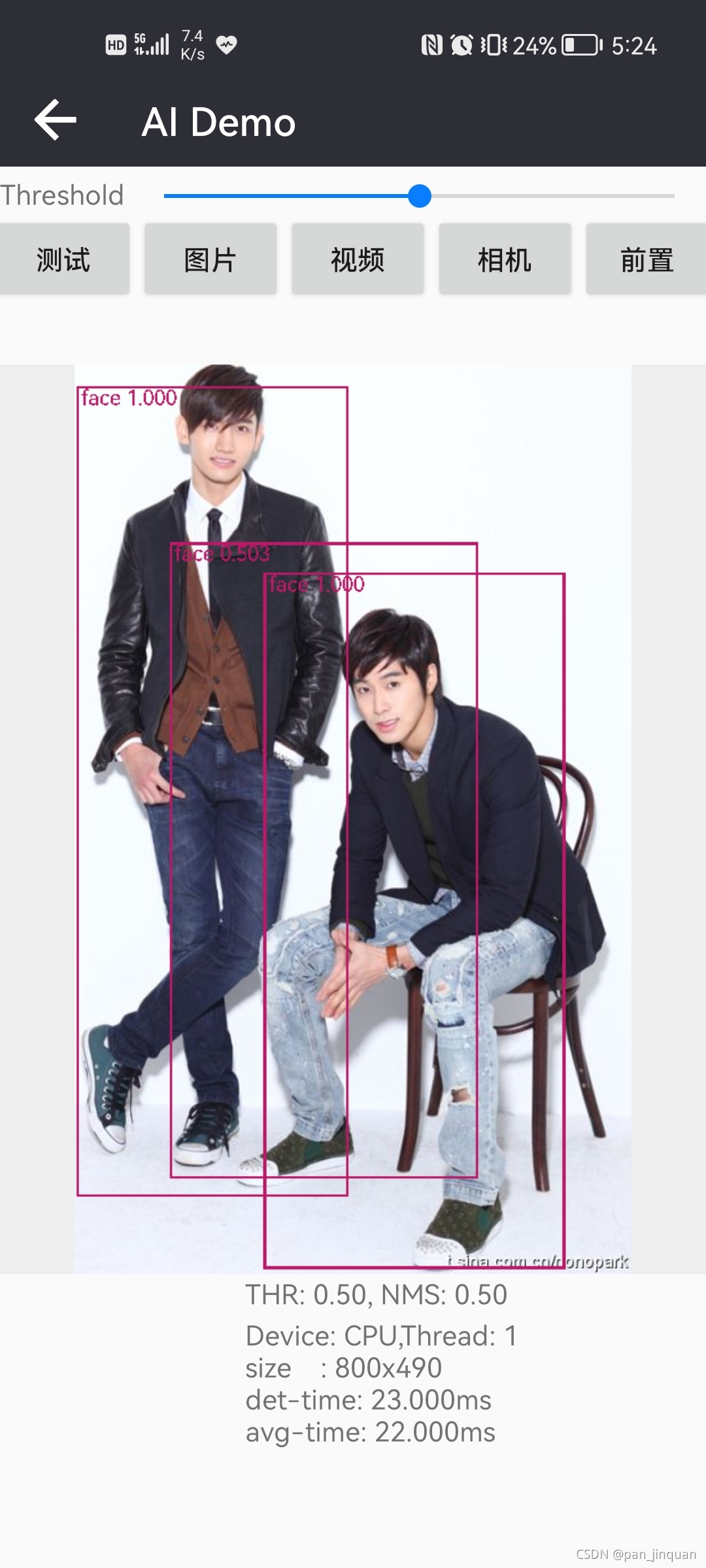

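On an ordinary Android phone, both CPU and GPU achieve real-time detection (about 25 ms per frame on CPU and about 15 ms on GPU). The APP's detection results are shown below; you can download the Android Demo APP and try it yourself.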

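| APP | Model selection | Face detection |
| --- | --- | --- |
| (image) | (image) | (image) |

| Face landmark detection | Human body detection | Face + body detection |
| --- | --- | --- |
| (image) | (image) | (image) |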

5. Demo source code download

Try the Android Demo APP for free:

Link: https://download.csdn.net/download/guyuealian/25924076

Android Demo APP source code download:

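6. Human body keypoint demo (Android version)

This demo supports face landmark detection (5 facial landmarks). If you need human body keypoint detection, see my other blog post: 2D Pose real-time human keypoint detection (Python/Android/C++ Demo), on pan_jinquan's CSDN blog.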