Preface

These notes record the workflow so it is easy to repeat next time.

I. Setting up NCNN on the Raspberry Pi

1. Install the dependencies

sudo apt-get install git cmake

sudo apt-get install -y gfortran

sudo apt-get install -y libprotobuf-dev libleveldb-dev libsnappy-dev libopencv-dev libhdf5-serial-dev protobuf-compiler

sudo apt-get install --no-install-recommends libboost-all-dev

sudo apt-get install -y libgflags-dev libgoogle-glog-dev liblmdb-dev libatlas-base-dev

2. Download and build NCNN

git clone https://gitee.com/Tencent/ncnn.git

cd ncnn

mkdir build

cd build

cmake ..

make -j4

make install

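After the build finishes, the ncnn folder looks like this:

II. Training the YOLOv5-Lite model

1. Source code

https://gitee.com/seaflyren/YOLOv5-Lite

After downloading, the files look like the figure:

2. Install the required packages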

pip install -r requirements.txt

3. Train on your own dataset (YOLO format)

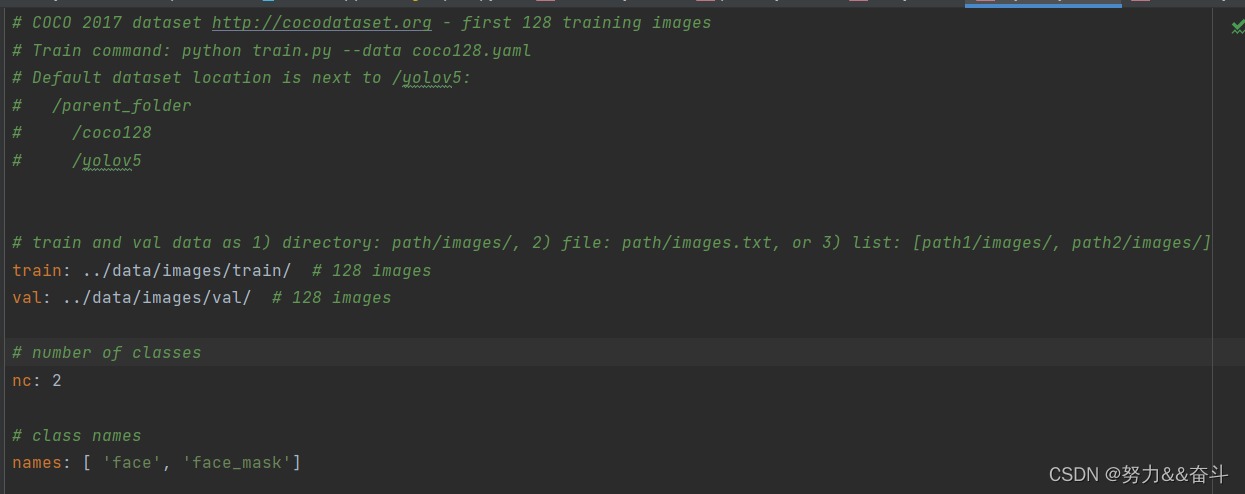

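In the data folder, create mydata.yaml and paste in the contents of coco.yaml.

Change the class count nc, the class names, and the training and validation set paths to match your own dataset.

Change nc in the model yaml file so that it matches mydata.yaml.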
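To make the edit concrete, here is a minimal sketch of what the finished mydata.yaml might contain. It assumes the train / val / nc / names keys of the stock coco.yaml. The dataset paths are hypothetical, and the two class names are simply the ones that appear in yolov5_lite.cpp later in this post.

```python
def render_data_yaml(train_dir, val_dir, class_names):
    """Render a YOLOv5-style dataset config as text (keys assumed from coco.yaml)."""
    names = ", ".join(f"'{n}'" for n in class_names)
    return (
        f"train: {train_dir}\n"
        f"val: {val_dir}\n"
        # nc is derived from the name list so the two can never disagree.
        f"nc: {len(class_names)}\n"
        f"names: [{names}]\n"
    )


# Hypothetical dataset layout; replace with your own paths.
text = render_data_yaml(
    "../mask_dataset/images/train",
    "../mask_dataset/images/val",
    ["face", "face_mask"],
)
print(text)
```

Deriving nc from the list of names avoids the most common mismatch, where a class is added to the names but nc is left at its old value.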

4. Train the model

Taking lite-e as an example, open a terminal and run:

python train.py --weights 'path/to/pretrained/v5lite-e.pt' --data 'data/mydata.yaml' --cfg 'models/v5lite-e.yaml' --epoch 300 --batch-size 16 --adam

5. Convert the model

python export.py --weights 'weights/last.pt' --batch-size 1 --img_size 320

6. Simplify the ONNX model

Use onnx-simplifier to simplify the exported ONNX model:

pip install onnx-simplifier

python -m onnxsim last.onnx e.onnx

III. Deploying the lite model on the Raspberry Pi

1. Convert the ONNX model to ncnn

cd ncnn/build

./tools/onnx/onnx2ncnn e.onnx e.param e.bin

# Optimize the model to fp16

./tools/ncnnoptimize e.param e.bin eopt.param eopt.bin 65536
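As a rough illustration of what fp16 storage means for the weights, the sketch below packs one value as fp32 and as fp16 using only the Python standard library. It assumes, as the comment above says, that the trailing 65536 asks for fp16 weight storage. It does not touch the ncnn files; it only shows the size and precision trade-off of the number format.

```python
import struct

value = 0.1

# "<f" is IEEE 754 single precision, "<e" is IEEE 754 half precision.
as_fp32 = struct.pack("<f", value)
as_fp16 = struct.pack("<e", value)

# Read the half-precision bytes back to see how much precision was lost.
roundtrip = struct.unpack("<e", as_fp16)[0]

print("fp32 bytes:", len(as_fp32))
print("fp16 bytes:", len(as_fp16))
print("fp16 round trip of 0.1:", roundtrip)
```

Each weight takes half the space, at the cost of a small rounding error, so eopt.bin should come out noticeably smaller than e.bin.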

2. Add yolov5_lite.cpp

cd ncnn/examples

touch yolov5_lite.cpp

Copy the code below into the cpp file:

// Tencent is pleased to support the open source community by making ncnn available.

//

// Copyright (C) 2020 THL A29 Limited, a Tencent company. All rights reserved.

//

// Licensed under the BSD 3-Clause License (the "License"); you may not use this file except

// in compliance with the License. You may obtain a copy of the License at

//

// https://opensource.org/licenses/BSD-3-Clause

//

// Unless required by applicable law or agreed to in writing, software distributed

// under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR

// CONDITIONS OF ANY KIND, either express or implied. See the License for the

// specific language governing permissions and limitations under the License.

#include "layer.h"

#include "net.h"

#if defined(USE_NCNN_SIMPLEOCV)

#include "simpleocv.h"

#else

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#endif

#include <float.h>

#include <stdio.h>

#include <vector>

#include <sys/time.h>

// 0 : FP16

// 1 : INT8

#define USE_INT8 0

// 0 : Image

// 1 : Camera

#define USE_CAMERA 1

struct Object

{

cv::Rect_<float> rect;

int label;

float prob;

};

static inline float intersection_area(const Object& a, const Object& b)

{

cv::Rect_<float> inter = a.rect & b.rect;

return inter.area();

}

static void qsort_descent_inplace(std::vector<Object>& faceobjects, int left, int right)

{

int i = left;

int j = right;

float p = faceobjects[(left + right) / 2].prob;

while (i <= j)

{

while (faceobjects[i].prob > p)

i++;

while (faceobjects[j].prob < p)

j--;

if (i <= j)

{

// swap

std::swap(faceobjects[i], faceobjects[j]);

i++;

j--;

}

}

#pragma omp parallel sections

{

#pragma omp section

{

if (left < j) qsort_descent_inplace(faceobjects, left, j);

}

#pragma omp section

{

if (i < right) qsort_descent_inplace(faceobjects, i, right);

}

}

}

static void qsort_descent_inplace(std::vector<Object>& faceobjects)

{

if (faceobjects.empty())

return;

qsort_descent_inplace(faceobjects, 0, faceobjects.size() - 1);

}

static void nms_sorted_bboxes(const std::vector<Object>& faceobjects, std::vector<int>& picked, float nms_threshold)

{

picked.clear();

const int n = faceobjects.size();

std::vector<float> areas(n);

for (int i = 0; i < n; i++)

{

areas[i] = faceobjects[i].rect.area();

}

for (int i = 0; i < n; i++)

{

const Object& a = faceobjects[i];

int keep = 1;

for (int j = 0; j < (int)picked.size(); j++)

{

const Object& b = faceobjects[picked[j]];

// intersection over union

float inter_area = intersection_area(a, b);

float union_area = areas[i] + areas[picked[j]] - inter_area;

// float IoU = inter_area / union_area

if (inter_area / union_area > nms_threshold)

keep = 0;

}

if (keep)

picked.push_back(i);

}

}

static inline float sigmoid(float x)

{

return static_cast<float>(1.f / (1.f + exp(-x)));

}

// unsigmoid

static inline float unsigmoid(float y) {

return static_cast<float>(-1.0 * (log((1.0 / y) - 1.0)));

}

static void generate_proposals(const ncnn::Mat &anchors, int stride, const ncnn::Mat &in_pad,

const ncnn::Mat &feat_blob, float prob_threshold,

std::vector <Object> &objects) {

const int num_grid = feat_blob.h;

float unsig_pro = 0;

if (prob_threshold > 0.6)

unsig_pro = unsigmoid(prob_threshold);

int num_grid_x;

int num_grid_y;

if (in_pad.w > in_pad.h) {

num_grid_x = in_pad.w / stride;

num_grid_y = num_grid / num_grid_x;

} else {

num_grid_y = in_pad.h / stride;

num_grid_x = num_grid / num_grid_y;

}

const int num_class = feat_blob.w - 5;

const int num_anchors = anchors.w / 2;

for (int q = 0; q < num_anchors; q++) {

const float anchor_w = anchors[q * 2];

const float anchor_h = anchors[q * 2 + 1];

const ncnn::Mat feat = feat_blob.channel(q);

for (int i = 0; i < num_grid_y; i++) {

for (int j = 0; j < num_grid_x; j++) {

const float *featptr = feat.row(i * num_grid_x + j);

// find class index with max class score

int class_index = 0;

float class_score = -FLT_MAX;

float box_score = featptr[4];

if (prob_threshold > 0.6) {

// while prob_threshold > 0.6, unsigmoid better than sigmoid

if (box_score > unsig_pro) {

for (int k = 0; k < num_class; k++) {

float score = featptr[5 + k];

if (score > class_score) {

class_index = k;

class_score = score;

}

}

float confidence = sigmoid(box_score) * sigmoid(class_score);

if (confidence >= prob_threshold) {

float dx = sigmoid(featptr[0]);

float dy = sigmoid(featptr[1]);

float dw = sigmoid(featptr[2]);

float dh = sigmoid(featptr[3]);

float pb_cx = (dx * 2.f - 0.5f + j) * stride;

float pb_cy = (dy * 2.f - 0.5f + i) * stride;

float pb_w = pow(dw * 2.f, 2) * anchor_w;

float pb_h = pow(dh * 2.f, 2) * anchor_h;

float x0 = pb_cx - pb_w * 0.5f;

float y0 = pb_cy - pb_h * 0.5f;

float x1 = pb_cx + pb_w * 0.5f;

float y1 = pb_cy + pb_h * 0.5f;

Object obj;

obj.rect.x = x0;

obj.rect.y = y0;

obj.rect.width = x1 - x0;

obj.rect.height = y1 - y0;

obj.label = class_index;

obj.prob = confidence;

objects.push_back(obj);

}

} else {

for (int k = 0; k < num_class; k++) {

float score = featptr[5 + k];

if (score > class_score) {

class_index = k;

class_score = score;

}

}

float confidence = sigmoid(box_score) * sigmoid(class_score);

if (confidence >= prob_threshold) {

float dx = sigmoid(featptr[0]);

float dy = sigmoid(featptr[1]);

float dw = sigmoid(featptr[2]);

float dh = sigmoid(featptr[3]);

float pb_cx = (dx * 2.f - 0.5f + j) * stride;

float pb_cy = (dy * 2.f - 0.5f + i) * stride;

float pb_w = pow(dw * 2.f, 2) * anchor_w;

float pb_h = pow(dh * 2.f, 2) * anchor_h;

float x0 = pb_cx - pb_w * 0.5f;

float y0 = pb_cy - pb_h * 0.5f;

float x1 = pb_cx + pb_w * 0.5f;

float y1 = pb_cy + pb_h * 0.5f;

Object obj;

obj.rect.x = x0;

obj.rect.y = y0;

obj.rect.width = x1 - x0;

obj.rect.height = y1 - y0;

obj.label = class_index;

obj.prob = confidence;

objects.push_back(obj);

}

}

}

}

}

}

}

static int detect_yolov5(const cv::Mat& bgr, std::vector<Object>& objects)

{

ncnn::Net yolov5;

#if USE_INT8

yolov5.opt.use_int8_inference=true;

#else

yolov5.opt.use_vulkan_compute = true;

yolov5.opt.use_bf16_storage = true;

#endif

// original pretrained model from https://github.com/ultralytics/yolov5

// the ncnn model https://github.com/nihui/ncnn-assets/tree/master/models

#if USE_INT8

yolov5.load_param("/home/corvin/Mask/weights/e.param");

yolov5.load_model("/home/corvin/Mask/weights/e.bin");

#else

yolov5.load_param("/home/corvin/Mask/weights/eopt.param");

yolov5.load_model("/home/corvin/Mask/weights/eopt.bin");

#endif

const int target_size = 320;

const float prob_threshold = 0.60f;

const float nms_threshold = 0.60f;

int img_w = bgr.cols;

int img_h = bgr.rows;

// letterbox pad to multiple of 32

int w = img_w;

int h = img_h;

float scale = 1.f;

if (w > h)

{

scale = (float)target_size / w;

w = target_size;

h = h * scale;

}

else

{

scale = (float)target_size / h;

h = target_size;

w = w * scale;

}

ncnn::Mat in = ncnn::Mat::from_pixels_resize(bgr.data, ncnn::Mat::PIXEL_BGR2RGB, img_w, img_h, w, h);

// pad to target_size rectangle

// yolov5/utils/datasets.py letterbox

int wpad = (w + 31) / 32 * 32 - w;

int hpad = (h + 31) / 32 * 32 - h;

ncnn::Mat in_pad;

ncnn::copy_make_border(in, in_pad, hpad / 2, hpad - hpad / 2, wpad / 2, wpad - wpad / 2, ncnn::BORDER_CONSTANT, 114.f);

const float norm_vals[3] = {1 / 255.f, 1 / 255.f, 1 / 255.f};

in_pad.substract_mean_normalize(0, norm_vals);

ncnn::Extractor ex = yolov5.create_extractor();

ex.input("images", in_pad);

std::vector<Object> proposals;

// stride 8

{

ncnn::Mat out;

ex.extract("451", out);

ncnn::Mat anchors(6);

anchors[0] = 10.f;

anchors[1] = 13.f;

anchors[2] = 16.f;

anchors[3] = 30.f;

anchors[4] = 33.f;

anchors[5] = 23.f;

std::vector<Object> objects8;

generate_proposals(anchors, 8, in_pad, out, prob_threshold, objects8);

proposals.insert(proposals.end(), objects8.begin(), objects8.end());

}

// stride 16

{

ncnn::Mat out;

ex.extract("479", out);

ncnn::Mat anchors(6);

anchors[0] = 30.f;

anchors[1] = 61.f;

anchors[2] = 62.f;

anchors[3] = 45.f;

anchors[4] = 59.f;

anchors[5] = 119.f;

std::vector<Object> objects16;

generate_proposals(anchors, 16, in_pad, out, prob_threshold, objects16);

proposals.insert(proposals.end(), objects16.begin(), objects16.end());

}

// stride 32

{

ncnn::Mat out;

ex.extract("507", out);

ncnn::Mat anchors(6);

anchors[0] = 116.f;

anchors[1] = 90.f;

anchors[2] = 156.f;

anchors[3] = 198.f;

anchors[4] = 373.f;

anchors[5] = 326.f;

std::vector<Object> objects32;

generate_proposals(anchors, 32, in_pad, out, prob_threshold, objects32);

proposals.insert(proposals.end(), objects32.begin(), objects32.end());

}

// sort all proposals by score from highest to lowest

qsort_descent_inplace(proposals);

// apply nms with nms_threshold

std::vector<int> picked;

nms_sorted_bboxes(proposals, picked, nms_threshold);

int count = picked.size();

objects.resize(count);

for (int i = 0; i < count; i++)

{

objects[i] = proposals[picked[i]];

// adjust offset to original unpadded

float x0 = (objects[i].rect.x - (wpad / 2)) / scale;

float y0 = (objects[i].rect.y - (hpad / 2)) / scale;

float x1 = (objects[i].rect.x + objects[i].rect.width - (wpad / 2)) / scale;

float y1 = (objects[i].rect.y + objects[i].rect.height - (hpad / 2)) / scale;

// clip

x0 = std::max(std::min(x0, (float)(img_w - 1)), 0.f);

y0 = std::max(std::min(y0, (float)(img_h - 1)), 0.f);

x1 = std::max(std::min(x1, (float)(img_w - 1)), 0.f);

y1 = std::max(std::min(y1, (float)(img_h - 1)), 0.f);

objects[i].rect.x = x0;

objects[i].rect.y = y0;

objects[i].rect.width = x1 - x0;

objects[i].rect.height = y1 - y0;

}

return 0;

}

static void draw_objects(const cv::Mat& bgr, const std::vector<Object>& objects)

{

static const char* class_names[] = {

"face","face_mask"

};

cv::Mat image = bgr.clone();

for (size_t i = 0; i < objects.size(); i++)

{

const Object& obj = objects[i];

fprintf(stderr, "%d = %.5f at %.2f %.2f %.2f x %.2f\n", obj.label, obj.prob,

obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);

cv::rectangle(image, obj.rect, cv::Scalar(0, 255, 0));

char text[256];

sprintf(text, "%s %.1f%%", class_names[obj.label], obj.prob * 100);

int baseLine = 0;

cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

int x = obj.rect.x;

int y = obj.rect.y - label_size.height - baseLine;

if (y < 0)

y = 0;

if (x + label_size.width > image.cols)

x = image.cols - label_size.width;

cv::rectangle(image, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),

cv::Scalar(255, 255, 255), -1);

cv::putText(image, text, cv::Point(x, y + label_size.height),

cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));

}

#if USE_CAMERA

imshow("camera", image);

cv::waitKey(1);

#else

cv::imwrite("result.jpg", image);

#endif

}

#if USE_CAMERA

int main(int argc, char** argv)

{

cv::VideoCapture capture;

capture.open(0); // change this index to select a different camera

cv::Mat frame;

while (true)

{

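capture >> frame;

// stop if the camera returned no frame, otherwise detection would read empty data

if (frame.empty())

break;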

cv::Mat m = frame;

std::vector<Object> objects;

detect_yolov5(frame, objects);

draw_objects(m, objects);

if (cv::waitKey(30) >= 0)

break;

}

}

#else

int main(int argc, char** argv)

{

if (argc != 2)

{

fprintf(stderr, "Usage: %s [imagepath]\n", argv[0]);

return -1;

}

const char* imagepath = argv[1];

struct timespec begin, end;

long time;

clock_gettime(CLOCK_MONOTONIC, &begin);

cv::Mat m = cv::imread(imagepath, 1);

if (m.empty())

{

fprintf(stderr, "cv::imread %s failed\n", imagepath);

return -1;

}

std::vector<Object> objects;

detect_yolov5(m, objects);

clock_gettime(CLOCK_MONOTONIC, &end);

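// convert both the seconds and the nanoseconds parts to milliseconds before adding them

double elapsed_ms = (end.tv_sec - begin.tv_sec) * 1000.0 + (end.tv_nsec - begin.tv_nsec) / 1000000.0;

printf(">> Time : %lf ms\n", elapsed_ms);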

draw_objects(m, objects);

return 0;

}

#endif

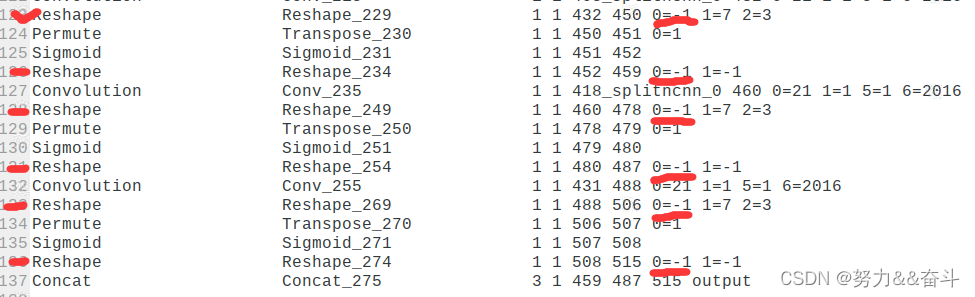
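The resize-and-pad arithmetic in detect_yolov5 is easy to trace by hand. The sketch below mirrors it in Python for two example frame sizes (the sizes are illustrative, not from the original post) and counts the grid cells each stride would produce. The point is that a non-square camera frame does not end up as 320x320, so the number of grid cells changes with the input.

```python
def letterbox_shape(img_w, img_h, target_size=320, multiple=32):
    """Mirror the resize-and-pad arithmetic of detect_yolov5."""
    if img_w > img_h:
        scale = target_size / img_w
        w, h = target_size, int(img_h * scale)
    else:
        scale = target_size / img_h
        w, h = int(img_w * scale), target_size
    # Pad each side up to the next multiple of 32, as the C++ code does.
    pad_w = (w + multiple - 1) // multiple * multiple
    pad_h = (h + multiple - 1) // multiple * multiple
    return pad_w, pad_h


def grid_cells(pad_w, pad_h, stride):
    """Number of grid positions one detection head sees at this stride."""
    return (pad_w // stride) * (pad_h // stride)


for size in [(640, 480), (320, 320)]:
    pw, ph = letterbox_shape(*size)
    cells = [grid_cells(pw, ph, s) for s in (8, 16, 32)]
    print(size, "->", (pw, ph), "cells at stride 8/16/32:", cells)
```

A 640x480 frame becomes 320x256 and a square frame stays 320x320, so the stride-8 head sees 1280 cells in one case and 1600 in the other. A Reshape layer with a fixed size baked in can only handle one of these.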

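3. Modify eopt.param

bug1: Squeeze not supported yet!

If messages such as "Squeeze not supported yet!" appear while generating the param file, simplify the ONNX model with onnx-simplifier first and then convert it to param again.

Open eopt.param and change parameter 0 of every Reshape layer to 0=-1. This step allows dynamic input sizes.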
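Editing every Reshape line by hand is error-prone, so here is a small sketch that does the same substitution on the param text. It assumes the usual ncnn param layout, where each line starts with the layer type and ends with key=value parameters. The sample lines are made up for illustration and are not taken from a real eopt.param.

```python
import re


def make_reshape_dynamic(param_text):
    """Set parameter 0 of every Reshape layer to -1, leaving other lines alone."""
    patched = []
    for line in param_text.splitlines():
        if line.split()[:1] == ["Reshape"]:
            # Replace only the "0=<int>" token; 1=, 2= and the blob names are kept.
            line = re.sub(r"(\s)0=-?\d+", r"\g<1>0=-1", line, count=1)
        patched.append(line)
    return "\n".join(patched) + "\n"


# Hypothetical excerpt in ncnn param format.
sample = """7767517
3 3
Input    images    0 1 images
Reshape  Reshape_1 1 1 x y 0=1600 1=7 2=3
Permute  Permute_1 1 1 y out 0=1
"""

patched_sample = make_reshape_dynamic(sample)
print(patched_sample)
```

To apply it to a real file, read eopt.param into a string, pass it through make_reshape_dynamic, and write the result back, keeping a copy of the original in case the layout differs from what is assumed here.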

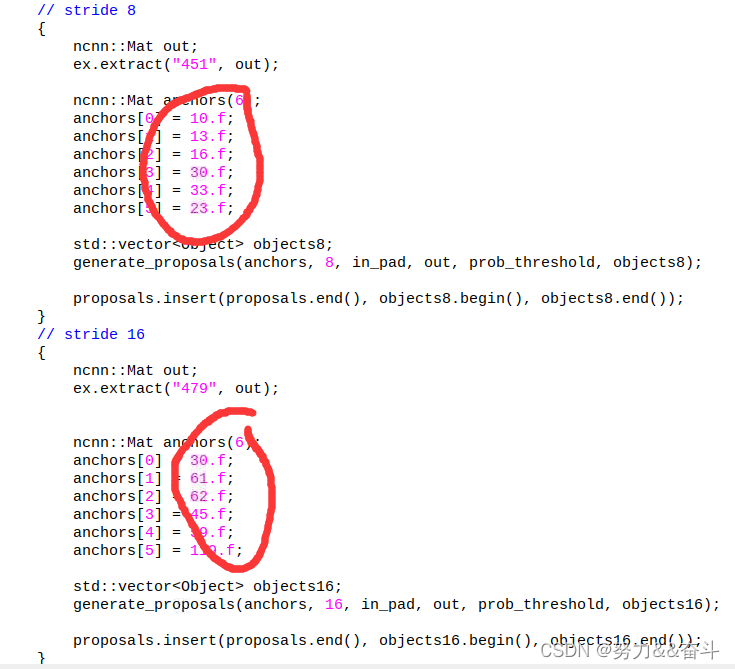

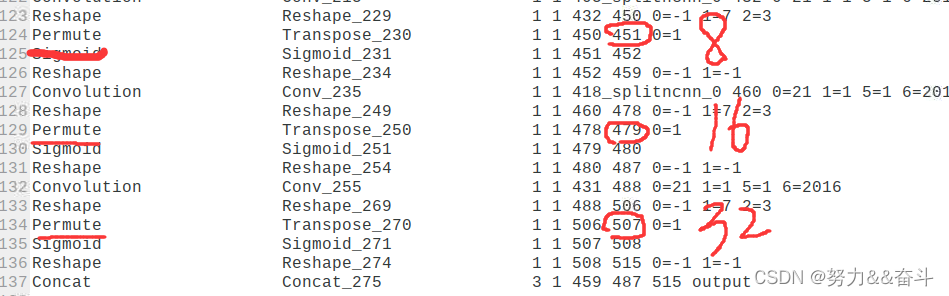

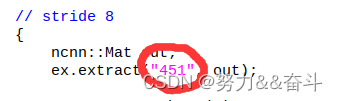

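4. Modify yolov5_lite.cpp

bug2: Segmentation Fault

This happens when the blob names passed to ex.extract() in the cpp file have not been updated to match the Permute layer outputs in the param file.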

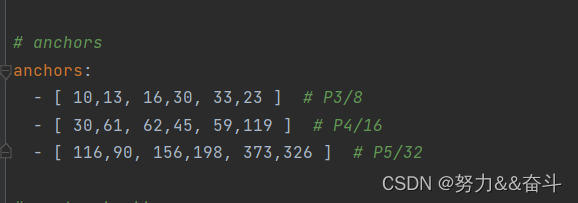

Open v5lite-e.yaml.

Update the anchors in the cpp file so that they match the anchors listed there.
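If the anchors were changed, for example by retraining with different values, retyping eighteen numbers into the cpp file invites mistakes. The sketch below generates the assignment lines instead. The rows shown are the values currently hard-coded in yolov5_lite.cpp; replace them with the rows from your own v5lite-e.yaml.

```python
def anchors_to_cpp(anchor_row):
    """Turn one anchors row from the yaml into C++ assignment lines."""
    return [f"anchors[{i}] = {v}.f;" for i, v in enumerate(anchor_row)]


# One row per detection head, keyed by stride (values as in yolov5_lite.cpp).
rows = {
    8: [10, 13, 16, 30, 33, 23],
    16: [30, 61, 62, 45, 59, 119],
    32: [116, 90, 156, 198, 373, 326],
}

for stride, row in rows.items():
    print(f"// stride {stride}")
    for cpp_line in anchors_to_cpp(row):
        print(cpp_line)
```

Each row holds three width/height pairs, which is why the cpp file declares ncnn::Mat anchors(6) for every stride.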

Open eopt.param and update the names passed to ex.extract() in the cpp file so that they match the Permute layers.

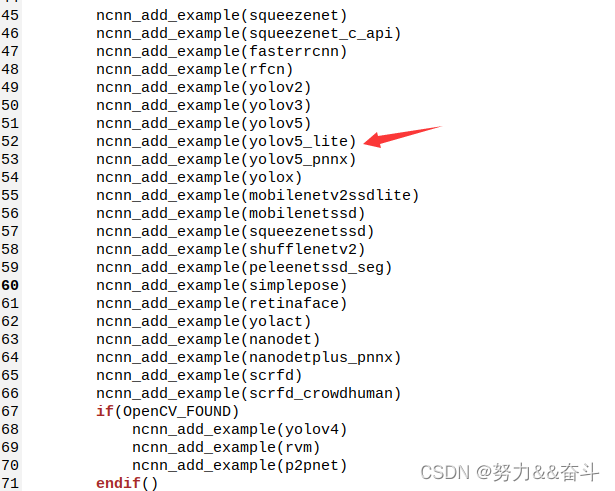
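Rather than scrolling through eopt.param to find the Permute layers, the output blob names can be listed with a few lines of Python. This is a sketch that assumes the usual ncnn param layout (layer type, layer name, input count, output count, input blobs, output blobs, then parameters). The sample text is hypothetical; only the three output names 451, 479 and 507 are taken from the cpp file above.

```python
def permute_outputs(param_text):
    """Return the output blob names of every Permute layer, in file order."""
    names = []
    for line in param_text.splitlines()[2:]:  # skip the magic number and the counts
        tokens = line.split()
        if len(tokens) < 4 or tokens[0] != "Permute":
            continue
        n_in, n_out = int(tokens[2]), int(tokens[3])
        first_out = 4 + n_in  # output names follow the input names
        names.extend(tokens[first_out:first_out + n_out])
    return names


# Hypothetical excerpt in ncnn param format.
SAMPLE = """\
7767517
4 4
Input    images      0 1 images
Permute  Transpose_1 1 1 450 451 0=1
Permute  Transpose_2 1 1 478 479 0=1
Permute  Transpose_3 1 1 506 507 0=1
"""

print(permute_outputs(SAMPLE))
```

The names come out in file order, which normally corresponds to strides 8, 16 and 32. They are the strings to pass to the three ex.extract() calls.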

5. Modify CMakeLists.txt

Open examples/CMakeLists.txt and add ncnn_add_example(yolov5_lite). The name must match the cpp file name.
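For repeated setups the same edit can be scripted. The sketch below inserts the new line right after an existing ncnn_add_example(yolov5) entry, so it lands in the same block and with the same indentation. That an entry for yolov5 exists in your copy of examples/CMakeLists.txt is an assumption; the function raises an error if it is not found.

```python
def add_example(cmake_text, name, anchor="yolov5"):
    """Insert ncnn_add_example(name) after the anchor example, only once."""
    new_call = f"ncnn_add_example({name})"
    lines = cmake_text.splitlines()
    if any(line.strip() == new_call for line in lines):
        return cmake_text  # already registered, nothing to do

    anchor_call = f"ncnn_add_example({anchor})"
    out = []
    inserted = False
    for line in lines:
        out.append(line)
        if line.strip() == anchor_call and not inserted:
            # Reuse the anchor's indentation so the new line sits in the same block.
            indent = line[: len(line) - len(line.lstrip())]
            out.append(indent + new_call)
            inserted = True
    if not inserted:
        raise ValueError(f"{anchor_call} not found")
    return "\n".join(out) + "\n"


# Hypothetical excerpt of examples/CMakeLists.txt.
sample_cmake = "    ncnn_add_example(yolov5)\n    ncnn_add_example(yolact)\n"
patched_cmake = add_example(sample_cmake, "yolov5_lite")
print(patched_cmake)
```

Running it a second time leaves the file unchanged, so it is safe to keep in a setup script.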

Then build with cmake:

cd ncnn/build

cmake ..

make

IV. Final results

Summary

After deployment, YOLOv5-Lite detection on the Raspberry Pi runs quite smoothly.