本文旨在完成吴恩达机器学习的课后作业,搭建一个简单的神经网络来实现多分类问题,以手写数字识别为例。

1. 导入所需第三方库

from sklearn.datasets import load_digits

import numpy as np

import matplotlib.pyplot as plt

2. 加载手写数字的数据集

digits = load_digits() # 加载数据集

data = digits.data # 手写数字数据集:1797*64

target = digits.target # 数据集的标签:1797*1

plt.gray()

plt.matshow(digits.images[15])

plt.show()

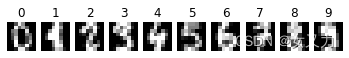

3. 随机选出每一类的一个样本看看长什么样子

classes = ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9']

num_classes = len(classes)

samples_per_class = 1

# enumerate():将一个可遍历的数据对象(如列表、元组或字符串)组合为一个索引序列,同时列出数据和数据下标

for y, num_class in enumerate(classes):

# 返回扁平化后矩阵中非零元素的位置(index)

idxs = np.flatnonzero(target == y) # 标签中为y的数据的索引

idxs = np.random.choice(idxs, samples_per_class, replace=False) # replace=False表示不可取相同数字

for i, idx in enumerate(idxs): # 将图形进行展示

plt_idx = i * num_classes + y + 1

plt.subplot(samples_per_class, num_classes, plt_idx)

plt.imshow(digits.images[idx].astype('uint8'))

plt.axis('off') # 关闭坐标轴

if i == 0:

plt.title(num_class)

plt.show()

4. 定义激活函数

# 激活函数

def sigmoid(x):

return 1 / (1 + np.exp(-x))

# sigmoid函数的导数函数

def dsigmoid(x):

return x * (1-x)

5. 实现两层神经网络

class NeuralNetwork(object):

# 随机初始化参数

def __init__(self, input_size, hidden_size, output_size):

self.theta1 = 0.01 * np.random.randn(input_size, hidden_size) #input_size * hidden_size

self.b1 = np.zeros(hidden_size)

self.theta2 = 0.01 * np.random.randn(hidden_size, output_size) #hidden_size * output_size

self.b2 = np.zeros(output_size)

# 定义损失函数

def loss(self, X, y, lamda=0.01):

num_train, num_feature = X.shape

# forward前向传播

a1 = X # input layer

a2 = sigmoid(a1.dot(self.theta1) + self.b1) # hidden layer

a3 = sigmoid(a2.dot(self.theta2) + self.b2) # output layer

loss = -np.sum(y * np.log(a3) + (1 - y) * np.log(1 - a3)) / num_train

loss += lamda * (np.sum(self.theta1 * self.theta1) + np.sum(self.theta2 * self.theta2)) / (2 * num_train)

# backward, 后向传播过程

delta3 = a3 - y

dtheta2 = a2.T.dot(delta3) + lamda * self.theta2

db2 = np.sum(delta3, axis=0)

delta2 = delta3.dot(self.theta2.T) * dsigmoid(a2)

dtheta1 = a1.T.dot(delta2) + lamda * self.theta1

db1 = np.sum(delta2, axis=0)

dtheta1 /= num_train

dtheta2 /= num_train

db1 /= num_train

db2 /= num_train

return loss, dtheta1, dtheta2, db1, db2

??在神经网络中,我们可以有很多输出变量,我们的

h

θ

(

𝑥

)

?_\theta(𝑥)

hθ?(x) 是一个维度为

𝐾

𝐾

K 的向量,并且我们训练集中的因变量也是同样维度的一个向量,因此我们的代价函数会比逻辑回归更加复杂一些,为:

h

θ

(

𝑥

)

∈

R

𝐾

,

(

h

θ

(

𝑥

)

)

𝑖

=

i

t

h

o

u

t

p

u

t

?_\theta(𝑥) ∈ ?^𝐾 ,{(?_\theta(𝑥))}_𝑖 =i^{th}output

hθ?(x)∈RK,(hθ?(x))i?=ithoutput

J

(

θ

)

=

?

1

m

[

∑

i

=

1

m

∑

k

=

1

k

y

k

(

i

)

l

o

g

(

h

θ

(

x

(

i

)

)

)

k

+

(

1

?

y

k

(

i

)

)

l

o

g

(

1

?

h

θ

(

x

(

i

)

)

)

k

]

+

λ

2

m

∑

l

=

1

L

?

1

∑

i

=

1

s

l

∑

j

=

1

s

l

+

1

(

θ

j

i

(

l

)

)

2

J(\theta)=-\frac{1}{m}[\displaystyle \sum^m_{i =1}\displaystyle \sum^k_{k=1}{y_k^{(i)}log(h_\theta(x^{(i)}))_k+(1-y_k^{(i)})log(1-h_\theta(x^{(i)}))_k}]+\frac{\lambda}{2m}\displaystyle \sum^{L-1}_{l=1}\displaystyle \sum^{s_l}_{i =1}\displaystyle \sum^{s_l+1}_{j=1}{(\theta_{ji}^{(l)})^2}

J(θ)=?m1?[i=1∑m?k=1∑k?yk(i)?log(hθ?(x(i)))k?+(1?yk(i)?)log(1?hθ?(x(i)))k?]+2mλ?l=1∑L?1?i=1∑sl??j=1∑sl?+1?(θji(l)?)2

??我们希望通过代价函数来观察算法预测的结果与真实情况的误差有多大,唯一不同的是,对于每一行特征,我们都会给出

𝐾

𝐾

K 个预测,基本上我们可以利用循环,对每一行特征都预测

𝐾

𝐾

K 个不同结果,然后在利用循环在

𝐾

𝐾

K 个预测中选择可能性最高的一个,将其与

𝑦

𝑦

y 中的实际数据进行比较。

??正则化的那一项只是排除了每一层

θ

0

\theta_0

θ0? 后,每一层的

θ

\theta

θ 矩阵的和。最里层的循环

𝑗

𝑗

j 循环所有的行(由

𝑠

𝑙

+

1

𝑠_𝑙 +1

sl?+1 层的激活单元数决定),循环

𝑖

𝑖

i 则循环所有的列,由该层(

𝑠

𝑙

𝑠_𝑙

sl? 层)的激活单元数所决定即:

h

θ

(

𝑥

)

?_\theta(𝑥)

hθ?(x) 与真实值之间的距离为每个样本-每个类输出的加和,对参数进行

r

e

g

u

l

a

r

i

z

a

t

i

o

n

regularization

regularization 的

b

i

a

s

bias

bias 项处理所有参数的平方和。

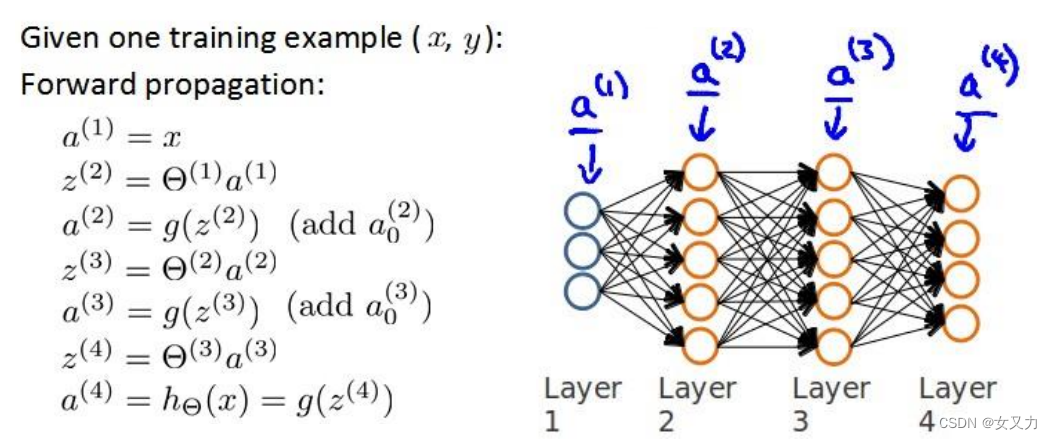

前向传播算法:从前往后一层一层推导(这里的函数g()是指sigmoid函数),直到最后一层的

h

θ

(

x

)

h_\theta(x)

hθ?(x)(b为偏置项,图中未画出)

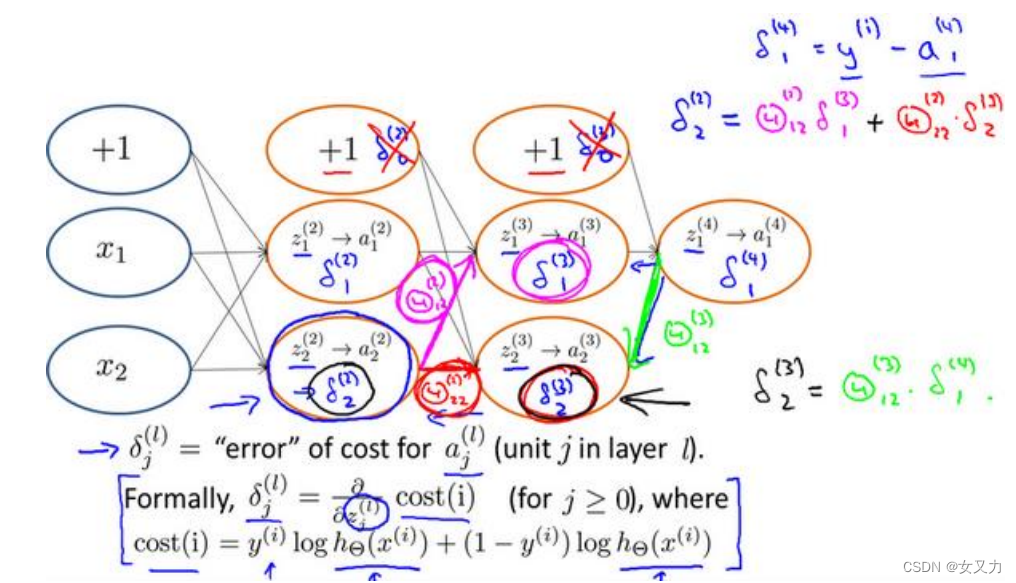

??现在,为了计算代价函数的偏导数

?

?

θ

i

j

l

J

(

θ

)

\frac{\partial}{\partial \theta_{ij}^{l}}J(\theta)

?θijl???J(θ) ,我们需要采用一种反向传播算法,也就是首先计算最后一层的误差,然后再一层一层反向求出各层的误差,直到倒数第二层。我们从最后一层的误差开始计算,误差是激活单元的预测

a

k

4

a_k^4

ak4? 与实际值

y

k

y^k

yk 之间的误差(

k

=

1

:

k

k=1:k

k=1:k)

??我们用

δ

\delta

δ 来表示误差,则:

δ

(

4

)

=

a

(

4

)

?

y

\delta^{(4)}=a^{(4)}-y

δ(4)=a(4)?y

??我们利用这个误差值来计算前一层的误差:

δ

(

3

)

=

(

θ

(

3

)

)

δ

(

4

)

?

g

′

(

z

(

3

)

)

\delta^{(3)}=(\theta^{(3)})\delta^{(4)}*g'(z^{(3)})

δ(3)=(θ(3))δ(4)?g′(z(3))。其中,

g

′

(

z

(

3

)

)

g'(z^{(3)})

g′(z(3))是

s

i

g

m

o

i

d

sigmoid

sigmoid函数的导数,

g

′

(

z

(

3

)

)

=

a

(

3

)

?

(

1

?

a

(

3

)

)

g'(z^{(3)})=a^{(3)}*(1-a^{(3)})

g′(z(3))=a(3)?(1?a(3))。而

(

(

θ

(

3

)

)

T

)

δ

(

4

)

{((\theta^{(3)})}^T)\delta^{(4)}

((θ(3))T)δ(4)则是权重导致的误差的和。下一步是继续计算第二层的误差:

δ

(

2

)

=

(

θ

(

2

)

)

δ

(

3

)

?

g

′

(

z

(

2

)

)

\delta^{(2)}=(\theta^{(2)})\delta^{(3)}*g'(z^{(2)})

δ(2)=(θ(2))δ(3)?g′(z(2))

# 训练

def train(self, X, y, y_trian, X_val, y_val, learning_rate=0.01, num_iters = 10000):

batch_size = 150

num_train = X.shape[0]

loss_list= []

accuracy_train = []

accuracy_val = []

for i in range(num_iters):

# 每次随机的选取小样本batch set去训练

batch_index = np.random.choice(num_train, batch_size, replace=True)

X_batch = X[batch_index]

y_batch = y[batch_index]

y_train_batch = y_train[batch_index]

loss, dtheta1, dtheta2, db1, db2 = self.loss(X_batch, y_batch)

loss_list.append(loss)

# update the weight

self.theta1 += -learning_rate * dtheta1

self.theta2 += -learning_rate * dtheta2

self.b1 += -learning_rate * db1

self.b2 += -learning_rate * db2

if i % 500 == 0:

print("i=%d,loss=%f" %(i,loss))

#record the train accuracy and validation accuracy

train_acc = np.mean(y_train_batch==self.predict(X_batch))

val_acc = np.mean(y_val==self.predict(X_val))

accuracy_train.append(train_acc)

accuracy_val.append(val_acc)

return loss_list, accuracy_train, accuracy_val

# 预测

def predict(self, X_test):

a2 = sigmoid(X_test.dot(self.theta1) + self.b1)

a3 = sigmoid(a2.dot(self.theta2) + self.b2)

y_pred = np.argmax(a3, axis=1)

return y_pred

pass

6. 定义一下数值梯度,用于梯度检查

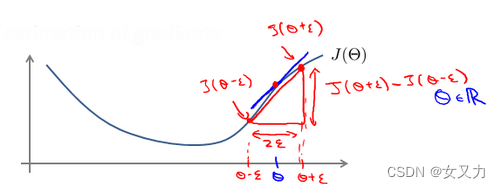

??当我们对一个较为复杂的模型(如神经网络)使用梯度下降法时,可能会存在一些不易察觉的错误,意味着虽然代价看上去在不断减小,但最终的结果可能并不是最优解。

??为了避免这样的问题,采用梯度的数值检验方法,其思想是:通过估计梯度值来检验我们计算的导数值是否真的是我们要求的。对梯度的估计的方法是在代价函数上沿着切线方向选择两个非常近的点,计算两个点的直线斜率来估计梯度。

??其代码实现为:

gradApprox = (J(theta + eps) - J(theta - eps)) / (2 * eps)

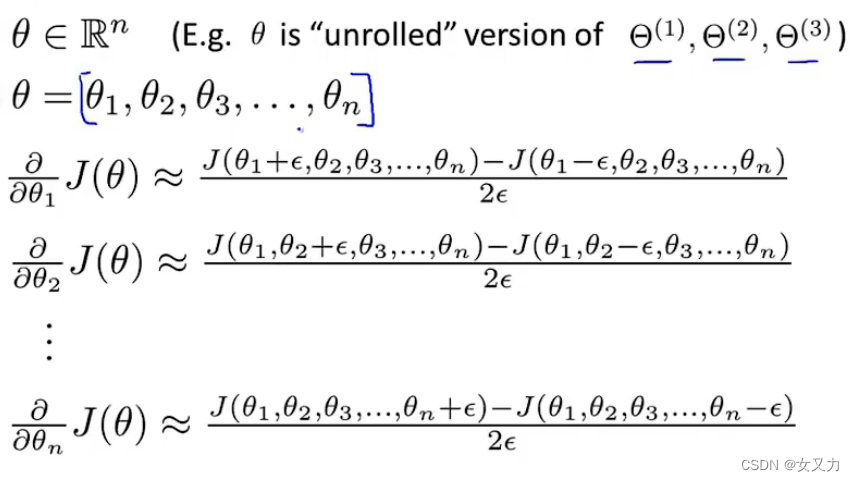

??当

θ

\theta

θ 是一个向量时,我们则需要对偏导数进行检验。因为代价函数的偏导数检验只针对一个参数的改变进行检验:

??对梯度检验的主要步骤如下:

????①实现反向传播算法计算偏导矩阵

D

i

j

l

D_{ij}^l

Dijl?

????②实现梯度检验计算近似梯度矩阵

g

r

a

d

A

p

p

r

o

x

gradApprox

gradApprox

????③确保它们的值相近

????④关掉梯度检验,使用反向传播代码进行学习

from sklearn.preprocessing import LabelBinarizer

def eval_numerical_gradient(f, x, verbose=True, h=0.00001):

"""

a naive implementation of numerical gradient of f at x

- f should be a function that takes a single argument

- x is the point (numpy array) to evaluate the gradient at

"""

fx = f(x) # evaluate function value at original point

grad = np.zeros_like(x)

# iterate over all indexes in x

it = np.nditer(x, flags=['multi_index'], op_flags=['readwrite'])

while not it.finished:

# evaluate function at x+h

ix = it.multi_index

oldval = x[ix]

x[ix] = oldval + h # increment by h

fxph = f(x) # evalute f(x + h)

x[ix] = oldval - h

fxmh = f(x) # evaluate f(x - h)

x[ix] = oldval # restore

# compute the partial derivative with centered formula

grad[ix] = (fxph - fxmh) / (2 * h) # the slope

if verbose:

print(ix, grad[ix])

it.iternext() # step to next dimension

return grad

7. 定义比较函数,用于两个梯度的对比

def rel_error(x, y):

return np.max(np.abs(x - y) / (np.maximum(1e-8, np.abs(x) + np.abs(y))))

8. 模拟一个小网络,进行梯度检查,这样可以判断模型正确性

input_size = 4

hidden_size = 10

output_size = 3

num_train = 5

9. 初始化模型

def init_toy_model():

np.random.seed(0)

return NeuralNetwork(input_size, hidden_size, output_size)

10. 初始化数据

def init_toy_data():

np.random.seed(1)

XX = 10 * np.random.randn(num_train, input_size)

yy = np.array([0, 1, 2, 2, 1])

return XX, yy

11. 通过反向传播计算参数

net = init_toy_model()

XX, yy = init_toy_data()

yy_label = LabelBinarizer().fit_transform(yy)

loss,dtheta1,dtheta2,db1,db2 = net.loss(XX,yy_label)

f = lambda theta: net.loss(XX, yy_label)[0] #f(w) = loss

dtheta1_ = eval_numerical_gradient(f,net.theta1,verbose=False)

dtheta2_ = eval_numerical_gradient(f,net.theta2,verbose=False)

db1_ = eval_numerical_gradient(f,net.b1,verbose=False)

db2_ = eval_numerical_gradient(f,net.b2,verbose=False)

print('%s max relative error: %e' % ('theta1', rel_error(dtheta1, dtheta1_)))

print('%s max relative error: %e' % ('theta2', rel_error(dtheta2, dtheta2_)))

print('%s max relative error: %e' % ('b1', rel_error(db1, db1_)))

print('%s max relative error: %e' % ('b2', rel_error(db2, db2_)))

theta1 max relative error: 1.812071e-08

theta2 max relative error: 3.958839e-10

b1 max relative error: 7.817713e-08

b2 max relative error: 5.888433e-11

12. 对神经网络进行训练

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import LabelBinarizer

X = data

y = target

#normalized 数据集合0均值化

X_mean = np.mean(X,axis=0)

X -= X_mean

#分隔数据集合,分为train,val,test集合

X_data,X_test,y_data,y_test = train_test_split(X,y,test_size=0.2)

#split the train and tne validation

X_train = X_data[0:1000]

y_train = y_data[0:1000]

X_val = X_data[1000:-1]

y_val = y_data[1000:-1]

print(X_train.shape,X_val.shape,X_test.shape)

#进行标签二值化,1-->[0,1,0,0,0,0,0,0,0,0] 5=[0,0,0,0,0,1,0,0,0,0]

y_train_label = LabelBinarizer().fit_transform(y_train)

classify = NeuralNetwork(X.shape[1],100,10)

#数据准备完毕,开始训练

print('start')

loss_list,accuracy_train,accuracy_val = classify.train(X_train,y_train_label

,y_train,X_val,y_val)

print('end')

(1000, 64) (436, 64) (360, 64)

start

i=0,loss=7.060120

i=500,loss=2.020329

i=1000,loss=1.208715

i=1500,loss=0.711440

i=2000,loss=0.608078

i=2500,loss=0.493830

i=3000,loss=0.404080

i=3500,loss=0.328199

i=4000,loss=0.307644

i=4500,loss=0.224095

i=5000,loss=0.224976

i=5500,loss=0.211779

i=6000,loss=0.183015

i=6500,loss=0.188465

i=7000,loss=0.179562

i=7500,loss=0.165125

i=8000,loss=0.164741

i=8500,loss=0.112698

i=9000,loss=0.127080

i=9500,loss=0.113350

end

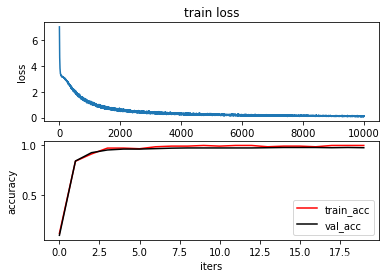

13. 可视化训练结果

#可视化一下训练结果

import matplotlib.pyplot as plt

plt.subplot(211)

plt.plot(loss_list)

plt.title('train loss')

plt.xlabel('iters')

plt.ylabel('loss')

plt.subplot(212)

plt.plot(accuracy_train,label = 'train_acc',color = 'red')

plt.plot(accuracy_val,label = 'val_acc', color = 'black')

plt.xlabel('iters')

plt.ylabel('accuracy')

plt.legend(loc = 'lower right')

plt.show()

14. 预测,并查看正确率

y_pred = classify.predict(X_test)

accuracy = np.mean(y_pred == y_test)

print("the accuracy is ",accuracy)

the accuracy is 0.9666666666666667

15. 随机挑选样本,查看预测结果

m,n = data.shape

example_size = 10

example_index = np.random.choice(m,example_size)

print(example_index)

for i, idx in enumerate(example_index):

print("%d example is number %d,we predict it as %d"%(i,target[idx],classify.predict(X[idx,:].reshape(1,-1))))

[ 879 1544 1654 1692 1209 1647 1723 831 207 928]

0 example is number 3,we predict it as 3

1 example is number 8,we predict it as 8

2 example is number 2,we predict it as 2

3 example is number 5,we predict it as 5

4 example is number 7,we predict it as 7

5 example is number 6,we predict it as 6

6 example is number 1,we predict it as 1

7 example is number 0,we predict it as 0

8 example is number 2,we predict it as 2

9 example is number 3,we predict it as 3