
1. super(MLP, self).__init__(**kwargs): this calls the __init__ function of nn.Block, which provides the prefix (sets the name) and params (sets the model parameters) arguments.

net3 = MLP(prefix='another_mlp_')

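2. net.name_scope(): calls the name_scope() function provided by nn.Block. The nn.Dense definitions are placed inside this scope. It adds a prefix to the names of all layers and parameters inside it, so that they are unique within the system.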

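Convolutional neural networks

Convolution: input/output with 2 channels

Pooling: similar to convolution, it looks at one small window at a time and outputs the maximum or the average element of that window.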
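The windowing described above can be sketched in plain Python. This is only an illustration on nested lists, not the MXNet implementation; the function name `pool2d` and the sample `image` are made up for the example.

```python
def pool2d(image, size=2, stride=2, mode="max"):
    """Slide a size x size window over a 2D list and reduce each window."""
    rows = (len(image) - size) // stride + 1
    cols = (len(image[0]) - size) // stride + 1
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # Collect the elements covered by the current window
            window = [image[r * stride + i][c * stride + j]
                      for i in range(size) for j in range(size)]
            if mode == "max":
                row.append(max(window))
            else:
                row.append(sum(window) / len(window))
        out.append(row)
    return out

# Hypothetical 4 x 4 input
image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
max_pooled = pool2d(image, mode="max")   # [[6, 8], [14, 16]]
avg_pooled = pool2d(image, mode="avg")   # [[3.5, 5.5], [11.5, 13.5]]
```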

LeNet

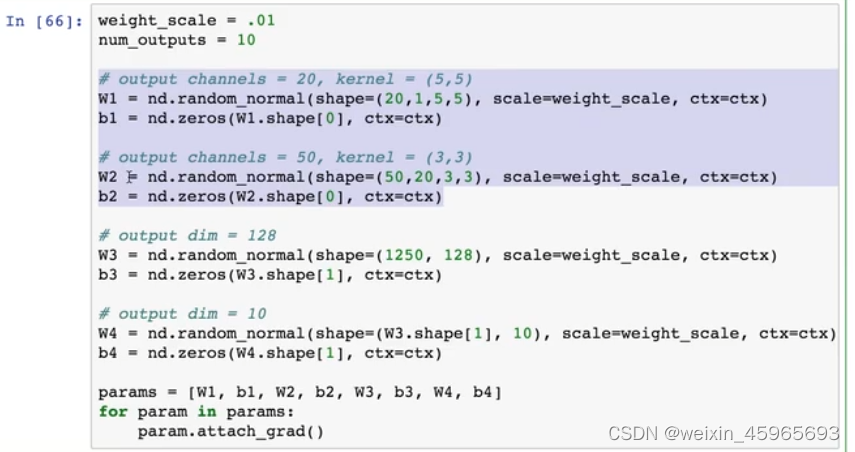

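Two convolutional layers + two fully connected layers

Weight format: output_filter×input_filter×height×width (the code below reads num_filter from W.shape[0])

When the input has multiple channels, each channel has its own weights; each channel is convolved separately and the results are then summed across channels.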

$$
\mathrm{conv}(data, w, b) = \sum_{i} \mathrm{conv}(data[:, i, :, :],\ w[0, i, :, :],\ b)
$$
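The sum over input channels can be checked with a small pure-Python sketch. It handles a single sample and a single output channel, and it adds the bias once after the sum (an assumption about how to read `b` in the formula). The names `conv2d_single` and `conv2d_multi_in` and the sample values are illustrative only.

```python
def conv2d_single(channel, kernel):
    """Valid cross-correlation of one 2D channel with one 2D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    oh = len(channel) - kh + 1
    ow = len(channel[0]) - kw + 1
    return [[sum(channel[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(ow)]
            for r in range(oh)]

def conv2d_multi_in(data, weight, bias=0.0):
    """data: C x H x W, weight: C x kh x kw; returns one output channel."""
    # Convolve every input channel with its own kernel
    per_channel = [conv2d_single(ch, k) for ch, k in zip(data, weight)]
    oh, ow = len(per_channel[0]), len(per_channel[0][0])
    # Sum the per-channel results, then add the bias once
    return [[sum(p[r][c] for p in per_channel) + bias
             for c in range(ow)]
            for r in range(oh)]

# Hypothetical input with 2 channels of size 3 x 3
data = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]],
        [[1, 0, 1], [0, 1, 0], [1, 0, 1]]]
# One 2 x 2 kernel per input channel
weight = [[[1, 0], [0, 1]],
          [[1, 1], [1, 1]]]
out = conv2d_multi_in(data, weight, bias=1.0)  # [[9.0, 11.0], [15.0, 17.0]]
```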

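A convolution module is usually "convolutional layer - activation layer - pooling layer". Its output is then flattened into a 2D matrix and passed to the fully connected layers that follow.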

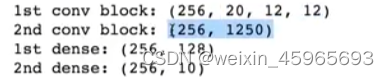

from mxnet import nd

# W1..W4 and b1..b4 are the model parameters, assumed to be defined beforehand
def net(X, verbose=False):
    X = X.as_in_context(W1.context)
    # First convolutional layer
    h1_conv = nd.Convolution(data=X, weight=W1, bias=b1, kernel=W1.shape[2:], num_filter=W1.shape[0])
    h1_activation = nd.relu(h1_conv)
    h1 = nd.Pooling(data=h1_activation, pool_type="max", kernel=(2,2), stride=(2,2))
    # Second convolutional layer
    h2_conv = nd.Convolution(data=h1, weight=W2, bias=b2, kernel=W2.shape[2:], num_filter=W2.shape[0])
    h2_activation = nd.relu(h2_conv)
    # Pool the second activation (the notes pooled h1_activation here by mistake)
    h2 = nd.Pooling(data=h2_activation, pool_type="max", kernel=(2,2), stride=(2,2))
    h2 = nd.flatten(h2)
    # First fully connected layer
    h3_linear = nd.dot(h2, W3) + b3
    h3 = nd.relu(h3_linear)
    # Second fully connected layer
    h4_linear = nd.dot(h3, W4) + b4
    if verbose:
        print('1st conv block:', h1.shape)
        print('2nd conv block:', h2.shape)
        print('1st dense:', h3.shape)
        print('2nd dense:', h4_linear.shape)
        print('output:', h4_linear)
    return h4_linear

gluon

No need to specify the input size

net = gluon.nn.Sequential()
with net.name_scope():
    net.add(gluon.nn.Conv2D(channels=20, kernel_size=5, activation='relu'))
    net.add(gluon.nn.MaxPool2D(pool_size=2, strides=2))
    net.add(gluon.nn.Conv2D(channels=50, kernel_size=3, activation='relu'))
    net.add(gluon.nn.MaxPool2D(pool_size=2, strides=2))
    net.add(gluon.nn.Flatten())
    net.add(gluon.nn.Dense(128, activation="relu"))
    net.add(gluon.nn.Dense(10))
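Gluon works out the input sizes by itself, but the shapes can also be traced by hand. The sketch below does this in plain Python for the four convolution and pooling layers above, assuming a single-channel 28 x 28 input (the input size is an assumption, not stated in these notes). The helper `conv_out` is a made-up name.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output height/width of a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1

# (kind, output channels, kernel size, stride) for each layer above
layers = [("conv", 20, 5, 1),
          ("pool", None, 2, 2),
          ("conv", 50, 3, 1),
          ("pool", None, 2, 2)]

channels, size = 1, 28   # assumed input: 1 channel, 28 x 28
shapes = []
for kind, out_channels, kernel, stride in layers:
    size = conv_out(size, kernel, stride)
    if kind == "conv":
        channels = out_channels   # pooling keeps the channel count
    shapes.append((channels, size, size))

# Number of features seen by the first Dense layer after Flatten
flat_features = channels * size * size
print(shapes, flat_features)
```

Under that assumption the first Dense layer would receive 50 * 5 * 5 = 1250 features per sample.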

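Creating neural network blocks

What is nn.Block? It provides flexible network definition

In gluon, nn.Block is a general-purpose component. A whole neural network can be an nn.Block, and a single layer is also an nn.Block. nn.Block instances can be nested (almost) without limit to build new nn.Block instances. It mainly provides:

- storing parameters

- describing how forward is executed

- automatic differentiation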

from mxnet import nd
from mxnet.gluon import nn

class MLP(nn.Block):
    def __init__(self, **kwargs):
        super(MLP, self).__init__(**kwargs)
        with self.name_scope():
            self.dense0 = nn.Dense(256)
            self.dense1 = nn.Dense(10)

    def forward(self, x):
        return self.dense1(nd.relu(self.dense0(x)))

class FancyMLP(nn.Block):
    def __init__(self, **kwargs):
        super(FancyMLP, self).__init__(**kwargs)
        with self.name_scope():
            self.dense = nn.Dense(256)
            # A plain NDArray, not a registered model parameter
            self.weight = nd.random_uniform(shape=(256,20))

    def forward(self, x):
        x = nd.relu(self.dense(x))
        print('layer 1:', x)
        x = nd.relu(nd.dot(x, self.weight) + 1)
        print('layer 2:', x)
        # The same dense layer is reused, so x must have 20 features on input
        x = nd.relu(self.dense(x))
        return x

fancy_mlp = FancyMLP()
fancy_mlp.initialize()
# x is assumed to be an input batch with 20 features, defined beforehand
y = fancy_mlp(x)
print(y.shape)

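What is nn.Sequential? It makes definitions simpler

nn.Sequential is an nn.Block container. Blocks are added to it with add, and it generates the forward() function automatically, which simply runs the added nn.Block instances one after another.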

class Sequential(nn.Block):
    def __init__(self, **kwargs):
        super(Sequential, self).__init__(**kwargs)

    def add(self, block):
        self._children.append(block)

    def forward(self, x):
        for block in self._children:
            x = block(x)
        return x

add layer

net = nn.Sequential()
with net.name_scope():
    net.add(nn.Dense(256, activation="relu"))
    net.add(nn.Dense(10))
net.initialize()

Almost all classes under nn are subclasses of nn.Block, so they can be nested easily.

class RecMLP(nn.Block):
    def __init__(self, **kwargs):
        super(RecMLP, self).__init__(**kwargs)
        self.net = nn.Sequential()
        with self.name_scope():
            self.net.add(nn.Dense(256, activation="relu"))
            self.net.add(nn.Dense(128, activation="relu"))
            # forward() calls self.dense, so the 64-unit layer is kept as an attribute;
            # relu is applied to it in forward()
            self.dense = nn.Dense(64)

    def forward(self, x):
        return nd.relu(self.dense(self.net(x)))

rec_mlp = nn.Sequential()
rec_mlp.add(RecMLP())
rec_mlp.add(nn.Dense(10))
print(rec_mlp)

Initializing model parameters

Access: params = net.collect_params()

from mxnet import init, nd

class MyInit(init.Initializer):
    def __init__(self):
        super(MyInit, self).__init__()
        self._verbose = True

    def _init_weight(self, _, arr):
        # Initialize the weights; with out=arr there is no need to specify the shape
        nd.random.uniform(low=5, high=10, out=arr)

    def _init_bias(self, _, arr):
        # Initialize the bias
        arr[:] = 2

params.initialize(init=MyInit(), force_reinit=True)
print(net[0].weight.data(), net[0].bias.data())

Sharing model parameters

net.add(nn.Dense(4, in_units=4, activation="relu"))

net.add(nn.Dense(4, in_units=4, activation="relu", params=net[-1].params))

Defining a simple layer

The code below defines a layer that subtracts the mean from its input.

from mxnet import nd
from mxnet.gluon import nn

class CenteredLayer(nn.Block):
    def __init__(self, **kwargs):
        super(CenteredLayer, self).__init__(**kwargs)

    def forward(self, x):
        return x - x.mean()

layer = CenteredLayer()  # no model parameters, so no need to call initialize
layer(nd.array([1,2,3,4,5]))
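For reference, the same arithmetic on a plain Python list, as a quick way to see what the layer should return for this input. The helper name `center` is made up for the illustration.

```python
def center(values):
    """Subtract the mean of the list from every element."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]

centered = center([1, 2, 3, 4, 5])   # mean is 3.0
print(centered)                      # [-2.0, -1.0, 0.0, 1.0, 2.0]
```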

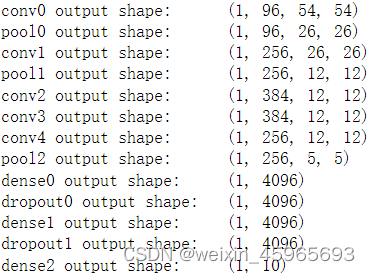

AlexNet: deep convolutional neural network

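net = nn.Sequential()
# Use a larger 11 x 11 window to capture objects, and a stride of 4 to greatly reduce
# the output height and width. The number of output channels is also much larger than in LeNet
net.add(nn.Conv2D(96, kernel_size=11, strides=4, activation='relu'),
        nn.MaxPool2D(pool_size=3, strides=2),
        # Use a smaller window with padding 2 so that input and output height and width match,
        # and increase the number of output channels
        nn.Conv2D(256, kernel_size=5, padding=2, activation='relu'),
        nn.MaxPool2D(pool_size=3, strides=2),
        # Three consecutive convolutional layers with an even smaller window. Except for the last one,
        # the number of output channels increases further. No pooling layer follows the first two
        # convolutional layers, so height and width are not reduced there
        nn.Conv2D(384, kernel_size=3, padding=1, activation='relu'),
        nn.Conv2D(384, kernel_size=3, padding=1, activation='relu'),
        nn.Conv2D(256, kernel_size=3, padding=1, activation='relu'),
        nn.MaxPool2D(pool_size=3, strides=2),
        # The fully connected layers have several times more outputs than in LeNet.
        # Dropout layers are used to mitigate overfitting
        nn.Dense(4096, activation="relu"), nn.Dropout(0.5),
        nn.Dense(4096, activation="relu"), nn.Dropout(0.5),
        # Output layer. Fashion-MNIST is used here, so there are 10 classes instead of the 1000 in the paper
        nn.Dense(10))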

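Trick: dropout, a remedy for overfitting

Usually the following is applied to the input layer or a hidden layer:

- randomly select part of the layer's outputs as elements to drop

- multiply the dropped elements by 0

- rescale (stretch) the elements that are kept

On every pass only part of the model is active.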
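Why the kept elements are stretched can be seen from a short expectation calculation. The notation is introduced here for illustration: $p$ is the drop probability, $h_i$ an output of the layer, and $\xi_i$ a random 0/1 mask.

$$
h_i' = \frac{\xi_i}{1-p}\, h_i, \qquad P(\xi_i = 0) = p, \quad P(\xi_i = 1) = 1 - p
$$

$$
E[h_i'] = \frac{E[\xi_i]}{1-p}\, h_i = \frac{1-p}{1-p}\, h_i = h_i
$$

Dividing by $1-p$ therefore keeps the expected value of each output unchanged, which is why no rescaling is needed at test time.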

def dropout(X, drop_prob):
    assert 0 <= drop_prob <= 1
    keep_prob = 1 - drop_prob
    # In this case all elements are dropped
    if keep_prob == 0:
        return X.zeros_like()
    # Randomly select part of the layer's outputs as elements to drop
    mask = nd.random.uniform(0, 1, X.shape) < keep_prob
    return mask * X / keep_prob

from mxnet import autograd, nd

num_inputs, num_outputs, num_hiddens1, num_hiddens2 = 784, 10, 256, 256

W1 = nd.random.normal(scale=0.01, shape=(num_inputs, num_hiddens1))
b1 = nd.zeros(num_hiddens1)
W2 = nd.random.normal(scale=0.01, shape=(num_hiddens1, num_hiddens2))
b2 = nd.zeros(num_hiddens2)
W3 = nd.random.normal(scale=0.01, shape=(num_hiddens2, num_outputs))
b3 = nd.zeros(num_outputs)

params = [W1, b1, W2, b2, W3, b3]
for param in params:
    param.attach_grad()

drop_prob1, drop_prob2 = 0.2, 0.5

def net(X):
    X = X.reshape((-1, num_inputs))
    H1 = (nd.dot(X, W1) + b1).relu()
    if autograd.is_training():  # use dropout only while training the model
        H1 = dropout(H1, drop_prob1)  # dropout after the first fully connected layer
    H2 = (nd.dot(H1, W2) + b2).relu()
    if autograd.is_training():
        H2 = dropout(H2, drop_prob2)  # dropout after the second fully connected layer
    return nd.dot(H2, W3) + b3

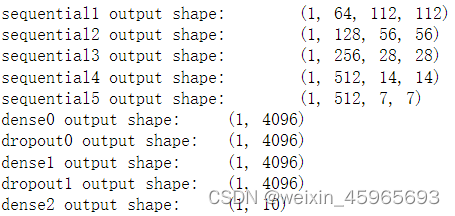

VGG: very deep networks built from repeated elements

def vgg_block(num_convs, num_channels):
    blk = nn.Sequential()
    for _ in range(num_convs):
        blk.add(nn.Conv2D(num_channels, kernel_size=3,
                          padding=1, activation='relu'))
    blk.add(nn.MaxPool2D(pool_size=2, strides=2))
    return blk

def vgg(conv_arch):
    net = nn.Sequential()
    # Convolutional part
    for (num_convs, num_channels) in conv_arch:
        net.add(vgg_block(num_convs, num_channels))
    # Fully connected part
    net.add(nn.Dense(4096, activation='relu'), nn.Dropout(0.5),
            nn.Dense(4096, activation='relu'), nn.Dropout(0.5),
            nn.Dense(10))
    return net

# conv_arch is a sequence of (num_convs, num_channels) pairs; it is not defined in these notes
net = vgg(conv_arch)

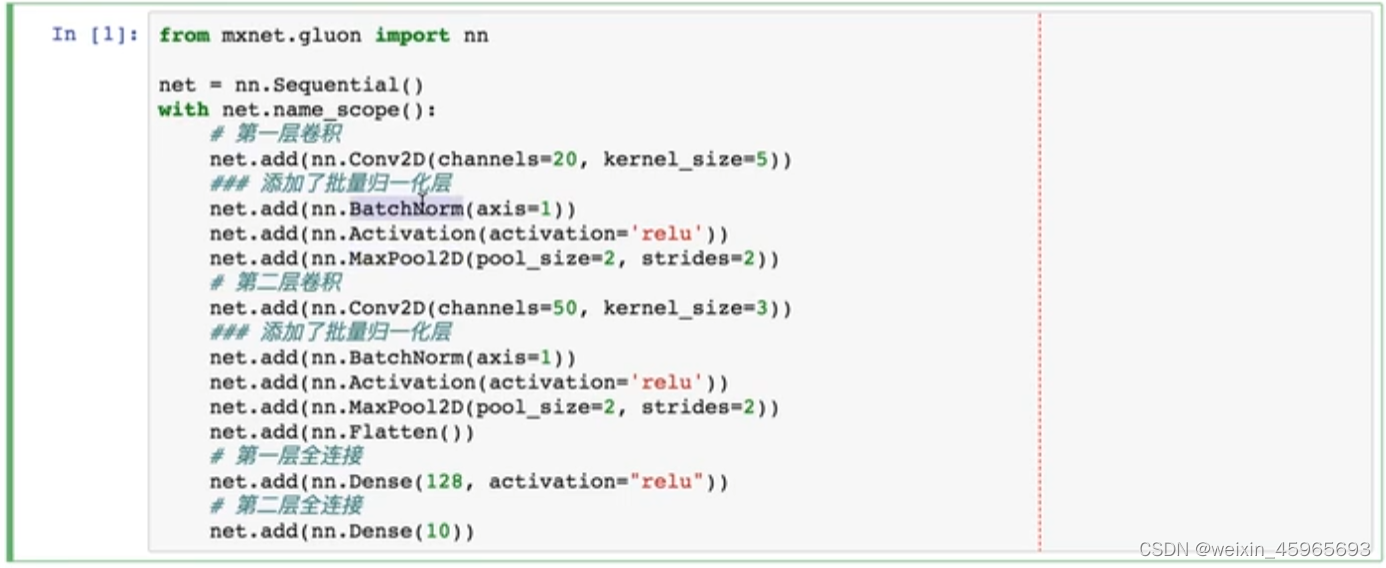

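Batch normalization (batch-norm)

Benefit: faster convergence

Normalize every layer

Normalize every channel

Mean 0, variance 1

At test time, use the mean and variance of the whole dataset

However, when the training data is very large this is expensive to compute. So a moving average is used as an approximation (moving_mean and moving_variance).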
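How a moving average approximates the dataset statistic can be sketched in a few lines of plain Python. The helper `update_moving` is a made-up name, and the constant batch means are artificial data chosen so the target value is known.

```python
def update_moving(moving, batch_value, momentum=0.9):
    """One exponential-moving-average step, as used for moving_mean."""
    return momentum * moving + (1.0 - momentum) * batch_value

# Artificial example: every batch has mean 2.0, the estimate starts at 0.0
batch_means = [2.0] * 50
moving_mean = 0.0
for m in batch_means:
    moving_mean = update_moving(moving_mean, m)

# The estimate approaches 2.0 from below; the gap shrinks by a factor 0.9 per step
print(moving_mean)
```

A larger momentum gives a smoother but slower-moving estimate; a smaller one tracks recent batches more closely.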

def batch_norm(X, gamma, beta, is_training, moving_mean, moving_variance, eps=1e-5, moving_momentum=0.9):
    assert len(X.shape) in (2, 4)
    # Fully connected: batch_size x feature
    if len(X.shape) == 2:
        # Mean and variance of every input dimension over the samples
        mean = X.mean(axis=0)
        variance = ((X - mean)**2).mean(axis=0)
    # 2D convolution: batch_size x channel x height x width
    else:
        # Compute mean and variance per channel; keep the 4D shape so broadcasting works
        mean = X.mean(axis=(0,2,3), keepdims=True)
        variance = ((X - mean)**2).mean(axis=(0,2,3), keepdims=True)
        # Reshape so broadcasting works
        moving_mean = moving_mean.reshape(mean.shape)
        moving_variance = moving_variance.reshape(mean.shape)
    # Normalize
    if is_training:
        X_hat = (X - mean) / nd.sqrt(variance + eps)
        # Important: update the global mean and variance
        moving_mean[:] = moving_momentum * moving_mean + (1.0 - moving_momentum) * mean
        moving_variance[:] = moving_momentum * moving_variance + (1.0 - moving_momentum) * variance
    else:
        # Important: at test time use the global mean and variance
        X_hat = (X - moving_mean) / nd.sqrt(moving_variance + eps)
    # Scale and shift
    return gamma.reshape(mean.shape) * X_hat + beta.reshape(mean.shape)

Using it in gluon

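NiN: network in network

1. Every convolution is followed by 1×1 convolutions

A 1×1 convolutional layer can be viewed as a fully connected layer in which each element along the spatial dimensions (height and width) plays the role of a sample and the channels play the role of features.

NiN therefore uses 1×1 convolutional layers in place of fully connected layers.

2. The fully connected layers are removed; the last layer uses average pooling directly

Advantages:

1. Built from blocks

2. Small network (drawbacks of fully connected layers: 1. large model, 2. prone to overfitting and hard to tune; advantage: fast convergence)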
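The claim that a 1×1 convolution acts like a fully connected layer applied at every pixel can be checked with a small pure-Python sketch. The functions `conv1x1` and `dense` and the sample numbers are illustrative only; bias and activation are left out.

```python
def conv1x1(data, weight):
    """data: C_in x H x W, weight: C_out x C_in. Returns C_out x H x W."""
    c_in, h, w = len(data), len(data[0]), len(data[0][0])
    return [[[sum(weight[o][i] * data[i][r][c] for i in range(c_in))
              for c in range(w)]
             for r in range(h)]
            for o in range(len(weight))]

def dense(features, weight):
    """Fully connected layer without bias: one dot product per output unit."""
    return [sum(wi * xi for wi, xi in zip(row, features)) for row in weight]

# Hypothetical input: 2 channels, 2 x 2 pixels
data = [[[1, 2], [3, 4]],
        [[5, 6], [7, 8]]]
# 3 output channels, 2 input channels
weight = [[1, 0], [0, 1], [1, 1]]

out = conv1x1(data, weight)

# Take the pixel at row 1, column 0: its channel values are the "features"
pixel = [data[i][1][0] for i in range(2)]        # [3, 7]
dense_out = dense(pixel, weight)                 # [3, 7, 10]
conv_pixel = [out[o][1][0] for o in range(3)]    # same values
```

Each pixel is treated as one sample and its channels as the features, which matches the description above.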

def nin_block(num_channels, kernel_size, strides, padding):
    blk = nn.Sequential()
    blk.add(nn.Conv2D(num_channels, kernel_size,
                      strides, padding, activation='relu'),
            nn.Conv2D(num_channels, kernel_size=1, activation='relu'),
            nn.Conv2D(num_channels, kernel_size=1, activation='relu'))
    # The hyperparameters of the second and third convolutional layers are usually fixed
    return blk

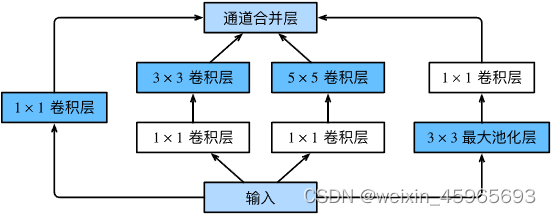

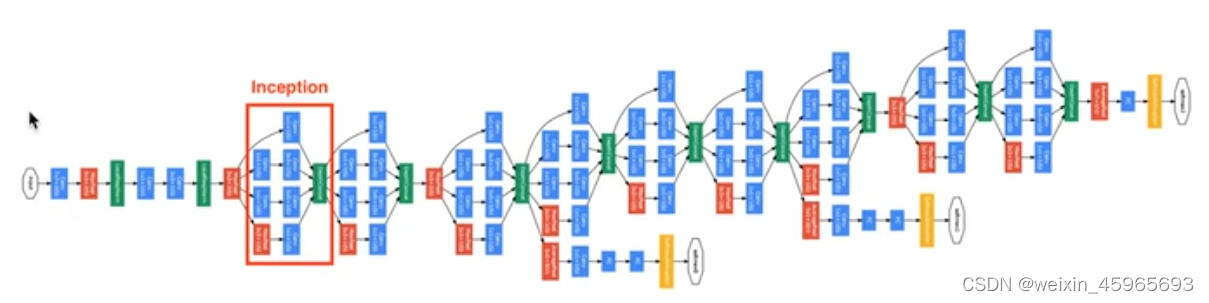

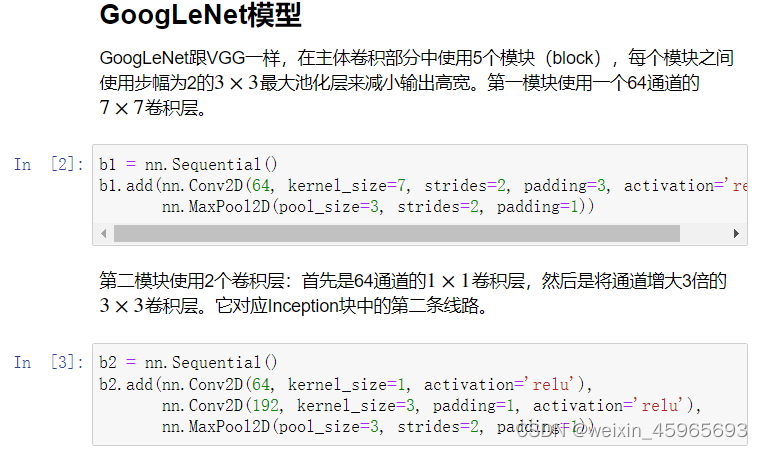

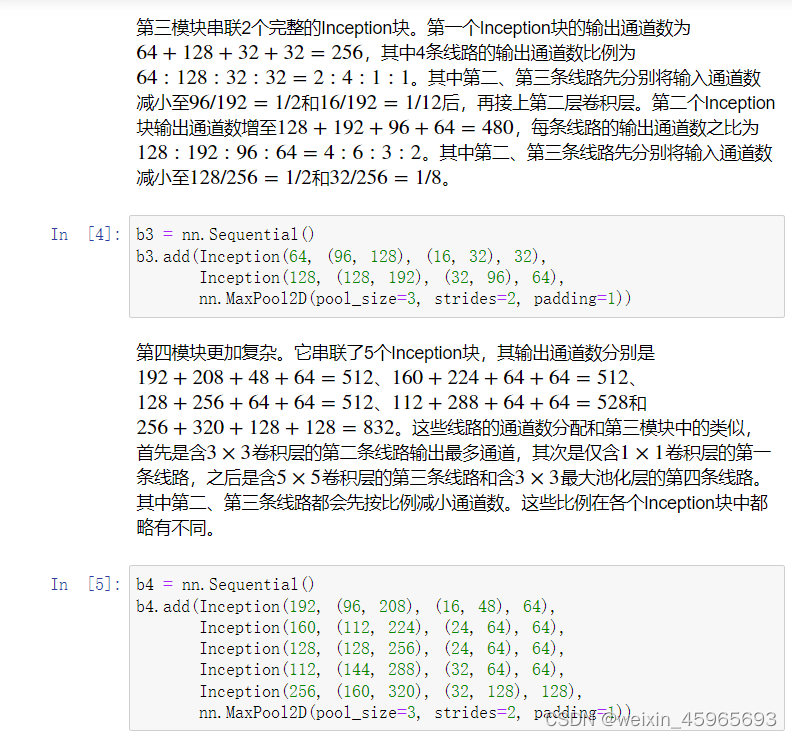

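GoogLeNet: networks with parallel concatenations

Defining Inception

Inception:

1. Four parallel paths capture information at different spatial scales

2. 1×1 convolutions reduce the number of channels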

class Inception(nn.Block):
    # c1 - c4 are the numbers of output channels of the layers in each path
    def __init__(self, c1, c2, c3, c4, **kwargs):
        super(Inception, self).__init__(**kwargs)
        # Path 1: a single 1 x 1 convolutional layer
        self.p1_1 = nn.Conv2D(c1, kernel_size=1, activation='relu')
        # Path 2: 1 x 1 convolutional layer followed by a 3 x 3 convolutional layer
        self.p2_1 = nn.Conv2D(c2[0], kernel_size=1, activation='relu')
        self.p2_2 = nn.Conv2D(c2[1], kernel_size=3, padding=1,
                              activation='relu')
        # Path 3: 1 x 1 convolutional layer followed by a 5 x 5 convolutional layer
        self.p3_1 = nn.Conv2D(c3[0], kernel_size=1, activation='relu')
        self.p3_2 = nn.Conv2D(c3[1], kernel_size=5, padding=2,
                              activation='relu')
        # Path 4: 3 x 3 max pooling layer followed by a 1 x 1 convolutional layer
        self.p4_1 = nn.MaxPool2D(pool_size=3, strides=1, padding=1)
        self.p4_2 = nn.Conv2D(c4, kernel_size=1, activation='relu')

    def forward(self, x):
        p1 = self.p1_1(x)
        p2 = self.p2_2(self.p2_1(x))
        p3 = self.p3_2(self.p3_1(x))
        p4 = self.p4_2(self.p4_1(x))
        return nd.concat(p1, p2, p3, p4, dim=1)  # concatenate the outputs along the channel dimension
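Because the four paths are concatenated along the channel dimension and all keep the height and width, the number of output channels of an Inception block is just the sum of the last layer of each path. A minimal sketch, with a made-up helper name and example channel numbers chosen only for illustration:

```python
def inception_out_channels(c1, c2, c3, c4):
    """Channels after concatenating the four paths of the Inception block above.

    c2 and c3 are pairs; only their second entry reaches the output,
    the first entry is the width of the 1 x 1 reduction layer.
    """
    return c1 + c2[1] + c3[1] + c4

# Illustrative values (an assumption, not taken from these notes)
total = inception_out_channels(64, (96, 128), (16, 32), 32)
print(total)   # 64 + 128 + 32 + 32 = 256
```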

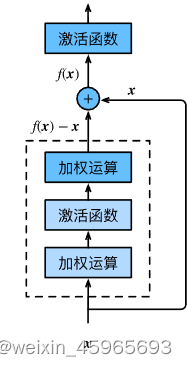

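ResNet: deep residual networks

Earlier attempts at a solution:

1. Layer-wise training: first train the layers close to the data, then gradually add later layers. This does not work well and is cumbersome.

2. Use wider layers (more output channels) instead of more depth to increase model complexity. But going wider (complexity grows quadratically) works less well than going deeper (complexity grows linearly).

ResNet adds cross-layer connections to address the problem of gradients shrinking as they are propagated back layer by layer.

Jump: during backpropagation, the gradient at the top layer can skip the intermediate layers and reach the bottom layer directly, which keeps the bottom-layer gradient from becoming too small.

The network on the left is easy to train; the part that this small network fails to fit (the residual) is captured by the network on the right. Such cross-layer connections allow the lower layers to be trained thoroughly.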
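The effect of the cross-layer connection on the gradient can be written out in one line. The notation is introduced here for illustration: $x$ is the block input, $F$ the stacked layers inside the block, $y$ the block output before the final activation, and $\ell$ the loss.

$$
y = x + F(x), \qquad \frac{\partial \ell}{\partial x} = \frac{\partial \ell}{\partial y}\left(I + \frac{\partial F}{\partial x}\right)
$$

The identity term $I$ passes $\partial \ell / \partial y$ through unchanged, so even when $\partial F / \partial x$ is small the lower layers still receive a gradient.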

class Residual(nn.Block):  # this class is saved in the d2lzh package for later use
    def __init__(self, num_channels, use_1x1conv=False, strides=1, **kwargs):
        super(Residual, self).__init__(**kwargs)
        self.conv1 = nn.Conv2D(num_channels, kernel_size=3, padding=1,
                               strides=strides)
        self.conv2 = nn.Conv2D(num_channels, kernel_size=3, padding=1)
        if use_1x1conv:
            # 1 x 1 convolution so that X matches the shape of Y before the addition
            self.conv3 = nn.Conv2D(num_channels, kernel_size=1,
                                   strides=strides)
        else:
            self.conv3 = None
        self.bn1 = nn.BatchNorm()
        self.bn2 = nn.BatchNorm()

    def forward(self, X):
        Y = nd.relu(self.bn1(self.conv1(X)))
        Y = self.bn2(self.conv2(Y))
        if self.conv3:
            X = self.conv3(X)
        return nd.relu(Y + X)

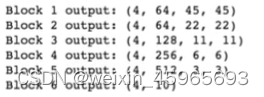

class ResNet(nn.Block):
    def __init__(self, num_classes, verbose=False, **kwargs):
        super(ResNet, self).__init__(**kwargs)
        self.verbose = verbose
        with self.name_scope():
            # block 1
            b1 = nn.Conv2D(64, kernel_size=7, strides=2)
            # block 2
            b2 = nn.Sequential()
            b2.add(
                nn.MaxPool2D(pool_size=3, strides=2),
                Residual(64),
                Residual(64),
            )
            # block 3
            # The notes passed same_shape=False here; with the Residual class above,
            # changing the shape corresponds to use_1x1conv=True, strides=2
            b3 = nn.Sequential()
            b3.add(
                Residual(128, use_1x1conv=True, strides=2),
                Residual(128)
            )
            # block 4
            b4 = nn.Sequential()
            b4.add(
                Residual(256, use_1x1conv=True, strides=2),
                Residual(256)
            )
            # block 5
            b5 = nn.Sequential()
            b5.add(
                Residual(512, use_1x1conv=True, strides=2),
                Residual(512)
            )
            # block 6
            b6 = nn.Sequential()
            b6.add(
                nn.AvgPool2D(pool_size=3),
                nn.Dense(num_classes)
            )
            # chain all blocks together
            self.net = nn.Sequential()
            self.net.add(b1, b2, b3, b4, b5, b6)

    def forward(self, x):
        out = x
        for i, b in enumerate(self.net):
            out = b(out)
            if self.verbose:
                print('Block %d output: %s' % (i+1, out.shape))
        return out

Channels: ×2, ×2, ×2, ...

Input height and width: halved, halved, halved, ...
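The doubling and halving pattern can be traced with a short plain-Python sketch. It assumes a stage input of 64 channels at 56 x 56 (an assumed size, not stated in these notes) and that each later stage starts with a 3 x 3 convolution with padding 1 and stride 2, as in the Residual class above. The helper `conv_out` is a made-up name.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output height/width of a convolution window."""
    return (size + 2 * padding - kernel) // stride + 1

channels, size = 64, 56          # assumed shape entering the first residual stage
stages = [(channels, size)]
for _ in range(3):               # the three stages that change the shape
    channels *= 2                                          # channels double
    size = conv_out(size, kernel=3, stride=2, padding=1)   # height/width halve
    stages.append((channels, size))

print(stages)   # [(64, 56), (128, 28), (256, 14), (512, 7)]
```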

To do (left for later):

bottleneck!

Conv->BN->Relu vs. BN->Relu->Conv

DenseNet: dense connections

Dense block

def conv_block(num_channels):
    blk = nn.Sequential()
    blk.add(nn.BatchNorm(), nn.Activation('relu'),
            nn.Conv2D(num_channels, kernel_size=3, padding=1))
    return blk

class DenseBlock(nn.Block):
    def __init__(self, num_convs, num_channels, **kwargs):
        super(DenseBlock, self).__init__(**kwargs)
        self.net = nn.Sequential()
        for _ in range(num_convs):
            self.net.add(conv_block(num_channels))

    def forward(self, X):
        for blk in self.net:
            Y = blk(X)
            X = nd.concat(X, Y, dim=1)  # concatenate input and output along the channel dimension
        return X

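Transition layer

A 1×1 convolutional layer reduces the number of channels, and an average pooling layer with stride 2 halves the height and width, which further lowers model complexity.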

def transition_block(num_channels):
    blk = nn.Sequential()
    blk.add(nn.BatchNorm(), nn.Activation('relu'),
            nn.Conv2D(num_channels, kernel_size=1),
            nn.AvgPool2D(pool_size=2, strides=2))
    return blk

Model

net = nn.Sequential()
net.add(nn.Conv2D(64, kernel_size=7, strides=2, padding=3),
        nn.BatchNorm(), nn.Activation('relu'),
        nn.MaxPool2D(pool_size=3, strides=2, padding=1))

num_channels, growth_rate = 64, 32  # num_channels is the current number of channels
num_convs_in_dense_blocks = [4, 4, 4, 4]

for i, num_convs in enumerate(num_convs_in_dense_blocks):
    net.add(DenseBlock(num_convs, growth_rate))
    # Number of output channels of the dense block just added
    num_channels += num_convs * growth_rate
    # Between dense blocks, add a transition layer that halves the number of channels
    if i != len(num_convs_in_dense_blocks) - 1:
        num_channels //= 2
        net.add(transition_block(num_channels))

net.add(nn.BatchNorm(), nn.Activation('relu'), nn.GlobalAvgPool2D(),
        nn.Dense(10))
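The channel bookkeeping in the loop above can be followed without MXNet. The sketch below repeats only the arithmetic, using the same starting values (64 channels, growth rate 32, four convolutions per dense block). The function name `densenet_channels` is made up for the illustration.

```python
def densenet_channels(initial, growth_rate, num_convs_in_dense_blocks):
    """Trace the channel count after every dense block and transition layer."""
    num_channels = initial
    trace = []
    last = len(num_convs_in_dense_blocks) - 1
    for i, num_convs in enumerate(num_convs_in_dense_blocks):
        # Each conv in a dense block appends growth_rate channels to its input
        num_channels += num_convs * growth_rate
        trace.append(("dense", num_channels))
        # A transition layer halves the channels, except after the last block
        if i != last:
            num_channels //= 2
            trace.append(("transition", num_channels))
    return trace

trace = densenet_channels(64, 32, [4, 4, 4, 4])
for name, channels in trace:
    print(name, channels)
```

With these values the final dense block outputs 248 channels, which is what the global average pooling layer receives.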