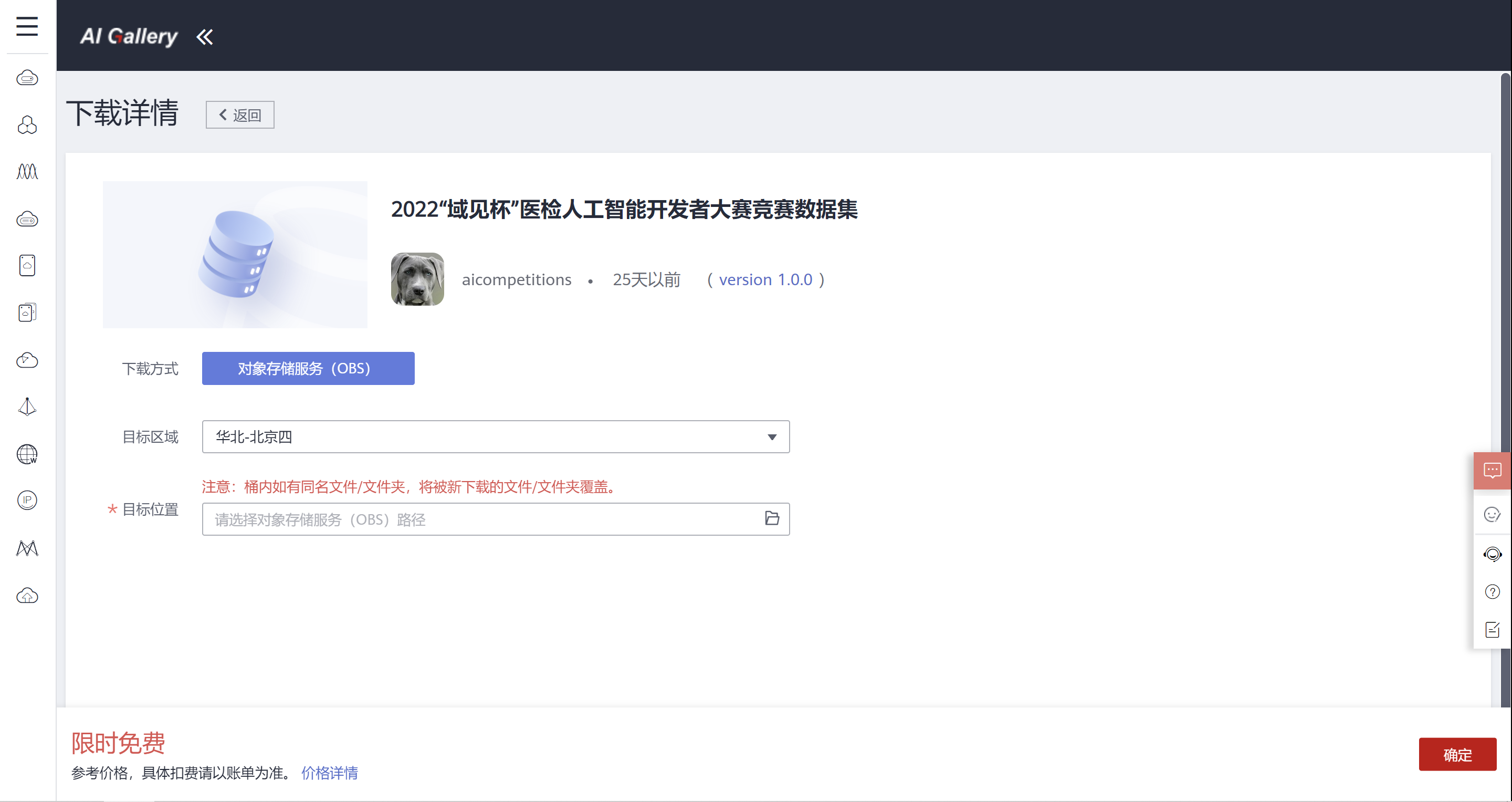

数据集下载

2022“域见杯”医检人工智能开发者大赛竞赛数据集 (huaweicloud.com)

目标区域选择“华北-北京四”(baseline notebook需要在该区域运行),目标位置选择自己创建的OBS桶,确定后即可开始数据集下载。

使用Notebook

“域见杯”_Baseline_code (huaweicloud.com)

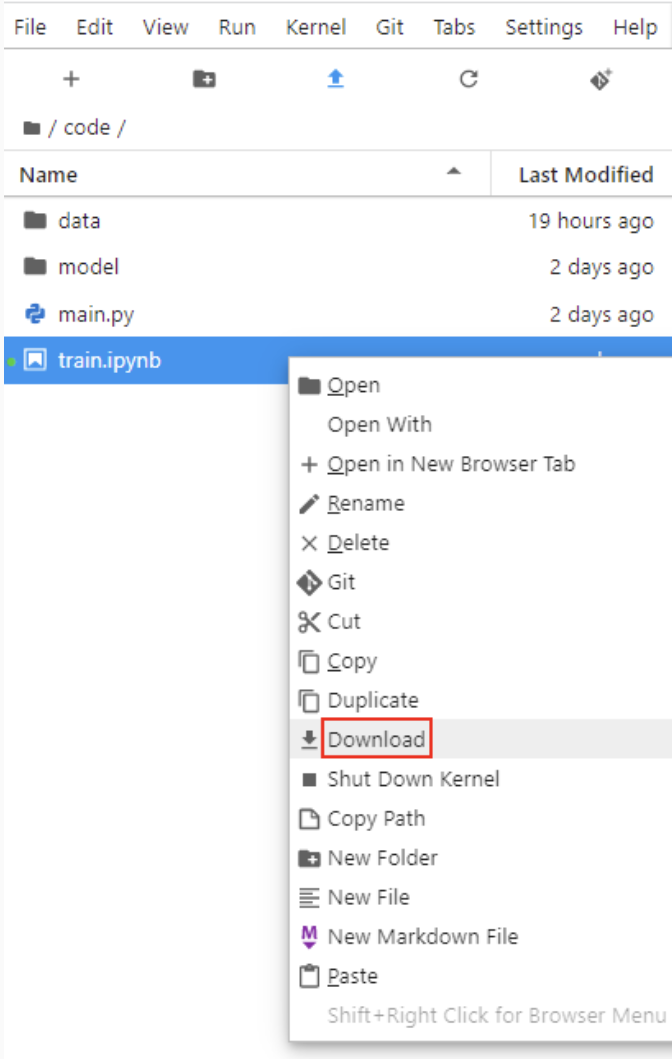

打开notebook后可能需要等待几十秒,然后左侧才会出现文件列表,然后再右键进行下载。

进入ModelArts的Notebook页面,然后创建属于自己的notebook在线环境,然后上传上一步下载的baseline notebook。

然后即可根据notebook里提供的详细教程,完成模型的训练开发。需将如下代码中"obs://***/kingmed_comp/DCCL_comp/" 修改成你自己的数据存储路径。

import moxing as moxmox.file.copy_parallel('obs://***/kingmed_comp/DCCL_comp/', './data/')

mox.file.copy_parallel('obs://ma-competitions-bj4/kingmed_comp/code/model/', './model/')import os

import time

import torch

import torch.nn as nn

import torch.optim as optim

from torch.optim import lr_scheduler

from torch.autograd import Variable

import torchvision

from torchvision import datasets, models, transforms加载数据集,并将其分为训练集和测试集。

dataTrans = transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

])

data_dir = './data'

train_data_dir = './data/train'

val_data_dir = './data/val'

train_dataset = datasets.ImageFolder(train_data_dir, dataTrans)

print(train_dataset.class_to_idx)

val_dataset = datasets.ImageFolder(val_data_dir, dataTrans)

image_datasets = {'train':train_dataset,'val':val_dataset}

# wrap your data and label into Tensor

dataloders = {x: torch.utils.data.DataLoader(image_datasets[x],

batch_size=64,

shuffle=True,

num_workers=4) for x in ['train', 'val']}

dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'val']}

# use gpu or not

use_gpu = torch.cuda.is_available()def train_model(model, lossfunc, optimizer, scheduler, num_epochs=10):

start_time = time.time()

best_model_wts = model.state_dict()

best_acc = 0.0

for epoch in range(num_epochs):

print('Epoch {}/{}'.format(epoch, num_epochs - 1))

print('-' * 10)

# Each epoch has a training and validation phase

for phase in ['train', 'val']:

if phase == 'train':

scheduler.step()

model.train(True) # Set model to training mode

else:

model.train(False) # Set model to evaluate mode

running_loss = 0.0

running_corrects = 0.0

# Iterate over data.

for data in dataloders[phase]:

# get the inputs

inputs, labels = data

# wrap them in Variable

if use_gpu:

inputs = Variable(inputs.cuda())

labels = Variable(labels.cuda())

else:

inputs, labels = Variable(inputs), Variable(labels)

# zero the parameter gradients

optimizer.zero_grad()

# forward

outputs = model(inputs)

_, preds = torch.max(outputs.data, 1)

loss = lossfunc(outputs, labels)

# backward + optimize only if in training phase

if phase == 'train':

loss.backward()

optimizer.step()

# statistics

running_loss += loss.data

running_corrects += torch.sum(preds == labels.data).to(torch.float32)

epoch_loss = running_loss / dataset_sizes[phase]

epoch_acc = running_corrects / dataset_sizes[phase]

print('{} Loss: {:.4f} Acc: {:.4f}'.format(

phase, epoch_loss, epoch_acc))

# deep copy the model

if phase == 'val' and epoch_acc > best_acc:

best_acc = epoch_acc

best_model_wts = model.state_dict()

elapsed_time = time.time() - start_time

print('Training complete in {:.0f}m {:.0f}s'.format(

elapsed_time // 60, elapsed_time % 60))

print('Best val Acc: {:4f}'.format(best_acc))

# load best model weights

model.load_state_dict(best_model_wts)

return model模型训练

采用resnet50神经网络结构训练模型,模型训练需要一定时间,等待该段代码运行完成后再往下执行。

# get model and replace the original fc layer with your fc layer

model_ft = models.resnet50(pretrained=True, progress=False)

num_ftrs = model_ft.fc.in_features

model_ft.fc = nn.Linear(num_ftrs, len(train_dataset.classes))

if use_gpu:

model_ft = model_ft.cuda()

# define loss function

lossfunc = nn.CrossEntropyLoss()

params = list(model_ft.fc.parameters())

optimizer_ft = optim.SGD(params, lr=0.001, momentum=0.9)

# Decay LR by a factor of 0.1 every 7 epochs

exp_lr_scheduler = lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)

model_ft = train_model(model=model_ft,

lossfunc=lossfunc,

optimizer=optimizer_ft,

scheduler=exp_lr_scheduler,

num_epochs=50)保存训练好的模型

torch.save(model_ft.state_dict(), './model/model.pth', _use_new_zipfile_serialization=False)模型测试

from math import exp

import numpy as np

from PIL import Image

import cv2

infer_transformation = transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

])

IMAGES_KEY = 'images'

MODEL_INPUT_KEY = 'images'

LABEL_OUTPUT_KEY = 'predicted_label'

MODEL_OUTPUT_KEY = 'scores'

LABELS_FILE_NAME = 'labels.txt'

def decode_image(file_content):

image = Image.open(file_content)

image = image.convert('RGB')

return image

def read_label_list(path):

with open(path, 'r',encoding="utf8") as f:

label_list = f.read().split(os.linesep)

label_list = [x.strip() for x in label_list if x.strip()]

return label_list

def resnet50(model_path):

"""Constructs a ResNet-50 model.

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

"""

model = models.resnet50(pretrained=False)

num_ftrs = model.fc.in_features

model.fc = nn.Linear(num_ftrs, 4)

model.load_state_dict(torch.load(model_path,map_location ='cpu'))

# model.load_state_dict(torch.load(model_path))

model.eval()

return model

def predict(file_name):

LABEL_LIST = read_label_list('./model/labels.txt')

model = resnet50('./model/model.pth')

image1 = decode_image(file_name)

input_img = infer_transformation(image1)

input_img = torch.autograd.Variable(torch.unsqueeze(input_img, dim=0).float(), requires_grad=False)

logits_list = model(input_img)[0].detach().numpy().tolist()

print(logits_list)

maxlist=max(logits_list)

print(maxlist)

z_exp = [exp(i-maxlist) for i in logits_list]

sum_z_exp = sum(z_exp)

softmax = [round(i / sum_z_exp, 3) for i in z_exp]

print(softmax)

labels_to_logits = {

LABEL_LIST[i]: s for i, s in enumerate(softmax)

}

predict_result = {

LABEL_OUTPUT_KEY: max(labels_to_logits, key=labels_to_logits.get),

MODEL_OUTPUT_KEY: labels_to_logits

}

return predict_result

file_name = './data/test/ASC-US&LSIL/03424.jpg'

result = predict(file_name) #可以替换其他图片

import matplotlib.pyplot as plt

plt.figure(figsize=(10,10)) #设置窗口大小

img = decode_image(file_name)

plt.imshow(img)

plt.show()

print(result)将训练好的模型导入ModelArts

将模型导入ModelArts,为后续推理测试、模型提交做准备。

from modelarts.session import Session

from modelarts.model import Model

from modelarts.config.model_config import TransformerConfig,Params

!pip install json5

import json5

import re

import traceback

import random

try:

session = Session()

config_path = 'model/config.json'

if mox.file.exists(config_path): # 判断一下是否存在配置文件,如果没有则不能导入模型

model_location = './model'

model_name = "kingmed_cc"

load_dict = json5.loads(mox.file.read(config_path))

model_type = load_dict['model_type']

re_name = '_'+str(random.randint(0,1000))

model_name += re_name

print("正在导入模型,模型名称:", model_name)

model_instance = Model(

session,

model_name=model_name, # 模型名称

model_version="1.0.0", # 模型版本

source_location_type='LOCAL_SOURCE',

source_location=model_location, # 模型文件路径

model_type=model_type, # 模型类型

)

print("所有模型导入完成")

except Exception as e:

print("发生了一些问题,请看下面的报错信息:")

traceback.print_exc()

print("模型导入失败")使用ModelArts创建推理应用

进入ModelArts的AI应用管理界面,然后按照如下步骤将你的模型包创建为AI应用。

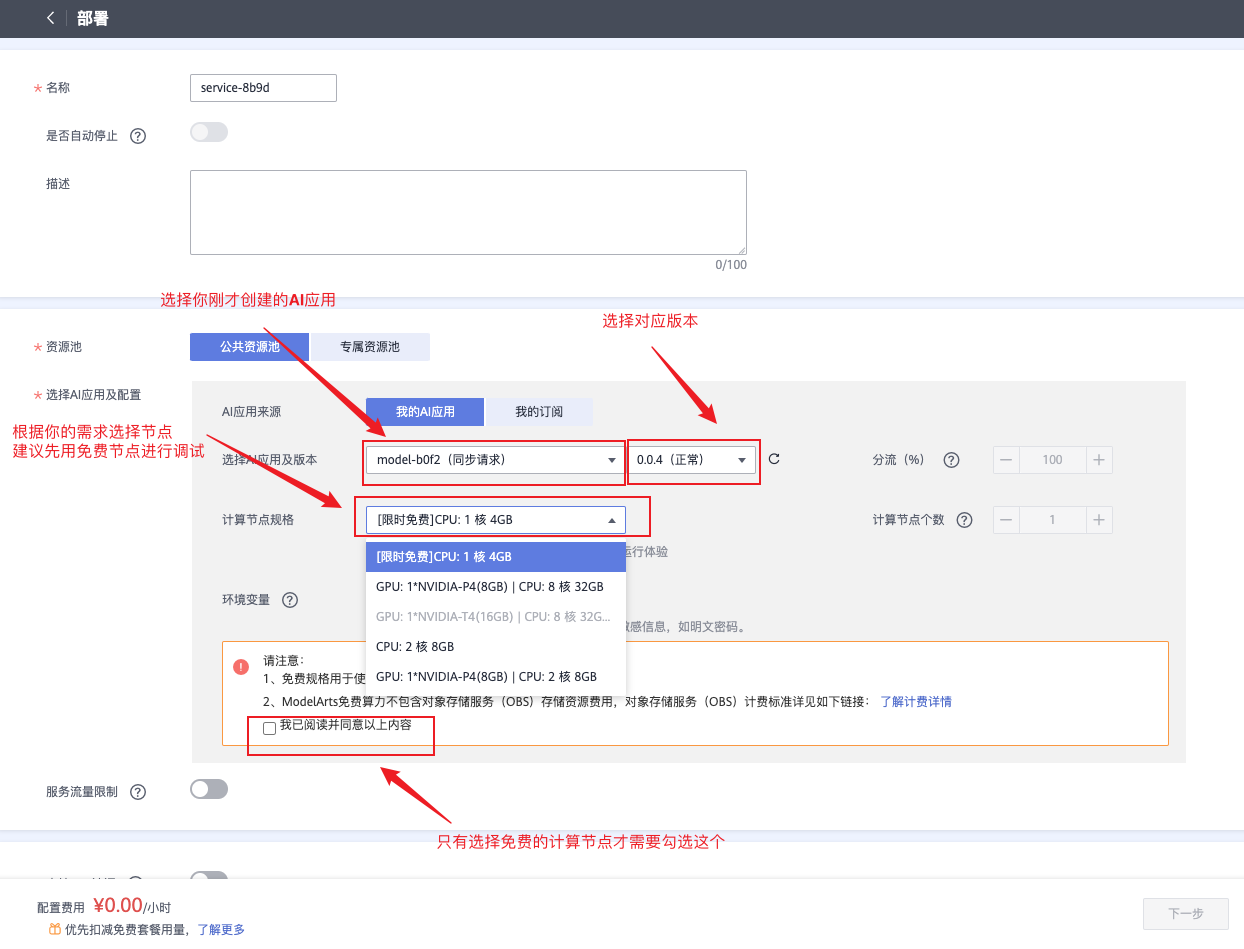

基于创建的AI应用部署为在线服务

进入在线服务部署界面,然后点击“部署”进行服务部署。

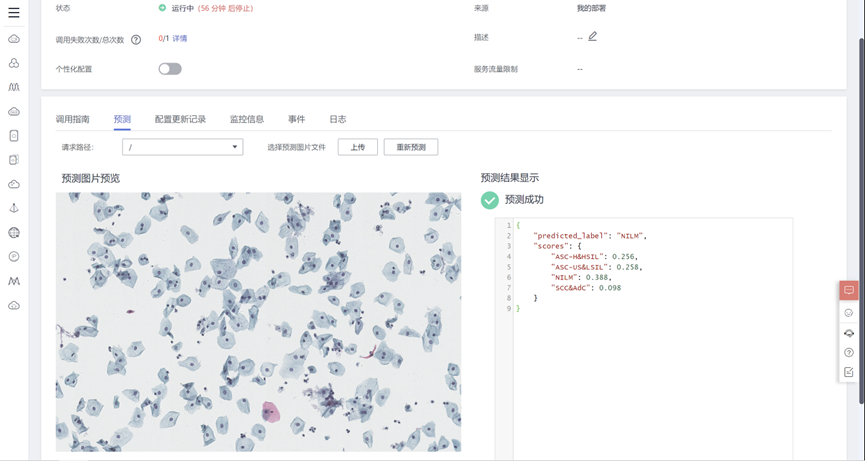

等待部署完成后,即可进行在线预测。

提交模型包进行判分

进入AI应用界面,找到你已经经过在线服务测试过的应用包,进行发布。