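Flower Species Recognition with a Neural Network Built on PyTorch (Deep Learning)

Project source (Baidu Netdisk): https://pan.baidu.com/s/176-HRsaVQkiwTXK6KJg5Bw

Extraction code: 0dng

Project on Gitee: https://gitee.com/xian-polytechnic-university/python

I. Key concepts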

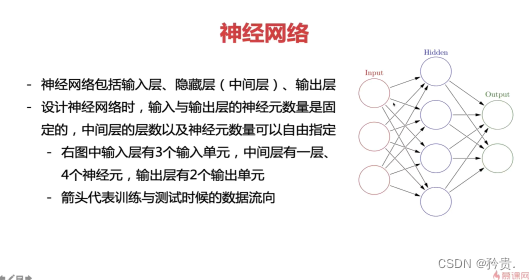

1. Feature extraction: the neurons evaluate the input layer by layer

2. Intermediate (hidden) layers

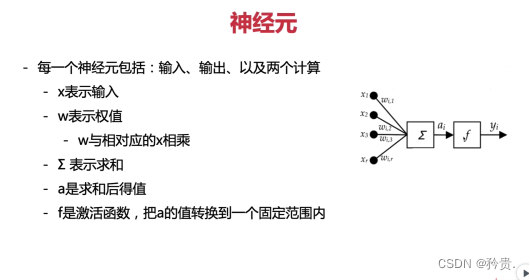

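3. Learning the weights: the neurons perform the computation

Backpropagation: the output is compared with the correct answer, and the error is propagated backward from the output layer through the network. This gives the gradient of the error with respect to every weight (PyTorch computes it automatically), and each weight is then adjusted in the direction that reduces the error.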
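The update described above can be sketched for a single weight. This is only an illustration, not the project's code: the names `w`, `x`, `target`, and `lr` are made up for the example, and a squared-error loss is assumed.

```python
# Hypothetical one-weight "network": prediction = w * x
def train_step(w, x, target, lr):
    prediction = w * x             # forward pass
    error = prediction - target    # compare with the correct answer
    grad = 2 * error * x           # d(error**2)/dw via the chain rule
    return w - lr * grad           # move the weight against the gradient

w = 0.0
for _ in range(50):
    w = train_step(w, x=2.0, target=6.0, lr=0.05)
print(round(w, 4))                 # approaches 3.0, since 3.0 * 2.0 == 6.0
```

A real network repeats the same idea for millions of weights, which is why the gradient computation is left to the framework.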

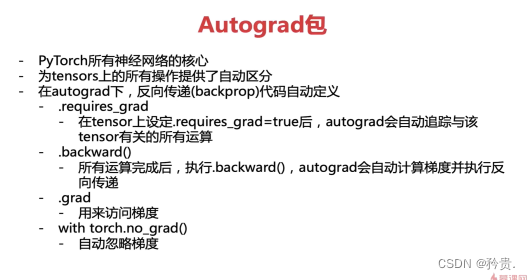

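The core of PyTorch: the Autograd package, which performs automatic gradient computation and backpropagation.

Backpropagation is needed only while training a model, not when using it for inference.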
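A minimal sketch of both points, assuming only that `torch` is installed. The tensor names and the function being differentiated are arbitrary choices for illustration.

```python
import torch

x = torch.tensor(3.0, requires_grad=True)  # ask autograd to track x
y = x ** 2 + 2 * x                          # forward computation
y.backward()                                # autograd computes dy/dx
print(x.grad)                               # dy/dx = 2*x + 2, i.e. 8 at x = 3

with torch.no_grad():                       # inference: no graph is recorded
    z = x ** 2
print(z.requires_grad)                      # False inside no_grad
```

The appendix code uses `torch.set_grad_enabled(phase == 'train')` for the same purpose, so gradients are tracked during training and skipped during validation.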

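TensorFlow (1.x graph mode):

Define the operators, define the computation, define the gradients, open a session, feed in the data, run the computation.

PyTorch:

Initialize, then compute (you can debug while computing).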

II. Project task

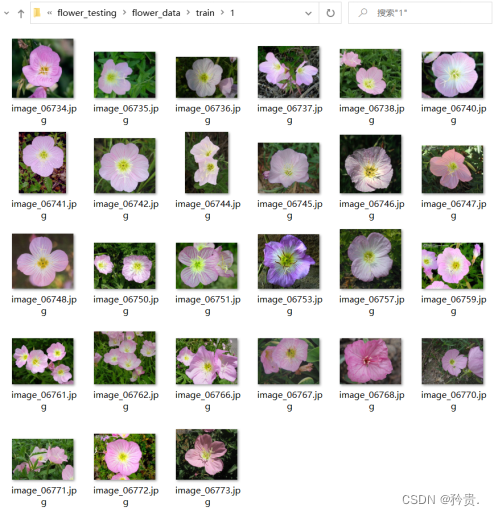

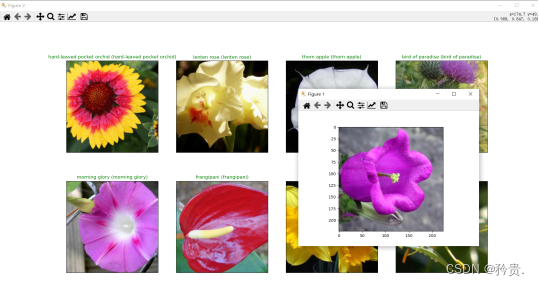

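Build a neural-network classifier with PyTorch that recognizes flower species: given a photo of a flower, display the names of the eight most likely species together with a photo of each.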
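The appendix code displays predictions for one batch of eight validation images. Ranking the classes for a single image could look like the sketch below. It is not taken from the project: `top_k` is a hypothetical helper, the scores are invented, and the input is assumed to be log-probabilities because the model ends in `LogSoftmax`.

```python
import math

def top_k(log_probs, class_names, k=8):
    """Return the k most likely (name, probability) pairs, best first."""
    probs = [math.exp(lp) for lp in log_probs]   # undo the logarithm
    ranked = sorted(zip(class_names, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

# Made-up scores for three classes
scores = [math.log(0.2), math.log(0.7), math.log(0.1)]
names = ["rose", "daisy", "tulip"]
best = top_k(scores, names, k=2)
print(best)
```

On tensors, `torch.topk` does the same ranking without leaving PyTorch.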

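The workflow is as follows:

1. Dataset preprocessing

(1) Read the dataset

(2) Build the dataset for the neural network

1) Data augmentation: the transforms module in torchvision provides this out of the box. Photos in the dataset are rotated, flipped, zoomed, and so on, to obtain more varied training data

2) Data preprocessing: transforms in torchvision implements this as well, so it only needs to be called

3) The processed dataset is wrapped in a DataLoader, from which batches can be read directly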
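The preprocessing step includes per-channel normalization, which the display helpers in the appendix later undo. A small sketch of that arithmetic on a single pixel, using the mean and standard deviation values from the appendix; the helper names are made up for the example.

```python
MEAN = (0.485, 0.456, 0.406)   # per-channel mean used in the appendix
STD = (0.229, 0.224, 0.225)    # per-channel standard deviation

def normalize(pixel):
    """Apply (value - mean) / std to each channel."""
    return tuple((v - m) / s for v, m, s in zip(pixel, MEAN, STD))

def denormalize(pixel):
    """Invert normalize(): value * std + mean."""
    return tuple(v * s + m for v, m, s in zip(pixel, MEAN, STD))

rgb = (0.5, 0.5, 0.5)          # one pixel, already scaled to [0, 1]
restored = denormalize(normalize(rgb))
print(restored)
```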

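2. Training the network

(1) Reuse the ResNet model provided by torchvision.models. torchvision ships many classic network architectures that are easy to call, and training can continue from their pretrained weights. This is what is known as transfer learning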

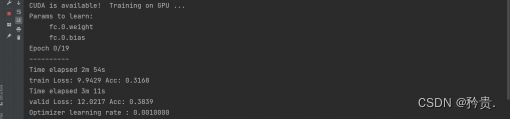

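(2) Choose GPU computation, choose which layers to train, configure the optimizer and the loss function, and so on

(3) Train the fully connected layer. The earlier layers all perform feature extraction, and that task is essentially the same as in the pretrained model, so they are left unchanged at first and only the final fully connected layer is trained

(4) Then train all layers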
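The two training stages can be sketched on a tiny stand-in model. The `nn.Sequential` network below is hypothetical and merely plays the role of ResNet; the learning rates match the ones used in the appendix.

```python
import torch
from torch import nn

# Hypothetical tiny model standing in for the pretrained ResNet
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 3))

# Stage 1: freeze everything, then attach a new head (trainable by default)
for param in model.parameters():
    param.requires_grad = False
model[2] = nn.Linear(8, 3)
head_params = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.Adam(head_params, lr=1e-2)

# Stage 2: unfreeze all layers and give the optimizer every parameter
for param in model.parameters():
    param.requires_grad = True
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)
```

Setting `requires_grad = True` alone is not enough in stage 2: the optimizer only updates the parameters it was constructed with, so it has to be rebuilt.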

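3. Predicting the species

(1) Load the trained model. The model can be saved selectively, for example only when the current result on the validation set is the best so far

(2) Set up the image data to be classified

(3) Set up the display and run the prediction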
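The "save only when validation improves" rule from step (1) can be sketched without any framework. The accuracy values are invented and `select_best` is a hypothetical helper; in the appendix the same comparison guards a call to `torch.save`.

```python
def select_best(val_accuracies):
    """Return (best_epoch, best_acc), keeping a result only when it improves."""
    best_acc, best_epoch = 0.0, None
    for epoch, acc in enumerate(val_accuracies):
        if acc > best_acc:                     # better than anything seen so far
            best_acc, best_epoch = acc, epoch  # the real code saves a checkpoint here
    return best_epoch, best_acc

history = [0.61, 0.74, 0.72, 0.80, 0.79]       # made-up validation accuracies
print(select_best(history))
```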

III. Program structure

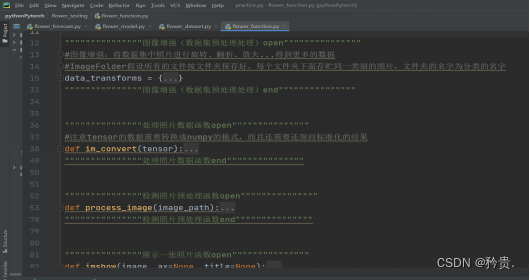

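1. flower_function: function definitions

(Shared functions are defined in this program so that the other programs can call them)

- Image augmentation (dataset preprocessing)

- Function that converts image data for display

- Function that preprocesses the photo to be classified

- Function that displays a single photo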

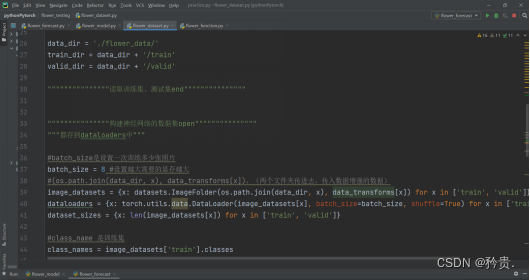

2. flower_dataset: dataset processing

- Read the dataset (training set and validation set)

- Build the dataset for the neural network

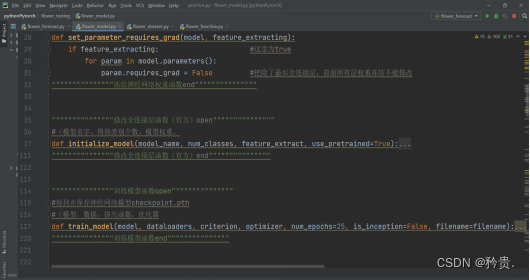

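3. flower_model: network training

- Function that freezes the network weights

- Function that replaces the fully connected layer (from the official tutorial)

- Function that trains the model

- Load and modify the ResNet model provided by models

- Train the fully connected layer (epochs 0-19)

- Then continue training all layers (epochs 0-9)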

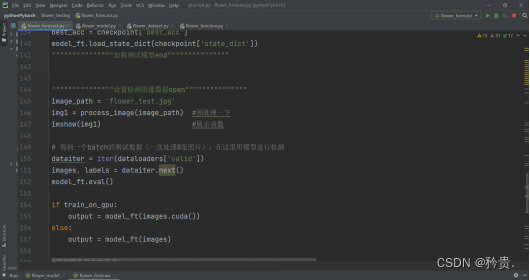

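4. flower_forecast: prediction

- Function that freezes the network weights

- Function that replaces the fully connected layer

- Load the trained model

- Set up the image data to be classified

- Set up the display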

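IV. Notes on the code

1. Code layout conventions

- Each functional block is enclosed between two marker lines: a string line ending in "open" and a matching string line ending in "end"

- # starts a single-line comment

2. Transfer learning

- Initialize with the weights of a model trained on a similar task, replace the fully connected layer, then retrain

- PyTorch -> torchvision -> models -> resnet

(1) Writing all the code in a single file causes two problems:

1) The division of responsibilities is unclear

2) The network has to be retrained on every run

(2) Modular programming: put the functions into separate programs that call one another, so that each part can be tested on its own

(3) Two ways to import in modular programming:

In A:

import B

B.function()

In A:

from B import function

function()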
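Both styles can be tried with a standard-library module. Here `math` stands in for module B and `sqrt` for the function.

```python
import math                 # style 1: import the module
print(math.sqrt(16.0))      # call through the module name

from math import sqrt       # style 2: import the name directly
print(sqrt(16.0))           # call without the prefix
```

The first style makes the origin of each function visible at the call site; the second is shorter but can shadow a local name with the same spelling.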

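(4) Beware of circular imports:

When a program is split across several .py files in an IDE such as PyCharm, files that import one another can easily end up in a circular import.

First, how import works:

Example: A imports B. Python executes A from top to bottom; when it reaches the import statement it pauses A, executes B from top to bottom, and then resumes A. If A and B import each other, one of them is seen only partially initialized, which can raise an error.

Workaround:

If A imports B and B also needs something from A, copy the required parameter and function definitions from A into B.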
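Another common way to keep an import from re-running expensive code is the `if __name__ == "__main__":` guard. It is offered here as an alternative sketch, not as what the project does; the function names are hypothetical.

```python
def build_model():
    """Cheap definition that other modules can import safely."""
    return {"name": "resnet", "num_classes": 102}

def main():
    """Expensive work (for example training) that should run only as a script."""
    model = build_model()
    print("training", model["name"])

if __name__ == "__main__":   # false when this file is imported by another module
    main()
```

With this layout a prediction script could import `build_model` from the training module without starting a training run.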

V. Appendix: complete code

1. flower_function: function definitions

import numpy as np
import torch
import matplotlib.pyplot as plt
from PIL import Image
from torchvision import transforms, models

filename = 'checkpoint.pth'
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

"""""""""""""""Image augmentation (dataset preprocessing) open"""""""""""""""
# Image augmentation: rotate, flip, and otherwise perturb the photos to obtain more varied data
# ImageFolder assumes the files are sorted into folders: each folder holds the images
# of one class, and the folder name is the class name
data_transforms = {  # all image preprocessing steps; to reuse, only the split names need changing
    'train': transforms.Compose([
        transforms.RandomRotation(45),  # random rotation between -45 and 45 degrees
        transforms.CenterCrop(224),  # crop 224x224 from the center
        transforms.RandomHorizontalFlip(p=0.5),  # horizontal flip with probability p
        transforms.RandomVerticalFlip(p=0.5),  # vertical flip with probability p
        # brightness, contrast, saturation, hue
        transforms.ColorJitter(brightness=0.2, contrast=0.1, saturation=0.1, hue=0.1),
        transforms.RandomGrayscale(p=0.025),  # grayscale with probability p; still 3 channels, R=G=B
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])  # mean, std
    ]),
    'valid': transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
}
"""""""""""""""Image augmentation (dataset preprocessing) end"""""""""""""""

"""""""""""""""Convert image data for display open"""""""""""""""
# The tensor must be converted to a NumPy array, and the normalization must be undone
def im_convert(tensor):
    image = tensor.to("cpu").clone().detach()
    image = image.numpy().squeeze()
    # Back to (height, width, channels)
    image = image.transpose(1, 2, 0)
    # Undo the normalization
    image = image * np.array((0.229, 0.224, 0.225)) + np.array((0.485, 0.456, 0.406))
    image = image.clip(0, 1)
    return image
"""""""""""""""Convert image data for display end"""""""""""""""

"""""""""""""""Preprocess the photo to be classified open"""""""""""""""
def process_image(image_path):
    # Read the test image; force 3 channels so the mean/std arithmetic below applies
    img = Image.open(image_path).convert('RGB')
    # Resize: thumbnail() can only shrink, so pick the bounding box by orientation
    if img.size[0] > img.size[1]:
        img.thumbnail((10000, 256))
    else:
        img.thumbnail((256, 10000))
    # Center crop to 224x224
    left_margin = (img.width - 224) / 2
    bottom_margin = (img.height - 224) / 2
    right_margin = left_margin + 224
    top_margin = bottom_margin + 224
    img = img.crop((left_margin, bottom_margin, right_margin, top_margin))
    # Same preprocessing as for the validation set
    img = np.array(img) / 255
    mean = np.array([0.485, 0.456, 0.406])  # provided mean
    std = np.array([0.229, 0.224, 0.225])  # provided std
    img = (img - mean) / std
    # The color channel must come first
    img = img.transpose((2, 0, 1))
    return img
"""""""""""""""Preprocess the photo to be classified end"""""""""""""""

"""""""""""""""Display a single photo open"""""""""""""""
def imshow(image, ax=None, title=None):
    """Display an image produced by process_image."""
    if ax is None:
        fig, ax = plt.subplots()
    # Move the color channel back to the last axis
    image = np.array(image).transpose((1, 2, 0))
    # Undo the normalization
    mean = np.array([0.485, 0.456, 0.406])
    std = np.array([0.229, 0.224, 0.225])
    image = std * image + mean
    image = np.clip(image, 0, 1)
    ax.imshow(image)
    ax.set_title(title)
    return ax
"""""""""""""""Display a single photo end"""""""""""""""

2. flower_dataset: dataset processing

import os
import json
import matplotlib.pyplot as plt
import torch
# pip install torchvision (this package must be installed beforehand)
from torchvision import datasets
# https://pytorch.org/docs/stable/torchvision/index.html

# Image augmentation (dataset preprocessing)
from flower_function import data_transforms

"""""""""""""""Locate the training and validation sets open"""""""""""""""
data_dir = './flower_data/'
train_dir = os.path.join(data_dir, 'train')
valid_dir = os.path.join(data_dir, 'valid')
"""""""""""""""Locate the training and validation sets end"""""""""""""""

"""""""""""""""Build the dataset for the neural network open"""""""""""""""
"""Everything is stored in dataloaders"""
# batch_size is the number of photos processed per training step
batch_size = 8  # the larger the value, the more GPU memory is needed
# ImageFolder receives the folder of each split and the matching transform pipeline
image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x), data_transforms[x]) for x in ['train', 'valid']}
dataloaders = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=batch_size, shuffle=True) for x in ['train', 'valid']}
dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'valid']}
# class_names holds the class (folder) names of the training set
class_names = image_datasets['train'].classes
"""
print(image_datasets)
print(dataloaders)
print(dataset_sizes)
"""
# Classes in the dataset are labeled 1, 2, 3, ...; this file maps each label to its name
with open('cat_to_name.json', 'r') as f:
    cat_to_name = json.load(f)
"""
print(cat_to_name)  # print the label-to-name mapping
"""
"""""""""""""""Build the dataset for the neural network end"""""""""""""""

"""""""""""""""Show sample photos open"""""""""""""""
"""
# Requires: from flower_function import im_convert
fig = plt.figure(figsize=(20, 12))
columns = 4
rows = 2
dataiter = iter(dataloaders['valid'])
inputs, classes = next(dataiter)
# Plot the batch
for idx in range(columns * rows):
    ax = fig.add_subplot(rows, columns, idx + 1, xticks=[], yticks=[])
    ax.set_title(cat_to_name[str(int(class_names[classes[idx]]))])
    plt.imshow(im_convert(inputs[idx]))
plt.show()
"""
"""""""""""""""Show sample photos end"""""""""""""""

3. flower_model: network training

import copy
import time
import torch
from torch import nn
import torch.optim as optim
# pip install torchvision (this package must be installed beforehand)
from torchvision import models
# https://pytorch.org/docs/stable/torchvision/index.html

# Data loaders for the neural network
from flower_dataset import dataloaders

filename = 'checkpoint.pth'

"""""""""""""""Freeze the network weights open"""""""""""""""
def set_parameter_requires_grad(model, feature_extracting):
    if feature_extracting:  # True in this project
        for param in model.parameters():
            # Freeze all existing weights; the fully connected layer added afterwards stays trainable
            param.requires_grad = False
"""""""""""""""Freeze the network weights end"""""""""""""""

"""""""""""""""Replace the fully connected layer (official tutorial) open"""""""""""""""
# Arguments: model name, number of classes, whether to freeze the pretrained layers,
# whether to load pretrained weights
# Note: newer torchvision releases prefer the weights= argument over pretrained=
def initialize_model(model_name, num_classes, feature_extract, use_pretrained=True):
    # Pick the model; each architecture is initialized slightly differently
    model_ft = None
    input_size = 0

    if model_name == "resnet":
        """ Resnet152
        """
        # Load the model (downloads the weights on first use)
        model_ft = models.resnet152(pretrained=use_pretrained)
        # Optionally freeze the pretrained layers
        set_parameter_requires_grad(model_ft, feature_extract)
        # Input width of the last layer
        num_ftrs = model_ft.fc.in_features
        # Rebuild the fully connected layer for this task (102 classes here)
        model_ft.fc = nn.Sequential(nn.Linear(num_ftrs, num_classes),
                                    nn.LogSoftmax(dim=1))
        input_size = 224

    elif model_name == "alexnet":
        """ Alexnet
        """
        model_ft = models.alexnet(pretrained=use_pretrained)
        set_parameter_requires_grad(model_ft, feature_extract)
        num_ftrs = model_ft.classifier[6].in_features
        model_ft.classifier[6] = nn.Linear(num_ftrs, num_classes)
        input_size = 224

    elif model_name == "vgg":
        """ VGG16
        """
        model_ft = models.vgg16(pretrained=use_pretrained)
        set_parameter_requires_grad(model_ft, feature_extract)
        num_ftrs = model_ft.classifier[6].in_features
        model_ft.classifier[6] = nn.Linear(num_ftrs, num_classes)
        input_size = 224

    elif model_name == "squeezenet":
        """ Squeezenet
        """
        model_ft = models.squeezenet1_0(pretrained=use_pretrained)
        set_parameter_requires_grad(model_ft, feature_extract)
        model_ft.classifier[1] = nn.Conv2d(512, num_classes, kernel_size=(1, 1), stride=(1, 1))
        model_ft.num_classes = num_classes
        input_size = 224

    elif model_name == "densenet":
        """ Densenet
        """
        model_ft = models.densenet121(pretrained=use_pretrained)
        set_parameter_requires_grad(model_ft, feature_extract)
        num_ftrs = model_ft.classifier.in_features
        model_ft.classifier = nn.Linear(num_ftrs, num_classes)
        input_size = 224

    elif model_name == "inception":
        """ Inception v3
        Be careful, expects (299,299) sized images and has auxiliary output
        """
        model_ft = models.inception_v3(pretrained=use_pretrained)
        set_parameter_requires_grad(model_ft, feature_extract)
        # Handle the auxiliary net
        num_ftrs = model_ft.AuxLogits.fc.in_features
        model_ft.AuxLogits.fc = nn.Linear(num_ftrs, num_classes)
        # Handle the primary net
        num_ftrs = model_ft.fc.in_features
        model_ft.fc = nn.Linear(num_ftrs, num_classes)
        input_size = 299

    else:
        raise ValueError("Invalid model name: {}".format(model_name))

    return model_ft, input_size
"""""""""""""""Replace the fully connected layer (official tutorial) end"""""""""""""""

"""""""""""""""Train the model open"""""""""""""""

# Trains the network and saves the best weights to checkpoint.pth
# Arguments: model, data loaders, loss function, optimizer, number of epochs, ...
# Relies on the module-level names device and scheduler, which are defined before the call
def train_model(model, dataloaders, criterion, optimizer, num_epochs=25, is_inception=False, filename=filename):
    since = time.time()
    # Best validation accuracy so far
    best_acc = 0
    """
    checkpoint = torch.load(filename)
    best_acc = checkpoint['best_acc']
    model.load_state_dict(checkpoint['state_dict'])
    optimizer.load_state_dict(checkpoint['optimizer'])
    model.class_to_idx = checkpoint['mapping']
    """
    # Move the model to the selected device (GPU if available, otherwise CPU)
    model.to(device)

    val_acc_history = []
    train_acc_history = []
    train_losses = []
    valid_losses = []
    LRs = [optimizer.param_groups[0]['lr']]

    # Keep a copy of the best weights
    best_model_wts = copy.deepcopy(model.state_dict())

    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch, num_epochs - 1))
        print('-' * 10)

        # Each epoch has a training phase and a validation phase
        for phase in ['train', 'valid']:
            if phase == 'train':
                model.train()  # training mode
            else:
                model.eval()  # evaluation mode

            running_loss = 0.0
            running_corrects = 0

            # Iterate over the whole split
            for inputs, labels in dataloaders[phase]:
                inputs = inputs.to(device)
                labels = labels.to(device)

                # Reset the gradients
                optimizer.zero_grad()
                # Track gradients only during training
                with torch.set_grad_enabled(phase == 'train'):
                    if is_inception and phase == 'train':  # not used for resnet
                        outputs, aux_outputs = model(inputs)
                        loss1 = criterion(outputs, labels)
                        loss2 = criterion(aux_outputs, labels)
                        loss = loss1 + 0.4 * loss2
                    else:  # resnet takes this branch
                        outputs = model(inputs)
                        loss = criterion(outputs, labels)

                    _, preds = torch.max(outputs, 1)

                    # Update the weights during the training phase
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                # Accumulate loss and correct predictions
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels)

            epoch_loss = running_loss / len(dataloaders[phase].dataset)
            epoch_acc = running_corrects.double() / len(dataloaders[phase].dataset)

            time_elapsed = time.time() - since
            print('Time elapsed {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))
            print('{} Loss: {:.4f} Acc: {:.4f}'.format(phase, epoch_loss, epoch_acc))

            # Save the model whenever the validation accuracy improves
            if phase == 'valid' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())
                state = {
                    'state_dict': model.state_dict(),
                    'best_acc': best_acc,
                    'optimizer': optimizer.state_dict(),
                }
                torch.save(state, filename)
            if phase == 'valid':
                val_acc_history.append(epoch_acc)
                valid_losses.append(epoch_loss)
                # StepLR counts epochs itself, so step() takes no argument
                scheduler.step()
            if phase == 'train':
                train_acc_history.append(epoch_acc)
                train_losses.append(epoch_loss)

        print('Optimizer learning rate : {:.7f}'.format(optimizer.param_groups[0]['lr']))
        LRs.append(optimizer.param_groups[0]['lr'])
        print()

    time_elapsed = time.time() - since
    print('Training complete in {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))
    print('Best val Acc: {:.4f}'.format(best_acc))

    # After training, use the best weights as the final model
    model.load_state_dict(best_model_wts)
    return model, val_acc_history, train_acc_history, valid_losses, train_losses, LRs
"""""""""""""""Train the model end"""""""""""""""

"""""""""""""""Load and modify the ResNet model provided by models open"""""""""""""""
"""Use the pretrained weights directly as the initial parameters"""

# Available choices: ['resnet', 'alexnet', 'vgg', 'squeezenet', 'densenet', 'inception']
model_name = 'resnet'
# Whether to keep the pretrained features fixed; True freezes the pretrained weights
feature_extract = True

# Whether to train on the GPU
train_on_gpu = torch.cuda.is_available()
if not train_on_gpu:
    print('CUDA is not available. Training on CPU ...')
else:
    print('CUDA is available! Training on GPU ...')
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Build the model and decide which layers are trainable
model_ft, input_size = initialize_model(model_name, 102, feature_extract, use_pretrained=True)
# Move the model to the device (the GPU when one is available)
model_ft = model_ft.to(device)
# Checkpoint file
filename = 'checkpoint.pth'

# Collect the parameters to train
params_to_update = model_ft.parameters()
print("Params to learn:")
if feature_extract:
    params_to_update = []
    for name, param in model_ft.named_parameters():
        if param.requires_grad:
            params_to_update.append(param)
            print("\t", name)
else:
    for name, param in model_ft.named_parameters():
        if param.requires_grad:
            print("\t", name)

# Optimizer
optimizer_ft = optim.Adam(params_to_update, lr=1e-2)  # lr is the learning rate
# Arguments: optimizer, number of epochs between decays, decay factor
scheduler = optim.lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)  # lr drops to 1/10 every 7 epochs
# The last layer already applies LogSoftmax(), so nn.CrossEntropyLoss() must not be used:
# nn.CrossEntropyLoss() is equivalent to LogSoftmax() followed by nn.NLLLoss()
# Loss function
criterion = nn.NLLLoss()
"""""""""""""""Load and modify the ResNet model provided by models end"""""""""""""""

"""""""""""""""Train the fully connected layer (epochs 0-19) open"""""""""""""""
model_ft, val_acc_history, train_acc_history, valid_losses, train_losses, LRs = train_model(model_ft, dataloaders, criterion, optimizer_ft, num_epochs=20, is_inception=(model_name=="inception"))
"""""""""""""""Train the fully connected layer (epochs 0-19) end"""""""""""""""

"""""""""""""""Continue training all layers (epochs 0-9) open"""""""""""""""

for param in model_ft.parameters():
    param.requires_grad = True  # unfreeze every layer

# Train all parameters with a smaller learning rate.
# The optimizer must receive every parameter: params_to_update holds only the fc layer.
optimizer = optim.Adam(model_ft.parameters(), lr=1e-4)
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)

# Loss function
criterion = nn.NLLLoss()

# Continue from the checkpoint produced by the previous stage
checkpoint = torch.load(filename)  # path of the checkpoint file
best_acc = checkpoint['best_acc']  # best accuracy so far
model_ft.load_state_dict(checkpoint['state_dict'])  # restore the saved weights
# The saved optimizer state covers only the fc parameters, so it is not loaded into the new optimizer
#model_ft.class_to_idx = checkpoint['mapping']

# Train again, this time updating all layers
model_ft, val_acc_history, train_acc_history, valid_losses, train_losses, LRs = train_model(model_ft, dataloaders, criterion, optimizer, num_epochs=10, is_inception=(model_name=="inception"))
"""""""""""""""Continue training all layers (epochs 0-9) end"""""""""""""""

"""""""""""""""Test the network open"""""""""""""""
"""probs, classes = predict ('flower_test.jpg', model_ft) """
"""print(probs) """
"""print(classes) """
"""""""""""""""Test the network end"""""""""""""""

4. flower_forecast: prediction

import matplotlib.pyplot as plt
import numpy as np
import torch
from torch import nn
# pip install torchvision (this package must be installed beforehand)
from torchvision import models
# https://pytorch.org/docs/stable/torchvision/index.html

# Data loaders and label names
from flower_dataset import dataloaders, cat_to_name
# Display conversion, photo preprocessing, and single-photo display functions
from flower_function import im_convert, process_image, imshow

"""""""""""""""Parameters and functions from flower_model that this program needs are written out again here open"""""""""""""""
"""""""""""""""This avoids importing flower_model, which would train the model again open"""""""""""""""

"""Parameters open"""
feature_extract = True
model_name = 'resnet'
train_on_gpu = torch.cuda.is_available()
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
"""Parameters end"""

"""""""""""""""Freeze the network weights open"""""""""""""""
def set_parameter_requires_grad(model, feature_extracting):
    if feature_extracting:  # True in this project
        for param in model.parameters():
            # Freeze all existing weights; the fully connected layer added afterwards stays trainable
            param.requires_grad = False
"""""""""""""""Freeze the network weights end"""""""""""""""

"""""""""""""""Replace the fully connected layer open"""""""""""""""
# Arguments: model name, number of classes, whether to freeze the pretrained layers,
# whether to load pretrained weights
def initialize_model(model_name, num_classes, feature_extract, use_pretrained=True):
    # Pick the model; each architecture is initialized slightly differently
    model_ft = None
    input_size = 0

    if model_name == "resnet":
        """ Resnet152
        """
        # Load the model (downloads the weights on first use)
        model_ft = models.resnet152(pretrained=use_pretrained)
        # Optionally freeze the pretrained layers
        set_parameter_requires_grad(model_ft, feature_extract)
        # Input width of the last layer
        num_ftrs = model_ft.fc.in_features
        # Rebuild the fully connected layer for this task (102 classes here)
        model_ft.fc = nn.Sequential(nn.Linear(num_ftrs, num_classes),
                                    nn.LogSoftmax(dim=1))
        input_size = 224

    elif model_name == "alexnet":
        """ Alexnet
        """
        model_ft = models.alexnet(pretrained=use_pretrained)
        set_parameter_requires_grad(model_ft, feature_extract)
        num_ftrs = model_ft.classifier[6].in_features
        model_ft.classifier[6] = nn.Linear(num_ftrs, num_classes)
        input_size = 224

    elif model_name == "vgg":
        """ VGG16
        """
        model_ft = models.vgg16(pretrained=use_pretrained)
        set_parameter_requires_grad(model_ft, feature_extract)
        num_ftrs = model_ft.classifier[6].in_features
        model_ft.classifier[6] = nn.Linear(num_ftrs, num_classes)
        input_size = 224

    elif model_name == "squeezenet":
        """ Squeezenet
        """
        model_ft = models.squeezenet1_0(pretrained=use_pretrained)
        set_parameter_requires_grad(model_ft, feature_extract)
        model_ft.classifier[1] = nn.Conv2d(512, num_classes, kernel_size=(1, 1), stride=(1, 1))
        model_ft.num_classes = num_classes
        input_size = 224

    elif model_name == "densenet":
        """ Densenet
        """
        model_ft = models.densenet121(pretrained=use_pretrained)
        set_parameter_requires_grad(model_ft, feature_extract)
        num_ftrs = model_ft.classifier.in_features
        model_ft.classifier = nn.Linear(num_ftrs, num_classes)
        input_size = 224

    elif model_name == "inception":
        """ Inception v3
        Be careful, expects (299,299) sized images and has auxiliary output
        """
        model_ft = models.inception_v3(pretrained=use_pretrained)
        set_parameter_requires_grad(model_ft, feature_extract)
        # Handle the auxiliary net
        num_ftrs = model_ft.AuxLogits.fc.in_features
        model_ft.AuxLogits.fc = nn.Linear(num_ftrs, num_classes)
        # Handle the primary net
        num_ftrs = model_ft.fc.in_features
        model_ft.fc = nn.Linear(num_ftrs, num_classes)
        input_size = 299

    else:
        raise ValueError("Invalid model name: {}".format(model_name))

    return model_ft, input_size
"""""""""""""""Replace the fully connected layer end"""""""""""""""

"""""""""""""""Parameters and functions from flower_model that this program needs are written out again here end"""""""""""""""
"""""""""""""""This avoids importing flower_model, which would train the model again end"""""""""""""""

"""""""""""""""Load the trained model open"""""""""""""""

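# Build the model
model_ft, input_size = initialize_model(model_name, 102, feature_extract, use_pretrained=True)
# Move it to the device (the GPU when one is available)
model_ft = model_ft.to(device)
# Name of the checkpoint file
filename = 'checkpoint.pth'
# Load the trained weights; map_location lets a GPU checkpoint load on a CPU-only machine
checkpoint = torch.load(filename, map_location=device)
best_acc = checkpoint['best_acc']
model_ft.load_state_dict(checkpoint['state_dict'])
"""""""""""""""Load the trained model end"""""""""""""""

"""""""""""""""Set up the image data to be classified open"""""""""""""""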

image_path = 'flower_test.jpg'
img1 = process_image(image_path)  # preprocess the photo
imshow(img1)  # display it

# Take one batch of validation data (8 photos per batch) and run the model on it
dataiter = iter(dataloaders['valid'])
images, labels = next(dataiter)

model_ft.eval()
with torch.no_grad():  # no gradients are needed for prediction
    output = model_ft(images.to(device))

# Index of the most probable class for each photo
_, preds_tensor = torch.max(output, 1)
preds = np.squeeze(preds_tensor.cpu().numpy())
"""""""""""""""Set up the image data to be classified end"""""""""""""""

"""""""""""""""Set up the display open"""""""""""""""

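# Show the predictions for the eight photos in the batch as "predicted (actual)":
# green when the prediction is correct, red otherwise
fig = plt.figure(figsize=(20, 20))
columns = 4
rows = 2
# ImageFolder numbers the classes by sorted folder name, so map each index back to
# its folder name before looking it up in cat_to_name
class_names = dataloaders['valid'].dataset.classes
# Lay the photos out in a 2 x 4 grid
for idx in range(columns * rows):
    ax = fig.add_subplot(rows, columns, idx + 1, xticks=[], yticks=[])
    plt.imshow(im_convert(images[idx]))
    predicted = cat_to_name[class_names[preds[idx]]]
    actual = cat_to_name[class_names[labels[idx].item()]]
    ax.set_title("{} ({})".format(predicted, actual), color=("green" if predicted == actual else "red"))
plt.show()
"""""""""""""""Set up the display end"""""""""""""""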