1. Motivation

-

Instance segmentation requires costly annotations such as bounding boxes and segmentation masks for learning.

-

We propose a fully unsupervised learning method that learns class-agnostic instance segmentation without any annotations.

-

a novel localization-aware pre-training framework

-

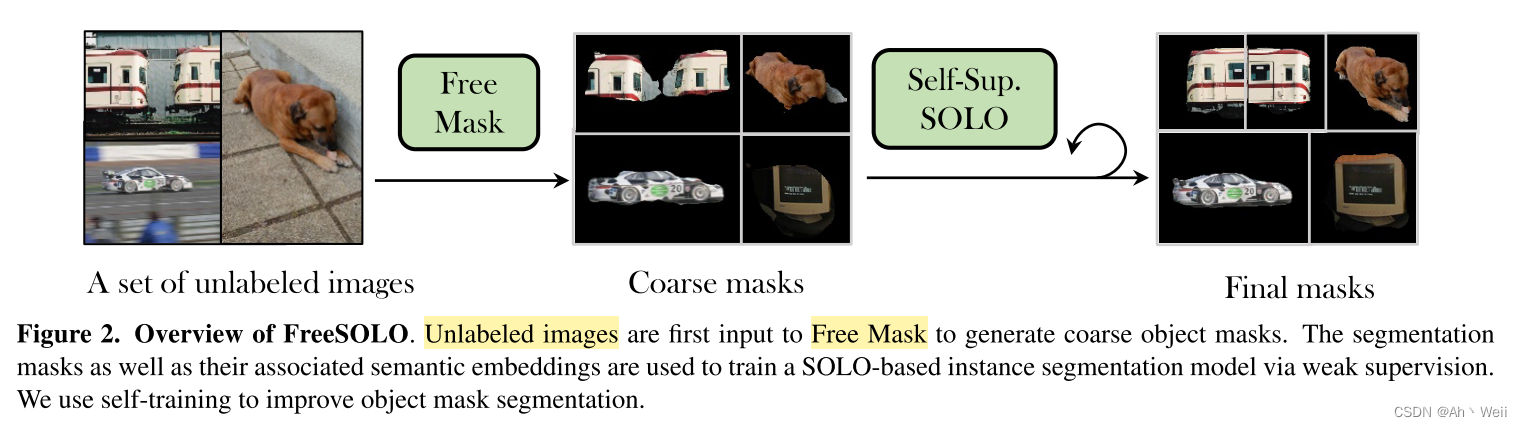

FreeSOLO, contains two major pillars: Free Mask and Self-supervised SOLO,

2. Contribution

- We propose the Free Mask approach, which leverages the specific design of SOLO to effectively extract coarse object masks and semantic embeddings in an unsupervised manner.

- We further propose Self-Supervised SOLO, which takes the coarse masks and semantic embeddings from Free Mask and trains the SOLO instance segmentation model, with several novel design elements to overcome label noise in the coarse masks.

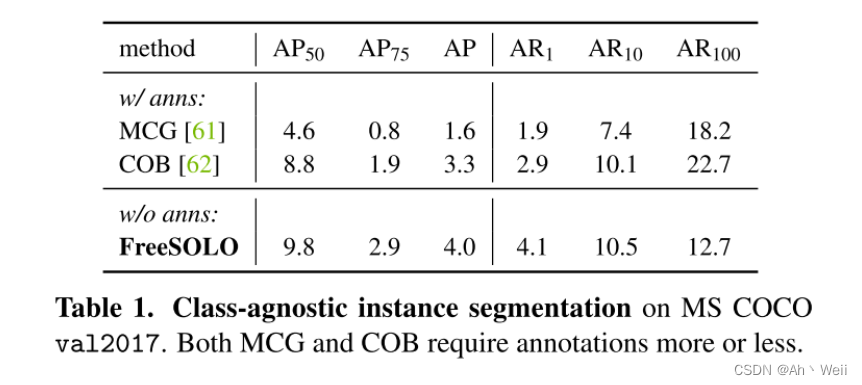

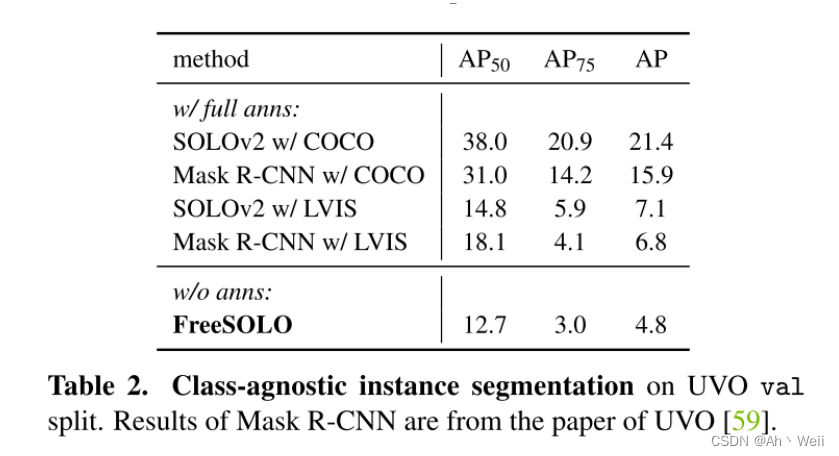

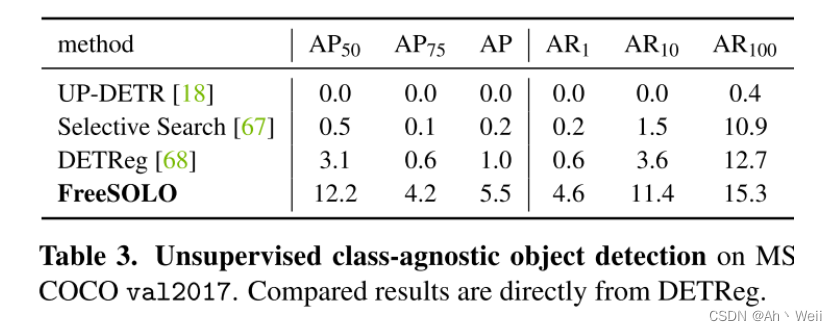

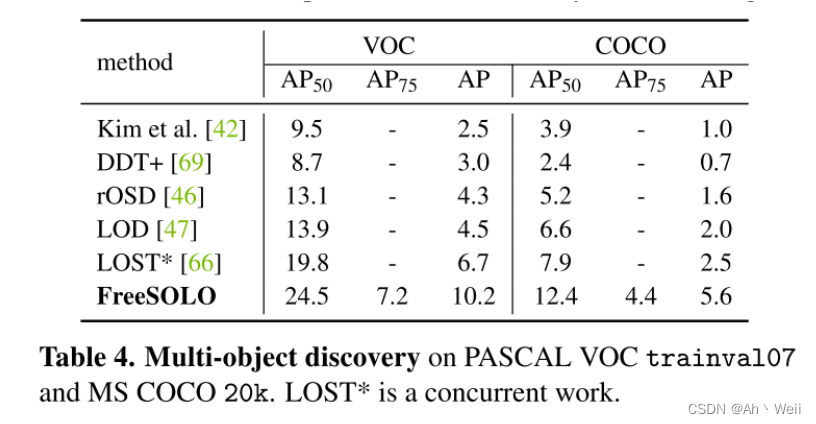

- With the above methods, FreeSOLO presents a simple and effective framework that demonstrates unsupervised instance segmentation successfully for the first time. Notably, it outperforms some proposal generation methods that use manual annotations. FreeSOLO also outperforms state-of-the-art methods for unsupervised object detection/discovery by a significant margin (relative +100% in COCO AP).

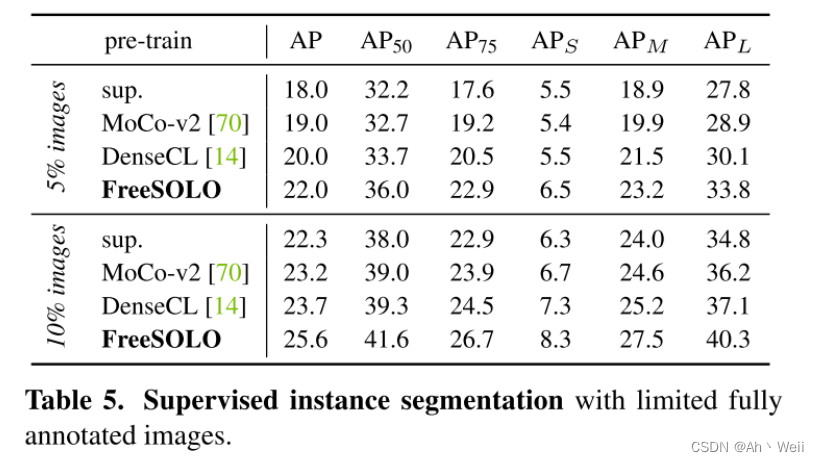

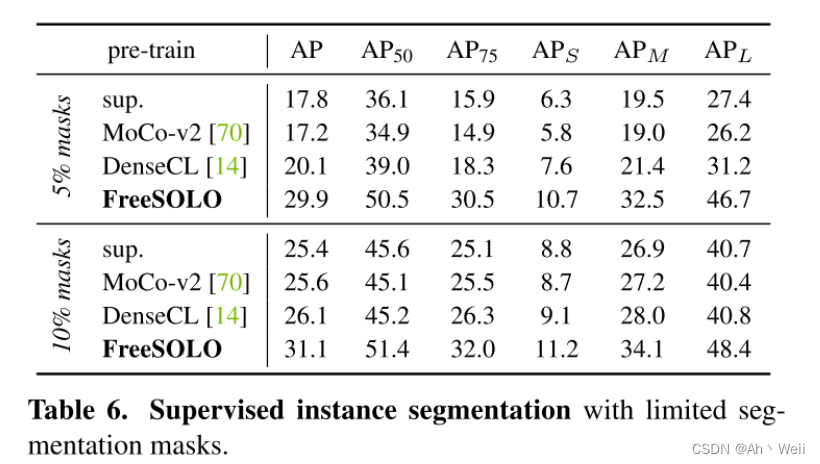

- In addition, FreeSOLO serves as a strong self-supervised pretext task for representation learning for instance segmentation. For example, when fine-tuning on the COCO dataset with 5% labeled masks, FreeSOLO outperforms DenseCL [14] by +9.8% AP.

3. Method

3.1 Background

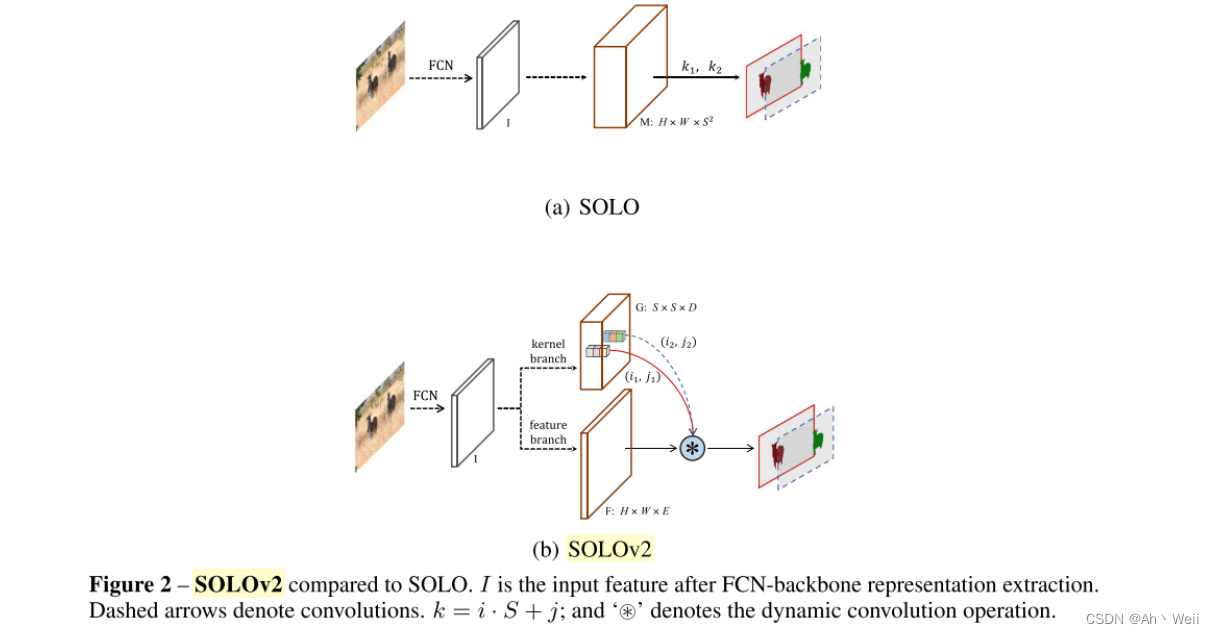

A brief review of SOLO:

- Two branches: a category branch and a mask branch.

- Mask branch: H × W × S², i.e., one mask per grid cell.

- Category branch: S × S × C.
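To make the shapes concrete: SOLO divides the image into an S × S grid, the category branch predicts C class scores per cell, and the mask branch predicts one mask per cell (hence S² channels). The NumPy sketch below shows how a positive grid cell picks out its mask channel. It assumes row-major ordering k = i·S + j and a score threshold of 0.5; both helper names and the threshold are illustrative, not taken from the SOLO codebase.

```python
import numpy as np


def grid_to_mask_channel(i: int, j: int, S: int) -> int:
    """Map grid cell (row i, col j) of the S x S grid to its channel in the
    H x W x S^2 mask-branch output (row-major ordering assumed)."""
    return i * S + j


def masks_for_positive_cells(category_scores: np.ndarray,
                             mask_maps: np.ndarray,
                             score_thr: float = 0.5):
    """category_scores: (S, S, C) per-cell class probabilities.
    mask_maps: (H, W, S*S) per-cell soft masks.
    Returns (class_id, score, mask) for every cell whose top score >= score_thr."""
    S = category_scores.shape[0]
    results = []
    for i in range(S):
        for j in range(S):
            c = int(np.argmax(category_scores[i, j]))
            s = float(category_scores[i, j, c])
            if s >= score_thr:
                k = grid_to_mask_channel(i, j, S)
                results.append((c, s, mask_maps[:, :, k]))
    return results
```

Because every cell owns exactly one mask channel, the category prediction and the mask prediction are linked purely by grid position, without any box proposals.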

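SOLOv2

- Adds Matrix NMS.

- Adds a dynamic mask branch, which is split into a kernel branch (S × S × D) and a feature branch (H × W × E).

- With 1 × 1 kernels, D = E; with 3 × 3 kernels, D = 9E, because the D-dimensional feature predicted at each grid cell is used as the convolution kernel applied to the H × W × E feature map.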
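For the 1 × 1 case, the dynamic convolution reduces to a per-pixel dot product between each cell's kernel vector and the shared feature map. The sketch below assumes channel-last NumPy arrays and uses hypothetical helper names; it illustrates the shape bookkeeping (S² masks from an S × S × D kernel map) rather than reproducing the SOLOv2 implementation.

```python
import numpy as np


def dynamic_mask_1x1(kernels: np.ndarray, features: np.ndarray) -> np.ndarray:
    """kernels: (S, S, D) from the kernel branch, with D == E for 1x1 kernels.
    features: (H, W, E) from the feature branch.
    Returns (S*S, H, W) mask logits, one per grid cell."""
    S = kernels.shape[0]
    E = features.shape[-1]
    assert kernels.shape[-1] == E, "1x1 kernels require D == E"
    flat_kernels = kernels.reshape(S * S, E)  # (S^2, E), row-major over the grid
    # A 1x1 convolution is a dot product over channels at every pixel.
    return np.einsum("ke,hwe->khw", flat_kernels, features)


def kernel_dim(E: int, k: int) -> int:
    """Kernel-branch output size D for a k x k dynamic kernel over E channels."""
    return k * k * E
```

With E = 256, `kernel_dim(256, 3)` gives 2304 (= 9 × 256), matching D = 9E.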

3.2 Free Mask (Mask Branch)

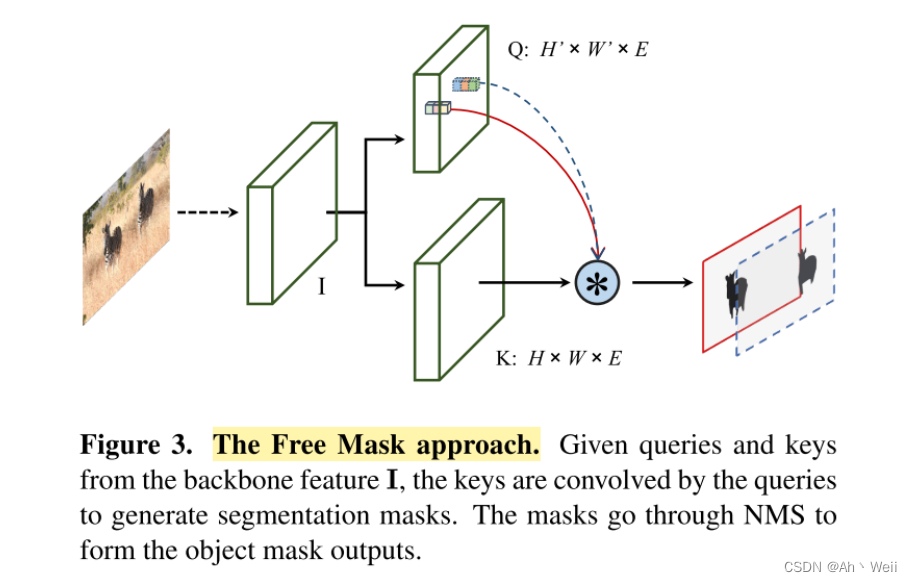

Queries Q and keys K are obtained from the feature map I of a self-supervised backbone, and together they produce the coarse masks.

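For each query in Q, we compute its cosine similarity with every key in K.

Each of the normalized queries is treated as a 1 × 1 convolutional kernel. This is similar in spirit to dynamic convolution, except that cosine similarity is used to further constrain Q and K (much like the cosine-similarity classifier in TFA).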

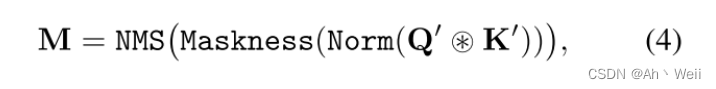

Finally, the similarity maps are normalized and thresholded, binarizing each mask to 0/1.
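The steps above (cosine similarity between each normalized query and every key, normalization, thresholding) can be sketched in NumPy as follows. This is a minimal sketch assuming channel-last arrays, per-mask min-max normalization, and a threshold of 0.5; the normalization scheme, threshold, and function names are assumptions, not the paper's exact settings.

```python
import numpy as np


def l2_normalize(x: np.ndarray, axis: int = -1, eps: float = 1e-6) -> np.ndarray:
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def free_mask(queries: np.ndarray, keys: np.ndarray, thr: float = 0.5):
    """queries: (N, E) query vectors; keys: (H, W, E) key feature map.
    Returns soft masks (N, H, W) in [0, 1] and binary masks (N, H, W)."""
    q = l2_normalize(queries)  # each normalized query acts as a 1x1 kernel
    k = l2_normalize(keys)
    sim = np.einsum("ne,hwe->nhw", q, k)  # cosine similarity in [-1, 1]
    # Assumed normalization: min-max rescale each similarity map to [0, 1].
    mn = sim.min(axis=(1, 2), keepdims=True)
    mx = sim.max(axis=(1, 2), keepdims=True)
    soft = (sim - mn) / (mx - mn + 1e-6)
    return soft, (soft > thr).astype(np.uint8)
```

A query taken from inside an object tends to be most similar to keys from the same object, so its thresholded map yields a coarse mask of that object without any labels.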

- Self-supervised pre-training:

For the Free Mask stage, the backbone is trained with the DenseCL method.

It optimizes a pairwise (dis)similarity loss at the level of local features between two views of the input image.
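As a rough illustration of such a dense (dis)similarity loss, the sketch below implements a simplified DenseCL-style InfoNCE over local features. Assumptions to note: correspondences are found by argmax cosine similarity on the same features that enter the loss (DenseCL computes them on backbone features and applies the loss to a dense projection head), negatives are passed in as a fixed array instead of a momentum queue, and the temperature of 0.2 and the function names are illustrative.

```python
import numpy as np


def l2n(x: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def dense_contrastive_loss(f1: np.ndarray, f2: np.ndarray,
                           negatives: np.ndarray, tau: float = 0.2) -> float:
    """f1: (N, E) local features of view 1; f2: (N, E) local features of view 2;
    negatives: (K, E) negative keys. The positive for f1[i] is the location in
    f2 with the highest cosine similarity."""
    a, b, neg = l2n(f1), l2n(f2), l2n(negatives)
    match = np.argmax(a @ b.T, axis=1)                  # cross-view correspondence
    pos = np.sum(a * b[match], axis=1, keepdims=True)   # (N, 1) positive logits
    logits = np.concatenate([pos, a @ neg.T], axis=1) / tau  # (N, 1 + K)
    logits -= logits.max(axis=1, keepdims=True)         # numerical stability
    log_prob = logits[:, 0] - np.log(np.exp(logits).sum(axis=1))
    return float(-log_prob.mean())
```

Because the loss is computed per location rather than per image, it encourages spatially discriminative features, which is what the later query/key similarity step relies on.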

- Pyramid queries

When constructing the queries Q from I, we design a pyramid queries method to generate masks for instances at different scales: queries are taken from I at downsampling ratios of [0.25, 0.5, 1.0].
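A minimal sketch of building pyramid queries, assuming the feature map is resized by block average pooling (a stand-in for whatever resizing the paper actually uses) and that every spatial location at every scale becomes one query. The helper names are hypothetical.

```python
import numpy as np


def avg_pool(feat: np.ndarray, factor: int) -> np.ndarray:
    """Downsample an (H, W, E) map by an integer factor with block averaging."""
    H, W, E = feat.shape
    h, w = H // factor, W // factor
    cropped = feat[:h * factor, :w * factor]
    return cropped.reshape(h, factor, w, factor, E).mean(axis=(1, 3))


def pyramid_queries(feat: np.ndarray, ratios=(0.25, 0.5, 1.0)) -> np.ndarray:
    """Stack query vectors from the feature map I at several scales into (N, E)."""
    out = []
    for r in ratios:
        factor = int(round(1.0 / r))
        q = avg_pool(feat, factor) if factor > 1 else feat
        out.append(q.reshape(-1, feat.shape[-1]))
    return np.concatenate(out, axis=0)
```

For an 8 × 8 map this gives 4 + 16 + 64 = 84 queries: coarse-scale queries average over larger regions and so tend to match large objects, while full-resolution queries can pick out small ones.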

- Maskness score

The quality of each coarse mask is measured with the maskness score from SOLO.

The score is $\frac{1}{N_f}\sum_{i=1}^{N_f} p_i$, where $N_f$ is the number of foreground pixels whose score exceeds the threshold and $p_i$ is the score of the $i$-th such pixel.
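The maskness formula translates directly into code. This sketch assumes a foreground threshold of 0.5 and returns 0 when no pixel passes it; both choices are assumptions, and the function name is illustrative.

```python
import numpy as np


def maskness(soft_mask: np.ndarray, thr: float = 0.5) -> float:
    """Mean soft score over the N_f pixels whose score exceeds thr."""
    fg = soft_mask[soft_mask > thr]
    return float(fg.mean()) if fg.size > 0 else 0.0
```

For a mask with scores [[0.9, 0.7], [0.2, 0.6]], the three foreground pixels give (0.9 + 0.7 + 0.6) / 3 ≈ 0.733. A sharp, confident mask scores close to 1, while a fuzzy one scores lower, which makes maskness usable for ranking or filtering coarse masks.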

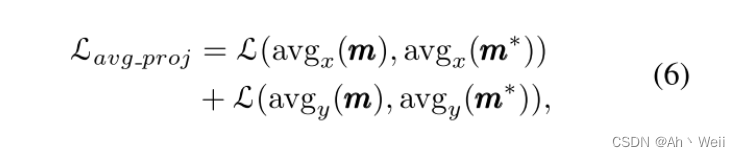

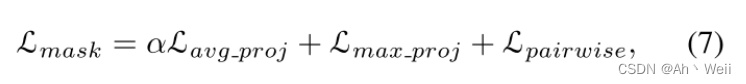

The mask loss also borrows the pairwise affinity loss and the projection loss from BoxInst, and modifies BoxInst's max projection into an average projection (avg proj).
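To see what changes between max and average projection, here is a sketch of a projection loss: both the predicted mask and the coarse target are projected onto the x- and y-axes and the 1-D profiles are compared with a dice loss, as in BoxInst. Using the same dice form for the average variant, and the function names, are assumptions; the pairwise affinity term is omitted.

```python
import numpy as np


def dice_loss(x: np.ndarray, y: np.ndarray, eps: float = 1e-6) -> float:
    inter = np.sum(x * y)
    return float(1.0 - (2.0 * inter + eps) / (np.sum(x * x) + np.sum(y * y) + eps))


def projection_loss(pred: np.ndarray, target: np.ndarray, mode: str = "avg") -> float:
    """pred: (H, W) soft mask in [0, 1]; target: (H, W) coarse binary mask.
    mode="max" mirrors BoxInst's max projection; mode="avg" averages instead."""
    reduce = np.mean if mode == "avg" else np.max
    loss_x = dice_loss(reduce(pred, axis=0), reduce(target, axis=0))
    loss_y = dice_loss(reduce(pred, axis=1), reduce(target, axis=1))
    return loss_x + loss_y
```

For example, if the target is a filled 2 × 2 square and the prediction covers only its diagonal, the max projections are identical (loss ≈ 0), whereas the average projections differ (loss ≈ 0.4). Max projection only constrains the extent of a mask, which suits box supervision; average projection also reflects how much of each row and column is filled, which seems a natural fit when the supervision is a coarse mask rather than a box.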

The overall mask loss is given by Eq. (7):

- Self-training

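This step is straightforward: once SOLO has been trained on the coarse masks, it is run on the unlabeled images, low-confidence predictions are removed, and the remaining predictions serve as the new coarse masks. SOLO is then trained for another round on these masks, producing the second-round predictions.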
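The self-training loop can be sketched as follows. `train_fn` and `predict_fn` are hypothetical placeholders for SOLO training and inference, and the 0.5 confidence threshold is an assumption rather than the paper's value.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Prediction:
    mask: np.ndarray  # (H, W) binary mask
    score: float      # confidence from the trained model


def refine_pseudo_labels(predictions, conf_thr: float = 0.5):
    """Drop low-confidence predictions; the rest become the next coarse masks."""
    return [p.mask for p in predictions if p.score >= conf_thr]


def self_training(images, coarse_masks, train_fn, predict_fn,
                  rounds: int = 1, conf_thr: float = 0.5):
    """train_fn(images, masks) -> model; predict_fn(model, image) -> list[Prediction]."""
    model = train_fn(images, coarse_masks)  # first round: Free Mask outputs
    for _ in range(rounds):
        new_masks = [refine_pseudo_labels(predict_fn(model, img), conf_thr)
                     for img in images]
        model = train_fn(images, new_masks)  # retrain on refined pseudo-labels
    return model
```

With `rounds=1` the model is trained twice, matching the "one extra round" description above.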

3.3 Semantic representation learning (Category-Branch)

- We propose to decouple the category branch to perform two sub-tasks: foreground/background binary classification and semantic embedding learning.

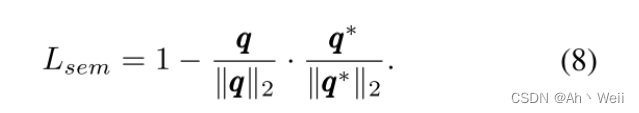

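The original classification branch is split into two sub-tasks. Because the masks are class-agnostic, classification reduces to foreground/background binary classification. In parallel, an additional branch predicts the semantic embedding of each query, denoted $q^*$ (an E-dimensional feature corresponding to the query feature vector).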

- When training the instance segmenter, we add a branch in parallel to the last layer of the original category branch, which consists of a single convolution layer to predict the semantic embedding of each object.

The embedding branch is trained by minimizing the negative cosine similarity:
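The negative cosine similarity between the predicted embeddings $q^*$ and the Free Mask query vectors can be sketched as below. Averaging over objects and the function name are assumptions; details such as whether gradients flow into the query targets are not covered here.

```python
import numpy as np


def embedding_loss(pred_emb: np.ndarray, query_emb: np.ndarray,
                   eps: float = 1e-6) -> float:
    """Negative cosine similarity between predicted embeddings q* (N, E) and the
    corresponding Free Mask query vectors (N, E), averaged over the N objects."""
    p = pred_emb / (np.linalg.norm(pred_emb, axis=1, keepdims=True) + eps)
    q = query_emb / (np.linalg.norm(query_emb, axis=1, keepdims=True) + eps)
    return float(-np.mean(np.sum(p * q, axis=1)))
```

The loss ranges from -1 (embeddings aligned with their queries) to +1 (opposite), and it ignores embedding magnitude, matching the cosine similarity used to build the Free Mask masks.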

The category-branch loss is therefore:

![Category-branch loss, Eq. (9)](https://img-blog.csdnimg.cn/c4f7ce1b04d4440aba2bb1839fb284e3.png)

The total loss of the network is $L = L_{cate} + L_{mask}$, i.e., Eq. (9) + Eq. (7).

4. Experiments

4.1 Self-supervised instance segmentation

4.2 Self-supervised object detection

4.3 Supervised fine-tuning

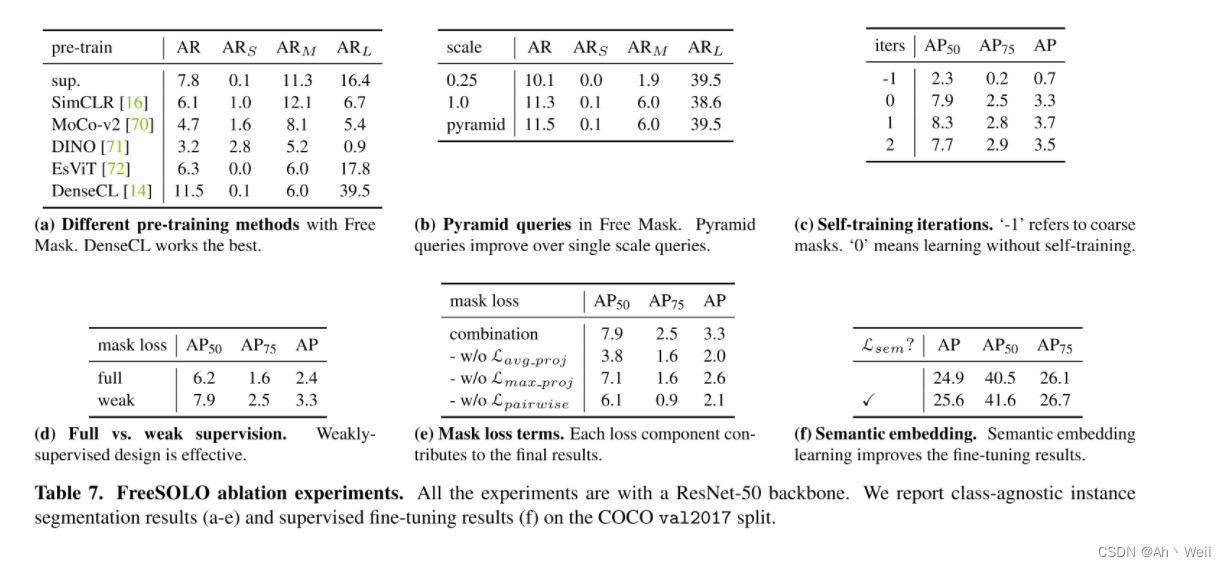

4.4 Ablation Study