NNLM (Neural Network Language Model)

Input: a context of K words (as Word2Id ids)

Output: the probability distribution P over the next word
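Written out as formulas, the model defined in step 9 computes the following. This is a sketch read off that code. The symbol names are chosen here and are not from the original: C is the embedding table, H, d and U, b are the weights and biases of `hidden1` and `hidden2`, K = `n_step`, m = `vec_size`, and |V| = `n_class`.

$$
\begin{aligned}
\mathbf{x} &= [\,C(w_{t-K});\ \dots;\ C(w_{t-1})\,] \in \mathbb{R}^{Km} \\
\mathbf{h} &= \tanh(H\mathbf{x} + \mathbf{d}) \\
\mathbf{y} &= U\mathbf{h} + \mathbf{b} \in \mathbb{R}^{|V|} \\
P(w_t = i \mid w_{t-K}, \dots, w_{t-1}) &= \frac{\exp(y_i)}{\sum_{j=1}^{|V|} \exp(y_j)}
\end{aligned}
$$

The model itself only returns the logits y. The softmax in the last line is applied inside `nn.CrossEntropyLoss` during training, or explicitly with `torch.softmax` when you need the probabilities.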

0. Imports

```python
import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
from tqdm import trange, tqdm
```

1. Corpus

```python
sentences = ['i like dog', 'i love coffee', 'i hate milk', 'i do nlp']
```

2. Build the vocabulary from the corpus

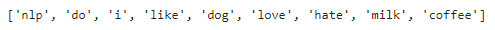

```python
word_list = ' '.join(sentences).split()
# Remove duplicates (the order of a set can differ from run to run)
word_list = list(set(word_list))
word_list
```
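`list(set(...))` can order the words differently between interpreter runs because Python randomizes string hashing. As a result, the word ids also change from run to run. The following is a minimal sketch of a reproducible alternative. It assumes you are fine with alphabetical order, and the ids will then differ from the screenshots.

```python
# Same corpus as step 1
sentences = ['i like dog', 'i love coffee', 'i hate milk', 'i do nlp']

# Split every sentence into words and deduplicate them.
# sorted() gives the same order on every run, unlike list(set(...)).
word_list = sorted(set(' '.join(sentences).split()))
print(word_list)
# ['coffee', 'do', 'dog', 'hate', 'i', 'like', 'love', 'milk', 'nlp']
```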

3. Word2Id

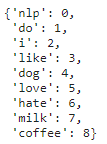

Build the word-to-id mapping:

```python
word_dict = {word: i for i, word in enumerate(word_list)}
word_dict
```

4. Id2Word

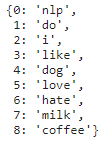

Build the id-to-word mapping:

```python
id_dict = {i: word for i, word in enumerate(word_list)}
id_dict
```
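Both dictionaries are built from the same `enumerate(word_list)`, so they are exact inverses of each other. The following is a small round-trip check that encodes a sentence to ids and decodes it back. It assumes the sorted vocabulary, so the concrete ids will not match a `list(set(...))` run.

```python
sentences = ['i like dog', 'i love coffee', 'i hate milk', 'i do nlp']
word_list = sorted(set(' '.join(sentences).split()))

word_dict = {word: i for i, word in enumerate(word_list)}  # Word2Id
id_dict = {i: word for i, word in enumerate(word_list)}    # Id2Word

# Encode a sentence to ids, then decode the ids back to words
ids = [word_dict[w] for w in 'i love coffee'.split()]
decoded = ' '.join(id_dict[i] for i in ids)
print(ids, decoded)  # [4, 6, 0] i love coffee
```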

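5. Record the vocabulary size

The model outputs a probability distribution over the next word, with one value per vocabulary entry, so we need the vocabulary size:

```python
n_class = len(word_dict)
n_class
```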

6. Define the word-vector dimension

How many dimensions each word embedding (Word2Vector) has:

```python
vec_size = 2
```

7. Define the n-gram size, i.e. how many preceding words are used to predict the next word

```python
n_step = 2
```
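Together, `vec_size` and `n_step` determine the shapes inside the model. The embedding layer maps each id to a `vec_size`-dimensional vector, so a batch of `n_step`-word contexts becomes a batch_size x n_step x vec_size tensor, which is then flattened to batch_size x (n_step * vec_size). The following is a shape check. It assumes the 9-word vocabulary of the example corpus and the sorted-vocabulary ids for "i like", "i love", "i hate" and "i do".

```python
import torch
import torch.nn as nn

n_class, vec_size, n_step = 9, 2, 2   # 9 = vocabulary size of the example corpus

embedding = nn.Embedding(n_class, vec_size)

# A batch of 4 contexts, each holding n_step word ids (every id must be < n_class)
context_ids = torch.tensor([[4, 5], [4, 6], [4, 3], [4, 1]], dtype=torch.long)

vectors = embedding(context_ids)            # 4 x n_step x vec_size
flat = vectors.view(-1, n_step * vec_size)  # 4 x (n_step * vec_size): vectors concatenated
print(vectors.shape, flat.shape)
```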

8. Define the hidden-layer size of the network

```python
n_hidden = 2
```

9. Define the model

```python
class NNLM(nn.Module):
    def __init__(self):
        # First, call the parent class constructor
        super(NNLM, self).__init__()
        self.embedding = nn.Embedding(n_class, vec_size)
        self.hidden1 = nn.Linear(vec_size * n_step, n_hidden)
        self.tanh = nn.Tanh()
        self.hidden2 = nn.Linear(n_hidden, n_class)

    def forward(self, x):
        x = self.embedding(x)  # batch_size x n_step x vec_size
        # Concatenate the word vectors of each sentence in the batch
        x = x.view(-1, vec_size * n_step)  # batch_size x (n_step * vec_size)
        x = self.hidden1(x)  # batch_size x n_hidden
        x = self.tanh(x)
        x = self.hidden2(x)  # batch_size x n_class (raw logits, no softmax)
        return x

model = NNLM()
```
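Before training, you can sanity-check the model with a single forward pass. The following is a sketch of a variant that is not the original code: the sizes are passed to the constructor instead of being read from globals, which makes the class easier to reuse. It shows that the output has one logit per vocabulary word, that `torch.softmax` turns each row into a distribution summing to 1, and how many parameters such a tiny model has.

```python
import torch
import torch.nn as nn

class NNLM(nn.Module):
    """Same architecture as above, with sizes as constructor arguments (a variant)."""
    def __init__(self, n_class, vec_size=2, n_step=2, n_hidden=2):
        super().__init__()
        self.n_step, self.vec_size = n_step, vec_size
        self.embedding = nn.Embedding(n_class, vec_size)
        self.hidden1 = nn.Linear(vec_size * n_step, n_hidden)
        self.tanh = nn.Tanh()
        self.hidden2 = nn.Linear(n_hidden, n_class)

    def forward(self, x):
        x = self.embedding(x).view(-1, self.vec_size * self.n_step)
        return self.hidden2(self.tanh(self.hidden1(x)))  # raw logits

model = NNLM(n_class=9)
logits = model(torch.tensor([[4, 5], [4, 6]]))  # 2 contexts of 2 ids each
probs = torch.softmax(logits, dim=1)            # P(next word | context), rows sum to 1
n_params = sum(p.numel() for p in model.parameters())
print(logits.shape, probs.sum(dim=1), n_params)  # n_params is 18 + 10 + 27 = 55
```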

10. Define the loss function

```python
criterion = nn.CrossEntropyLoss()
```
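`nn.CrossEntropyLoss` expects raw logits, because it applies log-softmax internally. It also expects integer class ids as targets. This is why the model's last layer has no softmax and why the labels are not one-hot encoded. The following is a quick numerical check with arbitrary logits and targets that are not taken from the corpus.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 9)           # batch_size x n_class, raw model outputs
target = torch.tensor([2, 0, 7, 5])  # batch_size class ids (int64), not one-hot

ce = nn.CrossEntropyLoss()(logits, target)

# The same value by hand: batch mean of -log softmax(logits)[target]
manual = -F.log_softmax(logits, dim=1)[torch.arange(4), target].mean()
# ...or as log-softmax followed by the negative log-likelihood loss
nll = F.nll_loss(F.log_softmax(logits, dim=1), target)
print(ce.item(), manual.item(), nll.item())
```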

11. Define the optimizer

```python
optimizer = optim.Adam(model.parameters(), lr=1e-3)
```

12. Build the input batch

```python
def make_batch(sentences):
    # Assumes every sentence has exactly n_step + 1 words
    input_batch = []
    target_batch = []
    for sen in sentences:
        word = sen.split()
        # The input is every word except the last one
        input_ids = [word_dict[n] for n in word[:-1]]
        # The target is the last word
        target = word_dict[word[-1]]
        input_batch.append(input_ids)  # batch_size x n_step
        target_batch.append(target)    # batch_size
    return input_batch, target_batch

input_batch, target_batch = make_batch(sentences)
# Embedding takes integer indices, so the tensors must be LongTensor (int64); otherwise an error is raised
input_batch = torch.tensor(input_batch, dtype=torch.long)
target_batch = torch.tensor(target_batch, dtype=torch.long)
```

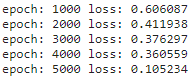
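`make_batch` only works because every sentence in this corpus has exactly `n_step + 1` words. For longer sentences, a common approach is to slide a window over each sentence. Each run of `n_step` consecutive words then predicts the word that follows it. The following is a sketch of such a function. The name `make_ngram_batch` and the second corpus sentence are hypothetical and are not part of the original.

```python
import torch

def make_ngram_batch(sentences, word_dict, n_step):
    """Hypothetical generalization: every window of n_step words predicts the next word."""
    inputs, targets = [], []
    for sen in sentences:
        ids = [word_dict[w] for w in sen.split()]
        # Sentences shorter than n_step + 1 words contribute no windows
        for i in range(len(ids) - n_step):
            inputs.append(ids[i:i + n_step])
            targets.append(ids[i + n_step])
    return (torch.tensor(inputs, dtype=torch.long),
            torch.tensor(targets, dtype=torch.long))

# A made-up corpus with one longer sentence
corpus = ['i like dog', 'i really like dog']
vocab = {w: i for i, w in enumerate(sorted(set(' '.join(corpus).split())))}
x, y = make_ngram_batch(corpus, vocab, n_step=2)
print(x.tolist(), y.tolist())  # [[1, 2], [1, 3], [3, 2]] [0, 2, 0]
```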

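13. Training

```python
model.train()
for epoch in range(5000):
    # Zero the gradients; otherwise they accumulate across iterations
    optimizer.zero_grad()
    # Forward pass
    output = model(input_batch)
    # Compute the loss. CrossEntropyLoss accepts logits of shape batch_size x n_class
    # together with integer (long) class ids of shape batch_size,
    # so the true labels do not need to be one-hot encoded
    loss = criterion(output, target_batch)
    if (epoch + 1) % 1000 == 0:
        print('epoch: %04d' % (epoch + 1), 'loss: {:.6f}'.format(loss.item()))
    # Backward pass: compute the gradients
    loss.backward()
    # Let the optimizer update the parameters using the gradients
    optimizer.step()
```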

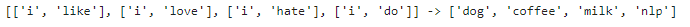

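14. Prediction

```python
model.eval()
with torch.no_grad():
    # max returns (values, indices); [1] keeps the indices, i.e. the predicted word ids
    predict = model(input_batch).max(dim=1, keepdim=True)[1]
# squeeze() removes dimensions of length 1
print([sen.split()[:n_step] for sen in sentences], '->', [id_dict[n.item()] for n in predict.squeeze()])
```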
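Taking the maximum gives only the single most likely word. To see the whole distribution P, apply a softmax to the logits, and use `topk` to list the most likely candidates. The following is a sketch with hand-written, hypothetical logits over a made-up 4-word vocabulary, so it runs without a trained model. With the real model, compute the logits inside `torch.no_grad()` as in step 14.

```python
import torch

# Hypothetical logits for 2 contexts over a made-up 4-word vocabulary
id_dict = {0: 'coffee', 1: 'dog', 2: 'milk', 3: 'nlp'}
logits = torch.tensor([[0.1, 2.0, 0.3, -1.0],
                       [1.5, 0.2, 0.1, 0.0]])

probs = torch.softmax(logits, dim=1)    # each row is a distribution summing to 1
top_p, top_i = probs.topk(2, dim=1)     # the 2 most likely next words per context

for p_row, i_row in zip(top_p, top_i):
    print([(id_dict[i.item()], round(p.item(), 3)) for p, i in zip(p_row, i_row)])
```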

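Addendum

Either of the following two lines extracts the index of the largest output value, i.e. the predicted word id:

```python
# 1. max returns (values, indices); [1] selects the indices
model(input_batch).data.max(dim=1, keepdim=True)[1]
# 2. argmax returns the indices directly
model(input_batch).data.argmax(dim=1, keepdim=True)
```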
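The following is a minimal check that the two forms agree. It uses a random stand-in for the model output rather than the trained model. With continuous random values, ties are practically impossible, so both forms pick the same index.

```python
import torch

torch.manual_seed(0)
scores = torch.randn(4, 9)  # stand-in for model(input_batch): batch_size x n_class

values, idx_max = scores.max(dim=1, keepdim=True)  # max returns (values, indices)
idx_argmax = scores.argmax(dim=1, keepdim=True)    # argmax returns only the indices

print(idx_max.squeeze(1), idx_argmax.squeeze(1))
```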