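CV Computer Vision Core 08: Object Detection with YOLO v3

Download the accompanying code: https://download.csdn.net/download/m0_37755995/86237192

You need to download the COCO train2014 and val2014 images yourself: https://blog.csdn.net/ViatorSun/article/details/115126015

Download the accompanying code with annotations: https://download.csdn.net/download/m0_37755995/86245876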

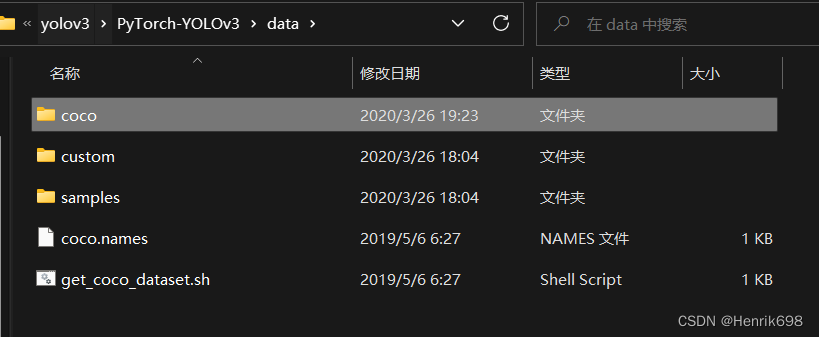

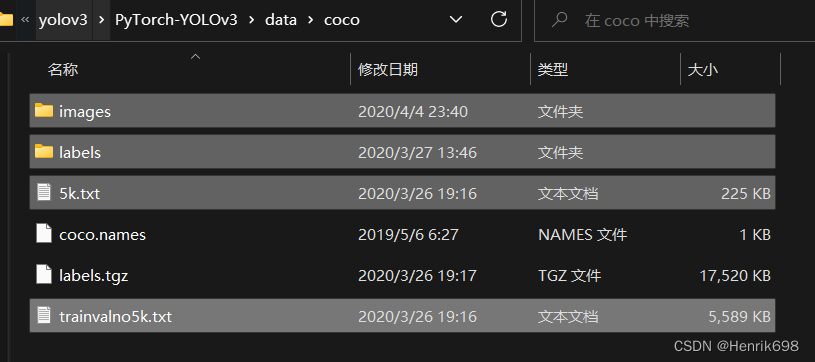

I. Dataset:

We use the COCO 2014 dataset here.

Of its contents, images, labels, 5k.txt, and trainvalno5k.txt are required:

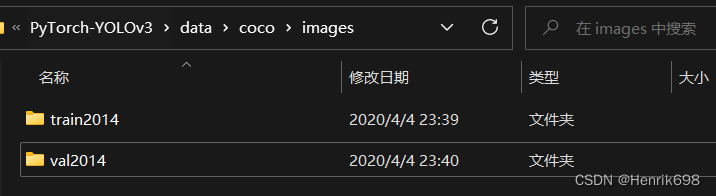

The images folder holds the training and validation images:

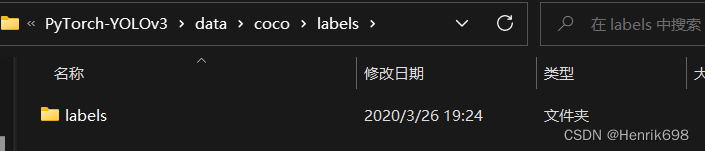

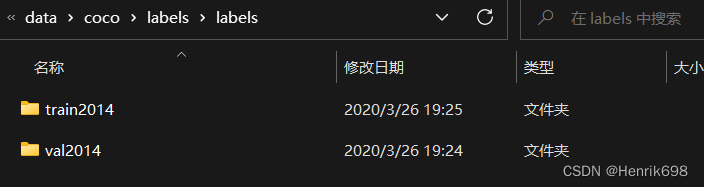

The labels folder holds the labels, which correspond one-to-one to the training and validation images above:

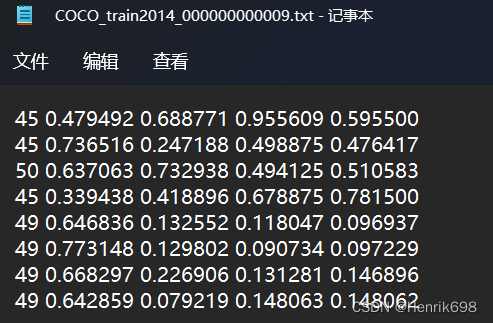

Each label file describes the bounding boxes drawn around the objects in the corresponding image:

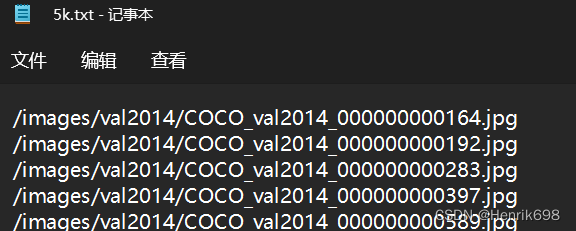

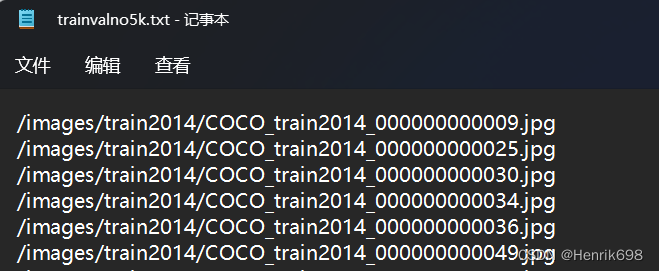

In addition, 5k.txt and trainvalno5k.txt list the paths of the validation images and the training images, respectively:

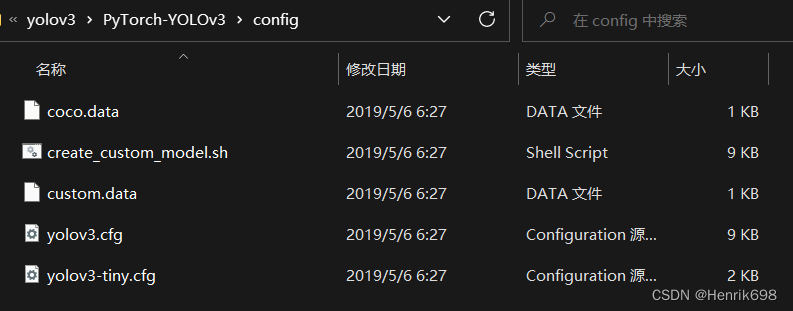
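To make the relationship between an image path and its label file concrete, here is a minimal sketch. It assumes the label rows have the form `class_id x_center y_center width height` with normalized coordinates, and it reuses the same string replacements that ListDataset applies. The path and the label row are hypothetical values chosen for illustration.

```python
def label_path_for(image_path: str) -> str:
    """Derive the label path with the same replacements ListDataset uses."""
    return (
        image_path.replace("images", "labels")
        .replace(".png", ".txt")
        .replace(".jpg", ".txt")
    )


def parse_label_line(line: str):
    """Parse one 'class_id x_center y_center width height' row (values in 0-1)."""
    class_id, x, y, w, h = line.split()
    return int(class_id), float(x), float(y), float(w), float(h)


# Hypothetical entries, for illustration only.
image_path = "/images/train2014/COCO_train2014_000000000009.jpg"
label_line = "45 0.479492 0.688771 0.955609 0.595500"

print(label_path_for(image_path))
print(parse_label_line(label_line))
```

Because the mapping is purely textual, the images and labels folders must mirror each other exactly.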

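II. Inspecting the configuration files

Look in the config folder: custom.data describes a custom dataset of your own, and yolov3.cfg is the network configuration for the COCO dataset.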

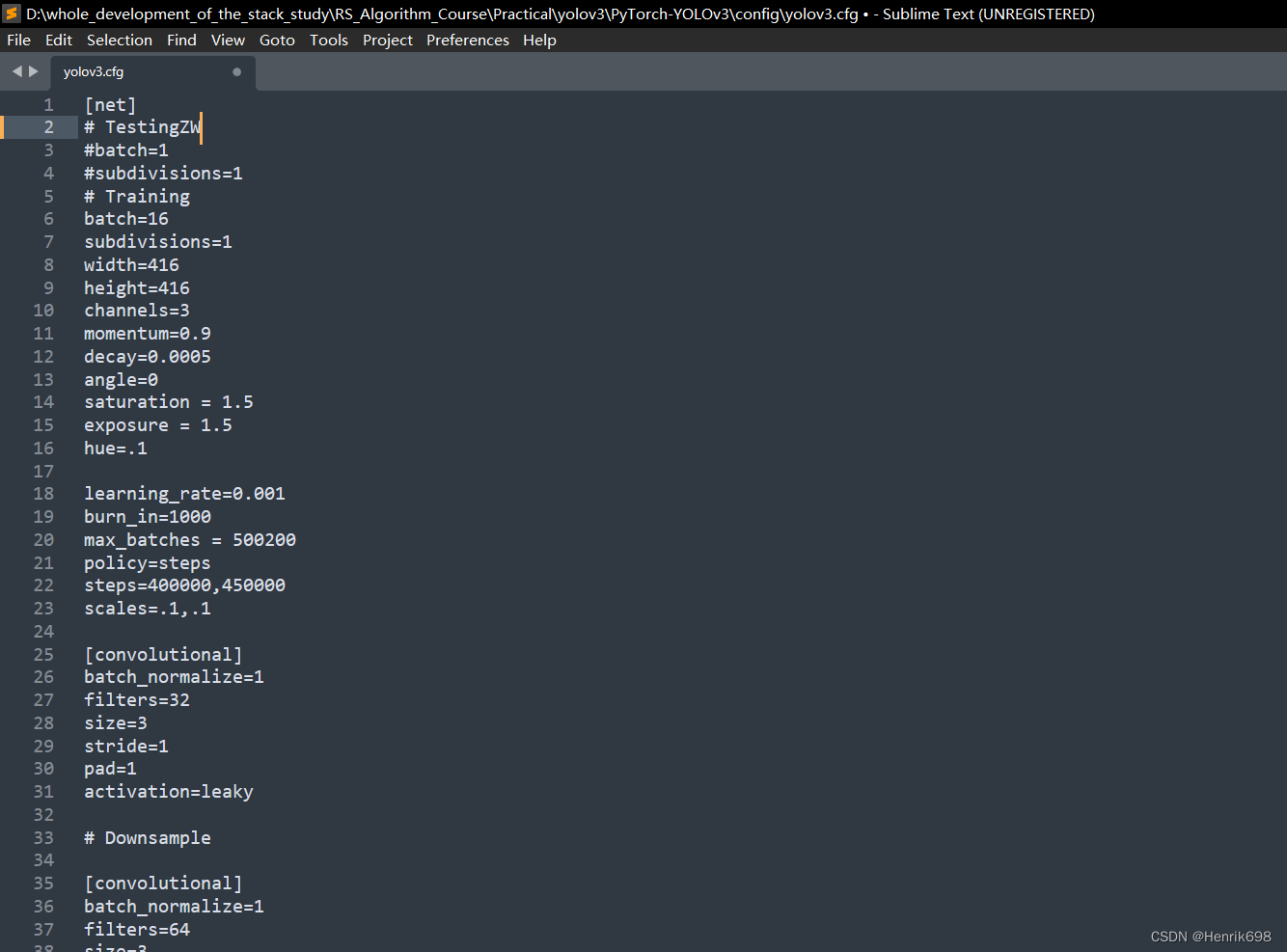

yolov3.cfg is used later to build the Darknet network: its blocks are read in order and turned into the layers of the model.

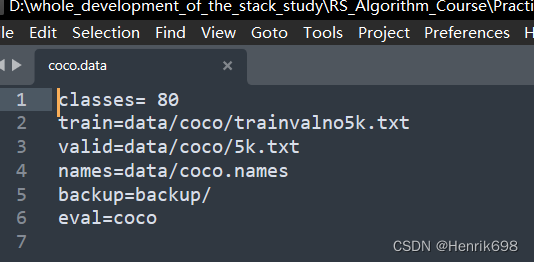

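The coco.data file holds all the information needed about the training dataset.

It sets classes to 80, points train at trainvalno5k.txt, and points valid at 5k.txt; the remaining entries are not needed for now.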

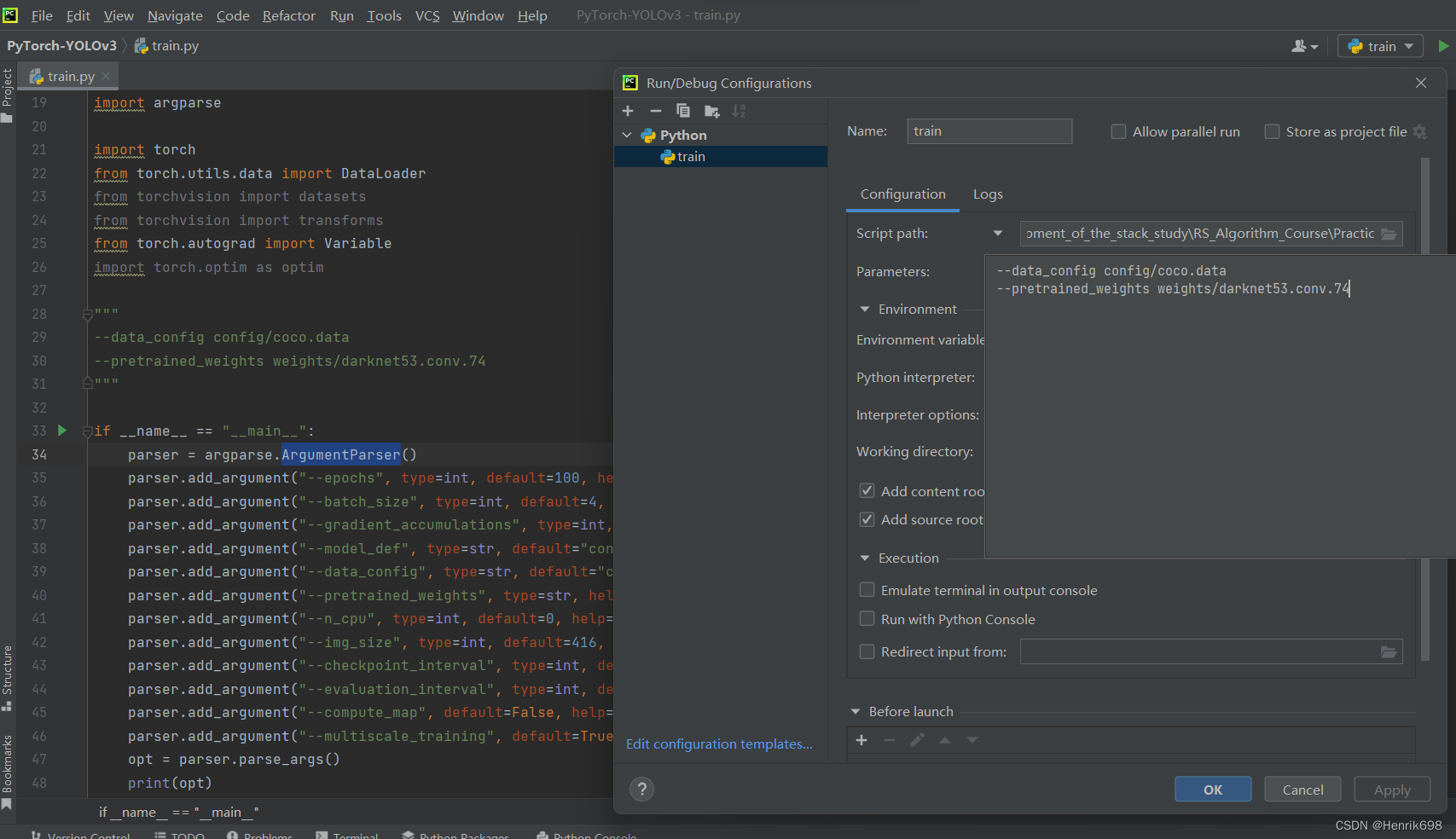

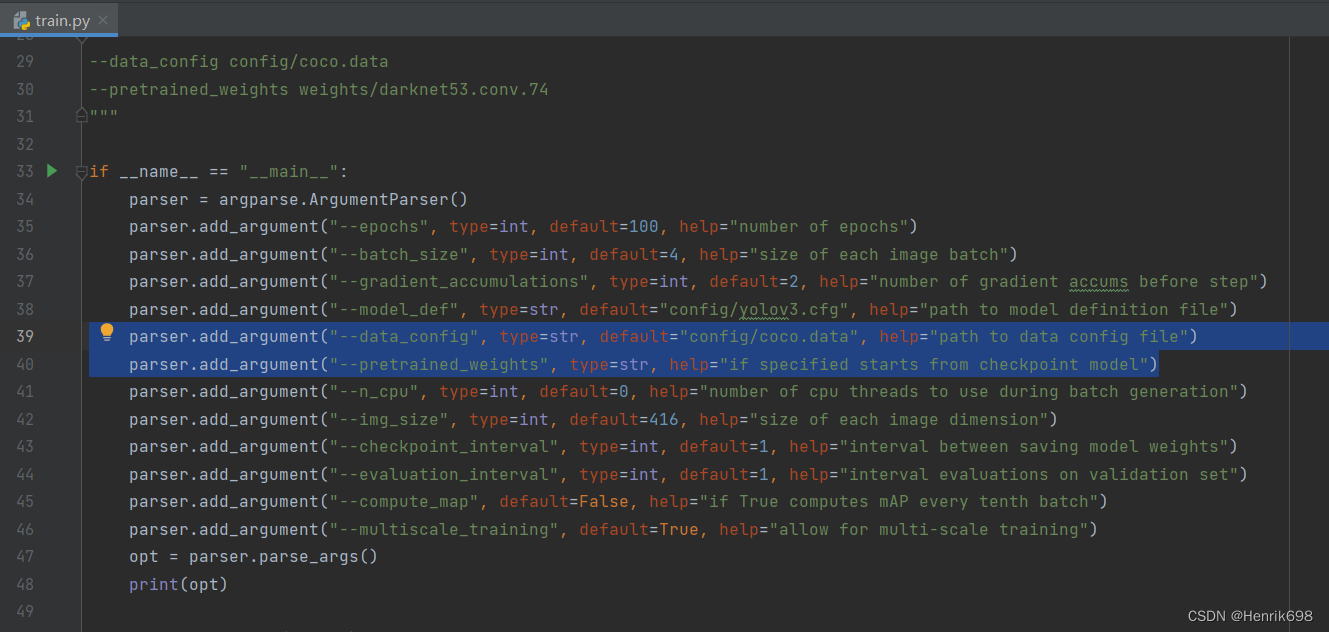

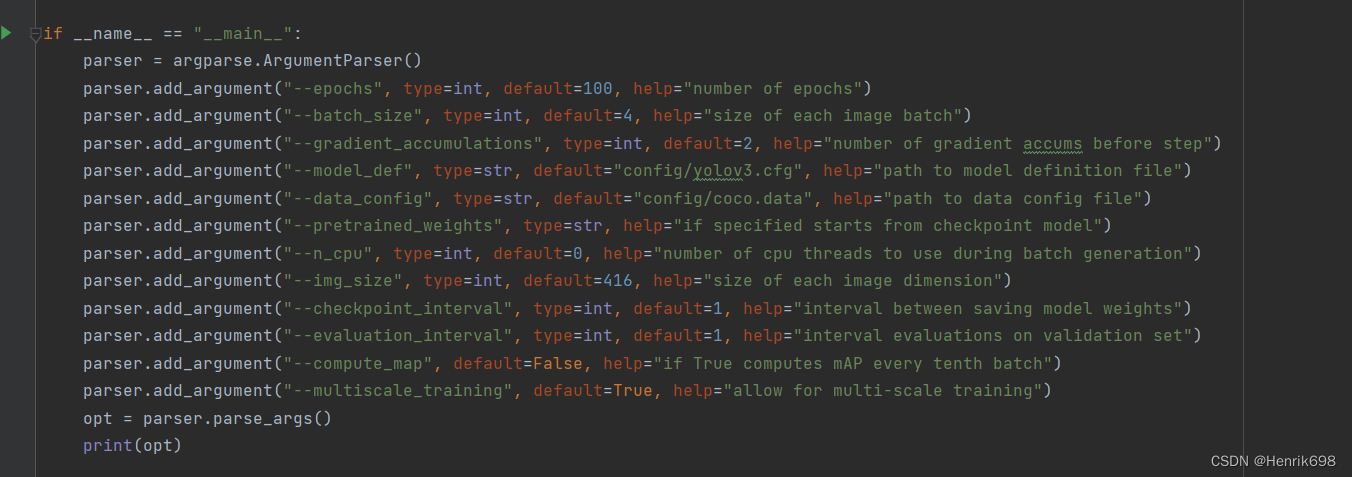

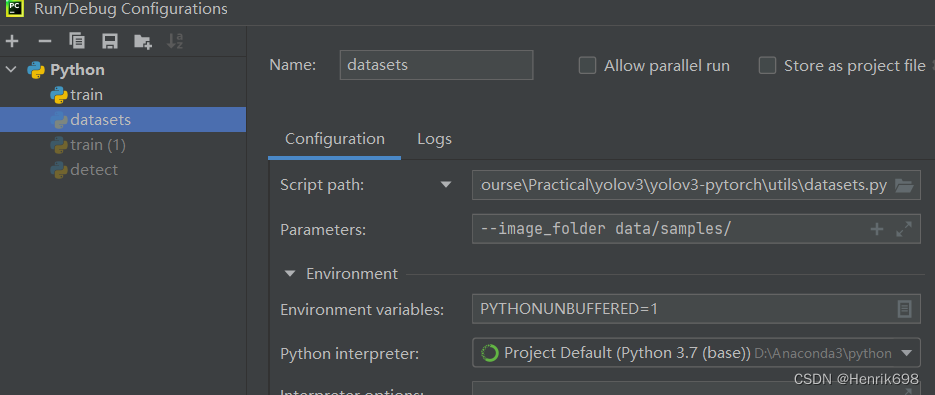
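A `.data` file of this kind is a list of `key = value` lines, so reading it takes only a few lines of code. The following is a minimal sketch, not the repository's own parser; the function name `parse_data_config_sketch` and the sample paths are assumptions made for illustration.

```python
def parse_data_config_sketch(text: str) -> dict:
    """Parse 'key = value' lines, skipping blank lines and '#' comments."""
    options = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, value = line.split("=", 1)
        options[key.strip()] = value.strip()
    return options


# Hypothetical file content modeled on the fields described above.
SAMPLE = """
classes = 80
train = data/coco/trainvalno5k.txt
valid = data/coco/5k.txt
"""

config = parse_data_config_sketch(SAMPLE)
print(config["classes"], config["train"], config["valid"])
```

All values come back as strings, so the class count still needs an `int(...)` conversion before use.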

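III. Setting the training arguments for the Python script

Set the arguments of train.py to:

--data_config config/coco.data

--pretrained_weights weights/darknet53.conv.74

Argument 1, --data_config config/coco.data, points to the file that holds everything needed about the training dataset, including the 80 classes and the train and valid paths.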

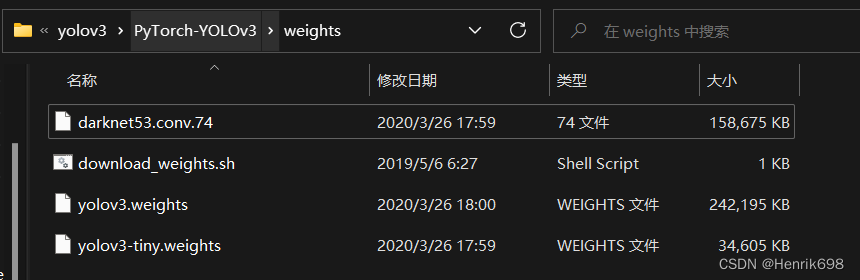

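Argument 2, --pretrained_weights weights/darknet53.conv.74, specifies the pretrained weights. We do not train the model from scratch; we start from weights that were trained beforehand and continue training from there. This is transfer learning.

These two arguments correspond to the two parameters shown in the screenshot.

With that, all the arguments are configured.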
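For readers who have not used command-line arguments before, this is roughly how a script picks up the two options. It is a sketch using the standard `argparse` module; the default values are assumptions, and train.py may define more arguments than these two.

```python
import argparse

parser = argparse.ArgumentParser()
# Path to the dataset description file (default value is an assumption).
parser.add_argument("--data_config", type=str, default="config/coco.data")
# Optional pretrained weights to start training from.
parser.add_argument("--pretrained_weights", type=str, default=None)

# Passing an explicit list stands in for typing the arguments on the command line.
opt = parser.parse_args(
    ["--data_config", "config/coco.data",
     "--pretrained_weights", "weights/darknet53.conv.74"]
)
print(opt.data_config, opt.pretrained_weights)
```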

IV. Reading the code

A natural order for reading the code is:

1. Look at all the input configuration arguments

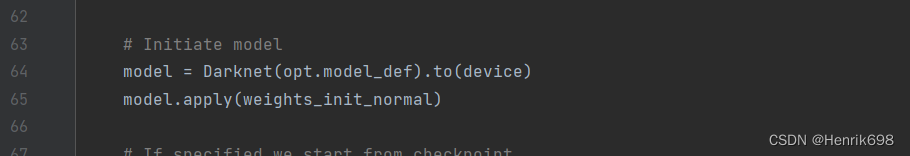

2. Build the model

Constructing the Darknet network and its forward pass

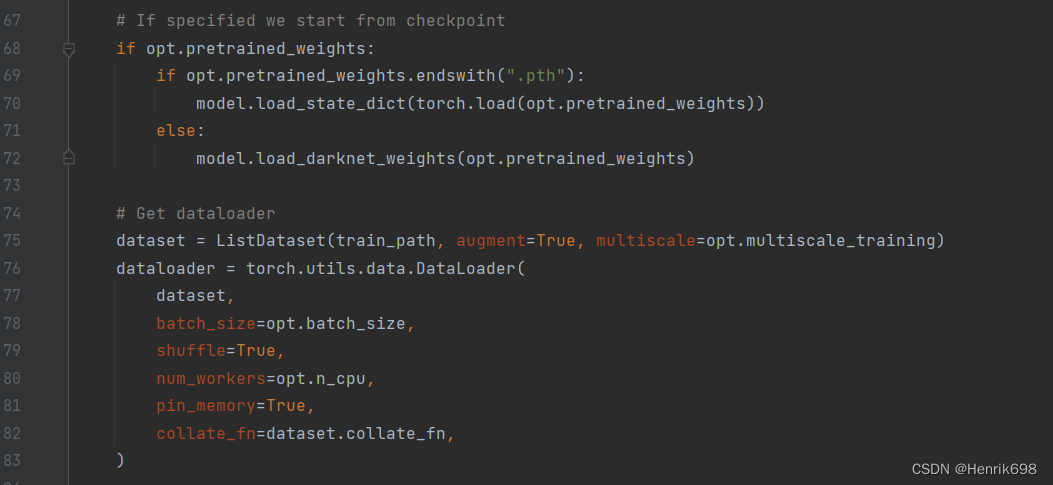

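3. Load the pretrained model

This step reads the pretrained weights used for transfer learning and loads the training set.

4. Build the optimizer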

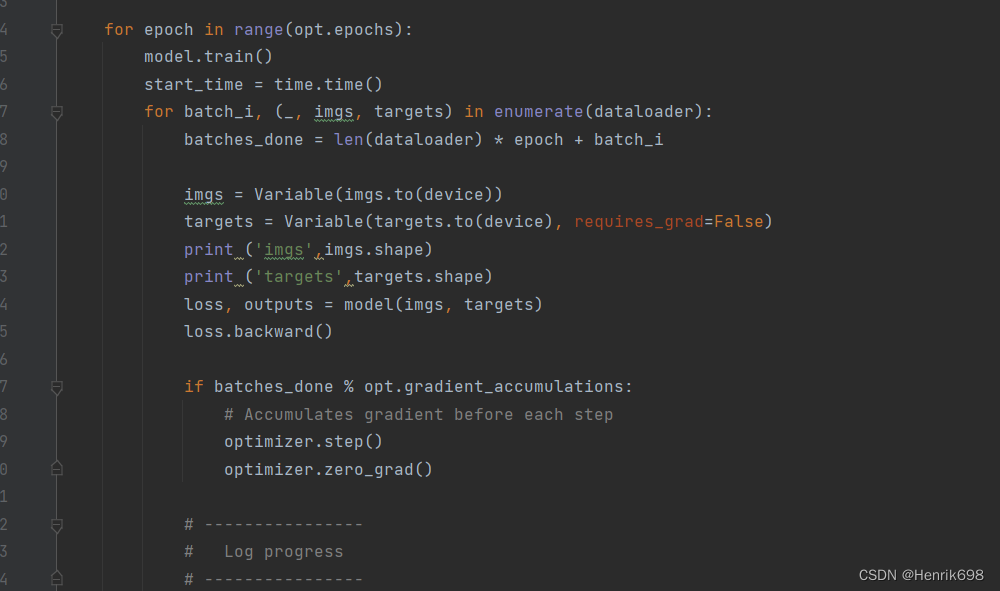

5. Start training

However, we will not go through train.py in that order. We start with how the data is read.

1. How the data and labels are read

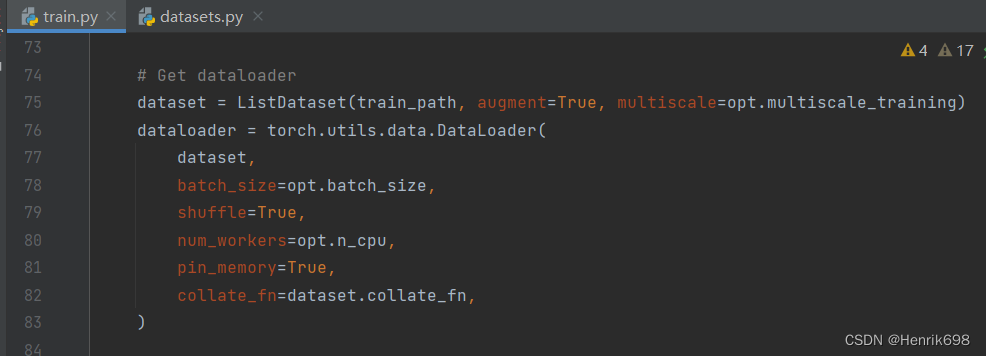

In train.py, the data is read by the ListDataset class, which loads one image at a time.

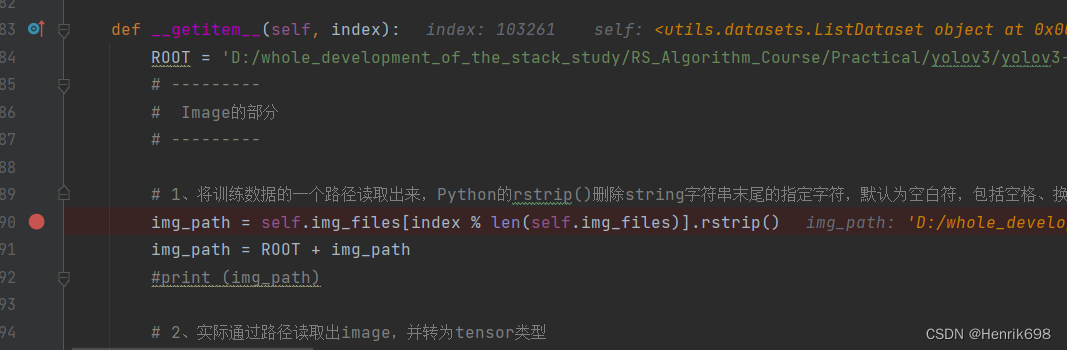

The ListDataset class lives in utils/datasets.py; its __getitem__ method reads in the image and its labels.

Let's look at the code:

utils/datasets.py:

The core is the __getitem__ method:

import glob

import random

import os

import sys

from pathlib import Path

import numpy as np

import visdom

from PIL import Image

import torch

import torch.nn.functional as F

# from utils.augmentations import horisontal_flip

from torch.utils.data import Dataset

import torchvision.transforms as transforms

# FILE = Path(__file__).resolve()

# ROOT = FILE.parents[0] # YOLOv5 root directory

# if str(ROOT) not in sys.path:

# sys.path.append(str(ROOT)) # add ROOT to PATH

# ROOT = Path(os.path.relpath(ROOT, Path.cwd())) # relative

def pad_to_square(img, pad_value):

c, h, w = img.shape

dim_diff = np.abs(h - w)

# (upper / left) padding and (lower / right) padding

pad1, pad2 = dim_diff // 2, dim_diff - dim_diff // 2

# Determine padding

pad = (0, 0, pad1, pad2) if h <= w else (pad1, pad2, 0, 0)

# Add padding

img = F.pad(img, pad, "constant", value=pad_value)

return img, pad

def resize(image, size):

image = F.interpolate(image.unsqueeze(0), size=size, mode="nearest").squeeze(0)

return image

def random_resize(images, min_size=288, max_size=448):

new_size = random.sample(list(range(min_size, max_size + 1, 32)), 1)[0]

images = F.interpolate(images, size=new_size, mode="nearest")

return images

class ImageFolder(Dataset):

def __init__(self, folder_path, img_size=416):

self.files = sorted(glob.glob("%s/*.*" % folder_path))

self.img_size = img_size

def __getitem__(self, index):

img_path = self.files[index % len(self.files)]

# Extract image as PyTorch tensor

img = transforms.ToTensor()(Image.open(img_path))

# Pad to square resolution

img, _ = pad_to_square(img, 0)

# Resize

img = resize(img, self.img_size)

return img_path, img

def __len__(self):

return len(self.files)

class ListDataset(Dataset):

def __init__(self, list_path, img_size=416, augment=True, multiscale=True, normalized_labels=True):

with open(list_path, "r") as file:

self.img_files = file.readlines()

self.label_files = [

path.replace("images", "labels").replace(".png", ".txt").replace(".jpg", ".txt")

for path in self.img_files

]

self.img_size = img_size

self.max_objects = 100

self.augment = augment

self.multiscale = multiscale

self.normalized_labels = normalized_labels

self.min_size = self.img_size - 3 * 32

self.max_size = self.img_size + 3 * 32

self.batch_count = 0

def __getitem__(self, index):

ROOT = 'D:/whole_development_of_the_stack_study/RS_Algorithm_Course/Practical/yolov3/yolov3-pytorch/data/coco'

# ---------

# Part 1: the image

# ---------

# 1. Read one training image path. rstrip() strips trailing whitespace (spaces, newlines, carriage returns, tabs) from the string.

img_path = self.img_files[index % len(self.img_files)].rstrip()

img_path = ROOT + img_path

#print (img_path)

# 2. Load the image from its path and convert it to a tensor.

# Image.open(img_path).convert('RGB') reads the image and forces three channels.

# Extract image as PyTorch tensor

img = transforms.ToTensor()(Image.open(img_path).convert('RGB'))

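# 3. Simple preprocessing: if the tensor is not 3-dimensional, expand it to three channels.

# Handle images with less than three channels (after convert('RGB') this branch is only a safeguard)

if len(img.shape) != 3:

img = img.unsqueeze(0)

img = img.expand((3, *img.shape[1:]))  # unpack the spatial dims; a nested size tuple is not valid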

# 4. Zero-pad the input so that it becomes a square whose side is the longer of its height and width (e.g. a 480*640 image becomes 640*640).

_, h, w = img.shape

h_factor, w_factor = (h, w) if self.normalized_labels else (1, 1)

# Pad to square resolution

img, pad = pad_to_square(img, 0)

_, padded_h, padded_w = img.shape

# ---------

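# Part 2: the labels

# ---------

# 1. Read the label file. As with the image, one label file is read at a time, and labels must correspond one-to-one to images.

# Pay attention to the label format: coordinates may be relative or absolute depending on the dataset.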

label_path = self.label_files[index % len(self.img_files)].rstrip()

label_path = ROOT + "" + label_path

#print (label_path)

targets = None

if os.path.exists(label_path):

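# 2. Read in all the annotated ground-truth boxes.

# Each box has 5 elements, [id, x, y, w, h], where id is the COCO class id of the object.

# These coordinates refer to the original image, but padding was added when the image was loaded, so the labels must be adjusted accordingly.

# The coordinates here are relative (normalized) values.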

boxes = torch.from_numpy(np.loadtxt(label_path).reshape(-1, 5))

# 3. Transform the labels: the image was padded, so the labels must be adjusted to match.

# Compute the actual x1, y1, x2, y2 pixel coordinates

# Extract coordinates for unpadded + unscaled image

x1 = w_factor * (boxes[:, 1] - boxes[:, 3] / 2)

y1 = h_factor * (boxes[:, 2] - boxes[:, 4] / 2)

x2 = w_factor * (boxes[:, 1] + boxes[:, 3] / 2)

y2 = h_factor * (boxes[:, 2] + boxes[:, 4] / 2)

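# The padding is added either top/bottom or left/right, so the box coordinates are shifted by the padding that precedes them.

# Adjust for added padding: pad is (left, right, top, bottom), so both corners shift by the left/top amounts

x1 += pad[0]

y1 += pad[2]

x2 += pad[0]

y2 += pad[2]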

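# 4. In the paper the network predicts the center coordinates plus width and height, not x1, y1, x2, y2,

# so convert the coordinates once more:

# Returns (x, y, w, h)

# The center and size are relative values, normalized by the width and height of the padded image.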

boxes[:, 1] = ((x1 + x2) / 2) / padded_w

boxes[:, 2] = ((y1 + y2) / 2) / padded_h

boxes[:, 3] *= w_factor / padded_w

boxes[:, 4] *= h_factor / padded_h

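# 5. Store the [id, x, y, w, h] rows of boxes (shape [n, 5], e.g. [4, 5] for an image with 4 boxes) in a new zero tensor targets of shape [n, 6].

# targets now holds the coordinates we need.

# targets has one more column than boxes.

# Column 0 is left empty here; collate_fn later fills it with the index of the sample within the batch.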

targets = torch.zeros((len(boxes), 6))

targets[:, 1:] = boxes

# ---------

# Part 3: data augmentation (optional, since the COCO dataset is already large)

# ---------

# 1. Optional augmentation

# Apply augmentations

# if self.augment:

# if np.random.random() < 0.5:

# img, targets = horisontal_flip(img, targets)

# Return the image path, the image, and its labels

return img_path, img, targets

def collate_fn(self, batch):

paths, imgs, targets = list(zip(*batch))

# Remove empty placeholder targets

targets = [boxes for boxes in targets if boxes is not None]

# Add sample index to targets

for i, boxes in enumerate(targets):

boxes[:, 0] = i

targets = torch.cat(targets, 0)

# Selects new image size every tenth batch

if self.multiscale and self.batch_count % 10 == 0:

self.img_size = random.choice(range(self.min_size, self.max_size + 1, 32))

# Resize images to input shape

imgs = torch.stack([resize(img, self.img_size) for img in imgs])

self.batch_count += 1

return paths, imgs, targets

def __len__(self):

return len(self.img_files)

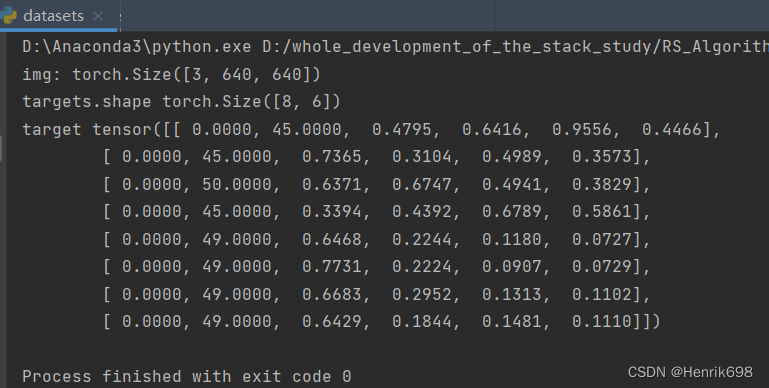

def main():

# viz = visdom.Visdom()

dataset = ListDataset('D:/whole_development_of_the_stack_study/RS_Algorithm_Course/Practical/yolov3/yolov3-pytorch/data/coco/trainvalno5k.txt', augment=True)

_, x, y = next(iter(dataset))

print('img:', x.shape)

print('targets.shape', y.shape)

print('target', y)

if __name__ == "__main__":

main()
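The label adjustment in `__getitem__` is easier to follow with concrete numbers. The sketch below redoes the arithmetic in plain Python for a single box, with both corners shifted by the left/top padding. The helper names `pad_amounts` and `adjust_box` and the example image size are assumptions for illustration, not part of the listing.

```python
def pad_amounts(h: int, w: int):
    """Return (left, right, top, bottom) padding that makes an h x w image square."""
    diff = abs(h - w)
    pad1, pad2 = diff // 2, diff - diff // 2
    return (0, 0, pad1, pad2) if h <= w else (pad1, pad2, 0, 0)


def adjust_box(box, h, w):
    """Map a normalized (x, y, w, h) box on the original image to the padded square."""
    xc, yc, bw, bh = box
    left, right, top, bottom = pad_amounts(h, w)
    padded_w, padded_h = w + left + right, h + top + bottom
    # Corner coordinates in pixels on the original image.
    x1, x2 = w * (xc - bw / 2), w * (xc + bw / 2)
    y1, y2 = h * (yc - bh / 2), h * (yc + bh / 2)
    # Shift by the left/top padding, then renormalize by the padded size.
    x1, x2 = x1 + left, x2 + left
    y1, y2 = y1 + top, y2 + top
    return (
        (x1 + x2) / 2 / padded_w,
        (y1 + y2) / 2 / padded_h,
        bw * w / padded_w,
        bh * h / padded_h,
    )


# A 480 x 640 (height x width) image with a box centered in the image.
padding = pad_amounts(480, 640)
adjusted = adjust_box((0.5, 0.5, 0.25, 0.5), 480, 640)
print(padding)
print(adjusted)
```

The box center stays at the middle of the image, while the relative height shrinks from 0.5 to 0.375 because the image grew from 480 to 640 pixels tall.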

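2. How the Darknet model is structured and computed (architecture)

Forward pass:

Next comes building the model (mainly reading the config file, creating each layer, the YOLO layer, and converting box positions from relative to absolute):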
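Before reading models.py it helps to see what the parsed configuration looks like. The following sketch turns Darknet-style cfg text into a list of dicts, one per `[section]`, which is the shape `create_modules` expects. The function name `parse_cfg_text` and the sample text are assumptions; the repository's `parse_model_config` may differ in details.

```python
def parse_cfg_text(text: str):
    """Turn Darknet-style cfg text into a list of dicts, one per [section]."""
    blocks = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("["):
            # A new section starts; remember its type.
            blocks.append({"type": line[1:-1].strip()})
        else:
            key, value = line.split("=", 1)
            blocks[-1][key.strip()] = value.strip()
    return blocks


# Hypothetical excerpt in the style of yolov3.cfg.
SAMPLE_CFG = """
[net]
channels=3
height=416

[convolutional]
batch_normalize=1
filters=32
size=3
stride=1
pad=1
activation=leaky
"""

module_defs = parse_cfg_text(SAMPLE_CFG)
hyperparams = module_defs.pop(0)  # the [net] block comes first
print(hyperparams)
print(module_defs[0])
```

Every value is kept as a string, which is why the listing wraps each lookup in `int(...)`.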

models.py:

from __future__ import division

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.autograd import Variable

import numpy as np

from utils.parse_config import *

from utils.utils import build_targets, to_cpu, non_max_suppression

import matplotlib.pyplot as plt

import matplotlib.patches as patches

def create_modules(module_defs):

"""

Constructs module list of layer blocks from module configuration in module_defs

"""

# hyperparams holds all the parameters of the [net] block in the config file.

# pop(0) removes the first element of the list and returns it; here that element is a dict.

hyperparams = module_defs.pop(0)

output_filters = [int(hyperparams["channels"])]

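# Build the network block by block, in the order of the config file.

# nn.ModuleList() acts as one big container; each block of the config file is appended to it in order.

module_list = nn.ModuleList()

# module_defs is the config parsed by parse_model_config(config_path)

# Note that a [convolutional] block in the config file stands for a 3-in-1 group: convolution + batch normalization + LeakyReLU activation.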

for module_i, module_def in enumerate(module_defs):

# Sequential container

# modules collects every layer that belongs to the current block:

modules = nn.Sequential()

# Check the type of the current block:

# 1. Convolutional layer:

if module_def["type"] == "convolutional":

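# Batch normalization

# Whether to apply BN; when BN is used the convolution needs no bias.

# nn.Conv2d() is usually followed by nn.BatchNorm2d(output)

# For why BN makes the bias unnecessary, see: https://blog.csdn.net/wxd1233/article/details/117574603

# bn = 1

bn = int(module_def["batch_normalize"])

# Number of filters, i.e. the number of output feature maps (output channels)

# filters = 32

filters = int(module_def["filters"])

# Kernel size

# kernel_size = 3

kernel_size = int(module_def["size"])

# The padding is derived from kernel_size.

# In Darknet cfg files pad=0 means the padding is given by a separate padding parameter,

# and pad=1 means a padding of size/2 (integer division), not a literal padding of 1.

# This code does not read the pad flag and always uses (kernel_size - 1) // 2.

# pad = 1

pad = (kernel_size - 1) // 2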

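# Call the actual API to create the convolutional layer:

modules.add_module(

# Add the convolution to the nn.Sequential() instance.

# For the first layer in_channels is 3 (an RGB image) and out_channels is 32; the number of output channels equals the number of kernels.

# The kernel size is 3.

# With batch normalization after the convolution, the bias term is usually unnecessary.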

f"conv_{module_i}",

nn.Conv2d(

in_channels=output_filters[-1],

out_channels=filters,

kernel_size=kernel_size,

stride=int(module_def["stride"]),

padding=pad,

bias=not bn,

),

)

# Check whether to add a BN layer:

# 2. BN layer:

if bn:

# Add the BN layer to the nn.Sequential() instance

modules.add_module(

f"batch_norm_{module_i}",

nn.BatchNorm2d(

filters,

momentum=0.9,

eps=1e-5)

)

# Check whether the activation is LeakyReLU:

# 3. LeakyReLU activation layer:

if module_def["activation"] == "leaky":

modules.add_module(

f"leaky_{module_i}",

nn.LeakyReLU(0.1)

)

# The branches below work like the convolutional one: each adds layers according to the block type in the config file.

elif module_def["type"] == "maxpool":

kernel_size = int(module_def["size"])

stride = int(module_def["stride"])

if kernel_size == 2 and stride == 1:

modules.add_module(f"_debug_padding_{module_i}", nn.ZeroPad2d((0, 1, 0, 1)))

maxpool = nn.MaxPool2d(kernel_size=kernel_size, stride=stride, padding=int((kernel_size - 1) // 2))

modules.add_module(f"maxpool_{module_i}", maxpool)

# Upsampling: unlike route and shortcut, this is a real layer; its forward pass calls F.interpolate with the given scale factor.

elif module_def["type"] == "upsample":

upsample = Upsample(scale_factor=int(module_def["stride"]), mode="nearest")

modules.add_module(f"upsample_{module_i}", upsample)

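# route layer: concatenates feature maps along the channel dimension.

# For example, a 13*13 feature map is reduced to 256 channels by a 1*1 convolution and then upsampled to [26,26,256].

# That result is concatenated with an earlier 26*26 feature map, [26,26,512], giving [26,26,768].

# The concatenated feature map therefore has more channels.

# A [route] block has a single parameter, layers, listing the layers to concatenate. A negative value is relative to the current layer (-4 means four layers back); a non-negative value is an absolute layer index.

# The actual operation only happens in the forward pass; here only a placeholder EmptyLayer is registered.

elif module_def["type"] == "route": # input 1: 26*26*256, input 2: 26*26*128, output: 26*26*(256+128)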

layers = [int(x) for x in module_def["layers"].split(",")]

filters = sum([output_filters[1:][i] for i in layers])

modules.add_module(

f"route_{module_i}",

EmptyLayer()

)

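# shortcut layer

# This is not a concatenation but the ResNet-style skip connection (residual connection).

# The dimensions stay the same; the features are added element-wise, whereas a route layer concatenates along the channel dimension.

# It is a plain addition: [13,13,128] plus [13,13,128] gives [13,13,128].

# The from parameter of a [shortcut] block says how many layers back the other operand is; the block also specifies an activation.

# The actual operation only happens in the forward pass.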

elif module_def["type"] == "shortcut":

filters = output_filters[1:][int(module_def["from"])]

modules.add_module(f"shortcut_{module_i}", EmptyLayer())

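# The core yolo layer: the config file contains three yolo blocks, each different, as in the paper.

# Each yolo block must be given its own three anchor boxes.

elif module_def["type"] == "yolo":

# Indices of this layer's three anchors, i.e. the mask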

anchor_idxs = [int(x) for x in module_def["mask"].split(",")]

# Extract anchors

# Get the actual sizes of the anchor (prior) boxes

#anchors=[10, 13, 16, 30, 33, 23, 30, 61, 62, 45, 59, 119, 116, 90, 156, 198, 373, 326]

anchors = [int(x) for x in module_def["anchors"].split(",")]

#anchors=[(10, 13), (16, 30), (33, 23), (30, 61), (62, 45), (59, 119), (116, 90), (156, 198), (373, 326)]

anchors = [(anchors[i], anchors[i + 1]) for i in range(0, len(anchors), 2)]

# anchors=[(116, 90), (156, 198), (373, 326)] for the first yolo block, whose mask (anchor_idxs) is 6,7,8

anchors = [anchors[i] for i in anchor_idxs]

# Number of classes in the dataset; COCO has 80, so num_classes is 80

num_classes = int(module_def["classes"])

# Input image size, 416

img_size = int(hyperparams["height"])

# Define detection layer

# Build the current yolo layer

yolo_layer = YOLOLayer(anchors, num_classes, img_size)

modules.add_module(f"yolo_{module_i}", yolo_layer)

# Register module list and number of output filters

module_list.append(modules)

output_filters.append(filters)

return hyperparams, module_list
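The channel bookkeeping in `create_modules` is what separates route from shortcut: route sums the channel counts of the layers it concatenates, while shortcut keeps the channel count unchanged. A small sketch in plain Python makes this visible. The helper `out_channels` and the toy block list are assumptions for illustration.

```python
def out_channels(module_def, output_filters):
    """Return the channel count a block produces, given the channels so far."""
    history = output_filters[1:]  # drop the input-image entry, as create_modules does
    kind = module_def["type"]
    if kind == "convolutional":
        return int(module_def["filters"])
    if kind == "route":
        # Concatenation: channel counts add up.
        return sum(history[int(i)] for i in module_def["layers"].split(","))
    if kind == "shortcut":
        # Element-wise addition: channel count is unchanged.
        return history[int(module_def["from"])]
    return output_filters[-1]  # upsample, maxpool, yolo keep the channel count


# Toy network: layer 1 halves the resolution, layer 2 restores it.
blocks = [
    {"type": "convolutional", "filters": "512", "stride": "1"},
    {"type": "convolutional", "filters": "256", "stride": "2"},
    {"type": "upsample", "stride": "2"},
    {"type": "route", "layers": "-1,0"},   # 256 + 512 channels
    {"type": "convolutional", "filters": "128", "stride": "1"},
    {"type": "convolutional", "filters": "128", "stride": "1"},
    {"type": "shortcut", "from": "-2"},    # 128 + 128 stays 128
]

output_filters = [3]  # the RGB input
for block in blocks:
    output_filters.append(out_channels(block, output_filters))
channels = output_filters[1:]
print(channels)
```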

class Upsample(nn.Module):

""" nn.Upsample is deprecated """

def __init__(self, scale_factor, mode="nearest"):

super(Upsample, self).__init__()

self.scale_factor = scale_factor

self.mode = mode

def forward(self, x):

x = F.interpolate(x, scale_factor=self.scale_factor, mode=self.mode)

return x

class EmptyLayer(nn.Module):

"""Placeholder for 'route' and 'shortcut' layers"""

def __init__(self):

super(EmptyLayer, self).__init__()

# The YOLO layer class

class YOLOLayer(nn.Module):

"""Detection layer"""

# Constructor

def __init__(self, anchors, num_classes, img_dim=416):

super(YOLOLayer, self).__init__()

self.anchors = anchors # anchor (prior) box sizes, e.g. [(116, 90), (156, 198), (373, 326)]

self.num_anchors = len(anchors) # 3

self.num_classes = num_classes # 80 classes

self.ignore_thres = 0.5 # IoU threshold above which an anchor is excluded from the no-object loss

self.mse_loss = nn.MSELoss() # MSE loss

self.bce_loss = nn.BCELoss() # BCE loss

self.obj_scale = 1

self.noobj_scale = 100

self.metrics = {}

self.img_dim = img_dim

self.grid_size = 0 # grid size

# Compute the grid offsets

def compute_grid_offsets(self, grid_size, cuda=True):

self.grid_size = grid_size

# Size of the current grid

g = self.grid_size

FloatTensor = torch.cuda.FloatTensor if cuda else torch.FloatTensor

self.stride = self.img_dim / self.grid_size

# Calculate offsets for each grid

# To get the actual position, take the coordinates of the grid cell that contains the center and add the predicted relative offset.

self.grid_x = torch.arange(g).repeat(g, 1).view([1, 1, g, g]).type(FloatTensor)

self.grid_y = torch.arange(g).repeat(g, 1).t().view([1, 1, g, g]).type(FloatTensor)

self.scaled_anchors = FloatTensor([(a_w / self.stride, a_h / self.stride) for a_w, a_h in self.anchors])

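# Width and height of the anchors in grid units. The anchors are divided by the stride: for the coarsest yolo layer the input has been downsampled five times with stride 2, and 2^5 = 32, so the anchors are divided by 32 (the other two layers use strides 16 and 8).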

self.anchor_w = self.scaled_anchors[:, 0:1].view((1, self.num_anchors, 1, 1))

self.anchor_h = self.scaled_anchors[:, 1:2].view((1, self.num_anchors, 1, 1))

def forward(self, x, targets=None, img_dim=None):

# Tensors for cuda support

print (x.shape) # prints torch.Size([1, 255, 16, 16]): the batch size is set to 1, 255 = 3 * (80 + 5) is the number of channels, and the current feature map is 16*16.

FloatTensor = torch.cuda.FloatTensor if x.is_cuda else torch.FloatTensor

LongTensor = torch.cuda.LongTensor if x.is_cuda else torch.LongTensor

ByteTensor = torch.cuda.ByteTensor if x.is_cuda else torch.ByteTensor

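self.img_dim = img_dim # during training an image size is picked at random so the network adapts to different resolutions; img_dim only has to be divisible by 32. The network has no fully connected layers, so the input size is not fixed.

num_samples = x.size(0) # batch size: how many images are processed at once

grid_size = x.size(2) # size of the current grid; it follows from the input image size divided by this layer's stride (32 for the coarsest layer)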

# prediction holds the final predictions:

prediction = (

# .view() works like .reshape() in numpy: it changes the shape of the data.

x.view(num_samples, self.num_anchors, self.num_classes + 5, grid_size, grid_size)

.permute(0, 1, 3, 4, 2)

.contiguous()

# .permute reorders the dimensions.

)

print (prediction.shape) # current shape: torch.Size([1, 3, 16, 16, 85]); the 85 predicted values are 80 class scores + [x, y, w, h] + one objectness confidence (foreground vs. background).

# Get outputs

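# [x, y, w, h, confidence, 80 class scores]

x = torch.sigmoid(prediction[..., 0]) # Center x

y = torch.sigmoid(prediction[..., 1]) # Center y

w = prediction[..., 2] # Width

h = prediction[..., 3] # Height

pred_conf = torch.sigmoid(prediction[..., 4]) # Conf: confidence, i.e. how likely the box contains an object

pred_cls = torch.sigmoid(prediction[..., 5:]) # Cls pred: score of each class

# Note that only x and y (plus the confidence and class scores) pass through a sigmoid and lie in the 0-1 range. x and y are offsets relative to their grid cell; w and h are raw log-scale values relative to the anchor.

# These relative values have to be converted back to actual positions.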

# If grid size does not match current we compute new offsets

if grid_size != self.grid_size:

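# compute_grid_offsets builds the grid-cell coordinates (grid_x, grid_y) and the scaled anchors for the current grid size.

# The absolute position of a center on the feature map is the index of its grid cell plus the predicted offset inside that cell.

self.compute_grid_offsets(grid_size, cuda=x.is_cuda) # e.g. an offset of (0.5, 0.5) in cell (11, 11) becomes (11.5, 11.5)

# Add offset and scale with anchors

# Actual positions on the feature map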

pred_boxes = FloatTensor(prediction[..., :4].shape)

pred_boxes[..., 0] = x.data + self.grid_x

pred_boxes[..., 1] = y.data + self.grid_y

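# w and h are predicted in log space, so they are recovered with exp. exp(w.data) and exp(h.data) are scale factors relative to the anchor; multiplying by anchor_w and anchor_h (the anchor size in feature-map units) gives the predicted box size on the feature map.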

pred_boxes[..., 2] = torch.exp(w.data) * self.anchor_w

pred_boxes[..., 3] = torch.exp(h.data) * self.anchor_h

output = torch.cat(

(

pred_boxes.view(num_samples, -1, 4) * self.stride, # map back to the input image: multiply the feature-map boxes by the stride (32 for the coarsest layer).

pred_conf.view(num_samples, -1, 1),

pred_cls.view(num_samples, -1, self.num_classes),

),

-1,

)

# The actual loss computation starts here:

if targets is None:

return output, 0

else:

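# Convert the targets so that labels and predictions share the same format.

iou_scores, class_mask, obj_mask, noobj_mask, tx, ty, tw, th, tcls, tconf = build_targets(

# build_targets first processes the labels, converting every label that is used into the relative format.

# It is a helper function.

pred_boxes=pred_boxes, # predicted x, y, w, h (in feature-map grid units)

pred_cls=pred_cls, # predicted classes

target=targets, # ground-truth labels (positions relative to the whole image)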

anchors=self.scaled_anchors,

ignore_thres=self.ignore_thres,

)

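# iou_scores: IoU score between each ground-truth box and the prediction at its best-matching anchor; class_mask: 1 where the class is predicted correctly; obj_mask: 1 at the best anchor of the cell that contains a target; noobj_mask: 0 where obj_mask is 1 and also where the IoU exceeds the threshold, 1 everywhere else; tx, ty, tw, th: the x, y, w, h regression targets on the current feature map, i.e. the values to fit; tconf: target confidence

# Loss : Mask outputs to ignore non-existing objects (except with conf. loss)

# The box losses are computed only where a target exists

loss_x = self.mse_loss(x[obj_mask], tx[obj_mask]) # only positions with a target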

loss_y = self.mse_loss(y[obj_mask], ty[obj_mask])

loss_w = self.mse_loss(w[obj_mask], tw[obj_mask])

loss_h = self.mse_loss(h[obj_mask], th[obj_mask])

# The foreground and background losses are each multiplied by their weight and summed; the result is the confidence loss.

loss_conf_obj = self.bce_loss(pred_conf[obj_mask], tconf[obj_mask])

loss_conf_noobj = self.bce_loss(pred_conf[noobj_mask], tconf[noobj_mask])

loss_conf = self.obj_scale * loss_conf_obj + self.noobj_scale * loss_conf_noobj # with an object the confidence should approach 1, without one it should approach 0

loss_cls = self.bce_loss(pred_cls[obj_mask], tcls[obj_mask]) # classification loss

total_loss = loss_x + loss_y + loss_w + loss_h + loss_conf + loss_cls # total loss

# Metrics

cls_acc = 100 * class_mask[obj_mask].mean()

conf_obj = pred_conf[obj_mask].mean()

conf_noobj = pred_conf[noobj_mask].mean()

conf50 = (pred_conf > 0.5).float()

iou50 = (iou_scores > 0.5).float()

iou75 = (iou_scores > 0.75).float()

detected_mask = conf50 * class_mask * tconf

precision = torch.sum(iou50 * detected_mask) / (conf50.sum() + 1e-16)

recall50 = torch.sum(iou50 * detected_mask) / (obj_mask.sum() + 1e-16)

recall75 = torch.sum(iou75 * detected_mask) / (obj_mask.sum() + 1e-16)

self.metrics = {

"loss": to_cpu(total_loss).item(),

"x": to_cpu(loss_x).item(),

"y": to_cpu(loss_y).item(),

"w": to_cpu(loss_w).item(),

"h": to_cpu(loss_h).item(),

"conf": to_cpu(loss_conf).item(),

"cls": to_cpu(loss_cls).item(),

"cls_acc": to_cpu(cls_acc).item(),

"recall50": to_cpu(recall50).item(),

"recall75": to_cpu(recall75).item(),

"precision": to_cpu(precision).item(),

"conf_obj": to_cpu(conf_obj).item(),

"conf_noobj": to_cpu(conf_noobj).item(),

"grid_size": grid_size,

}

return output, total_loss
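The decoding step in `forward` can be checked by hand for a single cell. The sketch below applies the same formulas in plain Python: sigmoid offsets added to the cell index, exponentials scaled by the anchor, and a final multiplication by the stride. The function `decode_box` and the chosen numbers are assumptions for illustration.

```python
import math


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def decode_box(raw, cell_x, cell_y, anchor, img_dim, grid_size):
    """Decode raw (tx, ty, tw, th) for one cell and one anchor into input-image pixels."""
    tx, ty, tw, th = raw
    stride = img_dim / grid_size
    # Anchors are given in pixels; convert them to grid units first.
    anchor_w, anchor_h = anchor[0] / stride, anchor[1] / stride
    bx = sigmoid(tx) + cell_x          # center x on the feature map
    by = sigmoid(ty) + cell_y          # center y on the feature map
    bw = math.exp(tw) * anchor_w       # width on the feature map
    bh = math.exp(th) * anchor_h       # height on the feature map
    # Multiply by the stride to return to input-image coordinates.
    return tuple(v * stride for v in (bx, by, bw, bh))


# All-zero raw outputs in cell (6, 6) of a 13 x 13 grid, anchor (116, 90), 416-pixel input.
box = decode_box((0.0, 0.0, 0.0, 0.0), cell_x=6, cell_y=6,
                 anchor=(116, 90), img_dim=416, grid_size=13)
print(box)
```

With zero raw outputs the box sits at the center of its cell and has exactly the size of the anchor.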

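# darknet53: define a Darknet class

class Darknet(nn.Module):

"""YOLOv3 object detection model"""

# The constructor specifies which modules the network uses.

# It reads the config file and defines the structure layer by layer, in the order the file is written.

# It first calls the initializer of the parent class.

def __init__(self, config_path, img_size=416):

super(Darknet, self).__init__()

# 1. Read in the config file.

self.module_defs = parse_model_config(config_path)

# 2. Create the model: create_modules builds every layer from the config.

self.hyperparams, self.module_list = create_modules(self.module_defs)

# The three yolo layers built from the config file are collected here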

self.yolo_layers = [layer[0] for layer in self.module_list if hasattr(layer[0], "metrics")]

self.img_size = img_size

self.seen = 0

self.header_info = np.array([0, 0, 0, self.seen, 0], dtype=np.int32)

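# Forward pass

def forward(self, x, targets=None):

img_dim = x.shape[2]

loss = 0 # the loss starts at 0

layer_outputs, yolo_outputs = [], [] # there are three yolo layers (the first predicts large objects, the second medium, the last small), so yolo_outputs ends up with three elements; layer_outputs stores the output of every layer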

for i, (module_def, module) in enumerate(zip(self.module_defs, self.module_list)):

if module_def["type"] in ["convolutional", "upsample", "maxpool"]:

x = module(x)

elif module_def["type"] == "route": # concatenation: join the listed earlier outputs along the channel dimension; the list comprehension allows more than two inputs

x = torch.cat([layer_outputs[int(layer_i)] for layer_i in module_def["layers"].split(",")], 1)

elif module_def["type"] == "shortcut": # the ResNet-style skip connection: an addition

layer_i = int(module_def["from"])

x = layer_outputs[-1] + layer_outputs[layer_i] # add the previous layer's output to the output of layer layer_i

elif module_def["type"] == "yolo":

x, layer_loss = module[0](x, targets, img_dim)

loss += layer_loss

yolo_outputs.append(x)

layer_outputs.append(x) # append the output of every layer (yolo layers included) to layer_outputs

yolo_outputs = to_cpu(torch.cat(yolo_outputs, 1))

return yolo_outputs if targets is None else (loss, yolo_outputs)

def load_darknet_weights(self, weights_path):

"""Parses and loads the weights stored in 'weights_path'"""

# Open the weights file

with open(weights_path, "rb") as f:

header = np.fromfile(f, dtype=np.int32, count=5) # First five are header values

self.header_info = header # Needed to write header when saving weights

self.seen = header[3] # number of images seen during training

weights = np.fromfile(f, dtype=np.float32) # The rest are weights

# Establish cutoff for loading backbone weights

cutoff = None

if "darknet53.conv.74" in weights_path:

cutoff = 75

ptr = 0

for i, (module_def, module) in enumerate(zip(self.module_defs, self.module_list)):

if i == cutoff:

break

if module_def["type"] == "convolutional":

conv_layer = module[0]

if module_def["batch_normalize"]:

# Load BN bias, weights, running mean and running variance

bn_layer = module[1]

num_b = bn_layer.bias.numel() # Number of biases

# Bias

bn_b = torch.from_numpy(weights[ptr : ptr + num_b]).view_as(bn_layer.bias)

bn_layer.bias.data.copy_(bn_b)

ptr += num_b

# Weight

bn_w = torch.from_numpy(weights[ptr : ptr + num_b]).view_as(bn_layer.weight)

bn_layer.weight.data.copy_(bn_w)

ptr += num_b

# Running Mean

bn_rm = torch.from_numpy(weights[ptr : ptr + num_b]).view_as(bn_layer.running_mean)

bn_layer.running_mean.data.copy_(bn_rm)

ptr += num_b

# Running Var

bn_rv = torch.from_numpy(weights[ptr : ptr + num_b]).view_as(bn_layer.running_var)

bn_layer.running_var.data.copy_(bn_rv)

ptr += num_b

else:

# Load conv. bias

num_b = conv_layer.bias.numel()

conv_b = torch.from_numpy(weights[ptr : ptr + num_b]).view_as(conv_layer.bias)

conv_layer.bias.data.copy_(conv_b)

ptr += num_b

# Load conv. weights

num_w = conv_layer.weight.numel()

conv_w = torch.from_numpy(weights[ptr : ptr + num_w]).view_as(conv_layer.weight)

conv_layer.weight.data.copy_(conv_w)

ptr += num_w

def save_darknet_weights(self, path, cutoff=-1):

"""

@:param path - path of the new weights file

@:param cutoff - save layers between 0 and cutoff (cutoff = -1 -> all are saved)

"""

fp = open(path, "wb")

self.header_info[3] = self.seen

self.header_info.tofile(fp)

# Iterate through layers

for i, (module_def, module) in enumerate(zip(self.module_defs[:cutoff], self.module_list[:cutoff])):

if module_def["type"] == "convolutional":

conv_layer = module[0]

# If batch norm, save bn first

if module_def["batch_normalize"]:

bn_layer = module[1]

bn_layer.bias.data.cpu().numpy().tofile(fp)

bn_layer.weight.data.cpu().numpy().tofile(fp)

bn_layer.running_mean.data.cpu().numpy().tofile(fp)

bn_layer.running_var.data.cpu().numpy().tofile(fp)

# Save conv bias

else:

conv_layer.bias.data.cpu().numpy().tofile(fp)

# Save conv weights

conv_layer.weight.data.cpu().numpy().tofile(fp)

fp.close()

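Computing the loss relies on some helper functions, whose main job is to bring the labels and the predictions into the same format so that the loss is easy to compute.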

utils/utils.py:

from __future__ import division

import math

import time

import tqdm

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.autograd import Variable

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.patches as patches

def to_cpu(tensor):

return tensor.detach().cpu()

def load_classes(path):

"""

Loads class labels at 'path'

"""

fp = open(path, "r")

names = fp.read().split("\n")[:-1]

return names

def weights_init_normal(m):

classname = m.__class__.__name__

if classname.find("Conv") != -1:

torch.nn.init.normal_(m.weight.data, 0.0, 0.02)

elif classname.find("BatchNorm2d") != -1:

torch.nn.init.normal_(m.weight.data, 1.0, 0.02)

torch.nn.init.constant_(m.bias.data, 0.0)

def rescale_boxes(boxes, current_dim, original_shape):

""" Rescales bounding boxes to the original shape """

orig_h, orig_w = original_shape

# The amount of padding that was added

pad_x = max(orig_h - orig_w, 0) * (current_dim / max(original_shape))

pad_y = max(orig_w - orig_h, 0) * (current_dim / max(original_shape))

# Image height and width after padding is removed

unpad_h = current_dim - pad_y

unpad_w = current_dim - pad_x

# Rescale bounding boxes to dimension of original image

boxes[:, 0] = ((boxes[:, 0] - pad_x // 2) / unpad_w) * orig_w

boxes[:, 1] = ((boxes[:, 1] - pad_y // 2) / unpad_h) * orig_h

boxes[:, 2] = ((boxes[:, 2] - pad_x // 2) / unpad_w) * orig_w

boxes[:, 3] = ((boxes[:, 3] - pad_y // 2) / unpad_h) * orig_h

return boxes

def xywh2xyxy(x):

y = x.new(x.shape)

y[..., 0] = x[..., 0] - x[..., 2] / 2

y[..., 1] = x[..., 1] - x[..., 3] / 2

y[..., 2] = x[..., 0] + x[..., 2] / 2

y[..., 3] = x[..., 1] + x[..., 3] / 2

return y

def ap_per_class(tp, conf, pred_cls, target_cls):

""" Compute the average precision, given the recall and precision curves.

Source: https://github.com/rafaelpadilla/Object-Detection-Metrics.

# Arguments

tp: True positives (list).

conf: Objectness value from 0-1 (list).

pred_cls: Predicted object classes (list).

target_cls: True object classes (list).

# Returns

The average precision as computed in py-faster-rcnn.

"""

# Sort by objectness

i = np.argsort(-conf)

tp, conf, pred_cls = tp[i], conf[i], pred_cls[i]

# Find unique classes

unique_classes = np.unique(target_cls)

# Create Precision-Recall curve and compute AP for each class

ap, p, r = [], [], []

for c in tqdm.tqdm(unique_classes, desc="Computing AP"):

i = pred_cls == c

n_gt = (target_cls == c).sum() # Number of ground truth objects

n_p = i.sum() # Number of predicted objects

if n_p == 0 and n_gt == 0:

continue

elif n_p == 0 or n_gt == 0:

ap.append(0)

r.append(0)

p.append(0)

else:

# Accumulate FPs and TPs

fpc = (1 - tp[i]).cumsum()

tpc = (tp[i]).cumsum()

# Recall

recall_curve = tpc / (n_gt + 1e-16)

r.append(recall_curve[-1])

# Precision

precision_curve = tpc / (tpc + fpc)

p.append(precision_curve[-1])

# AP from recall-precision curve

ap.append(compute_ap(recall_curve, precision_curve))

# Compute F1 score (harmonic mean of precision and recall)

p, r, ap = np.array(p), np.array(r), np.array(ap)

f1 = 2 * p * r / (p + r + 1e-16)

return p, r, ap, f1, unique_classes.astype("int32")

def compute_ap(recall, precision):

""" Compute the average precision, given the recall and precision curves.

Code originally from https://github.com/rbgirshick/py-faster-rcnn.

# Arguments

recall: The recall curve (list).

precision: The precision curve (list).

# Returns

The average precision as computed in py-faster-rcnn.

"""

# correct AP calculation

# first append sentinel values at the end

mrec = np.concatenate(([0.0], recall, [1.0]))

mpre = np.concatenate(([0.0], precision, [0.0]))

# compute the precision envelope

for i in range(mpre.size - 1, 0, -1):

mpre[i - 1] = np.maximum(mpre[i - 1], mpre[i])

# to calculate area under PR curve, look for points

# where X axis (recall) changes value

i = np.where(mrec[1:] != mrec[:-1])[0]

# and sum (\Delta recall) * prec

ap = np.sum((mrec[i + 1] - mrec[i]) * mpre[i + 1])

return ap
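A short worked example shows what `compute_ap` does with the precision envelope. The sketch below reimplements the same steps with plain lists instead of NumPy arrays; the function name `average_precision` and the three-detection example are assumptions for illustration.

```python
def average_precision(tp_flags, n_gt):
    """AP for one class from detections sorted by confidence (1 = true positive)."""
    tpc = fpc = 0
    recall, precision = [], []
    for flag in tp_flags:
        tpc += flag
        fpc += 1 - flag
        recall.append(tpc / n_gt)
        precision.append(tpc / (tpc + fpc))
    # Sentinel values at both ends, as in compute_ap.
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    # Precision envelope: make precision non-increasing from right to left.
    for i in range(len(mpre) - 1, 0, -1):
        mpre[i - 1] = max(mpre[i - 1], mpre[i])
    # Sum rectangle areas wherever recall changes.
    return sum(
        (mrec[i + 1] - mrec[i]) * mpre[i + 1]
        for i in range(len(mrec) - 1)
        if mrec[i + 1] != mrec[i]
    )


# Three detections (hit, miss, hit) against two ground-truth objects.
ap = average_precision([1, 0, 1], n_gt=2)
print(ap)
```

Here the first half of the recall range is covered at precision 1 and the second half at precision 2/3, which gives an AP of 5/6.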

def get_batch_statistics(outputs, targets, iou_threshold):

""" Compute true positives, predicted scores and predicted labels per sample """

batch_metrics = []

for sample_i in range(len(outputs)):

if outputs[sample_i] is None:

continue

output = outputs[sample_i]

pred_boxes = output[:, :4]

pred_scores = output[:, 4]

pred_labels = output[:, -1]

true_positives = np.zeros(pred_boxes.shape[0])

annotations = targets[targets[:, 0] == sample_i][:, 1:]

target_labels = annotations[:, 0] if len(annotations) else []

if len(annotations):

detected_boxes = []

target_boxes = annotations[:, 1:]

for pred_i, (pred_box, pred_label) in enumerate(zip(pred_boxes, pred_labels)):

# If targets are found break

if len(detected_boxes) == len(annotations):

break

# Ignore if label is not one of the target labels

if pred_label not in target_labels:

continue

iou, box_index = bbox_iou(pred_box.unsqueeze(0), target_boxes).max(0)

if iou >= iou_threshold and box_index not in detected_boxes:

true_positives[pred_i] = 1

detected_boxes += [box_index]

batch_metrics.append([true_positives, pred_scores, pred_labels])

return batch_metrics

def bbox_wh_iou(wh1, wh2):

wh2 = wh2.t()

w1, h1 = wh1[0], wh1[1]

w2, h2 = wh2[0], wh2[1]

inter_area = torch.min(w1, w2) * torch.min(h1, h2)

union_area = (w1 * h1 + 1e-16) + w2 * h2 - inter_area

return inter_area / union_area
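`bbox_wh_iou` compares only widths and heights, as if both boxes shared the same corner, which is what is needed to pick the best anchor for a ground-truth box. The following plain-Python sketch uses the first yolo layer's anchors at stride 32; the ground-truth size is a made-up value for illustration.

```python
def wh_iou(wh1, wh2):
    """IoU of two boxes aligned at the same corner, using only width and height."""
    w1, h1 = wh1
    w2, h2 = wh2
    inter = min(w1, w2) * min(h1, h2)
    union = w1 * h1 + w2 * h2 - inter
    return inter / union


ANCHORS = [(116, 90), (156, 198), (373, 326)]  # in pixels
STRIDE = 32

# Anchors in grid units, like scaled_anchors in the YOLO layer.
scaled = [(w / STRIDE, h / STRIDE) for w, h in ANCHORS]

gt_wh = (4.0, 3.0)  # hypothetical ground-truth width and height in grid units
ious = [wh_iou(anchor, gt_wh) for anchor in scaled]
best = max(range(len(ious)), key=ious.__getitem__)
print(ious, best)
```

The anchor with the highest IoU is the one made responsible for predicting that ground-truth box.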

def bbox_iou(box1, box2, x1y1x2y2=True):

"""

Returns the IoU of two bounding boxes

"""

if not x1y1x2y2:

# Transform from center and width to exact coordinates

b1_x1, b1_x2 = box1[:, 0] - box1[:, 2] / 2, box1[:, 0] + box1[:, 2] / 2

b1_y1, b1_y2 = box1[:, 1] - box1[:, 3] / 2, box1[:, 1] + box1[:, 3] / 2

b2_x1, b2_x2 = box2[:, 0] - box2[:, 2] / 2, box2[:, 0] + box2[:, 2] / 2

b2_y1, b2_y2 = box2[:, 1] - box2[:, 3] / 2, box2[:, 1] + box2[:, 3] / 2

else:

# Get the coordinates of bounding boxes

b1_x1, b1_y1, b1_x2, b1_y2 = box1[:, 0], box1[:, 1], box1[:, 2], box1[:, 3]

b2_x1, b2_y1, b2_x2, b2_y2 = box2[:, 0], box2[:, 1], box2[:, 2], box2[:, 3]

# get the coordinates of the intersection rectangle

inter_rect_x1 = torch.max(b1_x1, b2_x1)

inter_rect_y1 = torch.max(b1_y1, b2_y1)

inter_rect_x2 = torch.min(b1_x2, b2_x2)

inter_rect_y2 = torch.min(b1_y2, b2_y2)

# Intersection area

inter_area = torch.clamp(inter_rect_x2 - inter_rect_x1 + 1, min=0) * torch.clamp(

inter_rect_y2 - inter_rect_y1 + 1, min=0

)

# Union Area

b1_area = (b1_x2 - b1_x1 + 1) * (b1_y2 - b1_y1 + 1)

b2_area = (b2_x2 - b2_x1 + 1) * (b2_y2 - b2_y1 + 1)

iou = inter_area / (b1_area + b2_area - inter_area + 1e-16)

return iou
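To sanity-check `bbox_iou`, here is the same computation for two corner-format boxes in plain Python. It keeps the `+ 1` pixel convention used in the listing; the function name `iou_xyxy` and the example boxes are assumptions for illustration.

```python
def iou_xyxy(a, b):
    """IoU of two (x1, y1, x2, y2) boxes with inclusive pixel coordinates."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]) + 1)
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]) + 1)
    inter = inter_w * inter_h
    area_a = (a[2] - a[0] + 1) * (a[3] - a[1] + 1)
    area_b = (b[2] - b[0] + 1) * (b[3] - b[1] + 1)
    return inter / (area_a + area_b - inter)


# Two 10 x 10 pixel boxes that overlap in a 5 x 5 region.
iou = iou_xyxy((0, 0, 9, 9), (5, 5, 14, 14))
print(iou)
```

The intersection is 25 pixels and the union is 100 + 100 - 25 = 175, so the IoU is 25/175.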

def non_max_suppression(prediction, conf_thres=0.5, nms_thres=0.4):

"""

Removes detections with lower object confidence score than 'conf_thres' and performs

Non-Maximum Suppression to further filter detections.

Returns detections with shape:

(x1, y1, x2, y2, object_conf, class_score, class_pred)

"""

# From (center x, center y, width, height) to (x1, y1, x2, y2)

prediction[..., :4] = xywh2xyxy(prediction[..., :4])

output = [None for _ in range(len(prediction))]

for image_i, image_pred in enumerate(prediction):

# Filter out confidence scores below threshold

image_pred = image_pred[image_pred[:, 4] >= conf_thres]

# If none are remaining => process next image

if not image_pred.size(0):

continue

# Object confidence times class confidence

score = image_pred[:, 4] * image_pred[:, 5:].max(1)[0]

# Sort by it

image_pred = image_pred[(-score).argsort()]

class_confs, class_preds = image_pred[:, 5:].max(1, keepdim=True)

detections = torch.cat((image_pred[:, :5], class_confs.float(), class_preds.float()), 1)

# Perform non-maximum suppression

keep_boxes = []

while detections.size(0):

large_overlap = bbox_iou(detections[0, :4].unsqueeze(0), detections[:, :4]) > nms_thres

label_match = detections[0, -1] == detections[:, -1]

# Indices of boxes with lower confidence scores, large IOUs and matching labels

invalid = large_overlap & label_match

weights = detections[invalid, 4:5]

# Merge overlapping bboxes by order of confidence

detections[0, :4] = (weights * detections[invalid, :4]).sum(0) / weights.sum()

keep_boxes += [detections[0]]

detections = detections[~invalid]

if keep_boxes:

output[image_i] = torch.stack(keep_boxes)

return output
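Note that the loop above does not simply discard overlapping boxes: boxes of the same class that overlap the top-scoring box are merged into it, weighted by their object confidence. The following is a rough plain-Python sketch of that idea for a single image. The names `iou` and `merge_nms` and the sample detections are hypothetical and only serve as an illustration.

```python
def iou(a, b):
    """IoU of two boxes whose first four entries are (x1, y1, x2, y2)."""
    iw = max(min(a[2], b[2]) - max(a[0], b[0]) + 1, 0)
    ih = max(min(a[3], b[3]) - max(a[1], b[1]) + 1, 0)
    inter = iw * ih
    area_a = (a[2] - a[0] + 1) * (a[3] - a[1] + 1)
    area_b = (b[2] - b[0] + 1) * (b[3] - b[1] + 1)
    return inter / (area_a + area_b - inter + 1e-16)


def merge_nms(dets, nms_thres=0.4):
    """dets: list of [x1, y1, x2, y2, obj_conf, cls_conf, cls_id] for one image."""
    # Sort by object confidence times class confidence, best first
    dets = sorted(dets, key=lambda d: d[4] * d[5], reverse=True)
    kept = []
    while dets:
        top = dets[0]
        # Same class as the top box and large overlap with it (the top box itself matches)
        invalid = [d[6] == top[6] and iou(top, d) > nms_thres for d in dets]
        group = [d for d, bad in zip(dets, invalid) if bad]
        total = sum(d[4] for d in group)
        # Confidence-weighted average of the coordinates of the whole group
        merged = [sum(d[4] * d[k] for d in group) / total for k in range(4)] + top[4:]
        kept.append(merged)
        dets = [d for d, bad in zip(dets, invalid) if not bad]
    return kept


detections = [
    [10, 10, 50, 50, 0.9, 0.8, 0],
    [12, 12, 52, 52, 0.6, 0.9, 0],      # overlaps the first box, same class: merged
    [200, 200, 240, 240, 0.7, 0.9, 0],  # same class but far away: kept
    [11, 11, 51, 51, 0.8, 0.5, 1],      # overlaps but different class: kept
]
for det in merge_nms(detections):
    print(det)
```

In this example the first two boxes collapse into one box whose corners lie between the two originals, closer to the more confident one.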

def build_targets(pred_boxes, pred_cls, target, anchors, ignore_thres):

ByteTensor = torch.cuda.ByteTensor if pred_boxes.is_cuda else torch.ByteTensor

FloatTensor = torch.cuda.FloatTensor if pred_boxes.is_cuda else torch.FloatTensor

# Convert all target labels to positions relative to the grid

nB = pred_boxes.size(0) # batch size (4 in this run)

nA = pred_boxes.size(1) # number of anchors per grid cell

nC = pred_cls.size(-1) # number of classes

nG = pred_boxes.size(2) # grid size

# Output tensors

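# Loss for positions that contain an object (foreground):

# wherever an object is present, the position is marked 1.

# Everything starts at 0; the positions given by the target labels are set to 1 further below.

obj_mask = ByteTensor(nB, nA, nG, nG).fill_(0) # obj: 1 where the anchor contains an object, 0 by default (foreground)

# Loss for positions without an object (background):

# positions without an object are marked 1; any position with an object is set to 0.

noobj_mask = ByteTensor(nB, nA, nG, nG).fill_(1) # noobj: 1 where the anchor contains no object, 1 by default (background)

# Classification: 1 if correct, 0 if wrong.

class_mask = FloatTensor(nB, nA, nG, nG).fill_(0) # class mask: 1 where the predicted class is correct, 0 by default

# IoU between the ground-truth box and the predicted box.

iou_scores = FloatTensor(nB, nA, nG, nG).fill_(0) # IoU score between predicted and ground-truth boxes

# Position of the ground-truth box relative to its grid cell. Filled with 0 first; the real values are assigned from the labels below.

tx = FloatTensor(nB, nA, nG, nG).fill_(0) # ground-truth box position relative to the grid cell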

ty = FloatTensor(nB, nA, nG, nG).fill_(0)

tw = FloatTensor(nB, nA, nG, nG).fill_(0)

th = FloatTensor(nB, nA, nG, nG).fill_(0)

# One extra dimension of size nC says which class the box belongs to; it is filled with 0 first and the true class is set to 1 below.

tcls = FloatTensor(nB, nA, nG, nG, nC).fill_(0)

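# Convert the target format

# Convert to position relative to box

# The target coordinates are normalized to the original image. To get the position on the feature map we scale them proportionally, i.e. multiply by the current grid size.

# We also need each target's position relative to the grid cell it falls in.

# Each target row is [batch index, class, x, y, w, h], so columns 2:6 are x, y, w, h.

# After the multiplication we have the ground-truth box in feature-map coordinates.

target_boxes = target[:, 2:6] * nG # xywh in target are in the range 0-1; this gives xywh at the current grid size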

gxy = target_boxes[:, :2]

gwh = target_boxes[:, 2:]

# Get anchors with best iou

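# Each target has 3 candidate anchor boxes, so we pick the one that fits best. An image may contain several targets, so this is done for every target.

# Loop over the three anchors and compute the IoU between each anchor and the ground-truth gwh

ious = torch.stack([bbox_wh_iou(anchor, gwh) for anchor in anchors]) # IoU of every anchor shape with every labeled box

print(ious.shape) # debug output: (number of anchors, number of targets)

# Take the largest IoU for each target and the index of the best-matching anchor.

best_ious, best_n = ious.max(0) # best score, and which anchor shape is most similar to the target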

# Separate target values

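# b is the batch index each box belongs to; target_labels is the class id of each box (0 to 79 for the 80 COCO classes).

b, target_labels = target[:, :2].long().t() # batch index and class of each ground-truth box

# Split gxy into x and y; they are transformed further below

gx, gy = gxy.t()

# Split wh in the same way

gw, gh = gwh.t()

# Find the grid cell the center falls into, identified by the coordinates of its top-left corner.

gi, gj = gxy.long().t() # cell indices, rounded down

# Start filling the tensors

# Set masks

obj_mask[b, best_n, gj, gi] = 1 # set to 1 where an object is actually present

noobj_mask[b, best_n, gj, gi] = 0 # and the opposite here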

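# Anchors whose IoU exceeds the threshold must not be treated as background either, so they are removed from the no-object mask

# Set noobj mask to zero where iou exceeds ignore threshold

for i, anchor_ious in enumerate(ious.t()): # anchors above the IoU threshold are not penalized as background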

noobj_mask[b[i], anchor_ious > ignore_thres, gj[i], gi[i]] = 0

# Coordinates

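# Subtract the floored value (the top-left corner of the matching cell) from the actual value to get the offset of the center relative to that corner.

tx[b, best_n, gj, gi] = gx - gx.floor() # offset of the ground-truth center within its grid cell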

ty[b, best_n, gj, gi] = gy - gy.floor()

# Width and height

# Width and height are encoded relative to the matched anchor using a log; decoding the prediction earlier used the inverse, .exp.

tw[b, best_n, gj, gi] = torch.log(gw / anchors[best_n][:, 0] + 1e-16)

th[b, best_n, gj, gi] = torch.log(gh / anchors[best_n][:, 1] + 1e-16)

# One-hot encoding of label

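# Ground-truth class: set a 1 at the index of the true class.

tcls[b, best_n, gj, gi, target_labels] = 1 # convert the ground-truth label to one-hot form

# Compute label correctness and iou at best anchor (positions where the predicted class equals the true class)

# Whether the prediction matches the ground truth, i.e. right or wrong.

class_mask[b, best_n, gj, gi] = (pred_cls[b, best_n, gj, gi].argmax(-1) == target_labels).float()

# Compute the IoU between the ground-truth box and the predicted box.

iou_scores[b, best_n, gj, gi] = bbox_iou(pred_boxes[b, best_n, gj, gi], target_boxes, x1y1x2y2=False) # IoU between each ground-truth box and its matched predicted box

# Confidence target of the ground-truth boxes

tconf = obj_mask.float() # confidence target: 1 wherever an object is assigned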

return iou_scores, class_mask, obj_mask, noobj_mask, tx, ty, tw, th, tcls, tconf
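To see what `build_targets` stores for one label, the encoding can be worked through by hand. The sketch below does this for a single box in plain Python. The helper names `wh_iou` and `encode_target`, the sample label and the anchor sizes (given in grid units for a 13 x 13 grid) are illustrative assumptions, and `wh_iou` is only my reading of what `bbox_wh_iou` computes: the IoU of two boxes aligned at a common corner.

```python
import math


def wh_iou(anchor, box):
    """IoU of two (w, h) shapes aligned at a common corner (assumed behaviour of bbox_wh_iou)."""
    inter = min(anchor[0], box[0]) * min(anchor[1], box[1])
    union = anchor[0] * anchor[1] + box[0] * box[1] - inter
    return inter / (union + 1e-16)


def encode_target(x, y, w, h, anchors, grid_size):
    """Encode one normalized (x, y, w, h) label the way build_targets does."""
    # Scale from the 0-1 range to grid units
    gx, gy = x * grid_size, y * grid_size
    gw, gh = w * grid_size, h * grid_size
    # Pick the anchor whose shape matches the box best
    ious = [wh_iou(a, (gw, gh)) for a in anchors]
    best_n = max(range(len(anchors)), key=lambda n: ious[n])
    # Cell that contains the center, and the offset inside that cell
    gi, gj = int(gx), int(gy)
    tx, ty = gx - math.floor(gx), gy - math.floor(gy)
    # Log of the size ratio relative to the chosen anchor
    tw = math.log(gw / anchors[best_n][0] + 1e-16)
    th = math.log(gh / anchors[best_n][1] + 1e-16)
    return {"best_n": best_n, "gi": gi, "gj": gj, "tx": tx, "ty": ty, "tw": tw, "th": th}


# Illustrative anchors in grid units (not read from the cfg file)
anchors = [(3.625, 2.8125), (4.875, 6.1875), (11.65625, 10.1875)]
print(encode_target(0.5, 0.3, 0.3, 0.5, anchors, grid_size=13))
```

For this label the center lands in cell (gi, gj) = (6, 3) with offsets of about 0.5 and 0.9, the second anchor fits best, and tw is log(0.8) because the box is 0.8 times as wide as that anchor.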

Note that the COCO dataset is very large, so training takes a long time.

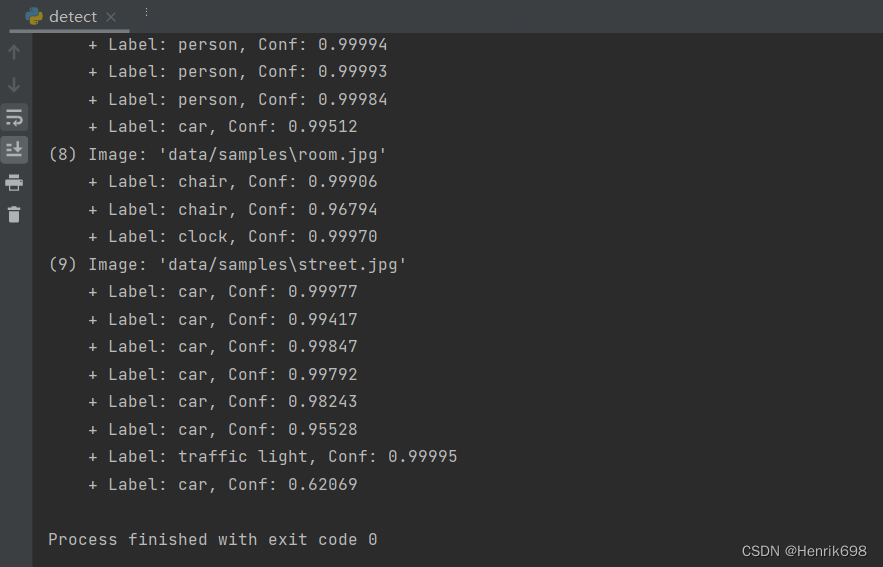

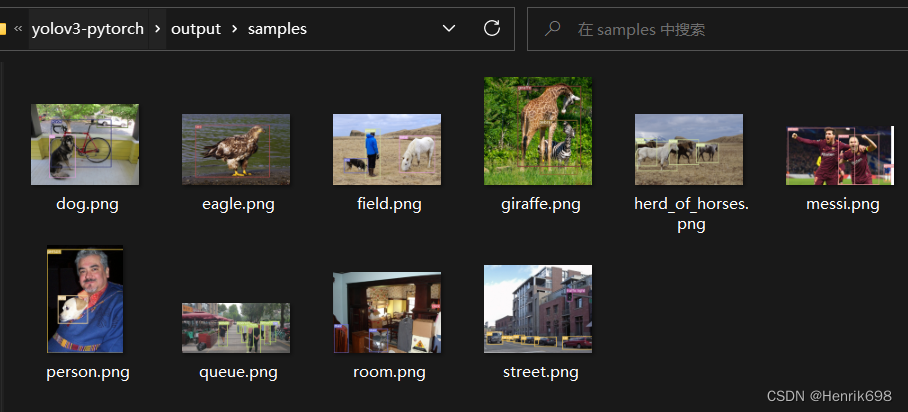

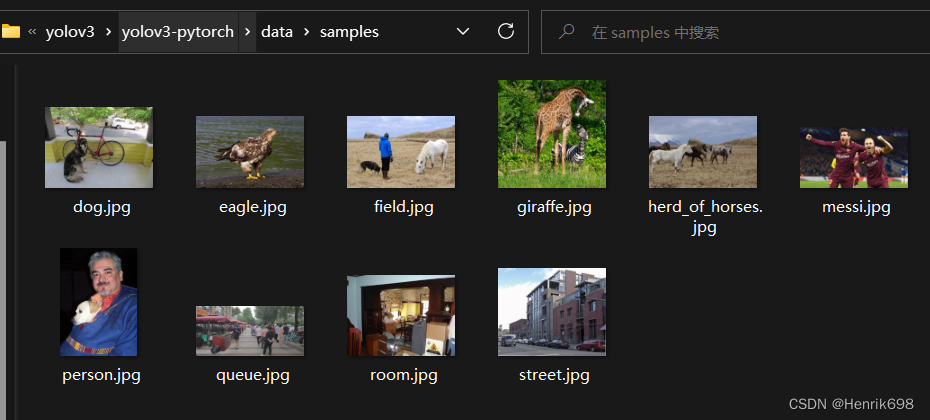

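3. Running detection (detect) with the trained model

First configure the arguments. The argument here is the folder that holds the input images: --image_folder data/samples/

This folder holds the images used for detection:

Detection creates an output folder that stores the results for the sample images: each image is saved with its predicted boxes drawn on it.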

detect.py:

from __future__ import division

from models import *

from utils.utils import *

from utils.datasets import *

import os

import sys

import time

import datetime

import argparse

from PIL import Image

import torch

from torch.utils.data import DataLoader

from torchvision import datasets

from torch.autograd import Variable

import matplotlib.pyplot as plt

import matplotlib.patches as patches

from matplotlib.ticker import NullLocator

if __name__ == "__main__":

parser = argparse.ArgumentParser()

parser.add_argument("--image_folder", type=str, default="data/samples", help="path to dataset")

parser.add_argument("--model_def", type=str, default="config/yolov3.cfg", help="path to model definition file")

parser.add_argument("--weights_path", type=str, default="weights/yolov3.weights", help="path to weights file")

parser.add_argument("--class_path", type=str, default="data/coco.names", help="path to class label file")

parser.add_argument("--conf_thres", type=float, default=0.8, help="object confidence threshold")

parser.add_argument("--nms_thres", type=float, default=0.4, help="iou threshold for non-maximum suppression")

parser.add_argument("--batch_size", type=int, default=1, help="size of the batches")

parser.add_argument("--n_cpu", type=int, default=0, help="number of cpu threads to use during batch generation")

parser.add_argument("--img_size", type=int, default=416, help="size of each image dimension")

parser.add_argument("--checkpoint_model", type=str, help="path to checkpoint model")

opt = parser.parse_args()

print(opt)

# Choose whether to run on the CPU or the GPU

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Create the folder for the generated results

os.makedirs("output", exist_ok=True)

# Set up model

# Build the network (training and testing use exactly the same network structure)

# This is the same model we used for training: the Darknet class in models.py

model = Darknet(opt.model_def, img_size=opt.img_size).to(device)

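# Load the trained weights.

# Note that weights/darknet53.conv.74 only holds the pretrained weights used to initialize training; it is not a fully trained detector.

# For detection we load fully trained weights: weights/yolov3.weights by default, or a checkpoint saved during training.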

if opt.weights_path.endswith(".weights"):

# Load darknet weights

model.load_darknet_weights(opt.weights_path)

else:

# Load checkpoint weights

model.load_state_dict(torch.load(opt.weights_path))

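# Switch the model to evaluation mode so that layers such as batch normalization use their inference behavior.

# Only forward passes are run here; gradient tracking is turned off separately by torch.no_grad() in the loop below.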

model.eval() # Set in evaluation mode

# Define a dataloader and pass in the configured argument --image_folder data/samples/

dataloader = DataLoader(

ImageFolder(opt.image_folder, img_size=opt.img_size),

batch_size=opt.batch_size,

shuffle=False,

num_workers=opt.n_cpu,

)

# Map each class id to the name of the corresponding object.

classes = load_classes(opt.class_path) # Extracts class labels from file

Tensor = torch.cuda.FloatTensor if torch.cuda.is_available() else torch.FloatTensor

imgs = [] # Stores image paths

img_detections = [] # Stores detections for each image index

print("\nPerforming object detection:")

prev_time = time.time()

for batch_i, (img_paths, input_imgs) in enumerate(dataloader):

# Configure input

# Convert the loaded data to a tensor of the right type

input_imgs = Variable(input_imgs.type(Tensor))

# Get detections

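# Run one forward pass

with torch.no_grad():

detections = model(input_imgs) # no target is passed here

# Apply non-maximum suppression (NMS) to the predictions: the network predicts many boxes, and we keep only the best ones.

# Boxes below conf_thres are dropped first, then overlapping boxes are suppressed.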

detections = non_max_suppression(detections, opt.conf_thres, opt.nms_thres)

# Log progress

current_time = time.time()

inference_time = datetime.timedelta(seconds=current_time - prev_time)

prev_time = current_time

print("\t+ Batch %d, Inference Time: %s" % (batch_i, inference_time))

# Save image and detections

imgs.extend(img_paths)

img_detections.extend(detections)

# Plotting

# Bounding-box colors

cmap = plt.get_cmap("tab20b")

colors = [cmap(i) for i in np.linspace(0, 1, 20)]

print("\nSaving images:")

# Iterate through images and save plot of detections

for img_i, (path, detections) in enumerate(zip(imgs, img_detections)):

print("(%d) Image: '%s'" % (img_i, path))

# Create plot

img = np.array(Image.open(path))

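# Create one figure and axes per image (an additional plt.figure() call would leave an extra figure open each time)

fig, ax = plt.subplots(1)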

ax.imshow(img)

# Draw bounding boxes and labels of detections

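# Map the detected boxes back to real image coordinates and draw them on the image.

# The predicted coordinates refer to the resized network input, so they are rescaled first.

# The boxes are then drawn on the original image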

if detections is not None:

# Rescale boxes to original image

detections = rescale_boxes(detections, opt.img_size, img.shape[:2])

unique_labels = detections[:, -1].cpu().unique()

n_cls_preds = len(unique_labels)

bbox_colors = random.sample(colors, n_cls_preds)

for x1, y1, x2, y2, conf, cls_conf, cls_pred in detections:

print("\t+ Label: %s, Conf: %.5f" % (classes[int(cls_pred)], cls_conf.item()))

box_w = x2 - x1

box_h = y2 - y1

color = bbox_colors[int(np.where(unique_labels == int(cls_pred))[0][0])]

# Create a Rectangle patch

bbox = patches.Rectangle((x1, y1), box_w, box_h, linewidth=2, edgecolor=color, facecolor="none")

# Add the bbox to the plot

ax.add_patch(bbox)

# Add label

plt.text(

x1,

y1,

s=classes[int(cls_pred)],

color="white",

verticalalignment="top",

bbox={"color": color, "pad": 0},

)

# Save generated image with detections

plt.axis("off")

plt.gca().xaxis.set_major_locator(NullLocator())

plt.gca().yaxis.set_major_locator(NullLocator())

filename = os.path.splitext(os.path.basename(path))[0]

plt.savefig(f"output/{filename}.png", bbox_inches="tight", pad_inches=0.0)

plt.close()
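The `rescale_boxes` call in detect.py maps boxes from the square network input back to the original image. Its definition is not shown in this part, so the following is only a sketch of the idea under a loudly stated assumption: the image was padded to a square (equal padding on both sides of the shorter dimension) and then resized to `img_size`. The function name `rescale_box` and the sample numbers are hypothetical.

```python
def rescale_box(box, current_dim, original_shape):
    """Map one (x1, y1, x2, y2) box from the square network input back to the original image.

    Assumes pad-to-square followed by a resize to current_dim (an assumption, see above).
    """
    orig_h, orig_w = original_shape
    scale = current_dim / max(orig_h, orig_w)
    # Total padding that was added in each direction, measured in network-input pixels
    pad_x = max(orig_h - orig_w, 0) * scale
    pad_y = max(orig_w - orig_h, 0) * scale
    # Size of the actual image content inside the square input
    unpad_w = current_dim - pad_x
    unpad_h = current_dim - pad_y
    x1, y1, x2, y2 = box
    return (
        (x1 - pad_x / 2) / unpad_w * orig_w,
        (y1 - pad_y / 2) / unpad_h * orig_h,
        (x2 - pad_x / 2) / unpad_w * orig_w,
        (y2 - pad_y / 2) / unpad_h * orig_h,
    )


# A 480 x 640 (height x width) image fed to the network at 416 x 416:
# the content covers 312 rows, with 52 rows of padding above and below.
print(rescale_box((104, 130, 312, 286), 416, (480, 640)))
```

With these numbers the box maps to approximately (160, 120, 480, 360) in the original image, i.e. the middle half of the picture in both directions.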