1. Introduction

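Since the Vision Transformer (ViT) appeared in 2020, the computer vision community has been swept up in a Transformer craze. As more people came to understand and use it, however, it became clear that ViT, although powerful, is also very hard to train well.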

Current pure-Transformer architectures have several problems:

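1) Transformers have many parameters and demand a lot of compute

2) Transformers lack spatial inductive bias

3) Transferring Transformers to other tasks is cumbersome

4) Transformer models are hard to train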

The core question of the whole paper is therefore:

Is it possible to combine the strengths of CNNs and ViTs to build a light-weight and low latency network for mobile vision tasks?

2. MobileViT Network

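The paper first reviews self-attention models, in particular the recent progress of Transformers. Because ViTs lack the inductive bias that CNNs have built in, they can only improve performance by adding parameters. These gains come at the cost of larger models (more parameters) and higher latency, while real-world applications often have to run on resource-constrained mobile devices. On mobile, current ViT-based models perform clearly worse than CNNs: for example, under a budget of 5-6 million parameters, DeIT is 3% less accurate than MobileNetV3. A light-weight ViT model is therefore badly needed.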

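Unlike light-weight CNNs, which are easy to optimize and easy to integrate with task-specific networks, ViTs are hard to optimize, need extensive data augmentation and L2 regularization to prevent over-fitting, and require expensive decoders for downstream tasks.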

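Hybrid models have attracted a lot of attention over the past two years, but they are still heavy in parameters and very sensitive to data augmentation: with only basic data augmentation (for example, removing CutMix and DeIT-style augmentation), their accuracy drops by about 6%.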

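The paper therefore focuses on designing a network that is light-weight (few parameters), general-purpose (easy to transfer to downstream tasks), and low-latency (fast inference). MobileViT combines the strengths of convolutions (spatial inductive bias and low sensitivity to data augmentation) with those of Transformers (input-adaptive weighting and global processing).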

The related-work section of the paper also discusses the strengths and weaknesses of convolutions and Transformers.

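Light-weight CNNs are already quite mature and rely mainly on separable convolutions. Their advantages are that they are very easy to plug into any convolutional network (simply replace standard convolutions with separable ones) and easy to train, and they both shrink the network and speed up inference, reducing latency. Their one drawback is that they are spatially local and therefore miss global information.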
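A quick parameter count makes the size saving concrete. The sketch below ignores biases, and the 3×3 kernel with 64→128 channels is an arbitrary example; it compares a standard convolution with a depthwise separable one (a k×k depthwise convolution followed by a 1×1 pointwise convolution):

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    # one k x k filter for every (input channel, output channel) pair
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    # depthwise: one k x k filter per input channel
    depthwise = k * k * c_in
    # pointwise: a 1x1 conv that mixes channels
    pointwise = c_in * c_out
    return depthwise + pointwise


if __name__ == "__main__":
    k, c_in, c_out = 3, 64, 128  # example layer size
    std = standard_conv_params(k, c_in, c_out)
    sep = separable_conv_params(k, c_in, c_out)
    print(std, sep, round(std / sep, 2))
```

For this example the separable version needs roughly 8.4× fewer weights (73728 vs. 8768); the saving grows with the kernel size and the number of output channels.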

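There has also been work on optimizing ViTs, but they remain hard to optimize and hard to train. This subpar optimizability is mainly attributed to the lack of spatial inductive bias. Even PiT, the best of these models, is still 2.2% less accurate than MobileNetV3 at a similar parameter count.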

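The paper then argues that MobileViT offers 1) better performance, 2) better generalization, and 3) robustness: it needs only basic data augmentation and is much less sensitive to L2 regularization.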

2.1 MobileViT Network Architecture

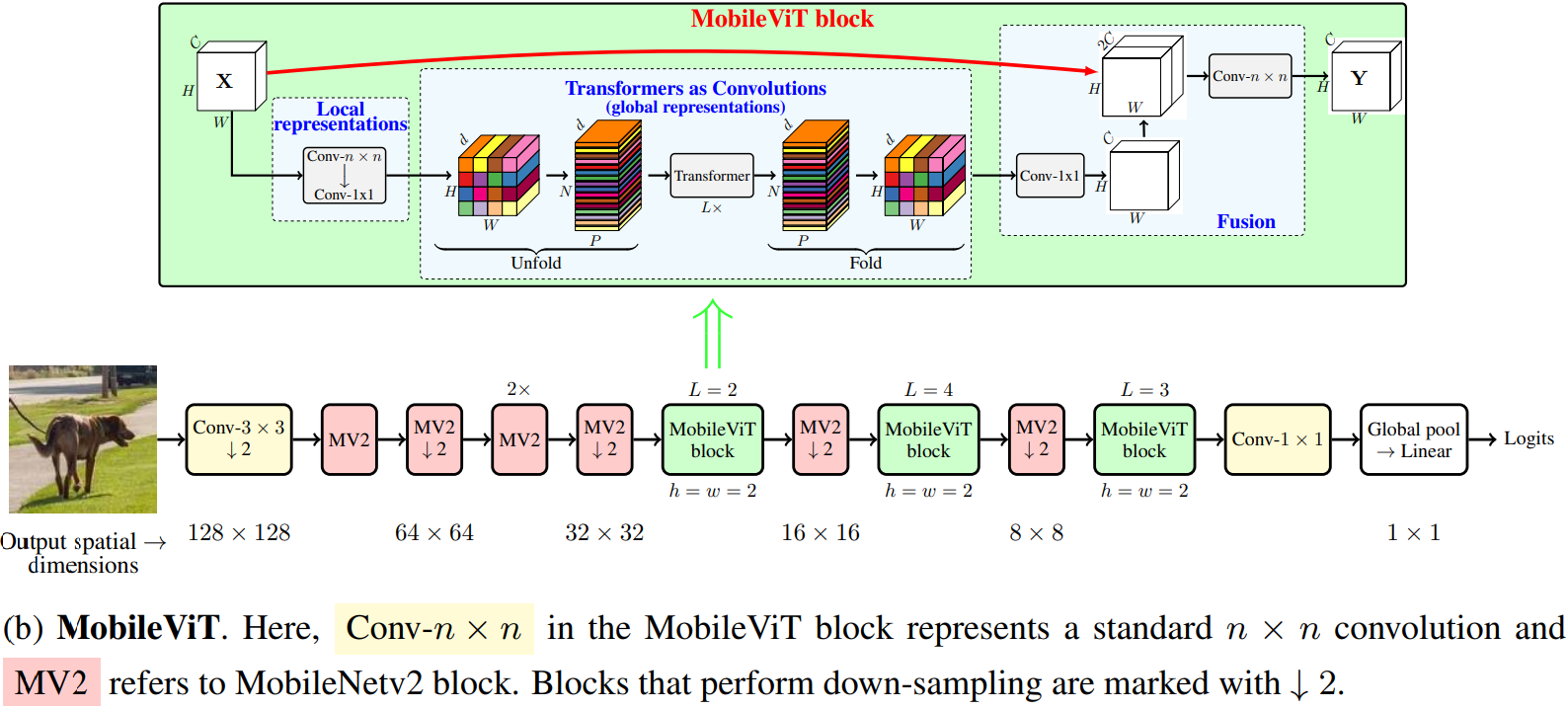

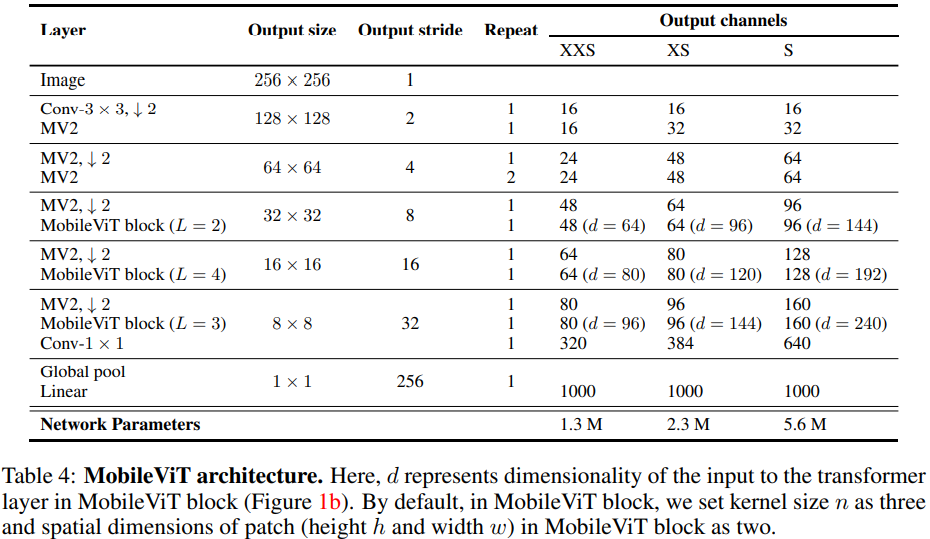

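The MobileViT architecture is built from three kinds of modules: standard convolutions, MV2 blocks, and MobileViT blocks. MV2 refers to the MobileNetV2 block; blocks marked ↓2 use stride=2 for downsampling.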
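One way to follow the ↓2 markers is to trace the spatial size through the network. The sketch below uses the convolution output-size formula with the padding `(k - 1) // 2` that `ConvLayer` applies, and assumes a 256×256 input, a stride-2 stem, and five stages with strides 1, 2, 2, 2, 2 (read off the figure, not taken from the project's config file):

```python
def conv_out_size(size: int, kernel: int, stride: int) -> int:
    # ConvLayer pads with (kernel - 1) // 2 on each side
    padding = (kernel - 1) // 2
    return (size + 2 * padding - kernel) // stride + 1


def trace_sizes(input_size: int, strides) -> list:
    # the stride-2 step in each stage is a 3x3 conv (stem conv or MV2 depthwise conv)
    sizes = [input_size]
    for s in strides:
        sizes.append(conv_out_size(sizes[-1], kernel=3, stride=s))
    return sizes


if __name__ == "__main__":
    # stem (stride 2), then layer_1 ... layer_5 with assumed strides 1, 2, 2, 2, 2
    print(trace_sizes(256, [2, 1, 2, 2, 2, 2]))
```

Under these assumptions the spatial size goes 256 → 128 → 128 → 64 → 32 → 16 → 8, i.e. the network downsamples by 32 overall. For odd sizes the formula rounds up (7 becomes 4 with stride 2).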

2.1.1 MobileViT block

The figure below shows the overall structure of the MobileViT block.

The authors view a standard convolution as three steps: unfolding, local processing (a matrix multiplication), and folding.
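This view can be checked numerically. In the minimal PyTorch sketch below, `F.unfold` gathers every k×k neighborhood into a column (unfolding), a single matrix multiplication with the flattened kernels performs the local processing, and `F.fold` with a 1×1 kernel places the results back on the H×W grid (folding). The helper name `conv2d_via_unfold` is illustrative, and only stride 1 with "same" padding for odd kernels is handled:

```python
import torch
import torch.nn.functional as F


def conv2d_via_unfold(x: torch.Tensor, weight: torch.Tensor) -> torch.Tensor:
    """Stride-1, bias-free convolution expressed as unfold -> matmul -> fold."""
    b, c_in, h, w = x.shape
    c_out, _, k, _ = weight.shape
    pad = (k - 1) // 2  # "same" padding for odd k
    # unfolding: every k x k neighborhood becomes a column -> [B, C_in*k*k, H*W]
    cols = F.unfold(x, kernel_size=k, padding=pad)
    # local processing: one matmul with the flattened kernels -> [B, C_out, H*W]
    out = weight.reshape(c_out, -1) @ cols
    # folding: put the H*W outputs back on the spatial grid -> [B, C_out, H, W]
    return F.fold(out, output_size=(h, w), kernel_size=1)


if __name__ == "__main__":
    torch.manual_seed(0)
    x = torch.randn(2, 3, 8, 8)
    weight = torch.randn(4, 3, 3, 3)
    ref = F.conv2d(x, weight, padding=1)
    print(torch.allclose(conv2d_via_unfold(x, weight), ref, atol=1e-4))
```

The MobileViT block keeps the unfold/fold steps but swaps the local matrix multiplication for a Transformer.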

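The MobileViT block aims to extract both local and global information with few parameters. As the figure shows, the input first passes through the local representation module: an n×n standard convolution (3×3 in the code) followed by a pointwise (1×1) convolution, which produces a [B, H, W, d] feature map for the global representation module. The global representation module mimics the three steps of a standard convolution, unfolding, local processing, and folding, but replaces the local processing in the middle with global processing by a Transformer, which enlarges the receptive field.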
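The local representation can be sketched as a small `nn.Sequential` that mirrors `MobileViTBlock.local_rep` in the code below (the helper name and the 48→64 channel sizes are illustrative). PyTorch tensors are channels-first, so the [B, H, W, d] map from the text appears as [B, d, H, W] here:

```python
import torch
import torch.nn as nn


def local_representation(c: int, d: int, n: int = 3) -> nn.Sequential:
    """n x n conv on C channels, then a 1x1 conv that projects C -> d."""
    return nn.Sequential(
        # n x n conv + BN + SiLU: encodes local spatial information
        nn.Conv2d(c, c, kernel_size=n, padding=n // 2, bias=False),
        nn.BatchNorm2d(c),
        nn.SiLU(),
        # 1x1 pointwise conv: projects to the Transformer dimension d (no norm/activation)
        nn.Conv2d(c, d, kernel_size=1, bias=False),
    )


if __name__ == "__main__":
    x = torch.randn(2, 48, 32, 32)           # [B, C, H, W]
    y = local_representation(c=48, d=64)(x)  # [B, d, H, W]
    print(tuple(y.shape))
```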

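Dilated convolutions can also enlarge the receptive field, but they require carefully chosen dilation rates; otherwise the kernel weights land on zero padding rather than on valid spatial positions. MSA is therefore a better way to enlarge the receptive field.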
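The dilation trade-off is easy to quantify. A k×k kernel with dilation r spans k + (k - 1)(r - 1) pixels per side, and stacked stride-1 layers add up their spans. A small sketch (the layer stacks are example values):

```python
def effective_kernel(k: int, dilation: int) -> int:
    # a k x k kernel with dilation r spans k + (k - 1) * (r - 1) pixels per side
    return k + (k - 1) * (dilation - 1)


def receptive_field(k: int, dilations) -> int:
    # stacked stride-1 convs: each layer widens the receptive field by (span - 1)
    rf = 1
    for r in dilations:
        rf += effective_kernel(k, r) - 1
    return rf


if __name__ == "__main__":
    print(receptive_field(3, [1, 1, 1]))  # plain 3x3 convs
    print(receptive_field(3, [1, 2, 4]))  # growing dilation rates
    print(effective_kernel(3, 16))        # one very dilated 3x3 kernel
```

Three plain 3×3 layers see a 7×7 window, while rates 1, 2, 4 reach 15×15 with the same number of weights. The rates must be tuned to the feature-map size, though: a 3×3 kernel with dilation 16 spans 33 pixels, more than a 32×32 map, so for every output pixel 5 of its 9 taps fall on zero padding.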

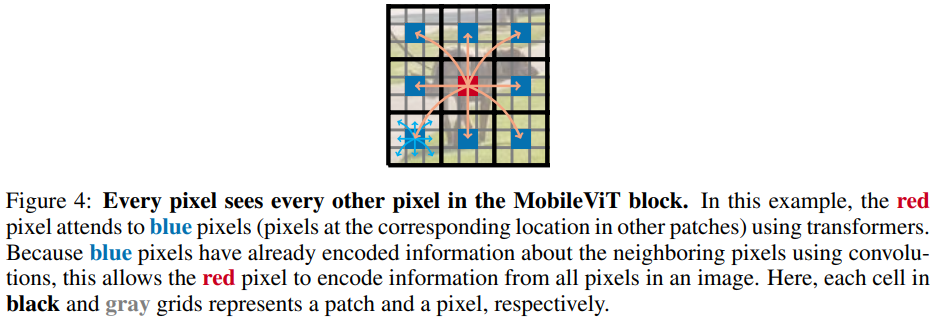

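unfold turns the [B, H, W, d] output of the local representation into [B, P, N, d]. Note that d > C, because the 1×1 convolution in the local representation has already projected the C input channels up to d. Here P = wh, where w and h are the patch width and height (typically 2), and N = HW/P is the number of patches. In other words, the feature map is divided into patches. For example, with B = C = 1 and a 3×3 patch (w = h = 3), you can picture the image being split into 9 columns: each column gathers the pixels that sit at the same position inside every patch, so each column holds num_patches pixels. Each column is fed to the Transformer, which computes attention among the pixels of that column, as shown in the figure. Each pixel has already aggregated its local neighborhood through the n×n convolution and then attends to the same-position pixel of every other patch, so every output pixel can draw on information from the whole feature map: the receptive field becomes H×W.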
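The column picture can be reproduced with the same reshape/transpose sequence that `MobileViTBlock.unfolding` uses in the code below. A minimal sketch on a 4×4 map with 2×2 patches (so P = 4 columns of N = 4 pixels each); `unfold_patches` is a stand-alone helper written for illustration and assumes H and W are already multiples of the patch size:

```python
import torch


def unfold_patches(x: torch.Tensor, patch_h: int, patch_w: int) -> torch.Tensor:
    """[B, C, H, W] -> [B*P, N, C] with the reshape/transpose steps of MobileViTBlock.unfolding."""
    b, c, h, w = x.shape
    n_h, n_w = h // patch_h, w // patch_w
    # [B, C, H, W] -> [B*C*n_h, p_h, n_w, p_w] -> [B*C*n_h, n_w, p_h, p_w]
    x = x.reshape(b * c * n_h, patch_h, n_w, patch_w).transpose(1, 2)
    # -> [B, C, N, P] -> [B, P, N, C]
    x = x.reshape(b, c, n_h * n_w, patch_h * patch_w).transpose(1, 3)
    # -> [B*P, N, C]: one row per position inside a patch (a "column" in the text)
    return x.reshape(b * patch_h * patch_w, n_h * n_w, c)


if __name__ == "__main__":
    fm = torch.arange(16.0).reshape(1, 1, 4, 4)  # each pixel value equals its flat index
    groups = unfold_patches(fm, 2, 2)
    print(groups[:, :, 0])
```

Row 0 holds pixels 0, 2, 8 and 10, the top-left pixel of each 2×2 patch. The Transformer attends within each row, so these four pixels exchange information even though they lie in different patches.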

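Next comes the fold operation, which restores [B, P, N, d] back to [B, H, W, d]. Then the fusion module merges the extracted features: a 1×1 convolution first projects the channels back to C, giving [B, H, W, C]; the result is concatenated with the input of the MobileViT block (2C channels), and an n×n convolution fuses the features into the output [B, H, W, C].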
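The fusion step can be sketched as a small PyTorch module. This is a simplified, hypothetical stand-in for `conv_proj` + `fusion` in `MobileViTBlock`: it leaves out the BatchNorm and SiLU that `ConvLayer` adds, and the channel sizes in the example are arbitrary:

```python
import torch
import torch.nn as nn


class Fusion(nn.Module):
    """Hypothetical, simplified version of MobileViTBlock.conv_proj + MobileViTBlock.fusion."""

    def __init__(self, c: int, d: int, n: int = 3) -> None:
        super().__init__()
        # 1x1 conv: project the folded Transformer output from d back to C channels
        self.proj = nn.Conv2d(d, c, kernel_size=1, bias=False)
        # n x n conv: fuse [block input, global features] (2C channels) back to C
        self.fuse = nn.Conv2d(2 * c, c, kernel_size=n, padding=n // 2, bias=False)

    def forward(self, block_input: torch.Tensor, folded: torch.Tensor) -> torch.Tensor:
        y = self.proj(folded)                   # [B, d, H, W] -> [B, C, H, W]
        y = torch.cat((block_input, y), dim=1)  # [B, 2C, H, W]
        return self.fuse(y)                     # [B, C, H, W]


if __name__ == "__main__":
    x = torch.randn(2, 48, 32, 32)       # block input, C = 48
    folded = torch.randn(2, 64, 32, 32)  # folded Transformer output, d = 64
    print(tuple(Fusion(c=48, d=64)(x, folded).shape))
```

The output has the same shape as the block input, which is what allows MobileViT blocks to be dropped into a CNN stage without changing the surrounding layers.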

2.1.1.1 MV2

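Follows the MobileNetV2 block: an inverted residual structure (1×1 expansion convolution, 3×3 depthwise convolution, linear 1×1 projection), with a residual connection only when stride = 1 and the input and output channel counts match.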
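The wiring of an MV2 block can be summarized as a channel plan. The sketch below reuses the `make_divisible` rounding rule from the code and mirrors how `InvertedResidual` chooses its hidden width and decides on the residual connection; `mv2_plan` is a hypothetical helper that only computes shapes and builds no layers:

```python
from typing import Optional


def make_divisible(v: float, divisor: int = 8, min_value: Optional[int] = None) -> int:
    # same rounding rule as make_divisible in the MobileViT code
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v


def mv2_plan(in_channels: int, out_channels: int, stride: int, expand_ratio: float) -> dict:
    hidden = make_divisible(int(round(in_channels * expand_ratio)), 8)
    return {
        # 1x1 expansion (skipped when expand_ratio == 1)
        "expand_1x1": (in_channels, hidden) if expand_ratio != 1 else None,
        # 3x3 depthwise conv (in, out, stride); it carries the stride
        "depthwise_3x3": (hidden, hidden, stride),
        # linear 1x1 projection (no activation)
        "project_1x1": (hidden, out_channels),
        # residual only when input and output shapes match
        "residual": stride == 1 and in_channels == out_channels,
    }


if __name__ == "__main__":
    print(mv2_plan(16, 24, stride=2, expand_ratio=4))
    print(mv2_plan(24, 24, stride=1, expand_ratio=4))
```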

2.1.1.2 Transformer block
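The code below imports `TransformerEncoder` from a local `transformer` module that is not included here. As a hedged stand-in, the sketch shows a standard pre-norm encoder layer (LayerNorm → multi-head self-attention → residual, then LayerNorm → MLP → residual) built on `nn.MultiheadAttention`, with the constructor arguments that `MobileViTBlock` passes. The SiLU activation in the MLP and the exact dropout placement are assumptions, not taken from the original project:

```python
import torch
import torch.nn as nn


class TransformerEncoder(nn.Module):
    """Hypothetical pre-norm encoder layer matching the arguments used by MobileViTBlock."""

    def __init__(self, embed_dim: int, ffn_latent_dim: int, num_heads: int = 8,
                 attn_dropout: float = 0.0, dropout: float = 0.0, ffn_dropout: float = 0.0) -> None:
        super().__init__()
        self.norm = nn.LayerNorm(embed_dim)
        # attn_dropout is applied to the attention weights inside MultiheadAttention
        self.attn = nn.MultiheadAttention(embed_dim, num_heads, dropout=attn_dropout, batch_first=True)
        self.attn_drop = nn.Dropout(dropout)
        # feed-forward network (assumed SiLU activation)
        self.ffn = nn.Sequential(
            nn.LayerNorm(embed_dim),
            nn.Linear(embed_dim, ffn_latent_dim),
            nn.SiLU(),
            nn.Dropout(ffn_dropout),
            nn.Linear(ffn_latent_dim, embed_dim),
            nn.Dropout(dropout),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: [B*P, N, embed_dim]; attention runs across the N patches of each group
        h = self.norm(x)
        h, _ = self.attn(h, h, h, need_weights=False)
        x = x + self.attn_drop(h)
        # feed-forward block with its own residual connection
        return x + self.ffn(x)


if __name__ == "__main__":
    enc = TransformerEncoder(embed_dim=64, ffn_latent_dim=128, num_heads=2).eval()
    print(tuple(enc(torch.randn(4, 16, 64)).shape))
```

It consumes the [B·P, N, d] tensor produced by `unfolding` and returns a tensor of the same shape, so several layers can be stacked as in `global_rep`.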

2.2 MobileViT Performance

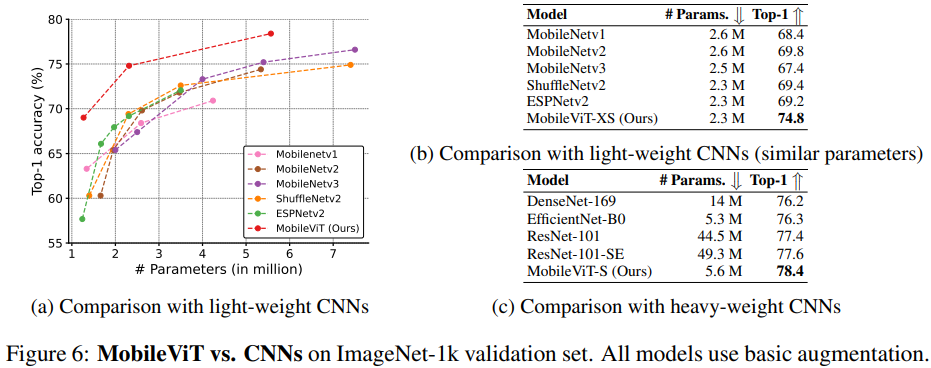

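The figure shows the ImageNet-1K accuracy of each model when all models use only basic data augmentation. In the left plot the x-axis is the parameter count, so models closer to the top-left corner are both lighter and more accurate. The right side compares MobileViT with light-weight and heavy-weight CNNs.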

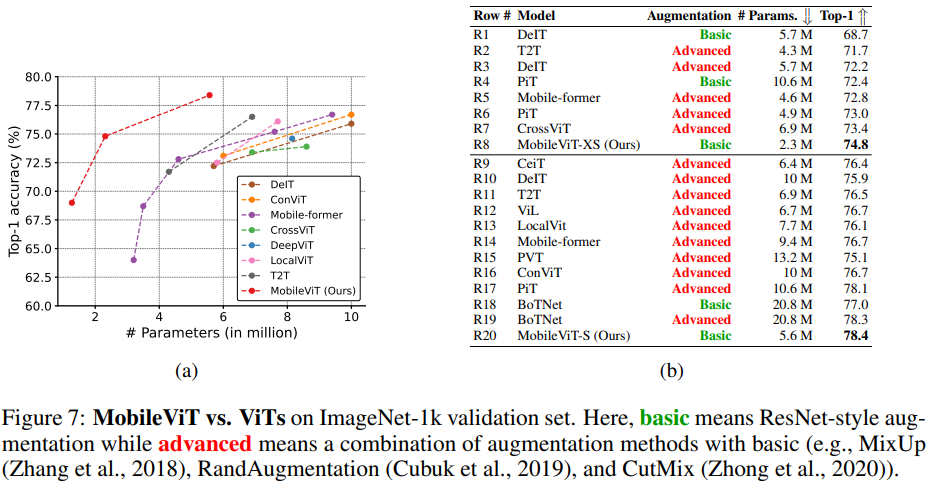

The figure above compares the advanced training recipe (with additional data augmentation) against the basic recipe.

2.3 MobileViT Network Architecture

3. MobileViT Network Code (Annotated)

```python
"""
original code from apple:
https://github.com/apple/ml-cvnets/blob/main/cvnets/models/classification/mobilevit.py
"""

from typing import Optional, Tuple, Union, Dict
import math

import torch
import torch.nn as nn
from torch import Tensor
from torch.nn import functional as F

# Local modules of the same project (not included here):
# transformer.py provides TransformerEncoder, model_config.py provides get_config
from transformer import TransformerEncoder
from model_config import get_config


def make_divisible(
    v: Union[float, int],
    divisor: Optional[int] = 8,
    min_value: Optional[Union[float, int]] = None,
) -> Union[float, int]:
    """
    This function is taken from the original tf repo.
    It ensures that all layers have a channel number that is divisible by 8
    It can be seen here:
    https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py
    :param v: channel count to round
    :param divisor: the result must be a multiple of this value
    :param min_value: lower bound for the result (defaults to divisor)
    :return: the rounded channel count
    """
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    # Make sure that round down does not go down by more than 10%.
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v
```

```python
class ConvLayer(nn.Module):
    """
    Applies a 2D convolution over an input

    Args:
        in_channels (int): :math:`C_{in}` from an expected input of size :math:`(N, C_{in}, H_{in}, W_{in})`
        out_channels (int): :math:`C_{out}` from an expected output of size :math:`(N, C_{out}, H_{out}, W_{out})`
        kernel_size (Union[int, Tuple[int, int]]): Kernel size for convolution.
        stride (Union[int, Tuple[int, int]]): Stride for convolution. Default: 1
        groups (Optional[int]): Number of groups in convolution. Default: 1
        bias (Optional[bool]): Use bias. Default: ``False``
        use_norm (Optional[bool]): Use normalization layer after convolution. Default: ``True``
        use_act (Optional[bool]): Use activation layer after convolution (or convolution and normalization).
                                  Default: ``True``

    Shape:
        - Input: :math:`(N, C_{in}, H_{in}, W_{in})`
        - Output: :math:`(N, C_{out}, H_{out}, W_{out})`

    .. note::
        For depth-wise convolution, `groups=C_{in}=C_{out}`.
    """

    def __init__(
        self,
        in_channels: int,
        out_channels: int,
        kernel_size: Union[int, Tuple[int, int]],
        stride: Optional[Union[int, Tuple[int, int]]] = 1,
        groups: Optional[int] = 1,
        bias: Optional[bool] = False,
        use_norm: Optional[bool] = True,
        use_act: Optional[bool] = True,
    ) -> None:
        super().__init__()

        if isinstance(kernel_size, int):
            kernel_size = (kernel_size, kernel_size)

        if isinstance(stride, int):
            stride = (stride, stride)

        assert isinstance(kernel_size, tuple)
        assert isinstance(stride, tuple)

        # With this padding (odd kernels), stride=1 keeps H and W unchanged
        # and stride=2 halves them (rounding up)
        padding = (
            int((kernel_size[0] - 1) / 2),
            int((kernel_size[1] - 1) / 2),
        )

        block = nn.Sequential()

        conv_layer = nn.Conv2d(
            in_channels=in_channels,
            out_channels=out_channels,
            kernel_size=kernel_size,
            stride=stride,
            groups=groups,
            padding=padding,
            bias=bias
        )

        block.add_module(name="conv", module=conv_layer)

        if use_norm:
            norm_layer = nn.BatchNorm2d(num_features=out_channels, momentum=0.1)
            block.add_module(name="norm", module=norm_layer)

        if use_act:
            act_layer = nn.SiLU()
            block.add_module(name="act", module=act_layer)

        self.block = block

    def forward(self, x: Tensor) -> Tensor:
        return self.block(x)
```

```python
class InvertedResidual(nn.Module):
    # MV2 block, following MobileNetV2
    """
    This class implements the inverted residual block, as described in `MobileNetv2 <https://arxiv.org/abs/1801.04381>`_ paper

    Args:
        in_channels (int): :math:`C_{in}` from an expected input of size :math:`(N, C_{in}, H_{in}, W_{in})`
        out_channels (int): :math:`C_{out}` from an expected output of size :math:`(N, C_{out}, H_{out}, W_{out})`
        stride (int): Use convolutions with a stride. Default: 1
        expand_ratio (Union[int, float]): Expand the input channels by this factor in depth-wise conv
        skip_connection (Optional[bool]): Use skip-connection. Default: True

    Shape:
        - Input: :math:`(N, C_{in}, H_{in}, W_{in})`
        - Output: :math:`(N, C_{out}, H_{out}, W_{out})`

    .. note::
        If `in_channels != out_channels` or `stride > 1`, the skip connection is not used,
        regardless of `skip_connection`.
    """

    def __init__(
        self,
        in_channels: int,
        out_channels: int,
        stride: int,
        expand_ratio: Union[int, float],
        skip_connection: Optional[bool] = True,
    ) -> None:
        assert stride in [1, 2]
        # width of the expanded (hidden) layer, rounded to a multiple of 8
        hidden_dim = make_divisible(int(round(in_channels * expand_ratio)), 8)

        super().__init__()

        block = nn.Sequential()
        # 1x1 pointwise conv: expand the channels (skipped when expand_ratio == 1)
        if expand_ratio != 1:
            block.add_module(
                name="exp_1x1",
                module=ConvLayer(
                    in_channels=in_channels,
                    out_channels=hidden_dim,
                    kernel_size=1
                ),
            )

        # 3x3 depthwise conv (groups == channels); carries the stride
        block.add_module(
            name="conv_3x3",
            module=ConvLayer(
                in_channels=hidden_dim,
                out_channels=hidden_dim,
                stride=stride,
                kernel_size=3,
                groups=hidden_dim
            ),
        )

        # 1x1 pointwise conv: project back to out_channels (linear, no activation)
        block.add_module(
            name="red_1x1",
            module=ConvLayer(
                in_channels=hidden_dim,
                out_channels=out_channels,
                kernel_size=1,
                use_act=False,
                use_norm=True,
            ),
        )

        self.block = block
        self.in_channels = in_channels
        self.out_channels = out_channels
        self.exp = expand_ratio
        self.stride = stride
        self.use_res_connect = (
            self.stride == 1 and in_channels == out_channels and skip_connection
        )

    def forward(self, x: Tensor, *args, **kwargs) -> Tensor:
        if self.use_res_connect:
            return x + self.block(x)
        else:
            return self.block(x)
```

```python
class MobileViTBlock(nn.Module):
    """
    This class defines the `MobileViT block <https://arxiv.org/abs/2110.02178?context=cs.LG>`_

    Args:
        in_channels (int): :math:`C_{in}` from an expected input of size :math:`(N, C_{in}, H, W)`
        transformer_dim (int): Input dimension to the transformer unit
        ffn_dim (int): Dimension of the FFN block
        n_transformer_blocks (int): Number of transformer blocks. Default: 2
        head_dim (int): Head dimension in the multi-head attention. Default: 32
        attn_dropout (float): Dropout in multi-head attention. Default: 0.0
        dropout (float): Dropout rate. Default: 0.0
        ffn_dropout (float): Dropout between FFN layers in transformer. Default: 0.0
        patch_h (int): Patch height for unfolding operation. Default: 8
        patch_w (int): Patch width for unfolding operation. Default: 8
        conv_ksize (int): Kernel size to learn local representations in MobileViT block. Default: 3
    """

    def __init__(
        self,
        in_channels: int,
        # d in [B, H, W, C] -> [B, P, N, d]: the Transformer's embedding dimension
        transformer_dim: int,
        # hidden size of the feed-forward network (the MLP after MSA in each Transformer encoder)
        ffn_dim: int,
        # number of stacked Transformer encoder blocks
        n_transformer_blocks: int = 2,
        # dimension of each attention head in MSA
        head_dim: int = 32,
        # dropout inside MSA in the Transformer encoder
        attn_dropout: float = 0.0,
        # dropout probability in the Transformer encoder's MSA block
        dropout: float = 0.0,
        # dropout probability inside the MLP of the feed-forward network
        ffn_dropout: float = 0.0,
        patch_h: int = 8,
        patch_w: int = 8,
        conv_ksize: Optional[int] = 3,
        *args,
        **kwargs
    ) -> None:
        super().__init__()

        conv_3x3_in = ConvLayer(
            in_channels=in_channels,
            out_channels=in_channels,
            kernel_size=conv_ksize,
            stride=1
        )
        conv_1x1_in = ConvLayer(
            in_channels=in_channels,
            out_channels=transformer_dim,
            kernel_size=1,
            stride=1,
            use_norm=False,
            use_act=False
        )

        conv_1x1_out = ConvLayer(
            in_channels=transformer_dim,
            out_channels=in_channels,
            kernel_size=1,
            stride=1
        )
        conv_3x3_out = ConvLayer(
            in_channels=2 * in_channels,
            out_channels=in_channels,
            kernel_size=conv_ksize,
            stride=1
        )

        # Local representation: n x n conv, then a 1x1 conv that projects C -> d
        self.local_rep = nn.Sequential()
        self.local_rep.add_module(name="conv_3x3", module=conv_3x3_in)
        self.local_rep.add_module(name="conv_1x1", module=conv_1x1_in)

        assert transformer_dim % head_dim == 0
        num_heads = transformer_dim // head_dim

        # Global representation: stacked Transformer encoders plus a final LayerNorm
        global_rep = [
            TransformerEncoder(
                embed_dim=transformer_dim,
                ffn_latent_dim=ffn_dim,
                num_heads=num_heads,
                attn_dropout=attn_dropout,
                dropout=dropout,
                ffn_dropout=ffn_dropout
            )
            for _ in range(n_transformer_blocks)
        ]
        global_rep.append(nn.LayerNorm(transformer_dim))
        self.global_rep = nn.Sequential(*global_rep)

        # Fusion: 1x1 conv d -> C, concat with the block input, then n x n conv 2C -> C
        self.conv_proj = conv_1x1_out
        self.fusion = conv_3x3_out

        self.patch_h = patch_h
        self.patch_w = patch_w
        self.patch_area = self.patch_w * self.patch_h

        self.cnn_in_dim = in_channels
        self.cnn_out_dim = transformer_dim
        self.n_heads = num_heads
        self.ffn_dim = ffn_dim
        self.dropout = dropout
        self.attn_dropout = attn_dropout
        self.ffn_dropout = ffn_dropout
        self.n_blocks = n_transformer_blocks
        self.conv_ksize = conv_ksize

    # unfolding reshapes [B, C, H, W] --> [BP, N, C].
    # Instead of every pixel attending to every other pixel, the pixels are split into
    # patch_h * patch_w groups (one per position inside a patch); MSA runs within each
    # group, and the groups are fed to the Transformer as separate batch entries.
    def unfolding(self, x: Tensor) -> Tuple[Tensor, Dict]:
        patch_w, patch_h = self.patch_w, self.patch_h  # e.g. 2, 2
        patch_area = patch_w * patch_h  # e.g. 4
        batch_size, in_channels, orig_h, orig_w = x.shape

        # round H and W up to multiples of the patch size
        new_h = int(math.ceil(orig_h / self.patch_h) * self.patch_h)
        new_w = int(math.ceil(orig_w / self.patch_w) * self.patch_w)

        interpolate = False
        if new_w != orig_w or new_h != orig_h:
            # Note: Padding can be done, but then it needs to be handled in attention function.
            # If the feature map must be enlarged, resize it with bilinear interpolation
            x = F.interpolate(x, size=(new_h, new_w), mode="bilinear", align_corners=False)
            interpolate = True

        # number of patches along width and height
        num_patch_w = new_w // patch_w  # n_w
        num_patch_h = new_h // patch_h  # n_h
        num_patches = num_patch_h * num_patch_w  # N

        # [B, C, H, W] -> [B * C * n_h, p_h, n_w, p_w]
        x = x.reshape(batch_size * in_channels * num_patch_h, patch_h, num_patch_w, patch_w)
        # [B * C * n_h, p_h, n_w, p_w] -> [B * C * n_h, n_w, p_h, p_w]
        x = x.transpose(1, 2)
        # [B * C * n_h, n_w, p_h, p_w] -> [B, C, N, P] where P = p_h * p_w and N = n_h * n_w
        # P is the patch area, N is the number of patches
        x = x.reshape(batch_size, in_channels, num_patches, patch_area)
        # [B, C, N, P] -> [B, P, N, C]
        x = x.transpose(1, 3)
        # [B, P, N, C] -> [BP, N, C]
        x = x.reshape(batch_size * patch_area, num_patches, -1)

        info_dict = {
            "orig_size": (orig_h, orig_w),
            "batch_size": batch_size,
            "interpolate": interpolate,
            "total_patches": num_patches,
            "num_patches_w": num_patch_w,
            "num_patches_h": num_patch_h,
        }

        return x, info_dict

    # folding is the inverse of unfolding: [BP, N, C] --> [B, C, H, W]
    def folding(self, x: Tensor, info_dict: Dict) -> Tensor:
        n_dim = x.dim()
        assert n_dim == 3, "Tensor should be of shape BPxNxC. Got: {}".format(
            x.shape
        )
        # [BP, N, C] --> [B, P, N, C]
        x = x.contiguous().view(
            info_dict["batch_size"], self.patch_area, info_dict["total_patches"], -1
        )

        batch_size, pixels, num_patches, channels = x.size()
        num_patch_h = info_dict["num_patches_h"]
        num_patch_w = info_dict["num_patches_w"]

        # [B, P, N, C] -> [B, C, N, P]
        x = x.transpose(1, 3)
        # [B, C, N, P] -> [B*C*n_h, n_w, p_h, p_w]
        x = x.reshape(batch_size * channels * num_patch_h, num_patch_w, self.patch_h, self.patch_w)
        # [B*C*n_h, n_w, p_h, p_w] -> [B*C*n_h, p_h, n_w, p_w]
        x = x.transpose(1, 2)
        # [B*C*n_h, p_h, n_w, p_w] -> [B, C, H, W]
        x = x.reshape(batch_size, channels, num_patch_h * self.patch_h, num_patch_w * self.patch_w)
        # undo the resize done in unfolding, if any
        if info_dict["interpolate"]:
            x = F.interpolate(
                x,
                size=info_dict["orig_size"],
                mode="bilinear",
                align_corners=False,
            )
        return x

    def forward(self, x: Tensor) -> Tensor:
        res = x

        fm = self.local_rep(x)

        # convert feature map to patches
        patches, info_dict = self.unfolding(fm)

        # learn global representations
        for transformer_layer in self.global_rep:
            patches = transformer_layer(patches)

        # [BP, N, d] -> [B, d, H, W]
        fm = self.folding(x=patches, info_dict=info_dict)

        fm = self.conv_proj(fm)

        fm = self.fusion(torch.cat((res, fm), dim=1))
        return fm
```

```python
class MobileViT(nn.Module):
    """
    This class implements the `MobileViT architecture <https://arxiv.org/abs/2110.02178?context=cs.LG>`_
    """
    def __init__(self, model_cfg: Dict, num_classes: int = 1000):
        super().__init__()

        image_channels = 3
        out_channels = 16

        # stem: 3x3 conv with stride 2
        self.conv_1 = ConvLayer(
            in_channels=image_channels,
            out_channels=out_channels,
            kernel_size=3,
            stride=2
        )

        # five stages built from the config; each returns its output channel count
        self.layer_1, out_channels = self._make_layer(input_channel=out_channels, cfg=model_cfg["layer1"])
        self.layer_2, out_channels = self._make_layer(input_channel=out_channels, cfg=model_cfg["layer2"])
        self.layer_3, out_channels = self._make_layer(input_channel=out_channels, cfg=model_cfg["layer3"])
        self.layer_4, out_channels = self._make_layer(input_channel=out_channels, cfg=model_cfg["layer4"])
        self.layer_5, out_channels = self._make_layer(input_channel=out_channels, cfg=model_cfg["layer5"])

        # 1x1 conv that expands the channels before pooling (capped at 960)
        exp_channels = min(model_cfg["last_layer_exp_factor"] * out_channels, 960)
        self.conv_1x1_exp = ConvLayer(
            in_channels=out_channels,
            out_channels=exp_channels,
            kernel_size=1
        )

        # classifier head: global average pooling -> flatten -> (dropout) -> linear
        self.classifier = nn.Sequential()
        self.classifier.add_module(name="global_pool", module=nn.AdaptiveAvgPool2d(1))
        self.classifier.add_module(name="flatten", module=nn.Flatten())
        if 0.0 < model_cfg["cls_dropout"] < 1.0:
            self.classifier.add_module(name="dropout", module=nn.Dropout(p=model_cfg["cls_dropout"]))
        self.classifier.add_module(name="fc", module=nn.Linear(in_features=exp_channels, out_features=num_classes))

        # weight init
        self.apply(self.init_parameters)

    def _make_layer(self, input_channel, cfg: Dict) -> Tuple[nn.Sequential, int]:
        # "mobilevit" stages: optional stride-2 MV2 + MobileViT block; otherwise a stack of MV2 blocks
        block_type = cfg.get("block_type", "mobilevit")
        if block_type.lower() == "mobilevit":
            return self._make_mit_layer(input_channel=input_channel, cfg=cfg)
        else:
            return self._make_mobilenet_layer(input_channel=input_channel, cfg=cfg)

    @staticmethod
    def _make_mobilenet_layer(input_channel: int, cfg: Dict) -> Tuple[nn.Sequential, int]:
        output_channels = cfg.get("out_channels")
        num_blocks = cfg.get("num_blocks", 2)
        expand_ratio = cfg.get("expand_ratio", 4)
        block = []

        for i in range(num_blocks):
            # only the first block of a stage may downsample
            stride = cfg.get("stride", 1) if i == 0 else 1

            layer = InvertedResidual(
                in_channels=input_channel,
                out_channels=output_channels,
                stride=stride,
                expand_ratio=expand_ratio
            )
            block.append(layer)
            input_channel = output_channels

        return nn.Sequential(*block), input_channel

    @staticmethod
    def _make_mit_layer(input_channel: int, cfg: Dict) -> Tuple[nn.Sequential, int]:
        stride = cfg.get("stride", 1)
        block = []

        # downsample with a stride-2 MV2 block before the MobileViT block
        if stride == 2:
            layer = InvertedResidual(
                in_channels=input_channel,
                out_channels=cfg.get("out_channels"),
                stride=stride,
                expand_ratio=cfg.get("mv_expand_ratio", 4)
            )

            block.append(layer)
            input_channel = cfg.get("out_channels")

        transformer_dim = cfg["transformer_channels"]
        ffn_dim = cfg.get("ffn_dim")
        num_heads = cfg.get("num_heads", 4)
        head_dim = transformer_dim // num_heads

        if transformer_dim % head_dim != 0:
            raise ValueError("Transformer input dimension should be divisible by head dimension. "
                             "Got {} and {}.".format(transformer_dim, head_dim))

        block.append(MobileViTBlock(
            in_channels=input_channel,
            transformer_dim=transformer_dim,
            ffn_dim=ffn_dim,
            n_transformer_blocks=cfg.get("transformer_blocks", 1),
            patch_h=cfg.get("patch_h", 2),
            patch_w=cfg.get("patch_w", 2),
            dropout=cfg.get("dropout", 0.1),
            ffn_dropout=cfg.get("ffn_dropout", 0.0),
            attn_dropout=cfg.get("attn_dropout", 0.1),
            head_dim=head_dim,
            conv_ksize=3
        ))

        return nn.Sequential(*block), input_channel

    @staticmethod
    def init_parameters(m):
        if isinstance(m, nn.Conv2d):
            if m.weight is not None:
                nn.init.kaiming_normal_(m.weight, mode="fan_out")
            if m.bias is not None:
                nn.init.zeros_(m.bias)
        elif isinstance(m, (nn.LayerNorm, nn.BatchNorm2d)):
            if m.weight is not None:
                nn.init.ones_(m.weight)
            if m.bias is not None:
                nn.init.zeros_(m.bias)
        elif isinstance(m, (nn.Linear,)):
            if m.weight is not None:
                nn.init.trunc_normal_(m.weight, mean=0.0, std=0.02)
            if m.bias is not None:
                nn.init.zeros_(m.bias)
        else:
            pass

    def forward(self, x: Tensor) -> Tensor:
        x = self.conv_1(x)
        x = self.layer_1(x)
        x = self.layer_2(x)

        x = self.layer_3(x)
        x = self.layer_4(x)
        x = self.layer_5(x)
        x = self.conv_1x1_exp(x)
        x = self.classifier(x)
        return x
```

```python
def mobile_vit_xx_small(num_classes: int = 1000):
    # pretrain weight link
    # https://docs-assets.developer.apple.com/ml-research/models/cvnets/classification/mobilevit_xxs.pt
    config = get_config("xx_small")
    m = MobileViT(config, num_classes=num_classes)
    return m


def mobile_vit_x_small(num_classes: int = 1000):
    # pretrain weight link
    # https://docs-assets.developer.apple.com/ml-research/models/cvnets/classification/mobilevit_xs.pt
    config = get_config("x_small")
    m = MobileViT(config, num_classes=num_classes)
    return m


def mobile_vit_small(num_classes: int = 1000):
    # pretrain weight link
    # https://docs-assets.developer.apple.com/ml-research/models/cvnets/classification/mobilevit_s.pt
    config = get_config("small")
    m = MobileViT(config, num_classes=num_classes)
    return m
```
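The comment on `unfolding` says that pixels no longer attend to every other pixel; attention runs only within each of the patch_h × patch_w groups. Counting query-key pairs shows the effect: with P groups of N = HW/P tokens each, the cost is P·N² = (HW)²/P instead of (HW)². A small sketch (the 32×32 map and 2×2 patch are example values, and H and W are assumed to be divisible by the patch size):

```python
def attention_pairs_full(h: int, w: int) -> int:
    # every pixel attends to every pixel
    n = h * w
    return n * n


def attention_pairs_mobilevit(h: int, w: int, patch_h: int, patch_w: int) -> int:
    # P groups (one per position inside a patch), each with N = (h/patch_h)*(w/patch_w) tokens
    p = patch_h * patch_w
    n = (h // patch_h) * (w // patch_w)
    return p * n * n


if __name__ == "__main__":
    full = attention_pairs_full(32, 32)
    grouped = attention_pairs_mobilevit(32, 32, 2, 2)
    print(full, grouped, full // grouped)
```

For a 32×32 map with 2×2 patches the pair count drops by a factor of P = 4, while each output pixel can still draw on the whole map thanks to the preceding local convolution.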