Preface

These are my notes from reading the DETR source code in mmdet. If you spot any mistakes or problems, corrections are welcome.

Reference article: DETR源码阅读 (DETR source code reading)

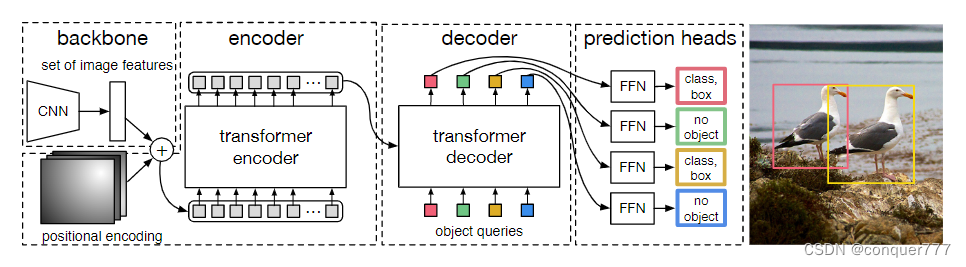

How DETR works

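The idea behind DETR is very simple. The input image first passes through a CNN backbone to produce a feature map. DETR does not use multi-scale feature maps here, so the backbone outputs a single feature map. That feature map is flattened, the positional encoding is added, and the result is fed into a standard transformer encoder. After the encoder, the decoder learns the object queries; finally, the prediction heads predict the boxes and classes.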
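To make the data flow concrete, here is a small shape trace of the pipeline described above. It is only a sketch: the helper name `trace_detr_shapes`, the batch size, and the 800 x 1216 input are assumptions for illustration, and the stride of 32 is the usual total stride of ResNet's last stage. The 2048 and 256 channels, 100 queries and 6 decoder layers are the values that appear later in these notes.

```python
import math


def trace_detr_shapes(bs, img_h, img_w, stride=32, embed_dims=256,
                      num_query=100, num_dec_layers=6):
    """Return the tensor shape at each stage of the DETR forward pass."""
    # Assumption: the backbone downsamples each side by `stride`.
    h, w = math.ceil(img_h / stride), math.ceil(img_w / stride)
    return {
        'backbone_feat': (bs, 2048, h, w),               # single feature map
        'projected_feat': (bs, embed_dims, h, w),        # after the 1x1 conv
        'encoder_tokens': (h * w, bs, embed_dims),       # flattened for the encoder
        'decoder_out': (num_dec_layers, bs, num_query, embed_dims),
    }


shapes = trace_detr_shapes(bs=2, img_h=800, img_w=1216)
for name, shape in shapes.items():
    print(f'{name}: {shape}')
```

With this hypothetical input, the encoder works on 25 * 38 = 950 tokens per image.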

Reading the code

We leave the data processing in train_pipeline aside for now and go straight to the main body of the model.

Extracting the feature map

We start in SingleStageDetector, defined in mmdet/models/detectors/single_stage.py:

    def forward_train(self,
                      img,
                      img_metas,
                      gt_bboxes,
                      gt_labels,
                      gt_bboxes_ignore=None):
        """
        Args:
            img (Tensor): Input images of shape (N, C, H, W).
                Typically these should be mean centered and std scaled.
            img_metas (list[dict]): A List of image info dict where each dict
                has: 'img_shape', 'scale_factor', 'flip', and may also contain
                'filename', 'ori_shape', 'pad_shape', and 'img_norm_cfg'.
                For details on the values of these keys see
                :class:`mmdet.datasets.pipelines.Collect`.
            gt_bboxes (list[Tensor]): Each item are the truth boxes for each
                image in [tl_x, tl_y, br_x, br_y] format.
            gt_labels (list[Tensor]): Class indices corresponding to each box
            gt_bboxes_ignore (None | list[Tensor]): Specify which bounding
                boxes can be ignored when computing the loss.

        Returns:
            dict[str, Tensor]: A dictionary of loss components.
        """
        super(SingleStageDetector, self).forward_train(img, img_metas)
        x = self.extract_feat(img)
        losses = self.bbox_head.forward_train(x, img_metas, gt_bboxes,
                                              gt_labels, gt_bboxes_ignore)
        return losses

First, x = self.extract_feat(img) extracts the feature map. The backbone here is a ResNet, and only the feature map of its last stage is output. Execution then enters self.bbox_head.forward_train, that is, the forward_train of DETRHead.

DETRHead.forward_single()

Once inside DETRHead's forward_train, the following line computes the output of the model's forward pass:

    outs = self(x, img_metas)

At this point the program jumps to the forward function of DETRHead:

    def forward(self, feats, img_metas):
        """Forward function.
        """
        num_levels = len(feats)
        img_metas_list = [img_metas for _ in range(num_levels)]
        return multi_apply(self.forward_single, feats, img_metas_list)

multi_apply calls self.forward_single once per feature level. Since there is only one feature map here, forward_single actually runs only once.
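For readers who have not met multi_apply before, the sketch below shows how such a helper behaves. It is a re-implementation written from my understanding of the helper, not a copy of mmdet's source, and `forward_single` here is a hypothetical stand-in that just returns two strings.

```python
from functools import partial


def multi_apply(func, *args, **kwargs):
    """Apply func to each group of arguments and regroup the outputs.

    If func returns a tuple (a, b), the result is ([a0, a1, ...], [b0, b1, ...]).
    """
    pfunc = partial(func, **kwargs) if kwargs else func
    map_results = map(pfunc, *args)
    return tuple(map(list, zip(*map_results)))


def forward_single(feat, img_metas):
    # Hypothetical stand-in: returns two outputs per feature level.
    return f'cls({feat})', f'bbox({feat})'


feats = ['p5']            # DETR uses a single feature level
img_metas_list = [None]   # one entry per level
cls_scores, bbox_preds = multi_apply(forward_single, feats, img_metas_list)
print(cls_scores, bbox_preds)
```

Each output is a list with one entry per feature level, which is why the later code receives lists even though there is only one level.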

Execution then enters forward_single.

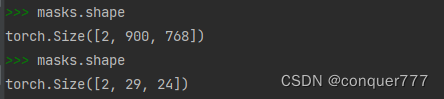

Generating the mask

The code is as follows:

    batch_size = x.size(0)
    input_img_h, input_img_w = img_metas[0]['batch_input_shape']
    masks = x.new_ones((batch_size, input_img_h, input_img_w))
    for img_id in range(batch_size):
        img_h, img_w, _ = img_metas[img_id]['img_shape']
        masks[img_id, :img_h, :img_w] = 0

    x = self.input_proj(x)
    # interpolate masks to have the same spatial shape with x
    masks = F.interpolate(
        masks.unsqueeze(1), size=x.shape[-2:]).to(torch.bool).squeeze(1)

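The mask exists because images are padded to a common size within a batch. The padded region should be ignored when multi-head attention is computed later, so a mask is needed to cover it. Its shape is [batch, input_img_h, input_img_w], where input_img_h and input_img_w are the padded size, and img_h and img_w are the size of each image before padding.

In the mask, 0 marks the valid region and 1 marks the padded region.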
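The mask construction is easy to try in isolation. The sketch below builds the mask for a hypothetical batch of two images padded to a 6 x 8 canvas; the `img_metas` contents are made up, and plain `torch.ones` stands in for `x.new_ones`.

```python
import torch

# Hypothetical batch: two images padded to a common 6 x 8 canvas.
img_metas = [
    {'batch_input_shape': (6, 8), 'img_shape': (6, 5, 3)},
    {'batch_input_shape': (6, 8), 'img_shape': (4, 8, 3)},
]
batch_size = len(img_metas)
input_img_h, input_img_w = img_metas[0]['batch_input_shape']

# Start with everything marked as padding (1) ...
masks = torch.ones((batch_size, input_img_h, input_img_w))
for img_id in range(batch_size):
    img_h, img_w, _ = img_metas[img_id]['img_shape']
    # ... then clear the region covered by the real image (0).
    masks[img_id, :img_h, :img_w] = 0

print(masks[0])  # the last three columns stay 1 (padding on the right)
print(masks[1])  # the last two rows stay 1 (padding at the bottom)
```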

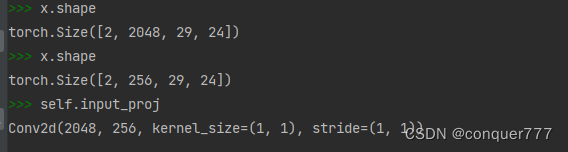

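    x = self.input_proj(x)

self.input_proj is a 1×1 convolution with 2048 input channels and 256 output channels; this line changes the number of channels of x.

Note that at this point the mask still has the size of the padded input image, whereas the feature map has been downsampled by ResNet-50. The mask therefore has to be downsampled with F.interpolate to the same spatial size as the feature map:

    # interpolate masks to have the same spatial shape with x
    masks = F.interpolate(
        masks.unsqueeze(1), size=x.shape[-2:]).to(torch.bool).squeeze(1)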
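The two steps can be checked on a toy example. The sizes below (a 64 x 64 padded input and a 2 x 2 feature map) are assumptions chosen to keep the output readable, and the `nn.Conv2d` is only a stand-in for the configured input_proj layer.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Hypothetical sizes: a 64 x 64 padded input and a stride-32 feature map.
x = torch.randn(1, 2048, 2, 2)
masks = torch.ones(1, 64, 64)
masks[0, :32, :64] = 0  # only the top half of the canvas is real image

input_proj = nn.Conv2d(2048, 256, kernel_size=1)  # 1x1 conv: 2048 -> 256 channels
x = input_proj(x)

# F.interpolate expects [N, C, H, W], hence the unsqueeze/squeeze pair.
# The default mode is nearest-neighbour, so the values stay exactly 0 or 1.
masks = F.interpolate(
    masks.unsqueeze(1), size=x.shape[-2:]).to(torch.bool).squeeze(1)

print(x.shape)  # torch.Size([1, 256, 2, 2])
print(masks)    # top row False (valid), bottom row True (padding)
```

After the cast to bool, True means "padded, ignore this position", which matches the convention of key padding masks in attention.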

Generating the positional encoding

In DETRHead's forward_single(), the following line generates the positional encoding:

    pos_embed = self.positional_encoding(masks)

SinePositionalEncoding is used here. Its code is in mmdet/models/utils/positional_encoding.py and is not covered in detail. The resulting pos_embed has shape [bs, embed_dim, h, w].
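Although the notes skip the details, a rough sketch helps to see why the output has embed_dim channels. The function below follows the general sine-cosine scheme (half of the channels encode y, half encode x). It is a simplified illustration, not mmdet's exact implementation; the function name, `num_feats=128` and `temperature=10000` are assumptions.

```python
import math
import torch


def sine_positional_encoding(mask, num_feats=128, temperature=10000):
    """mask: [bs, h, w] bool, True = padding. Returns [bs, 2 * num_feats, h, w]."""
    not_mask = (~mask).float()
    # A running count of valid pixels gives each position its (y, x) coordinate.
    y_embed = not_mask.cumsum(1)
    x_embed = not_mask.cumsum(2)
    # Normalise the coordinates to the range [0, 2*pi].
    eps = 1e-6
    y_embed = y_embed / (y_embed[:, -1:, :] + eps) * 2 * math.pi
    x_embed = x_embed / (x_embed[:, :, -1:] + eps) * 2 * math.pi

    # One frequency per pair of channels.
    exponents = torch.tensor(
        [2 * (i // 2) / num_feats for i in range(num_feats)])
    dim_t = temperature ** exponents
    pos_x = x_embed[..., None] / dim_t  # [bs, h, w, num_feats]
    pos_y = y_embed[..., None] / dim_t
    # Interleave sin (even channels) and cos (odd channels).
    pos_x = torch.stack(
        (pos_x[..., 0::2].sin(), pos_x[..., 1::2].cos()), dim=4).flatten(3)
    pos_y = torch.stack(
        (pos_y[..., 0::2].sin(), pos_y[..., 1::2].cos()), dim=4).flatten(3)
    return torch.cat((pos_y, pos_x), dim=3).permute(0, 3, 1, 2)


mask = torch.zeros(2, 5, 7, dtype=torch.bool)
mask[1, :, 5:] = True  # the second image has two padded columns
pos_embed = sine_positional_encoding(mask)
print(pos_embed.shape)  # torch.Size([2, 256, 5, 7])
```

The encoding depends only on the mask, not on the feature values, which is why the head passes masks rather than x.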

Entering the transformer

In DETRHead's forward_single(), the following lines enter the transformer:

    # outs_dec: [nb_dec, bs, num_query, embed_dim]
    outs_dec, _ = self.transformer(x, masks, self.query_embedding.weight,
                                   pos_embed)

Execution enters the forward of the Transformer class in mmdet/models/utils/transformer.py. It first does some preparation, flattening the feature map and the other inputs, before passing them to the transformer:

    bs, c, h, w = x.shape
    # use `view` instead of `flatten` for dynamically exporting to ONNX
    # flatten the feature map
    x = x.view(bs, c, -1).permute(2, 0, 1)  # [bs, c, h, w] -> [h*w, bs, c]
    # flatten pos_embed as well: [bs, c, h, w] -> [h*w, bs, c]
    pos_embed = pos_embed.view(bs, c, -1).permute(2, 0, 1)
    # repeat query_embed once per image in the batch
    query_embed = query_embed.unsqueeze(1).repeat(
        1, bs, 1)  # [num_query, dim] -> [num_query, bs, dim]
    # flatten the mask
    mask = mask.view(bs, -1)  # [bs, h, w] -> [bs, h*w]
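The reshapes above are easy to verify with random tensors. In this sketch the sizes are arbitrary and `query_embed` is a random stand-in for query_embedding.weight; the two asserts check which pixel each token corresponds to and that the flattening can be undone.

```python
import torch

bs, c, h, w, num_query = 2, 256, 3, 4, 100
x = torch.randn(bs, c, h, w)
pos_embed = torch.randn(bs, c, h, w)
mask = torch.zeros(bs, h, w, dtype=torch.bool)
query_embed = torch.randn(num_query, c)  # stands in for query_embedding.weight

x_flat = x.view(bs, c, -1).permute(2, 0, 1)            # [h*w, bs, c]
pos_flat = pos_embed.view(bs, c, -1).permute(2, 0, 1)  # [h*w, bs, c]
query = query_embed.unsqueeze(1).repeat(1, bs, 1)      # [num_query, bs, c]
mask_flat = mask.view(bs, -1)                          # [bs, h*w]

print(x_flat.shape, pos_flat.shape, query.shape, mask_flat.shape)

# Token k of image b is the pixel at row k // w, column k % w.
k = 7
assert torch.equal(x_flat[k, 1], x[1, :, k // w, k % w])

# The inverse reshape, used later to turn `memory` back into a feature map.
restored = x_flat.permute(1, 2, 0).reshape(bs, c, h, w)
assert torch.equal(restored, x)
```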

encoder

The following code enters the encoder:

    memory = self.encoder(
        query=x,
        key=None,
        value=None,
        query_pos=pos_embed,
        query_key_padding_mask=mask)

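In the encoder, Q is the flattened feature map and query_pos=pos_embed; K and V are both None. When the computation reaches mmcv/cnn/bricks/transformer.py, the code contains the line

    temp_key = temp_value = query

In other words, for self-attention in the encoder, Q, K and V are all the same: the flattened feature map.

The memory returned by the encoder is simply the feature map after encoding. Its shape is the same as that of the input query: [h*w, bs, embed_dims].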
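A single self-attention step with a padding mask can be sketched with torch.nn.MultiheadAttention, used here as a stand-in for mmcv's attention module. Adding pos_embed to the query and key but not to the value reflects my understanding of how query_pos is applied, so treat that detail as an assumption; the sizes and the mask are made up.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
hw, bs, embed_dims = 6, 2, 256
x = torch.randn(hw, bs, embed_dims)          # flattened feature map
pos_embed = torch.randn(hw, bs, embed_dims)  # flattened positional encoding
mask = torch.zeros(bs, hw, dtype=torch.bool)
mask[1, 4:] = True                           # last two tokens of image 1 are padding

attn = nn.MultiheadAttention(embed_dims, num_heads=8)

# Self-attention: key and value come from the query itself.
q = k = x + pos_embed
memory, weights = attn(q, k, x, key_padding_mask=mask)

print(memory.shape)   # same shape as the input query: [hw, bs, embed_dims]
print(weights.shape)  # [bs, hw, hw], averaged over the heads
```

The attention weights that point at the padded tokens of the second image are zero, which is exactly what the mask is for.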

decoder

    target = torch.zeros_like(query_embed)
    # out_dec: [num_layers, num_query, bs, dim]
    out_dec = self.decoder(
        query=target,
        key=memory,
        value=memory,
        key_pos=pos_embed,
        query_pos=query_embed,
        key_padding_mask=mask)
    out_dec = out_dec.transpose(1, 2)
    memory = memory.permute(1, 2, 0).reshape(bs, c, h, w)

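An all-zero target is used as the initial query because query and query_pos are added together later inside multi-head attention, so the query embedding is effectively added back in at that point.

The out_dec returned by the decoder has shape [6, 100, 2, 256], that is [num_dec_layers, num_query, bs, embed_dims]. The transpose(1, 2) that follows turns it into [num_dec_layers, bs, num_query, embed_dims] before it is returned.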
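Both points can be checked in a few lines. The tensors below are random stand-ins with the sizes quoted in these notes; no real decoder is run.

```python
import torch

num_dec_layers, num_query, bs, embed_dims = 6, 100, 2, 256
query_embed = torch.randn(num_query, bs, embed_dims)

# The decoder input starts at zero ...
target = torch.zeros_like(query_embed)
# ... so once query_pos is added, the first layer sees the query embedding itself.
assert torch.equal(target + query_embed, query_embed)

# Random stand-in for the stacked outputs of all decoder layers.
out_dec = torch.randn(num_dec_layers, num_query, bs, embed_dims)
print(out_dec.shape)  # torch.Size([6, 100, 2, 256])
out_dec = out_dec.transpose(1, 2)
print(out_dec.shape)  # torch.Size([6, 2, 100, 256])
```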

The complete forward of the transformer looks like this:

    bs, c, h, w = x.shape
    # use `view` instead of `flatten` for dynamically exporting to ONNX
    x = x.view(bs, c, -1).permute(2, 0, 1)  # [bs, c, h, w] -> [h*w, bs, c]
    pos_embed = pos_embed.view(bs, c, -1).permute(2, 0, 1)
    query_embed = query_embed.unsqueeze(1).repeat(
        1, bs, 1)  # [num_query, dim] -> [num_query, bs, dim]
    mask = mask.view(bs, -1)  # [bs, h, w] -> [bs, h*w]
    memory = self.encoder(
        query=x,
        key=None,
        value=None,
        query_pos=pos_embed,
        query_key_padding_mask=mask)
    target = torch.zeros_like(query_embed)
    # out_dec: [num_layers, num_query, bs, dim]
    out_dec = self.decoder(
        query=target,
        key=memory,
        value=memory,
        key_pos=pos_embed,
        query_pos=query_embed,
        key_padding_mask=mask)
    out_dec = out_dec.transpose(1, 2)
    memory = memory.permute(1, 2, 0).reshape(bs, c, h, w)
    return out_dec, memory

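The first part prepares the inputs, which then pass through the encoder and the decoder. The returned out_dec is the updated queries, and memory is the feature map after the encoder.

After the transformer, execution returns to DETRHead's forward_single(), where the returned outs_dec is used for the classification and regression predictions.

Prediction

In DETRHead's forward_single(), the predictions are computed by: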

    outs_dec, _ = self.transformer(x, masks, self.query_embedding.weight,
                                   pos_embed)

    all_cls_scores = self.fc_cls(outs_dec)
    all_bbox_preds = self.fc_reg(self.activate(
        self.reg_ffn(outs_dec))).sigmoid()

The resulting all_cls_scores and all_bbox_preds have shapes [num_layer, bs, num_query, 81] and [num_layer, bs, num_query, 4] respectively.
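The shapes follow directly from applying linear layers to the last dimension. In the sketch below, the small MLP is a hypothetical stand-in for reg_ffn plus activate, and reading 81 as 80 classes plus one background slot is my assumption; only the layer names and the output sizes come from the code above.

```python
import torch
import torch.nn as nn

num_layer, bs, num_query, embed_dims = 6, 2, 100, 256
cls_out_channels = 81  # assumption: 80 classes + 1 background slot

fc_cls = nn.Linear(embed_dims, cls_out_channels)
# Hypothetical stand-in for reg_ffn + activate: a small per-query MLP.
reg_ffn = nn.Sequential(
    nn.Linear(embed_dims, embed_dims), nn.ReLU(),
    nn.Linear(embed_dims, embed_dims), nn.ReLU())
fc_reg = nn.Linear(embed_dims, 4)

outs_dec = torch.randn(num_layer, bs, num_query, embed_dims)
all_cls_scores = fc_cls(outs_dec)                     # raw class logits
all_bbox_preds = fc_reg(reg_ffn(outs_dec)).sigmoid()  # values squashed into (0, 1)

print(all_cls_scores.shape)  # torch.Size([6, 2, 100, 81])
print(all_bbox_preds.shape)  # torch.Size([6, 2, 100, 4])
```

Because nn.Linear acts on the last dimension only, the same heads are applied to the output of every decoder layer at once.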

Loss

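With the forward predictions in hand, the next steps are Hungarian matching and the loss computation.

The call chain for the loss is as follows. Execution first enters DETRHead's loss(), which uses multi_apply to call loss_single for each decoder layer. loss_single calls get_targets() to obtain the targets for the whole batch, get_targets() calls _get_target_single() for each image in the batch, and _get_target_single() performs the Hungarian matching.

We go straight to _get_target_single(). It starts with the Hungarian matching, so let us look at that code first. It lives in mmdet/core/bbox/assigners/hungarian_assigner.py; my notes are written as comments in the code.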

    def assign(self,
               bbox_pred,
               cls_pred,
               gt_bboxes,
               gt_labels,
               img_meta,
               gt_bboxes_ignore=None,
               eps=1e-7):
        assert gt_bboxes_ignore is None, \
            'Only case when gt_bboxes_ignore is None is supported.'
        num_gts, num_bboxes = gt_bboxes.size(0), bbox_pred.size(0)

        # 1. assign -1 by default
        assigned_gt_inds = bbox_pred.new_full((num_bboxes, ),
                                              -1,
                                              dtype=torch.long)
        assigned_labels = bbox_pred.new_full((num_bboxes, ),
                                             -1,
                                             dtype=torch.long)
        if num_gts == 0 or num_bboxes == 0:
            # No ground truth or boxes, return empty assignment
            if num_gts == 0:
                # No ground truth, assign all to background
                assigned_gt_inds[:] = 0
            return AssignResult(
                num_gts, assigned_gt_inds, None, labels=assigned_labels)
        img_h, img_w, _ = img_meta['img_shape']
        factor = gt_bboxes.new_tensor([img_w, img_h, img_w,
                                       img_h]).unsqueeze(0)

        # 2. compute the weighted costs
        # classification and bboxcost.
        # cls_pred.shape: [100, 81]
        # gt_labels.shape: [5]
        cls_cost = self.cls_cost(cls_pred, gt_labels)
        # regression L1 cost
        # bbox_pred lies in [0, 1], so gt_bboxes must be normalised to
        # [0, 1] before computing reg_cost
        normalize_gt_bboxes = gt_bboxes / factor
        reg_cost = self.reg_cost(bbox_pred, normalize_gt_bboxes)
        # regression iou cost, defaultly giou is used in official DETR.
        # scale the predictions back to image size before computing iou_cost
        bboxes = bbox_cxcywh_to_xyxy(bbox_pred) * factor
        iou_cost = self.iou_cost(bboxes, gt_bboxes)
        # weighted sum of above three costs
        # cost.shape: [100, 5], i.e. 100 predicted boxes against 5 gt boxes
        cost = cls_cost + reg_cost + iou_cost

        # 3. do Hungarian matching on CPU using linear_sum_assignment
        cost = cost.detach().cpu()
        if linear_sum_assignment is None:
            raise ImportError('Please run "pip install scipy" '
                              'to install scipy first.')
        matched_row_inds, matched_col_inds = linear_sum_assignment(cost)
        matched_row_inds = torch.from_numpy(matched_row_inds).to(
            bbox_pred.device)
        matched_col_inds = torch.from_numpy(matched_col_inds).to(
            bbox_pred.device)

        # 4. assign backgrounds and foregrounds
        # assign all indices to backgrounds first
        assigned_gt_inds[:] = 0
        # assign foregrounds based on matching results
        assigned_gt_inds[matched_row_inds] = matched_col_inds + 1
        assigned_labels[matched_row_inds] = gt_labels[matched_col_inds]
        return AssignResult(
            num_gts, assigned_gt_inds, None, labels=assigned_labels)
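To see what the matching step produces, here is a tiny pure-Python sketch. It deliberately swaps the Hungarian algorithm for an exhaustive search over all one-to-one assignments, which reaches the same minimum total cost but is only feasible for toy sizes. The helper `brute_force_match` and the cost values are made up for illustration.

```python
from itertools import permutations


def brute_force_match(cost):
    """cost[i][j]: cost of assigning prediction i to ground-truth box j.

    Returns (rows, cols): prediction rows[k] is matched to gt cols[k].
    Exhaustive search; only practical for tiny examples.
    """
    num_preds, num_gts = len(cost), len(cost[0])
    best_rows = min(
        permutations(range(num_preds), num_gts),
        key=lambda rows: sum(cost[r][j] for j, r in enumerate(rows)))
    return list(best_rows), list(range(num_gts))


# 4 predictions x 2 ground-truth boxes (made-up costs).
cost = [[0.9, 0.2],
        [0.1, 0.8],
        [0.5, 0.6],
        [0.7, 0.3]]
matched_row_inds, matched_col_inds = brute_force_match(cost)

# Same bookkeeping as in assign(): 0 = background, k + 1 = matched to gt k.
assigned_gt_inds = [0] * len(cost)
for row, col in zip(matched_row_inds, matched_col_inds):
    assigned_gt_inds[row] = col + 1

print(matched_row_inds, matched_col_inds)  # [1, 0] [0, 1]
print(assigned_gt_inds)                    # [2, 1, 0, 0]
```

Each gt box gets exactly one prediction, and every unmatched prediction is left as background, which is why most of the 100 queries end up as negatives.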

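Back in _get_target_single(), the positive and negative samples have now been matched, and the matching result only needs to be organised and returned. _get_target_single() returns a tuple **(labels, label_weights, bbox_targets, bbox_weights, pos_inds, neg_inds)**

labels: the target class for each of the 100 predicted boxes after matching; most of them are background.

label_weights: the weight of each label, all 1 here.

bbox_targets: the regression target for each predicted box after matching. Only the rows of the predictions matched to a gt box (as many rows as there are gt boxes) hold the corresponding gt box; all other rows are 0.

bbox_weights: the weight of each box, 1 for boxes matched to a gt and 0 for all others.

pos_inds holds the indices of the positive samples and neg_inds the indices of the negative samples.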
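The six return values can be reproduced on a toy case. Everything below is made up for illustration (6 queries, two matches, the label and box values). Using index num_classes as the background label is my understanding of mmdet's convention, so treat it as an assumption.

```python
import torch

num_query, num_classes = 6, 80
pos_inds = torch.tensor([1, 4])                      # queries matched to a gt box
pos_gt_labels = torch.tensor([17, 3])                # labels of the matched gt boxes
pos_gt_bboxes = torch.tensor([[0.5, 0.5, 0.2, 0.4],  # normalised (cx, cy, w, h)
                              [0.3, 0.6, 0.1, 0.1]])

# Every query starts as background with an all-zero box target.
labels = torch.full((num_query,), num_classes, dtype=torch.long)
label_weights = torch.ones(num_query)
bbox_targets = torch.zeros(num_query, 4)
bbox_weights = torch.zeros(num_query, 4)

# Only the matched queries receive a real label, box target and box weight.
labels[pos_inds] = pos_gt_labels
bbox_targets[pos_inds] = pos_gt_bboxes
bbox_weights[pos_inds] = 1.0

# Everything that is not a positive sample is a negative one.
is_pos = torch.zeros(num_query, dtype=torch.bool)
is_pos[pos_inds] = True
neg_inds = torch.nonzero(~is_pos).squeeze(1)

print(labels.tolist())    # [80, 17, 80, 80, 3, 80]
print(neg_inds.tolist())  # [0, 2, 3, 5]
```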

After _get_target_single() has run batch_size times, execution returns to get_targets(), which passes the labels and boxes of the whole batch back to loss_single().

    def loss_single(self,
                    cls_scores,
                    bbox_preds,
                    gt_bboxes_list,
                    gt_labels_list,
                    img_metas,
                    gt_bboxes_ignore_list=None):
        num_imgs = cls_scores.size(0)
        cls_scores_list = [cls_scores[i] for i in range(num_imgs)]
        bbox_preds_list = [bbox_preds[i] for i in range(num_imgs)]
        cls_reg_targets = self.get_targets(cls_scores_list, bbox_preds_list,
                                           gt_bboxes_list, gt_labels_list,
                                           img_metas, gt_bboxes_ignore_list)
        (labels_list, label_weights_list, bbox_targets_list, bbox_weights_list,
         num_total_pos, num_total_neg) = cls_reg_targets
        # concatenate the results of all images in the batch
        labels = torch.cat(labels_list, 0)  # [bs*num_query]
        label_weights = torch.cat(label_weights_list, 0)  # [bs*num_query]
        bbox_targets = torch.cat(bbox_targets_list, 0)  # [bs*num_query, 4]
        bbox_weights = torch.cat(bbox_weights_list, 0)  # [bs*num_query, 4]

        # classification loss
        # self.cls_out_channels: 81
        cls_scores = cls_scores.reshape(-1, self.cls_out_channels)  # [bs*num_query, 81]
        # construct weighted avg_factor to match with the official DETR repo
        cls_avg_factor = num_total_pos * 1.0 + \
            num_total_neg * self.bg_cls_weight
        if self.sync_cls_avg_factor:
            cls_avg_factor = reduce_mean(
                cls_scores.new_tensor([cls_avg_factor]))
        # role of cls_avg_factor: average factor that is used to average the loss
        cls_avg_factor = max(cls_avg_factor, 1)

        # self.loss_cls: CrossEntropyLoss()
        loss_cls = self.loss_cls(
            cls_scores, labels, label_weights, avg_factor=cls_avg_factor)

        # Compute the average number of gt boxes across all gpus, for
        # normalization purposes
        num_total_pos = loss_cls.new_tensor([num_total_pos])
        num_total_pos = torch.clamp(reduce_mean(num_total_pos), min=1).item()

        # construct factors used for rescale bboxes
        # compute the scale factor of each image in the batch
        factors = []
        for img_meta, bbox_pred in zip(img_metas, bbox_preds):
            img_h, img_w, _ = img_meta['img_shape']
            factor = bbox_pred.new_tensor([img_w, img_h, img_w,
                                           img_h]).unsqueeze(0).repeat(
                                               bbox_pred.size(0), 1)
            factors.append(factor)
        factors = torch.cat(factors, 0)  # [bs*num_query, 4]

        # DETR regress the relative position of boxes (cxcywh) in the image,
        # thus the learning target is normalized by the image size. So here
        # we need to re-scale them for calculating IoU loss
        # the IoU loss is computed at image scale, with boxes in xyxy format
        bbox_preds = bbox_preds.reshape(-1, 4)
        bboxes = bbox_cxcywh_to_xyxy(bbox_preds) * factors
        bboxes_gt = bbox_cxcywh_to_xyxy(bbox_targets) * factors

        # regression IoU loss, defaultly GIoU loss
        loss_iou = self.loss_iou(
            bboxes, bboxes_gt, bbox_weights, avg_factor=num_total_pos)

        # regression L1 loss
        # the L1 loss is computed at the normalised [0, 1] scale,
        # with boxes in (cx, cy, w, h) format
        loss_bbox = self.loss_bbox(
            bbox_preds, bbox_targets, bbox_weights, avg_factor=num_total_pos)
        return loss_cls, loss_bbox, loss_iou
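The two box formats used in loss_single() are worth a quick numeric check. `cxcywh_to_xyxy` below is my own small re-implementation for illustration, not mmdet's bbox_cxcywh_to_xyxy, and the image size and box values are made up.

```python
import torch


def cxcywh_to_xyxy(bbox):
    """Convert (cx, cy, w, h) boxes to (x1, y1, x2, y2)."""
    cx, cy, w, h = bbox.unbind(-1)
    return torch.stack(
        (cx - 0.5 * w, cy - 0.5 * h, cx + 0.5 * w, cy + 0.5 * h), dim=-1)


img_h, img_w = 200, 100
bbox_pred = torch.tensor([[0.5, 0.5, 0.2, 0.4]])    # normalised prediction
bbox_target = torch.tensor([[0.5, 0.5, 0.4, 0.4]])  # normalised target
factor = torch.tensor([[img_w, img_h, img_w, img_h]], dtype=torch.float32)

# IoU-type losses: absolute (x1, y1, x2, y2) boxes in pixels.
bboxes = cxcywh_to_xyxy(bbox_pred) * factor
bboxes_gt = cxcywh_to_xyxy(bbox_target) * factor
print(bboxes)     # approximately [[40, 60, 60, 140]]
print(bboxes_gt)  # approximately [[30, 60, 70, 140]]

# L1 loss: taken directly on the normalised (cx, cy, w, h) values.
l1 = (bbox_pred - bbox_target).abs().sum()
print(l1)         # approximately 0.2, only the width differs
```

Keeping the L1 term on normalised coordinates makes it independent of image size, while the IoU term needs real box corners.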

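loss_single() runs 6 times, once for each decoder layer. The loss of the last decoder layer is stored under the plain keys (loss_cls, loss_bbox, loss_iou), and the losses of the other five layers are stored as auxiliary losses under the d{i}. prefix. As far as I can tell, mmdet sums every entry of the loss dict whose key contains 'loss' when it computes the total, so the auxiliary losses are back-propagated as well, not only the loss of the last layer.

Finally, back in the loss function, the losses are collected and returned as loss_dict: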

    losses_cls, losses_bbox, losses_iou = multi_apply(
        self.loss_single, all_cls_scores, all_bbox_preds,
        all_gt_bboxes_list, all_gt_labels_list, img_metas_list,
        all_gt_bboxes_ignore_list)

    loss_dict = dict()
    # loss from the last decoder layer
    loss_dict['loss_cls'] = losses_cls[-1]
    loss_dict['loss_bbox'] = losses_bbox[-1]
    loss_dict['loss_iou'] = losses_iou[-1]
    # loss from other decoder layers
    num_dec_layer = 0
    for loss_cls_i, loss_bbox_i, loss_iou_i in zip(losses_cls[:-1],
                                                   losses_bbox[:-1],
                                                   losses_iou[:-1]):
        loss_dict[f'd{num_dec_layer}.loss_cls'] = loss_cls_i
        loss_dict[f'd{num_dec_layer}.loss_bbox'] = loss_bbox_i
        loss_dict[f'd{num_dec_layer}.loss_iou'] = loss_iou_i
        num_dec_layer += 1
    return loss_dict
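The key layout of loss_dict is easier to see with plain numbers. The sketch below uses made-up losses for a 3-layer decoder (the real model has 6). The final sum rests on an assumption about the training loop, namely that it adds up every entry whose key contains 'loss'.

```python
# Made-up per-layer losses for a 3-layer decoder (the real model has 6).
losses_cls = [1.2, 0.9, 0.7]
losses_bbox = [0.8, 0.6, 0.5]
losses_iou = [0.5, 0.4, 0.3]

# The last decoder layer gets the plain keys.
loss_dict = {
    'loss_cls': losses_cls[-1],
    'loss_bbox': losses_bbox[-1],
    'loss_iou': losses_iou[-1],
}
# The earlier layers get a d{i}. prefix; enumerate() replaces the manual counter.
for i, (cls_i, bbox_i, iou_i) in enumerate(
        zip(losses_cls[:-1], losses_bbox[:-1], losses_iou[:-1])):
    loss_dict[f'd{i}.loss_cls'] = cls_i
    loss_dict[f'd{i}.loss_bbox'] = bbox_i
    loss_dict[f'd{i}.loss_iou'] = iou_i

print(sorted(loss_dict))
# Assumption: the trainer sums every entry whose key contains 'loss'.
total = sum(v for k, v in loss_dict.items() if 'loss' in k)
print(round(total, 2))
```

With N decoder layers the dict has 3 * N entries, and the prefixed keys are what show up in the training log as d0.loss_cls, d1.loss_cls, and so on.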

Once the loss has been computed, what follows is back-propagation, the parameter update, and then the next forward pass.