Deep Dream: Peeking Inside a Neural Network Model

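We usually train neural network models on large amounts of labeled data. Take an image-recognition model as an example: it is typically built from many stacked convolutional layers, interleaved with pooling and activation operations, so every image passes through many layers of "processing" between the input layer and the output layer. A trained model extracts features from the image layer by layer, and the final high-level features decide which class the image belongs to. Even when the model performs well, we do not know what it has actually learned from the data. We do not know which patterns in the image it detected that led to the correct classification, or whether it reached the correct classification through the right patterns at all. Looking inside the model helps us understand and analyze it better.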

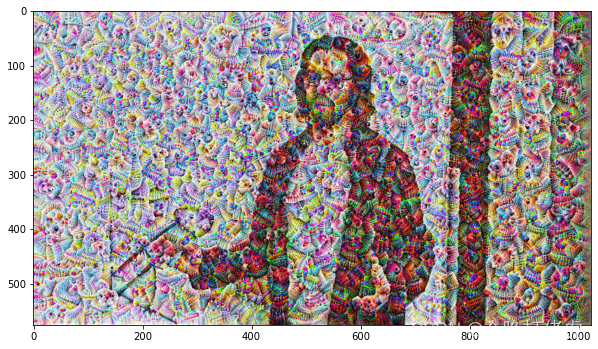

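Deep Dream lets us visualize the internal patterns a neural network has learned. We feed an arbitrary image into the network, pick the output (activation) of some layer, and compute the gradient of that activation with respect to the input image. We then use the gradient to modify the image so that it strengthens whatever the network detects, amplifying those patterns. Each layer of the network processes features at a different level of abstraction, so the final visualization depends on which layer we choose to enhance. For example, lower layers tend to produce strokes or simple ornament-like patterns, because they are sensitive to basic features such as edges and their orientations. If we choose deeper layers, which respond to more complex features in the image, complex patterns or even whole objects tend to appear.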
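The NumPy sketch below makes "use the gradient to modify the image" concrete. It assumes a hypothetical one-layer ReLU "network" in place of InceptionV3; the weight matrix `W` and the helper names are illustrative, not part of the original code. Like `_gradient_ascent` in the `DeepDreamer` class, it does four things:

- differentiates the mean activation with respect to the input, not the weights;
- normalizes the gradient by its standard deviation;
- steps uphill;
- clips the result to [-1, 1].

```python
import numpy as np

def toy_loss_and_grad(x, W):
    """Loss = mean(ReLU(W @ x)) for a hypothetical one-layer 'network', plus d(loss)/dx."""
    pre = W @ x                               # pre-activations of the toy layer
    loss = np.maximum(pre, 0.0).mean()        # mean activation, like _calculate_loss
    # Chain rule: only units with pre > 0 pass gradient back through the ReLU
    grad = W.T @ (pre > 0).astype(float) / pre.size
    return loss, grad

def gradient_ascent(x, W, steps=20, step_size=0.01):
    losses = []
    for _ in range(steps):
        loss, grad = toy_loss_and_grad(x, W)
        losses.append(loss)
        grad = grad / (grad.std() + 1e-8)             # normalize the gradient scale
        x = np.clip(x + grad * step_size, -1.0, 1.0)  # step uphill, keep the [-1, 1] range
    losses.append(toy_loss_and_grad(x, W)[0])         # loss after the final step
    return x, losses

rng = np.random.default_rng(0)
W = rng.normal(size=(32, 64))           # hypothetical weights of the toy layer
x0 = rng.uniform(-0.5, 0.5, size=64)    # a flattened toy "image"
x, losses = gradient_ascent(x0, W)
print(f"mean activation: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

This toy objective is convex in `x`, and each clipped step moves every coordinate in the direction of its gradient, so the mean activation never decreases here. A real CNN offers no such guarantee, but the update rule is the same.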

InceptionV3

We use the InceptionV3 model as an example and visualize what it detects.

```python
import numpy as np
import tensorflow as tf
from tqdm.notebook import tqdm_notebook
from tensorflow.keras import Model
from tensorflow.keras.preprocessing.image import load_img, img_to_array
from tensorflow.keras.applications.inception_v3 import InceptionV3, preprocess_input
```

Load the model

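```python
# Local copy of the ImageNet weights without the classification head ("notop");
# passing weights="imagenet" instead downloads the same weights automatically.
inception = InceptionV3(weights="F:/Keras_Model_Weights/weights/inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5",
                        include_top=False)
inception.summary()
```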

```
Model: "inception_v3"
__________________________________________________________________________________________________
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_6 (InputLayer)            [(None, None, None,  0
__________________________________________________________________________________________________
conv2d_203 (Conv2D)             (None, None, None, 3 864         input_6[0][0]
__________________________________________________________________________________________________
batch_normalization_203 (BatchN (None, None, None, 3 96          conv2d_203[0][0]
__________________________________________________________________________________________________
... (omitted) ...
__________________________________________________________________________________________________
mixed9_1 (Concatenate)          (None, None, None, 7 0           activation_290[0][0]
                                                                 activation_291[0][0]
__________________________________________________________________________________________________
concatenate_1 (Concatenate)     (None, None, None, 7 0           activation_294[0][0]
                                                                 activation_295[0][0]
__________________________________________________________________________________________________
activation_296 (Activation)     (None, None, None, 1 0           batch_normalization_296[0][0]
__________________________________________________________________________________________________
mixed10 (Concatenate)           (None, None, None, 2 0           activation_288[0][0]
                                                                 mixed9_1[0][0]
                                                                 concatenate_1[0][0]
                                                                 activation_296[0][0]
==================================================================================================
Total params: 21,802,784
Trainable params: 21,768,352
Non-trainable params: 34,432
__________________________________________________________________________________________________
```

Deep Dream

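- `__init__`: stores the image scaling (octave) factors and builds a model that outputs the chosen layers
- `_calculate_loss`: computes the loss, i.e. the mean activation of the chosen layers
- `_gradient_ascent`: computes the gradient of the loss with respect to the image and updates the image, repeating for many steps to amplify the patterns
- `_deprocess`: post-processes the result back into a displayable uint8 image
- `_dream`: runs preprocessing, gradient ascent and post-processing at a single scale
- `dream`: resizes the enhanced image to several scales (octaves) and enhances it again at each scale in turn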
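When several layers are selected, their activations have different shapes, so a single mean cannot be taken over the raw list. A common fix, and the one the TensorFlow DeepDream tutorial uses, is to average each layer separately and sum the results. The NumPy sketch below illustrates this. The activation arrays are made-up placeholders, and `multi_layer_loss` is a hypothetical helper.

```python
import numpy as np

def multi_layer_loss(activations):
    """Sum of per-layer mean activations (a sketch of what _calculate_loss computes)."""
    if isinstance(activations, np.ndarray):    # a single layer arrives as one array
        activations = [activations]
    return sum(float(np.mean(a)) for a in activations)

# Hypothetical activations of two layers with different sizes and channel counts
act_a = np.ones((1, 8, 8, 4))          # mean 1.0
act_b = np.full((1, 4, 4, 16), 0.5)    # mean 0.5
print(multi_layer_loss([act_a, act_b]))  # 1.5

try:
    np.mean(np.stack([act_a, act_b]))  # averaging the raw list needs stacking, which fails
except ValueError as err:
    print("cannot stack:", err)
```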

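Why apply gradient ascent repeatedly at multiple scales?

Answer: applying gradient ascent at a single scale makes the result look noisy and low-resolution. To address this, the main method dream() applies gradient ascent at several scales (called octaves), and the output of each smaller scale becomes the input at the next, larger scale.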
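The octave resizing in `dream()` is plain arithmetic on the image size. This pure-Python sketch reproduces it for an assumed 400 x 600 image with the default `octave_scale=1.3` and factors -2 to 2; the function name `octave_shapes` is hypothetical. TensorFlow does the multiplication in float32, so a size right at a truncation boundary could come out one pixel different.

```python
def octave_shapes(base_shape, octave_scale=1.3, power_factors=(-2, -1, 0, 1, 2)):
    """Target (height, width) for each octave, mirroring the shape arithmetic in dream()."""
    h, w = base_shape
    shapes = []
    for factor in power_factors:
        s = octave_scale ** factor
        # int() truncates toward zero, like tf.cast(..., tf.int32) on positive values
        shapes.append((int(h * s), int(w * s)))
    return shapes

print(octave_shapes((400, 600)))
# [(236, 355), (307, 461), (400, 600), (520, 780), (676, 1014)]
```

The smallest octave is processed first, so patterns are laid down coarsely and then refined as the image grows.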

```python
class DeepDreamer:
    def __init__(self,
                 model=None,
                 octave_scale=1.3,
                 octave_power_factors=(-2, -1, 0, 1, 2),
                 layers=('mixed3', 'mixed5')):
        self.octave_scale = octave_scale                  # size ratio between neighbouring octaves
        self.octave_power_factors = octave_power_factors  # exponents applied to octave_scale
        self.layers = layers                              # layers whose activations are maximized
        self.base_model = model
        outputs = [self.base_model.get_layer(name).output for name in self.layers]
        # Feature-extraction model: image in, activations of the chosen layers out
        self.dreamer_model = Model(self.base_model.input, outputs)

    def _calculate_loss(self, image):
        image_tensor = tf.expand_dims(image, axis=0)      # add a batch dimension
        activations = self.dreamer_model(image_tensor)
        if not isinstance(activations, (list, tuple)):    # a single layer may come back as a bare tensor
            activations = [activations]
        # The layers have different shapes, so average each one separately and sum the means
        return tf.math.add_n([tf.math.reduce_mean(act) for act in activations])

    # Newer TensorFlow releases deprecate experimental_relax_shapes in favour of reduce_retracing=True
    @tf.function(experimental_relax_shapes=True)
    def _gradient_ascent(self, image, steps, step_size):
        loss = tf.constant(0.0)
        for _ in range(steps):                            # AutoGraph turns this into a graph loop
            with tf.GradientTape() as tape:
                tape.watch(image)                         # image is a plain tensor, so watch it explicitly
                loss = self._calculate_loss(image)
            gradients = tape.gradient(loss, image)
            gradients /= tf.math.reduce_std(gradients) + 1e-8  # normalize the gradient scale
            image = image + gradients * step_size         # ascend: increase the activations
            image = tf.clip_by_value(image, -1, 1)        # stay within InceptionV3's input range
        return loss, image

    def _deprocess(self, image):
        image = 255 * (image + 1.0) / 2.0                 # [-1, 1] -> [0, 255]
        image = tf.cast(image, tf.uint8)
        return np.array(image)

    def _dream(self, image, steps, step_size):
        image = preprocess_input(image)                   # InceptionV3 preprocessing: [0, 255] -> [-1, 1]
        image = tf.convert_to_tensor(image)
        # Passing tensors (not Python numbers) avoids retracing _gradient_ascent for new values
        step_size = tf.constant(step_size)
        steps = tf.constant(steps)
        loss, image = self._gradient_ascent(image, steps, step_size)
        return self._deprocess(image)

    def dream(self, image, steps=100, step_size=0.01):
        image = tf.constant(np.array(image))
        base_shape = tf.cast(tf.shape(image)[:-1], tf.float32)   # (height, width)
        for factor in tqdm_notebook(self.octave_power_factors):
            # Resize to this octave; the previous octave's output is the input here
            new_shape = tf.cast(base_shape * (self.octave_scale ** factor), tf.int32)
            image = tf.image.resize(image, new_shape).numpy()
            image = self._dream(image, steps, step_size)
        # Resize back to the original size and convert to a uint8 array
        image = tf.image.resize(image, tf.cast(base_shape, tf.int32))
        image = tf.image.convert_image_dtype(image / 255.0, dtype=tf.uint8)
        return np.array(image)
```
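The pre- and post-processing in `_dream` and `_deprocess` are inverse linear maps between pixel values in [0, 255] and InceptionV3's input range [-1, 1]; InceptionV3's `preprocess_input` computes `x / 127.5 - 1`. The NumPy sketch below reproduces that arithmetic; the helper names are hypothetical. Casting to uint8 truncates rather than rounds, so values are floored to whole grey levels, e.g. 127.5 comes back as 127.

```python
import numpy as np

def preprocess_tf_mode(x):
    """[0, 255] -> [-1, 1], the same arithmetic as InceptionV3's preprocess_input."""
    return x / 127.5 - 1.0

def deprocess(x):
    """[-1, 1] -> uint8 [0, 255], mirroring DeepDreamer._deprocess (truncating cast)."""
    return (255 * (x + 1.0) / 2.0).astype(np.uint8)

pixels = np.array([0.0, 127.5, 255.0])
scaled = preprocess_tf_mode(pixels)    # [-1.0, 0.0, 1.0]
restored = deprocess(scaled)           # [0, 127, 255]
print(scaled, restored)
```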

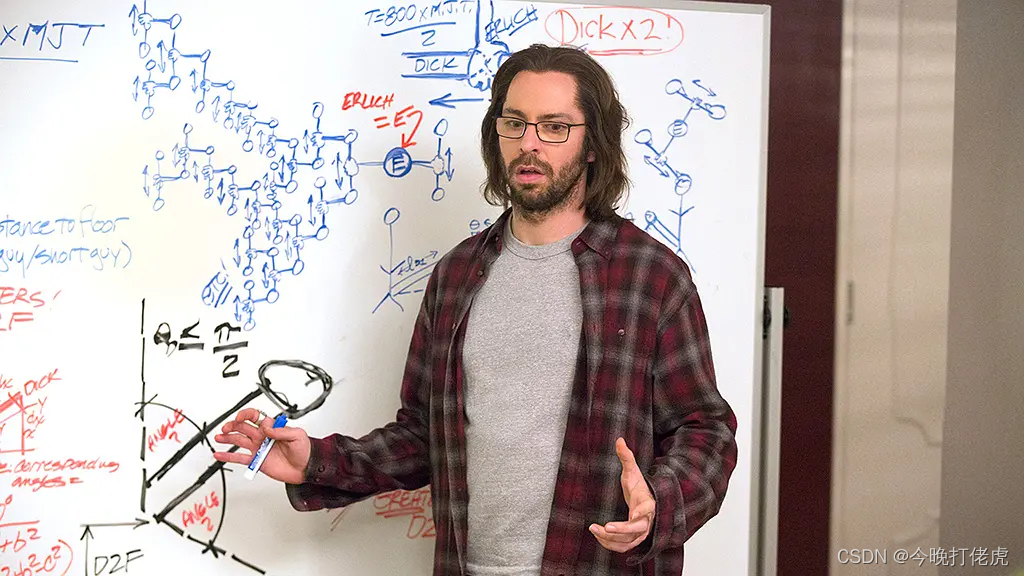

Load a test image

```python
import matplotlib.pyplot as plt

# Load the test image as a float32 array with values in [0, 255]
original_image = load_img('../images/p2277197426.png')
original_image = img_to_array(original_image)
plt.imshow(original_image / 255.)

# Enhance three layers of increasing depth over four octaves
deepdream = DeepDreamer(model=inception, layers=['mixed3', 'mixed5', 'mixed7'], octave_power_factors=[-2, -1, 0, 2])
dream_image = deepdream.dream(original_image, steps=100, step_size=0.025)

plt.figure(figsize=(10, 6))
plt.imshow(dream_image)
```