目录

2. 自定义隐藏层层数和每个隐藏层中的神经元个数,尝试找到最优超参数完成多分类。

4. 尝试基于MNIST手写数字识别数据集,设计合适的前馈神经网络进行实验,并取得95%以上的准确率。

深入研究鸢尾花数据集

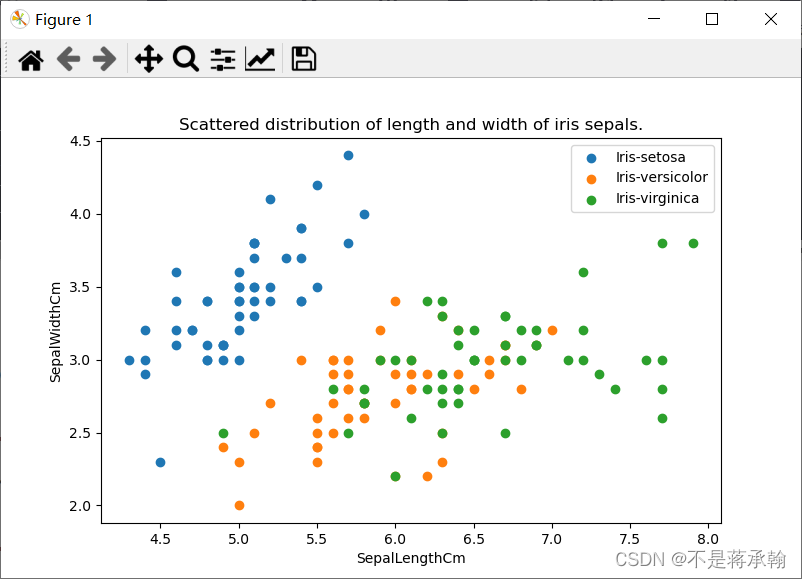

Iris 鸢尾花数据集内包含 3 类分别为山鸢尾(Iris-setosa)、变色鸢尾(Iris-versicolor)和维吉尼亚鸢尾(Iris-virginica),共 150 条记录,每类各 50 个数据,每条记录都有 4 项特征:花萼长度、花萼宽度、花瓣长度、花瓣宽度。

sepallength:萼片长度

sepalwidth:萼片宽度

petallength:花瓣长度

petalwidth:花瓣宽度

以上四个特征的单位都是厘米(cm)

画出数据集中150个数据的前两个特征的散点分布图

import pandas as pd

import matplotlib.pyplot as plt

# 导入数据集

df = pd.read_csv('Iris.csv', usecols=[1, 2, 3, 4, 5])

"""绘制训练集基本散点图,便于人工分析,观察数据集的线性可分性"""

# 表示绘制图形的画板尺寸为8*5

plt.figure(figsize=(8, 5))

# 散点图的x坐标、y坐标、标签

plt.scatter(df[:50]['SepalLengthCm'], df[:50]['SepalWidthCm'], label='Iris-setosa')

plt.scatter(df[50:100]['SepalLengthCm'], df[50:100]['SepalWidthCm'], label='Iris-versicolor')

plt.scatter(df[100:150]['SepalLengthCm'], df[100:150]['SepalWidthCm'], label='Iris-virginica')

plt.xlabel('SepalLengthCm')

plt.ylabel('SepalWidthCm')

# 添加标题 '鸢尾花萼片的长度与宽度的散点分布'

plt.title('Scattered distribution of length and width of iris sepals.')

# 显示标签

plt.legend()

plt.show()

4.5 实践:基于前馈神经网络完成鸢尾花分类

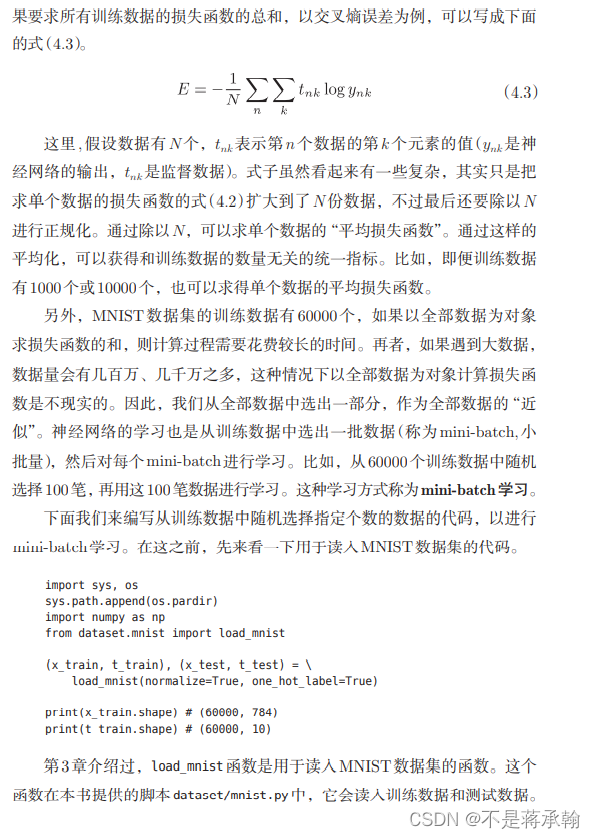

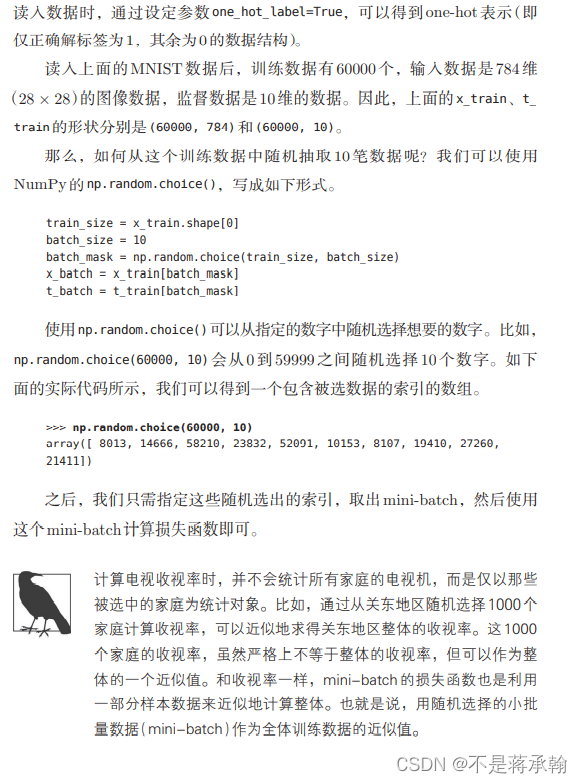

4.5.1 小批量梯度下降法

在梯度下降法中,目标函数是整个训练集上的风险函数,这种方式称为批量梯度下降法(Batch Gradient Descent,BGD)。 批量梯度下降法在每次迭代时需要计算每个样本上损失函数的梯度并求和。当训练集中的样本数量N很大时,空间复杂度比较高,每次迭代的计算开销也很大。

为了减少每次迭代的计算复杂度,我们可以在每次迭代时只采集一小部分样本,计算在这组样本上损失函数的梯度并更新参数,这种优化方式称为

小批量梯度下降法(Mini-Batch Gradient Descent,Mini-Batch GD)。

4.5.1.1 数据分组

为了小批量梯度下降法,我们需要对数据进行随机分组。目前,机器学习中通常做法是构建一个数据迭代器,每个迭代过程中从全部数据集中获取一批指定数量的数据。

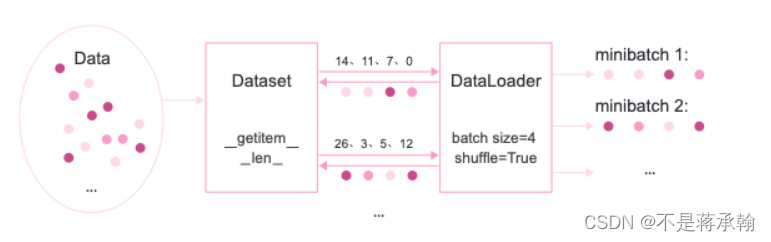

数据迭代器的实现原理如下图所示:

- 首先,将数据集封装为Dataset类,传入一组索引值,根据索引从数据集合中获取数据;

- 其次,构建DataLoader类,需要指定数据批量的大小和是否需要对数据进行乱序,通过该类即可批量获取数据。

4.5.2 数据处理

构造IrisDataset类进行数据读取,继承自torch.utils.data.Dataset类。torch.utils.data.Dataset是用来封装 Dataset的方法和行为的抽象类,通过一个索引获取指定的样本,同时对该样本进行数据处理。当继承torch.utils.data.Dataset来定义数据读取类时,实现如下方法:

__getitem__:根据给定索引获取数据集中指定样本,并对样本进行数据处理;__len__:返回数据集样本个数。

代码实现如下:

import numpy as np

import torch

import torch.utils.data as io

from dataset import load_data

class IrisDataset(io.Dataset):

def __init__(self, mode='train', num_train=120, num_dev=15):

super(IrisDataset, self).__init__()

# 调用第三章中的数据读取函数,其中不需要将标签转成one-hot类型

X, y = load_data(shuffle=True)

if mode == 'train':

self.X, self.y = X[:num_train], y[:num_train]

elif mode == 'dev':

self.X, self.y = X[num_train:num_train + num_dev], y[num_train:num_train + num_dev]

else:

self.X, self.y = X[num_train + num_dev:], y[num_train + num_dev:]

def __getitem__(self, idx):

return self.X[idx], self.y[idx]

def __len__(self):

return len(self.y)torch.random.manual_seed(12)

train_dataset = IrisDataset(mode='train')

dev_dataset = IrisDataset(mode='dev')

test_dataset = IrisDataset(mode='test')

# 打印训练集长度

print ("length of train set: ", len(train_dataset))length of train set: ?120

4.5.2.2 用DataLoader进行封装?

# 批量大小

batch_size = 16

# 加载数据

train_loader = io.DataLoader(train_dataset, batch_size=batch_size, shuffle=True)

dev_loader = io.DataLoader(dev_dataset, batch_size=batch_size)

test_loader = io.DataLoader(test_dataset, batch_size=batch_size)4.5.3 模型构建

构建一个简单的前馈神经网络进行鸢尾花分类实验。其中输入层神经元个数为4,输出层神经元个数为3,隐含层神经元个数为6。代码实现如下:

# 定义前馈神经网络

class Model_MLP_L2_V3(nn.Module):

def __init__(self, input_size, output_size, hidden_size):

super(Model_MLP_L2_V3, self).__init__()

# 构建第一个全连接层

self.fc1 = nn.Linear(

input_size,

hidden_size

)

normal_(self.fc1.weight,mean=0.0,std=0.01)

constant_(self.fc1.bias,val=1.0)

# 构建第二全连接层

self.fc2 = nn.Linear(

hidden_size,

output_size

)

normal_(self.fc2.weight, mean=0.0, std=0.01)

constant_(self.fc2.bias, val=1.0)

# 定义网络使用的激活函数

self.act = nn.Sigmoid()

def forward(self, inputs):

outputs = self.fc1(inputs)

outputs = self.act(outputs)

outputs = self.fc2(outputs)

return outputs

fnn_model = Model_MLP_L2_V3(input_size=4, output_size=3, hidden_size=6)4.5.4 完善Runner类

基于RunnerV2类进行完善实现了RunnerV3类。其中训练过程使用自动梯度计算,使用DataLoader加载批量数据,使用随机梯度下降法进行参数优化;模型保存时,使用state_dict方法获取模型参数;模型加载时,使用set_state_dict方法加载模型参数.

由于这里使用随机梯度下降法对参数优化,所以数据以批次的形式输入到模型中进行训练,那么评价指标计算也是分别在每个批次进行的,要想获得每个epoch整体的评价结果,需要对历史评价结果进行累积。这里定义Accuracy类实现该功能。

由于没找到pytorch对应的Metric代码,就直接找到了paddle的源代码

import six

import abc

import numpy as np

@six.add_metaclass(abc.ABCMeta)

class Metric(object):

r"""

Base class for metric, encapsulates metric logic and APIs

Usage:

.. code-block:: text

m = SomeMetric()

for prediction, label in ...:

m.update(prediction, label)

m.accumulate()

Advanced usage for :code:`compute`:

Metric calculation can be accelerated by calculating metric states

from model outputs and labels by build-in operators not by Python/NumPy

in :code:`compute`, metric states will be fetched as NumPy array and

call :code:`update` with states in NumPy format.

Metric calculated as follows (operations in Model and Metric are

indicated with curly brackets, while data nodes not):

.. code-block:: text

inputs & labels || ------------------

| ||

{model} ||

| ||

outputs & labels ||

| || tensor data

{Metric.compute} ||

| ||

metric states(tensor) ||

| ||

{fetch as numpy} || ------------------

| ||

metric states(numpy) || numpy data

| ||

{Metric.update} \/ ------------------

Examples:

For :code:`Accuracy` metric, which takes :code:`pred` and :code:`label`

as inputs, we can calculate the correct prediction matrix between

:code:`pred` and :code:`label` in :code:`compute`.

For examples, prediction results contains 10 classes, while :code:`pred`

shape is [N, 10], :code:`label` shape is [N, 1], N is mini-batch size,

and we only need to calculate accurary of top-1 and top-5, we could

calculate the correct prediction matrix of the top-5 scores of the

prediction of each sample like follows, while the correct prediction

matrix shape is [N, 5].

.. code-block:: text

def compute(pred, label):

# sort prediction and slice the top-5 scores

pred = torch.argsort(pred, descending=True)[:, :5]

# calculate whether the predictions are correct

correct = pred == label

return torch.cast(correct, dtype='float32')

With the :code:`compute`, we split some calculations to OPs (which

may run on GPU devices, will be faster), and only fetch 1 tensor with

shape as [N, 5] instead of 2 tensors with shapes as [N, 10] and [N, 1].

:code:`update` can be define as follows:

.. code-block:: text

def update(self, correct):

accs = []

for i, k in enumerate(self.topk):

num_corrects = correct[:, :k].sum()

num_samples = len(correct)

accs.append(float(num_corrects) / num_samples)

self.total[i] += num_corrects

self.count[i] += num_samples

return accs

"""

def __init__(self):

pass

@abc.abstractmethod

def reset(self):

"""

Reset states and result

"""

raise NotImplementedError("function 'reset' not implemented in {}.".

format(self.__class__.__name__))

@abc.abstractmethod

def update(self, *args):

"""

Update states for metric

Inputs of :code:`update` is the outputs of :code:`Metric.compute`,

if :code:`compute` is not defined, the inputs of :code:`update`

will be flatten arguments of **output** of mode and **label** from data:

:code:`update(output1, output2, ..., label1, label2,...)`

see :code:`Metric.compute`

"""

raise NotImplementedError("function 'update' not implemented in {}.".

format(self.__class__.__name__))

@abc.abstractmethod

def accumulate(self):

"""

Accumulates statistics, computes and returns the metric value

"""

raise NotImplementedError(

"function 'accumulate' not implemented in {}.".format(

self.__class__.__name__))

@abc.abstractmethod

def name(self):

"""

Returns metric name

"""

raise NotImplementedError("function 'name' not implemented in {}.".

format(self.__class__.__name__))

def compute(self, *args):

"""

This API is advanced usage to accelerate metric calculating, calulations

from outputs of model to the states which should be updated by Metric can

be defined here, where torch OPs is also supported. Outputs of this API

will be the inputs of "Metric.update".

If :code:`compute` is defined, it will be called with **outputs**

of model and **labels** from data as arguments, all outputs and labels

will be concatenated and flatten and each filed as a separate argument

as follows:

:code:`compute(output1, output2, ..., label1, label2,...)`

If :code:`compute` is not defined, default behaviour is to pass

input to output, so output format will be:

:code:`return output1, output2, ..., label1, label2,...`

see :code:`Metric.update`

"""

return argsclass Accuracy(Metric):

def __init__(self, is_logist=True):

"""

输入:

- is_logist: outputs是logist还是激活后的值

"""

# 用于统计正确的样本个数

self.num_correct = 0

# 用于统计样本的总数

self.num_count = 0

self.is_logist = is_logist

def update(self, outputs, labels):

"""

输入:

- outputs: 预测值, shape=[N,class_num]

- labels: 标签值, shape=[N,1]

"""

# 判断是二分类任务还是多分类任务,shape[1]=1时为二分类任务,shape[1]>1时为多分类任务

if outputs.shape[1] == 1: # 二分类

outputs = torch.squeeze(outputs, dim=-1)

if self.is_logist:

# logist判断是否大于0

preds = torch.tensor((outputs>=0), dtype=torch.float32)

else:

# 如果不是logist,判断每个概率值是否大于0.5,当大于0.5时,类别为1,否则类别为0

preds = torch.tensor((outputs>=0.5), dtype=torch.float32)

else:

# 多分类时,使用'torch.argmax'计算最大元素索引作为类别

preds = torch.argmax(outputs, dim=1)

# 获取本批数据中预测正确的样本个数

labels = torch.squeeze(labels, dim=-1)

batch_correct = torch.sum(torch.tensor(preds==labels, dtype=torch.float32)).numpy()

batch_count = len(labels)

# 更新num_correct 和 num_count

self.num_correct += batch_correct

self.num_count += batch_count

def accumulate(self):

# 使用累计的数据,计算总的指标

if self.num_count == 0:

return 0

return self.num_correct / self.num_count

def reset(self):

# 重置正确的数目和总数

self.num_correct = 0

self.num_count = 0

def name(self):

return "Accuracy"RunnerV3类的代码实现如下:?

import torch.nn.functional as F

class RunnerV3(object):

def __init__(self, model, optimizer, loss_fn, metric, **kwargs):

self.model = model

self.optimizer = optimizer

self.loss_fn = loss_fn

self.metric = metric # 只用于计算评价指标

# 记录训练过程中的评价指标变化情况

self.dev_scores = []

# 记录训练过程中的损失函数变化情况

self.train_epoch_losses = [] # 一个epoch记录一次loss

self.train_step_losses = [] # 一个step记录一次loss

self.dev_losses = []

# 记录全局最优指标

self.best_score = 0

def train(self, train_loader, dev_loader=None, **kwargs):

# 将模型切换为训练模式

self.model.train()

# 传入训练轮数,如果没有传入值则默认为0

num_epochs = kwargs.get("num_epochs", 0)

# 传入log打印频率,如果没有传入值则默认为100

log_steps = kwargs.get("log_steps", 100)

# 评价频率

eval_steps = kwargs.get("eval_steps", 0)

# 传入模型保存路径,如果没有传入值则默认为"best_model.pdparams"

save_path = kwargs.get("save_path", "best_model.pdparams")

custom_print_log = kwargs.get("custom_print_log", None)

# 训练总的步数

num_training_steps = num_epochs * len(train_loader)

if eval_steps:

if self.metric is None:

raise RuntimeError('Error: Metric can not be None!')

if dev_loader is None:

raise RuntimeError('Error: dev_loader can not be None!')

# 运行的step数目

global_step = 0

# 进行num_epochs轮训练

for epoch in range(num_epochs):

# 用于统计训练集的损失

total_loss = 0

for step, data in enumerate(train_loader):

X, y = data

# 获取模型预测

logits = self.model(X)

loss = self.loss_fn(logits, y) # 默认求mean

total_loss += loss

# 训练过程中,每个step的loss进行保存

self.train_step_losses.append((global_step, loss.item()))

if log_steps and global_step % log_steps == 0:

print(

f"[Train] epoch: {epoch}/{num_epochs}, step: {global_step}/{num_training_steps}, loss: {loss.item():.5f}")

# 梯度反向传播,计算每个参数的梯度值

loss.backward()

if custom_print_log:

custom_print_log(self)

# 小批量梯度下降进行参数更新

self.optimizer.step()

# 梯度归零

self.optimizer.zero_grad()

# 判断是否需要评价

if eval_steps > 0 and global_step > 0 and \

(global_step % eval_steps == 0 or global_step == (num_training_steps - 1)):

dev_score, dev_loss = self.evaluate(dev_loader, global_step=global_step)

print(f"[Evaluate] dev score: {dev_score:.5f}, dev loss: {dev_loss:.5f}")

# 将模型切换为训练模式

self.model.train()

# 如果当前指标为最优指标,保存该模型

if dev_score > self.best_score:

self.save_model(save_path)

print(

f"[Evaluate] best accuracy performence has been updated: {self.best_score:.5f} --> {dev_score:.5f}")

self.best_score = dev_score

global_step += 1

# 当前epoch 训练loss累计值

trn_loss = (total_loss / len(train_loader)).item()

# epoch粒度的训练loss保存

self.train_epoch_losses.append(trn_loss)

print("[Train] Training done!")

# 模型评估阶段,使用'torch.no_grad()'控制不计算和存储梯度

@torch.no_grad()

def evaluate(self, dev_loader, **kwargs):

assert self.metric is not None

# 将模型设置为评估模式

self.model.eval()

global_step = kwargs.get("global_step", -1)

# 用于统计训练集的损失

total_loss = 0

# 重置评价

self.metric.reset()

# 遍历验证集每个批次

for batch_id, data in enumerate(dev_loader):

X, y = data

# 计算模型输出

logits = self.model(X)

# 计算损失函数

loss = self.loss_fn(logits, y).item()

# 累积损失

total_loss += loss

# 累积评价

self.metric.update(logits, y)

dev_loss = (total_loss / len(dev_loader))

dev_score = self.metric.accumulate()

# 记录验证集loss

if global_step != -1:

self.dev_losses.append((global_step, dev_loss))

self.dev_scores.append(dev_score)

return dev_score, dev_loss

# 模型评估阶段,使用'torch.no_grad()'控制不计算和存储梯度

@torch.no_grad()

def predict(self, x, **kwargs):

# 将模型设置为评估模式

self.model.eval()

# 运行模型前向计算,得到预测值

logits = self.model(x)

return logits

def save_model(self, save_path):

torch.save(self.model.state_dict(), save_path)

def load_model(self, model_path):

model_state_dict = torch.load(model_path)

self.model.load_state_dict(model_state_dict)4.5.5 模型训练

实例化RunnerV3类,并传入训练配置,代码实现如下:

import torch.optim as opt

from metric import Accuracy

lr = 0.2

# 定义网络

model = fnn_model

# 定义优化器

optimizer = opt.SGD(model.parameters(),lr=lr)

# 定义损失函数。softmax+交叉熵

loss_fn = F.cross_entropy

# 定义评价指标

metric = Accuracy(is_logist=True)

runner = RunnerV3(model, optimizer, loss_fn, metric)使用训练集和验证集进行模型训练,共训练150个epoch。在实验中,保存准确率最高的模型作为最佳模型。代码实现如下:

# 启动训练

log_steps = 100

eval_steps = 50

runner.train(train_loader, dev_loader,

num_epochs=150, log_steps=log_steps, eval_steps = eval_steps,

save_path="best_model.pdparams")

[Train] epoch: 0/150, step: 0/1200, loss: 1.09898

[Evaluate] ?dev score: 0.33333, dev loss: 1.09582

[Evaluate] best accuracy performence has been updated: 0.00000 --> 0.33333

[Train] epoch: 12/150, step: 100/1200, loss: 1.13891

[Evaluate] ?dev score: 0.46667, dev loss: 1.10749

[Evaluate] best accuracy performence has been updated: 0.33333 --> 0.46667

[Evaluate] ?dev score: 0.20000, dev loss: 1.10089

[Train] epoch: 25/150, step: 200/1200, loss: 1.10158

[Evaluate] ?dev score: 0.20000, dev loss: 1.12477

[Evaluate] ?dev score: 0.46667, dev loss: 1.09090

[Train] epoch: 37/150, step: 300/1200, loss: 1.09982

[Evaluate] ?dev score: 0.46667, dev loss: 1.07537

[Evaluate] ?dev score: 0.53333, dev loss: 1.04453

[Evaluate] best accuracy performence has been updated: 0.46667 --> 0.53333

[Train] epoch: 50/150, step: 400/1200, loss: 1.01054

[Evaluate] ?dev score: 1.00000, dev loss: 1.00635

[Evaluate] best accuracy performence has been updated: 0.53333 --> 1.00000

[Evaluate] ?dev score: 0.86667, dev loss: 0.86850

[Train] epoch: 62/150, step: 500/1200, loss: 0.63702

[Evaluate] ?dev score: 0.80000, dev loss: 0.66986

[Evaluate] ?dev score: 0.86667, dev loss: 0.57089

[Train] epoch: 75/150, step: 600/1200, loss: 0.56490

[Evaluate] ?dev score: 0.93333, dev loss: 0.52392

[Evaluate] ?dev score: 0.86667, dev loss: 0.45410

[Train] epoch: 87/150, step: 700/1200, loss: 0.41929

[Evaluate] ?dev score: 0.86667, dev loss: 0.46156

[Evaluate] ?dev score: 0.93333, dev loss: 0.41593

[Train] epoch: 100/150, step: 800/1200, loss: 0.41047

[Evaluate] ?dev score: 0.93333, dev loss: 0.40600

[Evaluate] ?dev score: 0.93333, dev loss: 0.37672

[Train] epoch: 112/150, step: 900/1200, loss: 0.42777

[Evaluate] ?dev score: 0.93333, dev loss: 0.34534

[Evaluate] ?dev score: 0.93333, dev loss: 0.33552

[Train] epoch: 125/150, step: 1000/1200, loss: 0.30734

[Evaluate] ?dev score: 0.93333, dev loss: 0.31958

[Evaluate] ?dev score: 0.93333, dev loss: 0.32091

[Train] epoch: 137/150, step: 1100/1200, loss: 0.28321

[Evaluate] ?dev score: 0.93333, dev loss: 0.28383

[Evaluate] ?dev score: 0.93333, dev loss: 0.27171

[Evaluate] ?dev score: 0.93333, dev loss: 0.25447

[Train] Training done!

可视化观察训练集损失和训练集loss变化情况。

import matplotlib.pyplot as plt

# 绘制训练集和验证集的损失变化以及验证集上的准确率变化曲线

def plot_training_loss_acc(runner, fig_name,

fig_size=(16, 6),

sample_step=20,

loss_legend_loc="upper right",

acc_legend_loc="lower right",

train_color="#e4007f",

dev_color='#f19ec2',

fontsize='large',

train_linestyle="-",

dev_linestyle='--'):

plt.figure(figsize=fig_size)

plt.subplot(1, 2, 1)

train_items = runner.train_step_losses[::sample_step]

train_steps = [x[0] for x in train_items]

train_losses = [x[1] for x in train_items]

plt.plot(train_steps, train_losses, color=train_color, linestyle=train_linestyle, label="Train loss")

if len(runner.dev_losses) > 0:

dev_steps = [x[0] for x in runner.dev_losses]

dev_losses = [x[1] for x in runner.dev_losses]

plt.plot(dev_steps, dev_losses, color=dev_color, linestyle=dev_linestyle, label="Dev loss")

# 绘制坐标轴和图例

plt.ylabel("loss", fontsize=fontsize)

plt.xlabel("step", fontsize=fontsize)

plt.legend(loc=loss_legend_loc, fontsize='x-large')

# 绘制评价准确率变化曲线

if len(runner.dev_scores) > 0:

plt.subplot(1, 2, 2)

plt.plot(dev_steps, runner.dev_scores,

color=dev_color, linestyle=dev_linestyle, label="Dev accuracy")

# 绘制坐标轴和图例

plt.ylabel("score", fontsize=fontsize)

plt.xlabel("step", fontsize=fontsize)

plt.legend(loc=acc_legend_loc, fontsize='x-large')

plt.savefig(fig_name)

plt.show()

plot_training_loss_acc(runner, 'fw-loss.pdf') ?从输出结果可以看出准确率随着迭代次数增加逐渐上升,损失函数下降。

?从输出结果可以看出准确率随着迭代次数增加逐渐上升,损失函数下降。

4.5.6 模型评价

# 加载最优模型

runner.load_model('best_model.pdparams')

# 模型评价

score, loss = runner.evaluate(test_loader)

print("[Test] accuracy/loss: {:.4f}/{:.4f}".format(score, loss))

[Test] accuracy/loss: 1.0000/1.0183

4.5.7 模型预测?

# 获取测试集中第一条数据

X, label = next(iter(test_loader))

logits = runner.predict(X)

pred_class = torch.argmax(logits[0]).numpy()

label = label[0].numpy()

# 输出真实类别与预测类别

print("The true category is {} and the predicted category is {}".format(label, pred_class))The true category is 2 and the predicted category is 2

?next(test_loader),报错’DataLoader’ object is not an?iterator.

改成next(iter(test_loader))

思考题

1. 对比Softmax分类和前馈神经网络分类。

Softmax分类Iris代码:

from sklearn.datasets import load_iris

import pandas

import numpy as np

import op

import copy

import torch

def softmax(X):

"""

输入:

- X:shape=[N, C],N为向量数量,C为向量维度

"""

x_max = torch.max(X, dim=1, keepdim=True)[0]#N,1

x_exp = torch.exp(X - x_max)

partition = torch.sum(x_exp, dim=1, keepdim=True)#N,1

return x_exp / partition

class model_SR(op.Op):

def __init__(self, input_dim, output_dim):

super(model_SR, self).__init__()

self.params = {}

# 将线性层的权重参数全部初始化为0

self.params['W'] = torch.zeros(size=[input_dim, output_dim])

# self.params['W'] = torch.normal(mean=0, std=0.01, shape=[input_dim, output_dim])

# 将线性层的偏置参数初始化为0

self.params['b'] = torch.zeros(size=[output_dim])

# 存放参数的梯度

self.grads = {}

self.X = None

self.outputs = None

self.output_dim = output_dim

def __call__(self, inputs):

return self.forward(inputs)

def forward(self, inputs):

self.X = inputs

# 线性计算

score = torch.matmul(self.X, self.params['W']) + self.params['b']

# Softmax 函数

self.outputs = softmax(score)

return self.outputs

def backward(self, labels):

"""

输入:

- labels:真实标签,shape=[N, 1],其中N为样本数量

"""

# 计算偏导数

N =labels.shape[0]

labels = torch.nn.functional.one_hot(labels, self.output_dim)

self.grads['W'] = -1 / N * torch.matmul(self.X.t(), (labels-self.outputs))

self.grads['b'] = -1 / N * torch.matmul(torch.ones(size=[N]), (labels-self.outputs))

# 加载数据集

class RunnerV2(object):

def __init__(self, model, optimizer, metric, loss_fn):

self.model = model

self.optimizer = optimizer

self.loss_fn = loss_fn

self.metric = metric

# 记录训练过程中的评价指标变化情况

self.train_scores = []

self.dev_scores = []

# 记录训练过程中的损失函数变化情况

self.train_loss = []

self.dev_loss = []

def train(self, train_set, dev_set, **kwargs):

# 传入训练轮数,如果没有传入值则默认为0

num_epochs = kwargs.get("num_epochs", 0)

# 传入log打印频率,如果没有传入值则默认为100

log_epochs = kwargs.get("log_epochs", 100)

# 传入模型保存路径,如果没有传入值则默认为"best_model.pdparams"

save_path = kwargs.get("save_path", "best_model.pdparams")

# 梯度打印函数,如果没有传入则默认为"None"

print_grads = kwargs.get("print_grads", None)

# 记录全局最优指标

best_score = 0

# 进行num_epochs轮训练

for epoch in range(num_epochs):

X, y = train_set

# 获取模型预测

logits = self.model(X)

# 计算交叉熵损失

trn_loss = self.loss_fn(logits, y).item()

self.train_loss.append(trn_loss)

# 计算评价指标

trn_score = self.metric(logits, y).item()

self.train_scores.append(trn_score)

# 计算参数梯度

self.model.backward(y)

if print_grads is not None:

# 打印每一层的梯度

print_grads(self.model)

# 更新模型参数

self.optimizer.step()

dev_score, dev_loss = self.evaluate(dev_set)

# 如果当前指标为最优指标,保存该模型

if dev_score > best_score:

self.save_model(save_path)

print(f"best accuracy performence has been updated: {best_score:.5f} --> {dev_score:.5f}")

best_score = dev_score

if epoch % log_epochs == 0:

print(f"[Train] epoch: {epoch}, loss: {trn_loss}, score: {trn_score}")

print(f"[Dev] epoch: {epoch}, loss: {dev_loss}, score: {dev_score}")

def evaluate(self, data_set):

X, y = data_set

# 计算模型输出

logits = self.model(X)

# 计算损失函数

loss = self.loss_fn(logits, y).item()

self.dev_loss.append(loss)

# 计算评价指标

score = self.metric(logits, y).item()

self.dev_scores.append(score)

return score, loss

def predict(self, X):

return self.model(X)

def save_model(self, save_path):

torch.save(self.model.params, save_path)

def load_model(self, model_path):

self.model.params = torch.load(model_path)

class MultiCrossEntropyLoss(op.Op):

def __init__(self):

self.predicts = None

self.labels = None

self.num = None

def __call__(self, predicts, labels):

return self.forward(predicts, labels)

def forward(self, predicts, labels):

"""

输入:

- predicts:预测值,shape=[N, 1],N为样本数量

- labels:真实标签,shape=[N, 1]

输出:

- 损失值:shape=[1]

"""

self.predicts = predicts

self.labels = labels

self.num = self.predicts.shape[0]

loss = 0

for i in range(0, self.num):

index = self.labels[i]

loss -= torch.log(self.predicts[i][index])

return loss / self.num

from abc import abstractmethod

class Optimizer(object):

def __init__(self, init_lr, model):

"""

优化器类初始化

"""

# 初始化学习率,用于参数更新的计算

self.init_lr = init_lr

# 指定优化器需要优化的模型

self.model = model

@abstractmethod

def step(self):

"""

定义每次迭代如何更新参数

"""

pass

class SimpleBatchGD(Optimizer):

def __init__(self, init_lr, model):

super(SimpleBatchGD, self).__init__(init_lr=init_lr, model=model)

def step(self):

# 参数更新

# 遍历所有参数,按照公式(3.8)和(3.9)更新参数

if isinstance(self.model.params, dict):

for key in self.model.params.keys():

self.model.params[key] = self.model.params[key] - self.init_lr * self.model.grads[key]

def accuracy(preds, labels):

"""

输入:

- preds:预测值,二分类时,shape=[N, 1],N为样本数量,多分类时,shape=[N, C],C为类别数量

- labels:真实标签,shape=[N, 1]

输出:

- 准确率:shape=[1]

"""

# 判断是二分类任务还是多分类任务,preds.shape[1]=1时为二分类任务,preds.shape[1]>1时为多分类任务

if preds.shape[1] == 1:

data_float = torch.randn(preds.shape[0], preds.shape[1])

# 二分类时,判断每个概率值是否大于0.5,当大于0.5时,类别为1,否则类别为0

# 使用'torch.cast'将preds的数据类型转换为float32类型

preds = (preds>=0.5).type(torch.float32)

else:

# 多分类时,使用'torch.argmax'计算最大元素索引作为类别

data_float = torch.randn(preds.shape[0], preds.shape[1])

preds = torch.argmax(preds,dim=1)

return torch.mean(torch.eq(preds, labels).type(torch.float32))

import matplotlib.pyplot as plt

def plot(runner,fig_name):

plt.figure(figsize=(10,5))

plt.subplot(1,2,1)

epochs = [i for i in range(len(runner.train_scores))]

# 绘制训练损失变化曲线

plt.plot(epochs, runner.train_loss, color='#e4007f', label="Train loss")

# 绘制评价损失变化曲线

plt.plot(epochs, runner.dev_loss, color='#f19ec2', linestyle='--', label="Dev loss")

# 绘制坐标轴和图例

plt.ylabel("loss", fontsize='large')

plt.xlabel("epoch", fontsize='large')

plt.legend(loc='upper right', fontsize='x-large')

plt.subplot(1,2,2)

# 绘制训练准确率变化曲线

plt.plot(epochs, runner.train_scores, color='#e4007f', label="Train accuracy")

# 绘制评价准确率变化曲线

plt.plot(epochs, runner.dev_scores, color='#f19ec2', linestyle='--', label="Dev accuracy")

# 绘制坐标轴和图例

plt.ylabel("score", fontsize='large')

plt.xlabel("epoch", fontsize='large')

plt.legend(loc='lower right', fontsize='x-large')

plt.tight_layout()

plt.savefig(fig_name)

plt.show()

def load_data(shuffle=True):

"""

加载鸢尾花数据

输入:

- shuffle:是否打乱数据,数据类型为bool

输出:

- X:特征数据,shape=[150,4]

- y:标签数据, shape=[150]

"""

# 加载原始数据

X = np.array(load_iris().data, dtype=np.float32)

y = np.array(load_iris().target, dtype=np.int64)

X = torch.tensor(X)

y = torch.tensor(y)

# 数据归一化

X_min = torch.min(X, dim=0)[0]

X_max = torch.max(X, dim=0)[0]

X = (X-X_min) / (X_max-X_min)

# 如果shuffle为True,随机打乱数据

if shuffle:

idx = torch.randperm(X.shape[0])

X = X[idx]

y = y[idx]

return X, y

# 固定随机种子

torch.manual_seed(12)

num_train = 120

num_dev = 15

num_test = 15

X, y = load_data(shuffle=True)

X_train, y_train = X[:num_train], y[:num_train]

X_dev, y_dev = X[num_train:num_train + num_dev], y[num_train:num_train + num_dev]

X_test, y_test = X[num_train + num_dev:], y[num_train + num_dev:]

# 输入维度

input_dim = 4

# 类别数

output_dim = 3

# 实例化模型

model = model_SR(input_dim=input_dim, output_dim=output_dim)

# 学习率

lr = 0.2

# 梯度下降法

optimizer = SimpleBatchGD(init_lr=lr, model=model)

# 交叉熵损失

loss_fn = MultiCrossEntropyLoss()

# 准确率

metric = accuracy

# 实例化RunnerV2

runner = RunnerV2(model, optimizer, metric, loss_fn)

# 启动训练

runner.train([X_train, y_train], [X_dev, y_dev], num_epochs=150, log_epochs=10, save_path="best_model.pdparams")

# 加载最优模型

runner.load_model('best_model.pdparams')

# 模型评价

score, loss = runner.evaluate([X_test, y_test])

print("[Test] score/loss: {:.4f}/{:.4f}".format(score, loss))

# 预测测试集数据

logits = runner.predict(X_test)

# 观察其中一条样本的预测结果

pred = torch.argmax(logits[0]).numpy()

# 获取该样本概率最大的类别

label = y_test[0].numpy()

# 输出真实类别与预测类别

print("The true category is {} and the predicted category is {}".format(label, pred))

[Train] epoch: 130, loss: 0.572494626045227, score: 0.8416666388511658

[Dev] epoch: 130, loss: 0.5110094547271729, score: 0.800000011920929

[Train] epoch: 140, loss: 0.5590549111366272, score: 0.8500000238418579

[Dev] epoch: 140, loss: 0.49699389934539795, score: 0.800000011920929

[Test] score/loss: 0.8000/0.6527

The true category is 1 and the predicted category is 1

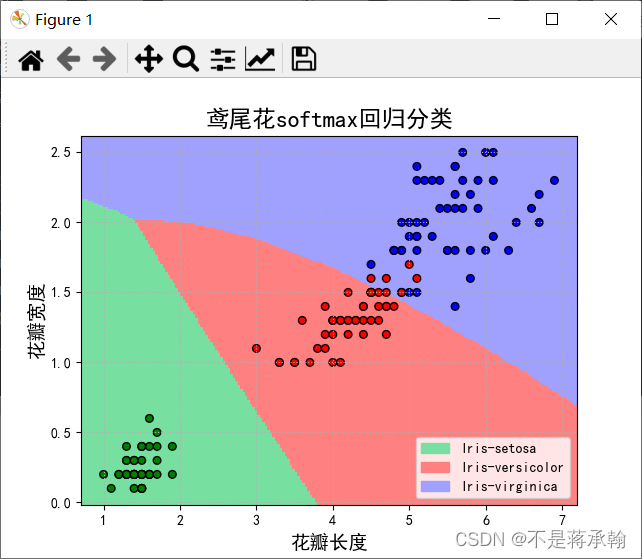

Softmax分类Iris决策边界代码如下:

# -*- coding:utf-8 -*-

import numpy as np

import matplotlib as mpl

import matplotlib.pyplot as plt

import pandas as pd

import matplotlib.patches as mpatches

from sklearn.preprocessing import PolynomialFeatures

def soft_max(X, Y, K, alpha, lamda):

n = len(X[0])

w = np.zeros((K,n))

wnew = np.zeros((K,n))

for times in range(1000):

for i in range(len(X)):

x = X[i]

for k in range(K):

y = 0

if Y[i] == k:

y = 1

p = predict(w,x,k)

g = (y-p)*x

wnew[k] = w[k] + (alpha*g + lamda*w[k])

w = wnew.copy()

return w

def predict(w,x,k):

numerator = np.exp(np.dot(w[k],x))

denominator = sum(np.exp(np.dot(w,x)))

return numerator/denominator

def model(w,x,K):

cat = []

p = [0,0,0]

for i in range(len(x[:,0])):

for k in range(K):

p[k] = predict(w,x[i,:],k)

cat.append(p.index(max(p)))

return cat

def extend(a, b):

return 1.05*a-0.05*b, 1.05*b-0.05*a

data = pd.read_csv('iris.data', header=None)

columns = np.array([u'花萼长度', u'花萼宽度', u'花瓣长度', u'花瓣宽度', u'类型'])

data.rename(columns=dict(zip(np.arange(5), columns)), inplace=True)

data.drop(columns[:2],axis=1,inplace=True)

data[u'类型'] = pd.Categorical(data[u'类型']).codes

x = data[columns[2:-1]].values

y = data[columns[-1]].values

poly = PolynomialFeatures(2)

x_p = poly.fit_transform(x)

N, M = 200, 200 # 横纵各采样多少个值

x1_min, x1_max = extend(x[:, 0].min(), x[:, 0].max()) # 第0列的范围

x2_min, x2_max = extend(x[:, 1].min(), x[:, 1].max()) # 第1列的范围

t1 = np.linspace(x1_min, x1_max, N)

t2 = np.linspace(x2_min, x2_max, M)

x1, x2 = np.meshgrid(t1, t2)

x_show = np.stack((x1.flat, x2.flat), axis=1) # 测试点

x_show_p = poly.fit_transform(x_show)

K = 3

w = soft_max(x_p,y,K,0.0005,0.0000005)

y_hat = np.array(model(w,x_show_p,K))

y_hat = y_hat.reshape(x1.shape) # 使之与输入的形状相同

cm_light = mpl.colors.ListedColormap(['#77E0A0', '#FF8080', '#A0A0FF'])

cm_dark = mpl.colors.ListedColormap(['g', 'r', 'b'])

mpl.rcParams['font.sans-serif'] = u'SimHei'

mpl.rcParams['axes.unicode_minus'] = False

plt.figure(facecolor='w')

plt.pcolormesh(x1, x2, y_hat, cmap=cm_light) # 预测值的显示

plt.scatter(x[:, 0], x[:, 1], s=30, c=y, edgecolors='k', cmap=cm_dark) # 样本的显示

x1_label, x2_label = columns[2],columns[3]

plt.xlabel(x1_label, fontsize=14)

plt.ylabel(x2_label, fontsize=14)

plt.xlim(x1_min, x1_max)

plt.ylim(x2_min, x2_max)

plt.grid(b=True, ls=':')

# 画各种图

# a = mpl.patches.Wedge(((x1_min+x1_max)/2, (x2_min+x2_max)/2), 1.5, 0, 360, width=0.5, alpha=0.5, color='r')

# plt.gca().add_patch(a)

patchs = [mpatches.Patch(color='#77E0A0', label='Iris-setosa'),

mpatches.Patch(color='#FF8080', label='Iris-versicolor'),

mpatches.Patch(color='#A0A0FF', label='Iris-virginica')]

plt.legend(handles=patchs, fancybox=True, framealpha=0.8, loc='lower right')

plt.title(u'鸢尾花softmax回归分类', fontsize=17)

plt.show()

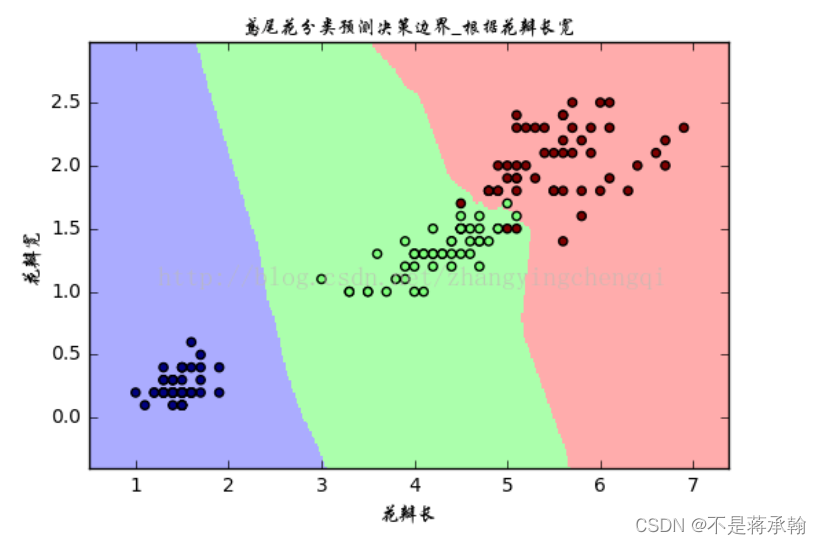

?神经网络划分决策边界如下:

?通过决策边界可以看出,神经网络的划分要更优于Softmax回归。

2. 自定义隐藏层层数和每个隐藏层中的神经元个数,尝试找到最优超参数完成多分类。

一个隐藏层时:

2个神经元:

[Train] epoch: 137/150, step: 1100/1200, loss: 0.45784

[Evaluate] ?dev score: 0.93333, dev loss: 0.38736

[Evaluate] ?dev score: 0.93333, dev loss: 0.38031

[Evaluate] ?dev score: 0.93333, dev loss: 0.35182

[Train] Training done!

[Test] accuracy/loss: 1.0000/0.9992

6个神经元:

[Train] epoch: 137/150, step: 1100/1200, loss: 0.28321

[Evaluate] ?dev score: 0.93333, dev loss: 0.28383

[Evaluate] ?dev score: 0.93333, dev loss: 0.27171

[Evaluate] ?dev score: 0.93333, dev loss: 0.25447

[Train] Training done!

[Test] accuracy/loss: 1.0000/1.0183?

9个神经元:

[Train] epoch: 137/150, step: 1100/1200, loss: 0.30954

[Evaluate] ?dev score: 0.86667, dev loss: 0.26464

[Evaluate] ?dev score: 0.86667, dev loss: 0.25517

[Evaluate] ?dev score: 0.86667, dev loss: 0.27032

[Train] Training done!

[Test] accuracy/loss: 1.0000/0.6159?

可以看出在训练集和开发集上,6个神经元的效果最好,可是在测试集上效果最差

两个隐层:

2个神经元:

[Train] epoch: 137/150, step: 1100/1200, loss: 1.07650

[Evaluate] ?dev score: 0.20000, dev loss: 1.11814

[Evaluate] ?dev score: 0.20000, dev loss: 1.10823

[Evaluate] ?dev score: 0.20000, dev loss: 1.10268

[Train] Training done!

[Test] accuracy/loss: 0.3333/1.0948

6个神经元:

[Train] epoch: 137/150, step: 1100/1200, loss: 1.10329

[Evaluate] ?dev score: 0.46667, dev loss: 1.08948

[Evaluate] ?dev score: 0.46667, dev loss: 1.10116

[Evaluate] ?dev score: 0.46667, dev loss: 1.09433

[Train] Training done!

[Test] accuracy/loss: 0.3333/1.0922

The true category is 2 and the predicted category is 2?

9个神经元:

[Train] epoch: 137/150, step: 1100/1200, loss: 1.10266

[Evaluate] ?dev score: 0.33333, dev loss: 1.10681

[Evaluate] ?dev score: 0.46667, dev loss: 1.09803

[Evaluate] ?dev score: 0.20000, dev loss: 1.11512

[Train] Training done!

[Test] accuracy/loss: 0.3333/1.0928

可以看出两个隐层的训练效果太差了,出现了过拟合的状况。

关于隐藏层层数

·没有隐藏层,进能够表示线性可分的函数或者决策

·隐藏层数为1,可以拟合从一个有限空间到另外一个有限空间的连续映射

·隐藏层数为2,搭配适当的激活函数可以表示任意精度的任意决策边界,可以拟合任何精度的任何平滑映射

·隐藏层数大于2:多出来的隐藏层可以学习复杂的描述(某种自动特征工程)

3. 对比SVM与FNN分类效果,谈谈自己看法。

SVM代码如下:

import math # 数学

import random # 随机

import numpy as np

import matplotlib.pyplot as plt

import matplotlib as mpl

mpl.rcParams['font.sans-serif'] = u'SimHei'

def zhichi_w(zhichi, xy, a): # 计算更新 w

w = [0, 0]

if len(zhichi) == 0: # 初始化的0

return w

for i in zhichi:

w[0] += a[i] * xy[0][i] * xy[2][i] # 更新w

w[1] += a[i] * xy[1][i] * xy[2][i]

return w

def zhichi_b(zhichi, xy, a): # 计算更新 b

b = 0

if len(zhichi) == 0: # 初始化的0

return 0

for s in zhichi: # 对任意的支持向量有 ysf(xs)=1 所有支持向量求解平均值

sum = 0

for i in zhichi:

sum += a[i] * xy[2][i] * (xy[0][i] * xy[0][s] + xy[1][i] * xy[1][s])

b += 1 / xy[2][s] - sum

return b / len(zhichi)

def SMO(xy, m):

a = [0.0] * len(xy[0]) # 拉格朗日乘子

zhichi = set() # 支持向量下标

loop = 1 # 循环标记(符合KKT)

w = [0, 0] # 初始化 w

b = 0 # 初始化 b

while loop:

loop += 1

if loop == 150:

print("达到早停标准")

print("循环了:", loop, "次")

loop = 0

break

# 初始化=========================================

fx = [] # 储存所有的fx

yfx = [] # 储存所有yfx-1的值

Ek = [] # Ek,记录fx-y用于启发式搜索

E_ = -1 # 贮存最大偏差,减少计算

a1 = 0 # SMO a1

a2 = 0 # SMO a2

# 初始化结束======================================

# 寻找a1,a2======================================

for i in range(len(xy[0])): # 计算所有的 fx yfx-1 Ek

fx.append(w[0] * xy[0][i] + w[1] * xy[1][i] + b) # 计算 fx=wx+b

yfx.append(xy[2][i] * fx[i] - 1) # 计算 yfx-1

Ek.append(fx[i] - xy[2][i]) # 计算 fx-y

if i in zhichi: # 之前看过的不看了,防止重复找某个a

continue

if yfx[i] <= yfx[a1]:

a1 = i # 得到偏离最大位置的下标(数值最小的)

if yfx[a1] >= 0: # 最小的也满足KKT

print("循环了:", loop, "次")

loop = 0 # 循环标记(符合KKT)置零(没有用到)

break

for i in range(len(xy[0])): # 遍历找间隔最大的a2

if i == a1: # 如果是a1,跳过

continue

Ei = abs(Ek[i] - Ek[a1]) # |Eki-Eka1|

if Ei < E_: # 找偏差

E_ = Ei # 储存偏差的值

a2 = i # 储存偏差的下标

# 寻找a1,a2结束===================================

zhichi.add(a1) # a1录入支持向量

zhichi.add(a2) # a2录入支持向量

# 分析约束条件=====================================

# c=a1*y1+a2*y2

c = a[a1] * xy[2][a1] + a[a2] * xy[2][a2] # 求出c

# n=K11+k22-2*k12

if m == "xianxinghe": # 线性核

n = xy[0][a1] ** 2 + xy[1][a1] ** 2 + xy[0][a2] ** 2 + xy[1][a2] ** 2 - 2 * (

xy[0][a1] * xy[0][a2] + xy[1][a1] * xy[1][a2])

elif m == "duoxiangshihe": # 多项式核(这里是二次)

n = (xy[0][a1] ** 2 + xy[1][a1] ** 2) ** 2 + (xy[0][a2] ** 2 + xy[1][a2] ** 2) ** 2 - 2 * (

xy[0][a1] * xy[0][a2] + xy[1][a1] * xy[1][a2]) ** 2

else: # 高斯核 取 2σ^2 = 1

n = 2 * math.exp(-1) - 2 * math.exp(-((xy[0][a1] - xy[0][a2]) ** 2 + (xy[1][a1] - xy[1][a2]) ** 2))

# 确定a1的可行域=====================================

if xy[2][a1] == xy[2][a2]:

L = max(0.0, a[a1] + a[a2] - 0.5) # 下界

H = min(0.5, a[a1] + a[a2]) # 上界

else:

L = max(0.0, a[a1] - a[a2]) # 下界

H = min(0.5, 0.5 + a[a1] - a[a2]) # 上界

if n > 0:

a1_New = a[a1] - xy[2][a1] * (Ek[a1] - Ek[a2]) / n # a1_New = a1_old-y1(e1-e2)/n

# print("x1=",xy[0][a1],"y1=",xy[1][a1],"z1=",xy[2][a1],"x2=",xy[0][a2],"y2=",xy[1][a2],"z2=",xy[2][a2],"a1_New=",a1_New)

# 越界裁剪============================================================

if a1_New >= H:

a1_New = H

elif a1_New <= L:

a1_New = L

else:

a1_New = min(H, L)

# 参数更新=======================================

a[a2] = a[a2] + xy[2][a1] * xy[2][a2] * (a[a1] - a1_New) # a2更新

a[a1] = a1_New # a1更新

w = zhichi_w(zhichi, xy, a) # 更新w

b = zhichi_b(zhichi, xy, a) # 更新b

# print("W=", w, "b=", b, "zhichi=", zhichi, "a1=", a[a1], "a2=", a[a2])

# 标记支持向量======================================

for i in zhichi:

if a[i] == 0: # 选了,但值仍为0

loop = loop + 1

e = 'silver'

else:

if xy[2][i] == 1:

e = 'b'

else:

e = 'r'

plt.scatter(x1[0][i], x1[1][i], c='none', s=100, linewidths=1, edgecolor=e)

print("支持向量数为:", len(zhichi), "\na为零支持向量:", loop)

print("有用向量数:", len(zhichi) - loop)

# 返回数据 w b =======================================

return [w, b]

def panduan(xyz, w_b1, w_b2):

c = 0

for i in range(len(xyz[0])):

if (xyz[0][i] * w_b1[0][0] + xyz[1][i] * w_b1[0][1] + w_b1[1]) * xyz[2][i][0] < 0:

c = c + 1

continue

if (xyz[0][i] * w_b2[0][0] + xyz[1][i] * w_b2[0][1] + w_b2[1]) * xyz[2][i][1] < 0:

c = c + 1

continue

return (1 - c / len(xyz[0])) * 100

def huitu(x1, x2, wb1, wb2, name):

x = [x1[0][:], x1[1][:], x1[2][:]]

for i in range(len(x[2])): # 对训练集‘上色’

if x[2][i] == [1, 1]:

x[2][i] = 'r' # 训练集 1 1 红色

elif x[2][i] == [-1, 1]:

x[2][i] = 'g' # 训练集 -1 1 绿色

else:

x[2][i] = 'b' # 训练集 -1 -1 蓝色

plt.scatter(x[0], x[1], c=x[2], alpha=0.8) # 绘点训练集

x = [x2[0][:], x2[1][:], x2[2][:]]

for i in range(len(x[2])): # 对测试集‘上色’

if x[2][i] == [1, 1]:

x[2][i] = 'orange' # 训练集 1 1 橙色

elif x[2][i] == [-1, 1]:

x[2][i] = 'y' # 训练集 -1 1 黄色

else:

x[2][i] = 'm' # 训练集 -1 -1 紫色

plt.scatter(x[0], x[1], c=x[2], alpha=0.8) # 绘点测试集

plt.xlabel('x') # x轴标签

plt.ylabel('y') # y轴标签

plt.title(name) # 标题

xl = np.arange(min(x[0]), max(x[0]), 0.1) # 绘制分类线一

yl = (-wb1[0][0] * xl - wb1[1]) / wb1[0][1]

plt.plot(xl, yl, 'r')

xl = np.arange(min(x[0]), max(x[0]), 0.1) # 绘制分类线二

yl = (-wb2[0][0] * xl - wb2[1]) / wb2[0][1]

plt.plot(xl, yl, 'b')

# 主函数=======================================================

f = open('Iris.txt', 'r') # 读文件

x = [[], [], [], [], []] # 花朵属性,(0,1,2,3),花朵种类

while 1:

yihang = f.readline() # 读一行

if len(yihang) <= 1: # 读到末尾结束

break

fenkai = yihang.split('\t') # 按\t分开

for i in range(4): # 分开的四个值

x[i].append(eval(fenkai[i])) # 化为数字加到x中

if (eval(fenkai[4]) == 1): # 将标签化为向量形式

x[4].append([1, 1])

else:

if (eval(fenkai[4]) == 2):

x[4].append([-1, 1])

else:

x[4].append([-1, -1])

print('数据集=======================================================')

print(len(x[0])) # 数据大小

# 选择数据===================================================

shuxing1 = eval(input("选取第一个属性:"))

if shuxing1 < 0 or shuxing1 > 4:

print("无效选项,默认选择第1项")

shuxing1 = 1

shuxing2 = eval(input("选取第一个属性:"))

if shuxing2 < 0 or shuxing2 > 4 or shuxing1 == shuxing2:

print("无效选项,默认选择第2项")

shuxing2 = 2

# 生成数据集==================================================

lt = list(range(150)) # 得到一个顺序序列

random.shuffle(lt) # 打乱序列

x1 = [[], [], []] # 初始化x1

x2 = [[], [], []] # 初始化x2

for i in lt[0:100]: # 截取部分做训练集

x1[0].append(x[shuxing1][i]) # 加上数据集x属性

x1[1].append(x[shuxing2][i]) # 加上数据集y属性

x1[2].append(x[4][i]) # 加上数据集c标签

for i in lt[100:150]: # 截取部分做测试集

x2[0].append(x[shuxing1][i]) # 加上数据集x属性

x2[1].append(x[shuxing2][i]) # 加上数据集y属性

x2[2].append(x[4][i]) # 加上数据集c标签

print('\n\n开始训练==============================================')

print('\n线性核==============================================')

# 计算 w b============================================

plt.figure(1) # 第一张画布

x = [x1[0][:], x1[1][:], []] # 第一次分类

for i in x1[2]:

x[2].append(i[0]) # 加上数据集标签

wb1 = SMO(x, "SVM")

x = [x1[0][:], x1[1][:], []] # 第二次分类

for i in x1[2]:

x[2].append(i[1]) # 加上数据集标签

wb2 = SMO(x, "SVM")

print("w1为:", wb1[0], " b1为:", wb1[1])

print("w2为:", wb2[0], " b2为:", wb2[1])

# 计算正确率===========================================

print("训练集上的正确率为:", panduan(x1, wb1, wb2), "%")

print("测试集上的正确率为:", panduan(x2, wb1, wb2), "%")

# 绘图 ===============================================

# 圈着的是曾经选中的值,灰色的是选中但更新为0

huitu(x1, x2, wb1, wb2, "SVM")

print('\n多项式核============================================')

# 计算 w b============================================

plt.figure(2) # 第二张画布

x = [x1[0][:], x1[1][:], []] # 第一次分类

for i in x1[2]:

x[2].append(i[0]) # 加上数据集标签

wb1 = SMO(x, "SVM")

x = [x1[0][:], x1[1][:], []] # 第二次分类

for i in x1[2]:

x[2].append(i[1]) # 加上数据集标签

wb2 = SMO(x, "SVM")

print("w1为:", wb1[0], " b1为:", wb1[1])

print("w2为:", wb2[0], " b2为:", wb2[1])

# 计算正确率===========================================

print("训练集上的正确率为:", panduan(x1, wb1, wb2), "%")

print("测试集上的正确率为:", panduan(x2, wb1, wb2), "%")

# 绘图 ===============================================

# 圈着的是曾经选中的值,灰色的是选中但更新为0

huitu(x1, x2, wb1, wb2, "SVM")

print('\n高斯核==============================================')

# 计算 w b============================================

plt.figure(3) # 第三张画布

x = [x1[0][:], x1[1][:], []] # 第一次分类

for i in x1[2]:

x[2].append(i[0]) # 加上数据集标签

wb1 = SMO(x, "SVM")

x = [x1[0][:], x1[1][:], []] # 第二次分类

for i in x1[2]:

x[2].append(i[1]) # 加上数据集标签

wb2 = SMO(x, "SVM")

print("w1为:", wb1[0], " b1为:", wb1[1])

print("w2为:", wb2[0], " b2为:", wb2[1])

# 计算正确率===========================================

print("训练集上的正确率为:", panduan(x1, wb1, wb2), "%")

print("测试集上的正确率为:", panduan(x2, wb1, wb2), "%")

# 绘图 ===============================================

# 圈着的是曾经选中的值,灰色的是选中但更新为0

huitu(x1, x2, wb1, wb2, "SVM")

# 显示所有图

plt.show() # 显示数据集=======================================================

150

选取第一个属性:1

选取第一个属性:2

开始训练==============================================

循环了: 3 次

支持向量数为: 2?

a为零支持向量: 0

有用向量数: 2

循环了: 55 次

支持向量数为: 54?

a为零支持向量: 44

有用向量数: 10

w1为: [0.6000000000000001, -1.2] ?b1为: 1.38

w2为: [-0.34999999999999987, -1.0] ?b2为: 5.924444444444445

训练集上的正确率为: 92.0 %

测试集上的正确率为: 94.0 %

?二者在形式上有几分相似,但实际上有很大不同。

简而言之,神经网络是个“黑匣子”,优化目标是基于经验风险最小化,易陷入局部最优,训练结果不太稳定,一般需要大样本;

而支持向量机有严格的理论和数学基础,基于结构风险最小化原则, 泛化能力优于前者,算法具有全局最优性, 是针对小样本统计的理论。

目前来看,虽然二者均为机器学习领域非常流行的方法,但后者在很多方面的应用一般都优于前者。

4. 尝试基于MNIST手写数字识别数据集,设计合适的前馈神经网络进行实验,并取得95%以上的准确率。

import torch

import torch.nn as nn

from torch.utils.data import DataLoader, TensorDataset

import torch.optim as optim

from scipy.io import loadmat

from torch.autograd import Variable

import torch.nn.functional as F

from sklearn.preprocessing import OneHotEncoder

from sklearn.metrics import accuracy_score, roc_auc_score, precision_score, recall_score

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

import numpy as np

import time

import warnings

warnings.filterwarnings("ignore")

def LoadMatFile(dataset='mnist'):

if dataset == 'usps':

X = loadmat('usps_train.mat')

X = X['usps_train']

y = loadmat('usps_train_labels.mat')

y = y['usps_train_labels']

else:

X = loadmat('mnist_train.mat')

X = X['mnist_train']

y = loadmat('mnist_train_labels.mat')

y = y['mnist_train_labels']

return X, y

loss = nn.CrossEntropyLoss()

class Recognition(nn.Module):

def __init__(self, dim_input, dim_output, depth=3):

super(Recognition, self).__init__()

self.depth = depth

self.linear = nn.Linear(dim_input, dim_input)

self.final = nn.Linear(dim_input, dim_output)

self.relu = nn.ReLU()

def net(self):

nets = nn.ModuleList()

for i in range(self.depth-1):

nets.append(self.linear)

nets.append(self.relu)

nets.append(self.final)

return nets

def forward(self, X):

nets = self.net()

for n in nets:

X = n(X)

y_pred = torch.sigmoid(X)

return y_pred

def props_to_onehot(props):

if isinstance(props, list):

props = np.array()

a = np.argmax(props, axis=1)

b = np.zeros((len(a), props.shape[1]))

b[np.arange(len(a)), a] = 1

return b

def process_labels(y):

labels = dict()

for i in y:

if i[0] in labels:

continue

else:

labels[i[0]] = len(labels)

Y = []

for i in y:

Y.append([labels[i[0]]])

return np.array(Y), len(labels)

def eval(y_hat, y):

y_hat = y_hat.detach().numpy()

encoder = OneHotEncoder(categories='auto')

y = encoder.fit_transform(y)

y = y.toarray()

roc = roc_auc_score(y, y_hat, average='micro')

y_hat = props_to_onehot(y_hat)

acc = accuracy_score(y, y_hat)

precision = precision_score(y, y_hat, average='macro')

recall = recall_score(y, y_hat, average='macro')

return acc, precision, roc, recall

if __name__ == "__main__":

data_name = 'usps' # usps, mnist

depth = 2

epoch = 1000

lr = 0.01

batch_size = 32

test_size = 0.2 # train: test = 8:2

X, y = LoadMatFile(data_name)

y, num_output = process_labels(y)

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=test_size)

X_train = torch.FloatTensor(X_train)

X_test = torch.FloatTensor(X_test)

y_train = torch.LongTensor(y_train)

y_test = torch.LongTensor(y_test)

dataset = TensorDataset(X_train, y_train)

dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=True)

net = Recognition(X_train.shape[1], num_output, depth=depth)

optimzer = optim.SGD(net.parameters(), lr=lr)

loss_history = []

epoch_his = []

acc_history = []

roc_history = []

recall_history = []

precision_history = []

start = time.time()

for i in range(epoch):

epoch_his.append(i)

print('epoch ', i)

net.train()

for X, y in dataloader:

X = Variable(X)

y_pred = net(X)

l = loss(y_pred, y.squeeze()).sum()

l.backward()

optimzer.step()

optimzer.zero_grad()

loss_history.append(l)

net.eval()

y_hat = net(X_test)

acc, p, roc, recall = eval(y_hat, y_test)

acc_history.append(acc)

recall_history.append(recall)

roc_history.append(roc)

precision_history.append(p)

print('loss:{}, acc:{}, precision:{}, roc:{}, recall:{}'.format(

l, acc, p, roc, recall))

elapsed = (time.time() - start)

print('total time: {}'.format(elapsed))

plt.plot(np.array(epoch_his), np.array(loss_history), label='loss')

plt.legend()

plt.savefig('loss_{}_depth{}_lr{}_epoch{}_batch{}.png'.format(

data_name, depth, lr, epoch, batch_size))

plt.show()

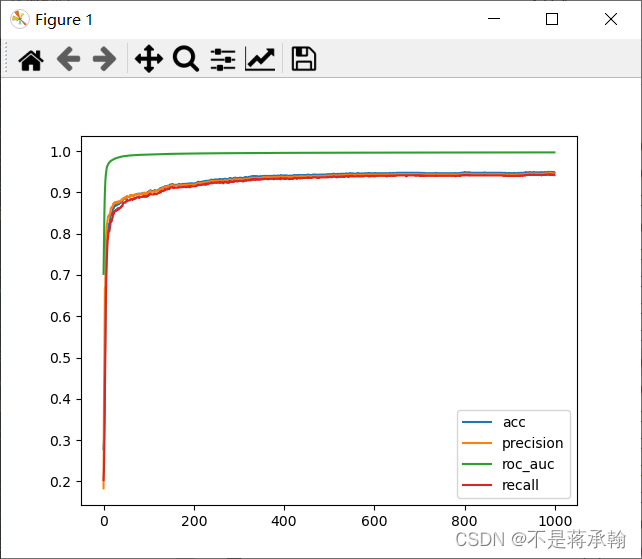

plt.plot(np.array(epoch_his), np.array(acc_history), label='acc')

plt.plot(np.array(epoch_his), np.array(

precision_history), label='precision')

plt.plot(np.array(epoch_his), np.array(roc_history), label='roc_auc')

plt.plot(np.array(epoch_his), np.array(recall_history), label='recall')

plt.legend()

plt.savefig('metrics_{}_depth{}_lr{}_epoch{}_batch{}.png'.format(

data_name, depth, lr, epoch, batch_size))

plt.show()

?epoch ?997

loss:1.4835679531097412, acc:0.9483870967741935, precision:0.9453482437669699, roc:0.9966766870929201, recall:0.9418365760280014

epoch ?998

loss:1.4650973081588745, acc:0.9494623655913978, precision:0.9463293024467889, roc:0.9966754024228877, recall:0.9432651474565729

epoch ?999

loss:1.4738668203353882, acc:0.9483870967741935, precision:0.9453482437669699, roc:0.9966756593568943, recall:0.9418365760280014

?

?在训练1000次后,识别准确率达到了95%

总结

更深入了解了神经网络,同时复习了上学期的SVM。

神经网络的优势要在数据量很大,计算力很强的时候才能体现,数据量小的话,很多任务上的表现都不是很好。

SVM属于非参数方法,拥有很强的理论基础和统计保障。损失函数拥有全局最优解,而且当数据量不大的时候,收敛速度很快,超参数即便需要调整,但也有具体的含义,比如高斯kernel的大小可以理解为数据点之间的中位数距离。在神经网络普及之前,引领了机器学习的主流,那时候理论和实验都同样重要。

思维导图