Deep Learning Lab 4: Convolutional Neural Network Programming

In this lab we use the classes in torch.nn to design a convolutional neural network that classifies MNIST handwritten digit images.

name = 'x'  # fill in your name
sid = 'B02014152'  # fill in your student ID
print('Name: %s, Student ID: %s' % (name, sid))

Name: x, Student ID: B02014152

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

import numpy as np

import matplotlib.pyplot as plt

1. Designing the CNN class

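Derive a subclass CNN from torch.nn.Module to represent a convolutional neural network.

Design the network's layers sensibly and try different structures, such as skip connections, batch normalization, and group convolution.

Your model must contain at least 2 convolutional layers and 2 pooling layers.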

# Add code below to implement a convolutional neural network that recognizes MNIST handwritten digits
# conv1 = nn.Conv2d(in_channels = 3, out_channels = 16, kernel_size = 3, padding = 0) # Valid Conv
# conv2 = nn.Conv2d(in_channels = 3, out_channels = 16, kernel_size = 3, padding = 1) # Same Conv
# conv3 = nn.Conv2d(in_channels = 3, out_channels = 16, kernel_size = 3, padding = 2) # Full Conv
class CNN(torch.nn.Module):
    def __init__(self):
        super().__init__()
        # 1x28x28 -> 10x26x26 (valid 3x3 conv) -> 10x13x13 (2x2 max pool)
        self.convd1 = nn.Conv2d(in_channels = 1, out_channels = 10, kernel_size = 3, padding = 0)
        self.pool1 = torch.nn.MaxPool2d(kernel_size = 2, stride = 2)
        # 10x13x13 -> 20x11x11 (valid 3x3 conv) -> 20x5x5 (2x2 max pool, rounded down)
        self.convd2 = nn.Conv2d(in_channels = 10, out_channels = 20, kernel_size = 3, padding = 0)
        self.pool2 = torch.nn.MaxPool2d(kernel_size = 2, stride = 2)
        # 20 * 5 * 5 = 500 flattened features -> 10 class scores
        self.fc = torch.nn.Linear(500, 10)

    def forward(self, x):
        # x must already be on the same device as the model (x.cuda() is not in-place)
        batch_size = x.size(0)
        z1 = self.pool1(self.convd1(x))
        a1 = F.relu(z1)
        z2 = self.pool2(self.convd2(a1))
        a2 = F.relu(z2)
        a2 = a2.view(batch_size, -1)  # flatten to (N, 500)
        a2 = self.fc(a2)
        # No softmax here: F.cross_entropy expects raw logits
        # (a softmax over the classes would use dim=1, not dim=0)
        return a2
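The class above is a plain conv/pool stack. The assignment also suggests trying batch normalization, skip connections and group convolution; the sketch below shows one way to combine all three in a single model. The class name ResidualBNCNN, the 16 channels and the 4 groups are hypothetical choices for illustration, not part of the original lab.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ResidualBNCNN(nn.Module):
    """Hypothetical variant of CNN: BatchNorm, a grouped conv and a skip connection."""

    def __init__(self, num_classes=10):
        super().__init__()
        # Stem: 1x28x28 -> 16x28x28 ("same" padding), normalized by BatchNorm
        self.conv1 = nn.Conv2d(1, 16, kernel_size=3, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(16)
        self.pool1 = nn.MaxPool2d(2, 2)  # -> 16x14x14
        # Residual block: grouped 3x3 conv (4 groups of 4 channels) keeps 16x14x14
        self.conv2 = nn.Conv2d(16, 16, kernel_size=3, padding=1, groups=4, bias=False)
        self.bn2 = nn.BatchNorm2d(16)
        self.pool2 = nn.MaxPool2d(2, 2)  # -> 16x7x7
        self.fc = nn.Linear(16 * 7 * 7, num_classes)

    def forward(self, x):
        x = self.pool1(F.relu(self.bn1(self.conv1(x))))
        # Skip connection: add the block input to the block output before the ReLU
        x = F.relu(self.bn2(self.conv2(x)) + x)
        x = self.pool2(x)
        return self.fc(torch.flatten(x, 1))  # raw logits, suitable for F.cross_entropy


net_variant = ResidualBNCNN()
out = net_variant(torch.rand(32, 1, 28, 28))
print(out.shape)  # torch.Size([32, 10])
```

The layers are created once in `__init__` and only called in `forward()`; with `groups=4`, each filter of conv2 sees 16 / 4 = 4 input channels, so its weight has shape 16 × 4 × 3 × 3.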

# Test the CNN class

X = torch.rand((32,1,28,28),dtype = torch.float32)

net = CNN()

Y = net(X)

print(Y)

Running this cell with an earlier version of `forward()` that contained the line `x = nn.BatchNorm2d(num_features=1)` raised

TypeError: conv2d(): argument 'input' (position 1) must be Tensor, not BatchNorm2d

because that line rebinds `x` to a BatchNorm2d module instead of applying the layer to the tensor. A normalization layer has to be created once in `__init__` (for example `self.bn = nn.BatchNorm2d(1)`) and then called on the tensor in `forward()` (`x = self.bn(x)`). With the CNN class as defined above, the cell runs and returns a 32 × 10 tensor:

Y.shape

torch.Size([32, 10])

# Inspect the parameters of the CNN
for name, param in net.named_parameters():
    print(name, ':', param.size())

convd1.weight : torch.Size([10, 1, 3, 3])

convd1.bias : torch.Size([10])

convd2.weight : torch.Size([20, 10, 3, 3])

convd2.bias : torch.Size([20])

fc.weight : torch.Size([10, 500])

fc.bias : torch.Size([10])
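These parameter shapes determine the Param # column of the torchsummary output: a Conv2d layer has out × in × k × k weights plus one bias per output channel, and a Linear layer has out × in weights plus out biases. A small arithmetic check, using hypothetical helper names conv2d_params and linear_params:

```python
def conv2d_params(in_ch, out_ch, k, bias=True):
    # one k x k kernel per (output, input) channel pair, plus one bias per output channel
    return out_ch * in_ch * k * k + (out_ch if bias else 0)


def linear_params(in_features, out_features, bias=True):
    # weight matrix of shape out_features x in_features, plus one bias per output
    return out_features * in_features + (out_features if bias else 0)


counts = {
    'convd1': conv2d_params(1, 10, 3),
    'convd2': conv2d_params(10, 20, 3),
    'fc': linear_params(500, 10),
}
total = sum(counts.values())
print(counts, total)  # {'convd1': 100, 'convd2': 1820, 'fc': 5010} 6930
```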

# Print the model

print(net)

CNN(

(convd1): Conv2d(1, 10, kernel_size=(3, 3), stride=(1, 1))

(pool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(convd2): Conv2d(10, 20, kernel_size=(3, 3), stride=(1, 1))

(pool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(fc): Linear(in_features=500, out_features=10, bias=True)

)

# Print the model structure with torchsummary

# !pip install -i https://pypi.tuna.tsinghua.edu.cn/simple torchsummary

from torchsummary import summary

summary(net.cuda(), input_size = (1,28,28))

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 10, 26, 26] 100

MaxPool2d-2 [-1, 10, 13, 13] 0

Conv2d-3 [-1, 20, 11, 11] 1,820

MaxPool2d-4 [-1, 20, 5, 5] 0

Linear-5 [-1, 10] 5,010

================================================================

Total params: 6,930

Trainable params: 6,930

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.00

Forward/backward pass size (MB): 0.09

Params size (MB): 0.03

Estimated Total Size (MB): 0.12

----------------------------------------------------------------
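The Output Shape column follows from the size formula PyTorch uses for convolution and max pooling with dilation 1 and ceil_mode=False: out = floor((in + 2 · padding - kernel) / stride) + 1. The sketch below (hypothetical helper conv_out) traces a 28×28 input through the CNN, which shows why fc takes 20 × 5 × 5 = 500 inputs, and also evaluates the valid/same/full padding examples from the commented-out conv1 to conv3 lines.

```python
def conv_out(size, kernel, stride=1, padding=0):
    # floor((size + 2*padding - kernel) / stride) + 1, assuming dilation 1 and no ceil_mode
    return (size + 2 * padding - kernel) // stride + 1


h = 28
h = conv_out(h, 3)             # convd1, valid conv: 26
h = conv_out(h, 2, stride=2)   # pool1: 13
h = conv_out(h, 3)             # convd2: 11
h = conv_out(h, 2, stride=2)   # pool2: 5 (11 / 2 rounded down)
print(h, 20 * h * h)           # 5 500

# Valid, same and full padding for a 3x3 kernel on a 28x28 input
print(conv_out(28, 3, padding=0), conv_out(28, 3, padding=1), conv_out(28, 3, padding=2))  # 26 28 30
```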

2. Training the model

2.1 Step 1: preprocess the data

After transforms.ToTensor(), MNIST pixel values lie in [0,1]; first map them to [-1,1]. In PyTorch this can be done with the torchvision.transforms.Normalize class.

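from torchvision import datasets, transforms
# Build a transform that maps pixel values from [0,1] to [-1,1]: (x - 0.5) / 0.5
normalizer = transforms.Normalize((0.5,), (0.5,))  # one line of code
# Define a transform pipeline with two steps: the first converts a PIL image to a tensor, the second is normalizer
transform = transforms.Compose([transforms.ToTensor(), normalizer])  # one line of code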
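transforms.Normalize computes (x - mean) / std per channel, so mean = std = 0.5 sends 0 to -1 and 1 to 1. The plotting code in step 2.5 undoes this with imgs[i]/2 + 0.5 before displaying an image. A scalar sketch of both directions (the function names normalize and denormalize are illustrative only):

```python
def normalize(p, mean=0.5, std=0.5):
    # same arithmetic as transforms.Normalize((0.5,), (0.5,)) applied to one pixel
    return (p - mean) / std


def denormalize(z):
    # inverse mapping: z * 0.5 + 0.5, written as z / 2 + 0.5 in the plotting code
    return z / 2 + 0.5


print([normalize(p) for p in (0.0, 0.5, 1.0)])  # [-1.0, 0.0, 1.0]
print(denormalize(normalize(0.25)))              # 0.25
```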

2.2 Step 2: build the training and test sets with the preprocessing applied

data_path = '../data/'

mnist_train = datasets.MNIST(data_path,download=False,train = True,transform = transform)

mnist_test = datasets.MNIST(data_path,download=False,train = False,transform = transform)

2.3 Step 3: build the data loaders

batch_size = 32  # batch_size can be set to any value you like

train_loader = torch.utils.data.DataLoader(mnist_train, batch_size = batch_size, shuffle = True)

test_loader = torch.utils.data.DataLoader(mnist_test, batch_size = batch_size, shuffle = False)
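With the default drop_last=False, one pass over a DataLoader yields ceil(n / batch_size) batches, the last one possibly smaller. MNIST has 60,000 training and 10,000 test images, so with batches of 32 the counts are as computed below. This is also a quick way to tell which loader produced a training log: the printed iteration indices always stay below the number of batches.

```python
import math

example_batch_size = 32         # same value as batch_size above
n_train, n_test = 60000, 10000  # standard MNIST split sizes

# drop_last=False keeps the final partial batch, hence ceil
train_batches = math.ceil(n_train / example_batch_size)
test_batches = math.ceil(n_test / example_batch_size)
print(train_batches, test_batches)  # 1875 313
```

The last test batch holds 10000 - 312 × 32 = 16 images.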

# Fetch one batch from the loader and print the shapes of the sample tensors
imgs, labels = next(iter(train_loader))  # one batch of samples

imgs.shape

torch.Size([32, 1, 28, 28])

labels.shape

torch.Size([32])

2.4 Step 4: train the model

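Note: when training a convolutional neural network, the network input is a 4-D tensor of size $N\times C\times H\times W$, whose dimensions are the batch size, the number of channels, the image height, and the image width, respectively.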
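A single grayscale MNIST image is only H × W, so it needs a channel axis and a batch axis before it can be passed to a Conv2d layer. The sketch below uses random data in place of a real image:

```python
import torch

img = torch.rand(28, 28)             # one grayscale image: H x W
x = img.unsqueeze(0).unsqueeze(0)    # add channel and batch axes: 1 x 1 x 28 x 28
print(x.shape)                       # torch.Size([1, 1, 28, 28])

# Stacking 32 single-channel images gives the N x C x H x W layout a DataLoader produces
batch = torch.stack([img.unsqueeze(0)] * 32)
N, C, H, W = batch.shape
print(N, C, H, W)                    # 32 1 28 28
```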

def Train(model, loader, epochs, lr = 0.01):
    epsilon = 1e-6
    # Put the model in training mode (one line of code)
    model.train()
    # Define a suitable optimizer (one line of code)
    optimizer = optim.SGD(model.parameters(), lr=lr, momentum=0.5)
    # Define the loss function (one line of code)
    loss = F.cross_entropy
    # Complete the training code below
    # Print the loss once every 100 iterations
    loss0 = 0
    for epoch in range(epochs):
        for it, (imgs, labels) in enumerate(loader):
            # 1. zero the gradients (one line)
            optimizer.zero_grad()
            imgs, labels = imgs.cuda(), labels.cuda()
            # 2. forward propagation (one line)
            logits = model(imgs)
            # 3. compute the loss (one line)
            loss1 = loss(logits, labels)
            # Leave the current epoch early if the loss barely changes between consecutive batches
            if abs(loss1.item() - loss0) < epsilon:
                break
            loss0 = loss1.item()
            if it % 100 == 0:
                print('epoch %d, iter %d, loss = %f\n' % (epoch, it, loss1.item()))
            # 4. backward propagation (one line)
            loss1.backward()
            # 5. gradient descent step (one line)
            optimizer.step()
    return model
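Train hard-codes .cuda(), so it fails on a machine without a GPU. A minimal sketch of the same five steps with device selection, run on a hypothetical tiny linear model and one fixed random batch just to exercise the loop; plain SGD without momentum is used here for this toy check, and all toy_* names are illustrative:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

torch.manual_seed(0)
# Use the GPU when one is present, otherwise fall back to the CPU
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Hypothetical toy model and a fixed random batch (not MNIST data)
toy_model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10)).to(device)
toy_imgs = torch.rand(32, 1, 28, 28)
toy_labels = torch.randint(0, 10, (32,))
toy_opt = optim.SGD(toy_model.parameters(), lr=0.01)

toy_model.train()
toy_losses = []
for step in range(50):
    x, y = toy_imgs.to(device), toy_labels.to(device)  # move the batch to the model's device
    toy_opt.zero_grad()                                # 1. clear old gradients
    loss = F.cross_entropy(toy_model(x), y)            # 2-3. forward pass and loss
    loss.backward()                                    # 4. backpropagation
    toy_opt.step()                                     # 5. gradient step
    toy_losses.append(loss.item())

print('first loss %.3f, last loss %.3f' % (toy_losses[0], toy_losses[-1]))
```

Repeating steps on one fixed batch should drive its loss down; if it does not, the step order or the optimizer setup is the first thing to check.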

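# Train the model
model = CNN()
model = model.cuda()
# Train on the training split. The log below was recorded with test_loader passed here
# (313 batches of 32 per epoch, so the printed iterations stop at 300), i.e. the model was
# trained on the test set, which makes the test accuracy reported in 2.5 optimistic.
model = Train(model, train_loader, 10)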

epoch 0, iter 0, loss = 2.317181

epoch 0, iter 100, loss = 1.612653

epoch 0, iter 200, loss = 0.475313

epoch 0, iter 300, loss = 0.267556

epoch 1, iter 0, loss = 0.272252

epoch 1, iter 100, loss = 0.387812

epoch 1, iter 200, loss = 0.248591

epoch 1, iter 300, loss = 0.130585

epoch 2, iter 0, loss = 0.101499

epoch 2, iter 100, loss = 0.289020

epoch 2, iter 200, loss = 0.127526

epoch 2, iter 300, loss = 0.072299

epoch 3, iter 0, loss = 0.069821

epoch 3, iter 100, loss = 0.237272

epoch 3, iter 200, loss = 0.073975

epoch 3, iter 300, loss = 0.052888

epoch 4, iter 0, loss = 0.055265

epoch 4, iter 100, loss = 0.210844

epoch 4, iter 200, loss = 0.046827

epoch 4, iter 300, loss = 0.046028

epoch 5, iter 0, loss = 0.044145

epoch 5, iter 100, loss = 0.192648

epoch 5, iter 200, loss = 0.031938

epoch 5, iter 300, loss = 0.042316

epoch 6, iter 0, loss = 0.036941

epoch 6, iter 100, loss = 0.179299

epoch 6, iter 200, loss = 0.023668

epoch 6, iter 300, loss = 0.039010

epoch 7, iter 0, loss = 0.032049

epoch 7, iter 100, loss = 0.168726

epoch 7, iter 200, loss = 0.018773

epoch 7, iter 300, loss = 0.036361

epoch 8, iter 0, loss = 0.027803

epoch 8, iter 100, loss = 0.158944

epoch 8, iter 200, loss = 0.015419

epoch 8, iter 300, loss = 0.034879

epoch 9, iter 0, loss = 0.024135

epoch 9, iter 100, loss = 0.149009

epoch 9, iter 200, loss = 0.012855

epoch 9, iter 300, loss = 0.033128


2.5 Step 5: test the model

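# Model evaluation procedure
def Evaluate(model, loader):
    model.eval()  # disable training-only behaviour such as dropout and BatchNorm batch statistics
    correct = 0
    counts = 0
    with torch.no_grad():  # gradients are not needed for evaluation
        for imgs, labels in loader:
            imgs, labels = imgs.cuda(), labels.cuda()
            logits = model(imgs)
            yhat = logits.argmax(dim = 1)
            correct = correct + (yhat == labels).sum().item()
            counts = counts + imgs.size(0)
    accuracy = correct / counts
    return accuracy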
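Evaluate reports one overall accuracy. To see which digits the model confuses, the same yhat and labels tensors could be accumulated into a confusion matrix. The helper confusion_matrix below is hypothetical and is demonstrated on small toy tensors; in the notebook, each batch's yhat and labels (moved to the CPU) could be passed in and the per-batch matrices summed.

```python
import torch


def confusion_matrix(yhat, labels, num_classes=10):
    # Row = true class, column = predicted class; encode each pair as true * C + pred
    idx = labels * num_classes + yhat
    counts = torch.bincount(idx, minlength=num_classes * num_classes)
    return counts.reshape(num_classes, num_classes)


labels_demo = torch.tensor([0, 0, 1, 2, 2, 2])
yhat_demo = torch.tensor([0, 1, 1, 2, 2, 0])
cm = confusion_matrix(yhat_demo, labels_demo, num_classes=3)
per_class_acc = cm.diag().float() / cm.sum(dim=1).float()  # recall of each true class
overall_acc = cm.diag().sum().item() / cm.sum().item()      # same quantity Evaluate returns
print(cm)
print(per_class_acc, overall_acc)
```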

acc = Evaluate(model,test_loader)

print('Accuracy = %f'%(acc))

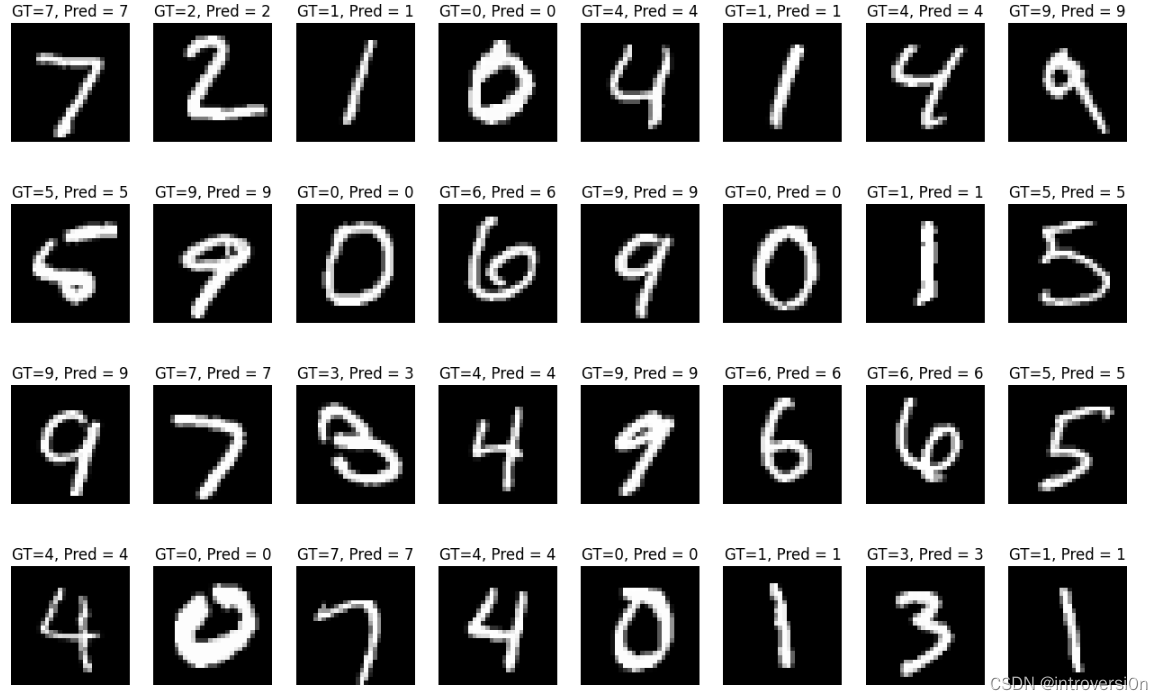

Accuracy = 0.974000

imgs, labels = next(iter(test_loader))
with torch.no_grad():
    logits = model(imgs.cuda())
yhat = logits.argmax(dim = 1).cpu()
plt.figure(figsize = (16,10))
for i in range(imgs.size(0)):
    plt.subplot(4, 8, i+1)
    # undo Normalize((0.5,), (0.5,)) so pixel values are back in [0,1] for display
    plt.imshow(imgs[i].squeeze()/2+0.5, cmap = 'gray')
    plt.axis('off')
    plt.title('GT=%d, Pred = %d' % (labels[i], yhat[i]))
plt.show()
