Scraping Smart Contract Transaction Records with Selenium

1. Goal: scrape smart contract account addresses and their transaction records from https://eth.btc.com/accounts.

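2. Approach:

- Use explicit waits for page elements

- Use Selenium to scrape the records and decide whether each address is a contract address or an external address (an address shown with the contract icon is a contract address)

- Do a simple first pass over the scraped data to standardize its format

- Append the data to txt files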
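
An explicit wait polls for a condition until it holds or a timeout expires, instead of sleeping for a fixed time. Below is a minimal pure-Python sketch of that idea. `wait_until` and `WaitTimeout` are hypothetical illustrative names and are not part of Selenium. Selenium's `WebDriverWait(driver, timeout).until(...)`, which the scripts use, follows the same pattern. By default it polls every 0.5 s.

```python
import time


class WaitTimeout(Exception):
    """Raised when the condition is still false after `timeout` seconds."""


def wait_until(condition, timeout=20.0, poll=0.5):
    """Call condition() every `poll` seconds until it returns a truthy value.

    Returns that value, or raises WaitTimeout once `timeout` seconds have passed.
    """
    deadline = time.monotonic() + timeout
    while True:
        value = condition()
        if value:
            return value  # condition met: stop waiting immediately
        if time.monotonic() >= deadline:
            raise WaitTimeout(f"condition not met within {timeout} s")
        time.sleep(poll)  # not yet: wait one polling interval and retry
```

The wait returns as soon as the condition holds. A fast page therefore costs almost nothing, and a slow one still gets up to `timeout` seconds.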

3. Source code (Python)

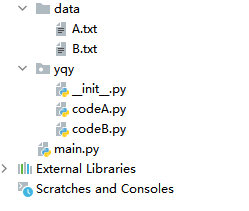

Project directory structure

- Data scraped by codeA is saved in A.txt

- Data scraped by codeB is saved in B.txt
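
Both scripts write each row with `','.join(...)`. They therefore strip commas from cell text (for example thousands separators) so that a comma cannot split a field. An alternative, not what the scripts do, is Python's standard `csv` module, which quotes fields that contain commas so the values survive a round trip. `append_rows` and `read_column` are hypothetical helper names. `read_column(path, 2)` corresponds to how codeB reads the link column (`sp[2]`) from A.txt.

```python
import csv


def append_rows(path, rows):
    """Append rows to a comma-separated text file, quoting fields as needed."""
    with open(path, "a", newline="", encoding="utf-8") as f:
        csv.writer(f).writerows(rows)


def read_column(path, index):
    """Return column `index` from every row that has at least index + 1 fields."""
    with open(path, newline="", encoding="utf-8") as f:
        return [row[index] for row in csv.reader(f) if len(row) > index]
```

For example, after appending `["contract address", "1", "https://example.com/a", "1,234.5 ETH"]`, `read_column(path, 3)` returns `['1,234.5 ETH']` with the comma intact.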

# @Content : scrape account address page A
from selenium import webdriver
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as ECS
from selenium.webdriver.common.by import By
from selenium.common.exceptions import TimeoutException
import time

url = 'https://eth.btc.com/accounts'
opt = webdriver.ChromeOptions()  # create browser options
driver = webdriver.Chrome(options=opt)  # create the browser object
driver.get(url)  # open the page

pageSum = 2  # number of pages to scrape
all_list = []  # master list for data set A
timeOut = 20  # seconds to wait for the table to appear

# CSS selector of the table element on the page
table_css = ('#root > div > section > section > main > div > div > div.page-container > '
             'div:nth-child(2) > div > div > div > div > div > div > table')

for q in range(1, pageSum + 1):
    print('Scraping page ' + str(q) + '...')
    try:
        # explicit wait: wait up to timeOut seconds until the table is present
        WebDriverWait(driver, timeOut).until(
            ECS.presence_of_element_located((By.CSS_SELECTOR, table_css)))
    except TimeoutException:
        # the table did not appear within timeOut seconds: stop scraping
        print('Timed out: element not found! Stopped at page', q)
        break

    # the table was found: locate it and collect its tr (row) elements
    element = driver.find_element(By.CSS_SELECTOR, table_css)
    tr_content = element.find_elements(By.TAG_NAME, 'tr')
    for tr in tr_content:
        # an element with class contract-tx-icon in this row marks a contract address
        icon = tr.find_elements(By.CLASS_NAME, 'contract-tx-icon')
        addr_type = 'contract address' if icon else 'external address'
        # holds the data of the current row
        tempList = [addr_type]
        # for each td cell, save the links it contains (e.g. the address link), then its text
        for td in tr.find_elements(By.TAG_NAME, 'td'):
            for u in td.find_elements(By.TAG_NAME, 'a'):
                tempList.append(u.get_attribute('href'))
            # remove commas so they cannot break the comma-separated output
            tdText = str(td.text).strip().replace(',', '')
            if len(tdText) > 0:
                tempList.append(tdText)
        tempLen = len(tempList)
        # drop fields that are not needed (indices follow the page's column layout)
        if tempLen == 8:
            del tempList[4]
        if tempLen > 1:  # header rows have no td, so tempList holds only the type
            del tempList[5]
            with open('../data/A.txt', 'a+', encoding='utf-8') as f:
                f.write(','.join(tempList))
                f.write('\n')
            all_list.append(tempList)

    print('Finished page ' + str(q) + '!')
    # click the next-page button to continue with the next page
    driver.find_element(
        By.XPATH,
        '/html/body/div/div/section/section/main/div/div/div[2]/div[3]/div/ul/li[2]/div[3]/i').click()
    # after clicking, wait briefly (0.5 s) before reading the next page; otherwise an error is raised
    time.sleep(0.5)

print('All done!!!', pageSum, 'pages in total')
for line in all_list:
    print(line)
driver.quit()
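
Script A's row normalization can be checked without launching a browser by moving its logic into a pure function. In this sketch each `td` is modeled as a `(text, hrefs)` pair. `build_account_row` is a hypothetical name, and the sample values in the usage line are invented. One deliberate difference from the script: rows shorter than six fields are kept as-is instead of raising `IndexError` on `del row[5]`.

```python
def build_account_row(is_contract, cells):
    """Mirror script A's per-row processing.

    is_contract: whether the row contains a contract-tx-icon element.
    cells: one (text, hrefs) pair per <td>, in page order.
    Returns the normalized field list, or None for rows without <td> (headers).
    """
    row = ['contract address' if is_contract else 'external address']
    for text, hrefs in cells:
        row.extend(hrefs)  # links in the cell come before its text, as in script A
        text = text.strip().replace(',', '')  # remove commas (e.g. thousands separators)
        if text:
            row.append(text)
    n = len(row)
    if n <= 1:
        return None  # header row: only the type label was added
    if n == 8:
        del row[4]  # 8-field rows carry one extra field at index 4
    if len(row) > 5:
        del row[5]  # drop the field at index 5, as script A does
    return row
```

With hypothetical cells `[('1', []), ('0xabc', ['https://example.com/0xabc']), ('1,234.5 ETH', []), ('12.3%', []), ('42', [])]`, the link lands at index 2. That is the column codeB later reads from A.txt.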

# @Content : scrape transaction record page B
from selenium import webdriver
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as ECS
from selenium.webdriver.common.by import By
from selenium.common.exceptions import TimeoutException
import time

# read the link field (third column) scraped into A.txt
urls_in_A = []
with open('../data/A.txt', 'r', encoding='utf-8') as f:
    for line in f:
        sp = line.strip().split(',')
        if len(sp) > 2:  # skip blank or malformed lines
            urls_in_A.append(sp[2])

opt = webdriver.ChromeOptions()  # create browser options
driver = webdriver.Chrome(options=opt)  # create the browser object
recordsMaxNum = 10  # maximum number of records to scrape per account URL
all_list = []  # master list for data set B
timeOut = 20  # seconds to wait for the table rows to appear

# CSS selector of the transaction table
t_css = '#accountCopyContainer > div.margin-top-md > div > div.ant-tabs-content.ant-tabs-content-animated.ant-tabs-top-content > div.ant-tabs-tabpane.ant-tabs-tabpane-active > div.account-txns > div.ant-table-wrapper > div > div > div > div > div > table'

for urlA in urls_in_A:
    driver.get(urlA)  # open the page
    print('Scraping...', urlA)
    try:
        # explicit wait: wait until the first tr under the table's tbody is present
        table_loc = (By.CSS_SELECTOR, t_css + ' > tbody > tr:nth-child(1)')
        WebDriverWait(driver, timeOut).until(ECS.presence_of_element_located(table_loc))
    except TimeoutException:
        # no rows appeared within timeOut seconds: skip this URL
        print('Timed out: table element not found! Skipping', urlA)
        continue

    # the table was found: locate it and collect its tr elements
    element = driver.find_element(By.CSS_SELECTOR, t_css)
    tr_content = element.find_elements(By.TAG_NAME, 'tr')
    all_ct = 0  # records saved for this URL
    for tr in tr_content:
        # scrape at most recordsMaxNum records per URL
        if all_ct >= recordsMaxNum:
            break
        # holds the current row's data, starting with the URL being scraped
        tempList = [urlA]
        # position of the current td; the 4th and 5th fields are the address fields,
        # for which we also record whether each one is a contract address
        temp_ct = 0
        for td in tr.find_elements(By.TAG_NAME, 'td'):
            temp_ct += 1
            tdText = str(td.text).strip().replace(',', '')
            if len(tdText) > 0:
                tempList.append(tdText)
            if temp_ct == 4 or temp_ct == 5:
                # an element with class contract-tx-icon in this td marks a contract address
                icon = td.find_elements(By.CLASS_NAME, 'contract-tx-icon')
                tempList.append('contract address' if icon else 'external address')
        if len(tempList) > 1:
            # append the current row to B.txt
            with open('../data/B.txt', 'a+', encoding='utf-8') as f:
                f.write(','.join(tempList))
                f.write('\n')
            all_list.append(tempList)
            all_ct += 1

    print('Finished!', urlA)
    # pause briefly, then move on to the next URL
    time.sleep(0.25)

print('All done!!!', len(all_list), 'records in total!')
for line in all_list:
    print(line)
driver.quit()
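
Script B's per-row logic can be tested as pure functions in the same way. This covers the `recordsMaxNum` cap and the contract/external label appended after the 4th and 5th fields. `build_txn_row` and `collect_records` are hypothetical names, and each `td` is modeled as a `(text, has_contract_icon)` pair. That positions 4 and 5 are the address columns comes from the script's own comment.

```python
def build_txn_row(account_url, cells):
    """Mirror script B's per-row processing.

    cells: one (text, has_contract_icon) pair per <td>, in page order.
    Returns the field list, or None when the row has no <td> (header row).
    """
    row = [account_url]  # every record starts with the account URL
    for position, (text, has_icon) in enumerate(cells, start=1):
        text = text.strip().replace(',', '')
        if text:
            row.append(text)
        if position in (4, 5):  # address fields: label them right after their text
            row.append('contract address' if has_icon else 'external address')
    return row if len(row) > 1 else None


def collect_records(account_url, rows, max_records=10):
    """Build records for one account, keeping at most max_records of them."""
    records = []
    for cells in rows:
        if len(records) >= max_records:
            break  # same cap as recordsMaxNum in script B
        row = build_txn_row(account_url, cells)
        if row is not None:  # header rows are skipped and not counted
            records.append(row)
    return records
```

Because header rows return `None` and are not counted, the cap applies only to actual transaction rows, as in the script.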