2021SC@SDUSC

一、MerkleProof

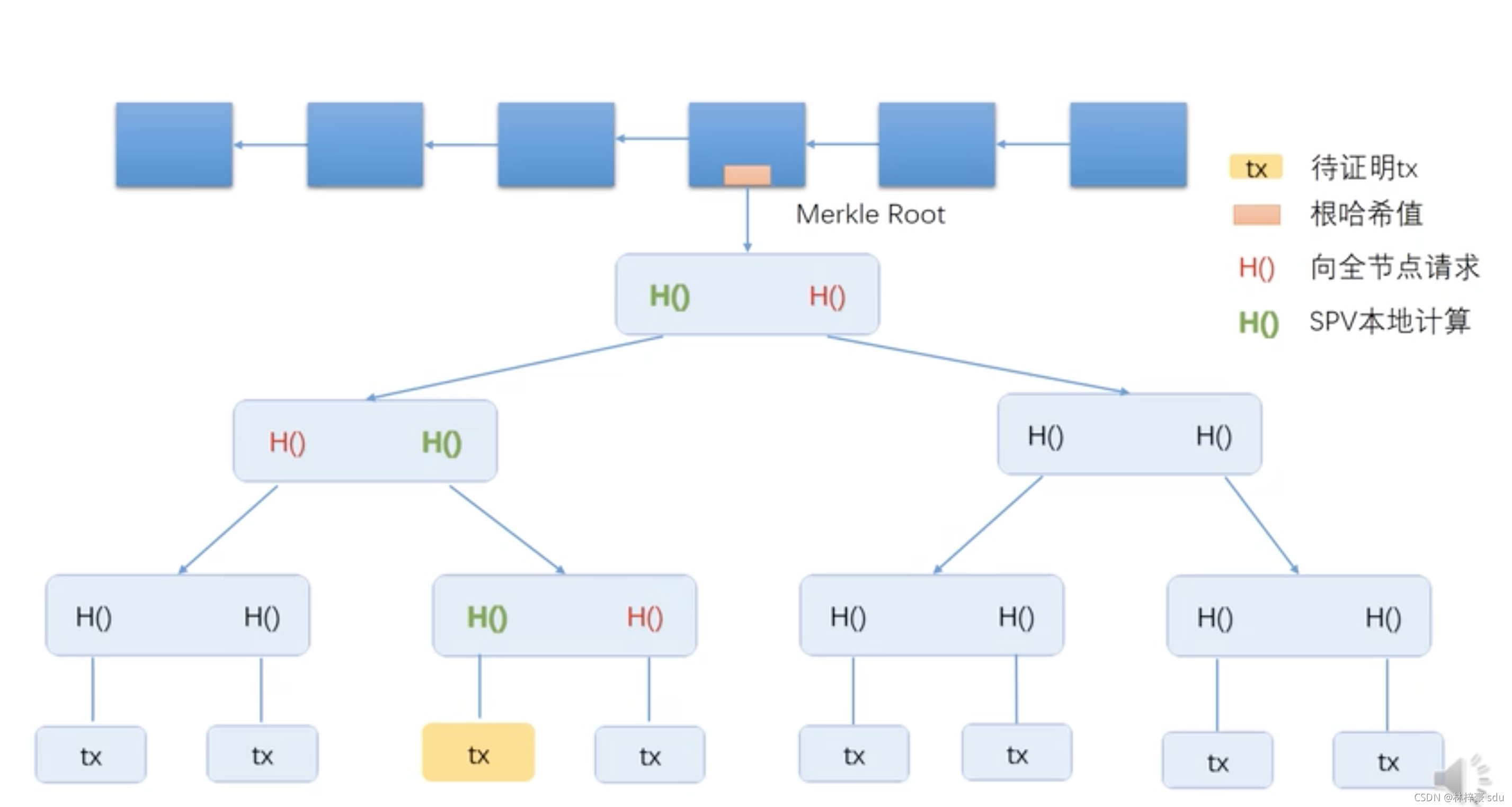

假如一个轻节点想知道一个事物信息是否被保存到了区块中,它可以向途中的全节点发出请求,这个轻节点会被给到下图中三个红色的哈希指针,这样他就可以在本地算出绿色的哈希指针,即由这个事务算出的哈希值,顺着叶节点就可以找到MerkleTree的根节点,而这个header就被保存在区块链的某个节点当中。

那么接下来我们就看看jdchain中MerkleProof的内容,如下方代码:

package com.jd.blockchain.ledger;

public interface MerkleProof {

com.jd.blockchain.crypto.HashDigest getRootHash();

com.jd.blockchain.ledger.MerkleProofLevel[] getProofLevels();

com.jd.blockchain.crypto.HashDigest getDataHash();

}

我们可以看到MerkleProof中包含着三个接口,一个是HashDigest getRootHash()另一个是getDataHash();顾名思义,我们可以知道这个事一个获取根节点获取哈希的数据的方法;究其根本,我们可以看到这么两个接口,第一个关于字节序列的接口,这个接口定义了MerkleTree的尾部事物序列,具有不存在证明的职能。第二个接口可以使事务连续,这样可以防止他人篡改。

public interface ByteSequence {

int size();

byte byteAt(int index);

default boolean equal(byte[] data) { /* compiled code */ }

utils.ByteSequence subSequence(int start, int end);

int copyTo(int srcOffset, byte[] dest, int destOffset, int length);

default int copyTo(byte[] dest, int destOffset, int length) { /* compiled code */ }

}

public interface BytesSerializable {

byte[] toBytes();

}

我们还可以看到一个getProofLevels();,这个是树的level是按照倒置的方式计算,而不是以根节点的距离衡量,即叶子节点的 level 是 0; 所有的数据的哈希索引都以叶子节点进行记录每一个数据节点都以标记一个序列号(Sequence Number, 缩写为 SN),并按照序列号的大小统一地在 level 0;

下面的代码我们可以看出,它同样调用了HashDigest的方法,获取了节点的node,用来辨别Merkle树的层级。

public interface MerkleProofLevel {

int getProofPoint();

com.jd.blockchain.crypto.HashDigest[] getHashNodes();

}

二、MerkleSortTree

首先这个类implements了Transactional的接口,这个接口的职能就基本是更新,提交,删除的事物。

public interface Transactional {

boolean isUpdated();

void commit();

void cancel();

}

默克尔排序树;所有的数据的哈希索引都以叶子节点进行记录;随着数据节点的增加,整棵树以倒置方式向上增长(根节点在上,叶子节点在下),此设计带来显著特性是已有节点的信息都可以不必修改;但由于对单个账本中的写入过程被设计为同步写入,因而非线程安全的设计并不会影响在此场景下的使用,而且由于省去了线程间同步操作,反而提升了性能;

构建了一个空的树

public MerkleSortTree(TreeOptions options, String keyPrefix, ExPolicyKVStorage kvStorage,BytesConverter<T> converter) {

this(TreeDegree.D3, options, Bytes.fromString(keyPrefix), kvStorage, converter);

}

创建了一个MerkleSortTree:

rootHash 节点的根Hash;

如果指定为 null,则实际上创建一个空的 Merkle Tree;

options 树的配置选项;

keyPrefix 存储数据的 key 的前缀;

kvStorage 保存 Merkle 节点的存储服务;

converter 默克尔树的转换器;

dataPolicy 数据节点的处理策略;

可以通过更改setting来创建不同数据类型的树

public MerkleSortTree(HashDigest rootHash, TreeOptions options, Bytes keyPrefix, ExPolicyKVStorage kvStorage,

BytesConverter<T> converter, DataPolicy<T> dataPolicy) {

this.KEY_PREFIX = keyPrefix;

this.OPTIONS = options;

this.KV_STORAGE = kvStorage;

this.DEFAULT_HASH_FUNCTION = Crypto.getHashFunction(options.getDefaultHashAlgorithm());

this.CONVERTER = converter;

this.DATA_POLICY = dataPolicy;

MerkleIndex merkleIndex = loadMerkleEntry(rootHash);

int subtreeCount = merkleIndex.getChildCounts().length;

TreeDegree degree = null;

for (TreeDegree td : TreeDegree.values()) {

if (td.DEGREEE == subtreeCount) {

degree = td;

}

}

if (degree == null) {

throw new MerkleProofException("The root node with hash[" + rootHash.toBase58() + "] has wrong degree!");

}

this.DEGREE = degree.DEGREEE;

this.MAX_LEVEL = degree.MAX_DEPTH;

this.MAX_COUNT = MathUtils.power(DEGREE, MAX_LEVEL);

this.root = new PathNode(rootHash, merkleIndex, this);

refreshMaxId();

}

从指定的默克尔索引开始,搜索指定 id 的数据,并记录搜索经过的节点的哈希;如果数据不存在,则返回 null;

merkleIndex 开始搜索的默克尔索引节点;

id 要查找的数据的 id;

pathSelector 路径选择器,记录搜索过程经过的默克尔节点;

搜索到的指定 ID 的数据;

private T seekData(MerkleIndex merkleIndex, long id, MerkleEntrySelector pathSelector) {

int idx = index(id, merkleIndex);

if (idx < 0) {

return null;

}

if (merkleIndex.getStep() > 1) {

MerkleIndex child;

if (merkleIndex instanceof PathNode) {

PathNode path = (PathNode) merkleIndex;

child = path.getChild(idx);

if (child == null) {

return null;

}

HashDigest childHash = path.getChildHashs()[idx];

pathSelector.accept(childHash, child);

} else {

HashDigest[] childHashs = merkleIndex.getChildHashs();

HashDigest childHash = childHashs[idx];

if (childHash == null) {

return null;

}

child = loadMerkleEntry(childHash);

pathSelector.accept(childHash, child);

}

return seekData((MerkleIndex) child, id, pathSelector);

}

// leaf node;

T child;

if (merkleIndex instanceof LeafNode) {

@SuppressWarnings("unchecked")

LeafNode<T> path = (LeafNode<T>) merkleIndex;

child = path.getChild(idx);

} else {

HashDigest[] childHashs = merkleIndex.getChildHashs();

HashDigest childHash = childHashs[idx];

if (childHash == null) {

return null;

}

child = CONVERTER.fromBytes(loadNodeBytes(childHash));

}

return child;

}

合并指定的索引节点和数据节点

indexNodeHash 索引节点的哈希;

indexNode 索引节点;

dataId 数据节点的 id;

data 数据;

返回共同的父节点;如果未更新数据,则返回 null;

private MerkleNode mergeChildren(HashDigest indexNodeHash, MerkleIndex indexNode, long dataId, T data) {

final long PATH_OFFSET = indexNode.getOffset();

final long PATH_STEP = indexNode.getStep();

long pathId = PATH_OFFSET;

long dataOffset = calculateOffset(dataId, PATH_STEP);

long pathOffset = PATH_OFFSET;

long step = PATH_STEP;

while (dataOffset != pathOffset) {

step = upStep(step);

if (step >= MAX_COUNT) {

throw new IllegalStateException("The 'step' overflows!");

}

dataOffset = calculateOffset(dataId, step);

pathOffset = calculateOffset(pathId, step);

}

// 判断参数指定的索引节点和数据节点是否具有从属关系,还是并列关系;

if (step == PATH_STEP && pathOffset == PATH_OFFSET) {

// 数据节点属于 pathNode 路径节点;

// 把数据节点合并到 pathNode 路径节点;

int index = index(dataId, pathOffset, step);

if (PATH_STEP > 1) {

PathNode parentNode;

if (indexNode instanceof PathNode) {

parentNode = (PathNode) indexNode;

} else {

parentNode = new PathNode(indexNodeHash, indexNode, this);

}

boolean ok = updateChildAtIndex(parentNode, index, dataId, data);

return ok ? parentNode : null;

} else {

// while PATH_STEP == 1, this index node is leaf;

LeafNode<T> parentNode;

if (indexNode instanceof LeafNode) {

parentNode = (LeafNode<T>) indexNode;

} else {

parentNode = new LeafNode<T>(indexNodeHash, indexNode, this);

}

boolean ok = updateChildAtIndex(parentNode, index, dataId, data);

return ok ? parentNode : null;

}

} else {

// 数据节点不从属于 pathNode 路径节点,它们有共同的父节点;

// 创建共同的父节点;

PathNode parentPathNode = new PathNode(pathOffset, step, this);

int dataChildIndex = parentPathNode.index(dataId);

boolean ok = updateChildAtIndex(parentPathNode, dataChildIndex, dataId, data);

if (!ok) {

return null;

}

int pathChildIndex = parentPathNode.index(pathId);

updateChildAtIndex(parentPathNode, pathChildIndex, indexNodeHash, indexNode);

return parentPathNode;

}

}

这个是一个判断子节点是否溢出,或超出范围的方法。

public long skip(long count) {

if (count < 0) {

throw new IllegalArgumentException("The specified count is out of bound!");

}

if (count == 0) {

return 0;

}

if (childIndex >= TREE.DEGREE) {

return 0;

}

long s = ArrayUtils.sum(childCounts, 0, childIndex + 1);

long skipped;// 实际可略过的数量;

long currLeft = s - cursor - 1;

if (count < currLeft) {

// 实际略过的数量在 index 指示的当前子节点的范围内;

if (childIterator == null) {

childIterator = createChildIterator(childIndex);

}

skipped = count;

long sk = childIterator.skip(skipped);

assert sk == skipped;

} else {

// 已经超过 index 指示的当前子节点的剩余数量,直接忽略当前子节点;

childIterator = null;

skipped = currLeft;

childIndex++;

while (childIndex < TREE.DEGREE && skipped + childCounts[childIndex] <= count) {

skipped += childCounts[childIndex];

childIndex++;

}

if (childIndex < TREE.DEGREE) {

// 未超出子节点的范围;

long c = count - skipped;

childIterator = createChildIterator(childIndex);

long sk = childIterator.skip(c);

assert sk == c;

skipped = count;

}

}

cursor = cursor + skipped;

return skipped;

}