1 System versions

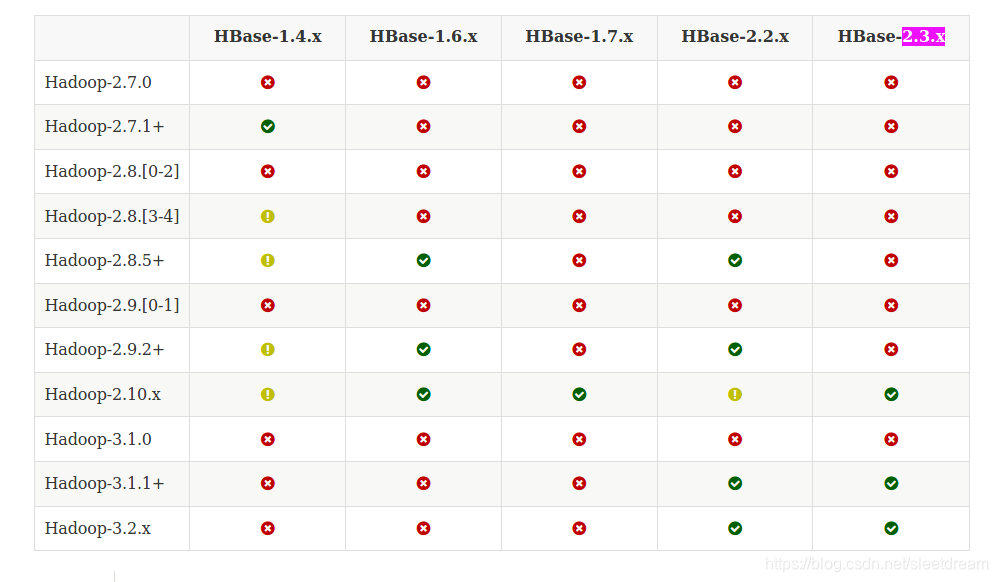

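HBase and Hadoop compatibility matrix:

http://hbase.apache.org/book.html#basic.prerequisites

Matching versions matters: even a small mismatch can break the setup. For example, Hadoop 3.3.0 with HBase 2.3.5 did not work in this setup.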

| Component | Version |
|---|---|
| Windows | Windows 10 Home (Chinese edition) |
| JDK | 1.8.0_291 |
| Hadoop | 3.1.3 |
| HBase | 2.3.5 |
| winutils | 3.1.0 |
| Jansi | 1.18 |

Hadoop

https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/

HBase

http://archive.apache.org/dist/hbase/

winutils

https://github.com/s911415/apache-hadoop-3.1.0-winutils

Jansi

http://fusesource.github.io/jansi/download.html

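Notes:

(1) Extract the archives with administrator privileges; otherwise some files may fail to extract.

(2) Make sure the Hadoop and HBase paths contain no spaces. The JDK path should preferably contain no spaces either; see the bug analysis in section 9.3 for details.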
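
The no-spaces rule in note (2) is easy to check before installing anything. The following is a minimal sketch in Python, not part of the original setup; the helper name `paths_with_spaces` and the sample paths are illustrative assumptions.

```python
def paths_with_spaces(paths):
    """Return the paths that contain at least one space character."""
    return [p for p in paths if " " in p]


if __name__ == "__main__":
    # Sample install locations (assumed for illustration only).
    candidates = [
        r"C:\Program Files\Java\jdk1.8.0_291",
        r"D:\ProgramFiles\Apache\hadoop-3.1.3",
        r"D:\ProgramFiles\Apache\hbase-2.3.5",
    ]
    for bad in paths_with_spaces(candidates):
        print("Path contains a space:", bad)
```

With the sample list, only the default JDK location under C:\Program Files is reported.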

2 配置环境变量

我的电脑 --> 属性 --> 高级系统设置 --> 高级 --> 环境变量

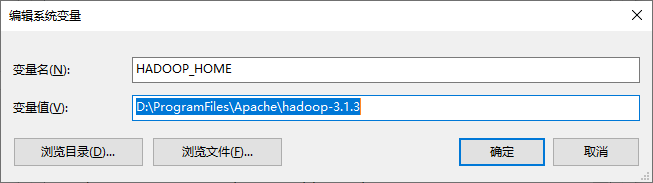

新建HADOOP_HOME

在PATH中添加HADOOP_HOME\bin路径,HADOOP_HOME\sbin路径

![image](https://img-blog.csdnimg.cn/20210709201714184.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3NsZWV0ZHJlYW0=,size_16,color_FFFFFF,t_70)

3 验证安装

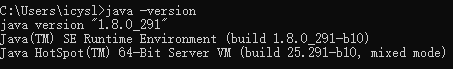

3.1 验证JAVA

java -version

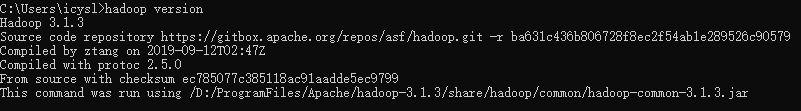

3.2 Verify Hadoop

hadoop version

4 Configure Hadoop

Open the hadoop-3.1.3\etc\hadoop directory.

4.1 hadoop-env.cmd

set JAVA_HOME=C:\ProgramFiles\Java\jdk1.8.0_291

4.2 core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

4.3 hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/D:/ProgramFiles/Apache/hadoop-3.1.3/data/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/D:/ProgramFiles/Apache/hadoop-3.1.3/data/datanode</value>

</property>

</configuration>
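
Every Hadoop site file uses the same name/value property layout, so a short script can read the values back and confirm what was actually configured. This is only a sketch: the function name `read_properties` and the inline `SAMPLE` string are assumptions for illustration, and a real check would read the file from etc\hadoop instead.

```python
import xml.etree.ElementTree as ET


def read_properties(xml_text):
    """Parse a Hadoop-style *-site.xml string into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


# Inline copy of part of hdfs-site.xml (illustrative sample).
SAMPLE = """<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/D:/ProgramFiles/Apache/hadoop-3.1.3/data/namenode</value>
  </property>
</configuration>"""

if __name__ == "__main__":
    for key, val in read_properties(SAMPLE).items():
        print(key, "=", val)
```

Parsing the file also catches malformed XML, such as a missing opening tag, before Hadoop does.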

4.4 yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>1024</value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>1</value>

</property>

</configuration>

4.5 mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

4.6 Replace winutils

Copy the files in the winutils bin folder into the bin folder of the Hadoop installation, overwriting the original files.

4.7 hadoop-yarn-server-timelineservice-3.1.3.jar

Copy hadoop-3.1.3\share\hadoop\yarn\timelineservice\hadoop-yarn-server-timelineservice-3.1.3.jar into share\hadoop\yarn\lib.

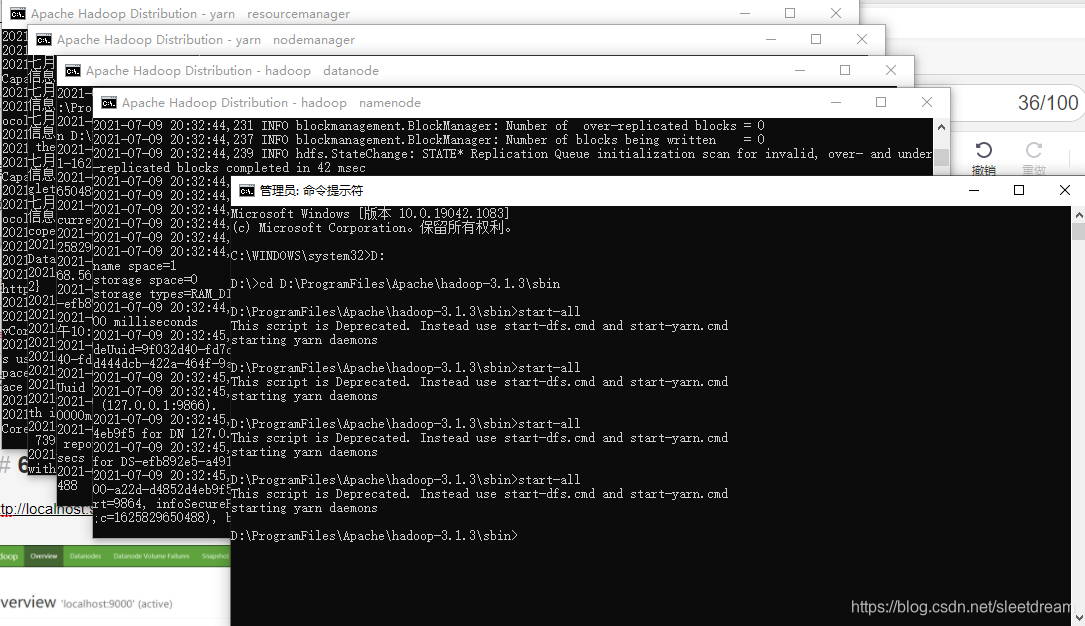
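
The jar copy in 4.7 can also be scripted. The sketch below is a hedged example rather than the original procedure: the helper `copy_jar` is hypothetical, the install root is taken from the paths used elsewhere in this post, and the source folder share\hadoop\yarn\timelineservice is an assumption based on the stock Hadoop 3.1.3 binary layout.

```python
import shutil
from pathlib import Path


def copy_jar(src_dir, dest_dir, jar_name):
    """Copy jar_name from src_dir into dest_dir and return the new path."""
    src = Path(src_dir) / jar_name
    if not src.is_file():
        raise FileNotFoundError(f"jar not found: {src}")
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    target = dest / jar_name
    shutil.copy2(src, target)  # copy2 also preserves file timestamps
    return target


if __name__ == "__main__":
    # Assumed install root and folder layout (adjust to the local machine).
    yarn = Path(r"D:\ProgramFiles\Apache\hadoop-3.1.3") / "share" / "hadoop" / "yarn"
    jar = "hadoop-yarn-server-timelineservice-3.1.3.jar"
    print(copy_jar(yarn / "timelineservice", yarn / "lib", jar))
```

The function raises FileNotFoundError when the jar is not where it is expected, which makes a wrong source folder obvious immediately.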

5 Start Hadoop

5.1 Format the NameNode

hdfs namenode -format

After a successful format, a data directory is created under hadoop-3.1.3.

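5.2 Start HDFS and YARN

cd to the sbin directory:

start-all

Once startup completes, four console windows open (NameNode, DataNode, ResourceManager, NodeManager). The start succeeded if none of them shows an error.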

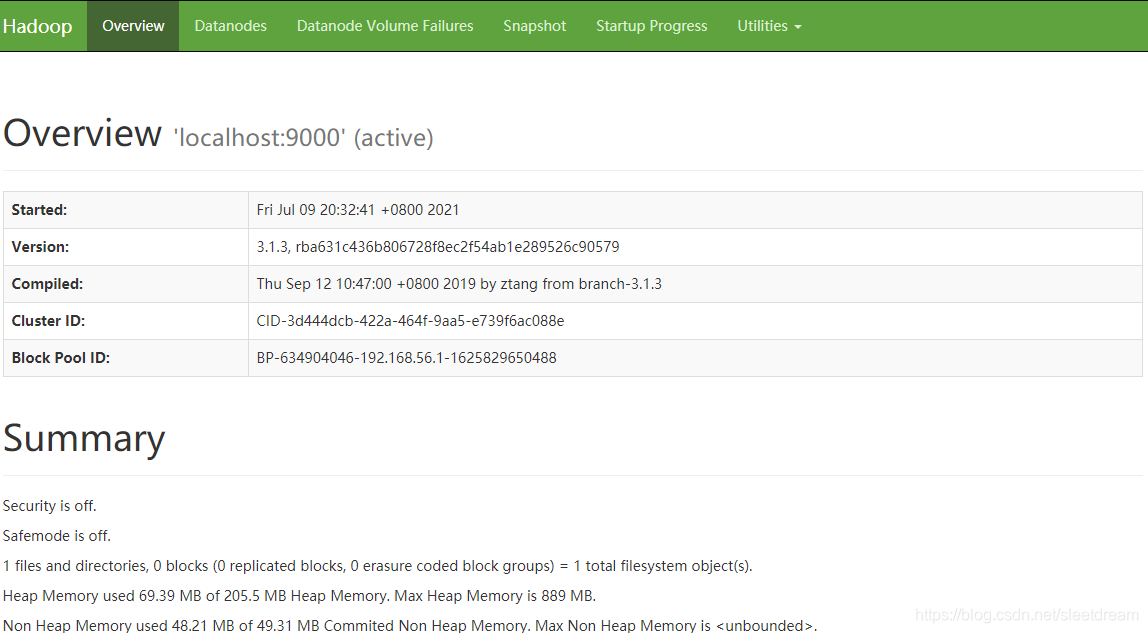

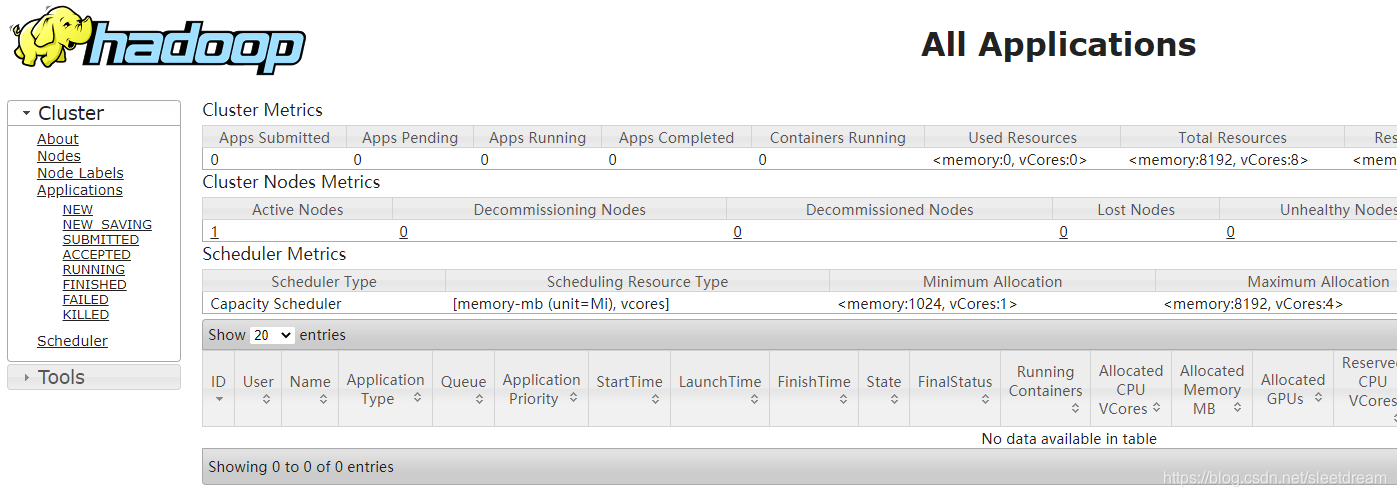

5.3 Open the Hadoop web UIs in a browser

http://localhost:9870

http://localhost:8088/cluster
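
Before opening a browser, it can help to confirm that the two web ports are actually listening. The following Python sketch does a plain TCP connect; the function name `is_port_open` and the 2-second timeout are assumptions, and a successful connect only shows that something is listening on the port, not that the page renders correctly.

```python
import socket


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Ports taken from the two URLs above.
    for label, port in (("NameNode UI", 9870), ("ResourceManager UI", 8088)):
        state = "reachable" if is_port_open("localhost", port) else "not reachable"
        print(f"{label} on port {port}: {state}")
```

If a port is reported as not reachable, the matching console window from step 5.2 is the first place to look for the error.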

6 Configure HBase

6.1 hbase-site.xml

This file is located under hbase-2.3.5\conf.

Insert the following inside the <configuration> tag:

<property>

<name>hbase.rootdir</name>

<value>hdfs://localhost:9000/hbase</value>

</property>

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>

6.2 hbase-env.cmd

Append the following at the end of the file:

set HBASE_MANAGES_ZK=false

set JAVA_HOME=C:\ProgramFiles\Java\jdk1.8.0_291

set HBASE_CLASSPATH=D:\ProgramFiles\Apache\hbase-2.3.5\conf

6.3 jansi-1.18.jar

Place jansi-1.18.jar in hbase-2.3.5\lib. Skipping this step causes the error described in 9.4.

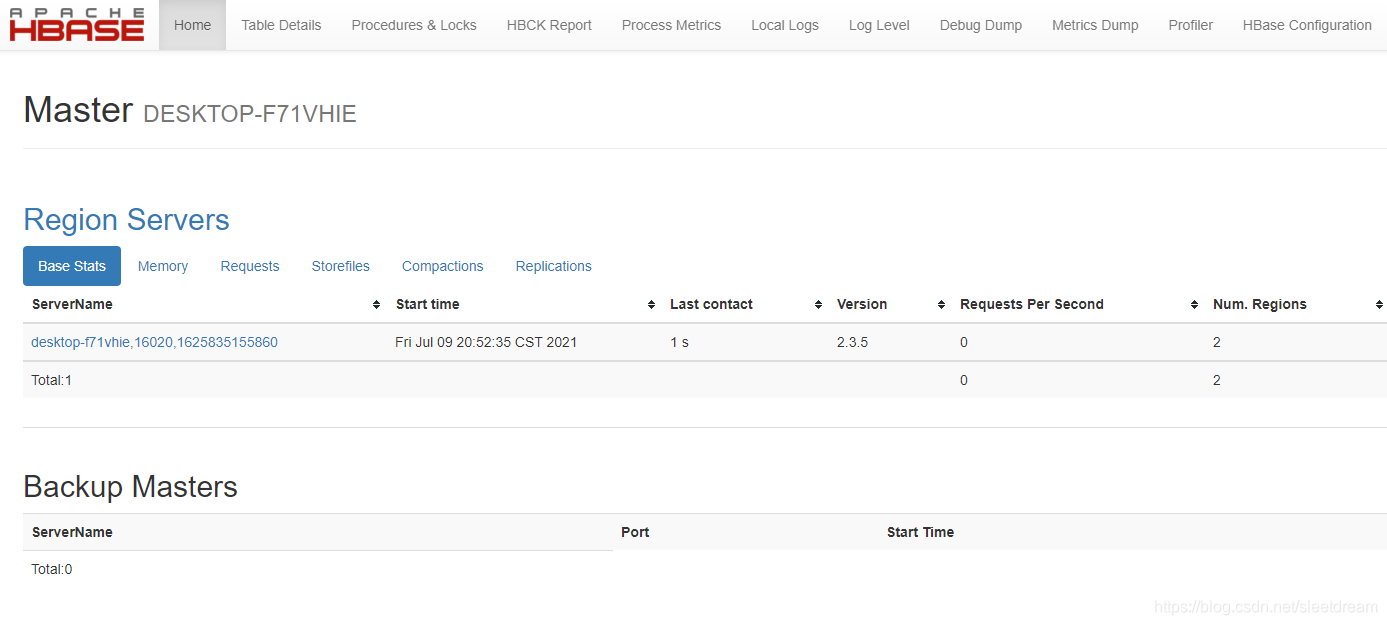

7 Start HBase

7.1 Start the service

cd to the bin directory:

start-hbase

7.2 Access HBase

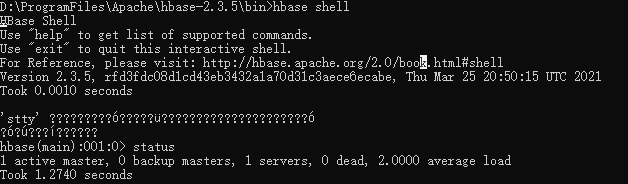

7.3 Test the HBase shell

Open the shell:

hbase shell

Run a quick check:

status

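8 References and acknowledgements

Setting up the latest Hadoop 3.1.3 and HBase 2.2.2 test environment on Windows 10

The "system cannot find the path specified" error when running hadoop commands on Windows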

Could not initialize class org.fusesource.jansi.internal.Kernel32

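9 Problems and bugs

9.1 winutils

If you use a winutils build from a different GitHub repository, such as winutils-master, it may fail to run because msvcr100.dll is missing. The fixes suggested online, installing the missing DLL or a bundle of common Visual C++ runtimes, all failed when tried. The exit code -1073741515 in the log below is 0xC0000135, the Windows status code for a DLL that could not be found: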

2021-07-09 16:32:26,712 WARN checker.StorageLocationChecker: Exception checking StorageLocation [DISK]file:/D:/ProgramFiles/Apache/hadoop-3.1.1/workplace/data/datanode

java.lang.RuntimeException: Error while running command to get file permissions : ExitCodeException exitCode=-1073741515:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:1009)

at org.apache.hadoop.util.Shell.run(Shell.java:902)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:1227)

at org.apache.hadoop.util.Shell.execCommand(Shell.java:1321)

at org.apache.hadoop.util.Shell.execCommand(Shell.java:1303)

at org.apache.hadoop.fs.FileUtil.execCommand(FileUtil.java:1343)

at org.apache.hadoop.fs.RawLocalFileSystem$DeprecatedRawLocalFileStatus.loadPermissionInfoByNonNativeIO(RawLocalFileSystem.java:726)

at org.apache.hadoop.fs.RawLocalFileSystem$DeprecatedRawLocalFileStatus.loadPermissionInfo(RawLocalFileSystem.java:717)

at org.apache.hadoop.fs.RawLocalFileSystem$DeprecatedRawLocalFileStatus.getPermission(RawLocalFileSystem.java:678)

at org.apache.hadoop.util.DiskChecker.mkdirsWithExistsAndPermissionCheck(DiskChecker.java:233)

at org.apache.hadoop.util.DiskChecker.checkDirInternal(DiskChecker.java:141)

at org.apache.hadoop.util.DiskChecker.checkDir(DiskChecker.java:116)

at org.apache.hadoop.hdfs.server.datanode.StorageLocation.check(StorageLocation.java:239)

at org.apache.hadoop.hdfs.server.datanode.StorageLocation.check(StorageLocation.java:52)

at org.apache.hadoop.hdfs.server.datanode.checker.ThrottledAsyncChecker$1.call(ThrottledAsyncChecker.java:142)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

at org.apache.hadoop.fs.RawLocalFileSystem$DeprecatedRawLocalFileStatus.loadPermissionInfoByNonNativeIO(RawLocalFileSystem.java:766)

at org.apache.hadoop.fs.RawLocalFileSystem$DeprecatedRawLocalFileStatus.loadPermissionInfo(RawLocalFileSystem.java:717)

at org.apache.hadoop.fs.RawLocalFileSystem$DeprecatedRawLocalFileStatus.getPermission(RawLocalFileSystem.java:678)

at org.apache.hadoop.util.DiskChecker.mkdirsWithExistsAndPermissionCheck(DiskChecker.java:233)

at org.apache.hadoop.util.DiskChecker.checkDirInternal(DiskChecker.java:141)

at org.apache.hadoop.util.DiskChecker.checkDir(DiskChecker.java:116)

at org.apache.hadoop.hdfs.server.datanode.StorageLocation.check(StorageLocation.java:239)

at org.apache.hadoop.hdfs.server.datanode.StorageLocation.check(StorageLocation.java:52)

at org.apache.hadoop.hdfs.server.datanode.checker.ThrottledAsyncChecker$1.call(ThrottledAsyncChecker.java:142)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2021-07-09 16:32:26,715 ERROR datanode.DataNode: Exception in secureMain

org.apache.hadoop.util.DiskChecker$DiskErrorException: Too many failed volumes - current valid volumes: 0, volumes configured: 1, volumes failed: 1, volume failures tolerated: 0

at org.apache.hadoop.hdfs.server.datanode.checker.StorageLocationChecker.check(StorageLocationChecker.java:220)

at org.apache.hadoop.hdfs.server.datanode.DataNode.makeInstance(DataNode.java:2762)

at org.apache.hadoop.hdfs.server.datanode.DataNode.instantiateDataNode(DataNode.java:2677)

at org.apache.hadoop.hdfs.server.datanode.DataNode.createDataNode(DataNode.java:2719)

at org.apache.hadoop.hdfs.server.datanode.DataNode.secureMain(DataNode.java:2863)

at org.apache.hadoop.hdfs.server.datanode.DataNode.main(DataNode.java:2887)

2021-07-09 16:32:26,719 INFO util.ExitUtil: Exiting with status 1: org.apache.hadoop.util.DiskChecker$DiskErrorException: Too many failed volumes - current valid volumes: 0, volumes configured: 1, volumes failed: 1, volume failures tolerated: 0

2021-07-09 16:32:26,722 INFO datanode.DataNode: SHUTDOWN_MSG:

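9.2 ResourceManager fails to start

After running start-all, the ResourceManager reports an error.

Copying hadoop-3.1.3\share\hadoop\yarn\timelineservice\hadoop-yarn-server-timelineservice-3.1.3.jar into share\hadoop\yarn\lib resolves it. The error log looks like this: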

2021-07-09 19:33:49,324 FATAL resourcemanager.ResourceManager: Error starting ResourceManager

java.lang.NoClassDefFoundError: org/apache/hadoop/yarn/server/timelineservice/collector/TimelineCollectorManager

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:756)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:468)

at java.net.URLClassLoader.access$100(URLClassLoader.java:74)

at java.net.URLClassLoader$1.run(URLClassLoader.java:369)

at java.net.URLClassLoader$1.run(URLClassLoader.java:363)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:362)

at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:355)

at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

at java.lang.Class.getDeclaredMethods0(Native Method)

at java.lang.Class.privateGetDeclaredMethods(Class.java:2701)

at java.lang.Class.getDeclaredMethods(Class.java:1975)

at com.google.inject.spi.InjectionPoint.getInjectionPoints(InjectionPoint.java:688)

at com.google.inject.spi.InjectionPoint.forInstanceMethodsAndFields(InjectionPoint.java:380)

at com.google.inject.spi.InjectionPoint.forInstanceMethodsAndFields(InjectionPoint.java:399)

at com.google.inject.internal.BindingBuilder.toInstance(BindingBuilder.java:84)

at org.apache.hadoop.yarn.server.resourcemanager.webapp.RMWebApp.setup(RMWebApp.java:60)

at org.apache.hadoop.yarn.webapp.WebApp.configureServlets(WebApp.java:160)

at com.google.inject.servlet.ServletModule.configure(ServletModule.java:55)

at com.google.inject.AbstractModule.configure(AbstractModule.java:62)

at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:340)

at com.google.inject.spi.Elements.getElements(Elements.java:110)

at com.google.inject.internal.InjectorShell$Builder.build(InjectorShell.java:138)

at com.google.inject.internal.InternalInjectorCreator.build(InternalInjectorCreator.java:104)

at com.google.inject.Guice.createInjector(Guice.java:96)

at com.google.inject.Guice.createInjector(Guice.java:73)

at com.google.inject.Guice.createInjector(Guice.java:62)

at org.apache.hadoop.yarn.webapp.WebApps$Builder.build(WebApps.java:387)

at org.apache.hadoop.yarn.webapp.WebApps$Builder.start(WebApps.java:432)

at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.startWepApp(ResourceManager.java:1203)

at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.serviceStart(ResourceManager.java:1312)

at org.apache.hadoop.service.AbstractService.start(AbstractService.java:194)

at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.main(ResourceManager.java:1507)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.yarn.server.timelineservice.collector.TimelineCollectorManager

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:355)

at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

... 36 more

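9.3 JDK installation directory

Startup fails with an error saying JAVA_HOME cannot be found. This happens because the Hadoop scripts cannot handle spaces in the JAVA_HOME path. The default Java installation location on Windows is C:\Program Files\Java, which contains a space.

Option 1 (recommended)

Reinstall the JDK into a path without spaces.

Option 2

Edit hadoop-3.1.3\etc\hadoop\hadoop-env.cmd and use the short path name instead: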

set JAVA_HOME=C:\PROGRA~1\Java\jdk1.8.0_291

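PROGRA~1 is the DOS-style 8.3 short name of the C:\Program Files directory. File and folder names longer than 8 characters are shortened to their first 6 valid characters followed by ~1; if several names collide, the suffix becomes ~2, ~3, and so on.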
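
The shortening rule can be written down in a few lines. The sketch below implements only the simplified rule stated in this section; it is not the real Windows algorithm, which also handles file extensions, dots, and characters that are invalid in short names. The function name `approx_short_name` is an assumption.

```python
def approx_short_name(name, index=1):
    """Approximate a DOS 8.3 short name using the simplified rule in the text."""
    # Spaces are not valid in short names, so drop them and uppercase the rest.
    compact = name.replace(" ", "").upper()
    # Names without spaces that already fit in 8 characters are kept as they are.
    if " " not in name and len(compact) <= 8:
        return compact
    # Otherwise keep the first 6 characters and append ~index.
    return f"{compact[:6]}~{index}"


if __name__ == "__main__":
    print(approx_short_name("Program Files"))           # PROGRA~1
    print(approx_short_name("Program Files (x86)", 2))  # PROGRA~2
    print(approx_short_name("Java"))                    # JAVA
```

The actual short name on a given machine can be listed with dir /x in the parent directory, which is safer than guessing the ~N suffix.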

Option 3

Wrap the part of the path that contains the space in quotes:

set JAVA_HOME="C:\Program Files"\Java\jdk1.8.0_291

9.4 HBase shell报错

This is caused by the missing org.fusesource.jansi.internal.Kernel32 class. Download jansi-1.18.jar and place it in hbase-2.3.5\lib.

[ERROR] Terminal initialization failed; falling back to unsupported

java.lang.NoClassDefFoundError: Could not initialize class org.fusesource.jansi.internal.Kernel32

at org.fusesource.jansi.internal.WindowsSupport.getConsoleMode(WindowsSupport.java:50)

at jline.WindowsTerminal.getConsoleMode(WindowsTerminal.java:177)

at jline.WindowsTerminal.init(WindowsTerminal.java:80)

at jline.TerminalFactory.create(TerminalFactory.java:101)

at jline.TerminalFactory.get(TerminalFactory.java:159)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.jruby.javasupport.JavaMethod.invokeDirectWithExceptionHandling(JavaMethod.java:438)

at org.jruby.javasupport.JavaMethod.invokeStaticDirect(JavaMethod.java:360)

at org.jruby.java.invokers.StaticMethodInvoker.call(StaticMethodInvoker.java:36)

at org.jruby.runtime.callsite.CachingCallSite.cacheAndCall(CachingCallSite.java:298)

at org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:127)

at org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:344)

at org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:72)

at org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:78)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:144)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:130)

at org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:194)

at org.jruby.runtime.callsite.CachingCallSite.cacheAndCall(CachingCallSite.java:298)

at org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:127)

at org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:344)

at org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:72)

at org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:78)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:144)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:130)

at org.jruby.runtime.callsite.CachingCallSite.cacheAndCall(CachingCallSite.java:308)

at org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:137)

at org.jruby.RubyClass.newInstance(RubyClass.java:995)

at org.jruby.RubyClass$INVOKER$i$newInstance.call(RubyClass$INVOKER$i$newInstance.gen)

at org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:194)

at org.jruby.runtime.callsite.CachingCallSite.cacheAndCall(CachingCallSite.java:298)

at org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:127)

at org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:344)

at org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:72)

at org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:78)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:144)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:130)

at org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:194)

at org.jruby.runtime.callsite.CachingCallSite.cacheAndCall(CachingCallSite.java:298)

at org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:127)

at org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:344)

at org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:72)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:109)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:95)

at org.jruby.runtime.callsite.CachingCallSite.cacheAndCall(CachingCallSite.java:278)

at org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:79)

at org.jruby.ir.instructions.CallBase.interpret(CallBase.java:432)

at org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:360)

at org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:72)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:109)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:95)

at org.jruby.runtime.callsite.CachingCallSite.cacheAndCall(CachingCallSite.java:278)

at org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:79)

at org.jruby.ir.instructions.CallBase.interpret(CallBase.java:432)

at org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:360)

at org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:72)

at org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:84)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:179)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:165)

at org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:202)

at org.jruby.runtime.callsite.CachingCallSite.cacheAndCall(CachingCallSite.java:318)

at org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:155)

at org.jruby.ir.interpreter.InterpreterEngine.processCall(InterpreterEngine.java:315)

at org.jruby.ir.interpreter.StartupInterpreterEngine.interpret(StartupInterpreterEngine.java:72)

at org.jruby.ir.interpreter.InterpreterEngine.interpret(InterpreterEngine.java:78)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.INTERPRET_METHOD(MixedModeIRMethod.java:144)

at org.jruby.internal.runtime.methods.MixedModeIRMethod.call(MixedModeIRMethod.java:130)

at org.jruby.internal.runtime.methods.DynamicMethod.call(DynamicMethod.java:194)

at org.jruby.runtime.callsite.CachingCallSite.cacheAndCall(CachingCallSite.java:298)

at org.jruby.runtime.callsite.CachingCallSite.call(CachingCallSite.java:127)

at D_3a_.ProgramFiles.Apache.hbase_minus_2_dot_3_dot_5.bin.$_dot_dot_.bin.hirb.invokeOther172:print_banner(D:\ProgramFiles\Apache\hbase-2.3.5\bin\..\bin\hirb.rb:190)

at D_3a_.ProgramFiles.Apache.hbase_minus_2_dot_3_dot_5.bin.$_dot_dot_.bin.hirb.RUBY$script(D:\ProgramFiles\Apache\hbase-2.3.5\bin\..\bin\hirb.rb:190)

at java.lang.invoke.MethodHandle.invokeWithArguments(MethodHandle.java:627)

at org.jruby.ir.Compiler$1.load(Compiler.java:94)

at org.jruby.Ruby.runScript(Ruby.java:830)

at org.jruby.Ruby.runNormally(Ruby.java:749)

at org.jruby.Ruby.runNormally(Ruby.java:767)

at org.jruby.Ruby.runFromMain(Ruby.java:580)

at org.jruby.Main.doRunFromMain(Main.java:417)

at org.jruby.Main.internalRun(Main.java:305)

at org.jruby.Main.run(Main.java:232)

at org.jruby.Main.main(Main.java:204)