Problems encountered after disabling Kerberos authentication on an Ambari + HDP cluster

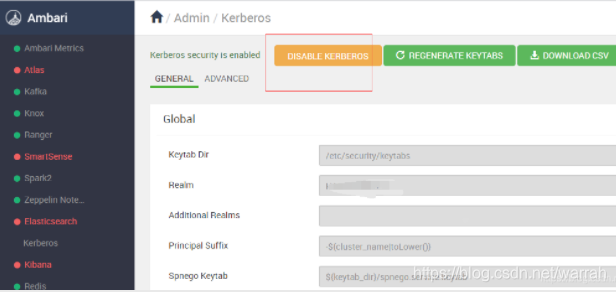

First, disable Kerberos.

On one host, stopping a service failed:

```
Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/SPARK2/package/scripts/job_history_server.py", line 102, in <module>
    JobHistoryServer().execute()
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 352, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/SPARK2/package/scripts/job_history_server.py", line 61, in stop
    spark_service('jobhistoryserver', upgrade_type=upgrade_type, action='stop')
  File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/SPARK2/package/scripts/spark_service.py", line 178, in spark_service
    environment={'JAVA_HOME': params.java_home}
  File "/usr/lib/ambari-agent/lib/resource_management/core/base.py", line 166, in __init__
    self.env.run()
  File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 160, in run
    self.run_action(resource, action)
  File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 124, in run_action
    provider_action()
  File "/usr/lib/ambari-agent/lib/resource_management/core/providers/system.py", line 263, in action_run
    returns=self.resource.returns)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 72, in inner
    result = function(command, **kwargs)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 102, in checked_call
    tries=tries, try_sleep=try_sleep, timeout_kill_strategy=timeout_kill_strategy, returns=returns)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 150, in _call_wrapper
    result = _call(command, **kwargs_copy)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 314, in _call
    raise ExecutionFailed(err_msg, code, out, err)
resource_management.core.exceptions.ExecutionFailed: Execution of '/usr/hdp/current/spark2-historyserver/sbin/stop-history-server.sh' returned 1. stopping org.apache.spark.deploy.history.HistoryServer
/usr/hdp/current/spark2-client/sbin/spark-daemon.sh: line 209: kill: (7874) - Operation not permitted
```

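The root cause was that Regenerate Keytabs had been clicked but did not complete successfully, so every authentication attempt failed. You also need to clean up the process (PID) files under /tmp; otherwise the service status shown by Ambari stays wrong.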
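Before deleting anything under /tmp, it helps to tell stale PID files from live ones. Below is a minimal sketch, assuming the files end in `.pid` and each holds a single PID (the directory and pattern are assumptions; point them at the relevant PID directory). `os.kill(pid, 0)` sends no signal and only checks the PID: it raises `ProcessLookupError` if no such process exists and `PermissionError` if the PID now belongs to another user's process. That is the same EPERM condition behind `kill: (7874) - Operation not permitted` above, for example when a PID number has been recycled. Run it as the service user rather than root, because root may signal every process and would never see `PermissionError`.

```python
import os
from pathlib import Path


def classify_pid_files(pid_dir="/tmp", pattern="*.pid"):
    """Map each PID file to 'stale', 'alive', 'foreign' or 'invalid'.

    Assumption: one PID per file, as written by the *-daemon.sh scripts.
    Nothing is deleted; the caller decides what to clean up.
    """
    result = {}
    for pid_file in sorted(Path(pid_dir).glob(pattern)):
        try:
            pid = int(pid_file.read_text().strip())
        except (OSError, ValueError):
            pid = -1  # unreadable or not a number
        if pid <= 0:
            result[str(pid_file)] = "invalid"
            continue
        try:
            os.kill(pid, 0)  # signal 0: existence/permission check only
        except ProcessLookupError:
            result[str(pid_file)] = "stale"    # process no longer exists
        except PermissionError:
            result[str(pid_file)] = "foreign"  # exists, owned by another user
        else:
            result[str(pid_file)] = "alive"
    return result
```

Only the files reported as `stale` (and perhaps `invalid`) are candidates for removal; a `foreign` entry means the PID file points at someone else's process and is wrong as well.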

```
[root@bg8 sbin]# kinit -kt /etc/security/keytabs/spark.service.keytab spark/bg8.whty.com.cn@HADOOP.WHTY
kinit: Password incorrect while getting initial credentials
```
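`Password incorrect` from `kinit -kt` typically means the key stored in the keytab no longer matches the one the KDC holds, which fits a Regenerate Keytabs run that did not finish. Below is a minimal sketch for checking that, assuming the MIT Kerberos client tools: it lists the key version numbers (KVNOs) in a keytab via `klist -kt`, so they can be compared with what `kvno <principal>` reports from the KDC (that command itself needs a valid ticket, e.g. from an admin principal). The parser assumes data rows start with the KVNO and end with the principal, which holds for `klist -kt` without `-e`.

```python
import subprocess


def parse_keytab_listing(text):
    """Return {principal: set of KVNOs} from `klist -kt` output.

    Assumption: data rows start with an integer KVNO and end with the
    principal; header and separator lines are skipped.
    """
    kvnos = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 2 or not fields[0].isdigit():
            continue  # "Keytab name:", "KVNO Timestamp ...", "----" lines
        kvnos.setdefault(fields[-1], set()).add(int(fields[0]))
    return kvnos


def keytab_kvnos(keytab_path):
    """Run `klist -kt <keytab>` (MIT Kerberos) and parse its output."""
    out = subprocess.run(["klist", "-kt", keytab_path],
                         check=True, capture_output=True, text=True).stdout
    return parse_keytab_listing(out)
```

For example, `keytab_kvnos("/etc/security/keytabs/spark.service.keytab")`; if the highest KVNO for `spark/bg8.whty.com.cn@HADOOP.WHTY` is lower than what `kvno` reports, the keytab is stale and needs regenerating.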

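If your operations team is not experienced enough, it is better not to enable Kerberos in the first place, because all the related application code has to be adapted as well.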

1 zookeeper

ZooKeeper was still using SASL:

```
java.io.IOException: Could not configure server because SASL configuration did not allow the ZooKeeper server to authenticate itself properly: javax.security.auth.login.LoginException: Receive timed out
    at org.apache.zookeeper.server.ServerCnxnFactory.configureSaslLogin(ServerCnxnFactory.java:207)
    at org.apache.zookeeper.server.NIOServerCnxnFactory.configure(NIOServerCnxnFactory.java:87)
    at org.apache.zookeeper.server.quorum.QuorumPeerMain.runFromConfig(QuorumPeerMain.java:130)
    at org.apache.zookeeper.server.quorum.QuorumPeerMain.initializeAndRun(QuorumPeerMain.java:111)
    at org.apache.zookeeper.server.quorum.QuorumPeerMain.main(QuorumPeerMain.java:78)
```

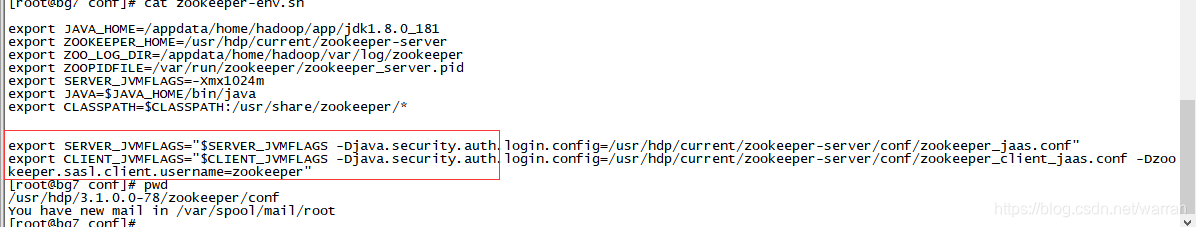

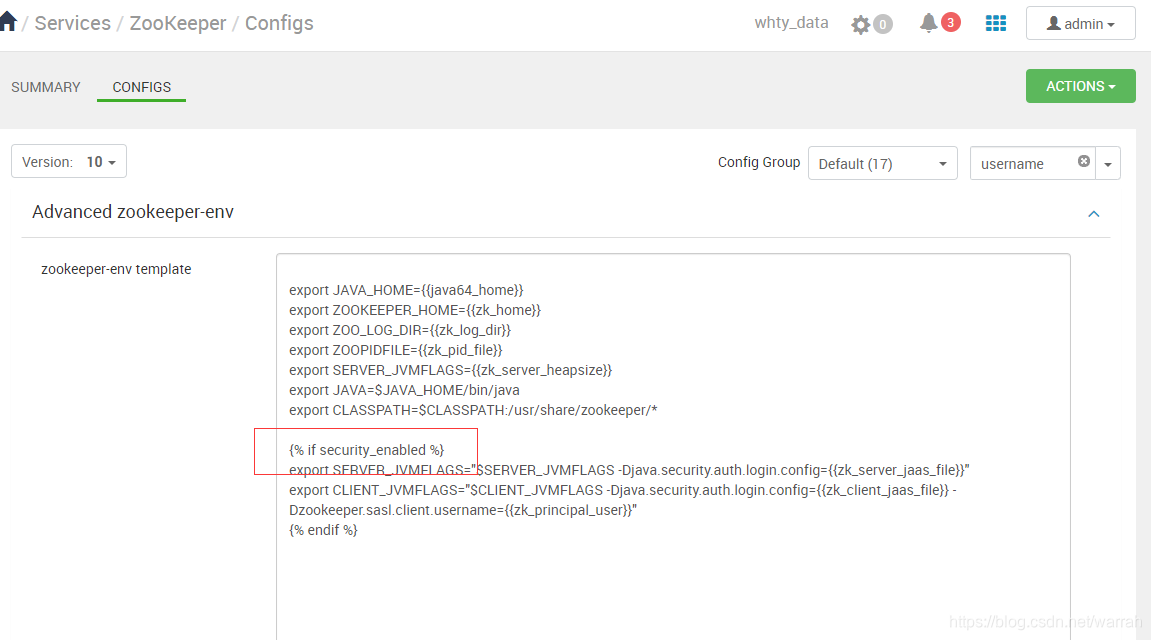

In /usr/hdp/3.1.0.0-78/zookeeper/conf/zookeeper-env.sh, this block is redundant:

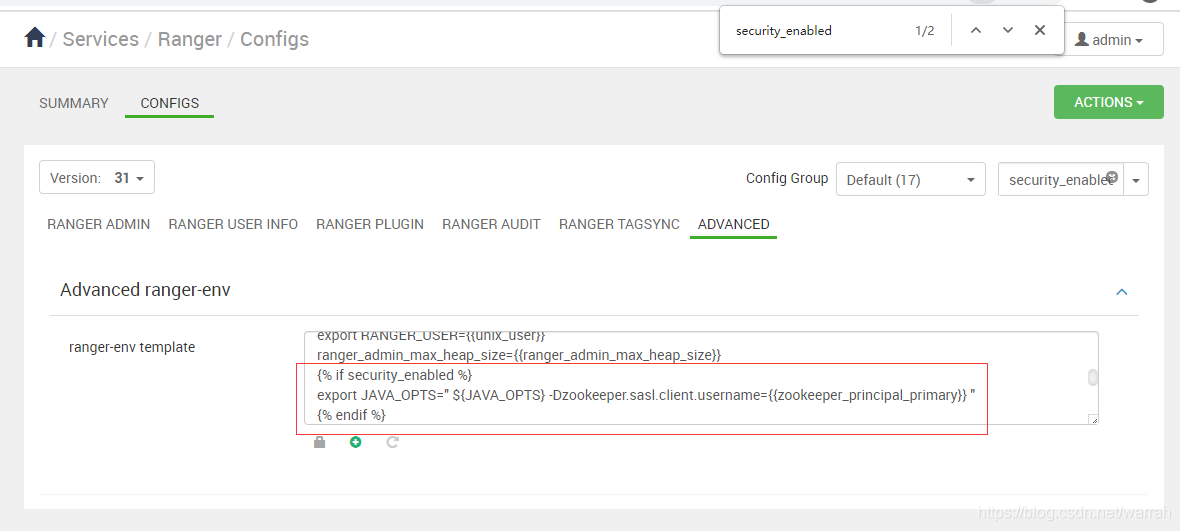

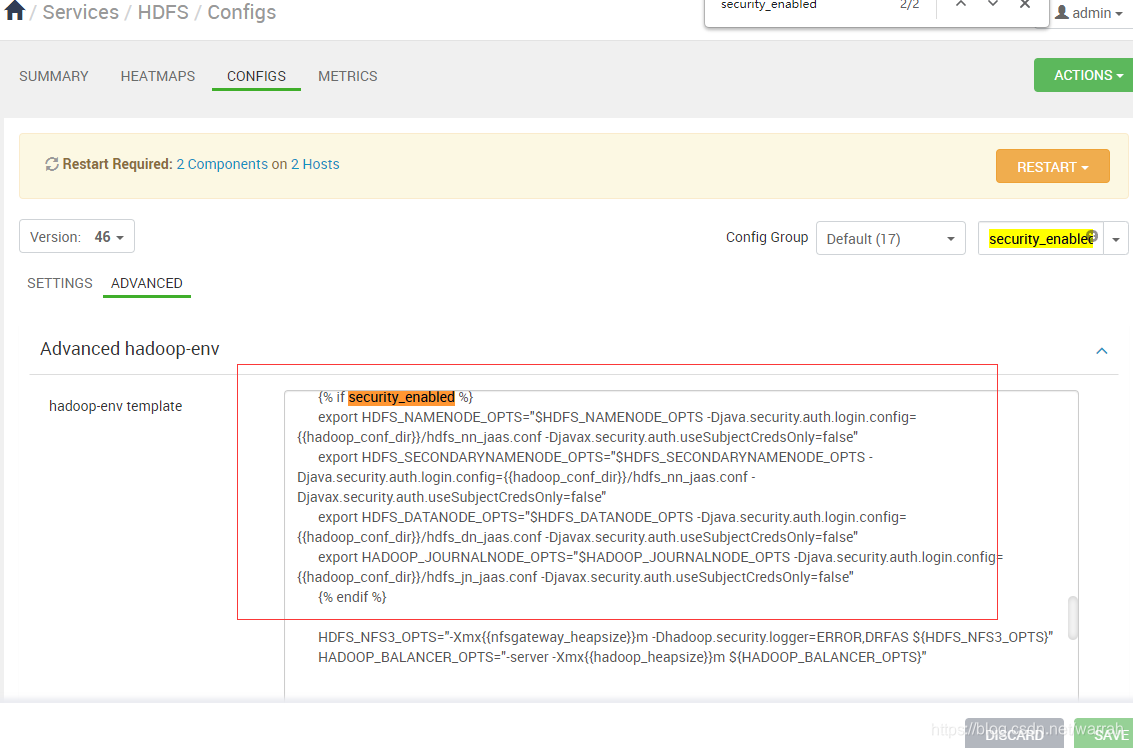

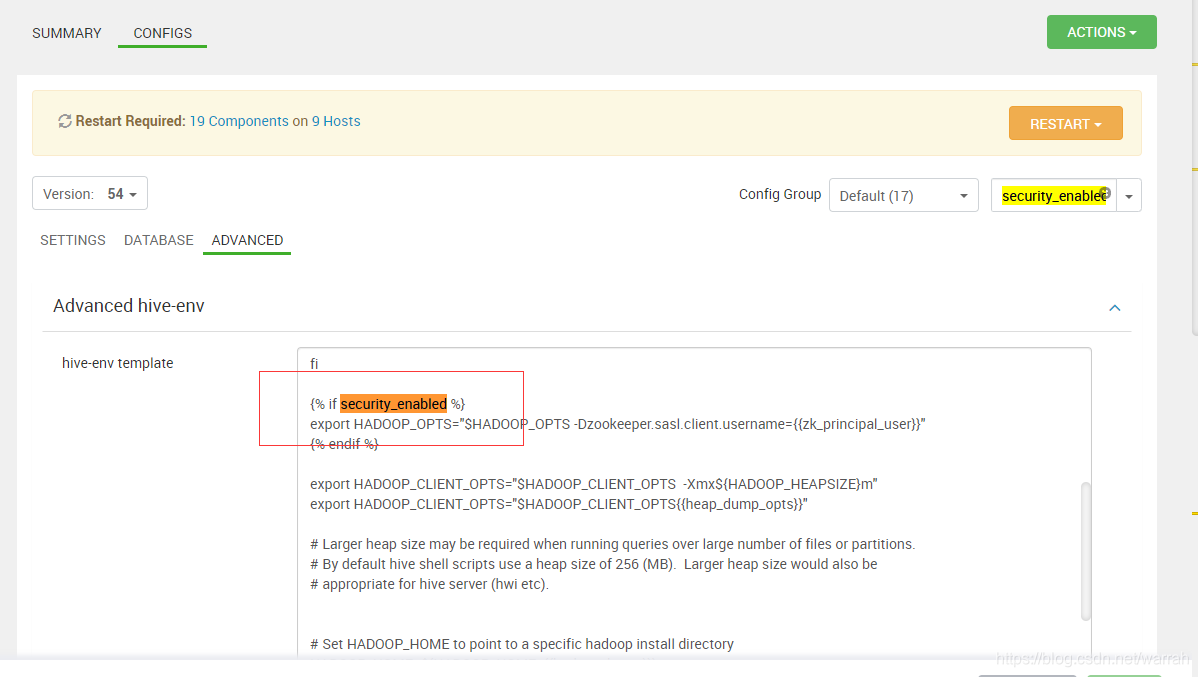

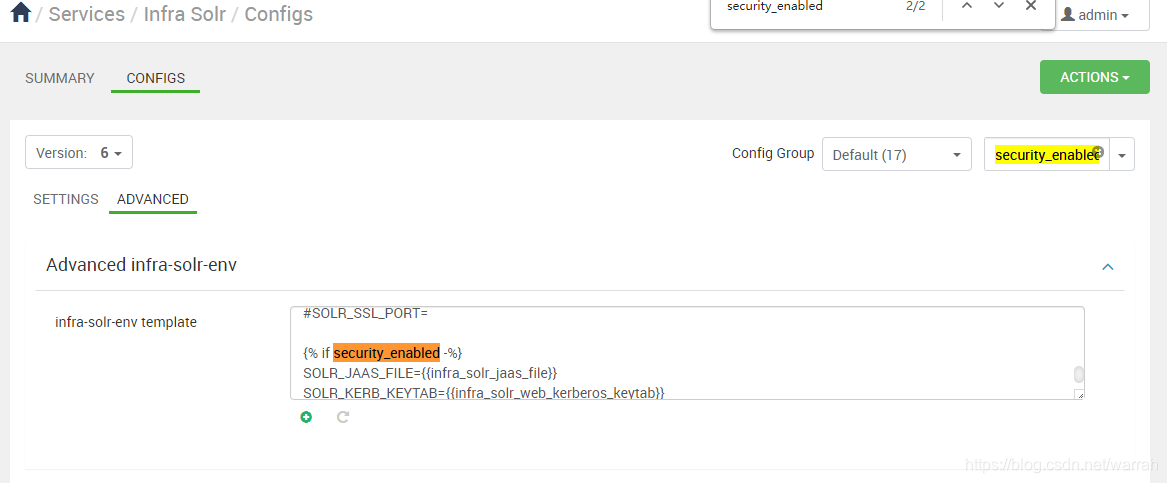

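In Ambari the template wraps this block in a security_enabled check. Where is that value determined? I didn't dig into it: simply deleting the block works. You will find the same check in Ranger as well.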
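Deleting these blocks by hand in every template works; below is a minimal sketch of the same edit, assuming each block is a single `{% if security_enabled %} ... {% endif %}` with no `{% else %}` and nothing nested inside. Blocks that do contain other Jinja tags are deliberately left untouched so they can be reviewed manually. With Kerberos off these blocks should contribute nothing, so removing them forces that outcome regardless of how Ambari evaluates `security_enabled`.

```python
import re

# Assumption: a plain {% if security_enabled %} ... {% endif %} block whose
# body contains no other {% ... %} tags (no else, no nesting).
SECURITY_BLOCK = re.compile(
    r"[ \t]*\{%\s*if\s+security_enabled\s*%\}"   # opening tag
    r"(?:(?!\{%).)*"                             # body with no other {% %} tags
    r"\{%\s*endif\s*%\}[ \t]*\n?",               # closing tag and its newline
    re.DOTALL,
)


def strip_security_blocks(template_text):
    """Return the template text with every simple security_enabled block removed."""
    return SECURITY_BLOCK.sub("", template_text)
```

Paste the template content from the Ambari config page in, and paste the result back; templates with an `{% else %}` branch, such as kafka-env, come back unchanged and still need a manual decision.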

2 ranger

Also set atlas.authentication.method.kerberos to false.

3 hdfs

Change hadoop.security.authentication to simple.
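After saving, it is worth confirming that the config actually rendered on each host says `simple`. Below is a minimal sketch, assuming the usual Hadoop `<configuration><property><name>/<value>` XML layout; the expected-value table is an assumption you can extend with other keys from this post.

```python
import xml.etree.ElementTree as ET

# Assumed expectations once Kerberos is disabled (extend as needed).
EXPECTED_WITHOUT_KERBEROS = {"hadoop.security.authentication": "simple"}


def read_hadoop_props(xml_text):
    """Parse Hadoop-style *-site.xml text into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def kerberos_leftovers(props, expected=None):
    """Return {key: actual} for keys whose value differs from the expected one.

    A missing key counts as fine: Hadoop's built-in default for
    hadoop.security.authentication is already "simple".
    """
    expected = expected or EXPECTED_WITHOUT_KERBEROS
    return {key: props[key] for key, want in expected.items()
            if key in props and props[key].lower() != want}
```

On a cluster host you might call `kerberos_leftovers(read_hadoop_props(open("/etc/hadoop/conf/core-site.xml").read()))` and expect `{}`; the path is the usual HDP client config location, assumed here.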

The following also needs to be deleted:

4 yarn

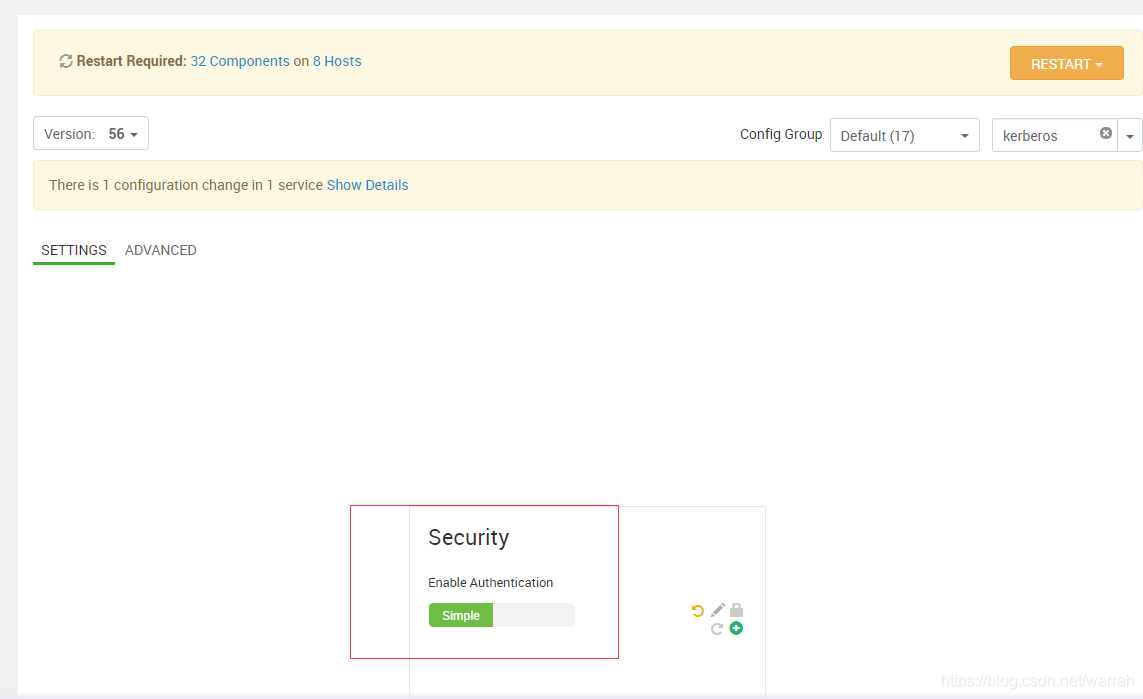

Set Enable Authentication to simple.

Set yarn.timeline-service.http-authentication.type to simple.

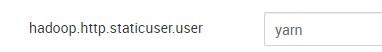

If you run into the problem below, the fix is to add the custom property hadoop.http.staticuser.user=yarn under Custom core-site in the HDFS configuration.

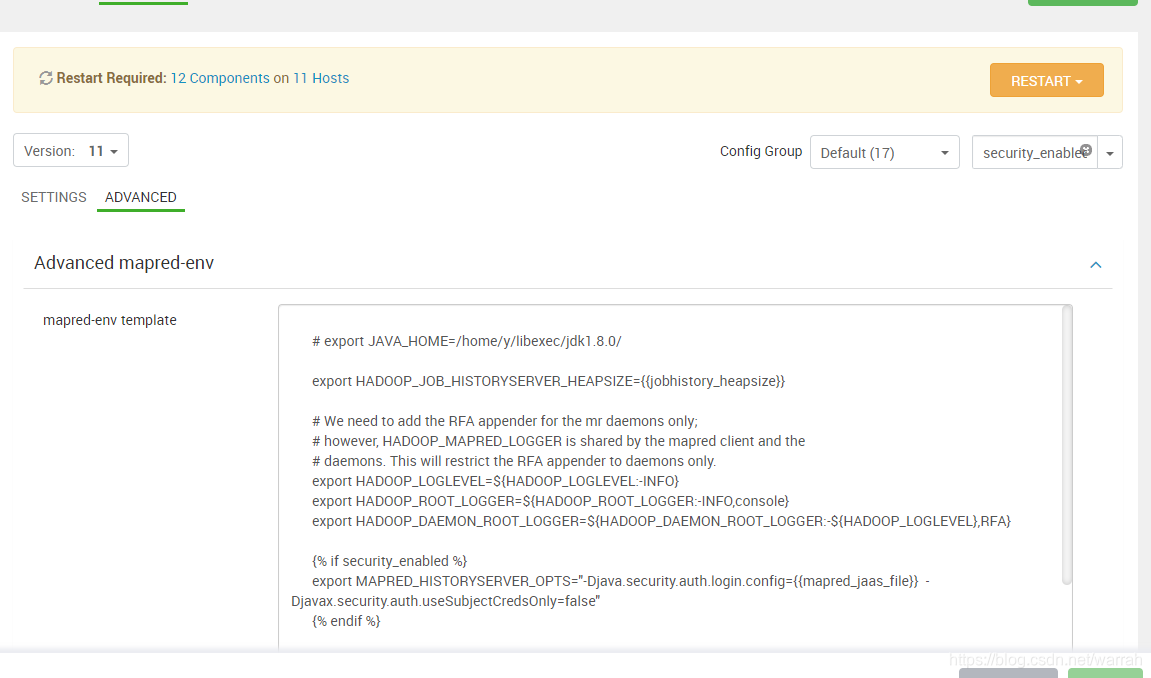

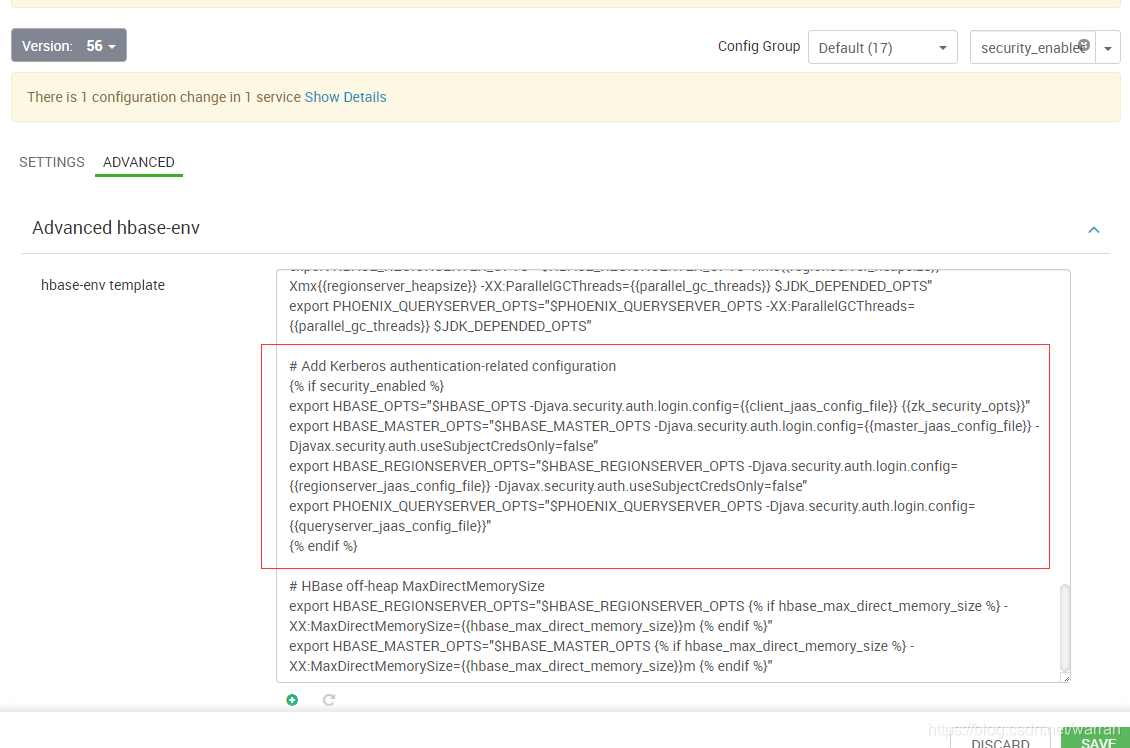

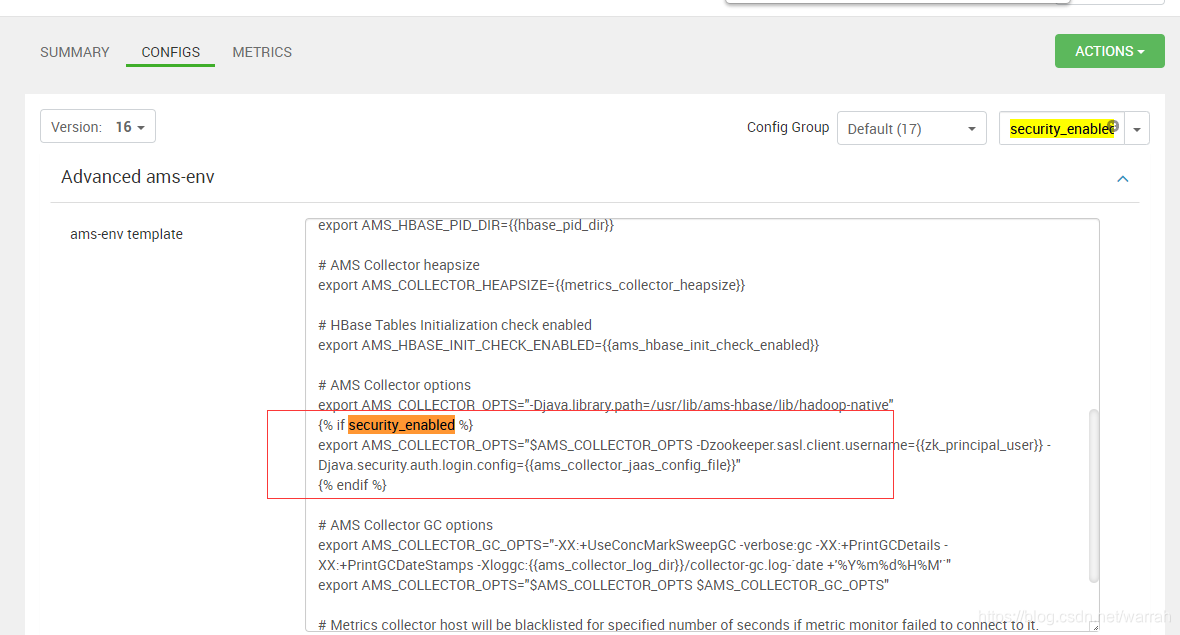

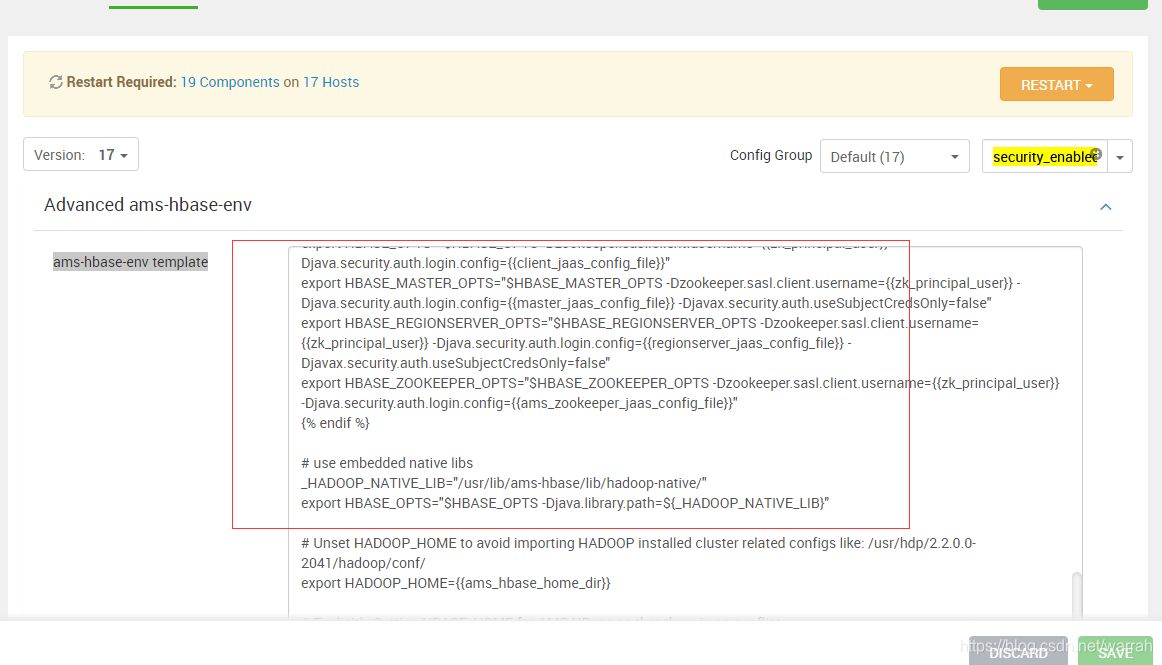

Remove the security_enabled blocks from both the yarn-env template and the hbase-env template.

```
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/providers/hdfs_resource.py", line 295, in _run_command
    raise WebHDFSCallException(err_msg, result_dict)
resource_management.libraries.providers.hdfs_resource.WebHDFSCallException: Execution of 'curl -sS -L -w '%{http_code}' -X PUT -d '' -H 'Content-Length: 0' --negotiate -u : 'http://bg2.whty.com.cn:50070/webhdfs/v1/ats/done?op=SETPERMISSION&permission=755'' returned status_code=403.
{
  "RemoteException": {
    "exception": "AccessControlException",
    "javaClassName": "org.apache.hadoop.security.AccessControlException",
    "message": "Permission denied. user=dr.who is not the owner of inode=/ats/done"
  }
}
```
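Why `dr.who`: with Kerberos off, the `--negotiate -u :` in Ambari's curl call effectively identifies no one. According to the WebHDFS REST API documentation, when security is off the server takes the user from the `user.name` query parameter and, if that is absent, may fall back to a default web user. `dr.who` is Hadoop's default value of `hadoop.http.staticuser.user`, so setting that property to `yarn` makes such calls run as yarn, which presumably owns /ats/done. Below is a minimal sketch for reproducing the call by hand with an explicit `user.name`; the helper name is hypothetical, and the host and path are taken from the error above.

```python
from urllib.parse import quote, urlencode


def webhdfs_url(namenode, path, op, user=None, **params):
    """Build a WebHDFS v1 URL; `user` becomes `user.name` (simple auth only).

    Hypothetical helper: namenode is "host:http_port", path is an HDFS path.
    """
    query = {"op": op, **params}
    if user is not None:
        query["user.name"] = user  # ignored/rejected on Kerberized clusters
    return f"http://{namenode}/webhdfs/v1{quote(path)}?{urlencode(query)}"


print(webhdfs_url("bg2.whty.com.cn:50070", "/ats/done", "SETPERMISSION",
                  user="hdfs", permission="755"))
```

The printed URL can then be sent with `curl -X PUT`; `SETPERMISSION` only succeeds for the path's owner or the HDFS superuser.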

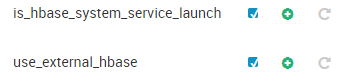

Following the earlier fix for "Timeline Service V2.0 Reader fails to start", I ticked is_hbase_system_service_launch and use_external_hbase, after which YARN started.

5 mapreduce2

6 hbase

Set Enable Authentication for HBase to simple.

Set hadoop.security.authorization to false.

Set dfs.datanode.address to 0.0.0.0:50010.

Set dfs.datanode.http.address to 0.0.0.0:50075.
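Resetting these two addresses matters because a Kerberized HDP DataNode is commonly bound to privileged ports (below 1024, for example 1019 and 1022), which only a root-launched secure DataNode can use; once Kerberos is gone, the non-privileged ports 50010/50075 are the safe choice. Below is a minimal sketch that flags any DataNode address still on a privileged port, assuming the values are in `host:port` form; the `< 1024` cut-off is the standard Unix rule for privileged ports.

```python
# The two DataNode address properties reset in this section.
DATANODE_ADDRESS_KEYS = ("dfs.datanode.address", "dfs.datanode.http.address")


def privileged_datanode_addresses(props):
    """Return {key: address} for DataNode addresses still bound below port 1024."""
    flagged = {}
    for key in DATANODE_ADDRESS_KEYS:
        _host, sep, port_text = props.get(key, "").rpartition(":")
        if not sep or not port_text.isdigit():
            continue  # property missing or not in host:port form
        if int(port_text) < 1024:  # privileged port on Unix
            flagged[key] = props[key]
    return flagged
```

An empty result means both addresses are on ordinary ports and the DataNode can start as the plain hdfs user.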

When you save, Ambari changes hbase.coprocessor.region.classes to

org.apache.hadoop.hbase.security.access.SecureBulkLoadEndpoint,org.apache.ranger.authorization.hbase.RangerAuthorizationCoprocessor

Even after these changes, startup still failed with the exception below, which seems to be related to Ranger.

```
2021-04-07 16:27:01,185 ERROR provider.BaseAuditHandler (BaseAuditHandler.java:logError(329)) - Error writing to log file.
org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create directory /ranger/audit/hdfs/20210407. Name node is in safe mode.
The reported blocks 9554 needs additional 462 blocks to reach the threshold 1.0000 of total blocks 10016.
The number of live datanodes 8 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached. NamenodeHostName:bg2.whty.com.cn
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.newSafemodeException(FSNamesystem.java:1454)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1441)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:3149)
    at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:1126)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:707)
```
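The numbers in this message are consistent: the threshold is reported as 1.0000 (Hadoop's default for `dfs.namenode.safemode.threshold-pct` is 0.999, so this cluster apparently uses 1.0), meaning the NameNode waits for every one of the 10016 blocks, and 10016 - 9554 = 462 are still unreported. Below is a minimal sketch of that arithmetic, assuming the threshold block count is `total * threshold` truncated to an integer, as the NameNode's safe-mode logic does.

```python
def blocks_still_needed(reported, total, threshold=0.999):
    """Blocks the NameNode still waits for before leaving safe mode.

    Assumption: the threshold block count is int(total * threshold),
    mirroring the truncating cast in the NameNode's safe-mode logic.
    """
    block_threshold = int(total * threshold)
    return max(block_threshold - reported, 0)


# Values from the log messages in this post (threshold-pct = 1.0):
print(blocks_still_needed(9554, 10016, 1.0))  # 462
print(blocks_still_needed(0, 10016, 1.0))     # 10016
```

With a threshold of 1.0 a single missing block keeps the NameNode in safe mode, which is why the audit writes to /ranger/audit kept failing.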

```
  "RemoteException": {
    "exception": "AccessControlException",
    "javaClassName": "org.apache.hadoop.security.AccessControlException",
    "message": "Permission denied. user=dr.who is not the owner of inode=/ranger/audit"
  }
}
```

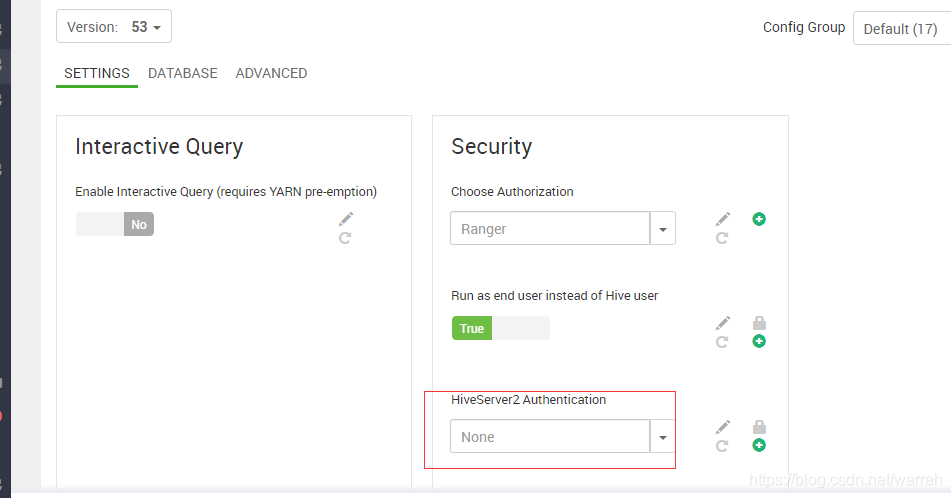

3 hive

Set hive.metastore.sasl.enabled to false.

Set hbase.security.authentication to simple.

Connect to ZooKeeper and run rmr /hiveserver2.
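`rmr` is the recursive delete of ZooKeeper 3.4, the line HDP 3.1 ships; newer ZooKeeper releases use `deleteall` instead. Below is a minimal sketch that runs it non-interactively through `zkCli.sh -server <host:port> <command>`, assuming the HDP client path shown and a placeholder server `zk-host:2181` (both hypothetical; substitute your own). It defaults to a dry run that only returns the command.

```python
import subprocess

# Hypothetical defaults: adjust to your ZooKeeper client path and quorum.
ZKCLI = "/usr/hdp/current/zookeeper-client/bin/zkCli.sh"


def zk_delete_recursive(znode, server="zk-host:2181", zookeeper_34=True,
                        dry_run=True):
    """Recursively delete a znode via zkCli (rmr on 3.4, deleteall on 3.5+)."""
    verb = "rmr" if zookeeper_34 else "deleteall"
    cmd = [ZKCLI, "-server", server, verb, znode]
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd
```

Call `zk_delete_recursive("/hiveserver2", server="<your-zk>:2181", dry_run=False)` with HiveServer2 stopped; with dynamic service discovery enabled, HiveServer2 registers itself under this namespace again on its next start.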

Also delete the security_enabled blocks from the hive-interactive-env template and the hive-env template below:

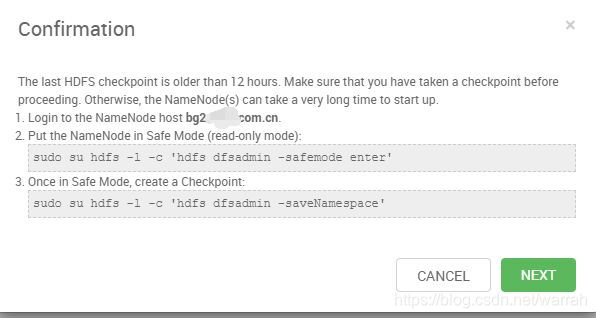

Because the SNameNode had not been running overnight, Ambari prompted me to run the following:

```
[root@bg2 ~]# sudo su hdfs -l -c 'hdfs dfsadmin -safemode enter'
Safe mode is ON
[root@bg2 ~]# sudo su hdfs -l -c 'hdfs dfsadmin -saveNamespace'
Save namespace successful
```
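This is a manual checkpoint: enter safe mode, write the namespace image with `saveNamespace`, then leave safe mode again (the post does that last step further down with `-safemode leave`). Below is a minimal sketch that issues the three commands in order, in the same `sudo su hdfs -l -c` form used above, and stops at the first failure; the function name and the `dry_run` switch are hypothetical conveniences.

```python
import subprocess

# The checkpoint sequence, as hdfs dfsadmin arguments.
CHECKPOINT_STEPS = ("-safemode enter", "-saveNamespace", "-safemode leave")


def manual_checkpoint(dry_run=True):
    """Run enter -> saveNamespace -> leave as the hdfs user; return the commands."""
    commands = []
    for step in CHECKPOINT_STEPS:
        cmd = ["sudo", "su", "hdfs", "-l", "-c", f"hdfs dfsadmin {step}"]
        commands.append(cmd)
        if not dry_run:
            subprocess.run(cmd, check=True)  # abort on the first failing step
    return commands
```

Keeping the steps in one function makes it harder to forget the final `leave`, which otherwise leaves HDFS read-only.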

After this was done, the startup log showed:

/usr/hdp/current/hadoop-hdfs-namenode/bin/hdfs dfsadmin -fs hdfs://bg2.test.com.cn:8020 -safemode get

Looking further, this also seems to be related to Ranger:

```
resource_management.libraries.providers.hdfs_resource.WebHDFSCallException: Execution of 'curl -sS -L -w '%{http_code}' -X PUT -d '' -H 'Content-Length: 0' --negotiate -u : 'http://bg2.test.com.cn:50070/webhdfs/v1/ranger/audit?op=SETOWNER&owner=hdfs&group=hdfs'' returned status_code=403.
{
  "RemoteException": {
    "exception": "SafeModeException",
    "javaClassName": "org.apache.hadoop.hdfs.server.namenode.SafeModeException",
    "message": "Cannot set owner for /ranger/audit. Name node is in safe mode.\nThe reported blocks 0 needs additional 10016 blocks to reach the threshold 1.0000 of total blocks 10016.\nThe number of live datanodes 0 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached. NamenodeHostName:bg2.test.com.cn"
  }
}
```

Running sudo su hdfs -l -c 'hdfs dfsadmin -safemode leave' takes the NameNode out of safe mode, but HDFS puts it back into safe mode automatically every time it starts.
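Entering safe mode at startup is expected: the NameNode stays there until enough blocks are reported, and the SafeModeException above shows 0 live DataNodes and 0 reported blocks, so it cannot leave on its own until the DataNodes are up. Rather than forcing `leave`, you can poll the state (`hdfs dfsadmin -safemode wait` also blocks until safe mode is off). Below is a minimal sketch that parses `hdfs dfsadmin -safemode get`, assuming its lines read `Safe mode is ON` or `Safe mode is OFF`, optionally followed by ` in <namenode>` on HA clusters; the polling interval and attempt count are arbitrary.

```python
import subprocess
import time


def parse_safemode(output):
    """Return True if any line says safe mode is ON, False if all say OFF."""
    states = [line.split()[3] for line in output.splitlines()
              if line.startswith("Safe mode is ")]
    if not states:
        raise ValueError(f"unrecognised output: {output!r}")
    return "ON" in states


def wait_for_safemode_off(poll_seconds=10, attempts=60):
    """Poll `hdfs dfsadmin -safemode get` (as hdfs) until safe mode is OFF."""
    cmd = ["sudo", "su", "hdfs", "-l", "-c", "hdfs dfsadmin -safemode get"]
    for _ in range(attempts):
        out = subprocess.run(cmd, check=True, capture_output=True,
                             text=True).stdout
        if not parse_safemode(out):
            return True
        time.sleep(poll_seconds)
    return False
```

If this times out while DataNodes are still missing, fix the DataNodes first; leaving safe mode by force with unreported blocks only hides the problem.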

7 solr

Set xasecure.audit.destination.solr.force.use.inmemory.jaas.config to false.

8 Ambari Metrics

9 kafka

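Filter the configs for security_enabled; three sections match: Advanced kafka-env, Advanced kafka_jaas_conf, Advanced kafka-env.

Watch out for the kafka-env template: it is not as simple. How should this block be removed? Should export KAFKA_KERBEROS_PARAMS={{kafka_kerberos_params}} be kept?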

```
{% if kerberos_security_enabled or kafka_other_sasl_enabled %}
export KAFKA_KERBEROS_PARAMS="-Djavax.security.auth.useSubjectCredsOnly=false {{kafka_kerberos_params}}"
{% else %}
export KAFKA_KERBEROS_PARAMS={{kafka_kerberos_params}}
{% endif %}
```
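On the question above: once `kerberos_security_enabled` and `kafka_other_sasl_enabled` are both false, Jinja renders only the `{% else %}` branch, so replacing the whole block with `export KAFKA_KERBEROS_PARAMS={{kafka_kerberos_params}}` yields the same output. Below is a minimal sketch of that collapse, assuming a single, non-nested `if`/`else`/`endif` block without whitespace-control markers (`{%-`) and a condition whose value you already know; the helper name is hypothetical.

```python
import re

# Text containing no further {% ... %} tag (keeps each match to one block).
TAG_FREE = r"(?:(?!\{%).)*"

# Assumption: a single, non-nested {% if %}/{% else %}/{% endif %} block
# without Jinja whitespace-control markers ({%- ... -%}).
IF_ELSE_BLOCK = re.compile(
    r"\{%\s*if\s+(?P<cond>[^%\n]+?)\s*%\}\n?"
    + r"(?P<then>" + TAG_FREE + r")"
    + r"\{%\s*else\s*%\}\n?"
    + r"(?P<other>" + TAG_FREE + r")"
    + r"\{%\s*endif\s*%\}\n?",
    re.DOTALL,
)


def collapse_if_else(template_text, condition, value):
    """Replace the if/else block testing `condition` with the branch `value` selects."""
    def pick(match):
        if match.group("cond").strip() != condition:
            return match.group(0)  # different condition: leave the block alone
        return match.group("then") if value else match.group("other")
    return IF_ELSE_BLOCK.sub(pick, template_text)
```

Calling it with the condition string `"kerberos_security_enabled or kafka_other_sasl_enabled"` and `value=False` leaves just the `else` line, which answers the question: keep `export KAFKA_KERBEROS_PARAMS={{kafka_kerberos_params}}`.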