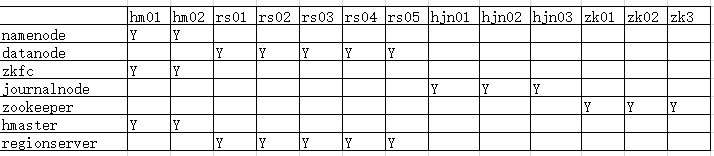

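I. Environment Setup

1. Environment overview

Operating system: CentOS 7.9

JDK version: 8u291

Hadoop version: 2.10.1

HBase version: 2.3.5

HBase download mirror:

https://mirrors.bfsu.edu.cn/apache/hbase/

ZooKeeper version: 3.6.3

hm01, hm02:

4 cores, 8 GB RAM, 400 GB data disk

rs01-rs05:

16 cores, 16 GB RAM, 2 TB data disk

hjn01-hjn03:

2 cores, 2 GB RAM

zk01-zk03 (reusing an existing cluster):

4 cores, 8 GB RAM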

2. Hostnames and IP addresses

Note: edit both /etc/hosts and /etc/hostname.

#zookeeper node

10.99.27.11 zk01.wtown.com

10.99.27.12 zk02.wtown.com

10.99.27.13 zk03.wtown.com

#hmaster

10.99.27.71 hm01.wtown.com

10.99.27.72 hm02.wtown.com

#regionserver

10.99.27.73 rs01.wtown.com

10.99.27.74 rs02.wtown.com

10.99.27.75 rs03.wtown.com

10.99.27.76 rs04.wtown.com

10.99.27.77 rs05.wtown.com

#hbase journalnode

10.99.27.34 hjn01.wtown.com

10.99.27.35 hjn02.wtown.com

10.99.27.36 hjn03.wtown.com
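Every node needs the same entries. The sketch below appends them without creating duplicates, so it is safe to run more than once. The function name `add_cluster_hosts` is hypothetical. The target file defaults to /etc/hosts, which requires root.

```shell
#!/usr/bin/env bash
# Sketch: append the cluster's host entries, skipping any line that is
# already present, so the function is safe to re-run.
# Hypothetical helper; the target defaults to /etc/hosts (requires root).

add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry
  while IFS= read -r entry; do
    # -q quiet, -x whole-line match, -F fixed string (dots are not regex)
    if ! grep -qxF -- "$entry" "$hosts_file" 2>/dev/null; then
      printf '%s\n' "$entry" >> "$hosts_file"
    fi
  done <<'EOF'
10.99.27.11 zk01.wtown.com
10.99.27.12 zk02.wtown.com
10.99.27.13 zk03.wtown.com
10.99.27.71 hm01.wtown.com
10.99.27.72 hm02.wtown.com
10.99.27.73 rs01.wtown.com
10.99.27.74 rs02.wtown.com
10.99.27.75 rs03.wtown.com
10.99.27.76 rs04.wtown.com
10.99.27.77 rs05.wtown.com
10.99.27.34 hjn01.wtown.com
10.99.27.35 hjn02.wtown.com
10.99.27.36 hjn03.wtown.com
EOF
}
```

Run it as root with `add_cluster_hosts`. To inspect the result first, pass a scratch file path as the argument. /etc/hostname still has to be set on each node individually, for example with `hostnamectl set-hostname hm01.wtown.com` on hm01.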

3. Mount the data disk at /data

Note: create the /data directory on all HMaster and RegionServer nodes.

https://blog.csdn.net/zyj81092211/article/details/118054000

4. Install the JDK

https://blog.csdn.net/zyj81092211/article/details/118055068

II. ZooKeeper Cluster

1. Install the ZooKeeper cluster

https://blog.csdn.net/zyj81092211/article/details/118066724

III. Hadoop HA Cluster

1. Create the hadoop user and grant it sudo privileges (HBase JournalNode, HMaster, and RegionServer nodes)

useradd hadoop

echo hadoop|passwd --stdin hadoop

visudo

Add the following line:

hadoop ALL=(ALL) NOPASSWD:ALL

2. Set up passwordless SSH (HBase JournalNode, HMaster, and RegionServer nodes)

As root:

ssh-keygen -t rsa

ssh-copy-id -i .ssh/id_rsa.pub root@hm01.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub root@hm02.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub root@rs01.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub root@rs02.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub root@rs03.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub root@rs04.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub root@rs05.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub root@hjn01.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub root@hjn02.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub root@hjn03.wtown.com

As the hadoop user:

ssh-keygen -t rsa

ssh-copy-id -i .ssh/id_rsa.pub hadoop@hm01.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub hadoop@hm02.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub hadoop@rs01.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub hadoop@rs02.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub hadoop@rs03.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub hadoop@rs04.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub hadoop@rs05.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub hadoop@hjn01.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub hadoop@hjn02.wtown.com

ssh-copy-id -i .ssh/id_rsa.pub hadoop@hjn03.wtown.com
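The same ssh-copy-id command is repeated for every node and for both users, so it can be generated from a node list instead. The sketch below uses a hypothetical helper, `print_copy_id_cmds`. It only prints the commands, so you can review them before running anything.

```shell
#!/usr/bin/env bash
# Sketch: print the ssh-copy-id command for every HMaster, RegionServer and
# JournalNode host, for a given remote user. Hypothetical helper.

CLUSTER_NODES="hm01 hm02 rs01 rs02 rs03 rs04 rs05 hjn01 hjn02 hjn03"

print_copy_id_cmds() {
  local user="$1"
  local node
  for node in $CLUSTER_NODES; do
    # ~ stays literal here and is expanded when the printed command runs
    echo "ssh-copy-id -i ~/.ssh/id_rsa.pub ${user}@${node}.wtown.com"
  done
}
```

For example, as the hadoop user after `ssh-keygen -t rsa`, run `bash <(print_copy_id_cmds hadoop)`. Each ssh-copy-id call still prompts for that node's password. Process substitution is used instead of piping into `sh` because ssh-based commands may read standard input, which could swallow the remaining commands.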

3. Edit the Hadoop configuration files

Configuration directory: hadoop/etc/hadoop

hadoop-env.sh

Set the following:

export JAVA_HOME=/usr/local/java

core-site.xml

<configuration>

<!-- Use ns2 as the HDFS nameservice -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://ns2/</value>

</property>

<!-- Hadoop temporary directory -->

<property>

<name>hadoop.tmp.dir</name>

<value>/data/hadoop/data</value>

</property>

<!-- ZooKeeper quorum addresses -->

<property>

<name>ha.zookeeper.quorum</name>

<value>zk01.wtown.com:2181,zk02.wtown.com:2181,zk03.wtown.com:2181</value>

</property>

<property>

<name>ha.zookeeper.session-timeout.ms</name>

<value>3000</value>

</property>

<property>

<name>ha.zookeeper.parent-znode</name>

<value>/hbase-hadoop1</value>

</property>

</configuration>

Note: when reusing an existing ZooKeeper ensemble, set ha.zookeeper.parent-znode to a path unique to this cluster. The default is /hadoop-ha.

hdfs-site.xml

<configuration>

<!-- HDFS nameservice ns2; must match fs.defaultFS in core-site.xml -->

<property>

<name>dfs.nameservices</name>

<value>ns2</value>

</property>

<!-- ns2 has two NameNodes, hm01 and hm02; separate multiple NameNode IDs with commas -->

<property>

<name>dfs.ha.namenodes.ns2</name>

<value>hm01,hm02</value>

</property>

<!-- RPC address of hm01 -->

<property>

<name>dfs.namenode.rpc-address.ns2.hm01</name>

<value>hm01.wtown.com:8020</value>

</property>

<!-- HTTP address of hm01 -->

<property>

<name>dfs.namenode.http-address.ns2.hm01</name>

<value>hm01.wtown.com:50070</value>

</property>

<!-- RPC address of hm02 -->

<property>

<name>dfs.namenode.rpc-address.ns2.hm02</name>

<value>hm02.wtown.com:8020</value>

</property>

<!-- HTTP address of hm02 -->

<property>

<name>dfs.namenode.http-address.ns2.hm02</name>

<value>hm02.wtown.com:50070</value>

</property>

<!-- Location of the NameNode shared edit log on the JournalNodes -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hjn01.wtown.com:8485;hjn02.wtown.com:8485;hjn03.wtown.com:8485/ns2</value>

</property>

<!-- Enable automatic NameNode failover -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Proxy provider that clients use to find the active NameNode after a failover -->

<property>

<name>dfs.client.failover.proxy.provider.ns2</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Fencing methods; list multiple methods one per line -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

<!-- sshfence requires passwordless SSH; use the hadoop user's private key -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<!-- sshfence connection timeout (ms) -->

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

<!-- NameNode namespace (metadata) storage directory -->

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///data/hadoop/hdfs/name</value>

</property>

<!-- DataNode block storage directory -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///data/hadoop/hdfs/data</value>

</property>

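<!-- Number of block replicas -->

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- JournalNode edits directory (the hadoop/journaldata folder created in step 4) -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/data/hadoop/journaldata</value>

</property>

</configuration>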

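Note: when the JournalNodes are shared with another cluster, the journal ID at the end of dfs.namenode.shared.edits.dir (ns2 here) must be unique to this cluster.

slaves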

rs01.wtown.com

rs02.wtown.com

rs03.wtown.com

rs04.wtown.com

rs05.wtown.com
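The worker list is a simple numbered sequence, so it can also be generated. The sketch below uses a hypothetical helper, `write_worker_list`. The same output also serves as HBase's regionservers file in section IV.

```shell
#!/usr/bin/env bash
# Sketch: write rs01.wtown.com .. rs05.wtown.com, one per line, to a file.
# Hypothetical helper; the path is passed in (e.g. /data/hadoop/etc/hadoop/slaves).

write_worker_list() {
  local target="$1"
  local i
  for i in 1 2 3 4 5; do
    # %02d zero-pads the index: 1 -> 01
    printf 'rs%02d.wtown.com\n' "$i"
  done > "$target"
}
```

Usage: `write_worker_list /data/hadoop/etc/hadoop/slaves`, and likewise `write_worker_list /data/hbase/conf/regionservers`.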

4. Create the directories referenced in the configuration files

Hadoop temporary directory: hadoop/data

HDFS directories: hadoop/hdfs/name and hadoop/hdfs/data

JournalNode data directory: hadoop/journaldata
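These paths are relative to /data, the parent of the Hadoop install directory /data/hadoop. So hadoop/data maps to /data/hadoop/data, which matches hadoop.tmp.dir in core-site.xml. The sketch below creates all of them in one call. `create_hadoop_dirs` is a hypothetical name. The journaldata path only takes effect if dfs.journalnode.edits.dir points to it.

```shell
#!/usr/bin/env bash
# Sketch: create the directories used by core-site.xml / hdfs-site.xml.
# Hypothetical helper; the base defaults to /data/hadoop (the install dir).

create_hadoop_dirs() {
  local base="${1:-/data/hadoop}"
  # -p creates parents and does not fail if a directory already exists
  mkdir -p "$base/data" \
           "$base/hdfs/name" \
           "$base/hdfs/data" \
           "$base/journaldata"
}
```

Because `mkdir -p` ignores directories that already exist, running `create_hadoop_dirs` on every node is harmless.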

5. Upload the Hadoop package to all nodes (HBase JournalNode, HMaster, and RegionServer nodes) and create a symlink

ln -s /data/hadoop /usr/local/hadoop

6. Set the Hadoop environment variables (HBase JournalNode, HMaster, and RegionServer nodes)

vi /etc/profile

Add the following:

# hadoop environment

export HADOOP_HOME=/data/hadoop

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

Reload the profile:

source /etc/profile
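On CentOS 7, /etc/profile also sources every *.sh file under /etc/profile.d. An alternative to editing /etc/profile by hand is therefore a small drop-in file that can be rewritten safely. The file name hadoop.sh and the helper `write_profile_snippet` in the sketch below are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: write the Hadoop environment variables to a profile.d drop-in.
# Hypothetical helper; the target defaults to /etc/profile.d/hadoop.sh (root).

write_profile_snippet() {
  local target="${1:-/etc/profile.d/hadoop.sh}"
  # Quoted 'EOF' keeps $HADOOP_HOME and $PATH unexpanded in the file,
  # so they are evaluated at login time.
  cat > "$target" <<'EOF'
# hadoop environment
export HADOOP_HOME=/data/hadoop
export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
EOF
}
```

Run it as root, then `source /etc/profile` (or log in again) and check the setup with `hadoop version`.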

7. Make the Hadoop commands executable (HBase JournalNode, HMaster, and RegionServer nodes)

sudo chmod +x /data/hadoop/bin/*

sudo chmod +x /data/hadoop/sbin/*

8. Change the owner of /data to the hadoop user (HBase JournalNode, HMaster, and RegionServer nodes)

chown -R hadoop:hadoop /data

9. As the hadoop user, start the JournalNode (on hjn01, hjn02, and hjn03)

hadoop-daemon.sh start journalnode

10. As the hadoop user, format HDFS (on hm01)

hdfs namenode -format

11. As the hadoop user, format the ZKFC state in ZooKeeper (on hm01)

hdfs zkfc -formatZK

12. As the hadoop user, start HDFS (on hm01)

start-dfs.sh

13. As the hadoop user, copy the metadata to the standby NameNode and start it (on hm02)

hdfs namenode -bootstrapStandby

hadoop-daemon.sh start namenode

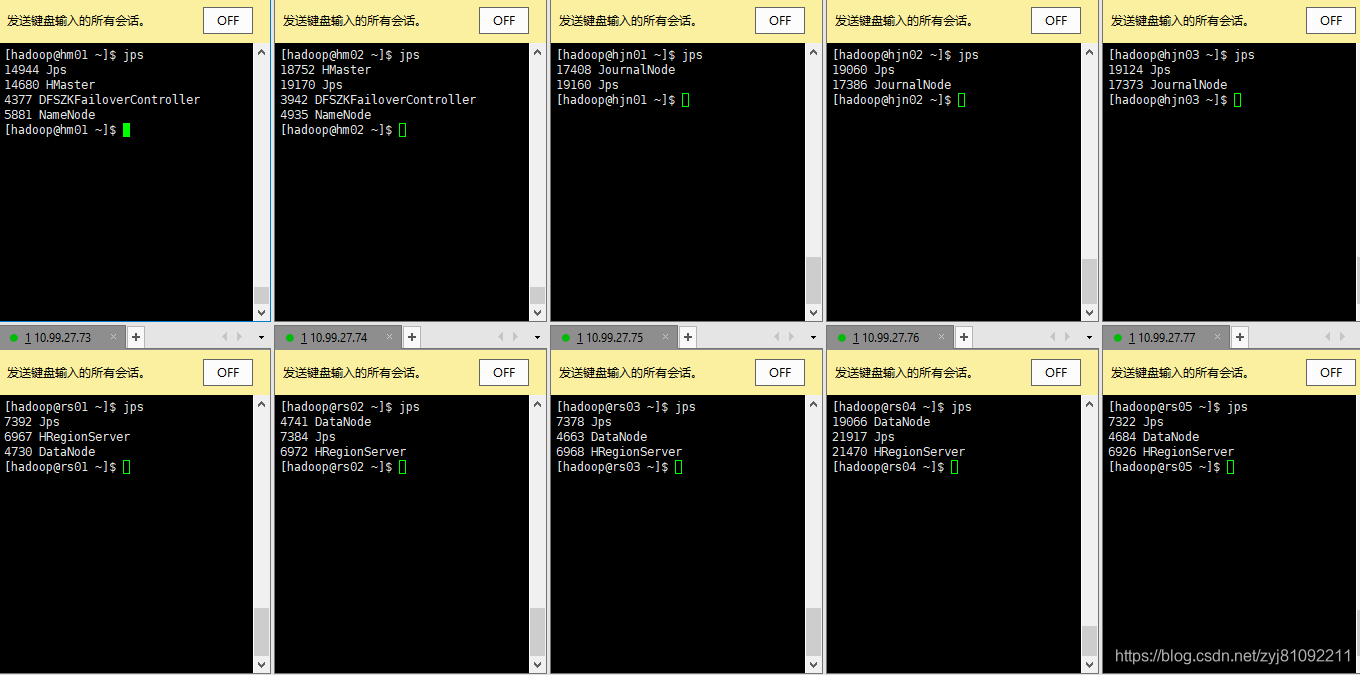

14. Check the status
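Besides the web UI, you can query each NameNode's HA state with `hdfs haadmin -getServiceState <serviceId>`, using the IDs hm01 and hm02 from dfs.ha.namenodes.ns2. The command prints `active` or `standby`. The sketch below is a hypothetical helper, `check_ha_states`. It reads those lines and succeeds only when exactly one NameNode is active and one is standby.

```shell
#!/usr/bin/env bash
# Sketch: verify a healthy HA pair from one state per line on stdin,
# as printed by `hdfs haadmin -getServiceState <id>`. Hypothetical helper.

check_ha_states() {
  local active=0 standby=0 state
  while IFS= read -r state; do
    case "$state" in
      active)  active=$((active + 1)) ;;
      standby) standby=$((standby + 1)) ;;
    esac
  done
  echo "active=$active standby=$standby"
  # Healthy pair: exactly one active and one standby NameNode
  [ "$active" -eq 1 ] && [ "$standby" -eq 1 ]
}
```

Usage: `for nn in hm01 hm02; do hdfs haadmin -getServiceState "$nn"; done | check_ha_states`.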

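IV. HBase HA Cluster

Note: perform the following steps as the hadoop user. If you use a different user, make sure it has permission to access the HBase package files.

1. Configure hbase-env.sh

Add the following: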

export JAVA_HOME=/usr/local/java

# Do not let HBase manage ZooKeeper (an external ensemble is used)

export HBASE_MANAGES_ZK=false

2. Configure hbase-site.xml

<configuration>

<!-- Enable distributed mode -->

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<!-- HBase root directory on HDFS; ns2 is the nameservice defined in hdfs-site.xml -->

<property>

<name>hbase.rootdir</name>

<value>hdfs://ns2/hbase</value>

</property>

<!-- ZooKeeper quorum hosts -->

<property>

<name>hbase.zookeeper.quorum</name>

<value>zk01.wtown.com,zk02.wtown.com,zk03.wtown.com</value>

</property>

<!-- ZooKeeper client port -->

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<!-- Root znode for this HBase cluster; required when several HBase clusters share one ZooKeeper ensemble -->

<property>

<name>zookeeper.znode.parent</name>

<value>/hbase1</value>

</property>

</configuration>

3. Configure the regionservers file

rs01.wtown.com

rs02.wtown.com

rs03.wtown.com

rs04.wtown.com

rs05.wtown.com

4. Create the HBase symlink

sudo ln -s /data/hbase /usr/local/hbase

5. Link the Hadoop configuration files into the HBase conf directory

sudo ln -s /data/hadoop/etc/hadoop/core-site.xml /data/hbase/conf/core-site.xml

sudo ln -s /data/hadoop/etc/hadoop/hdfs-site.xml /data/hbase/conf/hdfs-site.xml
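HBase reads core-site.xml and hdfs-site.xml from its conf directory to resolve the ns2 nameservice used in hbase.rootdir. Linking them rather than copying keeps them in sync with Hadoop. The sketch below is a re-runnable version of the two ln commands above, using a hypothetical helper, `link_hadoop_conf`. Plain `ln -s` fails if the link already exists, whereas `ln -sfn` replaces it.

```shell
#!/usr/bin/env bash
# Sketch: symlink Hadoop's client configs into HBase's conf directory.
# Hypothetical helper; defaults match the paths used in this guide.

link_hadoop_conf() {
  local hadoop_conf="${1:-/data/hadoop/etc/hadoop}"
  local hbase_conf="${2:-/data/hbase/conf}"
  local f
  for f in core-site.xml hdfs-site.xml; do
    # -s symlink, -f replace an existing link, -n treat a linked dir as a file
    ln -sfn "$hadoop_conf/$f" "$hbase_conf/$f"
  done
}
```

Run `link_hadoop_conf` as a user with write access to /data/hbase/conf.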

6. Add the HBase environment variables

vi /etc/profile

Add the following:

# hbase environment

export HBASE_HOME=/data/hbase

export PATH=$HBASE_HOME/bin:$PATH

Reload the profile:

source /etc/profile

7. Start the HBase HA cluster

On hm01:

start-hbase.sh

On hm02 (starts a second HMaster, which runs as the backup master):

hbase-daemon.sh start master

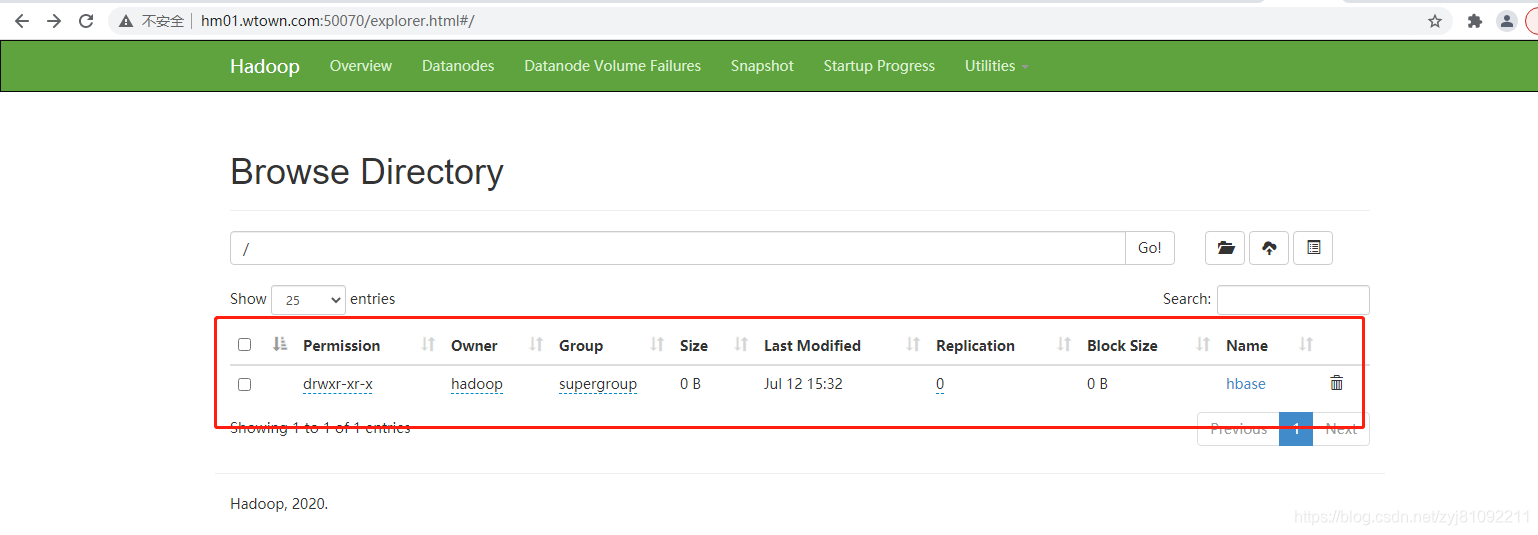

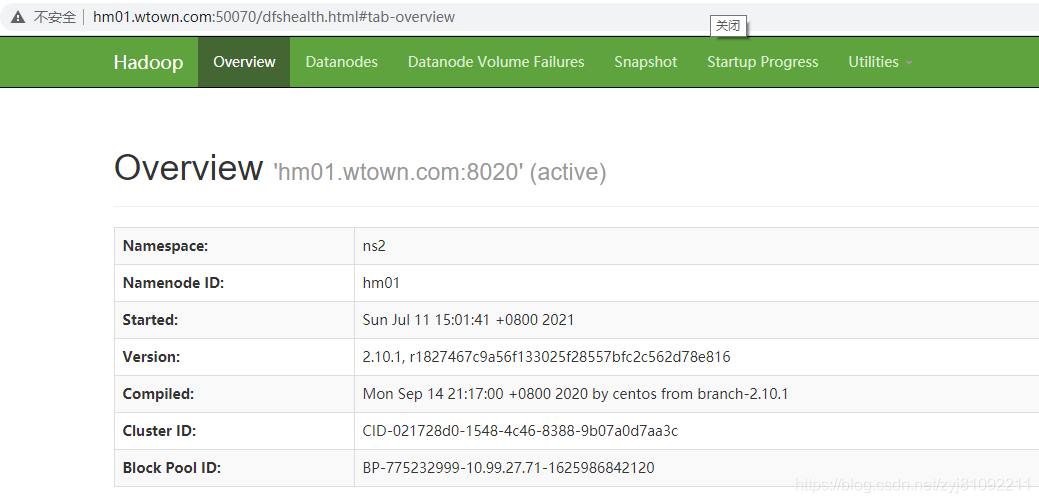

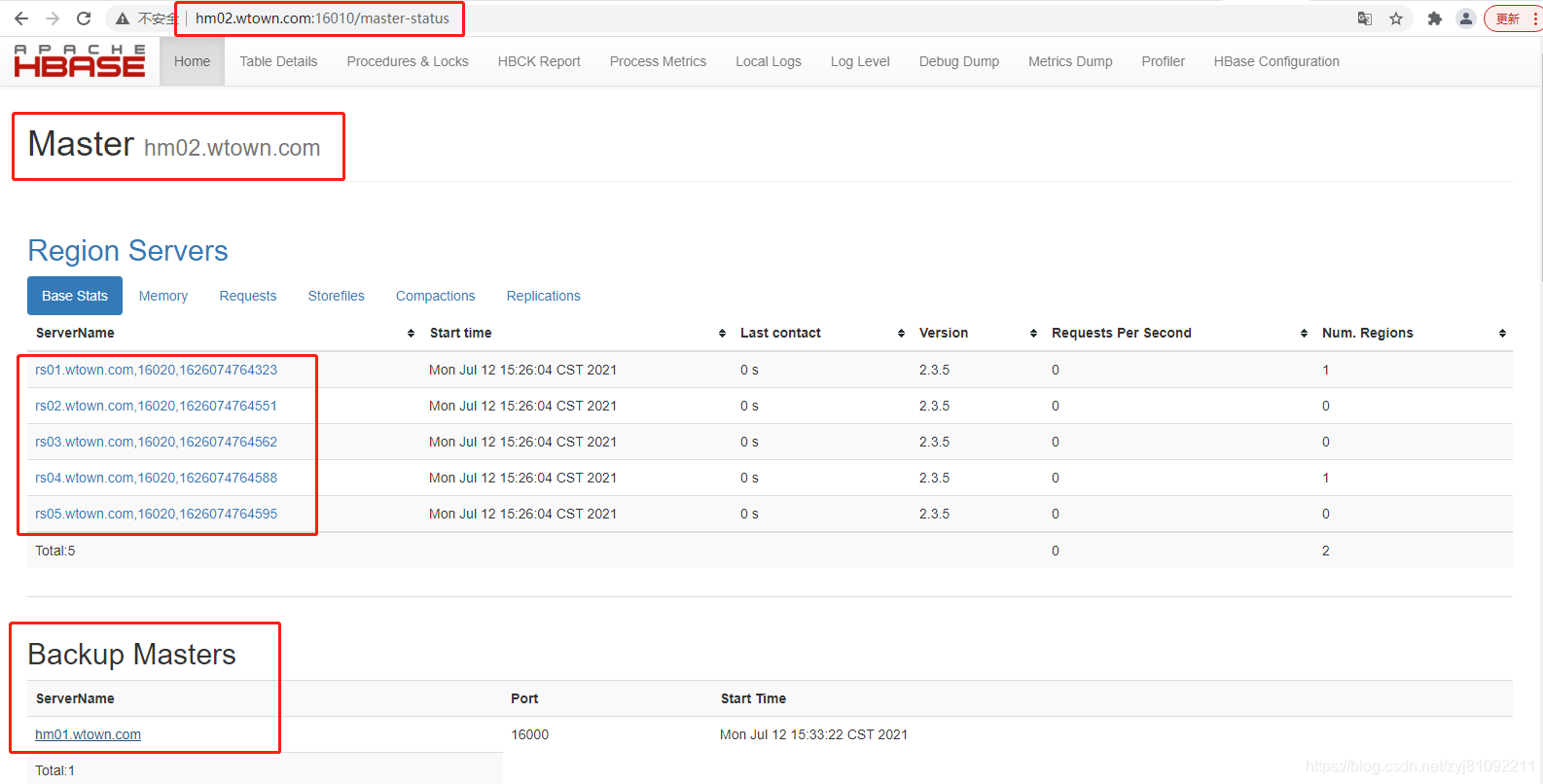

8. Check the cluster status

Web UI:

HDFS status: