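I. Flink CDC: it still has plenty of room for improvement, but it works quite well in practice. I spent some time experimenting with it.

A few observations of my own: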

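1. While testing the custom deserializer, changing a row's primary key terminated the job with the error: Recovery is suppressed by NoRestartBackoffTimeStrategy. That message only means that no restart strategy is configured, so Flink does not retry; the real cause is the nested exception. A likely culprit (not verified here) is that Debezium reports a primary-key change as a delete event followed by a create event, and a delete event has no after image, so a deserializer that reads after without a null check fails with a NullPointerException.

I have not had time to investigate further. Hopefully someone else will pick it up!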

2. I hope Flink CDC will support Oracle. That matters a lot, and it would be quite a feat if it works out!
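For the primary-key failure in item 1, here is a minimal sketch of the suspected cause in plain Java, with no Flink or Debezium dependency. It assumes a primary-key change arrives as a delete event followed by a create event. ChangeEvent and PrimaryKeyChangeDemo are hypothetical names invented for this illustration, not connector classes.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class PrimaryKeyChangeDemo {

    // Picks the row image that exists: "after" for create/update, "before" for delete.
    static Map<String, Object> rowOf(ChangeEvent event) {
        Map<String, Object> row = event.after != null ? event.after : event.before;
        return row != null ? row : new LinkedHashMap<String, Object>();
    }

    // Builds a small row with the two columns used in this illustration.
    static Map<String, Object> row(int orderid, String orderAddr) {
        Map<String, Object> r = new LinkedHashMap<String, Object>();
        r.put("orderid", orderid);
        r.put("orderAddr", orderAddr);
        return r;
    }

    // Assumption: changing orderid from oldId to newId is reported as delete + create.
    static List<ChangeEvent> primaryKeyChange(int oldId, int newId, String orderAddr) {
        return Arrays.asList(
                new ChangeEvent("delete", row(oldId, orderAddr), null),
                new ChangeEvent("create", null, row(newId, orderAddr)));
    }

    public static void main(String[] args) {
        for (ChangeEvent event : primaryKeyChange(8, 9, "1")) {
            // Reading event.after directly would throw on the delete event.
            System.out.println(event.op + " " + rowOf(event));
        }
    }
}

// Hypothetical stand-in for a change event; not a real Debezium class.
class ChangeEvent {
    final String op;                  // "create", "update" or "delete"
    final Map<String, Object> before; // null for create events
    final Map<String, Object> after;  // null for delete events

    ChangeEvent(String op, Map<String, Object> before, Map<String, Object> after) {
        this.op = op;
        this.before = before;
        this.after = after;
    }
}
```

The point of rowOf is only that a deserializer should decide which image to read before touching it. Whether delete events should carry the before image or an empty payload depends on what the downstream consumer needs.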

II. MySQL configuration: everyone has MySQL installed, but finding its configuration file can be hard for beginners.

Command:

mysql --help|grep my.cnf

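This shows where MySQL looks for its configuration file. Several paths may be listed; MySQL reads the ones that exist in the listed order, with later files overriding earlier ones, and usually only one of them exists.

On my machine it is /etc/my.cnf.

Add the following settings under the [mysqld] section: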

max_allowed_packet=1024M

server-id=1

log-bin=mysql-bin

binlog_format=row

binlog-do-db=ssm # Important: without this, changes in every database on the server are logged and monitored.
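As a quick sanity check on the settings above, the sketch below parses the text of a my.cnf file and reports which binlog-related settings are missing. BinlogConfigCheck is a hypothetical helper written for this post, and the parser is deliberately simplified: it ignores include directives and quoting, and treats everything after # as a comment.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

class BinlogConfigCheck {

    // Collects key=value pairs from the [mysqld] section only.
    // Dashes in option names are normalized to underscores (MySQL accepts both).
    static Map<String, String> parseMysqldSection(List<String> lines) {
        Map<String, String> settings = new HashMap<>();
        boolean inMysqld = false;
        for (String raw : lines) {
            int hash = raw.indexOf('#');
            String line = (hash >= 0 ? raw.substring(0, hash) : raw).trim();
            if (line.isEmpty()) {
                continue;
            }
            if (line.startsWith("[")) {
                inMysqld = line.equalsIgnoreCase("[mysqld]");
                continue;
            }
            if (!inMysqld) {
                continue;
            }
            int eq = line.indexOf('=');
            String key = (eq < 0 ? line : line.substring(0, eq)).trim();
            String value = eq < 0 ? "" : line.substring(eq + 1).trim();
            settings.put(key.replace('-', '_').toLowerCase(Locale.ROOT), value);
        }
        return settings;
    }

    // Lists the settings from this post that are absent or have the wrong value.
    static List<String> problems(Map<String, String> settings) {
        List<String> found = new ArrayList<>();
        if (!settings.containsKey("server_id")) {
            found.add("server-id is not set");
        }
        if (!settings.containsKey("log_bin")) {
            found.add("log-bin is not set");
        }
        if (!"row".equalsIgnoreCase(settings.get("binlog_format"))) {
            found.add("binlog_format is not row");
        }
        return found;
    }

    public static void main(String[] args) {
        List<String> myCnf = Arrays.asList(
                "[mysqld]",
                "max_allowed_packet=1024M",
                "server-id=1",
                "log-bin=mysql-bin",
                "binlog_format=row",
                "binlog-do-db=ssm # only the ssm database");
        // An empty list means none of the three checks failed.
        System.out.println(problems(parseMysqldSection(myCnf)));
    }
}
```

Remember that MySQL must be restarted after editing my.cnf for these settings to take effect.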

III. An error you may run into

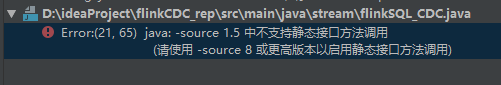

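This error is mainly caused by the Java source level configured in IDEA being too low. I won't give a fix here; a quick search (for example on Baidu) turns up many solutions, but make sure you find one that covers every place the setting lives.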

IV. Writing the code

The dependencies below cover both the Flink CDC (DataStream) example and the Flink SQL CDC example.

<dependencies>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-java</artifactId>
        <version>1.12.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-streaming-java_2.12</artifactId>
        <version>1.12.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-clients_2.12</artifactId>
        <version>1.12.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>3.1.3</version>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>5.1.49</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba.ververica</groupId>
        <artifactId>flink-connector-mysql-cdc</artifactId>
        <version>1.1.1</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>1.2.75</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-table-planner-blink_2.12</artifactId>
        <version>1.12.0</version>
    </dependency>
</dependencies>

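(1) Flink CDC (DataStream API). The resume-from-checkpoint setup here is only a demonstration. I did not study it in depth, because of Flink version issues and because newer Flink versions need Hadoop to be integrated separately.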

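import java.util.Properties;

import com.alibaba.ververica.cdc.connectors.mysql.MySQLSource;
import com.alibaba.ververica.cdc.debezium.DebeziumSourceFunction;
import com.alibaba.ververica.cdc.debezium.StringDebeziumDeserializationSchema;
import org.apache.flink.api.common.restartstrategy.RestartStrategies;
import org.apache.flink.runtime.state.filesystem.FsStateBackend;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.CheckpointConfig;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class flinkCDC {
    public static void main(String[] args) throws Exception {
        // 1. Create the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // 2. To resume where it left off, Flink CDC stores the binlog position it has
        //    read as state inside the checkpoint.
        // (1) Checkpoint every 5 s and set the checkpoint consistency semantics
        env.enableCheckpointing(5000L);
        env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);

        // 3. Keep the last checkpoint when the job is cancelled
        env.getCheckpointConfig().enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);
        // Restart up to 3 times, waiting 2 s between attempts
        env.setRestartStrategy(RestartStrategies.fixedDelayRestart(3, 2000L));
        env.setStateBackend(new FsStateBackend("hdfs://192.168.1.204:9000/flinkCDC"));

        // 4. Set the user name used to access HDFS
        System.setProperty("HADOOP_USER_NAME", "root");

        // 5. Create the source
        Properties prop = new Properties();
        // TODO: the three startup modes still need to be explained.
        // Note: this key is not a standard Debezium property, so it may have no effect here (unverified).
        prop.setProperty("scan.startup.mode", "initial");
        DebeziumSourceFunction<String> mysqlSource = MySQLSource.<String>builder()
                .hostname("192.168.1.205")
                .port(3306)
                .username("root")
                .password("Root@123")
                .tableList("ssm.order") // table names must be written as db.table, otherwise an error is raised
                .databaseList("ssm")
                .debeziumProperties(prop)
                .deserializer(new StringDebeziumDeserializationSchema())
                .build();

        // 6. Add the source
        DataStreamSource<String> source = env.addSource(mysqlSource);

        // 7. Print the data
        source.print();

        // 8. Run the job
        env.execute();
    }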

}

(2) Flink SQL version. Pay attention to the format of the MySQL table definition here.

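import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

public class flinkSQL_CDC {
    public static void main(String[] args) throws Exception {
        // 1. Create the environments
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);
        StreamTableEnvironment tableENV = StreamTableEnvironment.create(env);

        // Create a virtual table order_info; the real table in MySQL is ssm.order.
        // Column names (including orderNmae) must match the MySQL table exactly.
        tableENV.executeSql("CREATE TABLE order_info(\n" +
                "orderid INT,\n" +
                "orderNmae STRING,\n" +
                "orderAddr STRING,\n" +
                "orderTime DATE\n" +
                ")WITH(\n" +
                "'connector' = 'mysql-cdc',\n" +
                "'hostname' = '192.168.1.205',\n" +
                "'port' = '3306',\n" +
                "'username' = 'root',\n" +
                "'password' = 'Root@123',\n" +
                "'database-name' = 'ssm',\n" +
                "'table-name' = 'order'\n" +
                ")");

        // executeSql submits the query as its own job and print() streams the results,
        // so no env.execute() call is needed (with no DataStream operators it would fail).
        tableENV.executeSql("select * from order_info ").print();
    }
}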

(3) Custom deserializer

import java.util.Properties;

import com.alibaba.fastjson.JSONObject;
import com.alibaba.ververica.cdc.connectors.mysql.MySQLSource;
import com.alibaba.ververica.cdc.debezium.DebeziumDeserializationSchema;
import com.alibaba.ververica.cdc.debezium.DebeziumSourceFunction;
import io.debezium.data.Envelope;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;
import org.apache.kafka.connect.data.Field;
import org.apache.kafka.connect.data.Struct;
import org.apache.kafka.connect.source.SourceRecord;

/**
 * @author quruiwei
 * @version 1.0
 * @date 2021/7/13 13:33
 * @description Custom deserializer
 */
public class flinkCDC_custom_deserializer {
    public static void main(String[] args) throws Exception {
        // 1. Create the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // 2. Create the source
        Properties prop = new Properties();
        prop.setProperty("scan.startup.mode", "initial");
        DebeziumSourceFunction<String> mysqlSource = MySQLSource.<String>builder()
                .hostname("192.168.1.205")
                .port(3306)
                .username("root")
                .password("Root@123")
                .tableList("ssm.order") // table names must be written as db.table, otherwise an error is raised
                .databaseList("ssm")
                .debeziumProperties(prop)
                .deserializer(new DebeziumDeserializationSchema<String>() {
                    @Override
                    public TypeInformation<String> getProducedType() {
                        return TypeInformation.of(String.class);
                    }

                    @Override
                    public void deserialize(SourceRecord sourceRecord, Collector<String> collector) throws Exception {
                        // The topic contains the database name and the table name
                        String[] split = sourceRecord.topic().split("\\.");
                        String db = split[1];
                        String tableName = split[2];

                        // Operation type: create, read, update or delete
                        Envelope.Operation operation = Envelope.operationFor(sourceRecord);

                        // Row data after the change
                        Struct struct = (Struct) sourceRecord.value();
                        Struct after = (Struct) struct.get("after");

                        // Copy the row into a JSON object
                        JSONObject data = new JSONObject();
                        // "after" is null for delete events; without this check
                        // after.schema() throws a NullPointerException
                        if (after != null) {
                            for (Field field : after.schema().fields()) {
                                data.put(field.name(), after.get(field));
                            }
                        }

                        JSONObject result = new JSONObject();
                        result.put("operation", operation.toString().toLowerCase());
                        result.put("data", data);
                        result.put("database", db);
                        result.put("table", tableName);

                        // Sample of the wrapped record:
                        // {"database":"ssm","data":{"orderTime":18828,"orderid":8,"orderNmae":"11","orderAddr":"1"},"operation":"update","table":"order"}
                        collector.collect(result.toJSONString());
                    }
                })
                .build();

        // 3. Add the source and print the data
        env.addSource(mysqlSource).print();

        // 4. Run the job
        env.execute();
    }
}
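Two details of the deserializer output are easy to trip over: the topic is split by position, and orderTime comes out as a plain number (18828 in the sample record). The sketch below shows one way to handle both in plain Java. ChangeRecordHelpers is a hypothetical helper, the topic string is an assumed example of the prefix.database.table layout, and the date conversion assumes the DATE column is encoded as days since 1970-01-01, which matches the sample value.

```java
import java.time.LocalDate;

class ChangeRecordHelpers {

    // Splits a topic of the assumed form "prefix.database.table" into {database, table}.
    // Fails with a clear message instead of an ArrayIndexOutOfBoundsException.
    static String[] databaseAndTable(String topic) {
        String[] parts = topic.split("\\.");
        if (parts.length < 3) {
            throw new IllegalArgumentException("Unexpected topic format: " + topic);
        }
        return new String[] {parts[1], parts[2]};
    }

    // Converts a day count since 1970-01-01 into a calendar date.
    static LocalDate epochDayToDate(int epochDay) {
        return LocalDate.ofEpochDay(epochDay);
    }

    public static void main(String[] args) {
        String[] dbAndTable = databaseAndTable("mysql_binlog_source.ssm.order");
        System.out.println(dbAndTable[0] + " / " + dbAndTable[1]); // prints "ssm / order"
        System.out.println(epochDayToDate(18828));                 // prints "2021-07-20"
    }
}
```

Inside deserialize, the same conversion could be applied to a field before it is put into the JSON object, so that downstream consumers see a readable date instead of a day count.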