选举算法源码中的总思路

Zookeeper 的 Leader 选举类是 FastLeaderElection,该类是 ZAB 协议在 Leader 选举中的工程应用,所以直接找到该类对其进行分析。该类中的最为重要的方法为 lookForLeader(),是选举 Leader 的核心方法。该方法大体思路可以划分为以下几块:

1.选举前的准备工作:选举前需要做一些准备工作,例如,创建选举对象、创建选举过程中需要用到的集合、初始化选举时限等。

2.将自己作为初始化 Leader 投出去:在当前 Server 第一次投票时会先将自己作为 Leader,然后将自己的选票广播给其它所有 Server。

3.循环交换投票直至选出Leader

4.验证自己的投票与大家的投票谁更适合做 Leader:在“我选我”后,当前 Server 同样会接收到其它 Server 发送来的选票通知(Notification)。通过 while 循环,遍历所有接收到的选票通知,比较谁更适合做 Leader。若找到一个比自己更适合的 Leader,则修改自己选票,重新将新的选票广播出去。当然,每验证一个选票,则会将其记录到一个集合中,将来用于进行票数统计。

5.判断本轮选举是否应结束:其实在每次验证过谁更适合做 Leader 后,就会马上判断当前的选举是否可以结束了,即当前主机所推荐的这个选票是否过半了。若过半了,则直接完成后续的一些收尾工作,例如清空选举过程中所使用的集合,以备下次使用;再例如,生成最终的选票,以备其它 Server来同步数据。若没有过半,则继续从队列中读取出下一个来自于其它主机的选票,然后进行验证。

6.无需选举的情况:对一些特殊情况的处理。

源码解读

需要注意,对源码的阅读主要包含两方面。一个是对重要类、重要成员变量、重要方法的注释的阅读;一个是对重要方法的业务逻辑的分析。篇幅过长,本篇先解读重要类、成员变量

下面源码部分代码被省略,只列出来了目前需要关注的部分,并且调整了顺序,方便理解:

重要类、成员变量的解读

QuorumPeer

一个QuorumPeer可以理解为一个准备参加选举的ZK的server,即配置文件zoo.cfg中配置的服务器

代表具有选举权的Server,它具有三个状态LOOKING, FOLLOWING, LEADING(不包含OBSERVING状态)

/**

* This class manages the quorum protocol. There are three states this server can be in:

* <ol>

* <li>Leader election - each server will elect a leader (proposing itself as a

* leader initially).</li>

* <li>Follower - the server will synchronize with the leader and replicate any

* transactions.</li>

* <li>Leader - the server will process requests and forward them to followers.

* A majority of followers must log the request before

* it can be accepted.</li>

* </ol>

* 翻译:这个类管理着“法定人数投票”协议。这个服务器有三个状态:

* (1)Leader election:(处于该状态的)每一个服务器将选举一个Leader(最初推荐自

* 己作为Leader)。(这个状态即LOOKING状态)

* (2)Follower:(处于该状态的)服务器将与Leader做同步,并复制所有的事务(注意这里的事务指

* 的是最终的提议Proposal。不要忘记txid中的tx即为事务)。

* (3)Leader:(处于该状态的)服务器将处理请求,并将这些请求转发给其它Follower。

* 大多数Follower在该写请求被批准之前(before it can be accepted)都必须

* 要记录下该请求(注意,这里的请求指的是写请求,Leader在接收到写请求后会向所有

* Follower发出提议,在大多数Follower同意后该写请求才会被批准)。

*

* This class will setup a datagram socket that will always respond with its

* view of the current leader. The response will take the form of:

* 翻译:这个类将设置一个数据报套接字(就是一种数据结构),这个数据报套接字将

* 总是使用它的视图(格式)来响应当前的Leader。响应将采用的格式为:

*

* <pre>

* int xid;

*

* long myid;

*

* long leader_id;

*

* long leader_zxid;

* </pre>

*

* The request for the current leader will consist solely

* of an xid: int xid;

* 翻译:当前Leader的请求将仅(solely)包含(consist)一个xid(注意,xid即事务id,

* 是一个新的提议的唯一标识)。

*

*/

public class QuorumPeer extends ZooKeeperThread implements QuorumStats.Provider {

...

}

FastLeaderElection 选举类

系统初始化时,每一个QuorumPeer对象维护了一个FastLeaderElection对象来为自己的选举工作进行代言。

/**

* Implementation of leader election using TCP. It uses an object of the class

* QuorumCnxManager to manage connections. Otherwise, the algorithm is push-based

* as with the other UDP implementations.

* 翻译:使用TCP实现了Leader的选举。它使用QuorumCnxManager类的对象进行连接管理

* (与其它Server间的连接管理)。否则(即若不使用QuorumCnxManager对象的话),将使用

* UDP的基于推送的算法实现。

*

* There are a few parameters that can be tuned to change its behavior. First,

* finalizeWait determines the amount of time to wait until deciding upon a leader.

* This is part of the leader election algorithm.

* 翻译:有几个参数可以用来改变它(选举)的行为。第一,finalizeWait(这是一个代码中的常量)

* 决定了选举出一个Leader的时间,这是Leader选举算法的一部分。

*/

public class FastLeaderElection implements Election {

private static final Logger LOG = LoggerFactory.getLogger(FastLeaderElection.class);

/**

* 翻译:完成等待时间,一旦它(这个过程)认为它已经到达了选举的最后。

* (该常量)确定还需要等待多长时间

*

* 解释:

* finalizeWait:选举时发送投票给其他服务器,并接受回复,接受到所有回复用到到最长时间就是200毫秒

* 因为一般200毫秒之内选举已经结束了,所以一般都低于200毫秒

*/

final static int finalizeWait = 200;

/**

* 翻译:(该常量指定了)两个连续的notification检查间的时间间隔上限。

* 它影响了系统在经历了长时间分割后再次重启的时间。目前60秒。

*

* 该常量是前面的finalizeWait所描述的超时时限的最大值

*

* 解释:

* finalizeWait这个值会增大,直到增大到maxNotificationInterval,一但到达maxNotificationInterval,

* 还没有选举出来(长时间分割意思就是没有leader),就会重启机器,相当于重新再进行一次新的选举

*/

final static int maxNotificationInterval = 60000;

/**

* 翻译:连接管理者。FastLeaderElection(选举算法)使用TCP(管理)

* 两个同辈Server的通信,并且QuorumCnxManager还管理着这些连接。

*

* 解释:通过该类管理和其他Server之间的TCP连接

*/

QuorumCnxManager manager;

// 可以简单理解为:代表当前参与选举的Server

QuorumPeer self;

// 逻辑时钟

AtomicLong logicalclock = new AtomicLong(); /* Election instance */

// 记录当前Server所推荐的leader信息,即ServerId,Zxid,Epoch

long proposedLeader;

long proposedZxid;

long proposedEpoch;

/**

* 翻译:Notifications是一个让其它Server知道当前Server已经改变

* 了投票的通知消息,(为什么它要改变投票呢?)要么是因为它参与了

* Leader选举(新一轮的投票,首先投向自己),要么是它知道了另一个

* Server具有更大的zxid,或者zxid相同但ServerId更大(所以它要

* 通知给其它所有Server,它要修改自己的选票)。

*/

static public class Notification {

...

/*

* 它是一个内部类,里面封装了:

* leader:proposed leader,当前通知所推荐的leader的serverId

* zxid:当前通知所推荐的leader的zxid

* electionEpoch:当前通知所处的选举epoch,即逻辑时钟

* state:QuorumPeer.ServerState,当前通知发送者的状态

* 四个状态:LOOKING, FOLLOWING, LEADING, OBSERVING;

* sid:当前发送者的ServerId

* peerEpoch: 当前通知所推荐的leader的epoch

*/

long leader;

long zxid;

long electionEpoch;

QuorumPeer.ServerState state;

long sid;

long peerEpoch;

...

}

/**

* Notification是作为本地处理通知消息时封装的对象

* 如果要将通知发送出去或者接收,用的是ToSend对象,其成员变量和Notification一致

*/

static public class ToSend {

static enum mType {crequest, challenge, notification, ack}

ToSend(mType type,

long leader,

long zxid,

long electionEpoch,

ServerState state,

long sid,

long peerEpoch) {

...

}

...

}

/**

* 发送投票通知的时候,将ToSend封装的选票信息转换成二进制字节数据传输

*/

static ByteBuffer buildMsg(int state,

long leader,

long zxid,

long electionEpoch,

long epoch) {

byte requestBytes[] = new byte[40];

ByteBuffer requestBuffer = ByteBuffer.wrap(requestBytes);

/*

* Building notification packet to send

* 注意,是按照一定顺序转换的,接收的时候也按照该顺序解析

*/

requestBuffer.clear();

requestBuffer.putInt(state);

requestBuffer.putLong(leader);

requestBuffer.putLong(zxid);

requestBuffer.putLong(electionEpoch);

requestBuffer.putLong(epoch);

requestBuffer.putInt(Notification.CURRENTVERSION);

return requestBuffer;

}

/**

* 选举时发送和接收选票通知都是异步的,先放入队列,有专门线程处理

* 下面两个就是发送消息队列,和接收消息的队列

*/

LinkedBlockingQueue<ToSend> sendqueue;

LinkedBlockingQueue<Notification> recvqueue;

Messenger messenger;

/**

* 翻译:消息处理程序的多线程实现。Messenger实现了两个子类:WorkReceiver和WorkSender。

* 从名称可以明显看出它们的功能。每一个都生成一个新线程。

*

* 解释:通过创建该类可以创建两个线程分别处理消息发送和接收

*/

protected class Messenger {

/**

* 翻译:接收QuorumCnxManager实例在方法run()上的消息,并处理这些消息。

*/

class WorkerReceiver extends ZooKeeperThread {

//大概逻辑就是通过QuorumCnxManager manager,获取其他Server发来的消息,然后处理,如果

//符合一定条件,放到recvqueue队列里

...

}

/**

* 翻译:这个worker只是取出要发送的消息并将其放入管理器的队列中。

*/

class WorkerSender extends ZooKeeperThread {

//大概逻辑就是从sendqueue队列中获取要发送的消息ToSend对象,调用上面说过的buildMsg方法

//转换成字节数据,再通过QuorumCnxManager manager广播出去

...

}

WorkerSender ws;

WorkerReceiver wr;

/**

* Constructor of class Messenger.

* 通过构造Messenger对象,即可启动两个线程处理收发消息

*/

Messenger(QuorumCnxManager manager) {

this.ws = new WorkerSender(manager);

Thread t = new Thread(this.ws,"WorkerSender[myid=" + self.getId() + "]");

t.setDaemon(true);

t.start();

this.wr = new WorkerReceiver(manager);

t = new Thread(this.wr,"WorkerReceiver[myid=" + self.getId() + "]");

t.setDaemon(true);

t.start();

}

/**

* Stops instances of WorkerSender and WorkerReceiver

* 调用该方法,让收发消息两个线程停止工作

*/

void halt(){

this.ws.stop = true;

this.wr.stop = true;

}

}

...

/**

* 翻译:FastLeaderElection的构造函数。它接受两个参数,

* 一个是实例化此对象的QuorumPeer对象,另一个是连接管理器。

* 在ZooKeeper服务的一个实例期间,每个对等点只应该创建这样的对象一次。

*/

public FastLeaderElection(QuorumPeer self, QuorumCnxManager manager){

this.stop = false;

this.manager = manager;

//starter:启动选举,初始化一些数据、启动收发消息的线程等操作..

starter(self, manager);

}

...

/**

* 翻译:开启新一轮的Leader选举。无论何时,只要我们的QuorumPeer的

* 状态变为了LOOKING,那么这个方法将被调用,并且它会发送notifications

* 给所有其它的同级服务器。

*

* 这个方法就是选举的核心方法!!!!!!!!后面专门讲解这个方法

*/

public Vote lookForLeader() throws InterruptedException {

}

...

}

QuorumCnxManager 连接管理器

QuorumCnxManager 这个类我们只需要知道该类大概的作用就可以了。

/**

* This class implements a connection manager for leader election using TCP. It

* maintains one connection for every pair of servers.

* The tricky part is to guarantee that there is exactly

* one connection for every pair of servers that are operating correctly

* and that can communicate over the network.

* 翻译:这个类为使用TCP的领袖选举实现了一个连接管理器。

* 它为每对服务器维护一个连接。棘手的部分是确保每一对服务器都有一个连接,

* 这些服务器都在正确地运行,并且可以通过网络进行通信。

*

* 解释:每队服务器维护一个连接的意思就是A连接服务器

*

* If two servers try to start a connection concurrently, then the

* connection manager uses a very simple tie-breaking mechanism

* to decide which connection to drop based on the IP addressed of

* the two parties.

* 翻译:如果两个服务器试图同时启动一个连接,则连接管理器使用非常简单的中断连接

* 机制来决定哪个中断,基于双方的IP地址。

*

* For every peer, the manager maintains a queue of messages to send. If the

* connection to any particular peer drops, then the sender thread

* puts the message back on the list. As this implementation

* currently uses a queue implementation to maintain messages to send to

* another peer, we add the message to the tail of the queue, thus

* changing the order of messages.Although this is not a problem for the

* leader election, it could be a problem when consolidating peer

* communication. This is to be verified, though.

* 翻译:对于每个对等体,管理器维护着一个消息发送队列。如果连接到任何

* 特定的Server中断,那么发送者线程将消息放回到这个队列中。

* 作为这个实现,当前使用一个队列来实现维护发送给另一方的消息,因此我们将消息

* 添加到队列的尾部,从而更改了消息的顺序。虽然对于Leader选举来说这不是一个问题,

* 但对于加强对等通信可能就是个问题。不过,这一点有待验证。

*

* 解释:比如发送消息1给某一个Server,如果和该Server连接断开后,想再给该Server发送消息2时

* 此时消息就会存到该Server在本地对应维护的一个消息发送队列中,等连接恢复后会重新尝试发送。

*/

public class QuorumCnxManager {

...

//每一个QuorumPeer都有一个QuorumCnxManager对象负责选举期间QuorumPeer之间连接的

//建立和发送、接收消息队列的维护,而这些消息是通过以下4个集合被处理的:

//发送器集合。每个SenderWorker消息发送器,都对应一台远程ZooKeeper服务器,负责消息的发送,在这个集合中,key为SID

final ConcurrentHashMap<Long, SendWorker> senderWorkerMap;

//每个SID需要发送的消息队列

final ConcurrentHashMap<Long, ArrayBlockingQueue<ByteBuffer>> queueSendMap;

最近发送过的消息。在这个集合中,为每个SID保留最近发送过的一个消息

final ConcurrentHashMap<Long, ByteBuffer> lastMessageSent;

//收到的消息存放到该队列

public final ArrayBlockingQueue<Message> recvQueue;

...

}

这个类维护了当前Server和其他Server之间的TCP连接,并且保证了服务器之间只有一个连接。

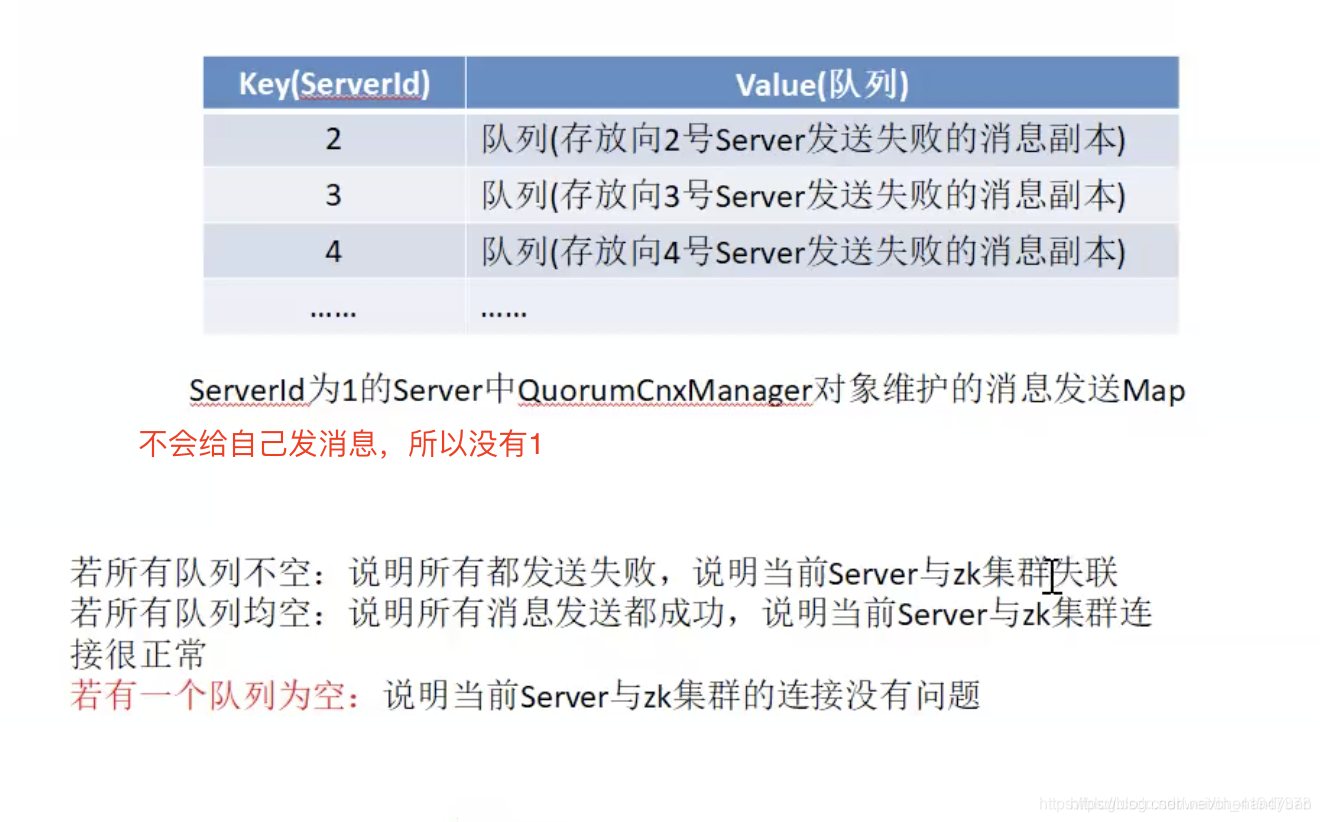

另外该类还维护了一个Map结构的数据queueSendMap,其中key为集群中其他Server的ServerId,value为一个消息队列,保存了当前Server给其他Server发送消息时失败的消息副本。

有一个点需要关注,通过本地维护的消息队列,可以判断当前Server与集群之间的连接是否正常,如下图:

在这里插入图片描述

QuorumCnxManager还有分别负责消息的发送和接收的SenderWorker和RecvWorker两个继承了Runnable接口的线程内部类,这两个线程类的构造方法如下:

SendWorker(Socket sock, Long sid) {

super("SendWorker:" + sid);

this.sid = sid;

this.sock = sock;

recvWorker = null;

try {

dout = new DataOutputStream(sock.getOutputStream());

} catch (IOException e) {

LOG.error("Unable to access socket output stream", e);

closeSocket(sock);

running = false;

}

LOG.debug("Address of remote peer: " + this.sid);

}

RecvWorker(Socket sock, Long sid, SendWorker sw) {

super("RecvWorker:" + sid);

this.sid = sid;

this.sock = sock;

this.sw = sw;

try {

din = new DataInputStream(sock.getInputStream());

// OK to wait until socket disconnects while reading.

sock.setSoTimeout(0);

} catch (IOException e) {

LOG.error("Error while accessing socket for " + sid, e);

closeSocket(sock);

running = false;

}

}

每个SenderWorker或者RecvWorker都有一个sid变量,显然,每一个sid对应的QuorumPeer都会有与之对应的SenderWorker和RecvWorker来专门负责处理接收到的它的消息或者向它发送消息。

接下来再看下对应的run()方法做了什么操作

SendWorker.run():

pubic void run() {

threadCnt.incrementAndGet();

try {

/**

* If there is nothing in the queue to send, then we

* send the lastMessage to ensure that the last message

* was received by the peer. The message could be dropped

* in case self or the peer shutdown their connection

* (and exit the thread) prior to reading/processing

* the last message. Duplicate messages are handled correctly

* by the peer.

*

* If the send queue is non-empty, then we have a recent

* message than that stored in lastMessage. To avoid sending

* stale message, we should send the message in the send queue.

*/

ArrayBlockingQueue<ByteBuffer> bq = queueSendMap.get(sid);

if (bq == null || isSendQueueEmpty(bq)) {

ByteBuffer b = lastMessageSent.get(sid);

if (b != null) {

LOG.debug("Attempting to send lastMessage to sid=" + sid);

send(b);

}

}

} catch (IOException e) {

LOG.error("Failed to send last message. Shutting down thread.", e);

this.finish();

}

try {

while (running && !shutdown && sock != null) {

ByteBuffer b = null;

try {

ArrayBlockingQueue<ByteBuffer> bq = queueSendMap

.get(sid);

if (bq != null) {

b = pollSendQueue(bq, 1000, TimeUnit.MILLISECONDS);

} else {

LOG.error("No queue of incoming messages for " +

"server " + sid);

break;

}

if(b != null){

lastMessageSent.put(sid, b);

send(b);

}

} catch (InterruptedException e) {

LOG.warn("Interrupted while waiting for message on queue",

e);

}

}

} catch (Exception e) {

LOG.warn("Exception when using channel: for id " + sid + " my id = " +

self.getId() + " error = " + e);

}

this.finish();

LOG.warn("Send worker leaving thread");

}

}

RecvWorker.run():

public void run() {

threadCnt.incrementAndGet();

try {

while (running && !shutdown && sock != null) {

/**

* Reads the first int to determine the length of the

* message

*/

int length = din.readInt();

if (length <= 0 || length > PACKETMAXSIZE) {

throw new IOException(

"Received packet with invalid packet: "

+ length);

}

/**

* Allocates a new ByteBuffer to receive the message

*/

byte[] msgArray = new byte[length];

din.readFully(msgArray, 0, length);

ByteBuffer message = ByteBuffer.wrap(msgArray);

addToRecvQueue(new Message(message.duplicate(), sid));

}

} catch (Exception e) {

LOG.warn("Connection broken for id " + sid + ", my id = " +

self.getId() + ", error = " , e);

} finally {

LOG.warn("Interrupting SendWorker");

sw.finish();

if (sock != null) {

closeSocket(sock);

}

}

}

代码可以看到,SenderWorker负责不断从全局的queueSendMap中读取自己所负责的sid对应的消息的列表,然后将消息发送给对应的sid。

而RecvWorker负责从与自己负责的sid建立的TCP连接中读取数据放入到recvQueue的末尾。

从QuorumCnxManager.SenderWorker和QuorumCnxManager.RecvWorker的run方法中可以看出,这两个worker都是基于QuorumCnxManager建立的连接,与对应的server进行消息的发送和接收,而要发送的消息则来自FastLeaderElection,接收到的消息,也是被FastLeaderElection处理,因此,QuorumCnxManager的两个worker并不负责具体的算法实现,只是消息发送、接收的代理类,FastLeaderElection不需要理睬怎么与其它的server通信、怎么获得其它server的投票信息这些细节,只需要从QuorumCnxManager提供的队列里面取消息或者放入消息。

FastLeaderElection.Messenger.WorkerReceiver 和 FastLeaderElection.Messenger.WorkerSender

在选举过程中需要进行消息的沟通,因此在FastLeaderElection中维护了两个变量:

LinkedBlockingQueue<ToSend> sendqueue;

LinkedBlockingQueue<Notification> recvqueue;

recvqueue中存放了选举过程中接收到的消息,这些消息被交给了FastLeaderElection的最核心方法lookForLeader()进行处理以选举出leader。而sendqueue中存放了待发送出去的消息。

同时,每一个FastLeaderElection变量维护了一个内置类Messager,Messager类包含了两个实现了Runnable接口的类WorkerReceiver和WorkerSender,从名字可以看出,这两个类分别负责消息的发送和接收。即WorkerReceiver负责接收消息并将接收到的消息放入recvqueue中等待处理,WorkerSender负责从sendqueue中取出待发送消息,交给下层的连接管理类QuorumCnxManager进行发送。

下面,我们来看看FastLeaderElection.Messager.WorkekSender和 FastLeaderElection.Messager.WorkerReceiver各自的run方法:

WorkerSender.run():很简单不说了。

public void run() {

while (!stop) {

try {

ToSend m = sendqueue.poll(3000, TimeUnit.MILLISECONDS);

if(m == null) continue;

process(m);

} catch (InterruptedException e) {

break;

}

}

LOG.info("WorkerSender is down");

}

/**

* Called by run() once there is a new message to send.

*

* @param m message to send

*/

void process(ToSend m) {

ByteBuffer requestBuffer = buildMsg(m.state.ordinal(),

m.leader,

m.zxid,

m.electionEpoch,

m.peerEpoch);

manager.toSend(m.sid, requestBuffer);

}

WorkerReceiver.run():

public void run() {

Message response;

while (!stop) {

// Sleeps on receive

try{

response = manager.pollRecvQueue(3000, TimeUnit.MILLISECONDS);

if(response == null) continue;

/*

* 翻译:如果它是来自一个观察者,立即响应。注意,下面的谓词假设,

* 如果服务器不是追随者,那么它必须是观察者。如果将来我们遇到其

* 他类型的学习者,我们就必须改变我们检查观察者的方式。

*

* 即:如果收到的选票是来自观察者的,直接返回当前的Server的当前选票

*/

if(!validVoter(response.sid)){

Vote current = self.getCurrentVote();

ToSend notmsg = new ToSend(ToSend.mType.notification,

current.getId(),

current.getZxid(),

logicalclock.get(),

self.getPeerState(),

response.sid,

current.getPeerEpoch());

sendqueue.offer(notmsg);

} else {

// Receive new message

if (LOG.isDebugEnabled()) {

LOG.debug("Receive new notification message. My id = "

+ self.getId());

}

/*

* We check for 28 bytes for backward compatibility

*/

if (response.buffer.capacity() < 28) {

LOG.error("Got a short response: "

+ response.buffer.capacity());

continue;

}

boolean backCompatibility = (response.buffer.capacity() == 28);

response.buffer.clear();

// Instantiate Notification and set its attributes

// 将收到的通知解析后会封装成Notification 放入队列recvqueue

Notification n = new Notification();

// State of peer that sent this message

QuorumPeer.ServerState ackstate = QuorumPeer.ServerState.LOOKING;

// 判断该通知的发送者的状态

switch (response.buffer.getInt()) {

case 0:

ackstate = QuorumPeer.ServerState.LOOKING;

break;

case 1:

ackstate = QuorumPeer.ServerState.FOLLOWING;

break;

case 2:

ackstate = QuorumPeer.ServerState.LEADING;

break;

case 3:

ackstate = QuorumPeer.ServerState.OBSERVING;

break;

default:

continue;

}

n.leader = response.buffer.getLong();

n.zxid = response.buffer.getLong();

n.electionEpoch = response.buffer.getLong();

n.state = ackstate;

n.sid = response.sid;

if(!backCompatibility){

n.peerEpoch = response.buffer.getLong();

} else {

if(LOG.isInfoEnabled()){

LOG.info("Backward compatibility mode, server id=" + n.sid);

}

n.peerEpoch = ZxidUtils.getEpochFromZxid(n.zxid);

}

/*

* Version added in 3.4.6

*/

n.version = (response.buffer.remaining() >= 4) ?

response.buffer.getInt() : 0x0;

/*

* Print notification info

*/

if(LOG.isInfoEnabled()){

printNotification(n);

}

if(self.getPeerState() == QuorumPeer.ServerState.LOOKING){

//如果当前Server状态是Looking,则将该选票放入recvqueue队列,用来参与选举

recvqueue.offer(n);

//如果发送该选票的Server状态也是Looking,并且它的选举逻辑时钟比我小,则发送我当前的选票给他

if((ackstate == QuorumPeer.ServerState.LOOKING)

&& (n.electionEpoch < logicalclock.get())){

Vote v = getVote();

ToSend notmsg = new ToSend(ToSend.mType.notification,

v.getId(),

v.getZxid(),

logicalclock.get(),

self.getPeerState(),

response.sid,

v.getPeerEpoch());

sendqueue.offer(notmsg);

}

} else {

// 如果当前服务器不是LOOKING,即已经选出Leader了,并且该消息的发送者是Looking

// 则我会将当前选票发给他

Vote current = self.getCurrentVote();

if(ackstate == QuorumPeer.ServerState.LOOKING){

if(LOG.isDebugEnabled()){

LOG.debug("Sending new notification. My id = " +

self.getId() + " recipient=" +

response.sid + " zxid=0x" +

Long.toHexString(current.getZxid()) +

" leader=" + current.getId());

}

ToSend notmsg;

if(n.version > 0x0) {

notmsg = new ToSend(

ToSend.mType.notification,

current.getId(),

current.getZxid(),

current.getElectionEpoch(),

self.getPeerState(),

response.sid,

current.getPeerEpoch());

} else {

Vote bcVote = self.getBCVote();

notmsg = new ToSend(

ToSend.mType.notification,

bcVote.getId(),

bcVote.getZxid(),

bcVote.getElectionEpoch(),

self.getPeerState(),

response.sid,

bcVote.getPeerEpoch());

}

sendqueue.offer(notmsg);

}

}

}

} catch (InterruptedException e) {

System.out.println("Interrupted Exception while waiting for new message" +

e.toString());

}

}

LOG.info("WorkerReceiver is down");

}