HQL-DDL命令

参考:https://cwiki.apache.org/confluence/display/Hive/LanguageManual+DDL

DDL(data definition language): 主要的命令有CREATE、ALTER、DROP等。

DDL主要是用在定义、修改数据库对象的结构 或 数据类型。

第 1 节 数据库操作

Hive有一个默认的数据库default,在操作HQL时,如果不明确的指定要使用哪个库,则使用默认数据库;

Hive的数据库名、表名均不区分大小写;

名字不能使用数字开头;

不能使用关键字,尽量不使用特殊符号;

创建数据库语法

CREATE [REMOTE] (DATABASE|SCHEMA) [IF NOT EXISTS] database_name

[COMMENT database_comment]

[LOCATION hdfs_path]

[MANAGEDLOCATION hdfs_path]

[WITH DBPROPERTIES (property_name=property_value, ...)];

-- IF NOT EXISTS 如果不存在创建

-- COMMENT 给添加注释

-- LOCATION 指定存储路径

-- MANAGEDLOCATION 管理位置

-- 创建数据库,在HDFS上存储路径为 /user/hive/warehouse/*.db

hive (default)> create database mydb;

hive (default)> dfs -ls /user/hive/warehouse;

-- 避免数据库已经存在时报错,使用 if not exists 进行判断【标准写法】

hive (default)> create database if not exists mydb;

-- 创建数据库。添加备注,指定数据库在存放位置

hive (default)> create database if not exists mydb2

comment 'this is mydb2'

location '/user/hive/mydb2.db';

查看数据库

-- 查看所有数据库

show database;

-- 查看数据库信息

desc database mydb2;

-- 进一步详细信息展示

desc database extended mydb2;

describe database extended mydb2;

使用数据库

USE database_name;

USE DEFAULT;

-- 查看当前使用的数据库

SELECT current_database();

删除数据库

DROP (DATABASE|SCHEMA) [IF EXISTS] database_name [RESTRICT|CASCADE];

-- 删除一个空数据库

drop database databasename;

-- 如果数据库不为空,使用 cascade 强制删除

drop database databasename cascade;

修改数据库

-- 修改数据库参数

ALTER (DATABASE|SCHEMA) database_name SET DBPROPERTIES (property_name=property_value, ...); -- (Note: SCHEMA added in Hive 0.14.0)

--

ALTER (DATABASE|SCHEMA) database_name SET OWNER [USER|ROLE] user_or_role; -- (Note: Hive 0.13.0 and later; SCHEMA added in Hive 0.14.0)

ALTER (DATABASE|SCHEMA) database_name SET LOCATION hdfs_path; -- (Note: Hive 2.2.1, 2.4.0 and later)

ALTER (DATABASE|SCHEMA) database_name SET MANAGEDLOCATION hdfs_path; -- (Note: Hive 4.0.0 and later)

第 2 节 建表语法

create [external] table [IF NOT EXISTS] table_name

[(colName colType [comment 'comment'], ...)]

[comment table_comment]

[partitioned by (colName colType [comment col_comment], ...)]

[clustered BY (colName, colName, ...)

[sorted by (col_name [ASC|DESC], ...)] into num_buckets buckets]

[row format row_format]

[stored as file_format]

[LOCATION hdfs_path]

[TBLPROPERTIES (property_name=property_value, ...)]

[AS select_statement];

CREATE [TEMPORARY] [EXTERNAL] TABLE [IF NOT EXISTS]

[db_name.]table_name

LIKE existing_table_or_view_name

[LOCATION hdfs_path];

-

CREATE TABLE:按给定名称创建表,如果表已经存在则抛出异常。可使用if not exists规避。 -

EXTERNAL:有关键字是创建外部表,否则创建的是内部表(管理表)。删除内部表时,数据和表的定义同时被删除;

删除外部表时,仅仅删除了表的定义,数据保留;

在生产环境中,多使用外部表;

-

comment:表的注释 -

partitioned by:对表中数据进行分区,指定表的分区字段 -

clustered by:创建分桶表,指定分桶字段 -

sorted by:对桶中的一个或多个列排序,较少使用 -

存储子句:

ROW FORMAT DELIMITED

[FIELDS TERMINATED BY char]

[COLLECTION ITEMS TERMINATED BY char]

[MAP KEYS TERMINATED BY char]

[LINES TERMINATED BY char] | SERDE serde_name

[WITH SERDEPROPERTIES (property_name=property_value,

property_name=property_value, ...)]

? 建表时可指定 SerDe 。如果没有指定 ROW FORMAT 或者 ROW FORMAT DELIMITED,将会使用 默认的 SerDe。建表时还需要为表指定列,在指定列的同时也会指定自定义的 SerDe。Hive通过 SerDe 确定表的具体的列的数据。

? SerDe是 Serialize/Deserilize 的简称, hive使用Serde进行行对象的序列与反序列化。

-

stored as SEQUENCEFILE|TEXTFILE|RCFILE:如果文件数据是纯文本,可以使用STORED AS TEXTFILE(缺省);如果数据需要压缩,使用STORED AS SEQUENCEFILE(二进制序列文件)。 -

LOCATION:表在HDFS上的存放位置 -

TBLPROPERTIES:定义表的属性 -

AS:后面可以接查询语句,表示根据后面的查询结果创建表 -

LIKE:like 表名,允许用户复制现有的表结构,但是不复制数据

官网文档

CREATE [TEMPORARY] [EXTERNAL] TABLE [IF NOT EXISTS] [db_name.]table_name -- (Note: TEMPORARY available in Hive 0.14.0 and later)

[(col_name data_type [column_constraint_specification] [COMMENT col_comment], ... [constraint_specification])]

[COMMENT table_comment]

[PARTITIONED BY (col_name data_type [COMMENT col_comment], ...)]

[CLUSTERED BY (col_name, col_name, ...) [SORTED BY (col_name [ASC|DESC], ...)] INTO num_buckets BUCKETS]

[SKEWED BY (col_name, col_name, ...) -- (Note: Available in Hive 0.10.0 and later)]

ON ((col_value, col_value, ...), (col_value, col_value, ...), ...)

[STORED AS DIRECTORIES]

[

[ROW FORMAT row_format]

[STORED AS file_format]

| STORED BY 'storage.handler.class.name' [WITH SERDEPROPERTIES (...)] -- (Note: Available in Hive 0.6.0 and later)

]

[LOCATION hdfs_path]

[TBLPROPERTIES (property_name=property_value, ...)] -- (Note: Available in Hive 0.6.0 and later)

[AS select_statement]; -- (Note: Available in Hive 0.5.0 and later; not supported for external tables)

CREATE [TEMPORARY] [EXTERNAL] TABLE [IF NOT EXISTS] [db_name.]table_name

LIKE existing_table_or_view_name

[LOCATION hdfs_path];

data_type

: primitive_type

| array_type

| map_type

| struct_type

| union_type -- (Note: Available in Hive 0.7.0 and later)

primitive_type

: TINYINT

| SMALLINT

| INT

| BIGINT

| BOOLEAN

| FLOAT

| DOUBLE

| DOUBLE PRECISION -- (Note: Available in Hive 2.2.0 and later)

| STRING

| BINARY -- (Note: Available in Hive 0.8.0 and later)

| TIMESTAMP -- (Note: Available in Hive 0.8.0 and later)

| DECIMAL -- (Note: Available in Hive 0.11.0 and later)

| DECIMAL(precision, scale) -- (Note: Available in Hive 0.13.0 and later)

| DATE -- (Note: Available in Hive 0.12.0 and later)

| VARCHAR -- (Note: Available in Hive 0.12.0 and later)

| CHAR -- (Note: Available in Hive 0.13.0 and later)

array_type

: ARRAY < data_type >

map_type

: MAP < primitive_type, data_type >

struct_type

: STRUCT < col_name : data_type [COMMENT col_comment], ...>

union_type

: UNIONTYPE < data_type, data_type, ... > -- (Note: Available in Hive 0.7.0 and later)

row_format

: DELIMITED [FIELDS TERMINATED BY char [ESCAPED BY char]] [COLLECTION ITEMS TERMINATED BY char]

[MAP KEYS TERMINATED BY char] [LINES TERMINATED BY char]

[NULL DEFINED AS char] -- (Note: Available in Hive 0.13 and later)

| SERDE serde_name [WITH SERDEPROPERTIES (property_name=property_value, property_name=property_value, ...)]

file_format:

: SEQUENCEFILE

| TEXTFILE -- (Default, depending on hive.default.fileformat configuration)

| RCFILE -- (Note: Available in Hive 0.6.0 and later)

| ORC -- (Note: Available in Hive 0.11.0 and later)

| PARQUET -- (Note: Available in Hive 0.13.0 and later)

| AVRO -- (Note: Available in Hive 0.14.0 and later)

| JSONFILE -- (Note: Available in Hive 4.0.0 and later)

| INPUTFORMAT input_format_classname OUTPUTFORMAT output_format_classname

column_constraint_specification:

: [ PRIMARY KEY|UNIQUE|NOT NULL|DEFAULT [default_value]|CHECK [check_expression] ENABLE|DISABLE NOVALIDATE RELY/NORELY ]

default_value:

: [ LITERAL|CURRENT_USER()|CURRENT_DATE()|CURRENT_TIMESTAMP()|NULL ]

constraint_specification:

: [, PRIMARY KEY (col_name, ...) DISABLE NOVALIDATE RELY/NORELY ]

[, PRIMARY KEY (col_name, ...) DISABLE NOVALIDATE RELY/NORELY ]

[, CONSTRAINT constraint_name FOREIGN KEY (col_name, ...) REFERENCES table_name(col_name, ...) DISABLE NOVALIDATE

[, CONSTRAINT constraint_name UNIQUE (col_name, ...) DISABLE NOVALIDATE RELY/NORELY ]

[, CONSTRAINT constraint_name CHECK [check_expression] ENABLE|DISABLE NOVALIDATE RELY/NORELY ]

第 3 节 内部表 & 外部表

在创建表的时候,可指定表的类型。表有两种类型,分别是内部表(管理表)、外部表。

-

默认情况下,创建内部表。如果要创建外部表,需要使用关键字

external -

在删除内部表时,表的定义(元数据) 和 数据 同时被删除

-

在删除外部表时,仅删除表的定义,数据被保留

-

在生产环境中,多使用外部表

内部表

- t1.dat文件内容

2;zhangsan;book,TV,code;beijing:chaoyang,shagnhai:pudong

3;lishi;book,code;nanjing:jiangning,taiwan:taibei

4;wangwu;music,book;heilongjiang:haerbin

- 创建表 SQL

-- 创建内部表

create table t1(

id int,

name string,

hobby array<string>,

addr map<string, string>

)

row format delimited

fields terminated by ";"

collection items terminated by ","

map keys terminated by ":";

-- 显示表的定义,显示的信息较少

desc t1;

-- 显示表的定义,显示的信息多,格式友好

desc formatted t1;

-- 加载数据

load data local inpath '/home/hadoop/data/t1.dat' into table t1;

-- 查询数据

select * from t1;

-- 查询数据文件

dfs -ls /user/hive/warehouse/mydb.db/t1;

-- 删除表。表和数据同时被删除

drop table t1;

-- 再次查询数据文件,已经被删除

外部表

-- 创建外部表

create external table t2(

id int,

name string,

hobby array<string>,

addr map<string, string>

)

row format delimited

fields terminated by ";"

collection items terminated by ","

map keys terminated by ":";

-- 显示表的定义

desc formatted t2;

-- 加载数据

load data local inpath '/home/hadoop/data/t1.dat' into table t2;

-- 查询数据

select * from t2;

-- 删除表。表删除了,目录仍然存在

drop table t2;

-- 再次查询数据文件,仍然存在

内部表与外部表的转换

-- 创建内部表,加载数据,并检查数据文件和表的定义

create table t1(

id int,

name string,

hobby array<string>,

addr map<string, string>

)

row format delimited

fields terminated by ";"

collection items terminated by ","

map keys terminated by ":";

load data local inpath '/home/hadoop/data/t1.dat' into table t1;

dfs -ls /user/hive/warehouse/mydb.db/t1;

desc formatted t1;

-- 内部表转外部表

alter table t1 set tblproperties('EXTERNAL'='TRUE');

-- 查询表信息,是否转换成功

desc formatted t1;

-- 外部表转内部表。EXTERNAL 大写,false 不区分大小

alter table t1 set tblproperties('EXTERNAL'='FALSE');

-- 查询表信息,是否转换成功

desc formatted t1;

小结

-

建表时:

- 如果不指定

external关键字,创建的是内部表; - 指定

external关键字,创建的是外部表;

- 如果不指定

-

删表时

- 删除外部表时,仅删除表的定义,表的数据不受影响

- 删除内部表时,表的数据和定义同时被删除

-

外部表的使用场景

- 想保留数据时使用。生产多用外部表

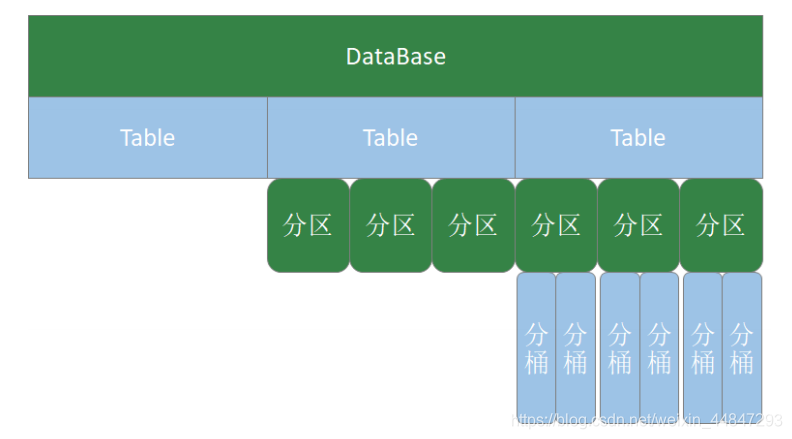

第 4 节 分区表

Hive在执行查询时,一般会扫描整个表的数据。由于表的数据量大,全表扫描消耗时间长、效率低。

而有时候,查询只需要扫描表中的一部分数据即可,Hive引入了分区表的概念,将表的数据存储在不同的子目录中,每一个子目录对应一个分区。只查询部分分区数据时,可避免全表扫描,提高查询效率。

在实际中,通常根据时间、地区等信息进行分区。

分区表创建与数据加载

-- 创建表

create table if not exists t3(

id int,

name string,

hobby array<string>,

addr map<String,string>

)

partitioned by (dt string)

row format delimited

fields terminated by ';'

collection items terminated by ','

map keys terminated by ':';

-- 加载数据。

load data local inpath "/home/hadoop/data/t1.dat" into table t3 partition(dt="2020-06-01");

load data local inpath "/home/hadoop/data/t1.dat" into table t3partition(dt="2020-06-02");

备注:分区字段不是表中已经存在的数据,可以将分区字段看成伪列

查看分区

show partitions t3;

新增分区并设置数据

-- 增加一个分区,不加载数据

alter table t3 add partition(dt='2020-06-03');

-- 增加多个分区,不加载数据

alter table t3 add partition(dt='2020-06-05') partition(dt='2020-06-06');

-- 增加多个分区。准备数据

hdfs dfs -cp /user/hive/warehouse/mydb.db/t3/dt=2020-06-01 /user/hive/warehouse/mydb.db/t3/dt=2020-06-07

hdfs dfs -cp /user/hive/warehouse/mydb.db/t3/dt=2020-06-01 /user/hive/warehouse/mydb.db/t3/dt=2020-06-08

-- 增加多个分区。加载数据

alter table t3 add

partition(dt='2020-06-07') location '/user/hive/warehouse/mydb.db/t3/dt=2020-06-07'

partition(dt='2020-06-08') location '/user/hive/warehouse/mydb.db/t3/dt=2020-06-08';

-- 查询数据

select * from t3;

修改分区的hdfs路径

alter table t3 partition(dt='2020-06-01')

set location '/user/hive/warehouse/t3/dt=2020-06-03';

删除分区

-- 可以删除一个或多个分区,用逗号隔开

alter table t3 drop partition(dt='2020-06-03');

alter table t3 drop partition(dt='2020-06-03'), partition(dt='2020-06-04');

第 5 节 分桶表

当单个的分区或者表的数据量过大,分区不能更细粒度的划分数据,就需要使用分桶技术将数据划分成更细的粒度。将数据按照指定的字段进行分成多个桶中去,即将数据按照字段进行划分,数据按照字段划分到多个文件当中去。分桶的原理:

-

MR中:

key.hashCode % reductTask -

Hive中:

分桶字段.hashCode % 分桶个数

-- 测试数据

1 java 90

1 c 78

1 python 91

1 hadoop 80

2 java 75

2 c 76

2 python 80

2 hadoop 93

3 java 98

3 c 74

3 python 89

3 hadoop 91

5 java 93

6 c 76

7 python 87

8 hadoop 88

-- 创建分桶表

create table course(

id int,

name string,

score int

)

clustered by (id) into 3 buckets

row format delimited

fields terminated by "\t";

-- 创建普通表

create table course_common(

id int,

name string,

score int

)

row format delimited

fields terminated by "\t";

-- 普通表加载数据

load data local inpath '/home/hadoop/data/course.dat' into table course_common;

-- 通过 insert ... select ... 给桶表加载数据

insert into table course select * from course_common;

-- 观察分桶数据。数据按照:(分区字段.hashCode) % (分桶数) 进行分区

desc formatted course;

备注:

-

分桶规则:

分桶字段.hashCode % 分桶数 -

分桶表加载数据时,使用

insert... select ...方式进行 -

网上有资料说要使用分区表需要设置

hive.enforce.bucketing=true,那是Hive1.x 以前的版本;Hive 2.x 中,删除了该参数,始终可以分桶;

第 6 节 修改表 & 删除表

-- 修改表名。

rename alter table course_common

rename to course_common1;

-- 修改列名。change column

alter table course_common1

change column id cid int;

-- 修改字段类型。change column

alter table course_common1

change column cid cid string;

-- The following columns have types incompatible with the existing columns in their respective positions

-- 修改字段数据类型时,要满足数据类型转换的要求。如int可以转为string,但是 string不能转为int

-- 增加字段。add columns

alter table course_common1

add columns (common string);

-- 删除字段:replace columns

-- 这里仅仅只是在元数据中删除了字段,并没有改动 hdfs上的数据文件

alter table course_common1 replace columns(

id string,

cname string,

score int

);

-- 删除表

drop table course_common1;

HQL DDL命令小结:

- 主要对象:数据库、表

- 表的分类:

- 内部表:删除表时,同时删除元数据和表数据

- 外部表:删除表时,仅删除元数据,保留表中数据;生产环境多使用外部表

- 分区表:按照分区字段将表中的数据放置在不同的目录中,提高SQL查询的性能

- 分桶表:按照分桶字段,将表中数据分开。 分桶字段.hashCode % 分桶数据

- 主要命令:

create、alter、drop

HQL-数据操作

第 1 节 数据导入

装载数据(load)

基本语法:

LOAD DATA [LOCAL] INPATH 'filepath'

[OVERWRITE] INTO TABLE tablename [PARTITION (partcol1=val1, partcol2=val2 ...)]

-

LOCAL:LOAD DATA LOCAL… 从本地文件系统加载数据到Hive表中。本地文件会拷贝到Hive表指定的位置LOAD DATA… 从HDFS加载数据到Hive表中。HDFS文件移动到Hive表指定的位置

-

INPATH:加载数据的路径 -

OVERWRITE:覆盖表中已有数据;否则表示追加数据 -

PARTITION:将数据加载到指定的分区

准备工作:

-- 创建表

CREATE TABLE tabA (

id int,

name string,

area string

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ',' ;

-- 数据文件(~/data/sourceA.txt):

1,fish1,SZ

2,fish2,SH

3,fish3,HZ

4,fish4,QD

5,fish5,SR

-- 拷贝文件到

HDFS hdfs dfs -put sourceA.txt data/

装载数据:

-- 加载本地文件到hive(tabA)

LOAD DATA LOCAL INPATH '/home/hadoop/data/sourceA.txt' INTO TABLE tabA;

-- 检查本地文件 还在

-- 加载hdfs文件到hive(tabA)

LOAD DATA INPATH 'data/sourceA.txt' INTO TABLE tabA;

-- 检查HDFS文件,已经被转移

-- 加载数据覆盖表中已有数据

LOAD DATA INPATH 'data/sourceA.txt' OVERWRITE INTO TABLE tabA;

-- 创建表时 加载数据

hdfs dfs -mkdir /user/hive/tabB hdfs dfs -put sourceA.txt /user/hive/tabB

CREATE TABLE tabB (

id INT,

name string,

area string

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ','

Location '/user/hive/tabB';

插入数据(insert)

-- 创建分区表

CREATE TABLE tabC (

id INT,

name string,

area string

)partitioned by (month string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ',';

-- 插入数据

insert into table tabC

partition(month='202001')

values (5, 'wangwu', 'BJ'), (4, 'lishi', 'SH'), (3, 'zhangsan', 'TJ');

-- 插入查询的结果数据

insert into table tabC partition(month='202002')

select

id,

name,

area

from tabC where month='202001';

-- 多表(多分区)插入模式

from tabC

insert overwrite table tabC partition(month='202003')

select id, name, area where month='202002'

insert overwrite table tabC partition(month='202004')

select id, name, area where month='202002';

-- select 不用写from 在sql前已经写了

创建表并插入数据(as select)

-- 根据查询结果创建表

create table if not exists tabD as

select * from tabC;

-- 这个插入 tabD 不是分区表

使用import导入数据

import table student2 partition(month='201709') from '/user/hive/warehouse/export/student';

第 2 节 数据导入

-- 将查询结果导出到本地

-- 只有 overwrite 没有 into 这里是导出数据

-- local directory 为导入 linux本地文件路径

-- 这个语句没有指定数据分隔格式,导出文件内分隔为hive默认 `^A`。可使用 cat -A 命令查看

insert overwrite local directory '/home/hadoop/data/tabC'

select * from tabC;

-- 将查询结果格式化输出到本地

insert overwrite local directory '/home/hadoop/data/tabC2'

row format delimited

fields terminated by ' '

select * from tabC;

-- 将查询结果导出到HDFS

-- 没有 local 则是hdfs上路径

insert overwrite directory '/user/hadoop/data/tabC3'

row format delimited

fields terminated by ' '

select * from tabC;

-- dfs 命令导出数据到本地。本质是执行数据文件的拷贝

dfs -get /user/hive/warehouse/mydb.db/tabc/month=202001 /home/hadoop/data/tabC4

-- hive 命令导出数据到本地。执行查询将查询结果重定向到文件

-- 使用 hive -e 命令是hql要加上 库名 ,否则默认查询 default 库

hive -e "select * from mydb.tabC" > a.log

-- export 导出数据到 HDFS下。使用export导出数据时,不仅有数还有表的元数据信息 `_metadata`文件

export table tabC to '/user/hadoop/data/tabC4';

-- export 导出的数据,可以使用 import 命令导入到 Hive 表中

-- 使用 like tname创建的表结构与原表一致。

-- create ... as select ... 结构可能不一致

create table tabE like tabc;

-- 查看表结构

desc tabE;

import table tabE from ''/user/hadoop/data/tabC4';

清除数据

-- 截断表,清空数据。(注意:仅能操作 内部表 )

truncate table tabE;

-- 以下语句报错,外部表 不能执行 truncate 操作

alter table tabC set tblproperties("EXTERNAL"="TRUE");

truncate table tabC;

小结:

数据导入: load data / insert / create table .... as select ..... / import table

数据导出: insert overwrite ... diretory ... / hdfs dfs -get / hive -e "select ..." >

a.log / export table …

Hive的数据导入与导出还可以使用其他工具:Sqoop(阿帕奇)、DataX(阿里)等;

HQL-DQL命令【重点】

DQL – Data Query Language 数据查询语言

select语法:

SELECT [ALL | DISTINCT] select_expr, select_expr, ...

FROM table_reference

[WHERE where_condition]

[GROUP BY col_list]

[ORDER BY col_list]

[CLUSTER BY col_list | [DISTRIBUTE BY col_list] [SORT BY col_list]]

[LIMIT [offset,] rows]

sql语句书写注意事项:

-

SQL语句对大小写不敏感

-

SQL语句可以写一行(简单SQL)也可以写多行(复杂SQL)

-

关键字不能缩写,也不能分行

-

各子句一般要分行

-

使用缩进格式,提高SQL语句的可读性(重要)

创建表,加载数据

-- 测试数据 /home/hadoop/data/emp.dat

7369,SMITH,CLERK,7902,2010-12-17,800,,20

7499,ALLEN,SALESMAN,7698,2011-02-20,1600,300,30

7521,WARD,SALESMAN,7698,2011-02-22,1250,500,30

7566,JONES,MANAGER,7839,2011-04-02,2975,,20

7654,MARTIN,SALESMAN,7698,2011-09-28,1250,1400,30

7698,BLAKE,MANAGER,7839,2011-05-01,2850,,30

7782,CLARK,MANAGER,7839,2011-06-09,2450,,10

7788,SCOTT,ANALYST,7566,2017-07-13,3000,,20

7839,KING,PRESIDENT,,2011-11-07,5000,,10

7844,TURNER,SALESMAN,7698,2011-09-08,1500,0,30

7876,ADAMS,CLERK,7788,2017-07-13,1100,,20

7900,JAMES,CLERK,7698,2011-12-03,950,,30

7902,FORD,ANALYST,7566,2011-12-03,3000,,20

7934,MILLER,CLERK,7782,2012-01-23,1300,,10

-- 建表并加载数据

CREATE TABLE emp (

empno int,

ename string,

job string,

mgr int,

hiredate DATE,

sal int,

comm int,

deptno int

)row format delimited fields terminated by ",";

-- 加载数据

LOAD DATA LOCAL INPATH '/home/hadoop/data/emp.dat'

INTO TABLE emp;

第 1 节 基本查询

-- 省略from子句的查询

select 8*888 ;

select current_date ;

-- 使用列别名 as可省略

select 8*888 product;

select current_date as currdate;

-- 全表查询

select * from emp;

-- 选择特定列查询

select ename, sal, comm from emp;

-- 使用函数

select count(*) from emp;

-- count(colname) 按字段进行count,不统计NULL

select sum(sal) from emp;

select max(sal) from emp;

select min(sal) from emp;

select avg(sal) from emp;

-- 使用limit子句限制返回的行数

select * from emp limit 3;

第 2 节 where 子句

WHERE子句紧随FROM子句,使用WHERE子句,过滤不满足条件的数据;

where 子句中不能使用列的别名;

select * from emp where sal > 2000;

where子句中会涉及到较多的比较运算 和 逻辑运算;

比较运算符

官方文档:https://cwiki.apache.org/confluence/display/Hive/LanguageManual+UDF

| 比较运算符 | 描述 |

|---|---|

=、==、<=> | 等于 |

| <>、!= | 不等于 |

| <、<=、 >、>= | 大于等于、小于等于 |

| is [not] null | 如果A等于NULL,则返回TRUE,反之返回FALSE。使用NOT关键字结果相反。 |

| in(value1,value2, …) | 匹配列表中的值 |

| LIKE | 简单正则表达式,也称通配符模式。‘x%’ 表示必须以字母 ‘x’ 开头;’%x’表示必须以字母’x’结尾;’%x%‘表示包含有字母’x’,可以位于字符串任意位置。使用NOT关键字结果相反。% 代表匹配零个或多个字符(任意个字符);_ 代表匹配一个字符。 |

| [NOT] BETWEEN … AND … | 范围的判断,使用NOT关键字结果相反。 |

| RLIKE、REGEXP | 基于java的正则表达式,匹配返回TRUE,反之返回FALSE。匹配使用的是JDK中的正则表达式接口实现的,因为正则也依据其中的规则。例如,正则表达式必须和整个字符串A相匹配,而不是只需与其字符串匹配。 |

备注:通常情况下NULL参与运算,返回值为NULL;NULL<=>NULL的结果为true

逻辑运算符

就是我们所熟悉的:and、or、not

-- 比较运算符,null参与运算

select null=null;

select null==null;

select null<=>null;

-- 使用 is null 判空

select * from emp where comm is null;

-- 使用 in

select * from emp where deptno in (20, 30);

-- 使用 between ... and ...

select * from emp where sal between 1000 and 2000;

-- 使用 like

select ename, sal from emp where ename like '%L%';

-- 使用 rlike。正则表达式,名字以A或S开头

select ename, sal from emp where ename rlike '^(A|S).*';

第 3 节 group by子句

GROUP BY语句通常与聚组函数一起使用,按照一个或多个列对数据进行分组,对每个组进行聚合操作。

-- 计算emp表每个部门的平均工资

select deptno, avg(sal)

from emp

group by deptno;

-- 计算emp每个部门中每个岗位的最高薪水

select deptno, job, max(sal)

from emp

group by deptno, job;

- where子句针对表中的数据发挥作用;having针对查询结果(聚组以后的结果)发挥作用

- where子句不能有分组函数;having子句可以有分组函数

- having只用于group by分组统计之后

-- 求每个部门的平均薪水大于2000的部门

select deptno, avg(sal)

from emp

group by deptno

having avg(sal) > 2000;

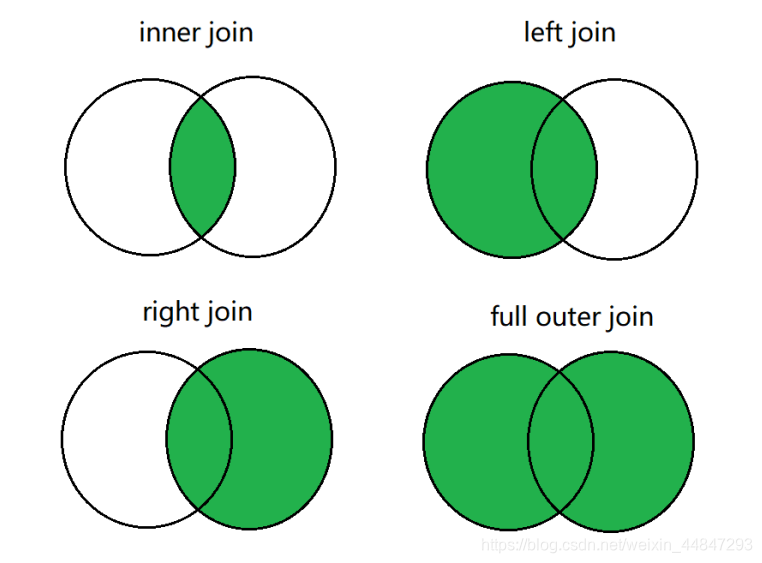

第 4 节 表链接

Hive支持通常的SQL JOIN语句。默认情况下,仅支持等值连接,不支持非等值连接。

JOIN 语句中经常会使用表的别名。使用别名可以简化SQL语句的编写,使用表名前缀可以提高SQL的解析效率。

连接查询操作分为两大类:内连接和外连接,而外连接可进一步细分为三种类型:

1. 内连接: [inner] join

2. 外连接 (outer join)

- 左外连接。 left [outer] join,左表的数据全部显示

- 右外连接。 right [outer] join,右表的数据全部显示

- 全外连接。 full [outer] join,两张表的数据都显示

案例演示:

-- 准备数据

-- u1.txt数据:

1,a

2,b

3,c

4,d

5,e

6,f

-- u2.txt数据:

4,d

5,e

6,f

7,g

8,h

9,i

create table if not exists u1(

id int,

name string)

row format delimited fields terminated by ',';

create table if not exists u2(

id int,

name string)

row format delimited fields terminated by ',';

load data local inpath '/home/hadoop/data/u1.txt' into table u1;

load data local inpath '/home/hadoop/data/u2.txt' into table u2;

-- 内连接

select * from u1 join u2 on u1.id = u2.id;

-- 左外连接

select * from u1 left join u2 on u1.id = u2.id;

-- 右外连接

select * from u1 right join u2 on u1.id = u2.id;

-- 全外连接

select * from u1 full join u2 on u1.id = u2.id;

多表连接

连接 n张表,至少需要 n-1 个连接条件。例如:连接四张表,至少需要三个连接条件。

多表连接查询,查询老师对应的课程,以及对应的分数,对应的学生:

select *

from techer t

left join course c on t.t_id = c.t_id

left join score s on s.c_id = c.c_id

left join student stu on s.s_id = stu.s_id;

Hive总是按照从左到右的顺序执行,Hive会对每对 JOIN 连接对象启动一个MapReduce 任务。

上面的例子中会首先启动一个 MapReduce job 对表 t 和表 c 进行连接操作;然后再启动一个 MapReduce job 将第一个 MapReduce job 的输出和表 s 进行连接操作;然后再继续直到全部操作;

笛卡儿积

满足以下条件将会产生笛卡尔集:

-

没有连接条件

-

连接条件无效

-

所有表中的所有行互相连接

如果表A、B分别有M、N条数据,其笛卡尔积的结果将有 M*N 条数据;缺省条件下hive不支持笛卡尔积运算;

set hive.strict.checks.cartesian.product=false;

select * from u1, u2;

第 5 节 排序子句【重点】

全局排序(order by)

order by 子句出现在select语句的结尾;

order by子句对最终的结果进行排序;

默认使用升序(ASC);可以使用DESC,跟在字段名之后表示降序;

ORDER BY执行全局排序,只有一个reduce;

-- 普通排序

select * from emp order by deptno;

-- 按别名排序

select empno, ename, job, mgr, sal + nvl(comm, 0) salcomm, deptno

from emp

order by salcomm desc;

-- 多列排序

select empno, ename, job, mgr, sal + nvl(comm, 0) salcomm, deptno

from emp

order by deptno, salcomm desc;

-- 排序字段要出现在select子句中。以下语句无法执行(因为select子句中缺少 deptno):

select empno, ename, job, mgr, sal + nvl(comm, 0) salcomm

from emp

order by deptno, salcomm desc;

每个MR内部排序(sort by)

对于大规模数据而言order by效率低;

在很多业务场景,我们并不需要全局有序的数据,此时可以使用sort by;

sort by为每个reduce产生一个排序文件,在reduce内部进行排序,得到局部有序的结果;

-- 设置reduce个数

set mapreduce.job.reduces=2;

-- 按照工资降序查看员工信息

select * from emp sort by sal desc;

-- 将查询结果导入到文件中(按照工资降序)。生成两个输出文件,每个文件内部数据按 工资降序排列

insert overwrite local directory '/home/hadoop/output/sortsal'

select * from emp sort by sal desc;

分区排序(distribute by)

distribute by 将特定的行发送到特定的reducer中,便于后继的聚合 与 排序操作;

distribute by 类似于MR中的分区操作,可以结合sort by操作,使分区数据有序;

distribute by 要写在sort by之前;

-- 启动2个reducer task;先按 deptno 分区,在分区内按 sal+comm 排序

set mapreduce.job.reduces=2;

-- 将结果输出到文件,观察输出结果

insert overwrite local directory '/home/hadoop/output/distBy'

select empno, ename, job, deptno, sal + nvl(comm, 0) salcomm

from emp

distribute by deptno

sort by salcomm desc;

-- 上例中,数据被分到了统一区,看不出分区的结果

-- 将数据分到3个区中,每个分区都有数据

set mapreduce.job.reduces=3;

insert overwrite local directory '/home/hadoop/output/distBy1'

select empno, ename, job, deptno, sal + nvl(comm, 0) salcomm

from emp

distribute by deptno

sort by salcomm desc;

Cluster By

当distribute by 与 sort by是同一个字段时,可使用cluster by简化语法;

cluster by 只能是升序,不能指定排序规则;

-- 语法上是等价的

select * from emp distribute by deptno sort by deptno;

select * from emp cluster by deptno;

排序小结:

-

order by。执行全局排序,效率低。生产环境中慎用

-

sort by。使数据局部有序(在reduce内部有序)

-

distribute by。按照指定的条件将数据分组,常与sort by联用,使数据局部有序

-

cluster by。当distribute by 与 sort by是同一个字段时,可使用cluster by简化语法

函数

Hive内置函数:https://cwiki.apache.org/confluence/display/Hive/LanguageManual+UDF#LanguageManualUDF-Built-inFunctions

第 1 节 系统内置函数

查看系统函数

-- 查看系统自带函数

show functions;

-- 显示自带函数的用法

desc function upper;

desc function extended upper;

日期函数【重要】

-- 当前前日期

select current_date;

select unix_timestamp();

-- 建议使用current_timestamp,有没有括号都可以

select current_timestamp();

-- 时间戳转日期

select from_unixtime(1505456567);

select from_unixtime(1505456567, 'yyyyMMdd');

select from_unixtime(1505456567, 'yyyy-MM-dd HH:mm:ss');

-- 日期转时间戳

select unix_timestamp('2019-09-15 14:23:00');

-- 计算时间差

select datediff('2020-04-18','2019-11-21');

select datediff('2019-11-21', '2020-04-18');

-- 查询当月第几天

select dayofmonth(current_date);

-- 计算月末:

select last_day(current_date);

-- 当月第1天:

select date_sub(current_date, dayofmonth(current_date)-1)

-- 下个月第1天:

select add_months(date_sub(current_date,

dayofmonth(current_date)-1), 1)

-- 字符串转时间(字符串必须为:yyyy-MM-dd格式)

select to_date('2020-01-01');

select to_date('2020-01-01 12:12:12');

-- 日期、时间戳、字符串类型格式化输出标准时间格式

select date_format(current_timestamp(), 'yyyy-MM-dd HH:mm:ss');

select date_format(current_date(), 'yyyyMMdd');

select date_format('2020-06-01', 'yyyy-MM-dd HH:mm:ss');

-- 计算emp表中,每个人的工龄

select *, round(datediff(current_date, hiredate)/365,1) workingyears from emp;

字符串函数

-- 转小写。lower

select lower("HELLO WORLD");

-- 转大写。upper

select lower(ename), ename from emp;

-- 求字符串长度。length

select length(ename), ename from emp;

-- 字符串拼接。 concat / ||

select empno || " " ||ename idname from emp;

select concat(empno, " " ,ename) idname from emp;

-- 指定分隔符。concat_ws(separator, [string | array(string)]+)

SELECT concat_ws('.', 'www', array('lagou', 'com'));

select concat_ws(" ", ename, job) from emp;

-- 求子串。substr

SELECT substr('www.lagou.com', 5);

SELECT substr('www.lagou.com', -5);

SELECT substr('www.lagou.com', 5, 5);

-- 字符串切分。split,注意 '.' 要转义

select split("www.lagou.com", "\\.");

数字函数

-- 四舍五入。round

select round(314.15926);

select round(314.15926, 2);

select round(314.15926, -2);

-- 向上取整。ceil

select ceil(3.1415926);

-- 向下取整。floor

select floor(3.1415926);

-- 其他数学函数包括:绝对值、平方、开方、对数运算、三角运算等

条件函数【重要】

-- if (boolean testCondition, T valueTrue, T valueFalseOrNull)

select sal, if (sal<1500, 1, if (sal < 3000, 2, 3)) from emp;

-- CASE WHEN a THEN b [WHEN c THEN d]* [ELSE e] END

-- 将emp表的员工工资等级分类:0-1500、1500-3000、3000以上

select sal, if (sal<=1500, 1, if (sal <= 3000, 2, 3)) from emp;

-- CASE WHEN a THEN b [WHEN c THEN d]* [ELSE e] END

-- 复杂条件用 case when 更直观

select sal, case when sal<=1500 then 1

when sal<=3000 then 2

else 3 end sallevel

from emp;

-- 以下语句等价

select ename, deptno,

case deptno when 10 then 'accounting'

when 20 then 'research'

when 30 then 'sales'

else 'unknown' end deptname

from emp;

select ename, deptno,

case when deptno=10 then 'accounting'

when deptno=20 then 'research'

when deptno=30 then 'sales'

else 'unknown' end deptname

from emp;

-- COALESCE(T v1, T v2, ...)。返回参数中的第一个非空值;如果所有值都为 NULL,那么返回NULL

select sal, coalesce(comm, 0) from emp;

-- isnull(a) isnotnull(a)

select * from emp where isnull(comm);

select * from emp where isnotnull(comm);

-- nvl(T value, T default_value)

select empno, ename, job, mgr, hiredate, deptno, sal + nvl(comm,0) sumsal from emp;

-- nullif(x, y) 相等为空,否则为a

SELECT nullif("b", "b"), nullif("b", "a");

UDTF 函数【重要】

UDTF : User Defined Table-Generating Functions。用户定义表生成函数,一行输入,多行输出。

-- explode,炸裂函数

-- 就是将一行中复杂的 array 或者 map 结构拆分成多行

select explode(array('A','B','C')) as col;

select explode(map('a', 8, 'b', 88, 'c', 888));

-- UDTF's are not supported outside the SELECT clause, nor nested in expressions

-- SELECT pageid, explode(adid_list) AS myCol... is not supported

-- SELECT explode(explode(adid_list)) AS myCol... is not supported

-- lateral view 常与 表生成函数explode结合使用

-- lateral view 语法:

lateralView: LATERAL VIEW udtf(expression) tableAlias AS columnAlias (',' columnAlias)* fromClause: FROM baseTable (lateralView)*

-- lateral view 的基本使用

with t1 as (

select 'OK' cola, split('www.lagou.com', '\\.') colb

)

select cola, colc

from t1

lateral view explode(colb) t2 as colc;

-- SQL

select uid, tag

from market

lateral view explode(split(tags, ",")) t2 as tag;

UDTF 案例1:

-- 数据(uid tags):

1 1,2,3

2 2,3

3 1,2

--编写sql,实现如下结果:

1 1

1 2

1 3

2 2

2 3

3 1

3 2

-- 建表加载数据

create table market(

uid int,

tags string

)

row format delimited fields terminated by '\t';

load data local inpath '/hivedata/market.txt' into table market;

UDTF 案例2:

-- 数据准备

lisi|Chinese:90,Math:80,English:70

wangwu|Chinese:88,Math:90,English:96

maliu|Chinese:99,Math:65,English:60

-- 创建表

create table studscore(

name string

,score map<String,string>)

row format delimited

fields terminated by '|'

collection items terminated by ','

map keys terminated by ':';

-- 加载数据

load data local inpath '/home/hadoop/data/score.dat' overwrite into table studscore;

-- 需求:找到每个学员的最好成绩

-- 第一步,使用 explode 函数将map结构拆分为多行

select explode(score) as (subject, socre) from studscore;

-- 但是这里缺少了学员姓名,加上学员姓名后出错。下面的语句有是错的

select name, explode(score) as (subject, socre) from studscore;

-- 第二步:explode常与 lateral view 函数联用,这两个函数结合在一起能关联其 他字段

select name, subject, score1 as score from studscore

lateral view explode(score) t1 as subject, score1;

-- 第三步:找到每个学员的最好成绩

select name, max(mark) maxscore

from (select name, subject, mark

from studscore lateral view explode(score) t1 as

subject, mark) t1

group by name;

with tmp as (

select name, subject, mark

from studscore lateral view explode(score) t1 as subject, mark

)

select name, max(mark) maxscore

from tmp

group by name;

小结:

-

将一行数据转换成多行数据,可以用于array和map类型的数据;

-

lateral view 与 explode 联用,解决 UDTF 不能添加额外列的问题

第 2 节 窗口函数【重要】

窗口函数又名开窗函数,属于分析函数的一种。用于解决复杂报表统计需求的功能强大的函数,很多场景都需要用到。窗口函数用于计算基于组的某种聚合值,它和聚合函数的不同之处是:对于每个组返回多行,而聚合函数对于每个组只返回一行。

窗口函数指定了分析函数工作的数据窗口大小,这个数据窗口大小可能会随着行的变化而变化。

over 关键字

使用窗口函数之前一般要要通过over()进行开窗

-- 查询emp表工资总和

select sum(sal) from emp;

-- 不使用窗口函数,有语法错误

select ename, sal, sum(sal) salsum from emp;

-- 使用窗口函数,查询员工姓名、薪水、薪水总和

select ename, sal, sum(sal) over() salsum, concat(round(sal / sum(sal) over()*100, 1) || '%') ratiosal from emp;

注意:窗口函数是针对每一行数据的;如果over中没有参数,默认的是全部结果集;

partition by子句

在over窗口中进行分区,对某一列进行分区统计,窗口的大小就是分区的大小

-- 查询员工姓名、薪水、部门薪水总和

select ename, sal, sum(sal) over(partition by deptno) salsum from emp;

order by 子句

order by 子句对输入的数据进行排序

-- 增加了order by子句;sum:从分组的第一行到当前行求和

select ename, sal, deptno, sum(sal) over(partition by deptno order by sal) salsum from emp;

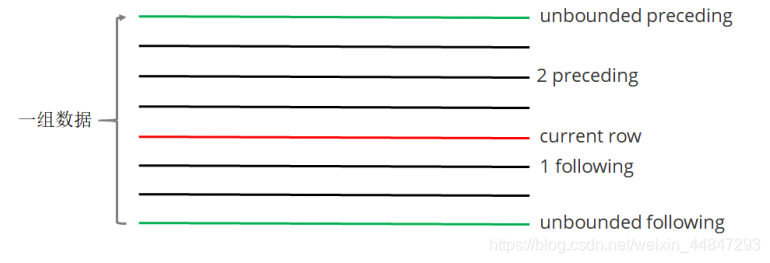

window子句

rows between ... and ...

如果要对窗口的结果做更细粒度的划分,使用window子句,有如下的几个选

项:!clear

-

unbounded preceding。组内第一行数据

-

n preceding。组内当前行的前n行数据

-

current row。当前行数据

-

n following。组内当前行的后n行数据

-

unbounded following。组内最后一行数据

-- rows between ... and ... 子句

-- 等价。组内,第一行到当前行的和

select ename, sal, deptno, sum(sal) over(partition by deptno order by ename) from emp;

select ename, sal, deptno,

sum(sal) over(partition by deptno order by ename

rows between unbounded preceding and current

row

)

from emp;

-- 组内,第一行到最后一行的和

select ename, sal, deptno,

sum(sal) over(partition by deptno order by ename

rows between unbounded preceding and

unbounded following

)

from emp;

-- 组内,前一行 + 当前行 +后一行

select ename, sal, deptno,

sum(sal) over(partition by deptno order by ename

rows between 1 preceding and 1 following

)

from emp;

排名函数

都是从1开始,生成数据项在分组中的排名。

-

row_number()。排名顺序增加不会重复;如1、2、3、4、… …

-

RANK()。 排名相等会在名次中留下空位;如1、2、2、4、5、… …

-

DENSE_RANK()。 排名相等会在名次中不会留下空位 ;如1、2、2、3、4、… …

-- row_number / rank / dense_rank

100 1 1 1

100 2 1 1

100 3 1 1

99 4 4 2

98 5 5 3

98 6 5 3

97 7 7 4

-- 数据准备

class1 s01 100

class1 s03 100

class1 s05 100

class1 s07 99

class1 s09 98

class1 s02 98

class1 s04 97

class2 s21 100

class2 s24 99

class2 s27 99

class2 s22 98

class2 s25 98

class2 s28 97

class2 s26 96

-- 创建表加载数据

create table t2(

cname string,

sname string,

score int

) row format delimited fields terminated by '\t';

load data local inpath '/home/hadoop/data/t2.dat' into table t2;

-- 按照班级,使用3种方式对成绩进行排名

select cname, sname, score,

row_number() over (partition by cname order by score desc)

rank1,

rank() over (partition by cname order by score desc)

rank2,

dense_rank() over (partition by cname order by score desc)

rank3

from t2;

-- 求每个班级前3名的学员--前3名的定义是什么--假设使用dense_rank

select cname, sname, score, rank

from (select cname, sname, score,

dense_rank() over (partition by cname order by

score desc) rank

from t2) tmp

where rank <= 3;

序列函数

-

lag。返回当前数据行的上一行数据

-

lead。返回当前数据行的下一行数据

-

first_value。取分组内排序后,截止到当前行,第一个值

-

last_value。分组内排序后,截止到当前行,最后一个值

-

ntile。将分组的数据按照顺序切分成n片,返回当前切片值

-- 测试数据 userpv.dat。cid ctime pv

cookie1,2019-04-10,1

cookie1,2019-04-11,5

cookie1,2019-04-12,7

cookie1,2019-04-13,3

cookie1,2019-04-14,2

cookie1,2019-04-15,4

cookie1,2019-04-16,4

cookie2,2019-04-10,2

cookie2,2019-04-11,3

cookie2,2019-04-12,5

cookie2,2019-04-13,6

cookie2,2019-04-14,3

cookie2,2019-04-15,9

cookie2,2019-04-16,7

-- 建表语句

create table userpv(

cid string,

ctime date,

pv int

)

row format delimited fields terminated by ",";

-- 加载数据

Load data local inpath '/home/hadoop/data/userpv.dat' into table

userpv;

-- lag。返回当前数据行的上一行数据

-- lead。功能上与lag类似

select cid, ctime, pv,

lag(pv) over(partition by cid order by ctime) lagpv,

lead(pv) over(partition by cid order by ctime) leadpv

from userpv;

-- first_value / last_value

select cid, ctime, pv,

first_value(pv) over (partition by cid order by ctime rows

between unbounded preceding and unbounded following) as firstpv,

last_value(pv) over (partition by cid order by ctime rows

between unbounded preceding and unbounded following) as lastpv

from userpv;

-- ntile。按照cid进行分组,每组数据分成2份

select cid, ctime, pv,

ntile(2) over(partition by cid order by ctime) ntile

from userpv;

sql面试题

连续7天登录的用户

-- 数据。uid dt status(1 正常登录,0 异常)

1 2019-07-11 1

1 2019-07-12 1

1 2019-07-13 1

1 2019-07-14 1

1 2019-07-15 1

1 2019-07-16 1

1 2019-07-17 1

1 2019-07-18 1

2 2019-07-11 1

2 2019-07-12 1

2 2019-07-13 0

2 2019-07-14 1

2 2019-07-15 1

2 2019-07-16 0

2 2019-07-17 1

2 2019-07-18 0

3 2019-07-11 1

3 2019-07-12 1

3 2019-07-13 1

3 2019-07-14 0

3 2019-07-15 1

3 2019-07-16 1

3 2019-07-17 1

3 2019-07-18 1

-- 建表语句

create table ulogin(

uid int,

dt date,

status int

)

row format delimited fields terminated by ' ';

-- 加载数据

load data local inpath '/home/hadoop/data/ulogin.dat' into table ulogin;

-- 连续值的求解,面试中常见的问题。这也是同一类,基本都可按照以下思路进行

-- 1、使用 row_number 在组内给数据编号(rownum)

-- 2、某个值 - rownum = gid,得到结果可以作为后面分组计算的依据

-- 3、根据求得的gid,作为分组条件,求最终结果

select uid, dt,

date_sub(dt, row_number() over (partition by uid order by

dt)) gid

from ulogin

where status=1;

select uid, count(*) countlogin

from (select uid, dt,

date_sub(dt, row_number() over (partition by uid

order by dt)) gid

from ulogin

where status=1) t1

group by uid, gid

having countlogin >= 7;

编写sql语句实现每班前三名,分数一样并列,同时求出前三名按名次排序的分差

-- 数据。sid class score

1 1901 90

2 1901 90

3 1901 83

4 1901 60

5 1902 66

6 1902 23

7 1902 99

8 1902 67

9 1902 87

-- 待求结果数据如下:

class score rank lagscore

1901 90 1 0

1901 90 1 0

1901 83 2 -7

1901 60 3 -23

1902 99 1 0

1902 87 2 -12

1902 67 3 -20

-- 建表语句

create table stu(

sno int,

class string,

score int

)row format delimited fields terminated by ' ';

-- 加载数据

load data local inpath '/home/hadoop/data/stu.dat' into table stu;

-- 求解思路:

-- 1、上排名函数,分数一样并列,所以用dense_rank

-- 2、将上一行数据下移,相减即得到分数差

-- 3、处理 NULL

with tmp as (

select sno, class, score,

dense_rank() over (partition by class order by score desc)

as rank

from stu

)

select class, score, rank,

nvl(score - lag(score) over (partition by class order by

score desc), 0) lagscore

from tmp

where rank<=3;

行 <=> 列

-- 数据:id course

1 java

1 hadoop

1 hive

1 hbase

2 java

2 hive

2 spark

2 flink

3 java

3 hadoop

3 hive

3 kafka

-- 建表加载数据

create table rowline1(

id string,

course string

)row format delimited fields terminated by ' ';

load data local inpath '/root/data/data1.dat' into table rowline1;

-- 编写sql,得到结果如下(1表示选修,0表示未选修)

id java hadoop hive hbase spark flink kafka

1 1 1 1 1 0 0 0

2 1 0 1 0 1 1 0

3 1 1 1 0 0 0 1

-- 使用case when;group by + sum

select id,

sum(case when course="java" then 1 else 0 end) as java,

sum(case when course="hadoop" then 1 else 0 end) as hadoop,

sum(case when course="hive" then 1 else 0 end) as hive,

sum(case when course="hbase" then 1 else 0 end) as hbase,

sum(case when course="spark" then 1 else 0 end) as spark,

sum(case when course="flink" then 1 else 0 end) as flink,

sum(case when course="kafka" then 1 else 0 end) as kafka

from rowline1

group by id;

-- 数据。id1 id2 flag

a b 2

a b 1

a b 3

c d 6

c d 8

c d 8

-- 编写sql实现如下结果

id1 id2 flag

a b 2|1|3

c d 6|8

-- 创建表 & 加载数据

create table rowline2(

id1 string,

id2 string,

flag int

) row format delimited fields terminated by ' ';

load data local inpath '/root/data/data2.dat' into table rowline2;

-- 第一步 将元素聚拢

select id1, id2, collect_set(flag) flag from rowline2 group by

id1, id2;

select id1, id2, collect_list(flag) flag from rowline2 group by

id1, id2;

select id1, id2, sort_array(collect_set(flag)) flag from rowline2

group by id1, id2;

-- 第二步 将元素连接在一起

select id1, id2, concat_ws("|", collect_set(flag)) flag

from rowline2

group by id1, id2;

-- 这里报错,CONCAT_WS must be "string or array<string>"。加一个类型转

换即可

select id1, id2, concat_ws("|", collect_set(cast (flag as

string))) flag

from rowline2

group by id1, id2;

-- 创建表 rowline3

create table rowline3 as

select id1, id2, concat_ws("|", collect_set(cast (flag as

string))) flag

from rowline2

group by id1, id2;

-- 第一步:将复杂的数据展开

select explode(split(flag, "\\|")) flat from rowline3;

-- 第二步:lateral view 后与其他字段关联

select id1, id2, newflag

from rowline3 lateral view explode(split(flag, "\\|")) t1 as

newflag;

lateralView: LATERAL VIEW udtf(expression) tableAlias AS

columnAlias (',' columnAlias)*

fromClause: FROM baseTable (lateralView)*

小结:

case when + sum + group by

collect_set、collect_list、concat_ws

sort_array

explode + lateral view

第 3 节 自定义函数

当 Hive 提供的内置函数无法满足实际的业务处理需要时,可以考虑使用用户自定义函数进行扩展。用户自定义函数分为以下三类:

-

UDF(User Defined Function)。用户自定义函数,一进一出

-

UDAF(User Defined Aggregation Function)。用户自定义聚集函数,多进一出;类似于:count/max/min

-

UDTF(User Defined Table-Generating Functions)。用户自定义表生成函数,一进多出;类似于:explode

UDF开发:

-

继承org.apache.hadoop.hive.ql.exec.UDF

-

需要实现evaluate函数;evaluate函数支持重载

-

UDF必须要有返回类型,可以返回null,但是返回类型不能为void

UDF开发步骤

-

创建maven java 工程,添加依赖

-

开发java类继承UDF,实现evaluate 方法

-

将项目打包上传服务器

-

添加开发的jar包

-

设置函数与自定义函数关联

-

使用自定义函数

需求:扩展系统 nvl 函数功能:

nvl(ename, "OK"): ename==null => 返回第二个参数nvl

(ename, "OK"): ename==null or ename=="" or ename==" " => 返回第二个参数

1、创建maven java 工程,添加依赖

<!-- pom.xml 文件 -->

<dependencies>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>2.3.7</version>

</dependency>

</dependencies>

2、开发java类继承UDF,实现evaluate 方法

package cn.lagou.hive.udf;

import org.apache.hadoop.hive.ql.exec.UDF;

public class nvl extends UDF {

public Text evaluate(final Text t, final Text x) {

if (t == null || t.toString().trim().length()==0) {

return x;

}

return t;

}

}

3、将项目打包上传服务器

4、添加开发的jar包(在Hive命令行中)

add jar /home/hadoop/hiveudf.jar;

5、创建临时函数。指定类名一定要完整的路径,即包名加类名

create temporary function mynvl as "cn.lagou.hive.udf.nvl";

6、执行查询

-- 基本功能还有

select mynvl(comm, 0) from mydb.emp;

-- 测试扩充的功能

select mynvl("", "OK");

select mynvl(" ", "OK");

7、退出Hive命令行,再进入Hive命令行。执行步骤6的测试,发现函数失效。

备注:创建临时函数每次进入Hive命令行时,都必须执行以下语句,很不方便:

add jar /home/hadoop/hiveudf.jar;

create temporary function mynvl as "cn.lagou.hive.udf.nvl";

可创建永久函数:

1、将jar上传HDFS

hdfs dfs -put hiveudf.jar jar/

2、在Hive命令行中创建永久函数

create function mynvl1 as 'cn.lagou.hive.udf.nvl' using jar 'hdfs:/user/hadoop/jar/hiveudf.jar';

-- 查询所有的函数,发现 mynvl1 在列表中

show functions;

3、退出Hive,再进入,执行测试

-- 基本功能还有

select mynvl(comm, 0) from mydb.emp;

-- 测试扩充的功能

select mynvl("", "OK");

select mynvl(" ", "OK");

4、删除永久函数,并检查

drop function mynvl1;

show functions;

HQL-DML命令

数据操纵语言DML(Data Manipulation Language),DML主要有三种形式:插入(INSERT)、删除(DELETE)、更新(UPDATE)。

事务(transaction)是一组单元化操作,这些操作要么都执行,要么都不执行,是一个不可分割的工作单元。

事务具有的四个要素:原子性(Atomicity)、一致性(Consistency)、隔离性(Isolation)、持久性(Durability),这四个基本要素通常称为ACID特性。

-

原子性。一个事务是一个不可再分割的工作单位,事务中的所有操作要么都发生,要么都不发生。

-

一致性。事务的一致性是指事务的执行不能破坏数据库数据的完整性和一致性,一个事务在执行之前和执行之后,数据库都必须处于一致性状态。

-

隔离性。在并发环境中,并发的事务是相互隔离的,一个事务的执行不能被其他事务干扰。即不同的事务并发操纵相同的数据时,每个事务都有各自完整的数据空间,即一个事务内部的操作及使用的数据对其他并发事务是隔离的,并发执行的各个事务之间不能互相干扰。

-

持久性。事务一旦提交,它对数据库中数据的改变就应该是永久性的。

第 1 节 Hive 事务

Hive从0.14版本开始支持事务 和 行级更新,但缺省是不支持的,需要一些附加的配置。要想支持行级insert、update、delete,需要配置Hive支持事务。

Hive事务的限制:

- Hive提供行级别的ACID语义

- BEGIN、COMMIT、ROLLBACK 暂时不支持,所有操作自动提交

- 目前只支持 ORC 的文件格式

- 默认事务是关闭的,需要设置开启

- 要是使用事务特性,表必须是分桶的

- 只能使用内部表

- 如果一个表用于ACID写入(INSERT、UPDATE、DELETE),必须在表中设置表属性 : “transactional=true”

- 必须使用事务管理器 org.apache.hadoop.hive.ql.lockmgr.DbTxnManager

- 目前支持快照级别的隔离。就是当一次数据查询时,会提供一个数据一致性的快照

- LOAD DATA语句目前在事务表中暂时不支持

HDFS是不支持文件的修改;并且当有数据追加到文件,HDFS不对读数据的用户提供一致性的。为了在HDFS上支持数据的更新:

- 表和分区的数据都被存在基本文件中(base files)

- 新的记录和更新,删除都存在增量文件中(delta files)

- 一个事务操作创建一系列的增量文件

- 在读取的时候,将基础文件和修改,删除合并,最后返回给查询

第 2 节 Hive 事务操作示例

-- 这些参数也可以设置在hive-site.xml中

SET hive.support.concurrency = true;

-- Hive 0.x and 1.x only

SET hive.enforce.bucketing = true;

SET hive.exec.dynamic.partition.mode = nonstrict;

SET hive.txn.manager =

org.apache.hadoop.hive.ql.lockmgr.DbTxnManager;

-- 创建表用于更新。满足条件:内部表、ORC格式、分桶、设置表属性

create table zxz_data(

name string,

nid int,

phone string,

ntime date)

clustered by(nid) into 5 buckets

stored as orc

tblproperties('transactional'='true');

-- 创建临时表,用于向分桶表插入数据

create table temp1(

name string,

nid int,

phone string,

ntime date)

row format delimited

fields terminated by ",";

-- 数据

name1,1,010-83596208,2020-01-01

name2,2,027-63277201,2020-01-02

name3,3,010-83596208,2020-01-03

name4,4,010-83596208,2020-01-04

name5,5,010-83596208,2020-01-05

-- 向临时表加载数据;向事务表中加载数据

load data local inpath '/home/hadoop/data/zxz_data.txt' overwrite

into table temp1;

insert into table zxz_data select * from temp1;

-- 检查数据和文件

select * from zxz_data;

dfs -ls /user/hive/warehouse/mydb.db/zxz_data ;

-- DML 操作

delete from zxz_data where nid = 3;

dfs -ls /user/hive/warehouse/mydb.db/zxz_data ;

insert into zxz_data values ("name3", 3, "010-83596208",

current_date); -- 不支持

insert into zxz_data values ("name3", 3, "010-83596208", "2020-

06-01"); -- 执行

insert into zxz_data select "name3", 3, "010-83596208",

current_date;

dfs -ls /user/hive/warehouse/mydb.db/zxz_data ;

insert into zxz_data values

("name6", 6, "010-83596208", "2020-06-02"),

("name7", 7, "010-83596208", "2020-06-03"),

("name8", 9, "010-83596208", "2020-06-05"),

("name9", 8, "010-83596208", "2020-06-06");

dfs -ls /user/hive/warehouse/mydb.db/zxz_data ;

update zxz_data set name=concat(name, "00") where nid>3;

dfs -ls /user/hive/warehouse/mydb.db/zxz_data ;

-- 分桶字段不能修改,下面的语句不能执行

-- Updating values of bucketing columns is not supported

update zxz_data set nid = nid + 1;