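Background: this is a data-cleaning program that joins multiple tables. It had always run fine, then suddenly failed with the following error: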

{"exception":"Illegal pattern component: XXX

org.apache.commons.lang3.time.FastDateFormat.parsePattern(FastDateFormat.java:577)

at org.apache.commons.lang3.time.FastDateFormat.init(FastDateFormat.java:444)

at org.apache.commons.lang3.time.FastDateFormat.<init>(FastDateFormat.java:437)

at org.apache.commons.lang3.time.FastDateFormat$1.createInstance(FastDateFormat.java:110)

at org.apache.commons.lang3.time.FastDateFormat$1.createInstance(FastDateFormat.java:109)

at org.apache.commons.lang3.time.FormatCache.getInstance(FormatCache.java:82)

at org.apache.commons.lang3.time.FastDateFormat.getInstance(FastDateFormat.java:205)

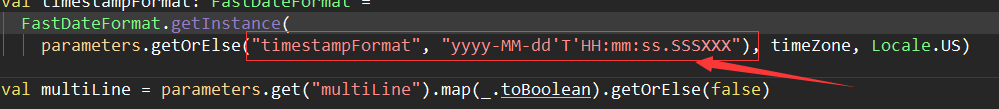

at org.apache.spark.sql.catalyst.json.JSONOptions.<init>(JSONOptions.scala:87)

at org.apache.spark.sql.catalyst.json.JSONOptions.<init>(JSONOptions.scala:44)

at org.apache.spark.sql.DataFrameReader.json(DataFrameReader.scala:453)

at org.apache.spark.sql.DataFrameReader.json(DataFrameReader.scala:439)

at services.DealFactoryService$$anonfun$dealFacoryInfo$2.apply(DealFactoryService.scala:67)

at services.DealFactoryService$$anonfun$dealFacoryInfo$2.apply(DealFactoryService.scala:29)

at scala.collection.immutable.List.foreach(List.scala:392)

at services.DealFactoryService.dealFacoryInfo(DealFactoryService.scala:29)

at services.DistributeService$$anonfun$hisTypeJob$1$$anonfun$apply$1.apply(DistributeService.scala:102)

at services.DistributeService$$anonfun$hisTypeJob$1$$anonfun$apply$1.apply(DistributeService.scala:99)

at scala.collection.immutable.List.foreach(List.scala:392)

at services.DistributeService$$anonfun$hisTypeJob$1.apply(DistributeService.scala:99)

at services.DistributeService$$anonfun$hisTypeJob$1.apply(DistributeService.scala:94)

at scala.collection.immutable.List.foreach(List.scala:392)

at services.DistributeService.hisTypeJob(DistributeService.scala:94)

at mlcfactory$.main(mlcfactory.scala:22)

at mlcfactory.main(mlcfactory.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:855)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:161)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:184)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:930)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:939)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

My first reaction was to search Baidu for the exception without thinking it through. I found the following article:

https://blog.csdn.net/lds_include/article/details/89329139

The fix it proposes is to override the default timestampFormat through an option, like this:

res.write.option("timestampFormat", "yyyy/MM/dd HH:mm:ss ZZ").mode("append").json("C://out")

Compare that with my own code:

ss.read.json(c.toDS()).createOrReplaceTempView(output)

So I blindly added the option .option("timestampFormat", "yyyy/MM/dd HH:mm:ss ZZ").

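But in production the job then failed with a different error: org.elasticsearch.hadoop.serialization.EsHadoopSerializationException: org.codehaus.jackson.JsonParseException: Unexpected character ('?' (code 239)): was expecting comma to separate ARRAY entries.

After some analysis, I concluded that ss.read.json was failing while parsing timestamps. The source data contains all kinds of timestamp formats, so passing one fixed format as above cannot work.

However, the program ran fine locally and only failed in production. I looked through the source for the code that throws the error and could not find it. After comparing the source of several commons-lang3 versions, I found that

the FastDateFormat.parsePattern method exists only in version 3.1 and earlier, as the screenshot shows.

Version 3.1 has it.

Version 3.2 does not.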

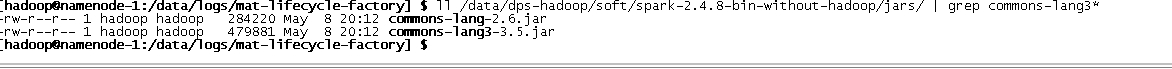

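That made me realize the problem was a jar conflict, so I checked the Spark jars directory in production for an older version of the library.

I found no commons-lang3 jar at version 3.1 or earlier.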
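A check that does not depend on jar file names is to ask the JVM where it loaded a class from. The sketch below is an illustration, not part of the original program: ClassOrigin and its locate method are hypothetical names, and it relies only on the standard Class.getProtectionDomain().getCodeSource() call. It is only meaningful when run on the same classpath as the failing job.

```java
import java.net.URL;
import java.security.CodeSource;

/** Hypothetical helper: reports where the JVM loaded a class from. */
final class ClassOrigin {

    /**
     * Returns the location (usually a jar URL) of the named class, or a
     * short marker when the class is missing or has no code source.
     */
    static String locate(String className) {
        Class<?> cls;
        try {
            cls = Class.forName(className);
        } catch (ClassNotFoundException e) {
            return "(not on classpath)";
        }
        CodeSource source = cls.getProtectionDomain().getCodeSource();
        // Classes from the bootstrap loader (e.g. java.lang.String) have no code source.
        URL location = (source == null) ? null : source.getLocation();
        return (location == null) ? "(bootstrap or unknown)" : location.toString();
    }

    public static void main(String[] args) {
        // Default class name is taken from the stack trace; pass another as args[0].
        String name = args.length > 0
                ? args[0]
                : "org.apache.commons.lang3.time.FastDateFormat";
        System.out.println(name + " <- " + locate(name));
    }
}
```

In Scala the same lookup can be written inline as classOf[org.apache.commons.lang3.time.FastDateFormat].getProtectionDomain.getCodeSource.getLocation.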

I then scanned the whole disk and disabled the older-version jars I found, but that made no difference.
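A search by file name only finds jars named after the library; it cannot see a copy of the classes bundled inside a jar with another name. Looking inside each jar for the class entry covers that case. This is a minimal sketch, assuming a hypothetical JarClassScanner helper built on the standard java.util.jar.JarFile; the directory to scan is whichever one holds the cluster's jars.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.JarFile;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical helper: finds every jar under a directory that contains a class. */
final class JarClassScanner {

    static List<Path> jarsContaining(Path root, String className) {
        // A class a.b.C is stored in a jar as the entry a/b/C.class.
        String entry = className.replace('.', '/') + ".class";

        List<Path> jars;
        try (Stream<Path> paths = Files.walk(root)) {
            jars = paths
                    .filter(p -> p.toString().endsWith(".jar") && Files.isRegularFile(p))
                    .collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }

        List<Path> hits = new ArrayList<>();
        for (Path jar : jars) {
            try (JarFile jarFile = new JarFile(jar.toFile())) {
                if (jarFile.getEntry(entry) != null) {
                    hits.add(jar);
                }
            } catch (IOException unreadable) {
                // Skip files that are not readable archives.
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // args[0]: directory to scan (defaults to the current directory).
        // args[1]: class to look for (defaults to the class from the stack trace).
        Path root = Paths.get(args.length > 0 ? args[0] : ".");
        String name = args.length > 1
                ? args[1]
                : "org.apache.commons.lang3.time.FastDateFormat";
        for (Path jar : jarsContaining(root, name)) {
            System.out.println(jar);
        }
    }
}
```

Every jar the scan prints is a candidate source for the class, whatever the jar is called.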

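Unable to find the cause right away, I looked for a way to force Spark to use my own jar.

I followed this article: https://blog.csdn.net/adorechen/article/details/90722933

spark.{driver/executor}.userClassPathFirst

This setting makes Spark prefer dependencies on the user classpath. Usually the dependency shipped with the system is an older version and you need the one you provide yourself; according to the article, this option resolves about 90% of such problems.

spark.{driver/executor}.extraClassPath

Sometimes two different versions of the same class get loaded into the ClassLoader and an error occurs at link time. For this kind of conflict you have to use extraClassPath to pin the version by hand. Dependencies given through this option take the highest priority. The drawback is that only one jar can be specified.

Note: use extraClassPath=jarName; no path is needed. For example:

--jars snappy-java-version.jar \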

--conf "spark.driver.extraClassPath=snappy-java-version.jar" \

--conf "spark.executor.extraClassPath=snappy-java-version.jar" \

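So I changed the submit script using the parameters above, but that triggered other jar conflicts, and I gave up on the idea. One parameter in that article did give me a hint, though.

Adding a diagnostic option to the spark-submit command prints which jar each class is loaded from:

--driver-java-options -verbose:class

Running with it produced the following output: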

[Loaded org.apache.spark.sql.catalyst.json.JSONOptions$$anonfun$29 from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/spark-catalyst_2.11-2.4.8.jar]

// Note the lines below

[Loaded org.apache.commons.lang3.time.FastDateFormat from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/hive-exec-1.2.1.jar]

[Loaded org.apache.commons.lang3.time.FormatCache from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/hive-exec-1.2.1.jar]

[Loaded org.apache.commons.lang3.time.FastDateFormat$1 from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/hive-exec-1.2.1.jar]

[Loaded org.apache.commons.lang3.time.FastDateFormat$Rule from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/hive-exec-1.2.1.jar]

[Loaded org.apache.commons.lang3.time.FastDateFormat$NumberRule from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/hive-exec-1.2.1.jar]

[Loaded org.apache.commons.lang3.time.FastDateFormat$TextField from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/hive-exec-1.2.1.jar]

[Loaded org.apache.commons.lang3.time.FastDateFormat$TwoDigitYearField from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/hive-exec-1.2.1.jar]

[Loaded org.apache.commons.lang3.time.FormatCache$MultipartKey from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/hive-exec-1.2.1.jar]

[Loaded org.apache.commons.lang3.time.FastDateFormat$PaddedNumberField from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/hive-exec-1.2.1.jar]

[Loaded org.apache.commons.lang3.time.FastDateFormat$CharacterLiteral from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/hive-exec-1.2.1.jar]

[Loaded org.apache.commons.lang3.time.FastDateFormat$TwoDigitMonthField from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/hive-exec-1.2.1.jar]

[Loaded org.apache.commons.lang3.time.FastDateFormat$TwoDigitNumberField from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/hive-exec-1.2.1.jar]

[Loaded org.apache.spark.sql.catalyst.json.JSONOptions$$anonfun$30 from file:/data/dps-hadoop/soft/spark-2.4.8-bin-without-hadoop/jars/spark-catalyst_2.11-2.4.8.jar]

[Loaded utils.LogUtil$ from file:/data/webroot/mat-lifecycle-factory/20210716125258-mat-lifecycle-factory-47/mat-lifecycle-factory.jar]

[Loaded utils.LogUtil$$anonfun$getErrorString$1 from file:/data/webroot/mat-lifecycle-factory/20210716125258-mat-lifecycle-factory-47/mat-lifecycle-factory.jar]

[Loaded net.minidev.json.JSONAware from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

[Loaded net.minidev.json.JSONAwareEx from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

[Loaded net.minidev.json.JSONStreamAware from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

[Loaded net.minidev.json.JSONStreamAwareEx from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

[Loaded net.minidev.json.JSONObject from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

[Loaded net.minidev.json.JSONValue from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

[Loaded net.minidev.json.parser.ParseException from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

[Loaded net.minidev.json.parser.ContainerFactory from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

[Loaded net.minidev.json.parser.ContentHandler from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

[Loaded net.minidev.json.JSONStyle from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

[Loaded net.minidev.json.JStylerObj$MustProtect from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

[Loaded net.minidev.json.JStylerObj$MPAgressive from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

[Loaded net.minidev.json.JStylerObj$MPSimple from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

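[Loaded net.minidev.json.JStylerObj$StringProtector from file:/data/dps-hadoop/hadoop-2.10.1/share/hadoop/common/lib/json-smart-1.3.1.jar]

Part of the output is omitted. I found that the commons-lang3 classes were being loaded from the hive-exec-1.2.1 jar. That made me realize the Hive downgrade had brought in the old commons-lang3 classes, and with that the problem was resolved.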
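-verbose:class prints a line for every class the JVM loads, so the interesting lines are easy to miss. The sketch below filters output in the "[Loaded <class> from <source>]" format shown above and lists the distinct sources for one package. VerboseClassFilter is a hypothetical helper name, and newer JVMs print class-loading logs in a different format that this pattern would not match.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: summarizes -verbose:class output for one package. */
final class VerboseClassFilter {

    // Matches lines such as: [Loaded a.b.C from file:/path/to/some.jar]
    private static final Pattern LOADED =
            Pattern.compile("^\\[Loaded (\\S+) from (.+)\\]$");

    /** Returns the distinct sources of classes whose name starts with the prefix. */
    static Set<String> sourcesFor(List<String> lines, String packagePrefix) {
        Set<String> sources = new LinkedHashSet<>();
        for (String line : lines) {
            Matcher m = LOADED.matcher(line.trim());
            if (m.matches() && m.group(1).startsWith(packagePrefix)) {
                sources.add(m.group(2));
            }
        }
        return sources;
    }

    public static void main(String[] args) throws IOException {
        // Reads the captured driver output from standard input.
        String prefix = args.length > 0 ? args[0] : "org.apache.commons.lang3.";
        List<String> lines = new ArrayList<>();
        try (BufferedReader in = new BufferedReader(
                new InputStreamReader(System.in, StandardCharsets.UTF_8))) {
            String line;
            while ((line = in.readLine()) != null) {
                lines.add(line);
            }
        }
        for (String source : sourcesFor(lines, prefix)) {
            System.out.println(source);
        }
    }
}
```

If the filter prints more than one source for the same package, or a jar you did not expect, that jar is the place to look.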