1. Basic environment setup

(1) Static IP configuration

https://blog.csdn.net/weixin_40612128/article/details/119007776

(2) Hostname configuration

https://blog.csdn.net/weixin_40612128/article/details/119008039?spm=1001.2014.3001.5501

(3) JDK installation

https://blog.csdn.net/weixin_40612128/article/details/119007401?spm=1001.2014.3001.5501

(4) Firewall settings: disable the firewall

https://blog.csdn.net/weixin_40612128/article/details/107575374?spm=1001.2014.3001.5501

(5) Passwordless SSH login

https://blog.csdn.net/weixin_40612128/article/details/119008155?spm=1001.2014.3001.5501

2. Hadoop installation

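Hadoop 3.2.0 can be downloaded from Baidu Pan:

Link: https://pan.baidu.com/s/1_pReniVNytsMNTxVxpCFBg

Extraction code: 3p28

You can also download it yourself from the official website:

https://hadoop.apache.org/

Mirrors in China: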

http://mirror.bit.edu.cn/apache/

https://mirrors.tuna.tsinghua.edu.cn/apache

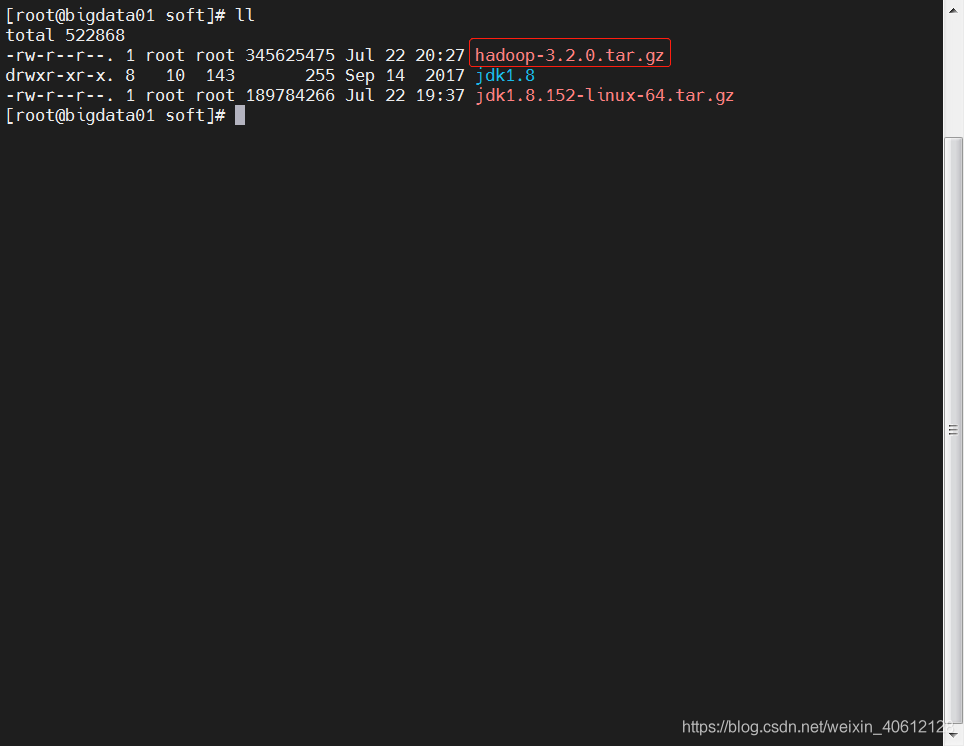
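Because the Baidu Pan copy is a third-party mirror, it is worth checking the archive against the SHA-512 digest that Apache publishes next to each release (hadoop-3.2.0.tar.gz.sha512). The sketch below uses a hypothetical helper, `verify_sha512`. It takes the expected digest as an argument instead of parsing the .sha512 file, because the layout of that file is not the same across releases.

```shell
#!/usr/bin/env bash
# verify_sha512 FILE EXPECTED_HEX (hypothetical helper, not part of Hadoop)
# Returns 0 if FILE's SHA-512 digest matches EXPECTED_HEX, 1 otherwise.
verify_sha512() {
  local file="$1" expected="$2" actual
  # sha512sum prints "<digest>  <file>"; keep only the digest column.
  actual=$(sha512sum "$file" | awk '{print $1}')
  # Normalize the expected digest: drop spaces/newlines, lowercase hex letters.
  expected=$(printf '%s' "$expected" | tr -d ' \n' | tr 'A-F' 'a-f')
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
    return 0
  fi
  echo "MISMATCH: $file" >&2
  return 1
}
```

Usage: `verify_sha512 /data/soft/hadoop-3.2.0.tar.gz "<digest copied from the .sha512 file>"`. A mismatch means the download is corrupted or altered and should not be extracted.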

(1) Upload and extract the package

Upload the package to the /data/soft directory:

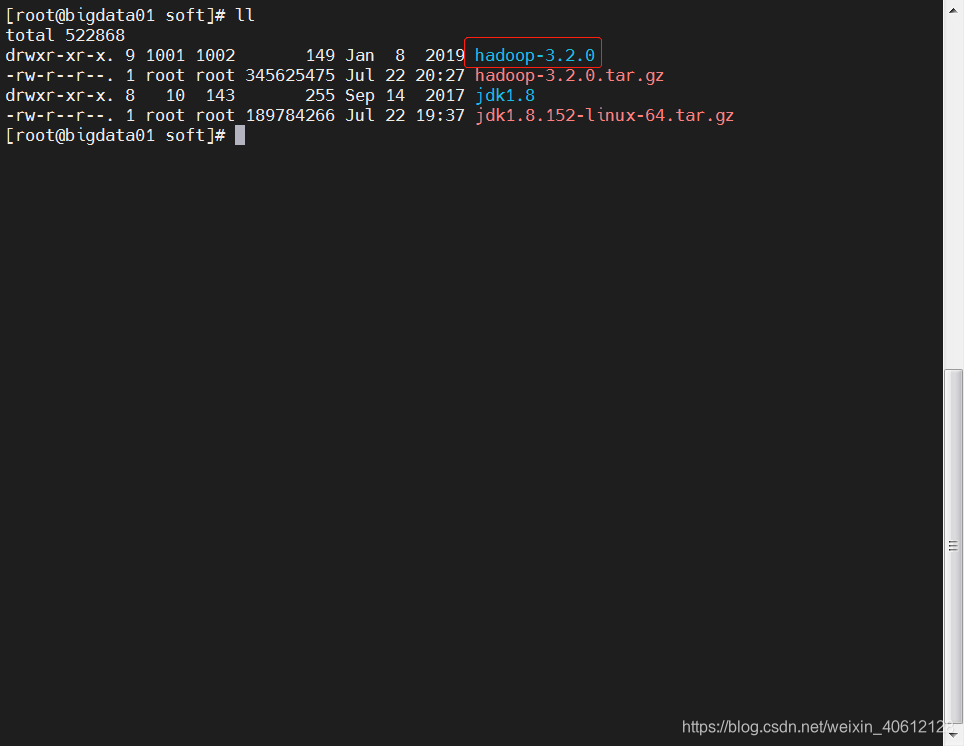

Then extract it there:

    cd /data/soft
    tar -zxvf hadoop-3.2.0.tar.gz

This creates the hadoop-3.2.0 directory under /data/soft.

(2) Configure environment variables

    vi /etc/profile

Add the following lines:

    export JAVA_HOME=/data/soft/jdk1.8
    export HADOOP_HOME=/data/soft/hadoop-3.2.0
    export PATH=.:$JAVA_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$PATH

Then reload the profile so the variables take effect in the current shell:

    source /etc/profile
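If /etc/profile is edited more than once, it is easy to end up with duplicate export lines. The following is a minimal idempotent alternative, assuming bash and a hypothetical helper named `append_once`. It appends each line only when an identical line is not already in the file.

```shell
#!/usr/bin/env bash
# append_once FILE LINE (hypothetical helper): append LINE to FILE
# unless FILE already contains exactly that line.
append_once() {
  local file="$1" line="$2"
  # -x: whole-line match, -F: fixed string (no regex); a missing FILE is created
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# The export lines from this step. Single quotes keep $PATH, $JAVA_HOME, etc.
# unexpanded, so they are written literally into the profile.
hadoop_profile_lines=(
  'export JAVA_HOME=/data/soft/jdk1.8'
  'export HADOOP_HOME=/data/soft/hadoop-3.2.0'
  'export PATH=.:$JAVA_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$PATH'
)

# add_hadoop_profile FILE (hypothetical helper): add all lines above to FILE.
add_hadoop_profile() {
  local l
  for l in "${hadoop_profile_lines[@]}"; do
    append_once "$1" "$l"
  done
}
```

Usage: run `add_hadoop_profile /etc/profile` as root, then `source /etc/profile`. Running it a second time leaves the file unchanged.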

(3) Modify the Hadoop configuration files

Edit six configuration files in /data/soft/hadoop-3.2.0/etc/hadoop/:

    cd /data/soft/hadoop-3.2.0/etc/hadoop/

1) hadoop-env.sh

    vi hadoop-env.sh

Add the following lines:

    export JAVA_HOME=/data/soft/jdk1.8
    export HADOOP_LOG_DIR=/data/hadoop_repo/logs/hadoop
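JAVA_HOME is set again in hadoop-env.sh because the start scripts launch daemons over SSH, and those non-interactive sessions do not necessarily read /etc/profile. A wrong path here commonly causes startup failures. The sketch below uses two hypothetical helpers, `env_java_home` and `check_java_home`, to confirm that the configured directory actually contains a Java launcher.

```shell
#!/usr/bin/env bash
# env_java_home FILE (hypothetical helper): print the last uncommented
# "export JAVA_HOME=" value in FILE. Assumes the value is not quoted.
env_java_home() {
  sed -n 's/^export JAVA_HOME=//p' "$1" | tail -n 1
}

# check_java_home DIR (hypothetical helper): succeed only if DIR/bin/java
# exists and is executable.
check_java_home() {
  local dir="$1"
  if [ -x "$dir/bin/java" ]; then
    echo "OK: $dir/bin/java"
    return 0
  fi
  echo "ERROR: $dir/bin/java not found or not executable" >&2
  return 1
}
```

Usage: `check_java_home "$(env_java_home /data/soft/hadoop-3.2.0/etc/hadoop/hadoop-env.sh)"`.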

2) core-site.xml

    vi core-site.xml

Edit the `<configuration>` element so that it reads:

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://bigdata01:9000</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/data/hadoop_repo</value>
      </property>
    </configuration>
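If you would rather script this step than edit the file in vi, you can write the same content with a here-document. This sketch assumes two things: `write_core_site` is a hypothetical helper, and overwriting core-site.xml is acceptable. Back the file up first if it already holds other settings.

```shell
#!/usr/bin/env bash
# write_core_site CONF_DIR [HOST] (hypothetical helper)
# Overwrites CONF_DIR/core-site.xml with the two properties from this step.
write_core_site() {
  local conf_dir="$1" host="${2:-bigdata01}"
  # Unquoted EOF so ${host} is expanded; nothing else in the body expands.
  cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${host}:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/data/hadoop_repo</value>
  </property>
</configuration>
EOF
}
```

Usage: `write_core_site /data/soft/hadoop-3.2.0/etc/hadoop`.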

3) hdfs-site.xml

    vi hdfs-site.xml

Edit the `<configuration>` element so that it reads:

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
    </configuration>
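To double-check what a hand-edited file actually contains, a small awk sketch can print a property's value. `get_conf_value` is a hypothetical helper that only understands the one-tag-per-line layout used in this article. Once Hadoop is on the PATH, `hdfs getconf -confKey dfs.replication` is the more reliable check, because it reads the configuration the same way Hadoop does.

```shell
#!/usr/bin/env bash
# get_conf_value FILE NAME (hypothetical helper)
# Prints the <value> of the property whose <name> is NAME.
# Assumes <name> and <value> each sit on their own line.
get_conf_value() {
  awk -v key="$2" '
    /<name>/ { name = $0; gsub(/^.*<name>|<\/name>.*$/, "", name); next }
    /<value>/ && name == key {
      value = $0; gsub(/^.*<value>|<\/value>.*$/, "", value); print value; exit
    }
  ' "$1"
}
```

Usage: `get_conf_value /data/soft/hadoop-3.2.0/etc/hadoop/hdfs-site.xml dfs.replication` should print 1.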

4) mapred-site.xml

    vi mapred-site.xml

Edit the `<configuration>` element so that it reads:

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>
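All the *-site.xml files edited here share one shape: a `<configuration>` element that holds `<property>` name/value pairs. The hypothetical helper `site_xml` below captures that shape by printing a complete file from name=value arguments. It does not escape XML special characters, so it only suits simple values like the ones in this article.

```shell
#!/usr/bin/env bash
# site_xml NAME=VALUE... (hypothetical helper)
# Prints a Hadoop-style *-site.xml document to stdout.
# Each argument must contain "="; values are NOT XML-escaped (avoid &, <, >).
site_xml() {
  local pair
  printf '<?xml version="1.0" encoding="UTF-8"?>\n<configuration>\n'
  for pair in "$@"; do
    # Split on the first "=" only, so a value may itself contain "=".
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
      "${pair%%=*}" "${pair#*=}"
  done
  printf '</configuration>\n'
}
```

Usage: `site_xml mapreduce.framework.name=yarn > /data/soft/hadoop-3.2.0/etc/hadoop/mapred-site.xml` overwrites the file with the property from this step.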

5) yarn-site.xml

    vi yarn-site.xml

Edit the `<configuration>` element so that it reads:

    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.nodemanager.env-whitelist</name>
        <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
      </property>
    </configuration>

6) workers

    vi workers

Replace the default localhost entry with this host's name:

    bigdata01

Next, edit four scripts in /data/soft/hadoop-3.2.0/sbin:

    cd /data/soft/hadoop-3.2.0/sbin

7) start-dfs.sh

    vi start-dfs.sh

Add the following lines at the top of the script, right after the shebang line:

    HDFS_DATANODE_USER=root
    HDFS_DATANODE_SECURE_USER=hdfs
    HDFS_NAMENODE_USER=root
    HDFS_SECONDARYNAMENODE_USER=root

8) stop-dfs.sh

    vi stop-dfs.sh

Add the following lines at the top of the script, right after the shebang line:

    HDFS_DATANODE_USER=root
    HDFS_DATANODE_SECURE_USER=hdfs
    HDFS_NAMENODE_USER=root
    HDFS_SECONDARYNAMENODE_USER=root

9) start-yarn.sh

    vi start-yarn.sh

Add the following lines at the top of the script, right after the shebang line:

    YARN_RESOURCEMANAGER_USER=root
    HADOOP_SECURE_DN_USER=yarn
    YARN_NODEMANAGER_USER=root

10) stop-yarn.sh

    vi stop-yarn.sh

Add the following lines at the top of the script, right after the shebang line:

    YARN_RESOURCEMANAGER_USER=root
    HADOOP_SECURE_DN_USER=yarn
    YARN_NODEMANAGER_USER=root
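These variables are needed because Hadoop 3 refuses to operate daemons as root unless the matching *_USER variable is defined. You can script the four edits as well. The hypothetical helper `insert_after_shebang` below puts the lines right after line 1 of a script and skips any file that already contains them. It copies the result back with `cat >` rather than `mv`, so each script keeps its execute permission.

```shell
#!/usr/bin/env bash
# insert_after_shebang SCRIPT LINE... (hypothetical helper)
# Inserts LINEs after the first line of SCRIPT, unless the first LINE
# is already present in the file.
insert_after_shebang() {
  local script="$1" tmp
  shift
  if grep -qxF -- "$1" "$script"; then
    echo "skip: $script already patched"
    return 0
  fi
  tmp=$(mktemp) || return 1
  {
    head -n 1 "$script"      # keep the shebang line
    printf '%s\n' "$@"       # the *_USER assignments
    tail -n +2 "$script"     # the rest of the original script
  } > "$tmp"
  # Write contents back into the same file so its mode (e.g. 755) is kept.
  cat "$tmp" > "$script" && rm -f "$tmp"
  echo "patched: $script"
}

# patch_sbin_scripts SBIN_DIR (hypothetical helper): apply steps 7) to 10).
patch_sbin_scripts() {
  local sbin="$1" f
  for f in start-dfs.sh stop-dfs.sh; do
    insert_after_shebang "$sbin/$f" HDFS_DATANODE_USER=root \
      HDFS_DATANODE_SECURE_USER=hdfs HDFS_NAMENODE_USER=root \
      HDFS_SECONDARYNAMENODE_USER=root
  done
  for f in start-yarn.sh stop-yarn.sh; do
    insert_after_shebang "$sbin/$f" YARN_RESOURCEMANAGER_USER=root \
      HADOOP_SECURE_DN_USER=yarn YARN_NODEMANAGER_USER=root
  done
}
```

Usage: `patch_sbin_scripts /data/soft/hadoop-3.2.0/sbin`.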

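(4) Format HDFS

    cd /data/soft/hadoop-3.2.0
    bin/hdfs namenode -format

Output like the following means that formatting succeeded. Look for a line stating that the storage directory /data/hadoop_repo/dfs/name has been successfully formatted.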

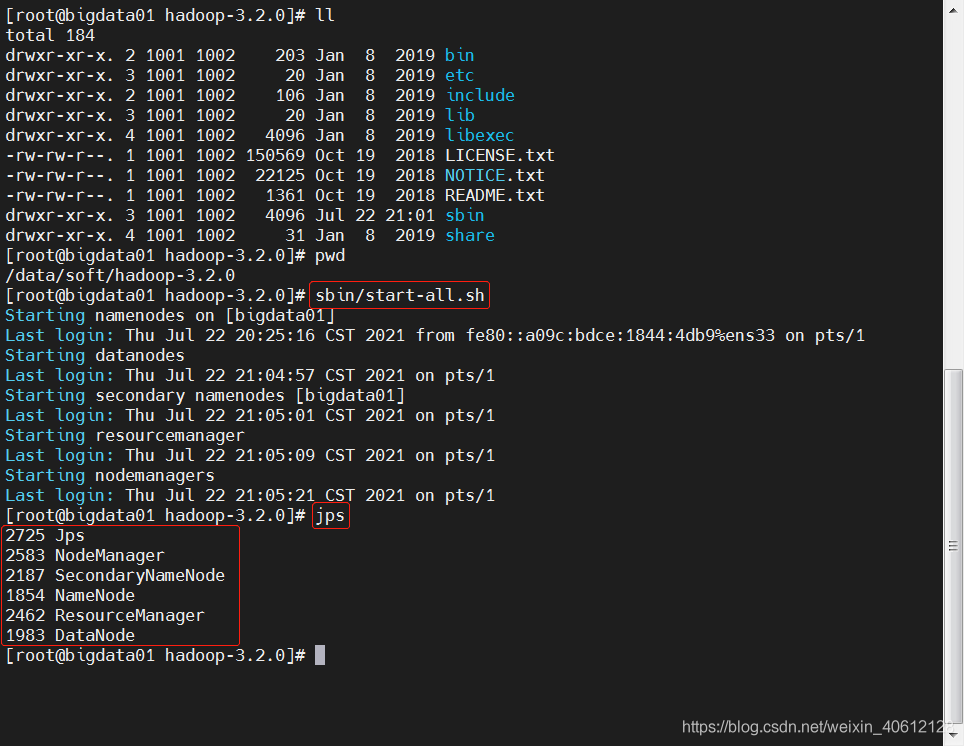
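Format the NameNode only once. Re-running the format gives the NameNode a new clusterID. A DataNode that still holds data from the previous format then fails to start with an "Incompatible clusterIDs" error. The minimal guard below is sketched with two hypothetical helpers. It checks for the current/VERSION file that formatting writes into the NameNode directory. With hadoop.tmp.dir set to /data/hadoop_repo and the default dfs.namenode.name.dir, that directory is /data/hadoop_repo/dfs/name.

```shell
#!/usr/bin/env bash
# namenode_formatted [NAME_DIR] (hypothetical helper)
# Succeeds if NAME_DIR already contains current/VERSION, i.e. it was formatted.
namenode_formatted() {
  local name_dir="${1:-/data/hadoop_repo/dfs/name}"
  [ -f "$name_dir/current/VERSION" ]
}

# format_once HADOOP_HOME [NAME_DIR] (hypothetical helper):
# run "hdfs namenode -format" only if the directory is not formatted yet.
format_once() {
  if namenode_formatted "$2"; then
    echo "NameNode directory already formatted; skipping." >&2
    return 0
  fi
  "$1/bin/hdfs" namenode -format
}
```

Usage: `format_once /data/soft/hadoop-3.2.0`.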

(5) Start the pseudo-distributed Hadoop cluster

    cd /data/soft/hadoop-3.2.0
    sbin/start-all.sh

Output like the following means the cluster started successfully:

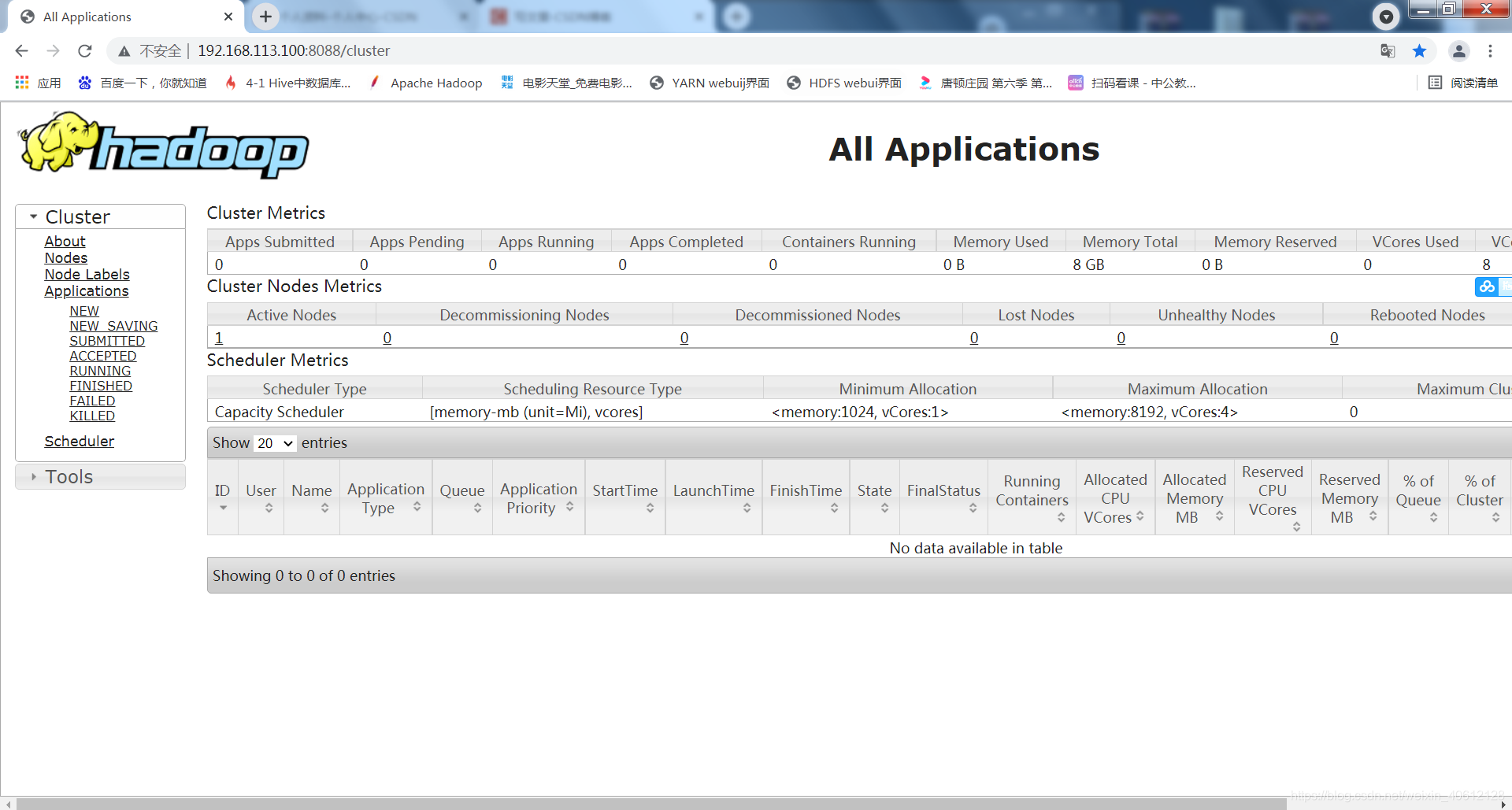

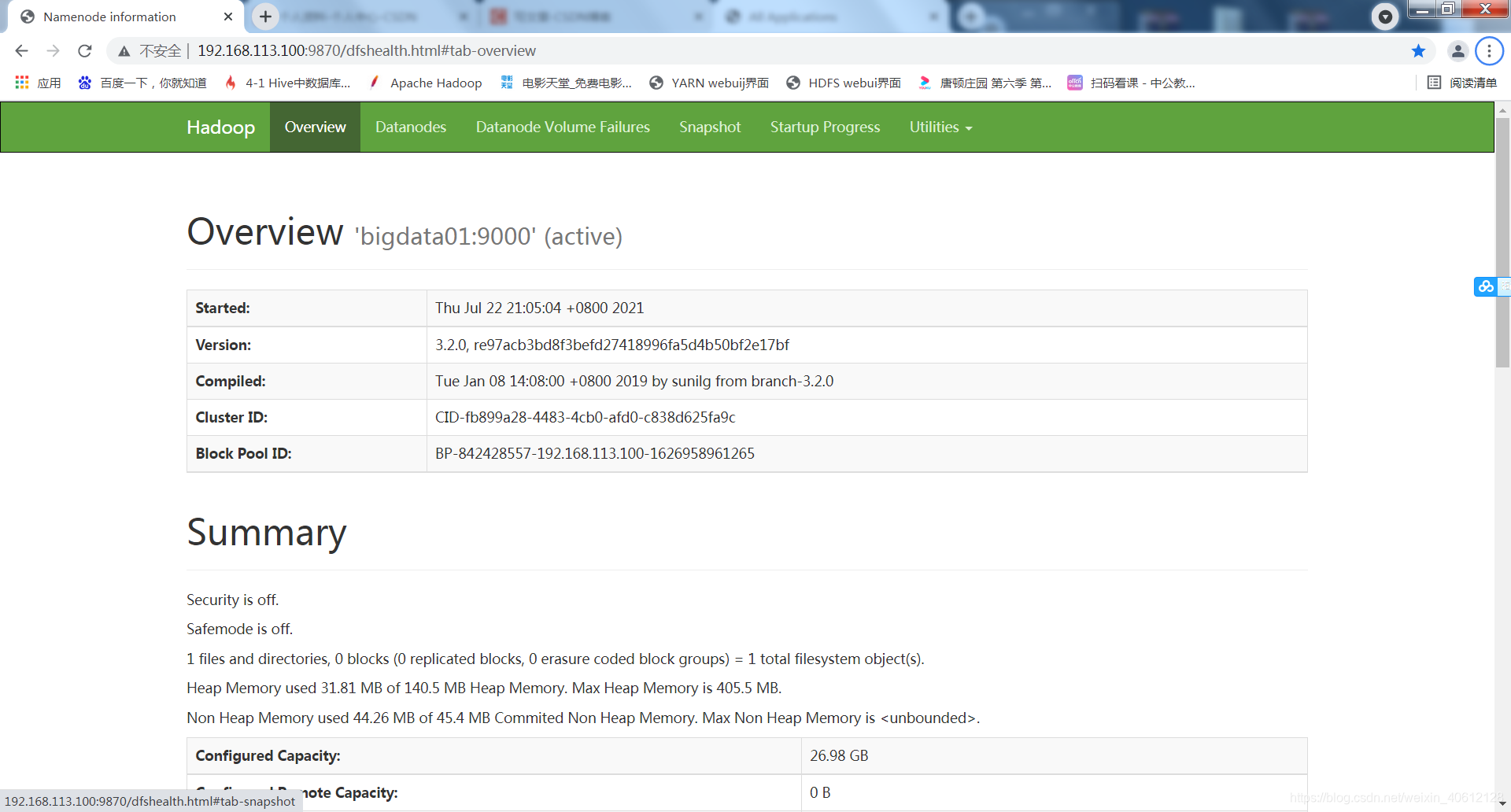
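Besides the startup log, you can run `jps` (shipped with the JDK) to list the running Java processes. This pseudo-distributed setup should show five Hadoop daemons: NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager. The hypothetical helper `check_daemons` reads jps output on stdin and reports any that are missing.

```shell
#!/usr/bin/env bash
# check_daemons (hypothetical helper): reads `jps` output on stdin
# ("<pid> <MainClass>" per line) and reports missing Hadoop daemons.
check_daemons() {
  local running missing=0 d
  # Keep only the class-name column.
  running=$(awk '{print $2}')
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # -x matters: "NameNode" must not match "SecondaryNameNode".
    if ! printf '%s\n' "$running" | grep -qxF "$d"; then
      echo "missing: $d" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `jps | check_daemons && echo "all daemons running"`.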

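(6) Verify the installation

Open the two web UIs in a browser (192.168.113.100 is the static IP of bigdata01 in this setup; use your own host's IP).

YARN ResourceManager web UI:

http://192.168.113.100:8088/cluster

HDFS NameNode web UI:

http://192.168.113.100:9870/dfshealth.html#tab-overview

If both pages open as shown below, the pseudo-distributed Hadoop cluster has been installed successfully.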

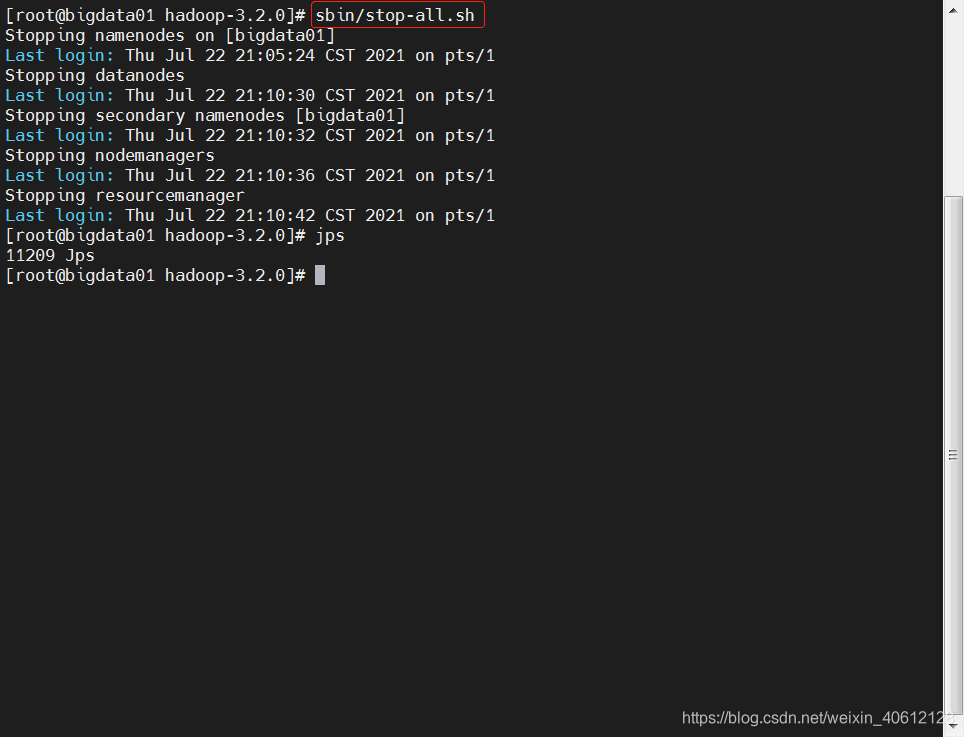
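You can also check the same two pages from the command line, which helps on a server without a browser. The sketch below assumes `curl` is installed. It uses hypothetical helpers (`check_web_ui`, `check_hadoop_uis`) and treats an HTTP 200 response within five seconds as "up".

```shell
#!/usr/bin/env bash
# check_web_ui URL (hypothetical helper)
# Succeeds if URL answers with HTTP 200 within 5 seconds.
check_web_ui() {
  local code
  # -o /dev/null discards the body; -w prints only the status code ("000" if no connection).
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1")
  if [ "$code" = "200" ]; then
    echo "UP:   $1"
    return 0
  fi
  echo "DOWN: $1 (HTTP ${code:-none})" >&2
  return 1
}

# check_hadoop_uis [HOST] (hypothetical helper): check the YARN and HDFS web UIs.
check_hadoop_uis() {
  local host="${1:-192.168.113.100}" rc=0
  check_web_ui "http://$host:8088/cluster" || rc=1
  check_web_ui "http://$host:9870/dfshealth.html" || rc=1
  return "$rc"
}
```

Usage: `check_hadoop_uis 192.168.113.100`.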

(7) Stop the cluster

From /data/soft/hadoop-3.2.0, run:

    sbin/stop-all.sh