Requirement: use JMeter to push a large volume of data into Kafka.

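A small standalone Java tool would also do the job, but I had never written a JMeter script before, so I went this way. The overall idea is to extend the AbstractJavaSamplerClient class and implement its runTest() method. Connecting to Kafka is fairly expensive, though, and while reading up I found setupTest(): JMeter creates one client instance per thread and calls setupTest() once on it, before that thread's first runTest() call. Creating the Kafka producer there means each thread connects only once instead of on every sample. That saves resources and keeps the connection cost out of every measured sample time.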
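To make the per-thread lifecycle concrete, here is a plain-Java model of it. It is a simplified sketch, not JMeter's real scheduler: it uses no JMeter or Kafka classes, and `Client`, `simulate` and the counters are hypothetical stand-ins. It only shows that the expensive setup runs once per thread while the per-sample method runs once per iteration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

public class LifecycleModel {
    // How often the "expensive connection" is created vs. how many samples run.
    static final AtomicInteger connections = new AtomicInteger();
    static final AtomicInteger samples = new AtomicInteger();

    // Hypothetical stand-in for a Java Request client: one instance per thread.
    static class Client {
        private Object connection; // placeholder for a KafkaProducer

        void setupTest() { // once per thread, before the first sample
            connection = new Object();
            connections.incrementAndGet();
        }

        void runTest() { // once per iteration
            if (connection == null) throw new IllegalStateException("setupTest not called");
            samples.incrementAndGet();
        }

        void teardownTest() { // once per thread, after the last sample
            connection = null;
        }
    }

    // Runs `threads` threads, each doing setup, `loops` samples, then teardown.
    static void simulate(int threads, int loops) {
        connections.set(0);
        samples.set(0);
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                Client c = new Client();
                c.setupTest();
                for (int i = 0; i < loops; i++) c.runTest();
                c.teardownTest();
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        simulate(4, 10);
        // prints "connections=4 samples=40"
        System.out.println("connections=" + connections.get() + " samples=" + samples.get());
    }
}
```

With 4 threads and 10 loops the connection is created 4 times, not 40, which is the point of moving the producer into setupTest().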

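Gotcha: because I had never written a JMeter script before, I didn't know that every jar the code depends on must also be copied into JMeter's lib directory.

Code first!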

依赖:

<dependencies>

<dependency>

<groupId>org.apache.jmeter</groupId>

<artifactId>ApacheJMeter_core</artifactId>

<version>5.0</version>

</dependency>

<dependency>

<groupId>org.apache.jmeter</groupId>

<artifactId>ApacheJMeter_java</artifactId>

<version>5.0</version>

</dependency>

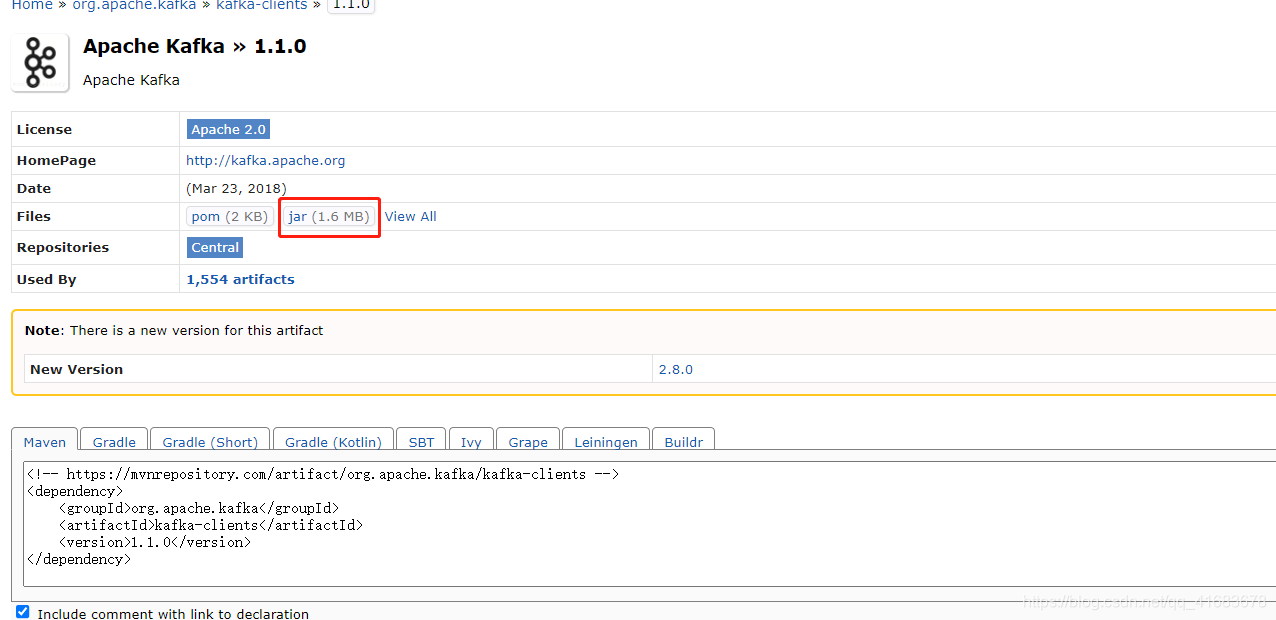

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>1.1.0</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.47</version>

</dependency>

</dependencies>代码:

import org.apache.jmeter.config.Arguments;

import org.apache.jmeter.protocol.java.sampler.AbstractJavaSamplerClient;

import org.apache.jmeter.protocol.java.sampler.JavaSamplerContext;

import org.apache.jmeter.samplers.SampleResult;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.Producer;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.clients.producer.RecordMetadata;

import org.junit.Test;

import java.util.Properties;

import java.util.concurrent.ExecutionException;

import java.util.concurrent.Future;

public class JmeterTest extends AbstractJavaSamplerClient {

private Producer<String, String> procuder;

/**

* Jmeter默认参数

*

* @return

*/

@Override

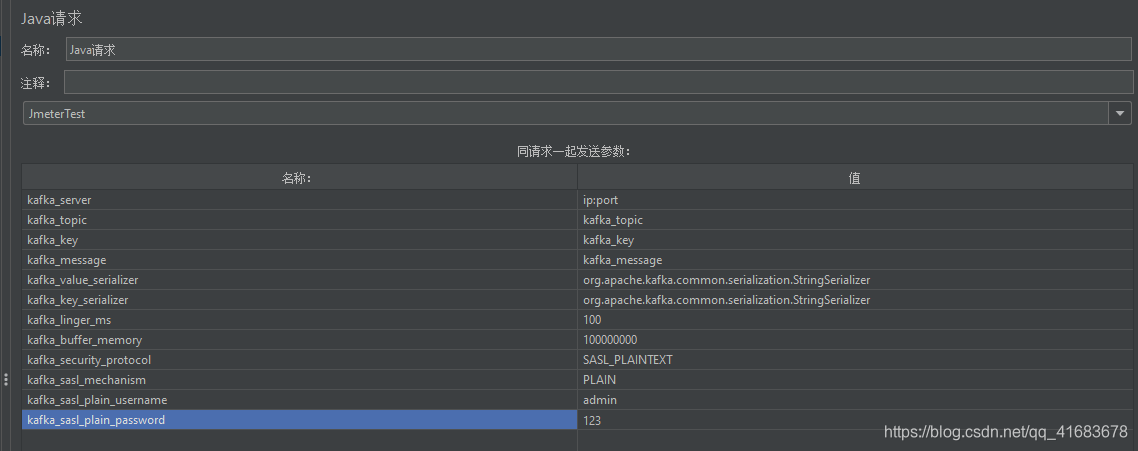

public Arguments getDefaultParameters() {

Arguments params = new Arguments();

params.addArgument("kafka_server", "ip:port");//jmeter中变量的默认值

params.addArgument("kafka_topic", "kafka_topic");

params.addArgument("kafka_key", "kafka_key");

params.addArgument("kafka_message", "kafka_message");

params.addArgument("kafka_value_serializer", "org.apache.kafka.common.serialization.StringSerializer");

params.addArgument("kafka_key_serializer", "org.apache.kafka.common.serialization.StringSerializer");

params.addArgument("kafka_linger_ms", "100");

params.addArgument("kafka_buffer_memory", "100000000");

params.addArgument("kafka_security_protocol", "SASL_PLAINTEXT");

params.addArgument("kafka_sasl_mechanism", "PLAIN");

params.addArgument("kafka_sasl_plain_username", "admin");

params.addArgument("kafka_sasl_plain_password", "123");

return params;

}

/**

* 进程启动前的方法

*

* @param context

*/

@Override

public void setupTest(JavaSamplerContext context) {

Properties props = new Properties();

props.put("bootstrap.servers", context.getParameter("kafka_server"));

props.put("acks", "all");

props.put("retries", 0);

props.put("batch.size", 16384);

props.put("linger.ms", context.getParameter("kafka_linger_ms"));

props.put("buffer.memory", context.getParameter("kafka_buffer_memory"));

props.put("key.serializer", context.getParameter("kafka_key_serializer"));

props.put("value.serializer", context.getParameter("kafka_value_serializer"));

props.put("security.protocol", context.getParameter("kafka_security_protocol"));

props.put("sasl.mechanism", context.getParameter("kafka_sasl_mechanism"));

props.put("sasl.jaas.config", "org.apache.kafka.common.security.scram.ScramLoginModule required username=\"" + context.getParameter("kafka_sasl_plain_username") + "\" password=\"" + context.getParameter("kafka_sasl_plain_password") + "\";");

//org.apache.kafka.common.security.plain.PlainLoginModule required

this.procuder = new KafkaProducer<>(props);

System.out.println("procuder初始化成功");

}

public void producer(String kafka_message, String kafka_topic, String kafka_key) throws ExecutionException, InterruptedException {

ProducerRecord<String, String> msg = new ProducerRecord<String, String>(kafka_topic, kafka_key, kafka_message);

Future<RecordMetadata> f = procuder.send(msg);

System.out.println(f.get());

}

/**

* jmeter测试入口

*

* @param context

* @return

*/

@Override

public SampleResult runTest(JavaSamplerContext context) {

SampleResult sr = new SampleResult();

sr.sampleStart();// jmeter 开始统计响应时间标记

try {

producer(context.getParameter("kafka_message"), context.getParameter("kafka_topic"), context.getParameter("kafka_key"));

} catch (ExecutionException e) {

e.printStackTrace();

} catch (InterruptedException e) {

e.printStackTrace();

}

sr.setSuccessful(true);

sr.sampleEnd();

return sr;

}

/**

* 测试方法

* @throws ExecutionException

* @throws InterruptedException

*/

@Test

public void test() throws ExecutionException, InterruptedException {

Properties props = new Properties();

props.put("bootstrap.servers", "192.168.172.30:9102");

props.put("acks", "all");

props.put("retries", 0);

props.put("batch.size", 16384);

props.put("linger.ms", "1000");

props.put("buffer.memory", "100000000");

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

props.put("security.protocol", "SASL_PLAINTEXT");

props.put("sasl.mechanism", "PLAIN");

props.put("sasl.jaas.config", "org.apache.kafka.common.security.scram.ScramLoginModule required username=\"" + "admin" + "\" password=\"" + "123" + "\";");

//org.apache.kafka.common.security.plain.PlainLoginModule required

this.procuder = new KafkaProducer<>(props);

producer("{\n" +

"\t\"parm\": \"test\"\n" +

"}", "topic", "987654123");

}

}

Authentication here uses SASL; for other methods, see the official documentation:

http://kafka.apache.org/0102/javadoc/index.html?org/apache/kafka/clients/producer/KafkaProducer.html

Steps:

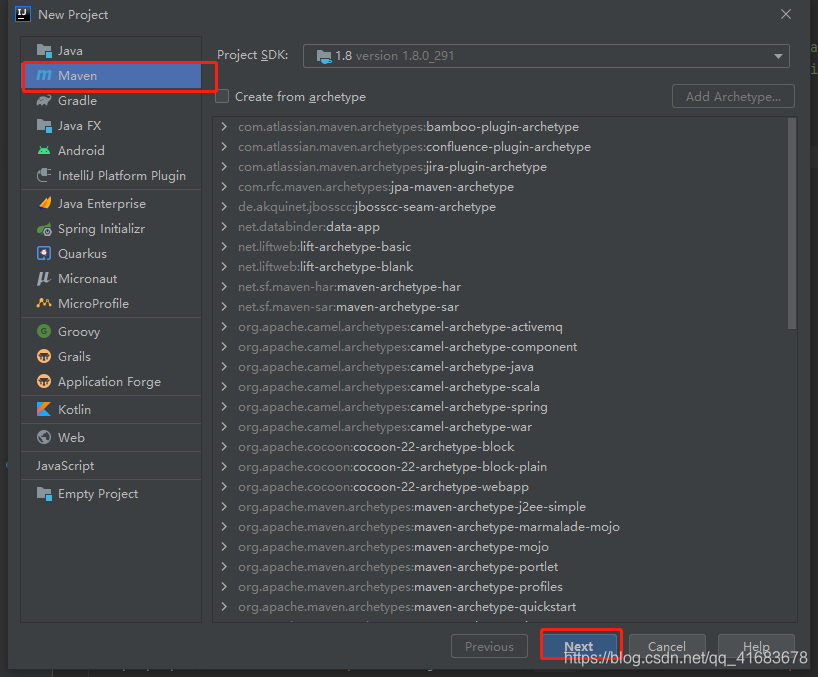

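1. Create the project

2. Add the dependencies (see the pom above)

3. Implement the code (see the code above)

4. Package it into a jar (for this Maven project, `mvn package`)

5. Put the built jar in the apache-jmeter-5.4.1\lib\ext directory

6. Get the jars the code depends on (from the Maven repository or elsewhere) and put them in apache-jmeter-5.4.1\lib (this one cost me ages, ugh)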

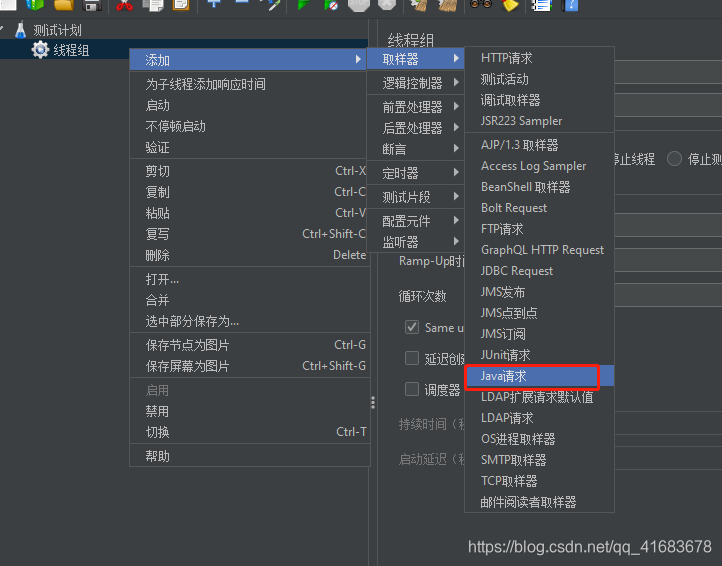

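7. Use it in JMeter: add a Java Request sampler to a Thread Group and choose JmeterTest as the Classname. The parameters from getDefaultParameters() appear there with their default values and can be edited.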
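Since a jar missing from lib usually only shows up at run time (for example as a NoClassDefFoundError in jmeter.log), a small pre-flight check before step 7 can save time. The following is a minimal sketch using only the JDK. The JMeter path, the jar-name prefixes, and the sampler jar name `kafka-jmeter-sampler` are assumptions you would adjust. It matches file names only and does not resolve kafka-clients' own transitive dependencies. Maven's `dependency:copy-dependencies` goal is one way to collect the full set.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class JMeterLibCheck {
    // Returns the required prefixes that have no matching "*.jar" among the given file names.
    static List<String> missing(List<String> fileNames, List<String> requiredPrefixes) {
        List<String> result = new ArrayList<>();
        for (String prefix : requiredPrefixes) {
            boolean found = false;
            for (String name : fileNames) {
                if (name.startsWith(prefix) && name.endsWith(".jar")) {
                    found = true;
                    break;
                }
            }
            if (!found) result.add(prefix);
        }
        return result;
    }

    // Lists the file names in a directory (empty if it does not exist).
    static List<String> filesIn(File dir) {
        String[] names = dir.list();
        return names == null ? new ArrayList<>() : Arrays.asList(names);
    }

    public static void main(String[] args) {
        // Assumed install location; pass the real JMeter home as the first argument.
        File home = new File(args.length > 0 ? args[0] : "apache-jmeter-5.4.1");
        List<String> lib = filesIn(new File(home, "lib"));
        List<String> ext = filesIn(new File(home, "lib/ext"));
        System.out.println("missing in lib: "
                + missing(lib, Arrays.asList("kafka-clients-", "fastjson-")));
        // "kafka-jmeter-sampler" is a hypothetical artifactId; use your own jar's name.
        System.out.println("missing in lib/ext: "
                + missing(ext, Arrays.asList("kafka-jmeter-sampler")));
    }
}
```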
