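Note: I ran into many problems connecting to my virtual machines from IDEA on my local machine. To avoid the same pitfalls in the future, I am recording everything here: the cluster environment I built, how IDEA is configured, and the errors I hit along the way with their fixes.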

I. Cluster environment:

| Big data component (version) | 192.168.15.10 | 192.168.15.11 | 192.168.15.12 |
|---|---|---|---|
| jdk1.8 | √ | √ | √ |
| hadoop-2.6.1 | √ | √ | √ |
| mysql5.7 | √ | | |
| hive | √ | | |
| Scala | √ | √ | √ |
| spark | √ | √ | √ |

II. Local Windows environment:

1. Install jdk1.8

2. Install scala2.11.8

3. Install maven3.3.9

4. Install hadoop-2.6.1-windows. Note that the environment variables for hadoop-2.6.1-windows must also be configured:

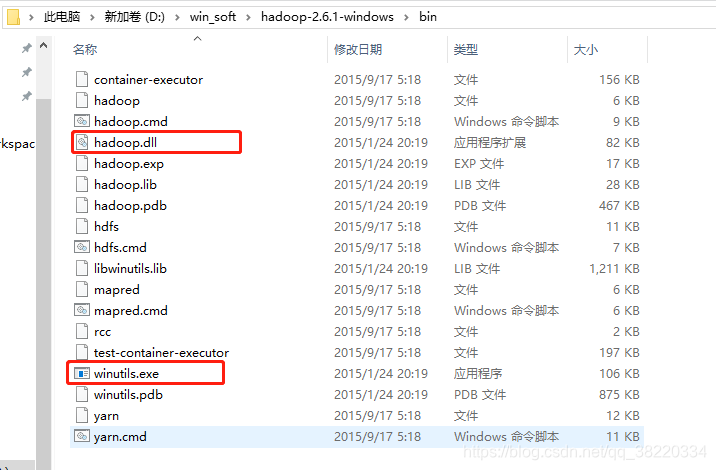

Note: the two files shown below must be copied into the hadoop-2.6.1-windows/bin directory, and also into the System32/SysWOW64 directory on the C: drive.

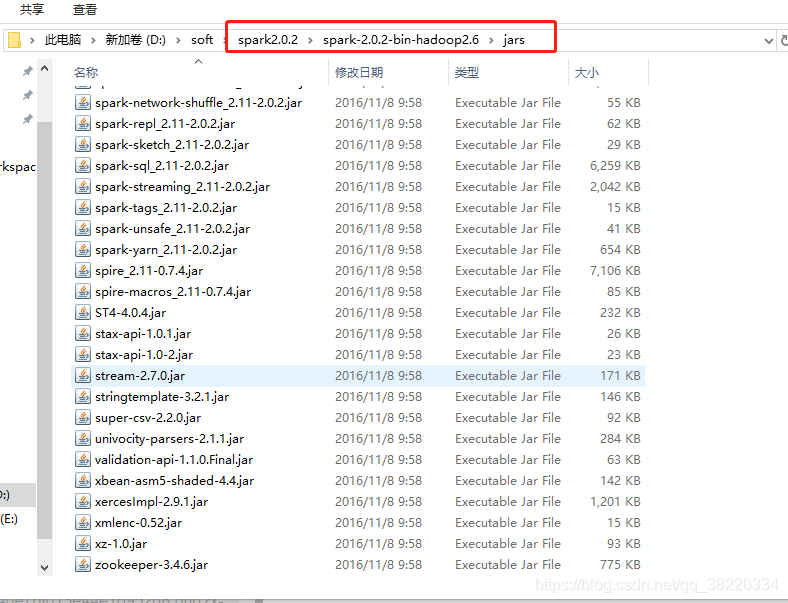

5. Copy the spark2.0.2 package from the cluster to Windows, extract it, and copy all the jars under its jars directory into the project. This step is important.
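The Windows-side setup from step 4 can be sanity-checked before Spark is ever started. The following is only a minimal sketch: it assumes the two files in the screenshot are `winutils.exe` and `hadoop.dll` (the usual pair for Hadoop on Windows; the original shows them only as an image), and `HadoopHomeCheck` and `missingFiles` are hypothetical names.

```scala
import java.io.File

object HadoopHomeCheck {
  // Assumption: these are the two native helper files from the screenshot.
  val requiredFiles: Seq[String] = Seq("winutils.exe", "hadoop.dll")

  /** Returns the required files that are missing from <hadoopHome>/bin. */
  def missingFiles(hadoopHome: String): Seq[String] = {
    val bin = new File(hadoopHome, "bin")
    requiredFiles.filterNot(name => new File(bin, name).isFile)
  }

  def main(args: Array[String]): Unit = {
    // Falls back to the path used in the test code of this article when HADOOP_HOME is unset.
    val home = sys.env.getOrElse("HADOOP_HOME", "D:\\win_soft\\hadoop-2.6.1-windows")
    val missing = missingFiles(home)
    if (missing.isEmpty) println("All required files found under " + home)
    else println("Missing under " + home + ": " + missing.mkString(", "))
  }
}
```

Running it once after configuring the environment variables shows quickly whether the copy step was skipped.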

III. IDEA configuration:

1. Configure the JDK, Scala, Maven, and the Scala plugin in IDEA

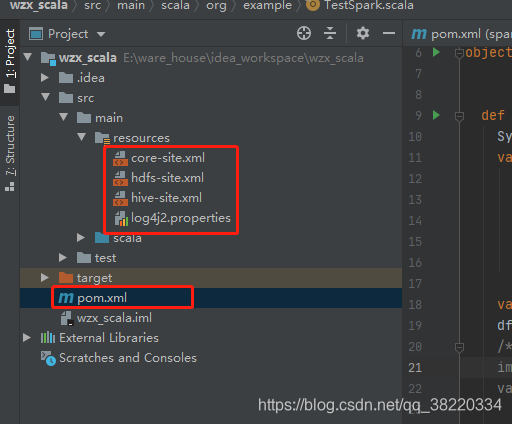

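2. Create a Maven project with the directory structure below. The code is pasted below as well; the first three configuration files can simply be copied over from the cluster.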

core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<!--<value>hdfs://192.168.77.10:9000</value>-->

<value>hdfs://192.168.15.10:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/local/src/hadoop-2.6.1/tmp</value>

</property>

</configuration>

hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<!--<value>master:9001</value>-->

<value>192.168.15.10:9001</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/src/hadoop-2.6.1/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/src/hadoop-2.6.1/dfs/data</value>

</property>

<property> <name>dfs.replication</name> <value>3</value>

</property>

</configuration>

hive-site.xml

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<!--<value>jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true</value>-->

<!-- <value>jdbc:mysql://192.168.15.10:3306/hive?createDatabaseIfNotExist=true</value>-->

<value>jdbc:mysql://192.168.15.10:3306/hive?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

</property>

</configuration>
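Because these three files are copied from the cluster and then edited by hand (host names replaced with IP addresses, old values left in comments), it can help to print the values that are actually in effect. This is a minimal sketch using only the JDK's XML parser; `SiteXml` and `properties` are hypothetical names, and the sample string is a shortened copy of the core-site.xml above.

```scala
import java.io.ByteArrayInputStream
import javax.xml.parsers.DocumentBuilderFactory

object SiteXml {
  /** Parses Hadoop-style <configuration> XML into a name -> value map. */
  def properties(xml: String): Map[String, String] = {
    val builder = DocumentBuilderFactory.newInstance().newDocumentBuilder()
    val doc = builder.parse(new ByteArrayInputStream(xml.getBytes("UTF-8")))
    val nodes = doc.getElementsByTagName("property")
    (0 until nodes.getLength).map { i =>
      val p = nodes.item(i).asInstanceOf[org.w3c.dom.Element]
      // Commented-out <value> entries are comment nodes, so they are not picked up here.
      val name = p.getElementsByTagName("name").item(0).getTextContent.trim
      val value = p.getElementsByTagName("value").item(0).getTextContent.trim
      name -> value
    }.toMap
  }

  def main(args: Array[String]): Unit = {
    val sample =
      """<configuration>
        |  <property>
        |    <name>fs.defaultFS</name>
        |    <!--<value>hdfs://192.168.77.10:9000</value>-->
        |    <value>hdfs://192.168.15.10:9000</value>
        |  </property>
        |</configuration>""".stripMargin
    println(properties(sample)("fs.defaultFS"))
  }
}
```

The same function can be pointed at the contents of hive-site.xml to confirm the JDBC URL that IDEA will really use.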

log4j2.properties

appender.out.type = Console

appender.out.name = out

appender.out.layout.type = PatternLayout

appender.out.layout.pattern = [%30.30t] %-30.30c{1} %-5p %m%n

logger.springframework.name = org.springframework

logger.springframework.level = WARN

rootLogger.level = INFO

rootLogger.appenderRef.out.ref = out

pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.badou_recommand</groupId>

<artifactId>sparkProject</artifactId>

<packaging>jar</packaging>

<version>1.0-SNAPSHOT</version>

<name>A Camel Scala Route</name>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>

<scala.binary.version>2.11</scala.binary.version>

<PermGen>64m</PermGen>

<MaxPermGen>512m</MaxPermGen>

<spark.version>2.0.2</spark.version>

<scala.version>2.11</scala.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_${scala.version}</artifactId>

<version>${spark.version}</version>

<!--<exclusions>-->

<!--<exclusion>-->

<!--<groupId>org.slf4j</groupId>-->

<!--<artifactId>slf4j-log4j12</artifactId>-->

<!--</exclusion>-->

<!--</exclusions>-->

</dependency>

<dependency>

<groupId>com.github.scopt</groupId>

<artifactId>scopt_${scala.binary.version}</artifactId>

<version>3.3.0</version>

<exclusions>

<exclusion>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>19.0</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

</dependency>

<dependency>

<groupId>org.jpmml</groupId>

<artifactId>pmml-evaluator</artifactId>

<version>1.4.1</version>

</dependency>

<dependency>

<groupId>org.jpmml</groupId>

<artifactId>pmml-evaluator-extension</artifactId>

<version>1.4.1</version>

</dependency>

<dependency>

<groupId>org.tensorflow</groupId>

<artifactId>tensorflow</artifactId>

<version>1.8.0</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_${scala.version}</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_${scala.version}</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-hive_${scala.version}</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-mllib_${scala.version}</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming-kafka-0-8_${scala.version}</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.17</version>

</dependency>

<dependency>

<groupId>com.huaban</groupId>

<artifactId>jieba-analysis</artifactId>

<version>1.0.2</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.31</version>

</dependency>

<dependency>

<groupId>org.scalatest</groupId>

<artifactId>scalatest_2.11</artifactId>

<version>3.2.0-SNAP5</version>

<scope>test</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/junit/junit -->

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.13</version>

<scope>test</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-client -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.6.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-common</artifactId>

<version>2.6.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>2.6.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-jobclient</artifactId>

<version>2.6.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.1</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>1.3.1</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>1.3.1</version>

<exclusions>

<exclusion>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.thrift</groupId>

<artifactId>thrift</artifactId>

</exclusion>

<exclusion>

<groupId>org.jruby</groupId>

<artifactId>jruby-complete</artifactId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jsp-2.1</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jsp-api-2.1</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>servlet-api-2.5</artifactId>

</exclusion>

<exclusion>

<groupId>com.sun.jersey</groupId>

<artifactId>jersey-core</artifactId>

</exclusion>

<exclusion>

<groupId>com.sun.jersey</groupId>

<artifactId>jersey-json</artifactId>

</exclusion>

<exclusion>

<groupId>com.sun.jersey</groupId>

<artifactId>jersey-server</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-util</artifactId>

</exclusion>

<exclusion>

<groupId>tomcat</groupId>

<artifactId>jasper-runtime</artifactId>

</exclusion>

<exclusion>

<groupId>tomcat</groupId>

<artifactId>jasper-compiler</artifactId>

</exclusion>

<exclusion>

<groupId>org.jruby</groupId>

<artifactId>jruby-complete</artifactId>

</exclusion>

<exclusion>

<groupId>org.jboss.netty</groupId>

<artifactId>netty</artifactId>

</exclusion>

<exclusion>

<groupId>io.netty</groupId>

<artifactId>netty</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-protocol</artifactId>

<version>1.3.1</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-annotations</artifactId>

<version>1.3.1</version>

<type>test-jar</type>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-hadoop-compat</artifactId>

<version>1.3.1</version>

<scope>test</scope>

<type>test-jar</type>

<exclusions>

<exclusion>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.thrift</groupId>

<artifactId>thrift</artifactId>

</exclusion>

<exclusion>

<groupId>org.jruby</groupId>

<artifactId>jruby-complete</artifactId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jsp-2.1</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jsp-api-2.1</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>servlet-api-2.5</artifactId>

</exclusion>

<exclusion>

<groupId>com.sun.jersey</groupId>

<artifactId>jersey-core</artifactId>

</exclusion>

<exclusion>

<groupId>com.sun.jersey</groupId>

<artifactId>jersey-json</artifactId>

</exclusion>

<exclusion>

<groupId>com.sun.jersey</groupId>

<artifactId>jersey-server</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-util</artifactId>

</exclusion>

<exclusion>

<groupId>tomcat</groupId>

<artifactId>jasper-runtime</artifactId>

</exclusion>

<exclusion>

<groupId>tomcat</groupId>

<artifactId>jasper-compiler</artifactId>

</exclusion>

<exclusion>

<groupId>org.jruby</groupId>

<artifactId>jruby-complete</artifactId>

</exclusion>

<exclusion>

<groupId>org.jboss.netty</groupId>

<artifactId>netty</artifactId>

</exclusion>

<exclusion>

<groupId>io.netty</groupId>

<artifactId>netty</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-hadoop2-compat</artifactId>

<version>1.3.1</version>

<scope>test</scope>

<type>test-jar</type>

<exclusions>

<exclusion>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.thrift</groupId>

<artifactId>thrift</artifactId>

</exclusion>

<exclusion>

<groupId>org.jruby</groupId>

<artifactId>jruby-complete</artifactId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jsp-2.1</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jsp-api-2.1</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>servlet-api-2.5</artifactId>

</exclusion>

<exclusion>

<groupId>com.sun.jersey</groupId>

<artifactId>jersey-core</artifactId>

</exclusion>

<exclusion>

<groupId>com.sun.jersey</groupId>

<artifactId>jersey-json</artifactId>

</exclusion>

<exclusion>

<groupId>com.sun.jersey</groupId>

<artifactId>jersey-server</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty</artifactId>

</exclusion>

<exclusion>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-util</artifactId>

</exclusion>

<exclusion>

<groupId>tomcat</groupId>

<artifactId>jasper-runtime</artifactId>

</exclusion>

<exclusion>

<groupId>tomcat</groupId>

<artifactId>jasper-compiler</artifactId>

</exclusion>

<exclusion>

<groupId>org.jruby</groupId>

<artifactId>jruby-complete</artifactId>

</exclusion>

<exclusion>

<groupId>org.jboss.netty</groupId>

<artifactId>netty</artifactId>

</exclusion>

<exclusion>

<groupId>io.netty</groupId>

<artifactId>netty</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>1.3.1</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>1.3.1</version>

<!--<scope>provided</scope>-->

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.scala-tools</groupId>

<artifactId>maven-scala-plugin</artifactId>

<version>2.15.2</version>

<executions>

<execution>

<goals>

<goal>compile</goal>

<goal>testCompile</goal>

</goals>

</execution>

</executions>

</plugin>

<plugin>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.6.0</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-assembly-plugin</artifactId>

<version>2.3</version>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-surefire-plugin</artifactId>

<version>2.19</version>

<configuration>

<skip>true</skip>

</configuration>

</plugin>

</plugins>

<defaultGoal>compile</defaultGoal>

</build>

</project>

Scala test code

package org.example

import org.apache._

import org.apache.spark.sql.SparkSession

object TestSpark {

def main(args: Array[String]): Unit = {

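// Point Hadoop at the local Windows installation of hadoop-2.6.1-windows.

System.setProperty("hadoop.home.dir", "D:\\win_soft\\hadoop-2.6.1-windows")

// local[2] runs Spark inside IDEA; enableHiveSupport() reads hive-site.xml from the classpath.

val spark = SparkSession

.builder()

.appName("test")

.master("local[2]")

.enableHiveSupport()

.getOrCreate()

// Query a Hive table that already exists on the cluster.

val df = spark.sql("select * from orders.order_table")

df.show()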

}

}

IV. Start the cluster and run the test script:

1. Start HDFS

2. Start Hive: hive --service metastore

3. Start Spark
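Before running the test from IDEA, it may be worth confirming that the services started above are reachable from Windows at all. The sketch below is an illustration under assumptions: the host and ports are taken from the configuration files in this article (9000 from `fs.defaultFS`, 3306 from the MySQL JDBC URL), while `PortCheck` and `reachable` are hypothetical names.

```scala
import java.net.{InetSocketAddress, Socket}

object PortCheck {
  /** True if a TCP connection to host:port succeeds within timeoutMs. */
  def reachable(host: String, port: Int, timeoutMs: Int = 2000): Boolean = {
    val socket = new Socket()
    try {
      socket.connect(new InetSocketAddress(host, port), timeoutMs)
      true
    } catch {
      case _: java.io.IOException => false // refused, timed out, or unknown host
    } finally {
      socket.close()
    }
  }

  def main(args: Array[String]): Unit = {
    val host = "192.168.15.10"
    // Ports as they appear in core-site.xml and hive-site.xml above.
    val targets = Seq("HDFS NameNode" -> 9000, "MySQL" -> 3306)
    for ((name, port) <- targets) {
      val ok = reachable(host, port)
      println(name + " " + host + ":" + port + " reachable: " + ok)
    }
  }
}
```

A `false` here usually points at a firewall or a stopped service rather than at the IDEA configuration.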

The output is as follows:

+--------+-------+--------+------------+---------+-----------------+----------------------+

|order_id|user_id|eval_set|order_number|order_dow|order_hour_of_day|days_since_prior_order|

+--------+-------+--------+------------+---------+-----------------+----------------------+

|order_id|user_id|eval_set|order_number|order_dow|order_hour_of_day| days_since_prior_...|

| 2539329| 1| prior| 1| 2| 08| |

| 2398795| 1| prior| 2| 3| 07| 15.0|

| 473747| 1| prior| 3| 3| 12| 21.0|

| 2254736| 1| prior| 4| 4| 07| 29.0|

| 431534| 1| prior| 5| 4| 15| 28.0|

| 3367565| 1| prior| 6| 2| 07| 19.0|

| 550135| 1| prior| 7| 1| 09| 20.0|

| 3108588| 1| prior| 8| 1| 14| 14.0|

| 2295261| 1| prior| 9| 1| 16| 0.0|

| 2550362| 1| prior| 10| 4| 08| 30.0|

| 1187899| 1| train| 11| 4| 08| 14.0|

| 2168274| 2| prior| 1| 2| 11| |

| 1501582| 2| prior| 2| 5| 10| 10.0|

| 1901567| 2| prior| 3| 1| 10| 3.0|

| 738281| 2| prior| 4| 2| 10| 8.0|

| 1673511| 2| prior| 5| 3| 11| 8.0|

| 1199898| 2| prior| 6| 2| 09| 13.0|

| 3194192| 2| prior| 7| 2| 12| 14.0|

| 788338| 2| prior| 8| 1| 15| 27.0|

+--------+-------+--------+------------+---------+-----------------+----------------------+

only showing top 20 rows

Error log:

Exception in thread "main" java.lang.IllegalAccessError: tried to access method org.bson.

This kind of error is caused by a jar conflict. Deleting the corresponding jar from the local repository fixes it.
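To decide which jar to delete, it helps to know which jar the offending class was actually loaded from. The following is a minimal sketch rather than part of the original fix; `JarLocator` and `locationOf` are hypothetical names, and the class names to check should come from your own stack trace.

```scala
object JarLocator {
  /** Returns the jar or directory a class was loaded from; None for JDK classes or unknown names. */
  def locationOf(className: String): Option[String] =
    try {
      // initialize = false: only locate the class, do not run its static initializers.
      val cls = Class.forName(className, false, getClass.getClassLoader)
      Option(cls.getProtectionDomain.getCodeSource)
        .flatMap(source => Option(source.getLocation))
        .map(_.toString)
    } catch {
      case _: ClassNotFoundException => None
    }

  def main(args: Array[String]): Unit = {
    // Pass the fully qualified class names from the stack trace as program arguments.
    args.foreach { name =>
      val where = locationOf(name).getOrElse("not found, or a JDK class")
      println(name + " -> " + where)
    }
  }
}
```

If the printed path is not the jar version you expected, that jar is the likely candidate for removal or for a Maven exclusion.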

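org.apache.spark.sql.AnalysisException: Table or view not found

There are two possible causes: 1. The conf directory of Spark on the cluster has no corresponding hive-site.xml.

2. In the hive-site.xml used in IDEA, `hive` has been changed to something else: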

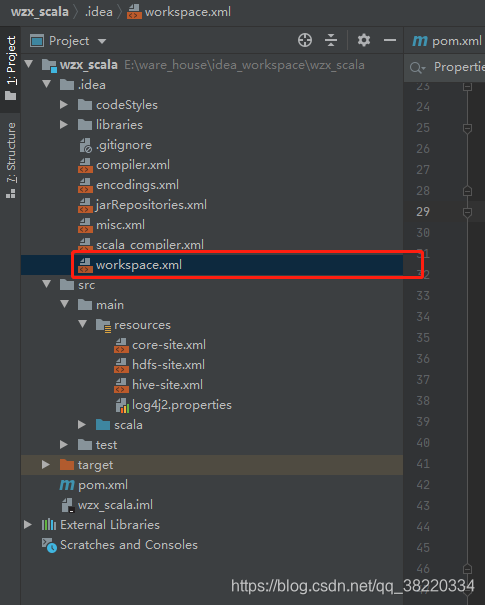
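A related local check, not from the original notes: if hive-site.xml is not on the project's classpath at all, Spark falls back to a local embedded metastore, and tables that exist on the cluster will not be found. The sketch below only tests whether the configuration files are visible on the classpath; `HiveSiteCheck` and `resourceLocation` are hypothetical names.

```scala
object HiveSiteCheck {
  /** Returns the URL of a classpath resource as a string, or None if it is absent. */
  def resourceLocation(name: String): Option[String] =
    Option(Thread.currentThread().getContextClassLoader.getResource(name)).map(_.toString)

  def main(args: Array[String]): Unit = {
    // The three files copied from the cluster in section III.
    Seq("core-site.xml", "hdfs-site.xml", "hive-site.xml").foreach { name =>
      val where = resourceLocation(name).getOrElse("NOT on the classpath")
      println(name + " -> " + where)
    }
  }
}
```

Run it from the same module as the test code so that it sees the same resources directory.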

Error running 'test': Command line is too long. Shorten command line for test or also for Application default configuration.

The fix is as follows:

Find the tag, then add one line inside it: