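After HDFS had been in use for a while, I noticed that a lot of space was taken up. On investigation, most of it turned out to be /hbase/oldWALs. The fix comes first; the cause is explained at the end.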

Here is how I dealt with it:

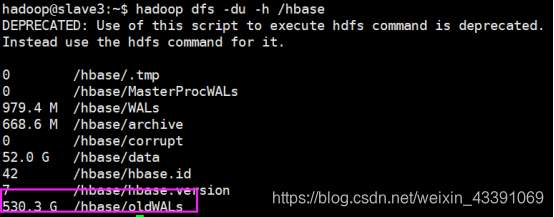

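`hadoop dfs -du -h /hbase` (on newer Hadoop releases, where `hadoop dfs` is deprecated, the equivalent is `hdfs dfs -du -h /hbase`)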
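To see at a glance which subdirectory dominates, the same listing can be sorted by size. This is a minimal Python sketch, assuming the output of `hdfs dfs -du /hbase` without `-h` (so sizes are plain byte counts), where each line starts with the size and ends with the path. Depending on the Hadoop version there may be a middle column with the space consumed including replicas; the parser ignores it. The sample output is made up for illustration, and the function names are hypothetical.

```python
from __future__ import annotations


def parse_du(output: str) -> list[tuple[int, str]]:
    """Parse `hdfs dfs -du <dir>` output (no -h) into (bytes, path), largest first."""
    entries = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue  # skip blank or malformed lines
        # First column: size in bytes; last column: path. A middle column
        # (space consumed including replicas), if present, is ignored.
        entries.append((int(fields[0]), fields[-1]))
    return sorted(entries, reverse=True)


def human(n: int) -> str:
    """Format a byte count with 1024-based units, similar to `-du -h`."""
    size = float(n)
    for unit in ("B", "K", "M", "G"):
        if size < 1024:
            return f"{size:.1f} {unit}"
        size /= 1024
    return f"{size:.1f} T"


# Made-up sample output, for illustration only.
sample = """\
1073741824  3221225472  /hbase/data
53687091200  161061273600  /hbase/oldWALs
1048576  3145728  /hbase/WALs
"""

for size, path in parse_du(sample):
    print(f"{human(size):>8}  {path}")
```

Paths are taken from the last whitespace-separated field, which is fine for HBase directories but would break on paths containing spaces.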

1. Stop HBase first.

2. Modify the configuration file.

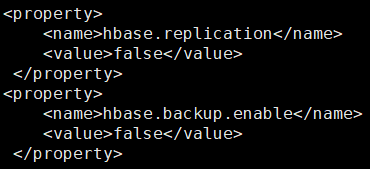

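Make sure that both `hbase.replication` and `hbase.backup.enable` are set to `false` in hbase-site.xml: change them to `false` if they are currently `true`, and add them if they are missing. The change only takes effect after the whole HBase cluster has been restarted.

- `hbase.replication`: `false`
- `hbase.backup.enable`: `false`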
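Step two can also be scripted. This is a minimal Python sketch using only the standard library's `xml.etree.ElementTree`, assuming the usual hbase-site.xml layout of `<property>` elements with `<name>` and `<value>` children; the function name `set_properties` is hypothetical. It overwrites existing values, appends missing properties, and returns the previous values so you know what to put back in step six. ElementTree drops comments and the XML declaration, so keep a copy of the original file.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET


def set_properties(xml_text: str, updates: dict[str, str]) -> tuple[str, dict[str, str | None]]:
    """Set <property> values in an hbase-site.xml document.

    Existing properties are overwritten and missing ones are appended.
    Returns the new XML text and the previous values (None = absent).
    """
    root = ET.fromstring(xml_text)
    previous: dict[str, str | None] = {name: None for name in updates}
    found: set[str] = set()
    for prop in root.findall("property"):
        name = (prop.findtext("name") or "").strip()
        if name in updates:
            value_el = prop.find("value")
            if value_el is None:
                value_el = ET.SubElement(prop, "value")
            previous[name] = value_el.text
            value_el.text = updates[name]
            found.add(name)
    for name, value in updates.items():
        if name not in found:
            # Property not present yet: append <property><name/><value/></property>.
            prop = ET.SubElement(root, "property")
            ET.SubElement(prop, "name").text = name
            ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode"), previous


original = """<configuration>
  <property><name>hbase.replication</name><value>true</value></property>
</configuration>"""

new_xml, old = set_properties(
    original, {"hbase.replication": "false", "hbase.backup.enable": "false"}
)
print(new_xml)
print(old)  # {'hbase.replication': 'true', 'hbase.backup.enable': None}
```

For step six, copying the saved original file back is the simplest way to undo the change, since it also removes any properties that were added here.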

3. Start HBase again.

4. Checking the directory again, oldWALs is now smaller.

5. After a while, oldWALs was cleaned out automatically.

6. Restore the original configuration and restart HBase once more.

7. The space has been freed.

Before the change:

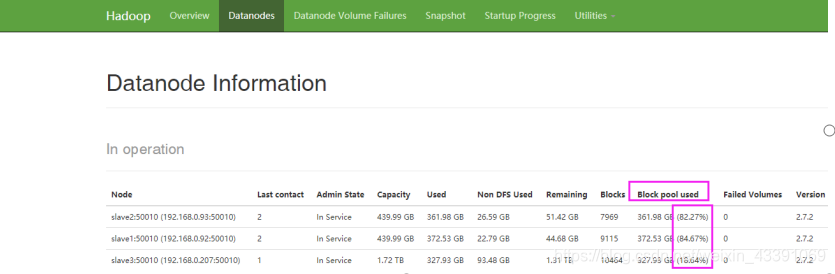

After the change:


【Cause】

Why does oldWALs build up?

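Once all the edits in an HLog file under /hbase/WALs have been flushed to store files and the HLog is no longer needed, it is moved to the {hbase.rootdir}/oldWALs directory. That directory is cleaned up periodically by a scheduled task (chore) running on the HMaster.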

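During this periodic cleanup, the HMaster first checks whether ZooKeeper still holds a corresponding replication entry under /hbase/replication/rs. If it does, the HLog is left in place; if not, the HLog is deleted. As a result, if you clear out oldWALs by hand without also deleting the corresponding znode data, the HMaster will still not clean up oldWALs automatically.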
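The replication check can be modelled in a few lines. This is a simplified Python sketch, not HBase's actual cleaner (which is Java code running inside the HMaster): it assumes the WAL names still referenced by replication queues under /hbase/replication/rs have already been collected into a set, and it treats replication being switched off as "this check protects nothing", which is the effect the workaround above relies on. The function name and file names are hypothetical.

```python
from __future__ import annotations


def deletable_wals(old_wals: list[str], queued: set[str],
                   replication_enabled: bool = True) -> list[str]:
    """Simplified model of the replication check on oldWALs.

    queued: names of WALs still referenced by replication queues
    (hypothetically collected from /hbase/replication/rs in ZooKeeper).
    """
    if not replication_enabled:
        # With replication switched off, this check protects nothing.
        return list(old_wals)
    # A WAL still queued for replication is kept; all others may be deleted.
    return [wal for wal in old_wals if wal not in queued]


old_wals = ["rs1.1001", "rs1.1002", "rs2.2001"]  # made-up file names
queued = {"rs1.1002", "rs2.2001"}  # e.g. queues for a peer that never catches up

print(deletable_wals(old_wals, queued))         # ['rs1.1001']
print(deletable_wals(old_wals, queued, False))  # ['rs1.1001', 'rs1.1002', 'rs2.2001']
```

As long as stale entries remain in `queued`, the matching files are never returned, which is why leftover znodes block the cleanup.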

Here is also an answer from another user online:

The folder gets cleaned regularly by a chore in the master. When a WAL file is not needed any more for recovery purposes (when HBase can guarantee it has flushed all the data in the WAL file), it is moved to the oldWALs folder for archival. The log stays there until all other references to the WAL file are finished. There are currently two services which may keep the files in the archive dir. First is a TTL process, which ensures that the WAL files are kept for at least 10 min. This is mainly for debugging. You can reduce this time by setting the hbase.master.logcleaner.ttl configuration property in the master. It is 600000 (ms) by default. The other one is replication. If you have replication set up, the replication processes will hang on to the WAL files until they are replicated. Even if you disabled the replication, the files are still referenced.
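The answer describes independent checks that can each keep a file in oldWALs; a file is removed only when every one of them allows it. This is a minimal Python sketch of that combination, assuming a TTL check based on `hbase.master.logcleaner.ttl` (600000 ms, i.e. 10 minutes, by default) plus a stand-in replication check. The `WalFile` type and the function names are hypothetical, not HBase APIs, and the timestamps are made up.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

DEFAULT_TTL_MS = 600_000  # default of hbase.master.logcleaner.ttl (10 minutes)


@dataclass
class WalFile:
    name: str
    mtime_ms: int  # last modification time, epoch milliseconds


def ttl_expired(wal: WalFile, now_ms: int, ttl_ms: int = DEFAULT_TTL_MS) -> bool:
    """TTL check: a file may be deleted only once it is older than the TTL."""
    return now_ms - wal.mtime_ms > ttl_ms


def to_delete(wals: list[WalFile], checks: list[Callable[[WalFile], bool]]) -> list[str]:
    """A file is deleted only if every check allows it."""
    return [w.name for w in wals if all(check(w) for check in checks)]


now = 1_700_000_000_000
wals = [
    WalFile("wal.1", now - 20 * 60 * 1000),  # 20 min old
    WalFile("wal.2", now - 5 * 60 * 1000),   # 5 min old, still within the TTL
    WalFile("wal.3", now - 30 * 60 * 1000),  # 30 min old, but still queued
]
replicating = {"wal.3"}
checks = [
    lambda w: ttl_expired(w, now),
    lambda w: w.name not in replicating,
]
print(to_delete(wals, checks))  # ['wal.1']
```

Lowering the TTL only helps files that are held back by the TTL alone; a file still referenced by replication stays no matter how old it is.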