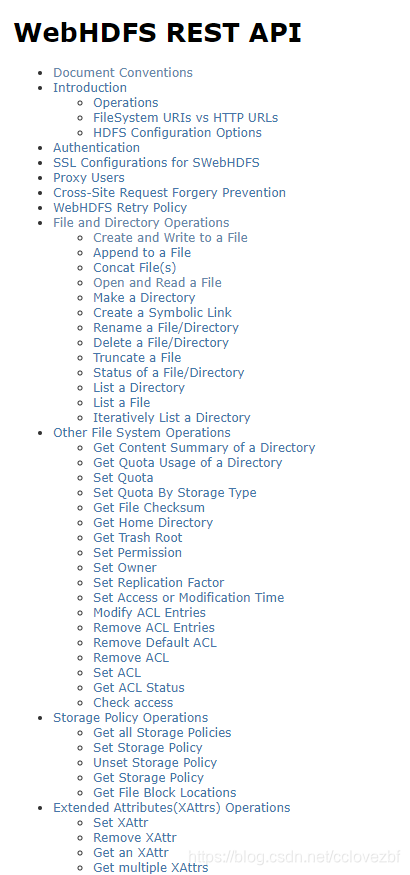

The official website is always the best place to learn:

https://hadoop.apache.org/docs/r3.2.2/hadoop-yarn/hadoop-yarn-site/FairScheduler.html

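Why use the REST API at all? Before it, how did we work with files on HDFS?

Through the filesystem address (`fs.defaultFS`) and the Hadoop `FileSystem` API. That approach needs the Hadoop client libraries and configuration on the caller's side.

WebHDFS is clearly easier to use. It is plain HTTP, so anything that can send an HTTP request (curl, an HTTP library in Java) can work with HDFS.

Let's dive straight in. There are two ways to do it: a shell script and Java code.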

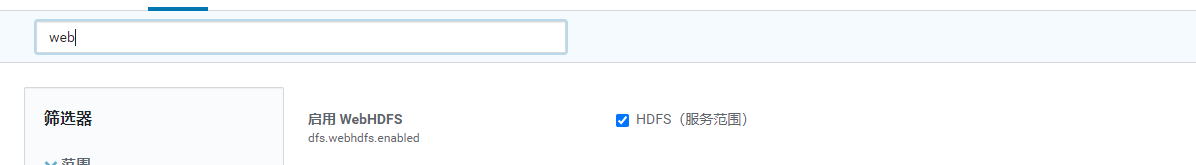

WebHDFS is already enabled:

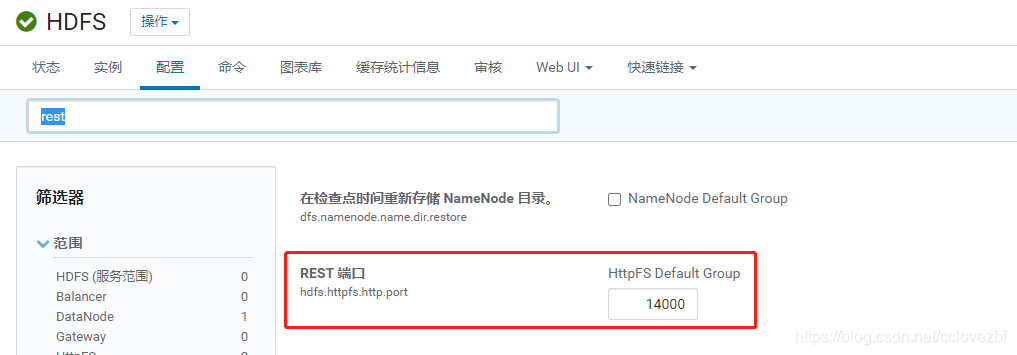

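The REST port is 14000. This is the default port of the HttpFS service, which serves the same `/webhdfs/v1` REST API as WebHDFS.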

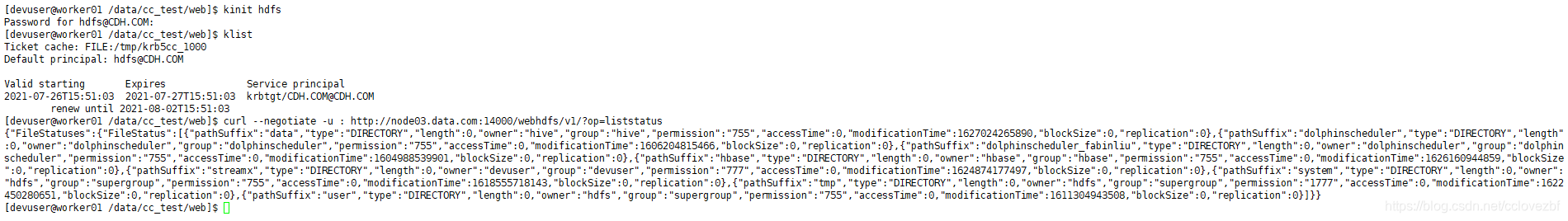
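Every WebHDFS/HttpFS call has the same shape: `http://<host>:<port>/webhdfs/v1/<path>?op=<OPERATION>`, plus optional query parameters. Each operation is bound to one HTTP method.

Below is a minimal sketch that builds such URLs. Some assumptions:
- It needs Java 10+ for `URLEncoder.encode(String, Charset)`.
- `WebHdfsUrl` and the chosen subset of operations are illustrative, not part of any library.
- HDFS paths are assumed to contain no characters that need escaping.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: builds WebHDFS/HttpFS REST URLs of the form
// http://<host>:<port>/webhdfs/v1/<path>?op=<OP>&<param>=<value>...
final class WebHdfsUrl {

    // A few WebHDFS operations and the HTTP method each one uses
    enum Op {
        LISTSTATUS("GET"), GETFILESTATUS("GET"), OPEN("GET"),
        MKDIRS("PUT"), RENAME("PUT"), DELETE("DELETE");

        final String httpMethod;

        Op(String httpMethod) {
            this.httpMethod = httpMethod;
        }
    }

    static String build(String host, int port, String hdfsPath, Op op, Map<String, String> params) {
        // HDFS paths are absolute: ensure exactly one leading slash
        String path = hdfsPath.startsWith("/") ? hdfsPath : "/" + hdfsPath;
        StringBuilder url = new StringBuilder()
                .append("http://").append(host).append(':').append(port)
                .append("/webhdfs/v1").append(path)
                .append("?op=").append(op.name());
        // Extra query parameters are URL-encoded; the path itself is not
        for (Map.Entry<String, String> e : params.entrySet()) {
            url.append('&').append(e.getKey()).append('=')
               .append(URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8));
        }
        return url.toString();
    }

    public static void main(String[] args) {
        System.out.println(build("node03.data.com", 14000, "/", Op.LISTSTATUS, Map.of()));

        Map<String, String> params = new LinkedHashMap<>();
        params.put("permission", "755");
        System.out.println(Op.MKDIRS.httpMethod + " "
                + build("node03.data.com", 14000, "/tmp/cc_test", Op.MKDIRS, params));
    }
}
```

For a write operation such as MKDIRS, the curl call from the shell version becomes `curl --negotiate -u : -X PUT "<url>"`. Quoting the URL keeps the shell from interpreting `?` and `&`.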

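The cluster is CDH, with Kerberos authentication enabled.

Shell version: first get a Kerberos ticket with `kinit`, then call the REST API using curl's SPNEGO support. In `--negotiate -u :`, the empty user name makes curl take the identity from the Kerberos ticket cache.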

```shell
[devuser@worker01 /data/cc_test/web]$ kinit hdfs
Password for hdfs@CDH.COM:
[devuser@worker01 /data/cc_test/web]$ klist
Ticket cache: FILE:/tmp/krb5cc_1000
Default principal: hdfs@CDH.COM

Valid starting       Expires              Service principal
2021-07-26T15:51:03  2021-07-27T15:51:03  krbtgt/CDH.COM@CDH.COM
        renew until 2021-08-02T15:51:03
[devuser@worker01 /data/cc_test/web]$ curl --negotiate -u : http://node03.data.com:14000/webhdfs/v1/?op=liststatus
{"FileStatuses":{"FileStatus":[{"pathSuffix":"data","type":"DIRECTORY","length":0,"owner":"hive","group":"hive","permission":"755","accessTime":0,"modificationTime":1627024265890,"blockSize":0,"replication":0},{"pathSuffix":"dolphinscheduler","type":"DIRECTORY","length":0,"owner":"dolphinscheduler","group":"dolphinscheduler","permission":"755","accessTime":0,"modificationTime":1606204815466,"blockSize":0,"replication":0},{"pathSuffix":"dolphinscheduler_xxx","type":"DIRECTORY","length":0,"owner":"dolphinscheduler","group":"dolphinscheduler","permission":"755","accessTime":0,"modificationTime":1604988539901,"blockSize":0,"replication":0},{"pathSuffix":"hbase","type":"DIRECTORY","length":0,"owner":"hbase","group":"hbase","permission":"755","accessTime":0,"modificationTime":1626160944859,"blockSize":0,"replication":0},{"pathSuffix":"streamx","type":"DIRECTORY","length":0,"owner":"devuser","group":"devuser","permission":"777","accessTime":0,"modificationTime":1624874177497,"blockSize":0,"replication":0},{"pathSuffix":"system","type":"DIRECTORY","length":0,"owner":"hdfs","group":"supergroup","permission":"755","accessTime":0,"modificationTime":1618555718143,"blockSize":0,"replication":0},{"pathSuffix":"tmp","type":"DIRECTORY","length":0,"owner":"hdfs","group":"supergroup","permission":"1777","accessTime":0,"modificationTime":1622450280651,"blockSize":0,"replication":0},{"pathSuffix":"user","type":"DIRECTORY","length":0,"owner":"hdfs","group":"supergroup","permission":"755","accessTime":0,"modificationTime":1611304943508,"blockSize":0,"replication":0}]}}
[devuser@worker01 /data/cc_test/web]$
```

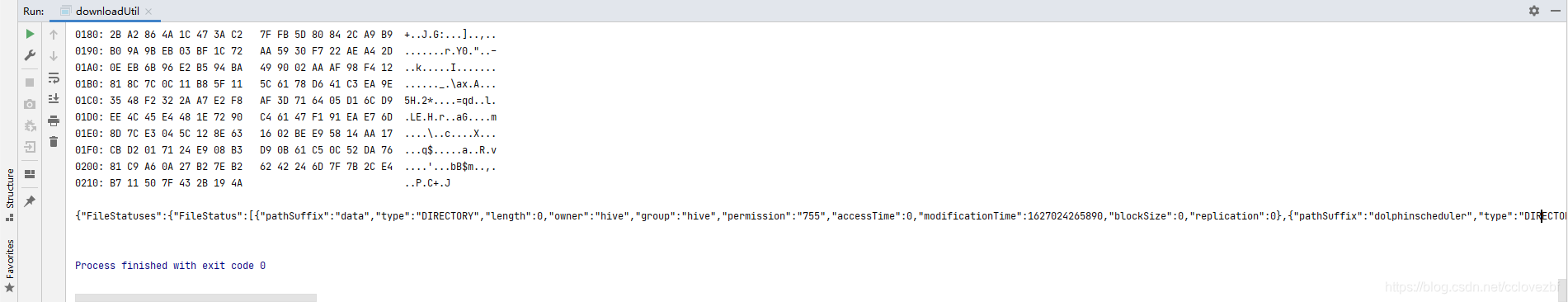

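Java version. It uses hutool's `HttpUtil` for the HTTP call and Hadoop's `UserGroupInformation` for the Kerberos login.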

```java
import cn.hutool.core.lang.Console;
import cn.hutool.http.HttpUtil;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.security.UserGroupInformation;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.io.IOException;
import java.security.PrivilegedExceptionAction;
import java.util.Properties;

public class DownloadUtil {

    private static final Logger logger = LoggerFactory.getLogger(DownloadUtil.class);

    // HttpFS endpoint (port 14000); op=liststatus lists the HDFS root directory "/"
    private static final String LIST_STATUS_URL = "http://node03.data.com:14000/webhdfs/v1/?op=liststatus";

    public static void main(String[] args) throws Exception {
        // On Linux the principal and keytab are read from JVM system properties,
        // e.g. -Dhive_principal=... -Dhive_keytable=...
        // (passing null here throws a NullPointerException outside Windows)
        kerberosAuth(System.getProperties());

        // Send the request as the Kerberos user that just logged in, so the SPNEGO
        // handshake of the JDK's HttpURLConnection (which hutool's HttpUtil is built on)
        // should use the keytab credentials rather than whatever is in the ticket cache
        String result = UserGroupInformation.getLoginUser()
                .doAs((PrivilegedExceptionAction<String>) () -> HttpUtil.get(LIST_STATUS_URL));
        Console.log(result);
    }

    private static void kerberosAuth(Properties prop) {
        String principal;
        String keytab;
        if (System.getProperty("os.name").toLowerCase().contains("windows")) {
            // Local development on Windows: krb5.ini and keytab copied from the cluster
            System.setProperty("java.security.krb5.conf", "C:\\Users\\coder\\Desktop\\kerberos\\krb5.ini");
            System.setProperty("sun.security.krb5.debug", "true");
            principal = "hive@CDH.COM";
            keytab = "C:\\Users\\coder\\Desktop\\kerberos\\hive.keytab";
        } else {
            principal = prop.getProperty("hive_principal");
            keytab = prop.getProperty("hive_keytable");
        }
        logger.info("Kerberos login, principal={}, keytab={}", principal, keytab);
        try {
            // Set explicitly in case no core-site.xml on the classpath enables Kerberos:
            // with simple authentication, loginUserFromKeytab() silently does nothing
            Configuration configuration = new Configuration();
            configuration.set("hadoop.security.authentication", "kerberos");
            UserGroupInformation.setConfiguration(configuration);
            UserGroupInformation.loginUserFromKeytab(principal, keytab);
        } catch (IOException e) {
            logger.error("Kerberos login failed: {}", e.getMessage());
        }
    }
}
```
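On Linux, the Kerberos login method expects a `Properties` object holding `hive_principal` and `hive_keytable`, but the article does not show where it comes from. Below is a minimal sketch, assuming the two keys live in a properties file of your own. It loads them and fails fast when one is missing, instead of passing `null` to `loginUserFromKeytab`. The class name `KerberosProps` is hypothetical.

```java
import java.io.IOException;
import java.io.Reader;
import java.util.Properties;

// Hypothetical helper: loads hive_principal / hive_keytable and validates them
final class KerberosProps {

    private static final String[] REQUIRED = {"hive_principal", "hive_keytable"};

    static Properties load(Reader reader) throws IOException {
        Properties prop = new Properties();
        prop.load(reader);
        for (String key : REQUIRED) {
            String value = prop.getProperty(key);
            // Fail fast instead of handing null to UserGroupInformation.loginUserFromKeytab
            if (value == null || value.trim().isEmpty()) {
                throw new IllegalArgumentException("missing property: " + key);
            }
        }
        return prop;
    }
}
```

On the server, the reader could come from `Files.newBufferedReader(Paths.get(...))` pointing at that file. In a `.properties` file the backslash is an escape character, so Windows paths must be written with `\\`.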

Output printed by the Java version:

```json
{
  "FileStatuses": {
    "FileStatus": [
      {
        "pathSuffix": "data",
        "type": "DIRECTORY",
        "length": 0,
        "owner": "hive",
        "group": "hive",
        "permission": "755",
        "accessTime": 0,
        "modificationTime": 1627024265890,
        "blockSize": 0,
        "replication": 0
      },
      {
        "pathSuffix": "dolphinscheduler",
        "type": "DIRECTORY",
        "length": 0,
        "owner": "dolphinscheduler",
        "group": "dolphinscheduler",
        "permission": "755",
        "accessTime": 0,
        "modificationTime": 1606204815466,
        "blockSize": 0,
        "replication": 0
      },
      {
        "pathSuffix": "dolphinscheduler_xxx",
        "type": "DIRECTORY",
        "length": 0,
        "owner": "dolphinscheduler",
        "group": "dolphinscheduler",
        "permission": "755",
        "accessTime": 0,
        "modificationTime": 1604988539901,
        "blockSize": 0,
        "replication": 0
      },
      {
        "pathSuffix": "hbase",
        "type": "DIRECTORY",
        "length": 0,
        "owner": "hbase",
        "group": "hbase",
        "permission": "755",
        "accessTime": 0,
        "modificationTime": 1626160944859,
        "blockSize": 0,
        "replication": 0
      },
      {
        "pathSuffix": "streamx",
        "type": "DIRECTORY",
        "length": 0,
        "owner": "devuser",
        "group": "devuser",
        "permission": "777",
        "accessTime": 0,
        "modificationTime": 1624874177497,
        "blockSize": 0,
        "replication": 0
      },
      {
        "pathSuffix": "system",
        "type": "DIRECTORY",
        "length": 0,
        "owner": "hdfs",
        "group": "supergroup",
        "permission": "755",
        "accessTime": 0,
        "modificationTime": 1618555718143,
        "blockSize": 0,
        "replication": 0
      },
      {
        "pathSuffix": "tmp",
        "type": "DIRECTORY",
        "length": 0,
        "owner": "hdfs",
        "group": "supergroup",
        "permission": "1777",
        "accessTime": 0,
        "modificationTime": 1622450280651,
        "blockSize": 0,
        "replication": 0
      },
      {
        "pathSuffix": "user",
        "type": "DIRECTORY",
        "length": 0,
        "owner": "hdfs",
        "group": "supergroup",
        "permission": "755",
        "accessTime": 0,
        "modificationTime": 1611304943508,
        "blockSize": 0,
        "replication": 0
      }
    ]
  }
}
```
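The response is plain JSON with one `FileStatus` object per child of `/`. `modificationTime` is in milliseconds since the Unix epoch, and `permission` is an octal string (`1777` on `/tmp` includes the sticky bit).

Below is a minimal sketch that turns such a response into `ls`-like lines without a JSON library. It is regex-based and assumes the flat, unnested `FileStatus` objects shown above, so real code is better served by a JSON library such as hutool's `JSONUtil` or Jackson. The class name `ListStatusSummary` is hypothetical.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: summarizes a LISTSTATUS response as ls-like lines.
// Regex-based, so it only handles flat FileStatus objects like the ones above.
final class ListStatusSummary {

    // One {...} block containing no nested braces, i.e. a single FileStatus
    private static final Pattern ENTRY = Pattern.compile("\\{[^{}]*\\}");
    private static final DateTimeFormatter FMT = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // Value of "name" as a string: either a quoted string or a bare integer
    static String field(String entry, String name) {
        Matcher m = Pattern.compile("\"" + Pattern.quote(name) + "\"\\s*:\\s*(?:\"([^\"]*)\"|(-?\\d+))")
                .matcher(entry);
        if (!m.find()) {
            return null;
        }
        return m.group(1) != null ? m.group(1) : m.group(2);
    }

    // One line per entry: type permission owner:group mtime name
    static List<String> summarize(String json, ZoneId zone) {
        List<String> lines = new ArrayList<>();
        Matcher m = ENTRY.matcher(json);
        while (m.find()) {
            String entry = m.group();
            String name = field(entry, "pathSuffix");
            String mtime = field(entry, "modificationTime");
            if (name == null || mtime == null) {
                continue; // not a FileStatus object
            }
            // modificationTime is milliseconds since the Unix epoch
            String when = Instant.ofEpochMilli(Long.parseLong(mtime)).atZone(zone).format(FMT);
            lines.add(String.format("%s %s %s:%s %s %s",
                    field(entry, "type"), field(entry, "permission"),
                    field(entry, "owner"), field(entry, "group"), when, name));
        }
        return lines;
    }

    public static void main(String[] args) {
        String json = "{\"FileStatuses\":{\"FileStatus\":[{\"pathSuffix\":\"tmp\",\"type\":\"DIRECTORY\","
                + "\"owner\":\"hdfs\",\"group\":\"supergroup\",\"permission\":\"1777\","
                + "\"modificationTime\":1622450280651}]}}";
        summarize(json, ZoneId.of("Asia/Shanghai")).forEach(System.out::println);
    }
}
```

With the `tmp` entry from the output above and the `Asia/Shanghai` zone, `main` prints `DIRECTORY 1777 hdfs:supergroup 2021-05-31 16:38:00 tmp`.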