Setting Up a Hadoop Cluster on CentOS 7

Node plan

Hostname: master, slave1, slave2

IP address: 192.168.81.132, 192.168.81.133, 192.168.81.137

Role: NameNode, DataNode1, DataNode2

- Disable the firewall and SELinux

Note: the firewall and SELinux must be disabled on every node.

[root@localhost ~]# systemctl stop firewalld

[root@localhost ~]# setenforce 0
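Both commands above only last until the next reboot. A minimal sketch of making the SELinux change persistent, assuming the stock CentOS 7 config path `/etc/selinux/config`; the helper name `selinux_disable_in` is hypothetical and not part of the original steps:

```shell
# Hypothetical helper (not from the original post): set SELINUX=disabled in a
# config file such as /etc/selinux/config.
selinux_disable_in() {
    # Only the line beginning with "SELINUX=" is rewritten; SELINUXTYPE= and
    # comment lines stay untouched. (-i is the GNU sed in-place flag.)
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}
```

On each node one would then run `systemctl disable firewalld` and `selinux_disable_in /etc/selinux/config` as root. The SELinux file change takes effect at the next reboot, which is why `setenforce 0` is still needed for the current session.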

- Add host name mappings

Note: the same mappings must be configured on every node.

[root@localhost ~]# vim /etc/hosts

192.168.81.132 master

192.168.81.133 slave1

192.168.81.137 slave2

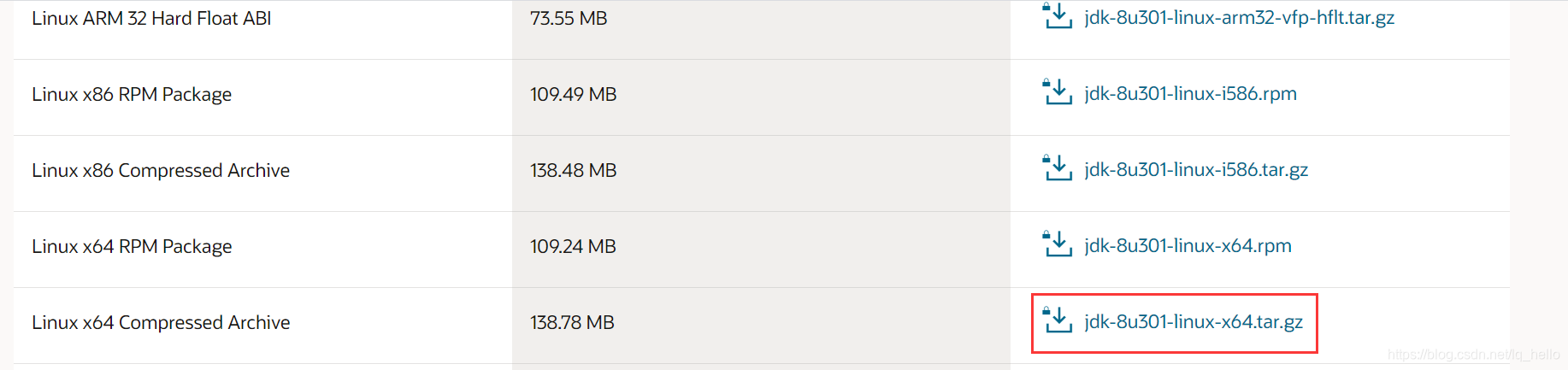
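Since the same three entries have to be added on every node, the edit can also be scripted. The following is only a sketch: the helper `add_host` and the `HOSTS_FILE` variable are hypothetical names, and the script writes to a scratch file unless `HOSTS_FILE=/etc/hosts` is set explicitly.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already there.
add_host() {
    file=$1 ip=$2 name=$3
    # -w matches NAME as a whole word (so "slave1" does not match "slave10"),
    # -F treats it as a fixed string rather than a regular expression.
    if ! grep -qwF "$name" "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Defaults to a scratch file so the sketch can be tried safely.
HOSTS_FILE=${HOSTS_FILE:-./hosts.sketch}
touch "$HOSTS_FILE"

add_host "$HOSTS_FILE" 192.168.81.132 master
add_host "$HOSTS_FILE" 192.168.81.133 slave1
add_host "$HOSTS_FILE" 192.168.81.137 slave2
```

Running the script a second time adds nothing, so it is safe to repeat on a node that was already configured.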

- Install and configure the JDK

Note: perform the same steps on every node.

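Official download page: https://www.oracle.com/java/technologies/javase/javase-jdk8-downloads.html (this link is the JDK 8 page, while the commands below use a JDK 16.0.2 archive; Hadoop 2.7.x documents support for Java 7 and 8, so JDK 8 is the safer choice)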

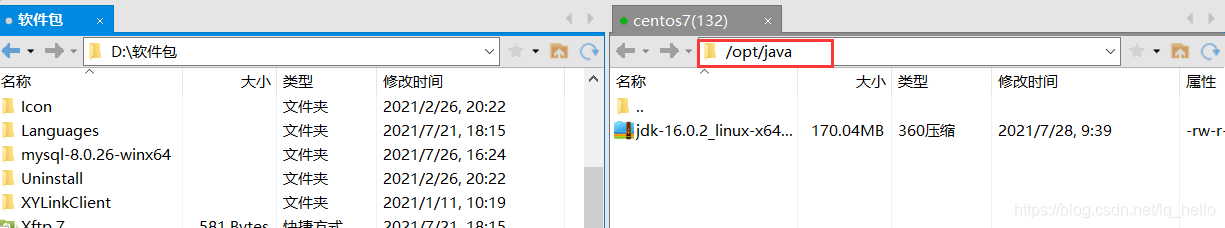

[root@localhost ~]# mkdir /opt/java    # create a directory for the JDK

Upload the downloaded JDK archive to /opt/java, then extract it there:

[root@localhost ~]# cd /opt/java/

[root@localhost java]# tar zxf jdk-16.0.2_linux-x64_bin.tar.gz

[root@localhost java]# ll

total 174120

drwxr-xr-x. 9 root root 107 Jul 28 09:40 jdk-16.0.2

-rw-r--r--. 1 root root 178295771 Jul 28 09:39 jdk-16.0.2_linux-x64_bin.tar.gz

- Configure the JDK environment variables and apply them immediately

[root@localhost java]# vim /etc/profile

export JAVA_HOME=/opt/java/jdk-16.0.2

# JDK 9 and later ship no separate jre/ directory, so JRE_HOME is omitted

export CLASSPATH=.:${JAVA_HOME}/lib

export PATH=$PATH:${JAVA_HOME}/bin

[root@localhost java]# source /etc/profile

[root@localhost java]# java -version

java version "16.0.2" 2021-07-20

Java(TM) SE Runtime Environment (build 16.0.2+7-67)

Java HotSpot(TM) 64-Bit Server VM (build 16.0.2+7-67, mixed mode, sharing)

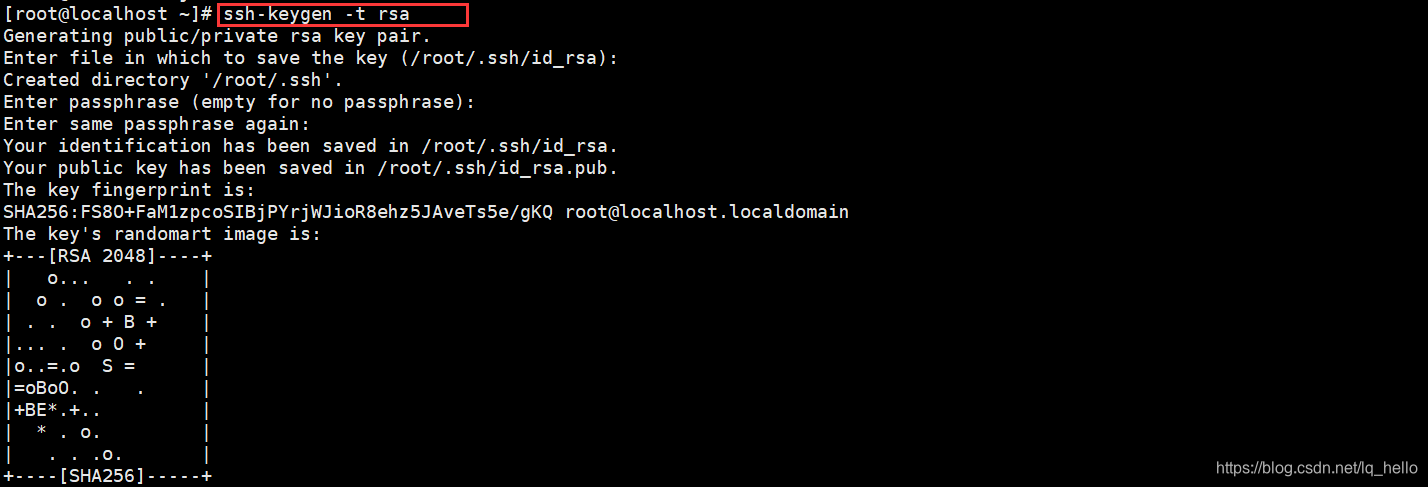

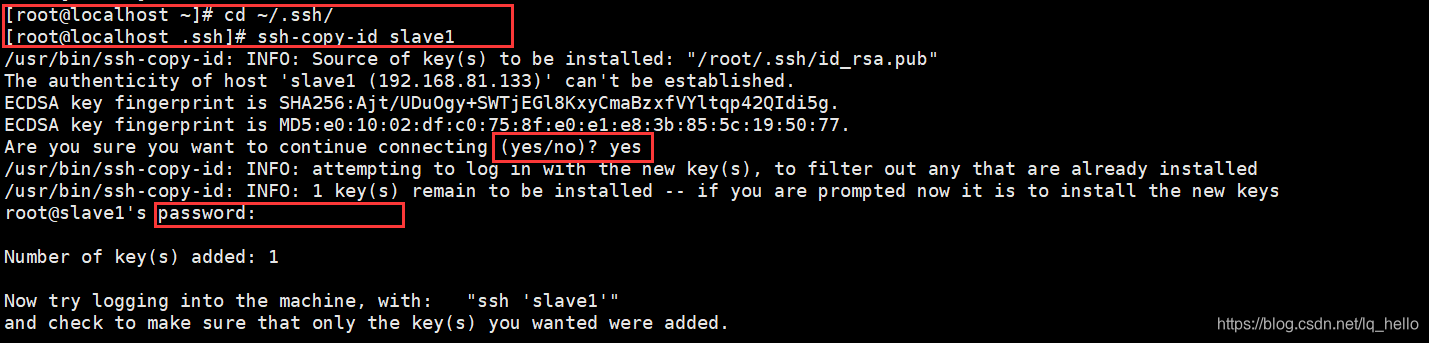

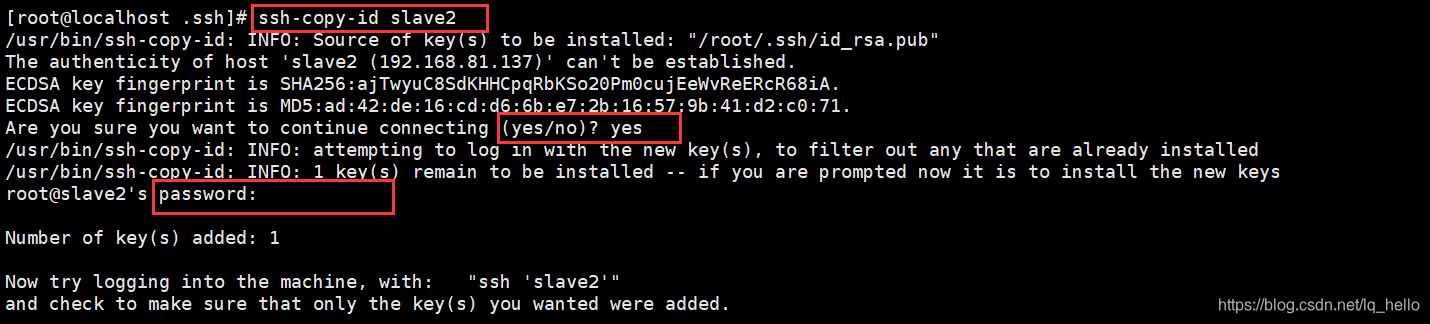

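- Configure passwordless SSH login

Why passwordless login: Hadoop nodes communicate with each other frequently. To avoid typing a password for every connection, configure passwordless SSH login between all nodes.

Note: generate a key pair on every node and install its public key on all nodes.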
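The key-generation commands themselves are not shown in the original, so the following is only a sketch of the usual OpenSSH approach, assuming the `root` account and the host names from /etc/hosts. `RUN` and `distribute_key` are hypothetical names; with the default `RUN=echo` the script only prints the commands.

```shell
# RUN=echo (the default) prints each command instead of executing it.
# Run the script with RUN= (empty) to execute for real.
RUN=${RUN-echo}

# Hypothetical helper: install this node's public key on each listed host.
distribute_key() {
    for host in "$@"; do
        $RUN ssh-copy-id "root@$host"
    done
}

# Generate an RSA key pair with an empty passphrase unless one already exists.
[ -f "$HOME/.ssh/id_rsa" ] || $RUN ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"

# Include the local node itself: the Hadoop start scripts also SSH to master.
distribute_key master slave1 slave2
```

`ssh-copy-id` asks for the remote password once per host; after that, `ssh <host>` should no longer prompt.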

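- Test

The first connection may ask you to confirm the host key; later connections do not. If a password prompt still appears, as in the slave1 session below, the public key has not yet been installed on that host.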

[root@master ~]# ssh slave1

root@slave1's password:

Last login: Tue Jul 27 11:15:21 2021 from slave1

[root@master ~]# exit

logout

Connection to slave1 closed.

[root@master ~]# ssh slave2

Last login: Tue Jul 27 10:14:15 2021 from 192.168.81.1

[root@localhost ~]# exit

logout

Connection to slave2 closed.

Note: repeat all of the steps above on each of the three machines.

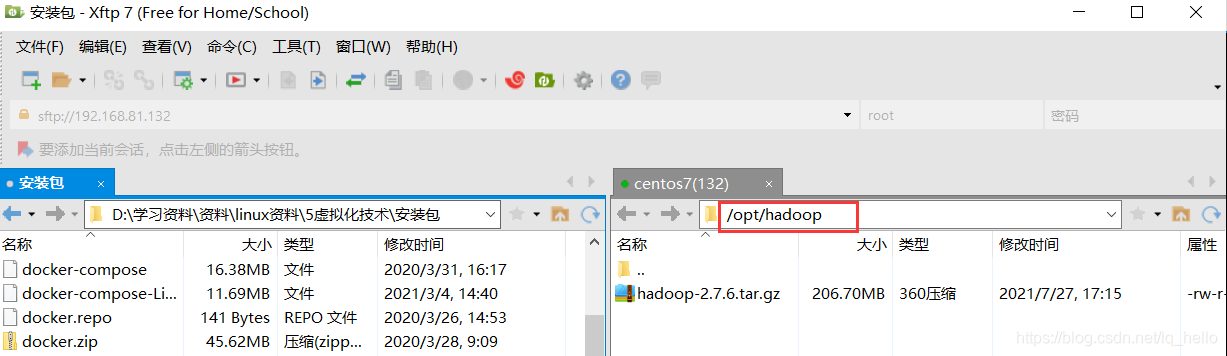

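- Install Hadoop

More Hadoop releases are available at https://archive.apache.org/dist/hadoop/common/. The site can be slow; if the page does not load, refresh a few times.

[root@master ~]# mkdir /opt/hadoop    # create a directory for the Hadoop package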
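The download command is not shown in the original. As a sketch, the archive URL for a given release can be built from the directory layout of the archive site. `hadoop_url` and `RUN` are hypothetical names, and with the default `RUN=echo` the `wget` command is only printed:

```shell
# Hypothetical helper: build the tarball URL for a Hadoop release, following the
# <base>/hadoop-<version>/hadoop-<version>.tar.gz layout of archive.apache.org.
hadoop_url() {
    echo "https://archive.apache.org/dist/hadoop/common/hadoop-$1/hadoop-$1.tar.gz"
}

# RUN=echo (the default) prints the command; run with RUN= (empty) to download.
RUN=${RUN-echo}

# -P sets the directory the file is saved into.
$RUN wget -P /opt/hadoop "$(hadoop_url 2.7.6)"
```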

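- Extract the package and configure environment variables

Place hadoop-2.7.6.tar.gz in /opt/hadoop first, then extract it from that directory.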

[root@localhost hadoop]# tar zxf hadoop-2.7.6.tar.gz

[root@localhost hadoop]# vim /etc/profile

export HADOOP_HOME=/opt/hadoop/hadoop-2.7.6

export PATH=$PATH:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin

[root@localhost hadoop]# source /etc/profile

[root@localhost hadoop]# hadoop version

Hadoop 2.7.6

Subversion https://shv@git-wip-us.apache.org/repos/asf/hadoop.git -r 085099c66cf28be31604560c376fa282e69282b8

Compiled by kshvachk on 2018-04-18T01:33Z

Compiled with protoc 2.5.0

From source with checksum 71e2695531cb3360ab74598755d036

This command was run using /opt/hadoop/hadoop-2.7.6/share/hadoop/common/hadoop-common-2.7.6.jar

- Edit the configuration files

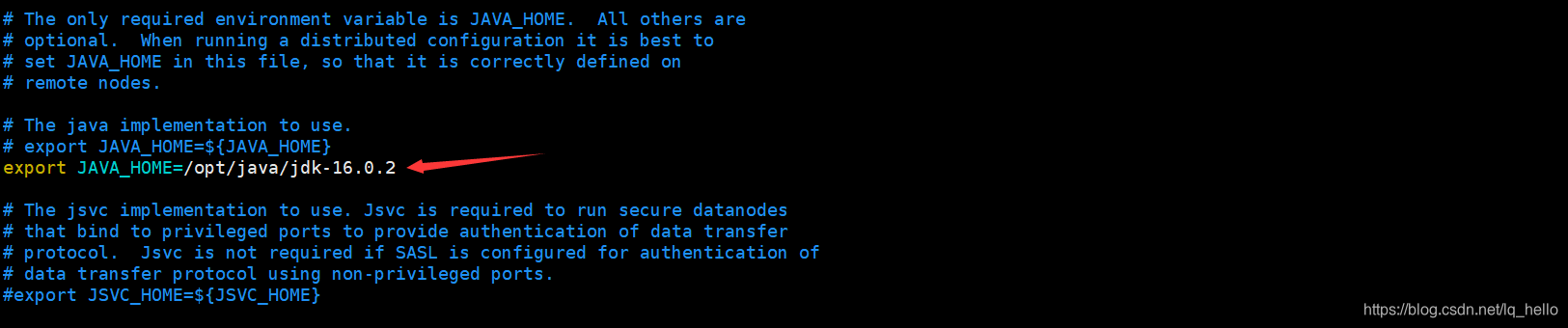

Configure hadoop-env.sh

[root@localhost ~]# cd /opt/hadoop/hadoop-2.7.6/etc/hadoop/

[root@localhost hadoop]# vim hadoop-env.sh
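The original does not show what is changed in hadoop-env.sh. The usual edit is to set `JAVA_HOME` to an explicit path, because daemons started over SSH may not see the value exported in /etc/profile. A sketch under that assumption, with a hypothetical helper name `set_java_home`:

```shell
# Hypothetical helper: rewrite the "export JAVA_HOME=..." line of a
# hadoop-env.sh file so it points at an explicit JDK directory.
set_java_home() {
    file=$1 jdk=$2
    # "|" is the sed delimiter here because the JDK path contains "/".
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$file"
}
```

With the paths used in this guide, the call would be `set_java_home /opt/hadoop/hadoop-2.7.6/etc/hadoop/hadoop-env.sh /opt/java/jdk-16.0.2`.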

Configure hadoop-env.cmd (this is the Windows counterpart of hadoop-env.sh, so the step can be skipped on CentOS)

[root@localhost hadoop]# vim hadoop-env.cmd

Configure core-site.xml

[root@localhost hadoop]# vim core-site.xml

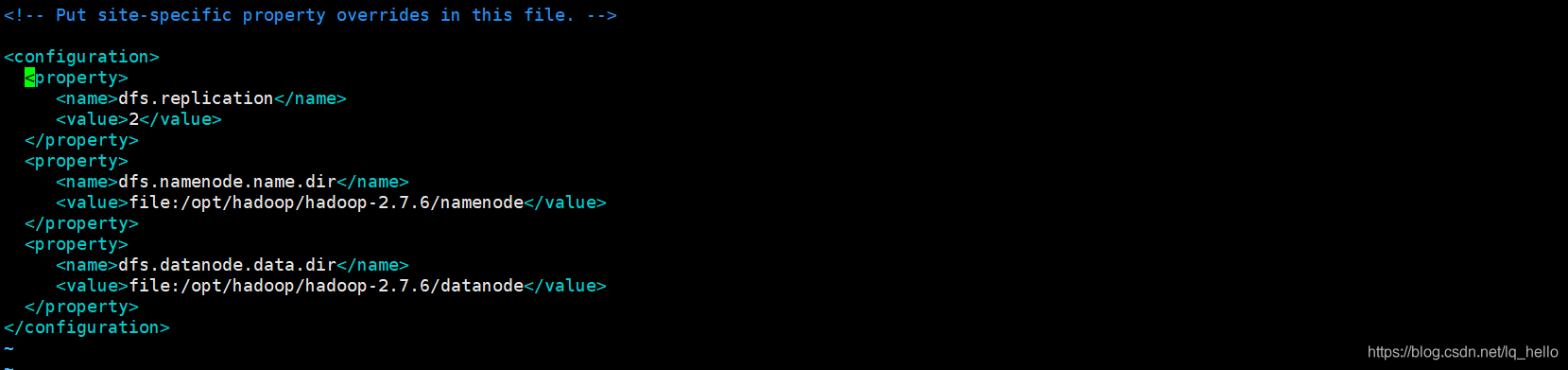
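The actual settings appear only in the screenshot. A sketch that is consistent with the format log later in this guide (storage directory `/opt/hadoop/hadoop-2.7.6/tmp/dfs/name`) could look as follows. Port 9000 and the `CONF_DIR` variable are assumptions, and the file is written to a scratch directory for review rather than directly into etc/hadoop.

```shell
# CONF_DIR defaults to a scratch directory; set it to
# /opt/hadoop/hadoop-2.7.6/etc/hadoop to write the real file.
CONF_DIR=${CONF_DIR:-./hadoop-conf-sketch}
mkdir -p "$CONF_DIR"

cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Default file system: the NameNode on master. Port 9000 is an assumption. -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <!-- Base directory for HDFS data; matches the path shown in the format log. -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/hadoop/hadoop-2.7.6/tmp</value>
  </property>
</configuration>
EOF
```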

Configure hdfs-site.xml

[root@localhost hadoop]# vim hdfs-site.xml

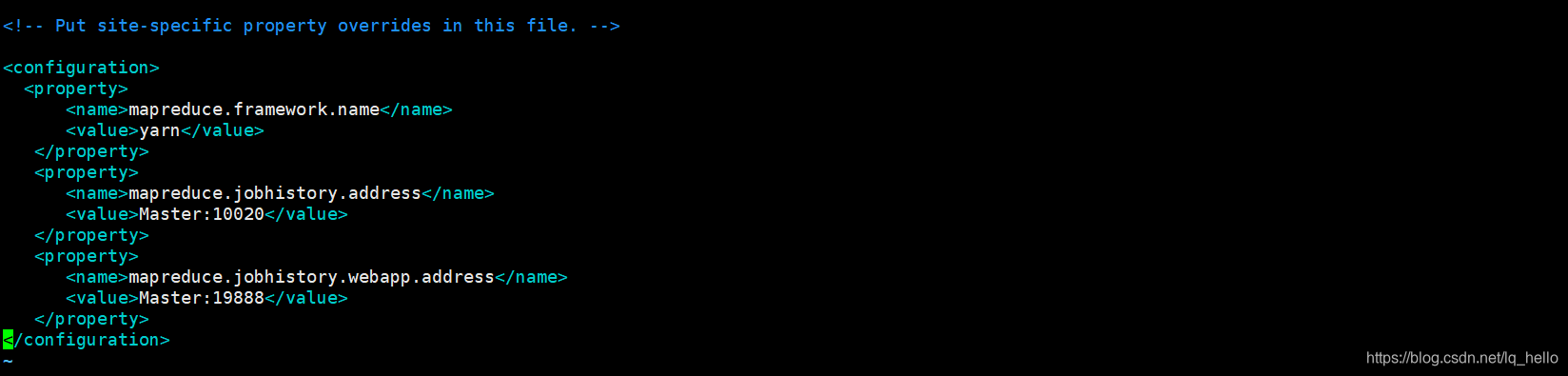
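Again the settings are only visible in the screenshot. The logs later in this guide report `defaultReplication = 2` and a secondary NameNode started on slave1, so a sketch matching those two facts might be the following. Port 50090 is the Hadoop 2.x default, and `CONF_DIR` is a hypothetical scratch location.

```shell
# CONF_DIR defaults to a scratch directory; set it to
# /opt/hadoop/hadoop-2.7.6/etc/hadoop to write the real file.
CONF_DIR=${CONF_DIR:-./hadoop-conf-sketch}
mkdir -p "$CONF_DIR"

cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Two DataNodes, so keep two copies of each block. -->
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <!-- Run the secondary NameNode on slave1 (50090 is the 2.x default port). -->
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>slave1:50090</value>
  </property>
</configuration>
EOF
```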

Configure mapred-site.xml

[root@localhost hadoop]# mv mapred-site.xml.template mapred-site.xml

[root@localhost hadoop]# vim mapred-site.xml
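The contents of mapred-site.xml are not shown in the original. Since YARN daemons are started later, the minimal setting is most likely the one that runs MapReduce on YARN. This is a sketch under that assumption, written to a hypothetical scratch directory `CONF_DIR`:

```shell
# CONF_DIR defaults to a scratch directory; set it to
# /opt/hadoop/hadoop-2.7.6/etc/hadoop to write the real file.
CONF_DIR=${CONF_DIR:-./hadoop-conf-sketch}
mkdir -p "$CONF_DIR"

cat > "$CONF_DIR/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Submit MapReduce jobs to YARN instead of the local runner. -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
```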

Configure yarn-site.xml

[root@localhost hadoop]# vim yarn-site.xml
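The yarn-site.xml contents are also missing from the original. The startup log shows the ResourceManager running on master and NodeManagers on the slaves, so a plausible minimal sketch is the following. The property values are assumptions consistent with that log, and `CONF_DIR` is a hypothetical scratch location.

```shell
# CONF_DIR defaults to a scratch directory; set it to
# /opt/hadoop/hadoop-2.7.6/etc/hadoop to write the real file.
CONF_DIR=${CONF_DIR:-./hadoop-conf-sketch}
mkdir -p "$CONF_DIR"

cat > "$CONF_DIR/yarn-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- NodeManagers on the slaves find the ResourceManager on master. -->
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>master</value>
  </property>
  <!-- Shuffle service that MapReduce jobs need on every NodeManager. -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
EOF
```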

Edit the slaves file

Delete the default localhost entry, then add the host names of the worker nodes.

[root@localhost hadoop]# vim slaves

slave1

slave2
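The slaves need the same Hadoop directory and configuration as master. If Hadoop was only unpacked and configured on master, one way to distribute it is a copy loop over the hosts in the slaves file. This is a sketch, not a step from the original: `sync_hadoop` and `RUN` are hypothetical names, and with the default `RUN=echo` the commands are only printed.

```shell
# RUN=echo (the default) prints each command; run with RUN= (empty) to execute.
RUN=${RUN-echo}

# Hypothetical helper: copy the whole /opt/hadoop tree to each listed host.
sync_hadoop() {
    for host in "$@"; do
        # -r copies the directory recursively; it ends up as /opt/hadoop remotely.
        $RUN scp -r /opt/hadoop "root@$host:/opt/"
    done
}

sync_hadoop slave1 slave2
```

The `HADOOP_HOME` and `PATH` lines in /etc/profile still have to be added on each slave as well.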

Format the NameNode and start the services

[root@localhost hadoop-2.7.6]# bin/hdfs namenode -format    # format the NameNode

STARTUP_MSG: java = 16.0.2

************************************************************/

21/07/28 16:30:33 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

21/07/28 16:30:33 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-b62b585c-e22e-41e2-b204-04d8e624ed13

21/07/28 16:30:34 INFO namenode.FSNamesystem: No KeyProvider found.

21/07/28 16:30:34 INFO namenode.FSNamesystem: fsLock is fair: true

21/07/28 16:30:34 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

21/07/28 16:30:34 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

21/07/28 16:30:34 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

21/07/28 16:30:34 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

21/07/28 16:30:34 INFO blockmanagement.BlockManager: The block deletion will start around 2021 Jul 28 16:30:34

21/07/28 16:30:34 INFO util.GSet: Computing capacity for map BlocksMap

21/07/28 16:30:34 INFO util.GSet: VM type = 64-bit

21/07/28 16:30:34 INFO util.GSet: 2.0% max memory 1000 MB = 20 MB

21/07/28 16:30:34 INFO util.GSet: capacity = 2^21 = 2097152 entries

21/07/28 16:30:34 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

21/07/28 16:30:34 INFO blockmanagement.BlockManager: defaultReplication = 2

21/07/28 16:30:34 INFO blockmanagement.BlockManager: maxReplication = 512

21/07/28 16:30:34 INFO blockmanagement.BlockManager: minReplication = 1

21/07/28 16:30:34 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

21/07/28 16:30:34 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

21/07/28 16:30:34 INFO blockmanagement.BlockManager: encryptDataTransfer = false

21/07/28 16:30:34 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

21/07/28 16:30:34 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

21/07/28 16:30:34 INFO namenode.FSNamesystem: supergroup = supergroup

21/07/28 16:30:34 INFO namenode.FSNamesystem: isPermissionEnabled = true

21/07/28 16:30:34 INFO namenode.FSNamesystem: HA Enabled: false

21/07/28 16:30:34 INFO namenode.FSNamesystem: Append Enabled: true

21/07/28 16:30:34 INFO util.GSet: Computing capacity for map INodeMap

21/07/28 16:30:34 INFO util.GSet: VM type = 64-bit

21/07/28 16:30:34 INFO util.GSet: 1.0% max memory 1000 MB = 10 MB

21/07/28 16:30:34 INFO util.GSet: capacity = 2^20 = 1048576 entries

21/07/28 16:30:34 INFO namenode.FSDirectory: ACLs enabled? false

21/07/28 16:30:34 INFO namenode.FSDirectory: XAttrs enabled? true

21/07/28 16:30:34 INFO namenode.FSDirectory: Maximum size of an xattr: 16384

21/07/28 16:30:34 INFO namenode.NameNode: Caching file names occuring more than 10 times

21/07/28 16:30:34 INFO util.GSet: Computing capacity for map cachedBlocks

21/07/28 16:30:34 INFO util.GSet: VM type = 64-bit

21/07/28 16:30:34 INFO util.GSet: 0.25% max memory 1000 MB = 2.5 MB

21/07/28 16:30:34 INFO util.GSet: capacity = 2^18 = 262144 entries

21/07/28 16:30:34 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

21/07/28 16:30:34 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

21/07/28 16:30:34 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

21/07/28 16:30:34 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

21/07/28 16:30:34 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

21/07/28 16:30:34 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

21/07/28 16:30:34 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

21/07/28 16:30:34 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

21/07/28 16:30:34 INFO util.GSet: Computing capacity for map NameNodeRetryCache

21/07/28 16:30:34 INFO util.GSet: VM type = 64-bit

21/07/28 16:30:34 INFO util.GSet: 0.029999999329447746% max memory 1000 MB = 307.2 KB

21/07/28 16:30:34 INFO util.GSet: capacity = 2^15 = 32768 entries

21/07/28 16:30:34 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1778654297-192.168.81.132-1627461034761

21/07/28 16:30:34 INFO common.Storage: Storage directory /opt/hadoop/hadoop-2.7.6/tmp/dfs/name has been successfully formatted.

21/07/28 16:30:34 INFO namenode.FSImageFormatProtobuf: Saving image file /opt/hadoop/hadoop-2.7.6/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

21/07/28 16:30:34 INFO namenode.FSImageFormatProtobuf: Image file /opt/hadoop/hadoop-2.7.6/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 321 bytes saved in 0 seconds.

21/07/28 16:30:34 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

21/07/28 16:30:34 INFO util.ExitUtil: Exiting with status 0

21/07/28 16:30:34 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/192.168.81.132

************************************************************/

[root@master hadoop-2.7.6]# sbin/start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

The authenticity of host 'master (192.168.81.132)' can't be established.

ECDSA key fingerprint is SHA256:fi3C87su8LrMyUHrxlUmhp5Ab6j1vgb+yGnZUCvqnVI.

ECDSA key fingerprint is MD5:10:34:19:05:dc:e2:ec:4c:e6:64:0c:f3:ab:75:63:fe.

Are you sure you want to continue connecting (yes/no)? yes

master: Warning: Permanently added 'master,192.168.81.132' (ECDSA) to the list of known hosts.

root@master's password:

master: starting namenode, logging to /opt/hadoop/hadoop-2.7.6/logs/hadoop-root-namenode-master.out

slave1: starting datanode, logging to /opt/hadoop/hadoop-2.7.6/logs/hadoop-root-datanode-slave1.out

slave2: starting datanode, logging to /opt/hadoop/hadoop-2.7.6/logs/hadoop-root-datanode-slave2.out

Starting secondary namenodes [slave1]

slave1: starting secondarynamenode, logging to /opt/hadoop/hadoop-2.7.6/logs/hadoop-root-secondarynamenode-slave1.out

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop/hadoop-2.7.6/logs/yarn-root-resourcemanager-master.out

slave2: starting nodemanager, logging to /opt/hadoop/hadoop-2.7.6/logs/yarn-root-nodemanager-slave2.out

slave1: starting nodemanager, logging to /opt/hadoop/hadoop-2.7.6/logs/yarn-root-nodemanager-slave1.out
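To confirm that the daemons listed in the startup output are actually running, the `jps` tool from the JDK can be checked on each node. The helper below is a hypothetical sketch that reads `jps` output on standard input and reports any expected daemon that is missing:

```shell
# Hypothetical helper: read `jps` output on stdin and report missing daemons.
# Usage: jps | check_daemons NameNode ResourceManager
check_daemons() {
    out=$(cat)
    rc=0
    for d in "$@"; do
        # jps prints "<pid> <MainClass>", so match " <name>" at the end of a line.
        # The leading space keeps "NameNode" from matching "SecondaryNameNode".
        if ! printf '%s\n' "$out" | grep -q " $d\$"; then
            echo "missing: $d"
            rc=1
        fi
    done
    return $rc
}
```

Based on the startup output above, one would expect `NameNode` and `ResourceManager` on master, `DataNode`, `NodeManager` and `SecondaryNameNode` on slave1, and `DataNode` and `NodeManager` on slave2. For example, `jps | check_daemons DataNode NodeManager` on slave2.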