
I. Problem 1

1 Problem

Running the Apache Atlas Hive metadata import script, import-hive.sh, fails with an error.

bash import-hive.sh

Caused by: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8 at [row,col,system-id]: [3223,96,"file:/opt/soft/apache-hive-3.1.2-bin/conf/hive-site.xml"]

Error log:

2021-07-28T10:00:32,343 ERROR [main] org.apache.hadoop.conf.Configuration - error parsing conf file:/opt/soft/apache-hive-3.1.2-bin/conf/hive-site.xml

com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3223,96,"file:/opt/soft/apache-hive-3.1.2-bin/conf/hive-site.xml"]

at com.ctc.wstx.sr.StreamScanner.constructWfcException(StreamScanner.java:621) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.throwParseError(StreamScanner.java:491) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.reportIllegalChar(StreamScanner.java:2456) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.validateChar(StreamScanner.java:2403) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.resolveCharEnt(StreamScanner.java:2369) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.fullyResolveEntity(StreamScanner.java:1515) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.BasicStreamReader.nextFromTree(BasicStreamReader.java:2828) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.BasicStreamReader.next(BasicStreamReader.java:1123) ~[woodstox-core-5.0.3.jar:5.0.3]

at org.apache.hadoop.conf.Configuration$Parser.parseNext(Configuration.java:3257) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration$Parser.parse(Configuration.java:3063) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2986) [hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2931) [hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2806) [hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1460) [hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.hive.conf.HiveConf.getVar(HiveConf.java:4996) [hive-common-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.conf.HiveConf.getVar(HiveConf.java:5069) [hive-common-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:5156) [hive-common-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.conf.HiveConf.<init>(HiveConf.java:5099) [hive-common-3.1.2.jar:3.1.2]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141) [hive-bridge-2.1.0.jar:2.1.0]

2021-07-28T10:00:32,345 ERROR [main] org.apache.atlas.hive.bridge.HiveMetaStoreBridge - Import failed

java.lang.RuntimeException: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3223,96,"file:/opt/soft/apache-hive-3.1.2-bin/conf/hive-site.xml"]

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3003) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2931) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2806) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1460) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.hive.conf.HiveConf.getVar(HiveConf.java:4996) ~[hive-common-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.conf.HiveConf.getVar(HiveConf.java:5069) ~[hive-common-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:5156) ~[hive-common-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.conf.HiveConf.<init>(HiveConf.java:5099) ~[hive-common-3.1.2.jar:3.1.2]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141) [hive-bridge-2.1.0.jar:2.1.0]

Caused by: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3223,96,"file:/opt/soft/apache-hive-3.1.2-bin/conf/hive-site.xml"]

at com.ctc.wstx.sr.StreamScanner.constructWfcException(StreamScanner.java:621) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.throwParseError(StreamScanner.java:491) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.reportIllegalChar(StreamScanner.java:2456) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.validateChar(StreamScanner.java:2403) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.resolveCharEnt(StreamScanner.java:2369) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.fullyResolveEntity(StreamScanner.java:1515) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.BasicStreamReader.nextFromTree(BasicStreamReader.java:2828) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.BasicStreamReader.next(BasicStreamReader.java:1123) ~[woodstox-core-5.0.3.jar:5.0.3]

at org.apache.hadoop.conf.Configuration$Parser.parseNext(Configuration.java:3257) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration$Parser.parse(Configuration.java:3063) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2986) ~[hadoop-common-3.1.1.jar:?]

... 8 more

Failed to import Hive Meta Data!!!

2 Solution

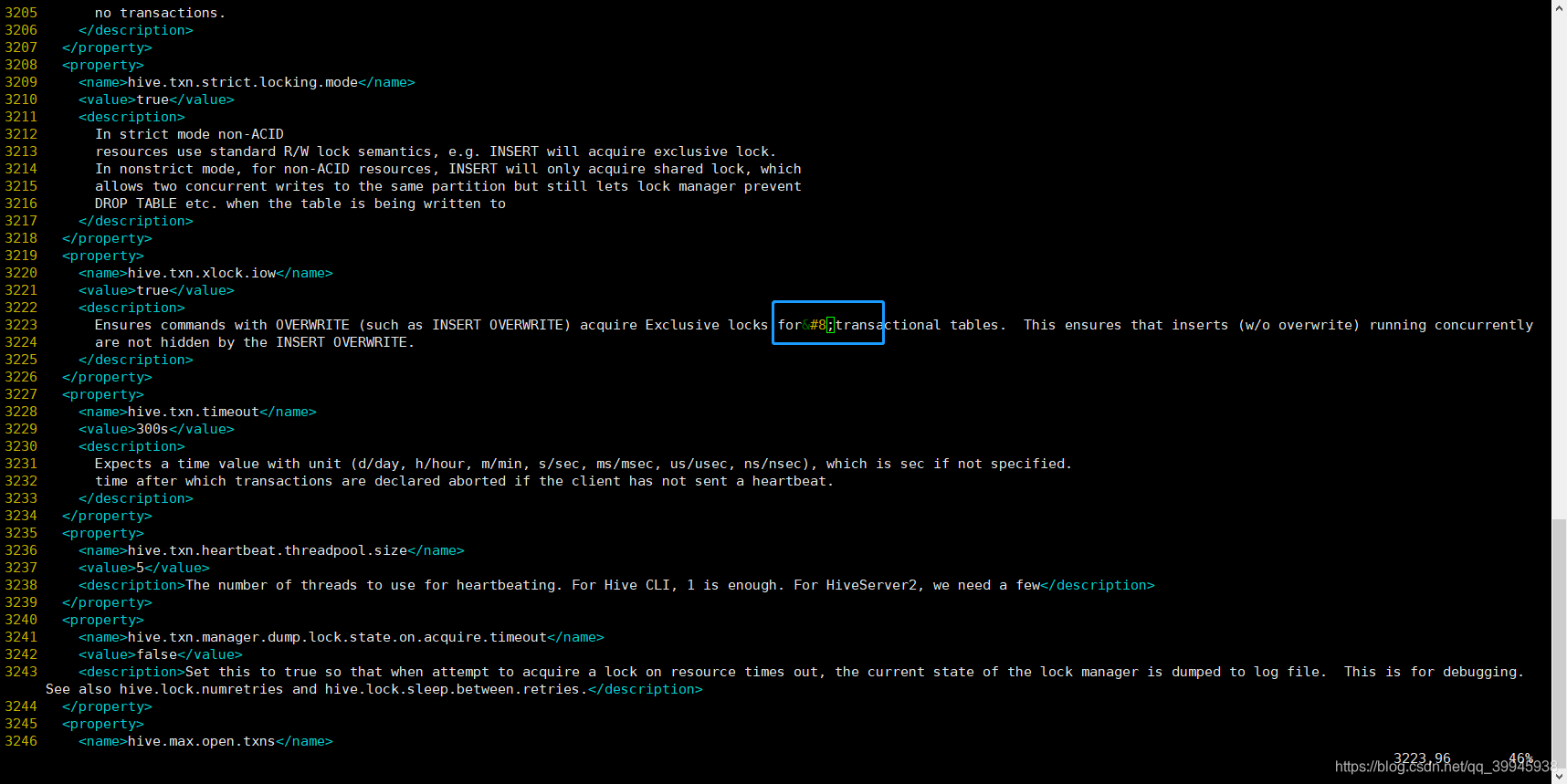

The error indicates that hive-site.xml contains an illegal character.
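Before opening the editor, it can help to confirm where the offending text sits. The sketch below assumes the illegal character is stored as a numeric character reference for code 0x8 (for example `&#8;` or `&#x8;`), which is what "character entity ... code 0x8" in the message suggests. The function name `find_illegal_ref` is hypothetical and used only for illustration.

```shell
# Hypothetical helper: list every line of an XML file that contains a numeric
# character reference for code point 0x8 (decimal &#8; or hexadecimal &#x8;).
# Assumption: the illegal character is written as such a reference, not as a
# raw control byte.
find_illegal_ref() {
    # -n prefixes each match with its line number; -E enables extended regex.
    grep -n -E '&#(0*8|x0*8);' "$1"
}

# Example call, using the path from the error message:
# find_illegal_ref /opt/soft/apache-hive-3.1.2-bin/conf/hive-site.xml
```

If the assumption holds, the reported line number should match the row given in the parser error (3223 here).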

Open the file with vim:

vim hive-site.xml

# Show line numbers

:set nu

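Go to character 96 on line 3223, the position reported in the error.

Try deleting the special character found there:

Press i to enter insert mode.

Delete the special character.

Press Esc to leave insert mode.

Type :wq to save and quit.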
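The manual edit above can also be done non-interactively. This is a minimal sketch under the same assumption as before: the illegal character is a numeric character reference for code 0x8 such as `&#8;`. The function name `strip_illegal_ref` and the `.bak` backup suffix are choices made for this example, not something the import script requires.

```shell
# Hypothetical helper: delete numeric character references for code point 0x8
# from a file, keeping the original content in a backup with a .bak suffix.
strip_illegal_ref() {
    # Copy first so the untouched file can be restored if needed.
    cp "$1" "$1.bak" &&
    # Read from the backup and rewrite the original without the references.
    sed -E 's/&#(0*8|x0*8);//g' "$1.bak" > "$1"
}

# Example call, using the path from the error message:
# strip_illegal_ref /opt/soft/apache-hive-3.1.2-bin/conf/hive-site.xml
```

Writing through a backup copy avoids relying on the in-place option of `sed`, whose syntax differs between GNU and BSD versions.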

Run import-hive.sh again. The previous error no longer appears, but a different one is reported:

bash import-hive.sh

II. Problem 2

1 Problem

Running the Apache Atlas Hive metadata import script, import-hive.sh, fails with an error.

SQLSyntaxErrorException: Table/View 'DBS' does not exist.

StandardException: Table/View 'DBS' does not exist.

MetaException: Version information not found in metastore.

RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

2021-07-28T10:16:46,502 WARN [main] org.apache.hadoop.hive.metastore.MetaStoreDirectSql - Self-test query [select "DB_ID" from "DBS"] failed; direct SQL is disabled

javax.jdo.JDODataStoreException: Error executing SQL query "select "DB_ID" from "DBS"".

at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:543) ~[datanucleus-api-jdo-4.2.4.jar:?]

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:391) ~[datanucleus-api-jdo-4.2.4.jar:?]

at org.datanucleus.api.jdo.JDOQuery.execute(JDOQuery.java:216) ~[datanucleus-api-jdo-4.2.4.jar:?]

at org.apache.hadoop.hive.metastore.MetaStoreDirectSql.runTestQuery(MetaStoreDirectSql.java:276) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.MetaStoreDirectSql.<init>(MetaStoreDirectSql.java:184) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.initializeHelper(ObjectStore.java:498) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.initialize(ObjectStore.java:420) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.setConf(ObjectStore.java:375) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:77) [hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:137) [hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.hive.metastore.RawStoreProxy.<init>(RawStoreProxy.java:59) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RawStoreProxy.getProxy(RawStoreProxy.java:67) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.newRawStoreForConf(HiveMetaStore.java:718) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMSForConf(HiveMetaStore.java:696) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:690) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:767) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:538) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_291]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) [?:1.8.0_291]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) [?:1.8.0_291]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) [?:1.8.0_291]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) [?:1.8.0_291]

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:331) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.<init>(HiveMetaStoreBridge.java:216) [hive-bridge-2.1.0.jar:2.1.0]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141) [hive-bridge-2.1.0.jar:2.1.0]

Caused by: java.sql.SQLSyntaxErrorException: Table/View 'DBS' does not exist.

at org.apache.derby.impl.jdbc.SQLExceptionFactory.getSQLException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.Util.generateCsSQLException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.TransactionResourceImpl.wrapInSQLException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.TransactionResourceImpl.handleException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedConnection.handleException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.ConnectionChild.handleException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedPreparedStatement.<init>(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedPreparedStatement42.<init>(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.jdbc.Driver42.newEmbedPreparedStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedConnection.prepareStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedConnection.prepareStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at com.zaxxer.hikari.pool.ProxyConnection.prepareStatement(ProxyConnection.java:325) ~[HikariCP-2.6.1.jar:?]

at com.zaxxer.hikari.pool.HikariProxyConnection.prepareStatement(HikariProxyConnection.java) ~[HikariCP-2.6.1.jar:?]

at org.datanucleus.store.rdbms.SQLController.getStatementForQuery(SQLController.java:345) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.RDBMSQueryUtils.getPreparedStatementForQuery(RDBMSQueryUtils.java:211) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.SQLQuery.performExecute(SQLQuery.java:633) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.query.Query.executeQuery(Query.java:1855) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.store.rdbms.query.SQLQuery.executeWithArray(SQLQuery.java:807) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.query.Query.execute(Query.java:1726) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:374) ~[datanucleus-api-jdo-4.2.4.jar:?]

... 46 more

Caused by: org.apache.derby.iapi.error.StandardException: Table/View 'DBS' does not exist.

at org.apache.derby.iapi.error.StandardException.newException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.iapi.error.StandardException.newException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.FromBaseTable.bindTableDescriptor(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.FromBaseTable.bindNonVTITables(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.FromList.bindTables(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.SelectNode.bindNonVTITables(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.DMLStatementNode.bindTables(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.DMLStatementNode.bind(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.CursorNode.bindStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.GenericStatement.prepMinion(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.GenericStatement.prepare(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.conn.GenericLanguageConnectionContext.prepareInternalStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedPreparedStatement.<init>(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedPreparedStatement42.<init>(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.jdbc.Driver42.newEmbedPreparedStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedConnection.prepareStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedConnection.prepareStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at com.zaxxer.hikari.pool.ProxyConnection.prepareStatement(ProxyConnection.java:325) ~[HikariCP-2.6.1.jar:?]

at com.zaxxer.hikari.pool.HikariProxyConnection.prepareStatement(HikariProxyConnection.java) ~[HikariCP-2.6.1.jar:?]

at org.datanucleus.store.rdbms.SQLController.getStatementForQuery(SQLController.java:345) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.RDBMSQueryUtils.getPreparedStatementForQuery(RDBMSQueryUtils.java:211) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.SQLQuery.performExecute(SQLQuery.java:633) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.query.Query.executeQuery(Query.java:1855) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.store.rdbms.query.SQLQuery.executeWithArray(SQLQuery.java:807) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.query.Query.execute(Query.java:1726) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:374) ~[datanucleus-api-jdo-4.2.4.jar:?]

... 46 more

2021-07-28T10:16:46,509 INFO [main] org.apache.hadoop.hive.metastore.ObjectStore - Initialized ObjectStore

2021-07-28T10:16:46,618 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:46,618 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:46,618 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:46,619 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:46,619 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:46,619 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:47,981 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:47,981 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:47,981 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:47,982 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:47,982 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:47,982 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:50,038 WARN [main] DataNucleus.Query - Query for candidates of org.apache.hadoop.hive.metastore.model.MVersionTable and subclasses resulted in no possible candidates

org.datanucleus.store.rdbms.exceptions.MissingTableException: Required table missing : "VERSION" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.schema.autoCreateTables"

at org.datanucleus.store.rdbms.table.AbstractTable.exists(AbstractTable.java:606) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.performTablesValidation(RDBMSStoreManager.java:3385) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.run(RDBMSStoreManager.java:2896) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.AbstractSchemaTransaction.execute(AbstractSchemaTransaction.java:119) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager.manageClasses(RDBMSStoreManager.java:1627) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager.getDatastoreClass(RDBMSStoreManager.java:672) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.RDBMSQueryUtils.getStatementForCandidates(RDBMSQueryUtils.java:425) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.JDOQLQuery.compileQueryFull(JDOQLQuery.java:865) [datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.JDOQLQuery.compileInternal(JDOQLQuery.java:347) [datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.query.Query.executeQuery(Query.java:1816) [datanucleus-core-4.1.17.jar:?]

at org.datanucleus.store.query.Query.executeWithArray(Query.java:1744) [datanucleus-core-4.1.17.jar:?]

at org.datanucleus.store.query.Query.execute(Query.java:1726) [datanucleus-core-4.1.17.jar:?]

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:374) [datanucleus-api-jdo-4.2.4.jar:?]

at org.datanucleus.api.jdo.JDOQuery.execute(JDOQuery.java:216) [datanucleus-api-jdo-4.2.4.jar:?]

at org.apache.hadoop.hive.metastore.ObjectStore.getMSchemaVersion(ObjectStore.java:9101) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.getMetaStoreSchemaVersion(ObjectStore.java:9085) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:9042) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:9027) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_291]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97) [hive-exec-3.1.2.jar:3.1.2]

at com.sun.proxy.$Proxy42.verifySchema(Unknown Source) [?:?]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMSForConf(HiveMetaStore.java:697) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:690) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:767) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:538) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_291]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) [?:1.8.0_291]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) [?:1.8.0_291]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) [?:1.8.0_291]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) [?:1.8.0_291]

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:331) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.<init>(HiveMetaStoreBridge.java:216) [hive-bridge-2.1.0.jar:2.1.0]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141) [hive-bridge-2.1.0.jar:2.1.0]

2021-07-28T10:16:50,059 ERROR [main] org.apache.hadoop.hive.metastore.RetryingHMSHandler - MetaException(message:Version information not found in metastore.)

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:9049)

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:9027)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97)

at com.sun.proxy.$Proxy42.verifySchema(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMSForConf(HiveMetaStore.java:697)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:690)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:767)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:538)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435)

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:331)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.<init>(HiveMetaStoreBridge.java:216)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141)

2021-07-28T10:16:50,059 ERROR [main] org.apache.hadoop.hive.metastore.RetryingHMSHandler - HMSHandler Fatal error: MetaException(message:Version information not found in metastore.)

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:9049)

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:9027)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97)

at com.sun.proxy.$Proxy42.verifySchema(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMSForConf(HiveMetaStore.java:697)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:690)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:767)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:538)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435)

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:331)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.<init>(HiveMetaStoreBridge.java:216)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141)

2021-07-28T10:16:50,060 WARN [main] hive.ql.metadata.Hive - Failed to register all functions.

java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:86) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:331) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.<init>(HiveMetaStoreBridge.java:216) [hive-bridge-2.1.0.jar:2.1.0]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141) [hive-bridge-2.1.0.jar:2.1.0]

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[?:1.8.0_291]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84) ~[hive-exec-3.1.2.jar:3.1.2]

... 15 more

Caused by: org.apache.hadoop.hive.metastore.api.MetaException: Version information not found in metastore.

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:84) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94) ~[hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[?:1.8.0_291]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84) ~[hive-exec-3.1.2.jar:3.1.2]

... 15 more

2021-07-28T10:16:50,078 ERROR [main] org.apache.atlas.hive.bridge.HiveMetaStoreBridge - Import failed

org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:279) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:331) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.<init>(HiveMetaStoreBridge.java:216) ~[hive-bridge-2.1.0.jar:2.1.0]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141) [hive-bridge-2.1.0.jar:2.1.0]

Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:86) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274) ~[hive-exec-3.1.2.jar:3.1.2]

... 6 more

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[?:1.8.0_291]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274) ~[hive-exec-3.1.2.jar:3.1.2]

... 6 more

Caused by: org.apache.hadoop.hive.metastore.api.MetaException: Version information not found in metastore.

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:84) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94) ~[hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[?:1.8.0_291]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274) ~[hive-exec-3.1.2.jar:3.1.2]

... 6 more

Failed to import Hive Meta Data!!!

2 Solution

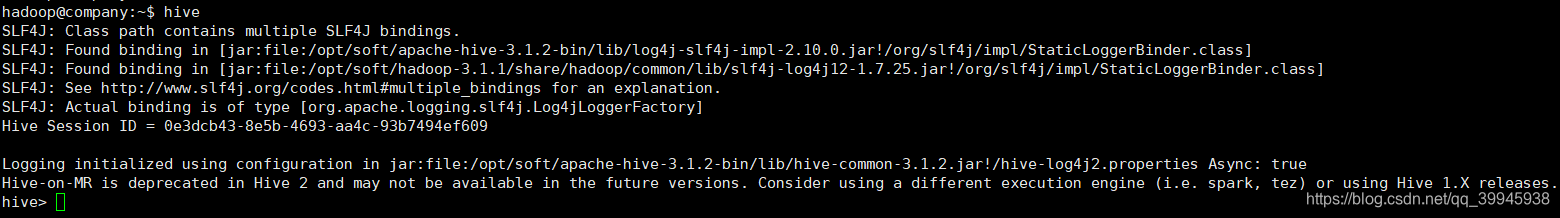

2.1 Check whether Hive works

hadoop@company:/opt/soft/apache-hive-3.1.2-bin/bin$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/soft/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/soft/hadoop-3.1.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = 4fd86b81-9039-4a80-b96d-8d405395783e

Logging initialized using configuration in jar:file:/opt/soft/apache-hive-3.1.2-bin/lib/hive-common-3.1.2.jar!/hive-log4j2.properties Async: true

Exception in thread "main" java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

at org.apache.hadoop.fs.Path.initialize(Path.java:259)

at org.apache.hadoop.fs.Path.<init>(Path.java:217)

at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:710)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:627)

at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:591)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:747)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:318)

at org.apache.hadoop.util.RunJar.main(RunJar.java:232)

Caused by: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

at java.net.URI.checkPath(URI.java:1822)

at java.net.URI.<init>(URI.java:745)

at org.apache.hadoop.fs.Path.initialize(Path.java:256)

... 12 more

The Hive command-line client fails to start.

2.2 Fix the Hive CLI startup failure

Replace the variable with an absolute path:

mkdir -p /tmp/hive/tmpdir

vim hive-site.xml

Search for ${system:java.io.tmpdir}.

Replace every occurrence of ${system:java.io.tmpdir} in hive-site.xml with /tmp/hive/tmpdir.

Then save and exit.
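The manual search-and-replace can also be scripted. The following is a minimal sketch, assuming a sed that accepts `-i.bak` (both GNU and BSD sed do); the helper name `replace_tmpdir` and the sample file content are made up for illustration, and the demo runs against a throwaway file rather than the real hive-site.xml.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: replace every ${system:java.io.tmpdir} placeholder
# in a file with an absolute directory. A .bak copy of the file is kept.
replace_tmpdir() {
  local file="$1" dir="$2"
  # The pattern is single-quoted so the shell does not expand ${...};
  # \$ and \. make sed match the dollar sign and dots literally.
  sed -i.bak 's|\${system:java\.io\.tmpdir}|'"$dir"'|g' "$file"
}

# Demo on a temporary file with made-up sample content.
demo="$(mktemp)"
printf '%s\n' '<value>${system:java.io.tmpdir}/${system:user.name}</value>' > "$demo"
replace_tmpdir "$demo" /tmp/hive/tmpdir
cat "$demo"
```

For the real file, the call would be `replace_tmpdir hive-site.xml /tmp/hive/tmpdir` from the Hive conf directory, after creating the target directory with mkdir -p as above.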

Start the Hive CLI again; this time it starts.

Listing all databases, however, fails with Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient:

hive> show databases;

FAILED: HiveException java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

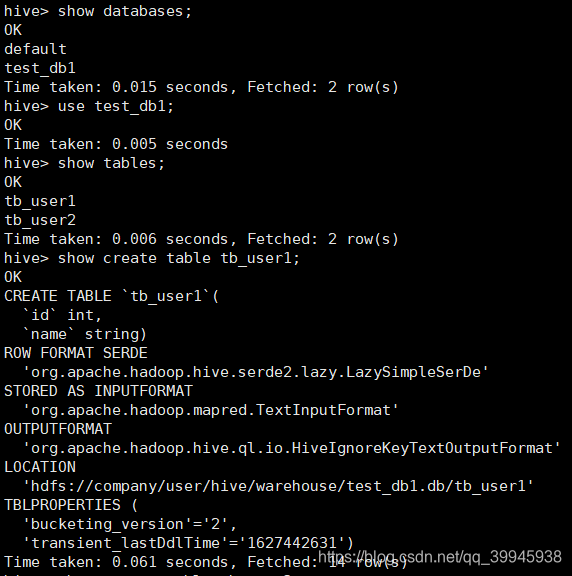

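2.3 Fix Hive being unable to query databases

I am running a standalone Hive instance backed by Derby.

- Delete metastore_db under the Hive installation directory.

- Edit hive-site.xml and replace ${system:user.name} with hadoop in the three properties hive.exec.local.scratchdir, hive.querylog.location, and hive.downloaded.resources.dir.

- Re-run the initialization command schematool -dbType derby -initSchema.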
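The three steps above can be sketched as one script. This is an illustration under several assumptions: the function name `reset_derby_metastore` is hypothetical, the sed call replaces every ${system:user.name} in the file (broader than the three properties listed), and schematool is only invoked if it is found on PATH. The demo runs against a temporary directory, not a real Hive installation.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: reset an embedded-Derby metastore.
# Arguments: <hive_home> <os_user>
reset_derby_metastore() {
  local hive_home="$1" user="$2"
  local site="$hive_home/conf/hive-site.xml"

  # 1. Remove the old Derby database directory (this discards existing metadata).
  rm -rf "$hive_home/metastore_db"

  # 2. Replace ${system:user.name} with a fixed user name; a .bak copy is kept.
  sed -i.bak 's|\${system:user\.name}|'"$user"'|g' "$site"

  # 3. Re-create the schema. Run from the Hive home, assuming the default
  #    relative Derby path so that metastore_db is created there.
  if command -v schematool >/dev/null 2>&1; then
    (cd "$hive_home" && schematool -dbType derby -initSchema)
  else
    echo "schematool not on PATH; run: schematool -dbType derby -initSchema" >&2
  fi
}

# Demo on a throwaway directory with made-up sample content.
demo_home="$(mktemp -d)"
mkdir -p "$demo_home/conf" "$demo_home/metastore_db"
printf '%s\n' '<value>/tmp/hive/tmpdir/${system:user.name}</value>' \
  > "$demo_home/conf/hive-site.xml"
reset_derby_metastore "$demo_home" hadoop
cat "$demo_home/conf/hive-site.xml"
```

On the machine described here the call would be `reset_derby_metastore /opt/soft/apache-hive-3.1.2-bin hadoop`. Deleting metastore_db is destructive, so it only suits a test setup like this one.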

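After restarting, Hive works normally. Because metastore_db was deleted, a database and a few tables have to be created again in order to test Apache Atlas.

The database and tables are as follows: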

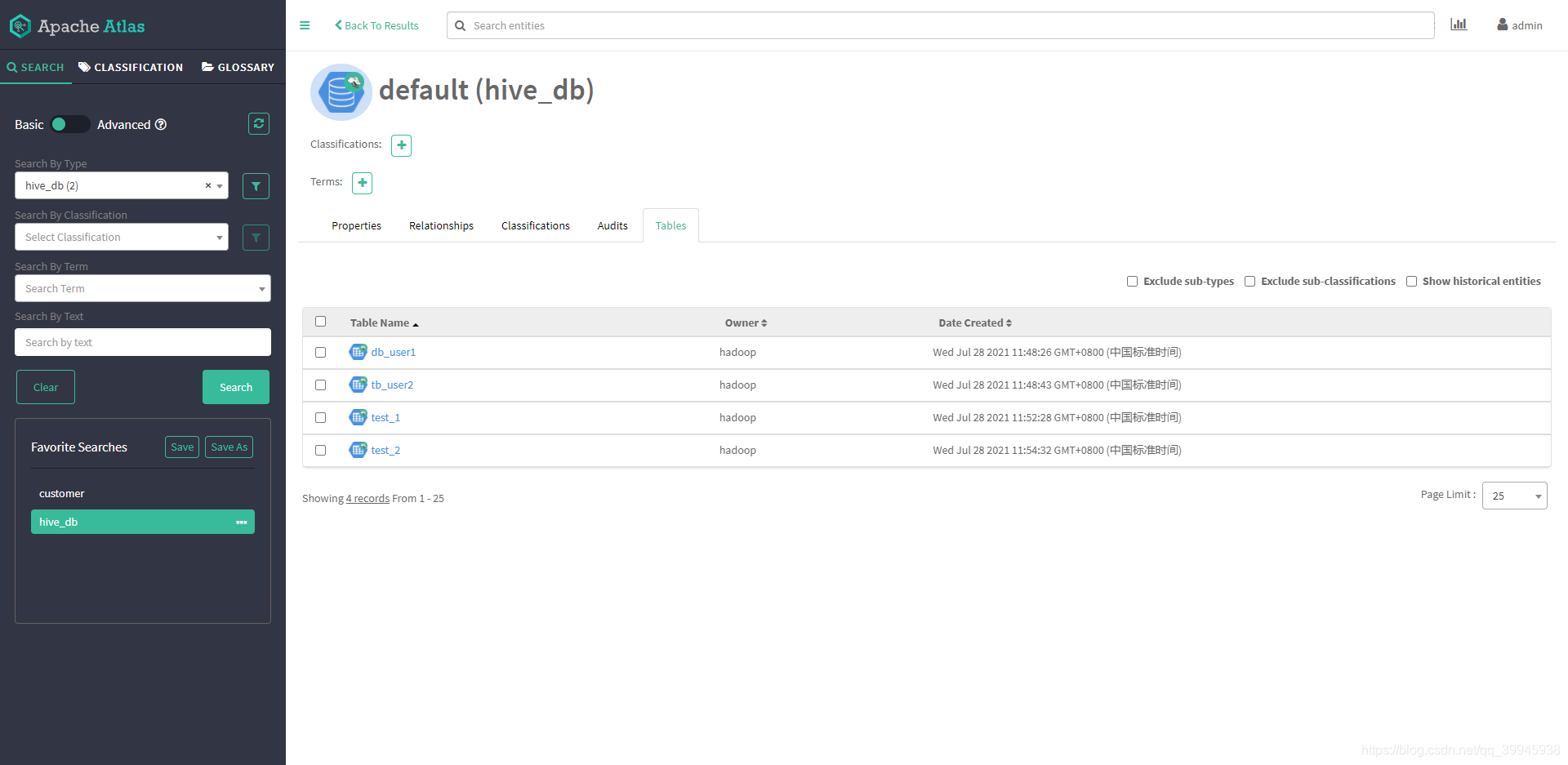
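The actual database and table names appear only in the screenshot, so the following sketch uses hypothetical names (`atlas_demo`, `users`, `orders`) purely to show how such test objects could be created non-interactively. It writes the statements to a temporary file and passes them to `hive -f` only if hive is on PATH.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical database and table names, for illustration only.
sql_file="$(mktemp)"
cat > "$sql_file" <<'SQL'
CREATE DATABASE IF NOT EXISTS atlas_demo;
CREATE TABLE IF NOT EXISTS atlas_demo.users (id INT, name STRING);
CREATE TABLE IF NOT EXISTS atlas_demo.orders (id INT, user_id INT, amount DOUBLE);
SHOW TABLES IN atlas_demo;
SQL

# Run the statements through the Hive CLI when it is available.
if command -v hive >/dev/null 2>&1; then
  hive -f "$sql_file"
else
  echo "hive not on PATH; statements written to $sql_file" >&2
fi
```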

Run the Hive metadata import script provided by Apache Atlas again:

bash import-hive.sh

This produces a new error:

2021-07-28T11:35:07,082 WARN [main] DataNucleus.Query - Query for candidates of org.apache.hadoop.hive.metastore.model.MDatabase and subclasses resulted in no possible candidates

org.datanucleus.store.rdbms.exceptions.MissingTableException: Required table missing : "DBS" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.schema.autoCreateTables"

at org.datanucleus.store.rdbms.table.AbstractTable.exists(AbstractTable.java:606) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.performTablesValidation(RDBMSStoreManager.java:3385) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.run(RDBMSStoreManager.java:2896) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.AbstractSchemaTransaction.execute(AbstractSchemaTransaction.java:119) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager.manageClasses(RDBMSStoreManager.java:1627) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager.getDatastoreClass(RDBMSStoreManager.java:672) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.RDBMSQueryUtils.getStatementForCandidates(RDBMSQueryUtils.java:425) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.JDOQLQuery.compileQueryFull(JDOQLQuery.java:865) [datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.JDOQLQuery.compileInternal(JDOQLQuery.java:347) [datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.query.Query.executeQuery(Query.java:1816) [datanucleus-core-4.1.17.jar:?]

at org.datanucleus.store.query.Query.executeWithArray(Query.java:1744) [datanucleus-core-4.1.17.jar:?]

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:368) [datanucleus-api-jdo-4.2.4.jar:?]

at org.datanucleus.api.jdo.JDOQuery.execute(JDOQuery.java:241) [datanucleus-api-jdo-4.2.4.jar:?]

at org.apache.hadoop.hive.metastore.ObjectStore.getMDatabase(ObjectStore.java:973) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.getJDODatabase(ObjectStore.java:1026) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore$1.getJdoResult(ObjectStore.java:1016) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore$1.getJdoResult(ObjectStore.java:1008) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore$GetHelper.run(ObjectStore.java:3586) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.getDatabaseInternal(ObjectStore.java:1018) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.getDatabase(ObjectStore.java:990) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_291]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97) [hive-exec-3.1.2.jar:3.1.2]

at com.sun.proxy.$Proxy42.getDatabase(Unknown Source) [?:?]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB_core(HiveMetaStore.java:744) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:773) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:538) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_291]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) [?:1.8.0_291]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) [?:1.8.0_291]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) [?:1.8.0_291]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) [?:1.8.0_291]

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:331) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.<init>(HiveMetaStoreBridge.java:216) [hive-bridge-2.1.0.jar:2.1.0]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141) [hive-bridge-2.1.0.jar:2.1.0]

2021-07-28T11:35:07,084 INFO [main] DataNucleus.JDO - Exception thrown

org.datanucleus.store.rdbms.exceptions.MissingTableException: Required table missing : "DBS" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.schema.autoCreateTables"

at org.datanucleus.store.rdbms.table.AbstractTable.exists(AbstractTable.java:606) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.performTablesValidation(RDBMSStoreManager.java:3385) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.run(RDBMSStoreManager.java:2896) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.AbstractSchemaTransaction.execute(AbstractSchemaTransaction.java:119) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager.manageClasses(RDBMSStoreManager.java:1627) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager.getDatastoreClass(RDBMSStoreManager.java:672) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager.getPropertiesForGenerator(RDBMSStoreManager.java:2088) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.AbstractStoreManager.getStrategyValue(AbstractStoreManager.java:1271) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.ExecutionContextImpl.newObjectId(ExecutionContextImpl.java:3760) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.state.StateManagerImpl.setIdentity(StateManagerImpl.java:2267) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.state.StateManagerImpl.initialiseForPersistentNew(StateManagerImpl.java:484) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.state.StateManagerImpl.initialiseForPersistentNew(StateManagerImpl.java:120) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.state.ObjectProviderFactoryImpl.newForPersistentNew(ObjectProviderFactoryImpl.java:218) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.ExecutionContextImpl.persistObjectInternal(ExecutionContextImpl.java:2079) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.ExecutionContextImpl.persistObjectWork(ExecutionContextImpl.java:1923) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.ExecutionContextImpl.persistObject(ExecutionContextImpl.java:1778) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.ExecutionContextThreadedImpl.persistObject(ExecutionContextThreadedImpl.java:217) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:724) [datanucleus-api-jdo-4.2.4.jar:?]

at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:749) [datanucleus-api-jdo-4.2.4.jar:?]

at org.apache.hadoop.hive.metastore.ObjectStore.createDatabase(ObjectStore.java:952) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_291]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97) [hive-exec-3.1.2.jar:3.1.2]

at com.sun.proxy.$Proxy42.createDatabase(Unknown Source) [?:?]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB_core(HiveMetaStore.java:751) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:773) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:538) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_291]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) [?:1.8.0_291]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) [?:1.8.0_291]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) [?:1.8.0_291]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) [?:1.8.0_291]

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:331) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.<init>(HiveMetaStoreBridge.java:216) [hive-bridge-2.1.0.jar:2.1.0]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141) [hive-bridge-2.1.0.jar:2.1.0]

2021-07-28T11:35:07,086 ERROR [main] org.apache.hadoop.hive.metastore.RetryingHMSHandler - Retrying HMSHandler after 2000 ms (attempt 10 of 10) with error: javax.jdo.JDODataStoreException: Required table missing : "DBS" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.schema.autoCreateTables"

at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:553)

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:729)

at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:749)

at org.apache.hadoop.hive.metastore.ObjectStore.createDatabase(ObjectStore.java:952)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97)

at com.sun.proxy.$Proxy42.createDatabase(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB_core(HiveMetaStore.java:751)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:773)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:538)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435)

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:331)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.<init>(HiveMetaStoreBridge.java:216)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141)

NestedThrowablesStackTrace:

Required table missing : "DBS" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.schema.autoCreateTables"

org.datanucleus.store.rdbms.exceptions.MissingTableException: Required table missing : "DBS" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.schema.autoCreateTables"

at org.datanucleus.store.rdbms.table.AbstractTable.exists(AbstractTable.java:606)

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.performTablesValidation(RDBMSStoreManager.java:3385)

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.run(RDBMSStoreManager.java:2896)

at org.datanucleus.store.rdbms.AbstractSchemaTransaction.execute(AbstractSchemaTransaction.java:119)

at org.datanucleus.store.rdbms.RDBMSStoreManager.manageClasses(RDBMSStoreManager.java:1627)

at org.datanucleus.store.rdbms.RDBMSStoreManager.getDatastoreClass(RDBMSStoreManager.java:672)

at org.datanucleus.store.rdbms.RDBMSStoreManager.getPropertiesForGenerator(RDBMSStoreManager.java:2088)

at org.datanucleus.store.AbstractStoreManager.getStrategyValue(AbstractStoreManager.java:1271)

at org.datanucleus.ExecutionContextImpl.newObjectId(ExecutionContextImpl.java:3760)

at org.datanucleus.state.StateManagerImpl.setIdentity(StateManagerImpl.java:2267)

at org.datanucleus.state.StateManagerImpl.initialiseForPersistentNew(StateManagerImpl.java:484)

at org.datanucleus.state.StateManagerImpl.initialiseForPersistentNew(StateManagerImpl.java:120)

at org.datanucleus.state.ObjectProviderFactoryImpl.newForPersistentNew(ObjectProviderFactoryImpl.java:218)

at org.datanucleus.ExecutionContextImpl.persistObjectInternal(ExecutionContextImpl.java:2079)

at org.datanucleus.ExecutionContextImpl.persistObjectWork(ExecutionContextImpl.java:1923)

at org.datanucleus.ExecutionContextImpl.persistObject(ExecutionContextImpl.java:1778)

at org.datanucleus.ExecutionContextThreadedImpl.persistObject(ExecutionContextThreadedImpl.java:217)

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:724)

at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:749)

at org.apache.hadoop.hive.metastore.ObjectStore.createDatabase(ObjectStore.java:952)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97)

at com.sun.proxy.$Proxy42.createDatabase(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB_core(HiveMetaStore.java:751)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:773)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:538)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435)

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:331)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.<init>(HiveMetaStoreBridge.java:216)

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141)

2021-07-28T11:35:09,088 WARN [main] DataNucleus.Query - Query for candidates of org.apache.hadoop.hive.metastore.model.MCatalog and subclasses resulted in no possible candidates

org.datanucleus.store.rdbms.exceptions.MissingTableException: Required table missing : "CTLGS" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.schema.autoCreateTables"

at org.datanucleus.store.rdbms.table.AbstractTable.exists(AbstractTable.java:606) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.performTablesValidation(RDBMSStoreManager.java:3385) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.run(RDBMSStoreManager.java:2896) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.AbstractSchemaTransaction.execute(AbstractSchemaTransaction.java:119) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager.manageClasses(RDBMSStoreManager.java:1627) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager.getDatastoreClass(RDBMSStoreManager.java:672) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.RDBMSQueryUtils.getStatementForCandidates(RDBMSQueryUtils.java:425) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.JDOQLQuery.compileQueryFull(JDOQLQuery.java:865) [datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.JDOQLQuery.compileInternal(JDOQLQuery.java:347) [datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.query.Query.executeQuery(Query.java:1816) [datanucleus-core-4.1.17.jar:?]

at org.datanucleus.store.query.Query.executeWithArray(Query.java:1744) [datanucleus-core-4.1.17.jar:?]

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:368) [datanucleus-api-jdo-4.2.4.jar:?]

at org.datanucleus.api.jdo.JDOQuery.execute(JDOQuery.java:228) [datanucleus-api-jdo-4.2.4.jar:?]

at org.apache.hadoop.hive.metastore.ObjectStore.getMCatalog(ObjectStore.java:909) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.getCatalog(ObjectStore.java:851) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_291]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97) [hive-exec-3.1.2.jar:3.1.2]

at com.sun.proxy.$Proxy42.getCatalog(Unknown Source) [?:?]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultCatalog(HiveMetaStore.java:725) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:768) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:538) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_291]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) [?:1.8.0_291]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) [?:1.8.0_291]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) [?:1.8.0_291]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) [?:1.8.0_291]

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355) [hive-exec-3.1.2.jar:3.1.2]