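Lightweight Log Collection with Filebeat + Elasticsearch

Preface

For lightweight services that are not built on a standalone Spring Cloud stack, such as a monolithic Spring Boot application, collecting logs with the full ELK stack is overly complex and tedious. A lighter pipeline is recommended instead: Spring Boot Logback JSON output + Filebeat + Elasticsearch + Kibana (Kibana is used for querying and display and can be omitted).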

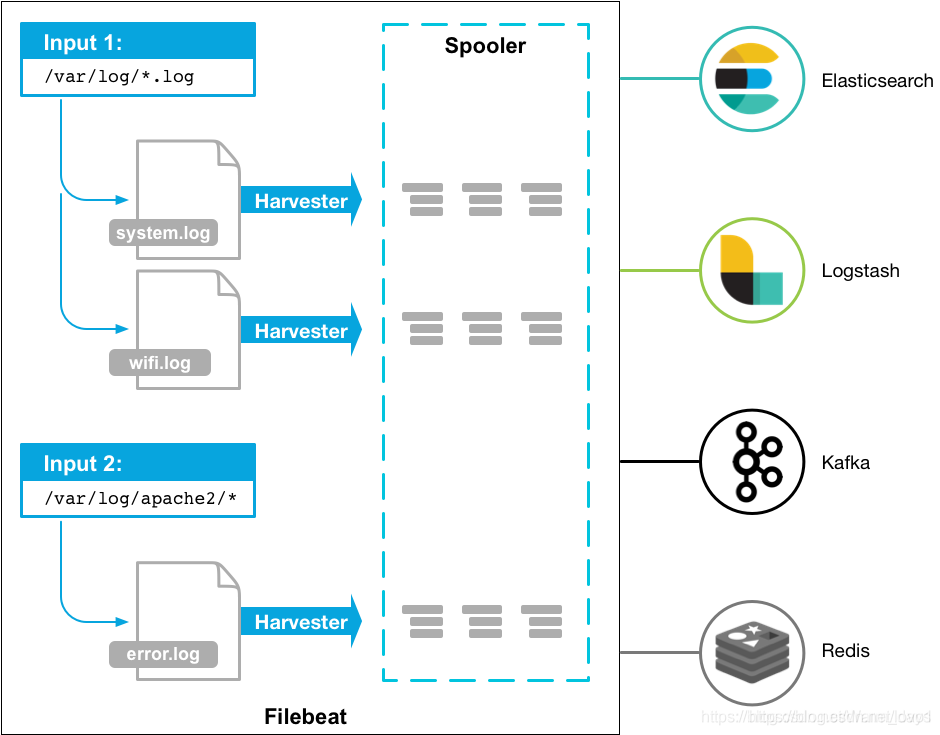

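I. What is Filebeat?

Filebeat is a lightweight shipper for collecting and forwarding logs. Installed as an agent on your servers, Filebeat monitors the log files or locations that you specify, collects log events, and forwards them to Elasticsearch or Logstash for indexing.

Official reference: https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-overview.html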

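1. How it works

When Filebeat starts, it launches one or more prospectors (called inputs since Filebeat 6.3) that look in the local paths you specified for log files. For each log file a prospector finds, it starts a harvester. Each harvester reads a single log file for new content and sends the new log data to libbeat, which aggregates the events and sends the aggregated data to the configured output (Elasticsearch, Logstash, Kafka, and so on). In short: collect, filter and aggregate, output.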
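The harvester idea can be illustrated with a small toy model. This is only a sketch under stated assumptions, not Filebeat's implementation: the class name `HarvesterSketch` and the method `poll` are hypothetical, and the offset is kept in memory, whereas Filebeat persists read positions in its registry.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/** Toy model of a harvester: reads newly appended, complete lines from one file. */
public class HarvesterSketch {
    private final String path;
    private long offset = 0; // bytes already consumed (hypothetical in-memory state)

    public HarvesterSketch(String path) {
        this.path = path;
    }

    /** Returns the lines appended since the previous call; a trailing partial line waits for the next call. */
    public List<String> poll() throws IOException {
        List<String> lines = new ArrayList<>();
        try (RandomAccessFile file = new RandomAccessFile(path, "r")) {
            long length = file.length();
            if (length < offset) {
                offset = 0; // the file shrank, so assume it was truncated or rotated
            }
            file.seek(offset);
            byte[] rest = new byte[(int) (length - offset)];
            file.readFully(rest);
            int start = 0;
            for (int i = 0; i < rest.length; i++) {
                if (rest[i] == '\n') {
                    String line = new String(rest, start, i - start, StandardCharsets.UTF_8);
                    if (line.endsWith("\r")) {
                        line = line.substring(0, line.length() - 1); // tolerate Windows line endings
                    }
                    lines.add(line);
                    start = i + 1;
                }
            }
            offset += start; // only advance past complete lines
        }
        return lines;
    }
}
```

Calling `poll()` repeatedly on the same instance returns only what was appended in between, which is the "collect" step of the pipeline; forwarding the lines to an output is left out of the sketch.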

II. Lightweight log collection with Spring Boot Logback + Filebeat + Elasticsearch

1. Configure Spring Boot Logback to write logs in JSON format.

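logback.xml (because the file uses <springProperty>, Spring Boot needs to load it as logback-spring.xml or through the logging.config property):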

<?xml version="1.0" encoding="UTF-8"?>
<!-- Log levels from lowest to highest: TRACE < DEBUG < INFO < WARN < ERROR. If the level is set to WARN, messages below WARN are not output. -->
<configuration scan="true" scanPeriod="10 seconds">
    <contextName>logback</contextName>
    <!-- name is the variable's name and value is its value. The defined value is inserted into the logger context and can then be referenced with "${}". -->
    <!-- Log path -->
    <springProperty scope="context" name="log.path" source="path.log"/>
    <!-- appName -->
    <springProperty scope="context" name="appName" source="spring.application.name" defaultValue="test"/>
    <!-- Maximum retention time (days) -->
    <property name="maxHistory" value="15"/>
    <!-- Depth of the async buffer queue; this value affects performance. The default is 256. -->
    <property name="queueSize" value="512"></property>

    <!-- 0. Log format and color rendering -->
    <!-- Converter classes required for colored logs -->
    <conversionRule conversionWord="clr" converterClass="org.springframework.boot.logging.logback.ColorConverter"/>
    <conversionRule conversionWord="wex"
                    converterClass="org.springframework.boot.logging.logback.WhitespaceThrowableProxyConverter"/>
    <conversionRule conversionWord="wEx"
                    converterClass="org.springframework.boot.logging.logback.ExtendedWhitespaceThrowableProxyConverter"/>
    <!-- Colored log format -->
    <property name="CONSOLE_LOG_PATTERN"
              value=" ${CONSOLE_LOG_PATTERN:-%clr(%d{yyyy-MM-dd HH:mm:ss.SSS}){faint} %clr(${LOG_LEVEL_PATTERN:-%5p}) %clr(${PID:- }){magenta} %clr(---){faint} %clr([%15.15t]){faint} %clr(%-40.40logger{200}){cyan} %clr(:){faint} %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}}"/>
    <property name="FILE_LOG_PATTERN"
              value="|%d{yyyy-MM-dd HH:mm:ss}| %thread |%level| |%class| %ip |%method| %line %msg%n"/>
    <!-- IP conversion word: implemented in a class and registered here -->
    <conversionRule conversionWord="ip" converterClass="com.qdport.admin.config.IPLogConfig"/>

    <!-- 1. Output to the console -->
    <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <!-- This appender is for development. Only the lowest level is configured; the console prints messages at or above this level. -->
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>debug</level>
        </filter>
        <encoder>
            <Pattern>${FILE_LOG_PATTERN}</Pattern>
            <!-- Character set -->
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <!-- 2.1 All log levels, rolled over by time -->
    <appender name="ALL_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <!-- Path and name of the log file currently being written -->
        <file>log/log.log</file>
        <!-- Log file output format -->
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <jsonFactoryDecorator class="net.logstash.logback.decorate.CharacterEscapesJsonFactoryDecorator">
                <escape>
                    <targetCharacterCode>10</targetCharacterCode>
                    <escapeSequence>\u2028</escapeSequence>
                </escape>
            </jsonFactoryDecorator>
            <providers>
                <pattern>
                    <pattern>
                        {
                        "createTime":"%d{yyyy-MM-dd HH:mm:ss.SSS}",
                        "ip": "%ip",
                        "app": "${appName}",
                        "level": "%level",
                        "trace": "%X{X-B3-TraceId:-}",
                        "span": "%X{X-B3-SpanId:-}",
                        "parent": "%X{X-B3-ParentSpanId:-}",
                        "thread": "%thread",
                        "class": "%logger",
                        "method": "%method",
                        "line": "%line",
                        "message": "%message",
                        "exception": "%exception{200}"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
        <!-- Rolling policy: roll by date and by size -->
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <!-- Archived log files -->
            <fileNamePattern>log/test-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>100MB</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
            <!-- Number of days to keep log files -->
            <maxHistory>${maxHistory}</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="ASYNC_JSON_FILE" class="ch.qos.logback.classic.AsyncAppender">
        <!-- Do not lose logs. By default, once the queue is 80% full, TRACE, DEBUG, and INFO events are discarded. -->
        <discardingThreshold>0</discardingThreshold>
        <!-- Change the default queue depth; this value affects performance. The default is 256. -->
        <queueSize>${queueSize}</queueSize>
        <appender-ref ref="ALL_FILE"/>
    </appender>

    <root level="info">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="ASYNC_JSON_FILE"/>
    </root>
</configuration>
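To make the effect of the discardingThreshold setting on the ASYNC_JSON_FILE appender concrete, the following is a simplified model of the discard rule, not logback's own code. The class and method names are hypothetical. It assumes the behavior described in the logback documentation: the default threshold is 20% of the queue size, and only events at INFO level or below are eligible for discarding.

```java
/** Simplified model of when an async appender would drop an event (illustrative only). */
public class AsyncDiscardSketch {
    enum Level { TRACE, DEBUG, INFO, WARN, ERROR }

    /** Default threshold when discardingThreshold is not configured: one fifth of the queue size. */
    static int defaultThreshold(int queueSize) {
        return queueSize / 5;
    }

    /** An event is dropped only if the queue is nearly full AND the event is INFO or lower. */
    static boolean wouldDiscard(Level level, int remainingCapacity, int discardingThreshold) {
        boolean queueNearlyFull = remainingCapacity < discardingThreshold;
        boolean lowPriority = level.compareTo(Level.INFO) <= 0;
        return queueNearlyFull && lowPriority;
    }
}
```

With a threshold of 0, `remainingCapacity < 0` can never be true, so nothing is discarded; the trade-off is that a full queue makes the logging thread wait unless neverBlock is enabled.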

pom.xml dependencies:

<parent>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-parent</artifactId>
    <version>2.2.13.RELEASE</version>
</parent>

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
    </dependency>
    <!-- Logging dependencies: begin -->
    <dependency>
        <groupId>ch.qos.logback</groupId>
        <artifactId>logback-core</artifactId>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-api</artifactId>
        <version>1.7.28</version>
    </dependency>
    <dependency>
        <groupId>net.logstash.logback</groupId>
        <artifactId>logstash-logback-encoder</artifactId>
        <version>6.2</version>
    </dependency>
    <dependency>
        <groupId>ch.qos.logback</groupId>
        <artifactId>logback-classic</artifactId>
        <version>1.2.3</version>
    </dependency>
    <dependency>
        <groupId>ch.qos.logback</groupId>
        <artifactId>logback-access</artifactId>
        <version>1.2.3</version>
    </dependency>
    <!-- Logging dependencies: end -->
</dependencies>

Log file output format:

{"createTime":"2021-07-29 17:27:27.562","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"background-preinit","class":"org.hibernate.validator.internal.util.Version","method":"<clinit>","line":"21","message":"HV000001: Hibernate Validator 6.0.22.Final","exception":""}

{"createTime":"2021-07-29 17:27:27.603","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"com.qdport.admin.AdminApplication","method":"logStarting","line":"55","message":"Starting AdminApplication on LAPTOP-9N9JJQV8 with PID 9424 (D:\\proc_\\spring-boot-test\\target\\classes started by EDY in D:\\-saas\\test-test)","exception":""}

{"createTime":"2021-07-29 17:27:27.604","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"com.qdport.admin.AdminApplication","method":"logStartupProfileInfo","line":"651","message":"No active profile set, falling back to default profiles: default","exception":""}

{"createTime":"2021-07-29 17:27:29.151","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"org.springframework.boot.web.embedded.tomcat.TomcatWebServer","method":"initialize","line":"91","message":"Tomcat initialized with port(s): 8080 (http)","exception":""}

{"createTime":"2021-07-29 17:27:29.199","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"org.apache.coyote.http11.Http11NioProtocol","method":"log","line":"173","message":"Initializing ProtocolHandler [\"http-nio-8080\"]","exception":""}

{"createTime":"2021-07-29 17:27:29.200","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"org.apache.catalina.core.StandardService","method":"log","line":"173","message":"Starting service [Tomcat]","exception":""}

{"createTime":"2021-07-29 17:27:29.200","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"org.apache.catalina.core.StandardEngine","method":"log","line":"173","message":"Starting Servlet engine: [Apache Tomcat/9.0.41]","exception":""}

{"createTime":"2021-07-29 17:27:29.287","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"org.apache.catalina.core.ContainerBase.[Tomcat].[localhost].[/]","method":"log","line":"173","message":"Initializing Spring embedded WebApplicationContext","exception":""}

{"createTime":"2021-07-29 17:27:29.287","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"org.springframework.boot.web.servlet.context.ServletWebServerApplicationContext","method":"prepareWebApplicationContext","line":"283","message":"Root WebApplicationContext: initialization completed in 1563 ms","exception":""}

{"createTime":"2021-07-29 17:27:29.499","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor","method":"initialize","line":"181","message":"Initializing ExecutorService 'applicationTaskExecutor'","exception":""}

{"createTime":"2021-07-29 17:27:29.663","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"org.apache.coyote.http11.Http11NioProtocol","method":"log","line":"173","message":"Starting ProtocolHandler [\"http-nio-8080\"]","exception":""}

{"createTime":"2021-07-29 17:27:29.690","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"org.springframework.boot.web.embedded.tomcat.TomcatWebServer","method":"start","line":"203","message":"Tomcat started on port(s): 8080 (http) with context path ''","exception":""}

{"createTime":"2021-07-29 17:27:29.693","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"com.qdport.admin.AdminApplication","method":"logStarted","line":"61","message":"Started AdminApplication in 2.729 seconds (JVM running for 4.692)","exception":""}

{"createTime":"2021-07-29 17:27:29.761","ip":"10.17.8.241","app":"test","level":"INFO","trace":"","span":"","parent":"","thread":"main","class":"com.qdport.admin.AdminApplication","method":"main","line":"24","message":"-------------ip:10.17.8.241:10.17.8.241","exception":""}

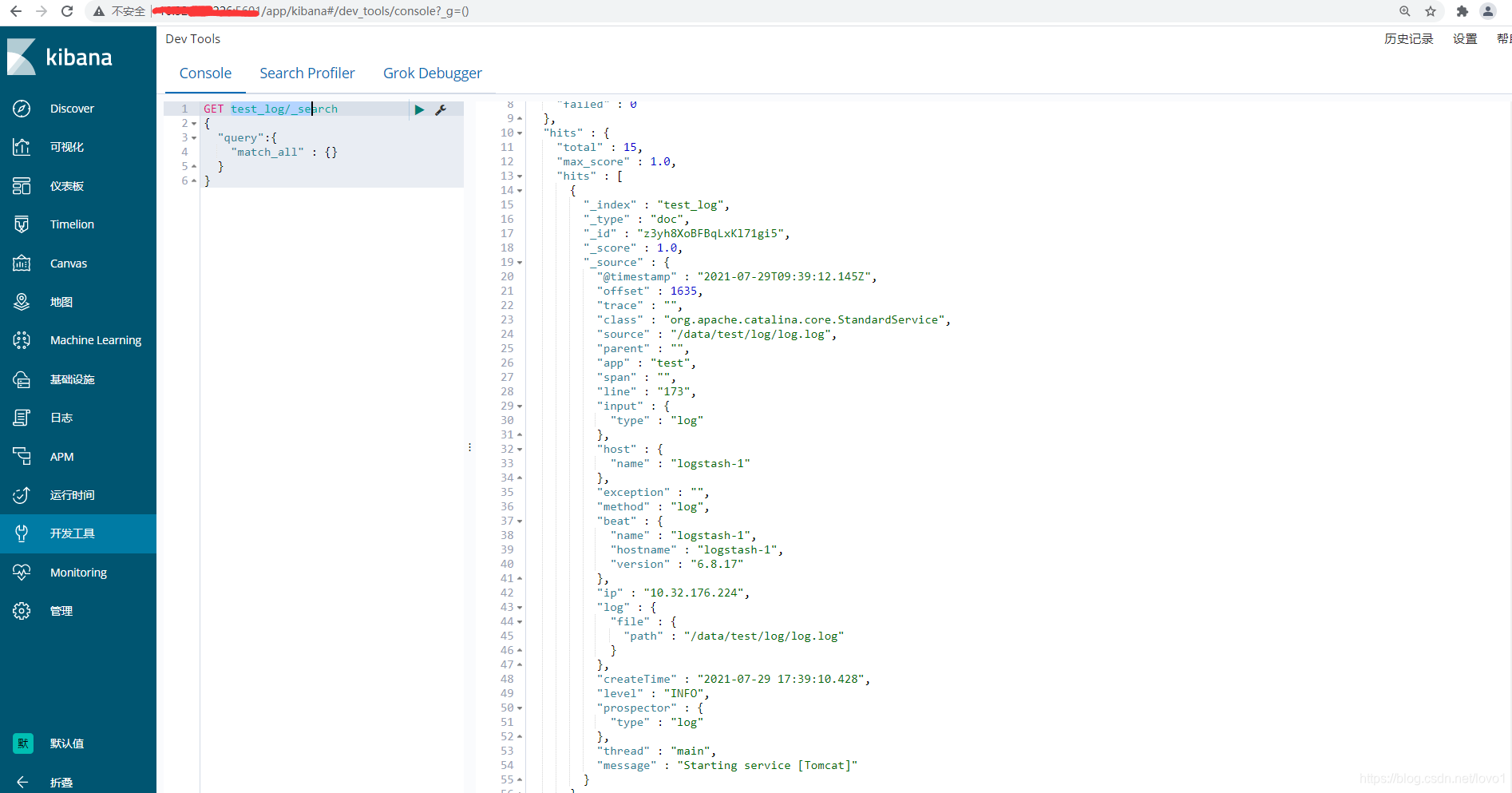
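The ip field in the output comes from the %ip conversion word, which logback.xml maps to com.qdport.admin.config.IPLogConfig. That class is not shown in this article. A custom conversion word is normally implemented by extending ch.qos.logback.classic.pattern.ClassicConverter and overriding convert(ILoggingEvent); the lookup it delegates to might resemble the sketch below. The helper name `LocalIpResolver` and its selection rule (first non-loopback IPv4 address) are assumptions made for illustration.

```java
import java.net.Inet4Address;
import java.net.InetAddress;
import java.net.NetworkInterface;
import java.net.SocketException;
import java.util.Collections;
import java.util.Enumeration;

/** Hypothetical helper: resolves the host's IPv4 address once and caches it for every log line. */
public class LocalIpResolver {
    private static final String FALLBACK = "127.0.0.1";
    private static volatile String cached;

    /** Returns the cached address, resolving it on first use. */
    public static String localIp() {
        String ip = cached;
        if (ip == null) {
            ip = resolve();
            cached = ip;
        }
        return ip;
    }

    /** Picks the first IPv4 address on an interface that is up and not loopback. */
    static String resolve() {
        try {
            Enumeration<NetworkInterface> nics = NetworkInterface.getNetworkInterfaces();
            if (nics == null) {
                return FALLBACK;
            }
            for (NetworkInterface nic : Collections.list(nics)) {
                if (!nic.isUp() || nic.isLoopback()) {
                    continue;
                }
                for (InetAddress addr : Collections.list(nic.getInetAddresses())) {
                    if (addr instanceof Inet4Address) {
                        return addr.getHostAddress();
                    }
                }
            }
        } catch (SocketException e) {
            // Interface enumeration failed; fall back rather than break logging.
        }
        return FALLBACK;
    }
}
```

Caching matters here because the converter runs for every log event; on hosts with several network interfaces the selection rule would likely need adjusting.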

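2. Filebeat watches the Spring Boot project's log output directory, harvests the log files, parses the JSON log lines, and ships them to Elasticsearch.

Write the Filebeat configuration file filebeat_config.yml: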

filebeat.inputs:
- type: log # input type
  paths:
    - /data/test/log/*.log # directory containing the log files
  json.keys_under_root: true
  json.overwrite_keys: true
  json.add_error_key: true
processors:
- decode_json_fields:
    fields: ["inner"]
output.elasticsearch:
  hosts: ["10.132.176.216:9200"] # Elasticsearch address; separate multiple addresses with commas
  indices:
    - index: "test_log" # index name

Filebeat start command. Run it from the root of the extracted Filebeat directory and pass the configuration file:

./filebeat -e -c filebeat_config.yml -d "publish"
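Once Filebeat is running, one way to check that events reached the test_log index is to query Elasticsearch's URI search endpoint (`_search` with a `q` parameter). The sketch below assumes Java 11 or later for java.net.http, which is newer than what this Spring Boot 2.2 project may be built with; the class name `LogSearchSketch` and its method names are hypothetical.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Minimal check that log documents arrived in Elasticsearch (illustrative only). */
public class LogSearchSketch {

    /** Builds a URI-search request such as /test_log/_search?size=5&q=level%3AERROR. */
    static URI searchUri(String hostAndPort, String index, String luceneQuery, int size) {
        String q = URLEncoder.encode(luceneQuery, StandardCharsets.UTF_8);
        return URI.create("http://" + hostAndPort + "/" + index + "/_search?size=" + size + "&q=" + q);
    }

    /** Sends the GET request and returns the raw JSON response body. */
    static String search(URI uri) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(uri).GET().build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }
}
```

For example, `search(searchUri("10.132.176.216:9200", "test_log", "level:INFO", 5))` would return up to five INFO documents as raw JSON, assuming the cluster is reachable without authentication.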

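Summary

Filebeat can also hand events to an Elasticsearch ingest pipeline that uses a Grok processor to extract fields by regular-expression matching, but that requires writing tedious regular expressions. It is simpler to use LoggingEventCompositeJsonEncoder in Logback to write log content directly as JSON. Filebeat is fully decoupled from the business service: it only needs to watch the log output directory.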