
Error message

    21/07/20 18:31:25 [task-result-getter-1] WARN TaskSetManager: Lost task 491.1 in stage 10.0 (TID 5637, BJLFRZ-10k-210-143.hadoop.jd.local, executor 94): org.apache.spark.SparkException: Task failed while writing rows.
        at org.apache.spark.sql.execution.datasources.FileFormatWriter$.executeTask(FileFormatWriter.scala:306)
        at org.apache.spark.sql.execution.datasources.FileFormatWriter$.$anonfun$write$15(FileFormatWriter.scala:215)
        at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
        at org.apache.spark.scheduler.Task.run(Task.scala:129)
        at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:462)
        at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1468)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:465)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
        at java.lang.Thread.run(Thread.java:745)
    Caused by: java.lang.NullPointerException
        at java.lang.System.arraycopy(Native Method)
        at org.apache.hadoop.hive.ql.io.orc.DynamicByteArray.add(DynamicByteArray.java:115)
        at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree.addNewKey(StringRedBlackTree.java:48)
        at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree.add(StringRedBlackTree.java:55)
        at org.apache.hadoop.hive.ql.io.orc.WriterImpl$StringTreeWriter.write(WriterImpl.java:1216)
        at org.apache.hadoop.hive.ql.io.orc.WriterImpl$StructTreeWriter.write(WriterImpl.java:1739)
        at org.apache.hadoop.hive.ql.io.orc.WriterImpl.addRow(WriterImpl.java:2407)
        at org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat$OrcRecordWriter.write(OrcOutputFormat.java:86)
        at org.apache.spark.sql.hive.execution.HiveOutputWriter.write(HiveFileFormat.scala:156)
        at org.apache.spark.sql.execution.datasources.SingleDirectoryDataWriter.write(FileFormatDataWriter.scala:151)
        at org.apache.spark.sql.execution.datasources.FileFormatWriter$.$anonfun$executeTask$1(FileFormatWriter.scala:286)
        at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1502)
        at org.apache.spark.sql.execution.datasources.FileFormatWriter$.executeTask(FileFormatWriter.scala:294)
        ... 9 more
        Suppressed: java.lang.IndexOutOfBoundsException: Index 177 is outside of 0..176
            at org.apache.hadoop.hive.ql.io.orc.DynamicIntArray.get(DynamicIntArray.java:73)
            at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree$VisitorContextImpl.setPosition(StringRedBlackTree.java:138)
            at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree.recurse(StringRedBlackTree.java:151)
            at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree.recurse(StringRedBlackTree.java:153)
            at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree.recurse(StringRedBlackTree.java:150)
            at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree.recurse(StringRedBlackTree.java:153)
            at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree.recurse(StringRedBlackTree.java:153)
            at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree.recurse(StringRedBlackTree.java:153)
            at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree.recurse(StringRedBlackTree.java:153)
            at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree.recurse(StringRedBlackTree.java:150)
            at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree.recurse(StringRedBlackTree.java:150)
            at org.apache.hadoop.hive.ql.io.orc.StringRedBlackTree.visit(StringRedBlackTree.java:163)
            at org.apache.hadoop.hive.ql.io.orc.WriterImpl$StringTreeWriter.flushDictionary(WriterImpl.java:1290)
            at org.apache.hadoop.hive.ql.io.orc.WriterImpl$StringTreeWriter.writeStripe(WriterImpl.java:1249)
            at org.apache.hadoop.hive.ql.io.orc.WriterImpl$StructTreeWriter.writeStripe(WriterImpl.java:1749)
            at org.apache.hadoop.hive.ql.io.orc.WriterImpl.flushStripe(WriterImpl.java:2138)
            at org.apache.hadoop.hive.ql.io.orc.WriterImpl.close(WriterImpl.java:2427)
            at org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat$OrcRecordWriter.close(OrcOutputFormat.java:106)
            at org.apache.spark.sql.hive.execution.HiveOutputWriter.close(HiveFileFormat.scala:161)
            at org.apache.spark.sql.execution.datasources.FileFormatDataWriter.releaseResources(FileFormatDataWriter.scala:68)
            at org.apache.spark.sql.execution.datasources.FileFormatDataWriter.abort(FileFormatDataWriter.scala:94)
            at org.apache.spark.sql.execution.datasources.FileFormatWriter$.$anonfun$executeTask$2(FileFormatWriter.scala:291)
            at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1513)
            ... 10 more

Diagnosis

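Searching online for similar reports, the explanation I found was that the target table is stored as ORC (a compressed columnar format) and the ORC writer does not accept null values being written, which produces the NullPointerException (in `DynamicByteArray.add`) and the IndexOutOfBoundsException shown above.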

That explained the error, but none of the fixes I could find online solved my problem.

Suggestion 1:

**hive.exec.dynamic.partition = true**

**hive.exec.dynamic.partition.mode = strict**

**hive.exec.max.dynamic.partitions.pernode = 100**

**hive.exec.max.dynamic.partitions = 1000**

**hive.exec.max.created.files = 100000**

**hive.error.on.empty.partition = false**

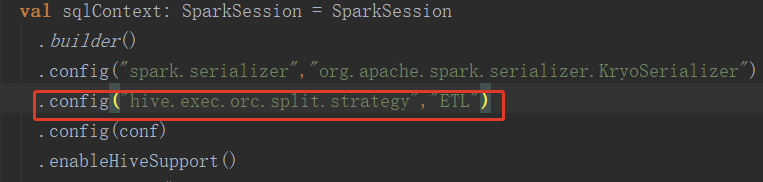

Suggestion 2:

set hive.exec.orc.split.strategy=ETL

Neither of these fixed my bug.

My solution

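Since the problem had been pinned down to null values being written into the ORC table, the remedy was to replace nulls with an empty string or a default value.

So I added a null check on every field, substituting a default value whenever the field was empty.

Even with every field checked, the same error kept appearing (sigh, this bug ran deep).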
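A minimal Scala sketch of that per-field check, outside Spark so it runs on its own. It assumes a row is modeled as a plain `Map[String, Any]` and that `null`, `None`, and blank strings all count as "empty"; the names `NullDefaults`, `clean`, and `fillDefaults` are hypothetical, not taken from the original job. In a real Spark job the same idea is more commonly expressed on the DataFrame itself, for example with `df.na.fill(...)` or `coalesce`.

```scala
object NullDefaults {
  // Hypothetical rule for "empty": null, None, or a blank string.
  // Some(x) is unwrapped so the writer receives a plain value.
  def clean(value: Any, default: String): Any = value match {
    case null | None                 => default
    case Some(inner)                 => clean(inner, default)
    case s: String if s.trim.isEmpty => default
    case other                       => other
  }

  // Apply the rule to every field; fields without a configured default fall back to "".
  def fillDefaults(row: Map[String, Any], defaults: Map[String, String]): Map[String, Any] =
    row.map { case (name, value) => name -> clean(value, defaults.getOrElse(name, "")) }
}
```

For example, `NullDefaults.fillDefaults(Map("name" -> None, "city" -> null), Map("name" -> "unknown"))` maps `name` to `"unknown"` and `city` to `""`. Note that this only helps if the check sees the value in the form it has when it is finally written.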

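I eventually found the real cause. Our Spark job is written in Scala, and the field that contained nulls was BASE64-encoded before being written to Hive. A Scala `None`, once BASE64-encoded, is no longer empty, so my null filter never matched.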
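The trap can be reproduced without Spark. This sketch assumes the field value reached the encoder as a Scala `Option` (or a raw `null`) and was turned into a string by interpolation before encoding; that conversion step is an assumption about how the original job worked, and `Base64Pitfall`, `encodeNaive`, and `isBlank` are hypothetical names. The encoding itself uses the JDK's `java.util.Base64`.

```scala
import java.nio.charset.StandardCharsets
import java.util.Base64

object Base64Pitfall {
  private def b64(s: String): String =
    Base64.getEncoder.encodeToString(s.getBytes(StandardCharsets.UTF_8))

  // Hypothetical naive step: stringify whatever arrives, then encode.
  // s"$v" turns None into "None" and null into "null", so nothing stays empty.
  def encodeNaive(v: Any): String = b64(s"$v")

  // The emptiness check, applied too late (after encoding).
  def isBlank(s: String): Boolean = s == null || s.trim.isEmpty
}
```

Here `encodeNaive(None)` yields `"Tm9uZQ=="` and `encodeNaive(null)` yields `"bnVsbA=="`; neither is blank, so a check run on the encoded value lets them straight through.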

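In the end, I moved the null check to before the BASE64 encoding and substituted the default value there. Problem solved!

The BASE64 step made this bug take a long time to track down, so I'm recording it here.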
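The reordered step, sketched in Scala under the same assumptions as before: resolve empties on the raw value first, then encode. `Base64Fixed`, `normalize`, `encodeSafe`, and the empty-string default are illustrative choices, not the author's code.

```scala
import java.nio.charset.StandardCharsets
import java.util.Base64

object Base64Fixed {
  private def b64(s: String): String =
    Base64.getEncoder.encodeToString(s.getBytes(StandardCharsets.UTF_8))

  // Step 1: replace null, None, or blank with the default on the raw value.
  def normalize(v: Any, default: String): String = v match {
    case null | None                 => default
    case Some(inner)                 => normalize(inner, default)
    case s: String if s.trim.isEmpty => default
    case other                       => other.toString
  }

  // Step 2: encode only the cleaned value.
  // With the default "" the result is "", since Base64 of an empty input is empty.
  def encodeSafe(v: Any, default: String = ""): String = b64(normalize(v, default))
}
```

With this order `encodeSafe(None)` returns `""` while `encodeSafe(Some("abc"))` returns `"YWJj"`. Whether an empty string or an encoded sentinel value is the better default depends on what downstream readers of the table expect.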