Summer Study Log

I. Setting up and testing a Hadoop cluster

Along the way I also picked up a working knowledge of Linux commands and Xshell.

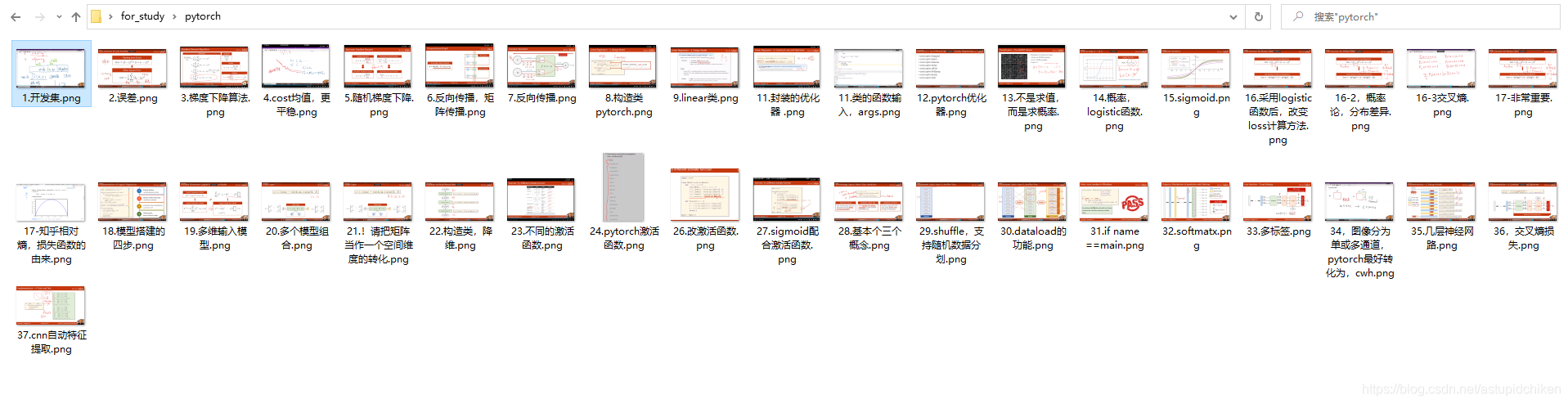

II. Machine learning

I built a few basic models, following the PyTorch tutorial videos by 刘二大人 on Bilibili.

```python

# 1. Linear model y = x * w: brute-force search over w
# import numpy as np
# import matplotlib.pyplot as plt
#
# x_data = [1.0, 2.0, 3.0]
# y_data = [2.0, 4.0, 6.0]
#
# def forward(x):
#     return x * w  # w is the global set by the loop below
#
# def loss(x, y):
#     y_pred = forward(x)
#     return (y_pred - y) * (y_pred - y)
#
# w_list = []
# mse_list = []
#
# for w in np.arange(0.0, 4.1, 0.1):
#     print('w=', w)
#     l_sum = 0
#     for x_val, y_val in zip(x_data, y_data):
#         y_pred_val = forward(x_val)
#         loss_val = loss(x_val, y_val)
#         l_sum += loss_val
#         print('\t', x_val, y_val, y_pred_val, loss_val)
#     print('MSE=', l_sum / 3)
#     w_list.append(w)
#     mse_list.append(l_sum / 3)
#
# plt.plot(w_list, mse_list)
# plt.ylabel('loss')
# plt.xlabel('w')
# plt.show()

# 2. Gradient descent (batch): each update uses the gradient averaged over all samples
# import matplotlib.pyplot as plt
#
# x_data = [1.0, 2.0, 3.0]
# y_data = [2.0, 4.0, 6.0]
# w = 1.0  # initial guess
#
# def forward(x):
#     return x * w
#
# def cost(xs, ys):
#     total = 0
#     for x, y in zip(xs, ys):
#         y_pred = forward(x)
#         total += (y_pred - y) ** 2
#     return total / len(xs)
#
# def gradient(xs, ys):
#     grad = 0
#     for x, y in zip(xs, ys):
#         grad += 2 * x * (x * w - y)
#     return grad / len(xs)
#
# epoch_list = []
# cost_list = []
# print('predict (before training)', 4, forward(4))
# for epoch in range(100):
#     cost_val = cost(x_data, y_data)
#     grad_val = gradient(x_data, y_data)
#     w -= 0.01 * grad_val  # learning rate 0.01
#     print('Epoch', epoch, 'w=', w, 'loss=', cost_val)
#     epoch_list.append(epoch)
#     cost_list.append(cost_val)
# print('predict (after training)', 4, forward(4))
#
# plt.plot(epoch_list, cost_list)
# plt.ylabel('loss')
# plt.xlabel('epoch')
# plt.show()
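# ----------------------------------------------------------------------
# Sketch added for illustration (not from the original notes): a finite-difference
# check of the gradient formula 2*x*(x*w - y) used above. For
# cost(w) = mean((x*w - y)^2), the derivative with respect to w is
# mean(2*x*(x*w - y)); the central difference
# (cost(w + eps) - cost(w - eps)) / (2*eps) should agree with it closely.
# The helper names and the eps value are assumptions made for this example.
def mse_cost(w, xs, ys):
    """Mean squared error of the model y = x * w."""
    return sum((x * w - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def analytic_gradient(w, xs, ys):
    """Closed-form derivative of mse_cost with respect to w."""
    return sum(2 * x * (x * w - y) for x, y in zip(xs, ys)) / len(xs)


def numeric_gradient(w, xs, ys, eps=1e-6):
    """Central-difference approximation of the same derivative."""
    return (mse_cost(w + eps, xs, ys) - mse_cost(w - eps, xs, ys)) / (2 * eps)


check_xs = [1.0, 2.0, 3.0]
check_ys = [2.0, 4.0, 6.0]
for w_check in (0.0, 1.0, 2.0, 3.5):
    # The two columns should match to several decimal places; both vanish at w = 2.
    print(w_check,
          analytic_gradient(w_check, check_xs, check_ys),
          numeric_gradient(w_check, check_xs, check_ys))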

# Stochastic gradient descent: update w after every single sample
# x_data = [1.0, 2.0, 3.0]
# y_data = [2.0, 4.0, 6.0]
# w = 1.0
#
# def forward(x):
#     return x * w
#
# def loss(x, y):
#     y_pred = forward(x)
#     return (y_pred - y) ** 2
#
# def gradient(x, y):
#     return 2 * x * (x * w - y)
#
# print('predict (before training)', 4, forward(4))
# for epoch in range(100):
#     for x, y in zip(x_data, y_data):
#         grad_val = gradient(x, y)
#         w -= 0.01 * grad_val
#         l = loss(x, y)
#     print('Epoch', epoch, 'w=', w, 'loss=', l)
# print('predict (after training)', 4, forward(4))

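# 3. Backpropagation with PyTorch autograd
# import torch
#
# x_data = [1.0, 2.0, 3.0]
# y_data = [2.0, 4.0, 6.0]
# w = torch.Tensor([1.0])
# w.requires_grad = True  # tell autograd to track gradients for w
#
# def forward(x):
#     return x * w
#
# def loss(x, y):
#     y_pred = forward(x)
#     return (y_pred - y) ** 2
#
# print('predict (before training)', 4, forward(4).item())
# for epoch in range(100):
#     for x, y in zip(x_data, y_data):
#         l = loss(x, y)  # forward pass builds the computation graph
#         l.backward()  # backward pass fills w.grad
#         print('\tgrad:', x, y, w.grad.item())
#         w.data = w.data - 0.01 * w.grad.data  # update through .data so the step itself is not tracked
#         w.grad.data.zero_()  # gradients accumulate, so reset them after every step
#     print('progress:', epoch, l.item())
# print('predict (after training)', 4, forward(4).item())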

# 3. Exercise: fit the quadratic model y = w1*x^2 + w2*x + b with autograd
# import torch
#
# x_data = [1.0, 2.0, 3.0]
# y_data = [2.0, 4.0, 6.0]
# w1 = torch.Tensor([1.0])
# w2 = torch.Tensor([1.0])
# b = torch.Tensor([1.0])
# w1.requires_grad = True  # all three parameters need gradients
# w2.requires_grad = True
# b.requires_grad = True
#
# def forward(x):
#     return w1 * (x ** 2) + w2 * x + b
#
# def loss(x, y):
#     y_pred = forward(x)
#     return (y_pred - y) ** 2
#
# print('predict (before training)', 4, forward(4).item())
# for epoch in range(200):
#     for x, y in zip(x_data, y_data):
#         l = loss(x, y)
#         l.backward()
#         print('\tgrad:', x, y, w1.grad.item(), w2.grad.item(), b.grad.item())
#         w1.data = w1.data - 0.01 * w1.grad.data
#         w2.data = w2.data - 0.01 * w2.grad.data
#         b.data = b.data - 0.01 * b.grad.data
#         w1.grad.data.zero_()
#         w2.grad.data.zero_()
#         b.grad.data.zero_()
#     print('progress:', epoch, l.item())
# print('predict (after training)', 4, forward(4).item())
# print(w1.item(), w2.item(), b.item())

# 4. Using PyTorch properly: torch.nn (nn = neural network) and a linear model
# import torch
# import matplotlib.pyplot as plt
#
# x_data = torch.Tensor([[1.0], [2.0], [3.0]])
# y_data = torch.Tensor([[2.0], [4.0], [6.0]])
#
# class LinearModel(torch.nn.Module):  # inherit from Module, which supplies most of the machinery
#     def __init__(self):
#         super(LinearModel, self).__init__()  # call the parent constructor
#         self.linear = torch.nn.Linear(1, 1)  # Linear holds the weight w and bias b of y = w*x + b
#
#     def forward(self, x):  # only forward is written by hand; autograd derives the backward pass
#         y_pred = self.linear(x)
#         return y_pred
#
# model = LinearModel()
# criterion = torch.nn.MSELoss(reduction='sum')  # loss function; reduction='sum' replaces the deprecated size_average=False
# optimizer = torch.optim.SGD(model.parameters(), lr=0.01)  # optimizer
#
# epoch_list = []
# loss_list = []
# for epoch in range(100):
#     y_pred = model(x_data)  # forward pass
#     loss = criterion(y_pred, y_data)  # compute the loss
#     print(epoch, loss.item())
#     epoch_list.append(epoch)
#     loss_list.append(loss.item())
#
#     optimizer.zero_grad()  # zero the gradients (not the weights)
#     loss.backward()  # backpropagation
#     optimizer.step()  # update the weights
#
# print('w=', model.linear.weight.item())
# print('b=', model.linear.bias.item())
#
# x_test = torch.tensor([[4.0]])
# y_test = model(x_test)
# print('y_pred=', y_test.data)
#
# plt.plot(epoch_list, loss_list)
# plt.ylabel('loss')
# plt.xlabel('epoch')
# plt.show()

# 5. Image datasets and the logistic function
# import math
# import torchvision
# import matplotlib.pyplot as plt
#
# # MNIST handwritten digits (training and test splits)
# train_set = torchvision.datasets.MNIST(root='../dataset/mnist', train=True, download=True)
# test_set = torchvision.datasets.MNIST(root='../dataset/mnist', train=False, download=True)
# # CIFAR-10 small color images (10 classes, cats and dogs among them); this overwrites the MNIST variables above
# train_set = torchvision.datasets.CIFAR10(root='../dataset/mnist', train=True, download=True)
# test_set = torchvision.datasets.CIFAR10(root='../dataset/mnist', train=False, download=True)
#
# # Plot the logistic function 1 / (1 + e^(-x)) just for fun
# xs = list(range(-100, 100))
# ys = [1 / (1 + math.exp(-x)) for x in xs]
# plt.plot(xs, ys)
# plt.xlabel('x')
# plt.ylabel('logistic(x)')
# plt.show()

# Logistic regression
# import torch
# import torch.nn.functional as F
# import numpy as np
# import matplotlib.pyplot as plt
#
# x_data = torch.Tensor([[1.0], [2.0], [3.0]])  # hours studied per week: 1, 2, 3
# y_data = torch.Tensor([[0], [0], [1]])  # label: 0 = fail, 1 = pass
#
# class LogisticRegressionModel(torch.nn.Module):
#     def __init__(self):
#         super(LogisticRegressionModel, self).__init__()  # call the parent constructor
#         self.linear = torch.nn.Linear(1, 1)
#
#     def forward(self, x):
#         # y_pred = F.sigmoid(self.linear(x))  # same thing via torch.nn.functional
#         y_pred = torch.sigmoid(self.linear(x))  # linear transform, then squash into (0, 1)
#         return y_pred
#
# model = LogisticRegressionModel()
# criterion = torch.nn.BCELoss(reduction='sum')  # binary cross-entropy; replaces the deprecated size_average=False
# optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
#
# for epoch in range(1000):
#     y_pred = model(x_data)
#     loss = criterion(y_pred, y_data)
#     print(epoch, loss.item())
#     optimizer.zero_grad()
#     loss.backward()
#     optimizer.step()
#
# print('w=', model.linear.weight.item())
# print('b=', model.linear.bias.item())
#
# x = np.linspace(0, 10, 200)  # 200 points between 0 and 10 hours
# x_t = torch.Tensor(x).view((200, 1))  # convert to a 200x1 tensor
# y_t = model(x_t)
# y = y_t.data.numpy()
# plt.plot(x, y)
# plt.plot([0, 10], [0.5, 0.5], c='r')  # decision threshold at probability 0.5
# plt.xlabel('hours')
# plt.ylabel('probability of passing')
# plt.grid()
# plt.show()

# 6. Multi-dimensional input features
# import torch
# class Model(torch.nn.Module):  # define the model
#     def __init__(self):
#         super(Model, self).__init__()
#         self.linear = torch.nn.Linear(8, 1)  # 8 input features, 1 output
#         self.sigmoid = torch.nn.Sigmoid()
#
#     def forward(self, x):
#         y_pred = self.sigmoid(self.linear(x))
#         return y_pred
#
# import numpy as np
# import torch
# class Model(torch.nn.Module):  # define the model
#     def __init__(self):
#         super(Model, self).__init__()
#         self.linear1 = torch.nn.Linear(5, 2)  # 5 input features, 2 outputs
#         self.linear2 = torch.nn.Linear(2, 1)  # 2 inputs, 1 output
#         self.sigmoid = torch.nn.Sigmoid()
#
#     def forward(self, x):
#         y_pred1 = self.sigmoid(self.linear1(x))
#         y_pred2 = self.sigmoid(self.linear2(y_pred1))
#         # x = self.sigmoid(self.linear1(x))
#         # x = self.sigmoid(self.linear2(x))
#         return y_pred2
#
# # xy = np.loadtxt('C:/py-need/Lib/site-packages/sklearn/datasets/data/X.csv', delimiter=' ', dtype=np.float32)
# # x_data = torch.from_numpy(xy[:, :-1])
# # y_data = torch.from_numpy(xy[:, [-1]])
# # print(x_data)
# # The lines above load the diabetes data; since I was practicing for math modeling
# # at the time, the 2017 national contest Problem C data is used below instead.
# xy = np.loadtxt('C:/Users/47971/Desktop/Data2.txt', encoding='utf-8', delimiter=',', dtype=np.float32)  # dtype can be float32 or double
# x_data = torch.from_numpy(xy[:, :-1])  # every column except the last is a feature
# y_data = torch.from_numpy(xy[:, [-1]])  # the last column is the label, kept 2-D
# print(x_data)
# print(y_data)
#
# model = Model()
# criterion = torch.nn.BCELoss(reduction='mean')  # loss function; 'mean' averages over samples, 'sum' adds them up (the old size_average=True/False)
# # criterion = torch.nn.BCELoss(reduction='sum')
# optimizer = torch.optim.SGD(model.parameters(), lr=0.1)  # optimizer
#
# for epoch in range(100):
#     # forward pass
#     y_pred = model(x_data)
#     loss = criterion(y_pred, y_data)
#     print(epoch, loss.item())
#
#     # backward pass
#     optimizer.zero_grad()
#     loss.backward()
#     # update the weights
#     optimizer.step()

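# 7. Loading and batching data
import numpy as np
import torch
from torch.utils.data import Dataset
from torch.utils.data import DataLoader

# class DiabetesDataset(Dataset):  # subclass Dataset
#     def __init__(self):
#         pass
#
#     def __getitem__(self, index):
#         pass
#
#     def __len__(self):
#         pass
#
# dataset = DiabetesDataset()
# train_loader = DataLoader(dataset=dataset, batch_size=32, shuffle=True, num_workers=2)
# DataLoader arguments: dataset is the Dataset object, batch_size is the mini-batch size,
# shuffle says whether to reshuffle the samples each epoch, and num_workers is the number
# of worker subprocesses used for loading (processes, not threads).
#
# Iterating at module level like this raises an error on Windows when num_workers > 0,
# because each worker process re-imports the script:
# for epoch in range(100):
#     for i, data in enumerate(train_loader, 0):
#         ...
#
# so the loop has to be wrapped in a main guard:
# if __name__ == '__main__':
#     for epoch in range(100):
#         for i, data in enumerate(train_loader, 0):
#             ...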

# This is how the training should actually be set up
# class DiabetesDataset(Dataset):
#     def __init__(self, filepath):
#         xy = np.loadtxt(filepath, delimiter=',', dtype=np.float32)
#         self.len = xy.shape[0]  # number of samples
#         self.x_data = torch.from_numpy(xy[:, :-1])
#         self.y_data = torch.from_numpy(xy[:, [-1]])
#
#     def __getitem__(self, index):
#         return self.x_data[index], self.y_data[index]
#
#     def __len__(self):
#         return self.len
#
# dataset = DiabetesDataset('C:/Users/47971/Desktop/for_study/diabetes.csv')
# train_loader = DataLoader(dataset=dataset, batch_size=32, shuffle=True, num_workers=2)
#
# class Model(torch.nn.Module):  # define the model
#     def __init__(self):
#         super(Model, self).__init__()
#         self.linear1 = torch.nn.Linear(8, 4)  # 8 input features, 4 outputs
#         self.linear2 = torch.nn.Linear(4, 2)  # 4 inputs, 2 outputs
#         self.linear3 = torch.nn.Linear(2, 1)  # 2 inputs, 1 output
#         self.sigmoid = torch.nn.Sigmoid()
#
#     def forward(self, x):
#         y_pred1 = self.sigmoid(self.linear1(x))
#         y_pred2 = self.sigmoid(self.linear2(y_pred1))
#         y_pred3 = self.sigmoid(self.linear3(y_pred2))
#         # x = self.sigmoid(self.linear1(x))
#         # x = self.sigmoid(self.linear2(x))
#         # x = self.sigmoid(self.linear3(x))
#         return y_pred3
#
# model = Model()
# criterion = torch.nn.BCELoss(reduction='mean')  # loss function; 'mean' averages over samples, 'sum' adds them up (the old size_average=True/False)
# # criterion = torch.nn.BCELoss(reduction='sum')
# optimizer = torch.optim.SGD(model.parameters(), lr=0.1)  # optimizer
#
# if __name__ == '__main__':
#     for epoch in range(100):
#         for i, data in enumerate(train_loader, 0):  # the second argument is the starting index
#         # for i, (x, y) in enumerate(train_loader, 0):  # unpacking in the loop header also works
#         # for i, (inputs, labels) in enumerate(train_loader, 0):
#             # prepare the data
#             inputs, labels = data
#             # forward pass
#             y_pred = model(inputs)
#             loss = criterion(y_pred, labels)
#             print(epoch, i, loss.item())
#             # backward pass
#             optimizer.zero_grad()
#             loss.backward()
#             # update the weights
#             optimizer.step()
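# ----------------------------------------------------------------------
# Sketch added for illustration (not from the original notes): a pure-Python
# stand-in for what DataLoader's batch_size and shuffle arguments do within one
# epoch. It is not how torch implements DataLoader (no worker processes, no
# tensor collation); iterate_minibatches and its seed parameter are hypothetical
# names made up for this example.
import random


def iterate_minibatches(samples, batch_size, shuffle, seed=0):
    """Yield lists of at most batch_size samples, visiting every sample once."""
    indices = list(range(len(samples)))
    if shuffle:
        random.Random(seed).shuffle(indices)  # reorder the indices, reproducibly
    for start in range(0, len(indices), batch_size):
        yield [samples[i] for i in indices[start:start + batch_size]]


toy_samples = [(float(i), float(2 * i)) for i in range(10)]  # (x, y) pairs
for batch_idx, batch in enumerate(iterate_minibatches(toy_samples, batch_size=4, shuffle=True)):
    # 10 samples with batch_size=4 give batches of 4, 4 and 2 samples
    print(batch_idx, len(batch))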

# That's it for the diabetes example; next, try the image data given later in the course
# import torchvision
# import torch
# from torch.utils.data import DataLoader
# from torchvision import transforms
#
# train_dataset = torchvision.datasets.MNIST(root='../dataset/mnist', train=True, transform=transforms.ToTensor(), download=True)  # MNIST digits, training split
# test_dataset = torchvision.datasets.MNIST(root='../dataset/mnist', train=False, transform=transforms.ToTensor(), download=True)  # MNIST digits, test split
# train_loader = DataLoader(dataset=train_dataset, batch_size=32, shuffle=True)
# test_loader = DataLoader(dataset=test_dataset, batch_size=32, shuffle=True)
#
# if __name__ == '__main__':
#     for epoch in range(100):
#         for batch_idx, (inputs, target) in enumerate(train_loader, 0):  # the second argument is the starting index
#             pass  # the training step goes here
#
# I also registered on Kaggle (through a VPN); it seems pretty good

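# 8. Multi-class classification (softmax and cross-entropy)
# import numpy as np
# y = np.array([1, 0, 0])  # one-hot target: class 0
# z = np.array([0.2, 0.1, -0.1])  # raw scores from the last layer
# y_pred = np.exp(z) / np.exp(z).sum()  # softmax
# loss = (-y * np.log(y_pred)).sum()  # cross-entropy
# print(loss)
#
# import torch
# y = torch.LongTensor([0])  # target given as a class index, not one-hot
# z = torch.Tensor([[0.2, 0.1, -0.1]])
# criterion = torch.nn.CrossEntropyLoss()  # applies softmax internally, so z stays raw
# loss = criterion(z, y)
# print(loss)
#
# import torch
# criterion = torch.nn.CrossEntropyLoss()
# y = torch.LongTensor([2, 0, 1])
# y_pred1 = torch.Tensor([[0.1, 0.2, 0.9],   # highest score at class 2
#                         [1.1, 0.1, 0.2],   # highest score at class 0
#                         [0.2, 2.1, 0.1]])  # highest score at class 1
# y_pred2 = torch.Tensor([[0.8, 0.2, 0.3],
#                         [0.2, 0.3, 0.5],
#                         [0.2, 0.2, 0.5]])
# loss1 = criterion(y_pred1, y)  # predictions agree with y, so the loss is small
# loss2 = criterion(y_pred2, y)  # predictions disagree with y, so the loss is larger
# print(loss1)
# print(loss2)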
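# ----------------------------------------------------------------------
# Sketch added for illustration (not from the original notes): the two
# CrossEntropyLoss values above recomputed by hand. torch.nn.CrossEntropyLoss
# takes raw scores, applies log-softmax, and with its default reduction
# averages the negative log-probability of the target class over the batch.
# The function and variable names here are made up for this example.
import math


def cross_entropy(logits, target):
    """-log(softmax(logits)[target]) for one sample, written via log-sum-exp."""
    log_sum_exp = math.log(sum(math.exp(z) for z in logits))
    return log_sum_exp - logits[target]


def mean_cross_entropy(batch_logits, targets):
    """Average the per-sample cross-entropy over a batch."""
    losses = [cross_entropy(z, t) for z, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


targets = [2, 0, 1]
scores_matching = [[0.1, 0.2, 0.9], [1.1, 0.1, 0.2], [0.2, 2.1, 0.1]]
scores_mismatched = [[0.8, 0.2, 0.3], [0.2, 0.3, 0.5], [0.2, 0.2, 0.5]]
print(mean_cross_entropy(scores_matching, targets))    # about 0.4966
print(mean_cross_entropy(scores_mismatched, targets))  # about 1.2389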

#

# MNIST dataset
# import torch
# from torchvision import transforms  # image preprocessing tools
# from torchvision import datasets
# from torch.utils.data import DataLoader
# import torch.nn.functional as F  # for relu, used here instead of sigmoid
# import torch.optim as optim  # optimizers
#
# batch_size = 64
# # ToTensor converts images to tensors; Normalize takes the mean (0.1307) and standard deviation (0.3081)
# transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])
# # download the training set
# train_dataset = datasets.MNIST(root='../dataset/mnist/', train=True, download=True, transform=transform)
# train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
# # download the test set
# test_dataset = datasets.MNIST(root='../dataset/mnist/', train=False, download=True, transform=transform)
# test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)
#
# class NetModel(torch.nn.Module):
#     def __init__(self):
#         super(NetModel, self).__init__()
#         self.l1 = torch.nn.Linear(784, 512)
#         self.l2 = torch.nn.Linear(512, 256)
#         self.l3 = torch.nn.Linear(256, 128)
#         self.l4 = torch.nn.Linear(128, 64)
#         self.l5 = torch.nn.Linear(64, 10)
#
#     def forward(self, x):
#         x = x.view(-1, 784)  # flatten each 28x28 image into a row of 784 values; -1 infers the batch size
#         x = F.relu(self.l1(x))
#         x = F.relu(self.l2(x))
#         x = F.relu(self.l3(x))
#         x = F.relu(self.l4(x))
#         return self.l5(x)  # no activation on the last layer: CrossEntropyLoss expects raw scores
#
# model = NetModel()
# # loss function and optimizer
# criterion = torch.nn.CrossEntropyLoss()  # cross-entropy loss
# optimizer = optim.SGD(model.parameters(), lr=0.1, momentum=0.5)  # SGD with momentum
# # Momentum: the plain update adds the negative learning rate times the gradient (dx) to W,
# # which tends to zigzag. Picture putting a person on a slope instead of flat ground: once
# # he starts downhill, inertia keeps him going and he takes fewer detours. That is the
# # momentum update.
#
# def train(epoch):
#     running_loss = 0.0
#     for batch_idx, data in enumerate(train_loader, 0):
#         inputs, target = data
#         optimizer.zero_grad()
#
#         outputs = model(inputs)
#         loss = criterion(outputs, target)
#         loss.backward()
#         optimizer.step()
#
#         running_loss += loss.item()
#         if batch_idx % 300 == 299:  # report the average loss every 300 batches
#             print('[%d,%5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
#             running_loss = 0.0
#
# def test():
#     correct = 0
#     total = 0
#     with torch.no_grad():  # no gradients needed for evaluation
#         for data in test_loader:
#             images, labels = data
#             outputs = model(images)
#             _, predicted = torch.max(outputs.data, dim=1)  # dim=1: index of the largest score in each row
#             total += labels.size(0)
#             correct += (predicted == labels).sum().item()
#     print('accuracy: %d %%' % (100 * correct / total))
#
# if __name__ == '__main__':
#     for epoch in range(10):
#         train(epoch)
#         test()
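# ----------------------------------------------------------------------
# Sketch added for illustration (not from the original notes): the momentum
# update described in the comment above, tried on the toy cost
# mean((x*w - y)^2) from the earlier sections. It follows the rule documented
# for torch.optim.SGD with momentum (v = momentum*v + grad, then w = w - lr*v),
# leaving out dampening and weight decay. The function names and the 30-step
# budget are assumptions made for this example.
def toy_gradient(w, xs=(1.0, 2.0, 3.0), ys=(2.0, 4.0, 6.0)):
    """Gradient of mean((x*w - y)^2) with respect to w."""
    return sum(2 * x * (x * w - y) for x, y in zip(xs, ys)) / len(xs)


def run_sgd(momentum, lr=0.01, steps=30, w=1.0):
    """Run a fixed number of (momentum) SGD steps and return the final w."""
    velocity = 0.0
    for _ in range(steps):
        velocity = momentum * velocity + toy_gradient(w)  # keep part of the previous step
        w -= lr * velocity
    return w


w_plain = run_sgd(momentum=0.0)  # momentum=0 reduces to plain gradient descent
w_momentum = run_sgd(momentum=0.5)
# Both runs head toward the optimum w = 2; on this toy problem the momentum run gets closer in 30 steps.
print('plain SGD:', w_plain, 'momentum 0.5:', w_momentum)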

```

III. Next steps

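1. Finish the big data course project

2. Study CNNs and go deeper

3. Work through past math modeling contest problems

Note: it was a bumpy ride, but I got started on everything I wanted to learn, so I'm happy and wanted to write it down.

I ran into problems while setting up Hadoop, but I felt I could solve them, and I fixed quite a few bugs along the way.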