For the default environment configuration, refer to

ZooKeeper configuration


Extract the archive and rename the directory to zk

```shell
tar -zxvf zookeeper-3.4.10.tar.gz
mv zookeeper-3.4.10 zk
```

Configure environment variables

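```shell
vim /etc/profile
# Append to the end of the file
export ZOOKEEPER_HOME=/software/zk
export PATH=.:$PATH:$ZOOKEEPER_HOME/bin
# Apply the environment variables
source /etc/profile
```

Note: the Java and Hadoop paths need to be configured beforehand.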

```shell
# Complete version
export JAVA_HOME=/software/jdk
export PATH=.:$PATH:$JAVA_HOME/bin
export ZOOKEEPER_HOME=/software/zk
export PATH=.:$PATH:$ZOOKEEPER_HOME/bin
```
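Running the append step more than once leaves duplicate `export` lines in /etc/profile. A minimal sketch of an idempotent append, using a hypothetical helper `append_once` (not part of ZooKeeper or of the original setup):

```shell
#!/usr/bin/env bash
# append_once FILE LINE
# Hypothetical helper: appends LINE to FILE only if an identical line is not
# already present, so re-running the setup does not duplicate exports.
append_once() {
  local file="$1" line="$2"
  touch "$file"
  # -q quiet, -x whole-line match, -F fixed string (no regex)
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Usage: `append_once /etc/profile 'export ZOOKEEPER_HOME=/software/zk'`. Single quotes keep `$PATH` and similar references literal in the file, which is what /etc/profile expects.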

Modify the configuration file

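Rename conf/zoo_sample.cfg to conf/zoo.cfg and configure it:

```shell
cd /software/zk/conf
mv zoo_sample.cfg zoo.cfg
vim zoo.cfg
# Change the data storage path
dataDir=/software/zk/data
# Append the following at the end: 2888 is the quorum communication port
# (followers connect to the leader), 3888 is the leader election port
server.0=hadoop-01:2888:3888
server.1=hadoop-02:2888:3888
server.2=hadoop-03:2888:3888
```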
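The `server.N` lines follow a fixed pattern, so they can be generated from the host list. A minimal sketch, assuming a hypothetical helper named `gen_server_lines` and the ports used above (2888/3888):

```shell
#!/usr/bin/env bash
# gen_server_lines HOST...
# Hypothetical helper: prints one server.N=HOST:2888:3888 line per host,
# numbering from 0 in the order given (N must later match each host's myid).
gen_server_lines() {
  local id=0 host
  for host in "$@"; do
    printf 'server.%d=%s:2888:3888\n' "$id" "$host"
    id=$((id + 1))
  done
}
```

Usage: `gen_server_lines hadoop-01 hadoop-02 hadoop-03 >> /software/zk/conf/zoo.cfg`. The host order fixes the ids, so it has to stay the same on every node.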

Create the ZooKeeper data directory and set the id

```shell
mkdir /software/zk/data
cd /software/zk/data
vim myid
# Write 0
0
```

Copy to the other nodes

```shell
scp -r /software/zk hadoop-02:/software/
scp -r /software/zk hadoop-03:/software/
```

On hadoop-02 and hadoop-03, change zk/data/myid to each node's own id (the N of that host's server.N line in zoo.cfg)

```shell
# hadoop-02
vim /software/zk/data/myid
# Write 1
1
# hadoop-03
vim /software/zk/data/myid
# Write 2
2
```
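Because each myid must equal the N of that host's `server.N` line, the value can also be read from zoo.cfg instead of typed by hand. A minimal sketch, assuming a hypothetical helper `write_myid` and that host names appear in zoo.cfg exactly as passed (a dot in a host name would be treated as a regex wildcard):

```shell
#!/usr/bin/env bash
# write_myid ZOO_CFG [HOST]
# Hypothetical helper: finds HOST's server.N entry in ZOO_CFG and writes N
# to <dataDir>/myid. HOST defaults to the output of `hostname`.
write_myid() {
  local cfg="$1" host="${2:-$(hostname)}" datadir id
  # Last dataDir= line wins, in case the sample value is still present
  datadir=$(sed -n 's/^dataDir=//p' "$cfg" | tail -n 1)
  id=$(sed -n "s/^server\.\([0-9]\{1,\}\)=${host}:.*/\1/p" "$cfg" | head -n 1)
  if [ -z "$datadir" ] || [ -z "$id" ]; then
    echo "no dataDir or server entry for $host in $cfg" >&2
    return 1
  fi
  mkdir -p "$datadir"
  printf '%s\n' "$id" > "$datadir/myid"
}
```

Usage on each node, after zoo.cfg has been copied: `write_myid /software/zk/conf/zoo.cfg`.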

Copy /etc/profile from hadoop-01 to hadoop-02 and hadoop-03, or edit /etc/profile directly on each node

```shell
scp -r /etc/profile hadoop-02:/etc/
scp -r /etc/profile hadoop-03:/etc/
# Run on hadoop-02 and hadoop-03
source /etc/profile
```

Start and test

Start ZooKeeper on each of the three servers, from the zk bin directory:

```shell
cd /software/zk/bin/
zkServer.sh start
# zkServer.sh status shows the server's role
[root@hadoop-01 bin]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /software/zk/bin/../conf/zoo.cfg
Mode: follower
```

Notes:

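a. Before starting, stop the firewall or configure security group rules (the setup above uses ports 2181, 2888 and 3888).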

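b. Disable SELinux on every node in the cluster:

```shell
vim /etc/selinux/config
SELINUX=disabled
```

The change in this file takes effect after a reboot; `setenforce 0` switches SELinux to permissive mode for the running system.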
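With all three servers started, exactly one should report `Mode: leader` and the other two `Mode: follower`. A minimal sketch that checks this from collected `zkServer.sh status` output; both helper names are hypothetical, and the usage in the trailing comment assumes passwordless SSH between the nodes:

```shell
#!/usr/bin/env bash
# zk_mode: read `zkServer.sh status` output on stdin, print the role
# (e.g. leader, follower, standalone).
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# check_quorum_roles EXPECTED_SERVERS
# Reads one role per line on stdin; succeeds only if there is exactly one
# leader, EXPECTED_SERVERS - 1 followers, and nothing else.
check_quorum_roles() {
  local expected="$1" leaders=0 followers=0 other=0 role
  while read -r role || [ -n "$role" ]; do
    case "$role" in
      leader) leaders=$((leaders + 1)) ;;
      follower) followers=$((followers + 1)) ;;
      *) other=$((other + 1)) ;;
    esac
  done
  [ "$leaders" -eq 1 ] && [ "$followers" -eq $((expected - 1)) ] && [ "$other" -eq 0 ]
}

# Usage (assumes passwordless SSH):
#   for h in hadoop-01 hadoop-02 hadoop-03; do
#     ssh "$h" 'source /etc/profile; zkServer.sh status' 2>&1 | zk_mode
#   done | check_quorum_roles 3 && echo "quorum OK"
```

Passing the expected server count matters: a node that is down prints no `Mode:` line, and without the count a two-node answer would still look healthy.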

Configure the HA cluster


Create an ha directory under /software

```shell
mkdir /software/ha
cd /software/ha
```

Upload the installation package and extract it

```shell
tar -xzvf hadoop-2.7.4.tar.gz
mv hadoop-2.7.4 hadoop
```

Configure hadoop-env.sh

```shell
cd /software/ha/hadoop/etc/hadoop
vim hadoop-env.sh
# Change to
export JAVA_HOME=/software/jdk
```

Configure core-site.xml

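```shell
vim core-site.xml
```

Replace the `<configuration>` element with:

```xml
<configuration>
  <!-- Default file system: the HA nameservice defined in hdfs-site.xml -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://mycluster</value>
  </property>
  <!-- Base directory for Hadoop's local data -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/software/ha/hadoop/data/tmp</value>
  </property>
  <!-- ZooKeeper quorum used for automatic failover -->
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>hadoop-01:2181,hadoop-02:2181,hadoop-03:2181</value>
  </property>
</configuration>
```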
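`ha.zookeeper.quorum` here and `yarn.resourcemanager.zk-address` in yarn-site.xml take the same comma-separated `host:port` list. A minimal sketch that builds it from host names, with `join_hosts` as a hypothetical helper:

```shell
#!/usr/bin/env bash
# join_hosts PORT SEPARATOR HOST...
# Hypothetical helper: prints HOST:PORT entries joined by SEPARATOR.
join_hosts() {
  local port="$1" sep="$2" out="" host
  shift 2
  for host in "$@"; do
    # Add the separator only once out is non-empty
    out="${out:+$out$sep}$host:$port"
  done
  printf '%s\n' "$out"
}
```

For example, `join_hosts 2181 , hadoop-01 hadoop-02 hadoop-03` prints the value used above.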

Configure hdfs-site.xml

```shell
vim hdfs-site.xml
```

Replace the `<configuration>` element with:

```xml
<configuration>
  <!-- Name of the fully distributed cluster (nameservice ID) -->
  <property>
    <name>dfs.nameservices</name>
    <value>mycluster</value>
  </property>
  <!-- IDs of the NameNodes in the cluster -->
  <property>
    <name>dfs.ha.namenodes.mycluster</name>
    <value>hadoop-01,hadoop-02</value>
  </property>
  <!-- RPC address of nn1 (ID hadoop-01) -->
  <property>
    <name>dfs.namenode.rpc-address.mycluster.hadoop-01</name>
    <value>hadoop-01:9000</value>
  </property>
  <!-- RPC address of nn2 (ID hadoop-02) -->
  <property>
    <name>dfs.namenode.rpc-address.mycluster.hadoop-02</name>
    <value>hadoop-02:9000</value>
  </property>
  <!-- HTTP address of nn1 -->
  <property>
    <name>dfs.namenode.http-address.mycluster.hadoop-01</name>
    <value>hadoop-01:50070</value>
  </property>
  <!-- HTTP address of nn2 -->
  <property>
    <name>dfs.namenode.http-address.mycluster.hadoop-02</name>
    <value>hadoop-02:50070</value>
  </property>
  <!-- Where the NameNode metadata (shared edit log) is stored on the JournalNodes -->
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://hadoop-01:8485;hadoop-02:8485;hadoop-03:8485/mycluster</value>
  </property>
  <!-- Local storage directory on each JournalNode -->
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/software/ha/hadoop/data/jn</value>
  </property>
  <!-- Enable automatic NameNode failover -->
  <property>
    <name>dfs.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <!-- Failover implementation clients use to find the active NameNode -->
  <property>
    <name>dfs.client.failover.proxy.provider.mycluster</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <!-- Fencing method: only one NameNode may serve requests at a time -->
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>sshfence</value>
  </property>
  <!-- sshfence requires passwordless SSH; this must be the key actually used -->
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/root/.ssh/id_dsa</value>
  </property>
  <!-- sshfence connection timeout, in milliseconds -->
  <property>
    <name>dfs.ha.fencing.ssh.connect-timeout</name>
    <value>30000</value>
  </property>
  <!-- Enable WebHDFS -->
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>
```
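The `dfs.namenode.shared.edits.dir` value has the form `qjournal://host1:port1;host2:port2;.../<journal id>`, with semicolons between JournalNodes and the nameservice ID used as the journal ID here. A sketch that assembles it, with `qjournal_uri` as a hypothetical helper:

```shell
#!/usr/bin/env bash
# qjournal_uri NAMESERVICE PORT HOST...
# Hypothetical helper: prints qjournal://HOST:PORT;HOST:PORT;.../NAMESERVICE
qjournal_uri() {
  local nameservice="$1" port="$2" list="" host
  shift 2
  for host in "$@"; do
    # JournalNodes are separated by ';', not ','
    list="${list:+$list;}$host:$port"
  done
  printf 'qjournal://%s/%s\n' "$list" "$nameservice"
}
```

`qjournal_uri mycluster 8485 hadoop-01 hadoop-02 hadoop-03` reproduces the value in the file above.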

Configure mapred-site.xml

```shell
cp mapred-site.xml.template mapred-site.xml
vim mapred-site.xml
```

Replace the `<configuration>` element with:

```xml
<configuration>
  <!-- Run MapReduce on YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <!-- MapReduce JobHistory server address and port -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>hadoop-03:10020</value>
  </property>
  <!-- JobHistory server web UI address -->
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>hadoop-03:19888</value>
  </property>
</configuration>
```

Configure yarn-site.xml

```shell
vim yarn-site.xml
```

Replace the `<configuration>` element with:

```xml
<configuration>
  <!-- Site specific YARN configuration properties -->
  <!-- Enable ResourceManager HA -->
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>
  <!-- Cluster ID of the RMs -->
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>cluster-yarn1</value>
  </property>
  <!-- IDs of the RMs -->
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>hadoop-01,hadoop-02</value>
  </property>
  <!-- Host of each RM -->
  <property>
    <name>yarn.resourcemanager.hostname.hadoop-01</name>
    <value>hadoop-01</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.hadoop-02</name>
    <value>hadoop-02</value>
  </property>
  <!-- Address of the ZooKeeper cluster -->
  <property>
    <name>yarn.resourcemanager.zk-address</name>
    <value>hadoop-01:2181,hadoop-02:2181,hadoop-03:2181</value>
  </property>
  <!-- Auxiliary service required to run MapReduce programs -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <!-- Enable log aggregation for the YARN cluster -->
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <!-- Maximum retention of aggregated logs, in seconds (86400 = 1 day) -->
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>86400</value>
  </property>
  <!-- Enable automatic recovery -->
  <property>
    <name>yarn.resourcemanager.recovery.enabled</name>
    <value>true</value>
  </property>
  <!-- Store ResourceManager state in the ZooKeeper cluster -->
  <property>
    <name>yarn.resourcemanager.store.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
  </property>
</configuration>
```
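Per-RM properties such as `yarn.resourcemanager.hostname.<rm-id>` repeat once for every ID listed in `yarn.resourcemanager.ha.rm-ids`. A small sketch that prints them, assuming hypothetical helper names `hadoop_property` and `rm_hostname_properties` and, as in the file above, that each RM ID is also its host name:

```shell
#!/usr/bin/env bash
# hadoop_property NAME VALUE
# Hypothetical helper: prints one Hadoop <property> element.
hadoop_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# rm_hostname_properties RM_ID...
# Emits yarn.resourcemanager.hostname.<id> for each RM ID, using the ID
# itself as the host name.
rm_hostname_properties() {
  local rm
  for rm in "$@"; do
    hadoop_property "yarn.resourcemanager.hostname.$rm" "$rm"
  done
}
```

`rm_hostname_properties hadoop-01 hadoop-02` prints the two hostname properties shown above, ready to paste inside `<configuration>`.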

Distribute the Hadoop configuration to the other nodes

```shell
# Create /software/ha/ on hadoop-02 and hadoop-03 beforehand
scp -r /software/ha/hadoop hadoop-02:/software/ha/
scp -r /software/ha/hadoop hadoop-03:/software/ha/
```
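Since the target directory has to exist first, creating it and copying can be combined in one loop. A minimal sketch, assuming passwordless SSH from hadoop-01 and a hypothetical `distribute` helper; with `DRY_RUN=1` it only prints the commands:

```shell
#!/usr/bin/env bash
# distribute SRC_DIR HOST...
# Hypothetical helper: creates SRC_DIR's parent directory on each host,
# then copies SRC_DIR there. DRY_RUN=1 prints the commands instead.
distribute() {
  local src="$1" host dest
  shift
  dest=$(dirname "$src")/
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh $host mkdir -p $dest && scp -r $src $host:$dest"
    else
      ssh "$host" mkdir -p "$dest" && scp -r "$src" "$host:$dest" || return 1
    fi
  done
}
```

Usage: `distribute /software/ha/hadoop hadoop-02 hadoop-03`. Run it once with `DRY_RUN=1` first to review what will be copied where.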

Configure environment variables

```shell
# Append to the end of /etc/profile on all nodes
export HADOOP_HOME=/software/ha/hadoop
export PATH=.:$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

# Configuration actually used in production
export JAVA_HOME=/software/jdk
export PATH=.:$PATH:$JAVA_HOME/bin
export ZOOKEEPER_HOME=/software/zk
export PATH=.:$PATH:$ZOOKEEPER_HOME/bin
export HADOOP_HOME=/software/ha/hadoop
export PATH=.:$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

# Run on all nodes
source /etc/profile
```
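After `source /etc/profile`, one quick way to confirm the PATH entries took effect is to look up the commands used in the later steps. A minimal sketch with a hypothetical `missing_commands` helper:

```shell
#!/usr/bin/env bash
# missing_commands CMD...
# Hypothetical helper: prints "missing: CMD" for every command not found
# on PATH; returns 0 only if all were found.
missing_commands() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd"
      missing=1
    fi
  done
  return "$missing"
}

# Usage on each node:
#   missing_commands java zkServer.sh hdfs hadoop-daemon.sh start-dfs.sh start-yarn.sh
```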

Start the ZooKeeper cluster

```shell
# On all three nodes
zkServer.sh start
```

Start the JournalNodes

```shell
# On all three nodes
cd /software/ha/hadoop/sbin/
hadoop-daemon.sh start journalnode
```

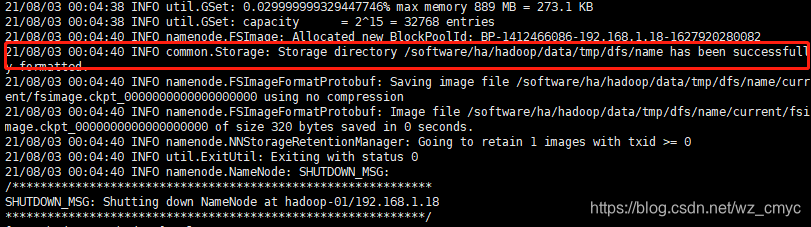
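Whether the JournalNode (and ZooKeeper, whose server shows up in `jps` as `QuorumPeerMain`) is running on a node can be checked from `jps` output. A minimal sketch, with `has_processes` as a hypothetical helper that reads that output on stdin:

```shell
#!/usr/bin/env bash
# has_processes NAME...
# Hypothetical helper: reads `jps` output ("<pid> <MainClass>" per line) on
# stdin and succeeds only if every NAME appears as a main class.
has_processes() {
  local input name
  input=$(cat)
  for name in "$@"; do
    printf '%s\n' "$input" |
      awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' || return 1
  done
}

# Usage on each node:
#   jps | has_processes JournalNode QuorumPeerMain && echo "JournalNode and ZooKeeper running"
```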

Format the NameNode

```shell
# Format on the first NameNode node (hadoop-01)
hdfs namenode -format
```

On success, note the storage directory reported in the output.


Copy the metadata

Copy the freshly formatted metadata from the first NameNode to the same directory on the second NameNode (hadoop-02):

```shell
scp -r /software/ha/hadoop/data hadoop-02:/software/ha/hadoop
```

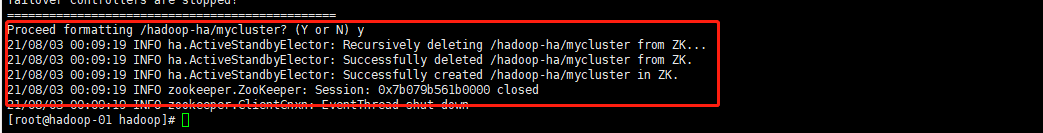

On any one node, initialize the HA metadata in ZooKeeper

```shell
hdfs zkfc -formatZK
```

Successful initialization:


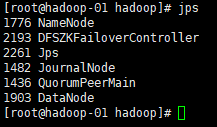

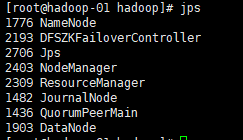

Start the HDFS cluster

```shell
start-dfs.sh
```


Start the YARN cluster

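```shell
start-yarn.sh
```

In Hadoop 2.x, start-yarn.sh starts a ResourceManager only on the node where it is run (plus the NodeManagers), so run it on hadoop-01 and start the second ResourceManager on hadoop-02 with `yarn-daemon.sh start resourcemanager`.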


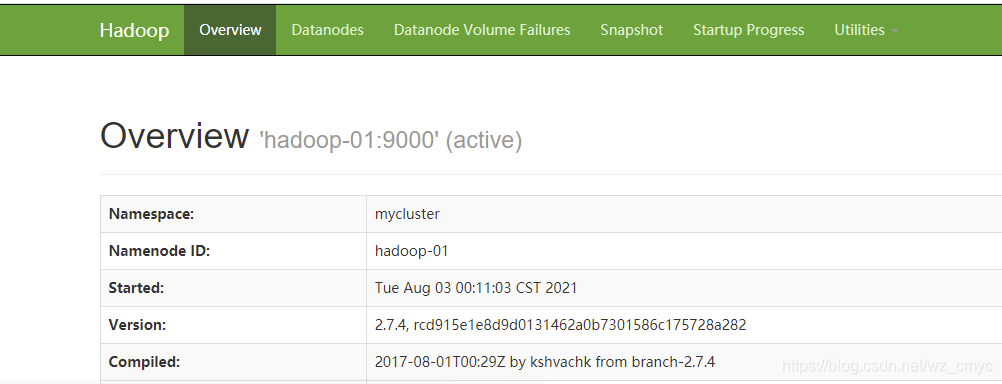
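Once HDFS and YARN are up, one NameNode and one ResourceManager should be active and the others standby. `hdfs haadmin -getServiceState <id>` and `yarn rmadmin -getServiceState <id>` report the state of a given ID; a minimal sketch that loops over the IDs configured above (`ha_states` is a hypothetical helper, and a failed lookup is printed as `unknown`):

```shell
#!/usr/bin/env bash
# ha_states ADMIN_CMD ID...
# Hypothetical helper: prints "<id> <state>" for each HA ID by running
# "ADMIN_CMD -getServiceState <id>". ADMIN_CMD is intentionally left
# unquoted so that a two-word command such as "hdfs haadmin" works.
ha_states() {
  local admin="$1" id state
  shift
  for id in "$@"; do
    state=$($admin -getServiceState "$id" 2>/dev/null) || state=unknown
    printf '%s %s\n' "$id" "${state:-unknown}"
  done
}

# Usage on a cluster node:
#   ha_states "hdfs haadmin" hadoop-01 hadoop-02
#   ha_states "yarn rmadmin" hadoop-01 hadoop-02
```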

View the HDFS web UI (http://hadoop-01:50070 and http://hadoop-02:50070, as set in dfs.namenode.http-address)


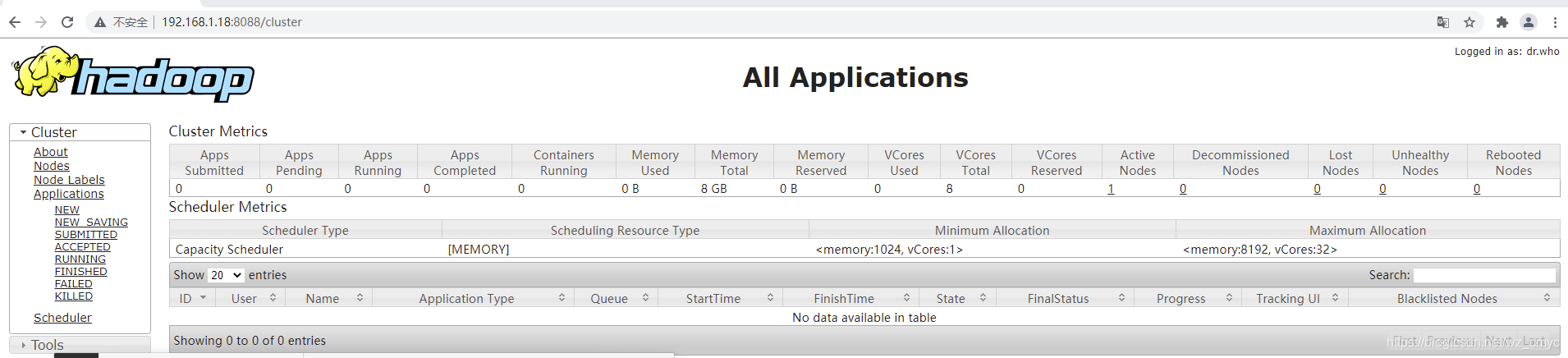

View the YARN web UI (the ResourceManager web UI listens on port 8088 by default)