Hadoop Cluster Configuration

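| Configuration file | Description |
|---|---|
| hadoop-env.sh | Environment variables required to run Hadoop |
| yarn-env.sh | Environment variables required to run YARN |
| core-site.xml | Hadoop's core, cluster-wide configuration |
| hdfs-site.xml | HDFS configuration; builds on core-site.xml |
| mapred-site.xml | MapReduce configuration; builds on core-site.xml |
| yarn-site.xml | YARN configuration; builds on core-site.xml |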

Processes associated with each configuration file (including HA)

| Configuration file | Processes |
|---|---|
| hadoop-env.sh | NameNode, SecondaryNameNode, DataNode |
| yarn-env.sh | ResourceManager, NodeManager |
| hdfs-site.xml | JournalNode, DFSZKFailoverController |
| core-site.xml | QuorumPeerMain (the ZooKeeper server process) |

Configure the cluster master node

1. Check the hostnames (the configuration below refers to the nodes as hadoop-01, hadoop-02 and hadoop-03)
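Every node must resolve the other nodes by the names used in the configuration files. The check below is a minimal sketch. It assumes a glibc-based Linux where `getent` is available and where the names are mapped in /etc/hosts or DNS.

- `hostname` on each node should print that node's own name.
- The hypothetical helper `unresolved_hosts` prints every name that does not resolve.
- On systemd hosts, a node's name can be set with `hostnamectl set-hostname hadoop-01`.

```shell
#!/bin/sh
# Print each host name (argument) that does not resolve on this machine.
unresolved_hosts() {
  for h in "$@"; do
    getent hosts "$h" >/dev/null 2>&1 || echo "$h"
  done
}

# Usage on each node (empty output means every name resolves):
#   hostname
#   unresolved_hosts hadoop-01 hadoop-02 hadoop-03
```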

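2. Set up passwordless SSH

- Generate a key pair (saved under /root/.ssh/ by default):

```shell
ssh-keygen
```

- Copy the public key to every node, including the local one:

```shell
ssh-copy-id hadoop-01
ssh-copy-id hadoop-02
ssh-copy-id hadoop-03
```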
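The key generation and distribution steps above can be wrapped in one function. This is a minimal sketch under these assumptions:

- It runs as root, so the key ends up at /root/.ssh/id_rsa, the path that sshfence uses in hdfs-site.xml.
- All three hostnames already resolve.

`setup_ssh` is a hypothetical helper name. It is only defined here, not invoked.

```shell
#!/bin/sh
# Cluster hosts used throughout this guide
HOSTS="hadoop-01 hadoop-02 hadoop-03"

# Create a passphrase-less RSA key (only if none exists yet) and install
# the public key on every host, including the local one.
setup_ssh() {
  key="$HOME/.ssh/id_rsa"
  mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh"
  if [ ! -f "$key" ]; then
    ssh-keygen -t rsa -N '' -f "$key"
  fi
  for h in $HOSTS; do
    ssh-copy-id -i "$key.pub" "$h"
  done
}
```

Run `setup_ssh` on hadoop-01, then repeat it on hadoop-02. Fencing is carried out by the failover controller on the node taking over, so hadoop-02 typically also needs key-based access to hadoop-01 for sshfence to work.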

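3. Cluster configuration

- Edit the configuration files

(1) Extract the Hadoop package and move it to /export/software/.

(2) Edit the configuration files. By default they are located in /export/software/hadoop-2.4.1/etc/hadoop/.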

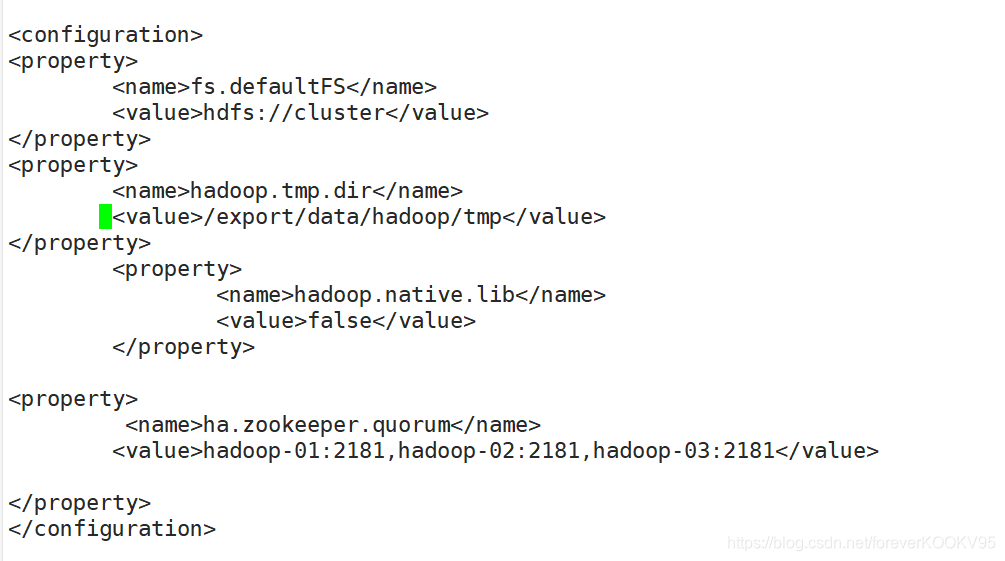

core-site.xml:
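The core-site.xml listing itself is not reproduced in the text. As a minimal sketch only, the heredoc below writes a core-site.xml whose values are inferred from the other files. They are assumptions, not the author's file:

- `fs.defaultFS` points at the nameservice `cluster` defined in hdfs-site.xml.
- `hadoop.tmp.dir` is the `/export/data/hadoop/tmp` directory that is later copied to hadoop-02.
- `ha.zookeeper.quorum` reuses the ZooKeeper hosts from yarn-site.xml.

`CONF_OUT` is a hypothetical variable. It defaults to the current directory so that nothing under etc/hadoop is overwritten by accident.

```shell
#!/bin/sh
# Hypothetical output location; copy the file into etc/hadoop once reviewed.
CONF_OUT="${CONF_OUT:-./core-site.xml}"

cat > "$CONF_OUT" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Default file system: the HA nameservice "cluster" from hdfs-site.xml -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://cluster</value>
  </property>
  <!-- Base directory for Hadoop's local data (assumed path) -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/export/data/hadoop/tmp</value>
  </property>
  <!-- ZooKeeper ensemble used by the ZKFCs for automatic failover -->
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>hadoop-01:2181,hadoop-02:2181,hadoop-03:2181</value>
  </property>
</configuration>
EOF
```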

hdfs-site.xml:

```xml
<configuration>
  <!-- Number of HDFS block replicas; should not exceed the number of DataNodes -->
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>

  <!-- Logical name (nameservice ID) for the NameNode pair -->
  <property>
    <name>dfs.nameservices</name>
    <value>cluster</value>
  </property>

  <!-- NameNodes that belong to the nameservice, with their IDs -->
  <property>
    <name>dfs.ha.namenodes.cluster</name>
    <value>nn01,nn02</value>
  </property>

  <!-- RPC address of nn01, used by DataNodes and clients -->
  <property>
    <name>dfs.namenode.rpc-address.cluster.nn01</name>
    <value>hadoop-01:9000</value>
  </property>

  <!-- HTTP address of nn01, used by the web UI -->
  <property>
    <name>dfs.namenode.http-address.cluster.nn01</name>
    <value>hadoop-01:50070</value>
  </property>

  <!-- RPC address of nn02, used by DataNodes and clients -->
  <property>
    <name>dfs.namenode.rpc-address.cluster.nn02</name>
    <value>hadoop-02:9000</value>
  </property>

  <!-- HTTP address of nn02, used by the web UI -->
  <property>
    <name>dfs.namenode.http-address.cluster.nn02</name>
    <value>hadoop-02:50070</value>
  </property>

  <!-- JournalNodes through which the NameNodes share the edit log -->
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://hadoop-01:8485;hadoop-02:8485;hadoop-03:8485/cluster</value>
  </property>

  <!-- Local directory on each JournalNode where the edits are stored -->
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/export/data/hadoop/journaldata</value>
  </property>

  <!-- Fail over to the other NameNode automatically when the active one fails -->
  <property>
    <name>dfs.ha.automatic-failover.enabled.cluster</name>
    <value>true</value>
  </property>

  <!-- Class that clients use to find the currently active NameNode -->
  <property>
    <name>dfs.client.failover.proxy.provider.cluster</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>

  <!-- Fencing methods tried in order during a failover: sshfence first, shell(/bin/true) as fallback -->
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>
      sshfence
      shell(/bin/true)
    </value>
  </property>

  <!-- Private key that sshfence uses for its SSH connection -->
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/root/.ssh/id_rsa</value>
  </property>

  <!-- SSH connect timeout for sshfence, in milliseconds -->
  <property>
    <name>dfs.ha.fencing.ssh.connect-timeout</name>
    <value>30000</value>
  </property>

  <!-- NameNode metadata directory (deprecated alias of dfs.namenode.name.dir) -->
  <property>
    <name>dfs.name.dir</name>
    <value>/export/data/hadoop/tmp/dfs/name</value>
  </property>

  <!-- DataNode block directory (deprecated alias of dfs.datanode.data.dir) -->
  <property>
    <name>dfs.data.dir</name>
    <value>/export/data/hadoop/tmp/dfs/data</value>
  </property>
</configuration>
```
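Two details in hdfs-site.xml are easy to get wrong by hand:

- The `qjournal://` URI lists JournalNodes as `host:port` pairs separated by `;`, followed by a journal ID (here the nameservice name).
- `dfs.replication` should not exceed the number of DataNodes; otherwise blocks stay under-replicated.

The POSIX shell sketch below defines two hypothetical helpers, `qjournal_uri` and `check_replication`. The second assumes a slaves file with one DataNode host per line.

```shell
#!/bin/sh
# Build a dfs.namenode.shared.edits.dir value:
#   qjournal://host1:port;host2:port;.../<journal id>
qjournal_uri() {
  journal_id=$1
  port=$2
  shift 2
  hosts=""
  for h in "$@"; do
    # Append "host:port", separating entries with ';'
    hosts="${hosts:+$hosts;}$h:$port"
  done
  printf 'qjournal://%s/%s\n' "$hosts" "$journal_id"
}

# Fail (and explain on stderr) if the replication factor is larger
# than the number of non-empty lines in the given slaves file.
check_replication() {
  replication=$1
  slaves_file=$2
  nodes=$(grep -c '[^[:space:]]' "$slaves_file")
  if [ "$replication" -gt "$nodes" ]; then
    echo "dfs.replication=$replication exceeds $nodes DataNode(s)" >&2
    return 1
  fi
}
```

For example, `qjournal_uri cluster 8485 hadoop-01 hadoop-02 hadoop-03` reproduces the value used above.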

yarn-site.xml:

```xml
<configuration>
  <!-- Memory (MB) each NodeManager offers to containers -->
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>2048</value>
  </property>

  <!-- Largest memory allocation (MB) a single container request may ask for -->
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>2048</value>
  </property>

  <!-- Virtual CPU cores each NodeManager offers to containers -->
  <property>
    <name>yarn.nodemanager.resource.cpu-vcores</name>
    <value>1</value>
  </property>

  <!-- Enable ResourceManager high availability -->
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>

  <!-- Cluster ID shared by the ResourceManager HA pair -->
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>yrc</value>
  </property>

  <!-- Logical IDs of the two ResourceManagers -->
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>rm1,rm2</value>
  </property>

  <!-- Host of rm1 -->
  <property>
    <name>yarn.resourcemanager.hostname.rm1</name>
    <value>hadoop-01</value>
  </property>

  <!-- Host of rm2 -->
  <property>
    <name>yarn.resourcemanager.hostname.rm2</name>
    <value>hadoop-02</value>
  </property>

  <!-- ZooKeeper ensemble used by the ResourceManagers -->
  <property>
    <name>yarn.resourcemanager.zk-address</name>
    <value>hadoop-01:2181,hadoop-02:2181,hadoop-03:2181</value>
  </property>

  <!-- Auxiliary service on each NodeManager, required for the MapReduce shuffle -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
```
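Here is a quick arithmetic check for the YARN memory settings above:

- `yarn.nodemanager.resource.memory-mb` is 2048.
- `yarn.scheduler.minimum-allocation-mb` is not set in this file, so its Hadoop default of 1024 is assumed.
- Each NodeManager therefore fits at most 2048 / 1024 = 2 minimum-size containers.

A request larger than any NodeManager's memory may never be scheduled. For that reason `maximum-allocation-mb` is usually kept at or below the NodeManager memory. `yarn_mem_check` is a hypothetical helper.

```shell
#!/bin/sh
# Args: NodeManager memory-mb, maximum-allocation-mb, [minimum-allocation-mb, default 1024]
yarn_mem_check() {
  nm_mem=$1
  max_alloc=$2
  min_alloc=${3:-1024}
  # A single container bigger than the NodeManager could never be placed on it
  if [ "$max_alloc" -gt "$nm_mem" ]; then
    echo "warning: maximum-allocation-mb ($max_alloc) > NodeManager memory ($nm_mem)"
  fi
  # Integer division: how many smallest containers fit on one node
  echo "min-size containers per node: $((nm_mem / min_alloc))"
}
```

With the values above, `yarn_mem_check 2048 2048` reports 2 containers per node and no warning.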

mapred-site.xml:

```xml
<configuration>
  <!-- Run MapReduce jobs on YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
```
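Hadoop 2.x distributions ship this file only as `mapred-site.xml.template`, so it usually has to be created before it can be edited. The sketch below does that. `ensure_mapred_site` is a hypothetical helper, and its default directory is the path used in this guide.

```shell
#!/bin/sh
# Create mapred-site.xml from the shipped template, unless it already exists.
ensure_mapred_site() {
  conf_dir=${1:-/export/software/hadoop-2.4.1/etc/hadoop}
  if [ ! -f "$conf_dir/mapred-site.xml" ]; then
    cp "$conf_dir/mapred-site.xml.template" "$conf_dir/mapred-site.xml"
  fi
}
```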

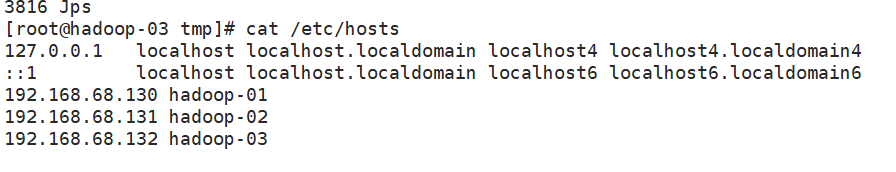

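slaves file (one worker host per line; start-dfs.sh and start-yarn.sh start a DataNode and a NodeManager on each listed host):

```text
hadoop-01
hadoop-02
hadoop-03
```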

hadoop-env.sh: set the JDK path

```shell
export JAVA_HOME=/export/software/jdk1.8.0_161
```
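Hard-coding the JDK path matters because start-dfs.sh starts remote daemons over SSH in non-login shells. Such shells typically do not read /etc/profile, so a default `export JAVA_HOME=${JAVA_HOME}` line can end up empty.

The sketch below is a hypothetical helper, `set_java_home`. It rewrites an existing export line or appends one if none is present. It assumes GNU sed (`sed -i` without a suffix argument), and it can be applied to yarn-env.sh the same way.

```shell
#!/bin/sh
# Pin JAVA_HOME in an env script such as hadoop-env.sh or yarn-env.sh.
set_java_home() {
  file=$1
  jdk=$2
  if grep -q '^export JAVA_HOME=' "$file"; then
    # Replace the existing (uncommented) export line in place
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$file"
  else
    # No active export line (e.g. only a commented one): append it
    echo "export JAVA_HOME=$jdk" >> "$file"
  fi
}

# Usage:
#   set_java_home /export/software/hadoop-2.4.1/etc/hadoop/hadoop-env.sh /export/software/jdk1.8.0_161
```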

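Configure the Hadoop environment variables:

```shell
vi /etc/profile
```

Add the following lines (for the ZooKeeper variables, see the ZooKeeper installation guide). HADOOP_HOME must be exported before the lines that use it:

```shell
# Same JDK as in hadoop-env.sh
export JAVA_HOME=/export/software/jdk1.8.0_161
export HADOOP_HOME=/export/software/hadoop-2.4.1
export ZK_HOME=/export/software/zookeeper-3.5.9
# Native libraries live under lib/native
export LD_LIBRARY_PATH=$HADOOP_HOME/lib/native
export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_HOME}/lib/native
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"
export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZK_HOME/bin:$PATH
```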

Apply the variables:

```shell
source /etc/profile
```
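After `source /etc/profile`, one way to confirm that the PATH entries took effect is to check that the relevant commands resolve. The hypothetical helper `missing_cmds` prints every argument that is not found on PATH. Empty output means everything resolved.

```shell
#!/bin/sh
# Print each command name (argument) that cannot be found on PATH.
missing_cmds() {
  for c in "$@"; do
    command -v "$c" >/dev/null 2>&1 || echo "$c"
  done
}

# Usage:
#   missing_cmds java hdfs start-dfs.sh hadoop-daemon.sh zkServer.sh
```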

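- Copy the configuration to the other nodes (this assumes Hadoop is already extracted to the same path there). The target is the etc/ directory, so the files land in etc/hadoop:

```shell
scp -r /export/software/hadoop-2.4.1/etc/hadoop hadoop-02:/export/software/hadoop-2.4.1/etc/
scp -r /export/software/hadoop-2.4.1/etc/hadoop hadoop-03:/export/software/hadoop-2.4.1/etc/
```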
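The copy step can be looped over any number of worker nodes. This is a minimal sketch with these assumptions:

- The same `HADOOP_HOME` path exists on every target.
- `run` and `sync_conf` are hypothetical helpers.
- Setting `DRY_RUN=1` only prints the commands.

```shell
#!/bin/sh
HADOOP_HOME="${HADOOP_HOME:-/export/software/hadoop-2.4.1}"

# Execute a command, or just echo it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Push the local etc/hadoop directory into etc/ on each given host.
sync_conf() {
  for h in "$@"; do
    run scp -r "$HADOOP_HOME/etc/hadoop" "$h:$HADOOP_HOME/etc/"
  done
}

# Usage:
#   DRY_RUN=1 sync_conf hadoop-02 hadoop-03   # preview
#   sync_conf hadoop-02 hadoop-03
```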

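Start Hadoop

Before the cluster is started for the first time, a few one-time initialization steps are required:

- Start the JournalNode on all three nodes:

```shell
hadoop-daemon.sh start journalnode
```

- On the master node, format the NameNode and initialize the ZKFC state in ZooKeeper:

```shell
hdfs namenode -format
hdfs zkfc -formatZK
```

- Copy the formatted tmp directory (the location set in core-site.xml) to hadoop-02, so that the second NameNode starts from the same metadata:

```shell
scp -r /export/data/hadoop/tmp hadoop-02:/export/data/hadoop/
```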

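After that, Hadoop can be started.

- On the master node (hadoop-01):

```shell
# Start the HDFS and YARN daemons
start-dfs.sh
start-yarn.sh

# Start the ZooKeeper failover controller (DFSZKFailoverController)
hadoop-daemon.sh start zkfc
```

- On the second node (hadoop-02), start the second ResourceManager and the ZKFC. In Hadoop 2.x, start-yarn.sh only starts the ResourceManager on the node where it runs:

```shell
yarn-daemon.sh start resourcemanager
hadoop-daemon.sh start zkfc
```

- To stop the cluster, run:

```shell
stop-all.sh
hadoop-daemon.sh stop zkfc
yarn-daemon.sh stop resourcemanager
```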
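The one-time initialization and start sequence described above can be collected into a single script for a fresh cluster. This is a minimal sketch, assuming it runs as root on hadoop-01, passwordless SSH is in place, and the paths match /etc/profile. Two points are assumptions not spelled out in the text:

- The ZooKeeper ensemble must already be running before `hdfs zkfc -formatZK`; it is started here with `zkServer.sh start` on each node.
- Remote commands source /etc/profile first, because a non-login SSH shell may not load it.

`run`, `remote` and `first_start` are hypothetical helpers, and `DRY_RUN=1` prints the commands instead of executing them. Formatting destroys existing HDFS metadata, so this is only meant for the very first start.

```shell
#!/bin/sh
# First-time start of the HA cluster, run on hadoop-01.
HADOOP_HOME="${HADOOP_HOME:-/export/software/hadoop-2.4.1}"
SBIN="$HADOOP_HOME/sbin"
NODES="hadoop-01 hadoop-02 hadoop-03"

# Execute a command, or just echo it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Run a command on a node in a shell that has loaded /etc/profile.
remote() {
  run ssh "$1" ". /etc/profile && $2"
}

first_start() {
  # Assumption: ZooKeeper must be up before "hdfs zkfc -formatZK"
  for h in $NODES; do
    remote "$h" "zkServer.sh start"
  done
  # JournalNodes must be up before the NameNode is formatted
  for h in $NODES; do
    remote "$h" "hadoop-daemon.sh start journalnode"
  done
  # One-time formatting on hadoop-01 (wipes existing HDFS metadata)
  run "$HADOOP_HOME/bin/hdfs" namenode -format || return 1
  run "$HADOOP_HOME/bin/hdfs" zkfc -formatZK || return 1
  # Give the second NameNode the same freshly formatted metadata
  run scp -r /export/data/hadoop/tmp hadoop-02:/export/data/hadoop/ || return 1
  # HDFS, YARN and the local ZKFC on hadoop-01
  run "$SBIN/start-dfs.sh"
  run "$SBIN/start-yarn.sh"
  run "$SBIN/hadoop-daemon.sh" start zkfc
  # Second ResourceManager and ZKFC on hadoop-02
  remote hadoop-02 "yarn-daemon.sh start resourcemanager"
  remote hadoop-02 "hadoop-daemon.sh start zkfc"
}
```

Running `DRY_RUN=1 first_start` first shows the full command list for review before anything is executed.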

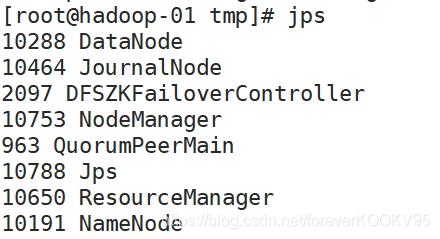

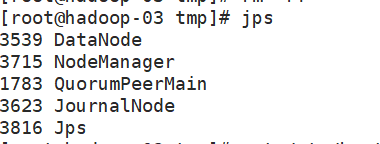

Check the running processes on each node with jps (final result):

hadoop-01

hadoop-02

hadoop-03
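The sketch below compares the jps output on each node with what the configuration implies. The expected lists are inferred from the settings in this guide, not captured output:

- hadoop-01 and hadoop-02: NameNode, ZKFC and ResourceManager.
- All three nodes: DataNode, NodeManager and JournalNode.
- Every node: ZooKeeper's QuorumPeerMain.

`expected_daemons` and `missing_daemons` are hypothetical helpers.

```shell
#!/bin/sh
# Daemons expected on each node, inferred from this guide's configuration (assumption).
expected_daemons() {
  case "$1" in
    hadoop-01|hadoop-02)
      echo "NameNode DataNode JournalNode DFSZKFailoverController ResourceManager NodeManager QuorumPeerMain" ;;
    hadoop-03)
      echo "DataNode JournalNode NodeManager QuorumPeerMain" ;;
    *)
      return 1 ;;
  esac
}

# Read jps output ("<pid> <MainClass>" per line) on stdin and print each
# expected daemon that is not running on the given host.
missing_daemons() {
  expected=$(expected_daemons "$1") || { echo "unknown host: $1" >&2; return 2; }
  running=$(awk '{print $2}')
  for d in $expected; do
    printf '%s\n' "$running" | grep -qx "$d" || echo "$d"
  done
}

# Usage on hadoop-03 (empty output means all expected daemons are up):
#   jps | missing_daemons hadoop-03
```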

The Hadoop platform setup is complete.